Текст

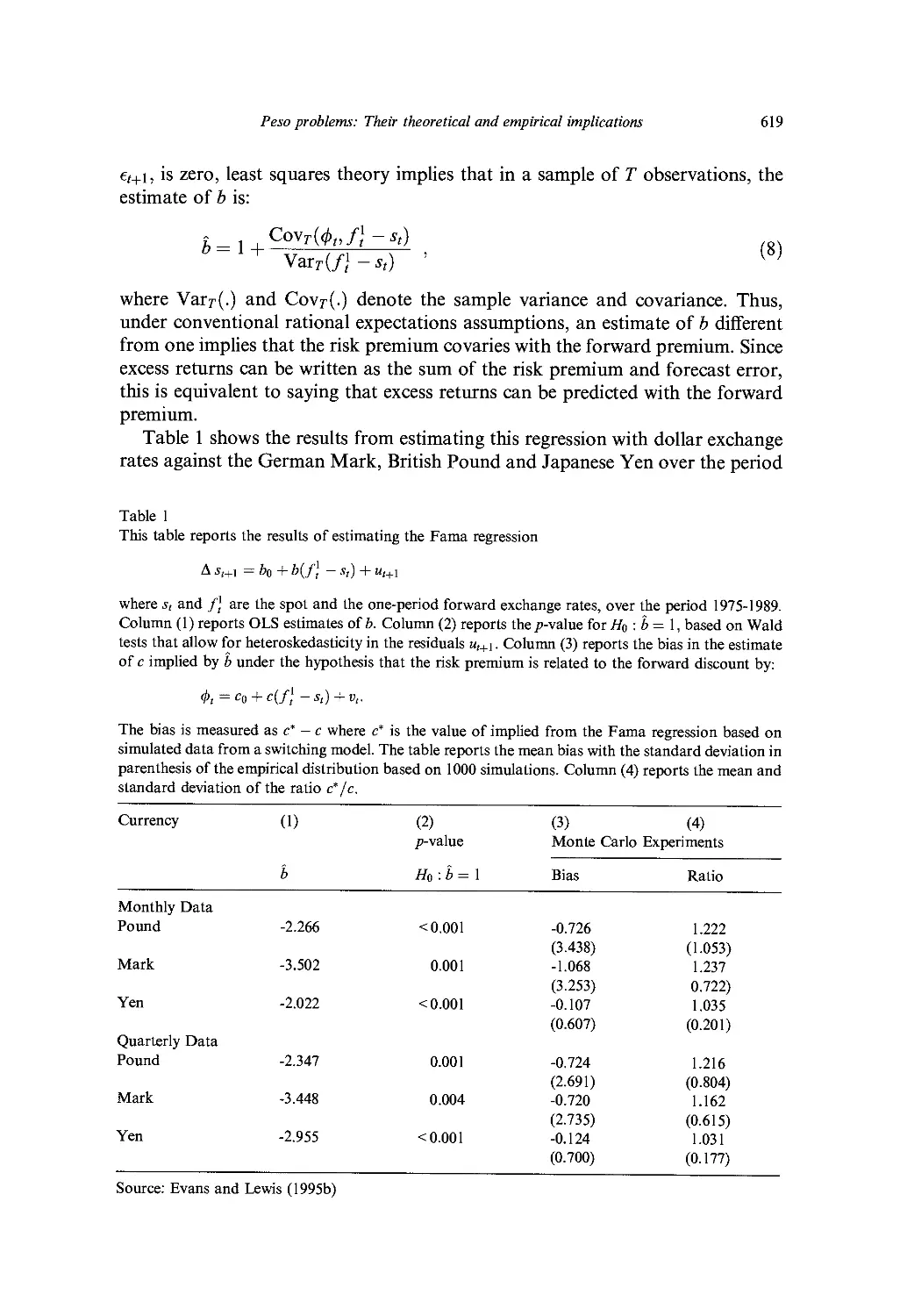

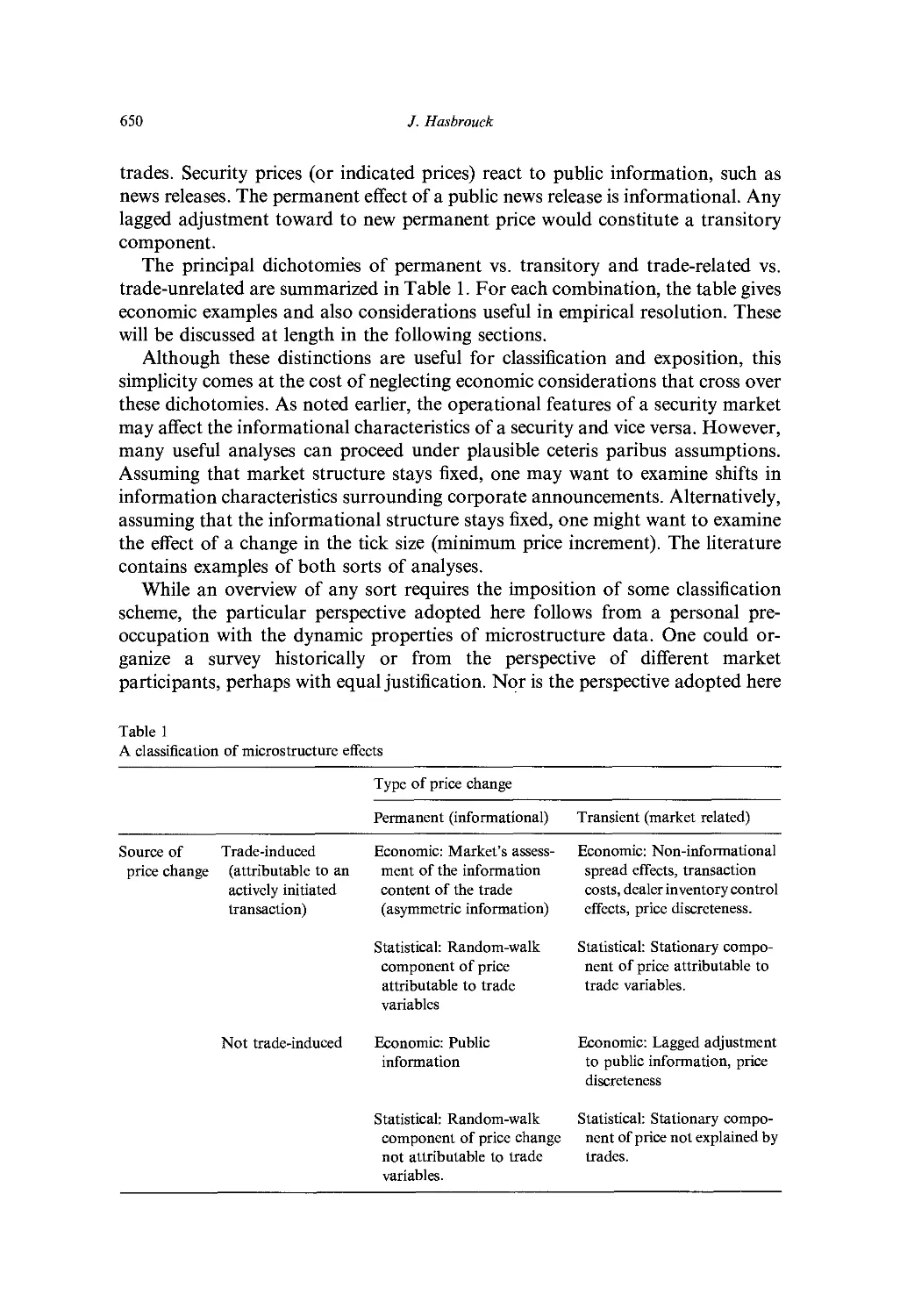

handbook of

statistics 14

Statistical Methods

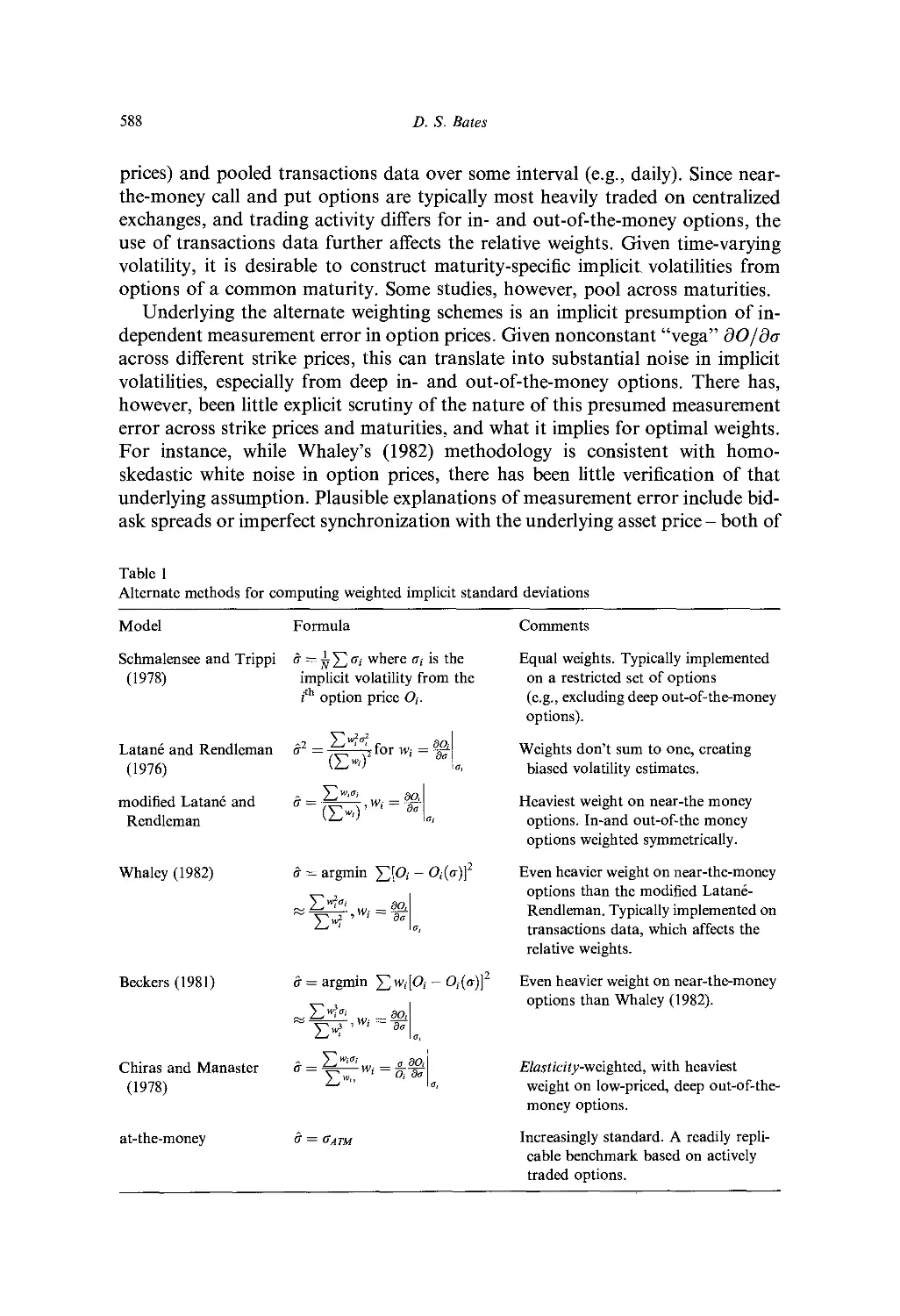

in Finance

Edited by

i ;.s

C.R.kau

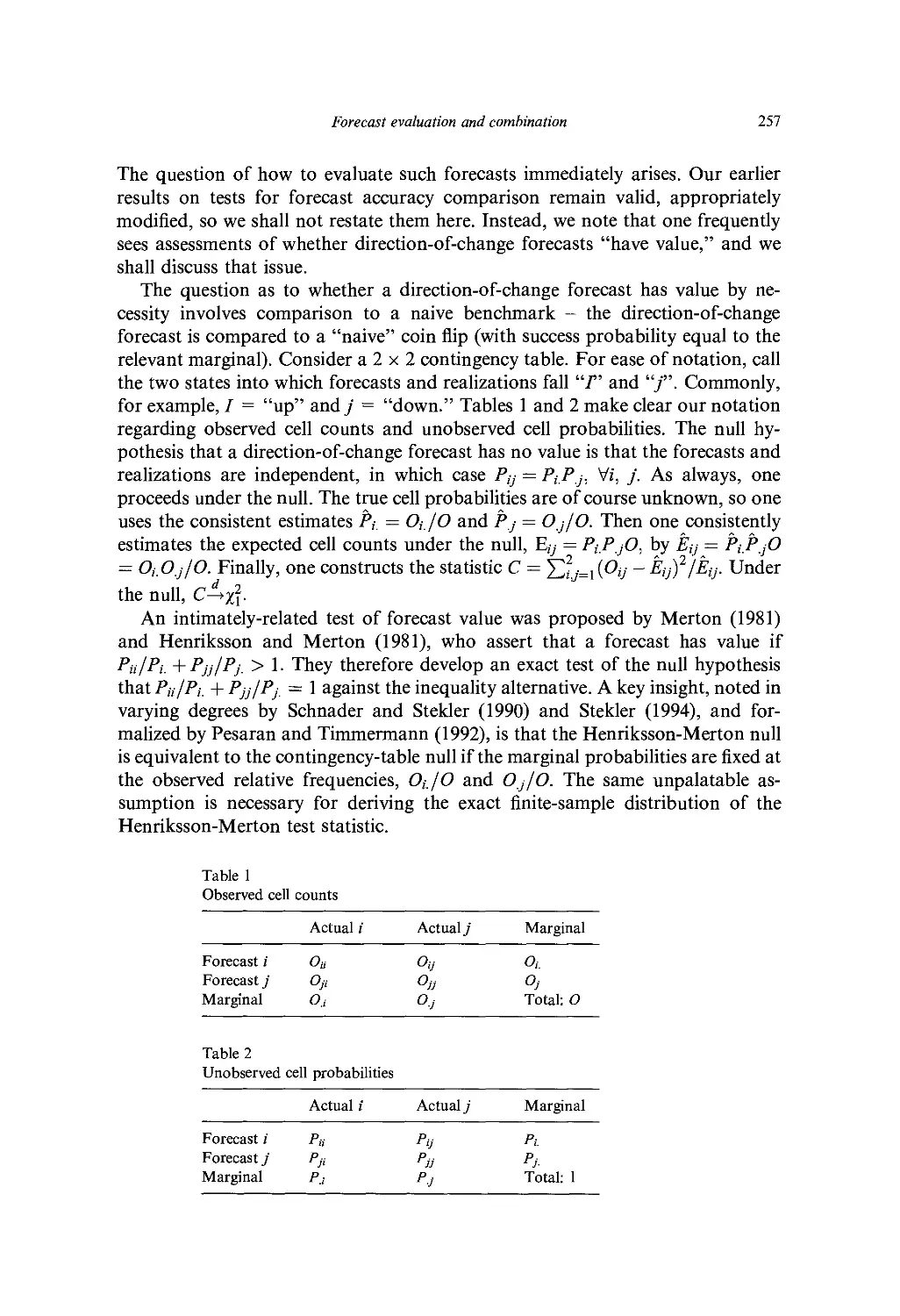

l

ELSEVIER SCIENCE B.V.

Sara Burgerhartstraal 25

P.O. Box 211, 1000 AE Amsterdam, The Netherlands

ISBN: 0-444-81964-9

© 1996 Elsevier Science B.V. All rights reserved.

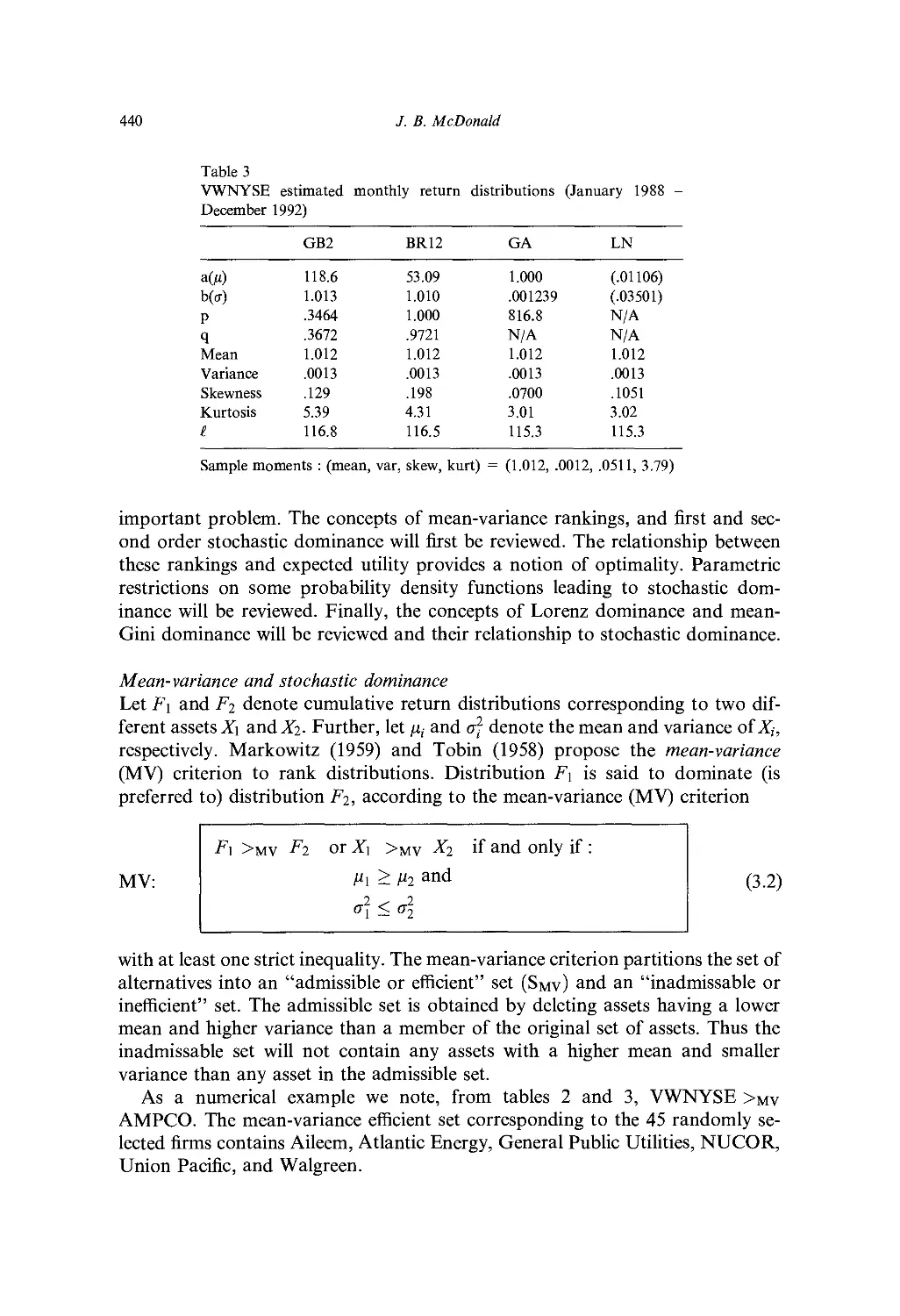

No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form

or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior

written permission of the publisher, Elsevier Science B.V . Copyright & Permissions Department. P.O.

Box 521, 1000 AM Amsterdam. The Netherlands.

Special regulations for readers in the U.S.A.-This publication has been registered wilh the Copyright

Clearance Center Inc. (CCC). 222 Rosewood Drive. Danvers, MA 0192.1. Information can be obtained

from the CCC about conditions under which photocopies of parts oi' this publication may be made in

the U.S.A. All other copyright questions, including photocopying outside the U.S.A., should be

referred to the Publishers unless otherwise specified.

No responsibility is assumed by the publisher for any injury and or damage to persons or property as a

matter of products liability, negligence or otherwise, or from any use of operation of any methods,

products, instructions or ideas contained in the material herein.

This book is printed on acid-free paper.

Printed in The Netherlands.

Table of contents

Preface v

Contributors xv

PART I. ASSET PRICING

Ch. I. Econometric Evaluation of Asset Pricing Models 1

W. E. Person and R. Jagannathan

1. Introduction 1

2. Cross-sectionaI regression methods for testing beta pricing models 3

3. Asset pricing models and stochastic discount factors 10

4. The generalized method of moments 15

5. Model diagnostics 23

6. Conclusions 28

Appendix 29

References 30

Ch. 2. Instrumental Variables Estimation of Conditional Beta

Pricing Models 35

C. R. Harvey and C. M. Kirby

1. Introduction 35

2. Single beta models 37

3. Models with multiple betas 44

4. Latent variables models 46

5. Generalized method of moments estimation 47

6. Closing remarks 58

References 58

VIII

Table of contents

Ch. 3. Semiparametric Mclhods for Asset Pricing Models 61

B. N. Lehmann

1. Introduction 61

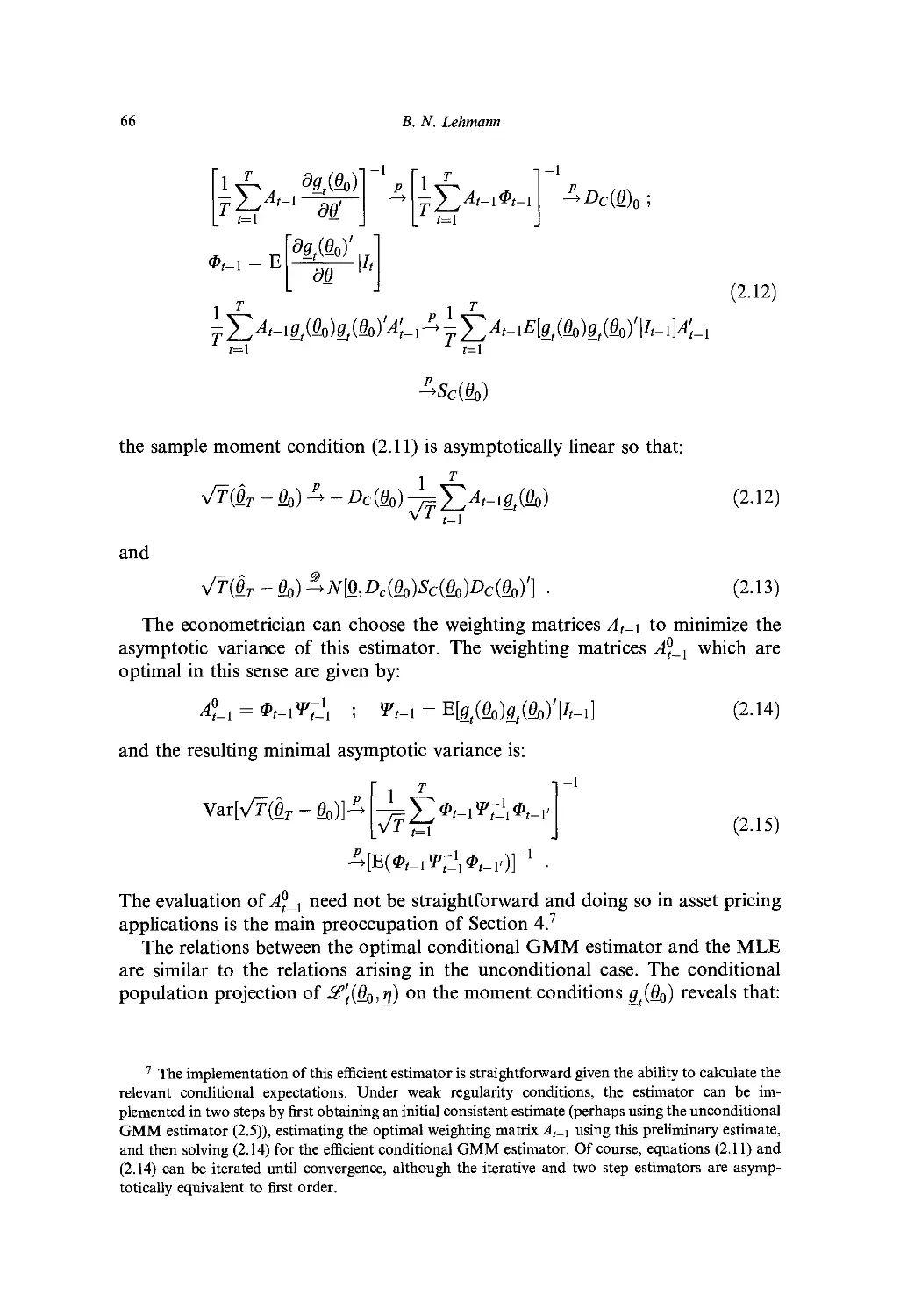

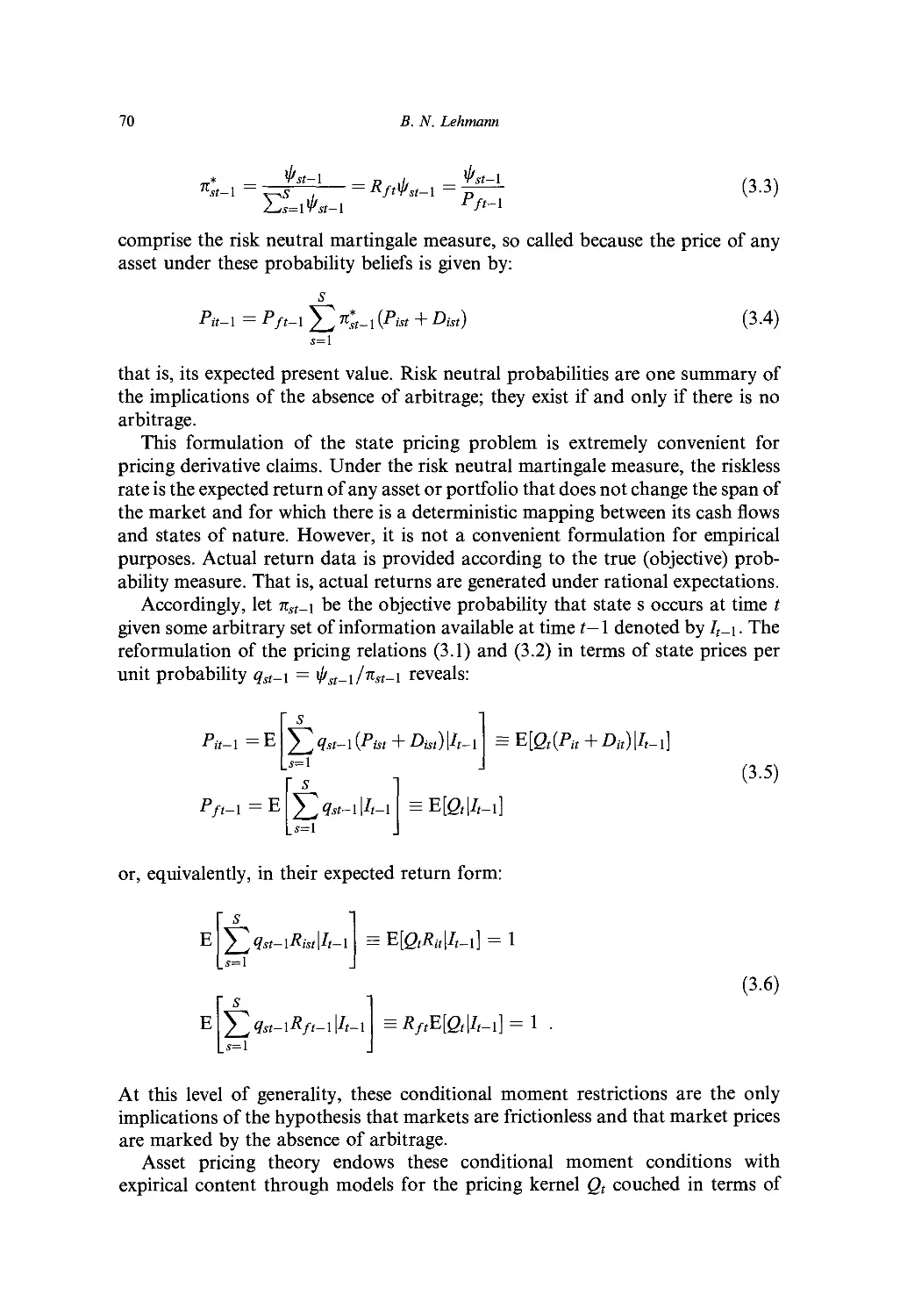

2. Some relevant aspects of the generalized method of moments (GMM) 62

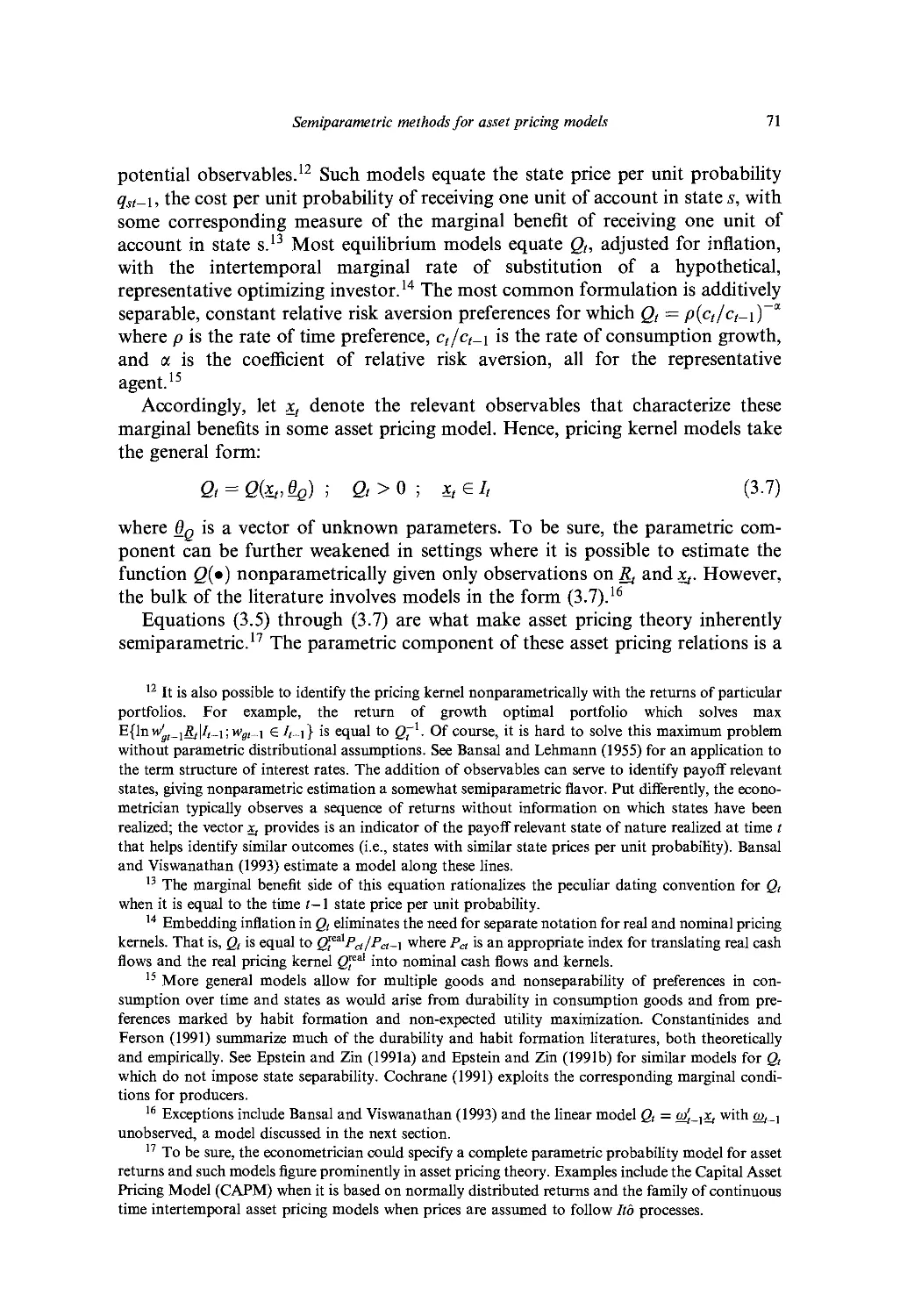

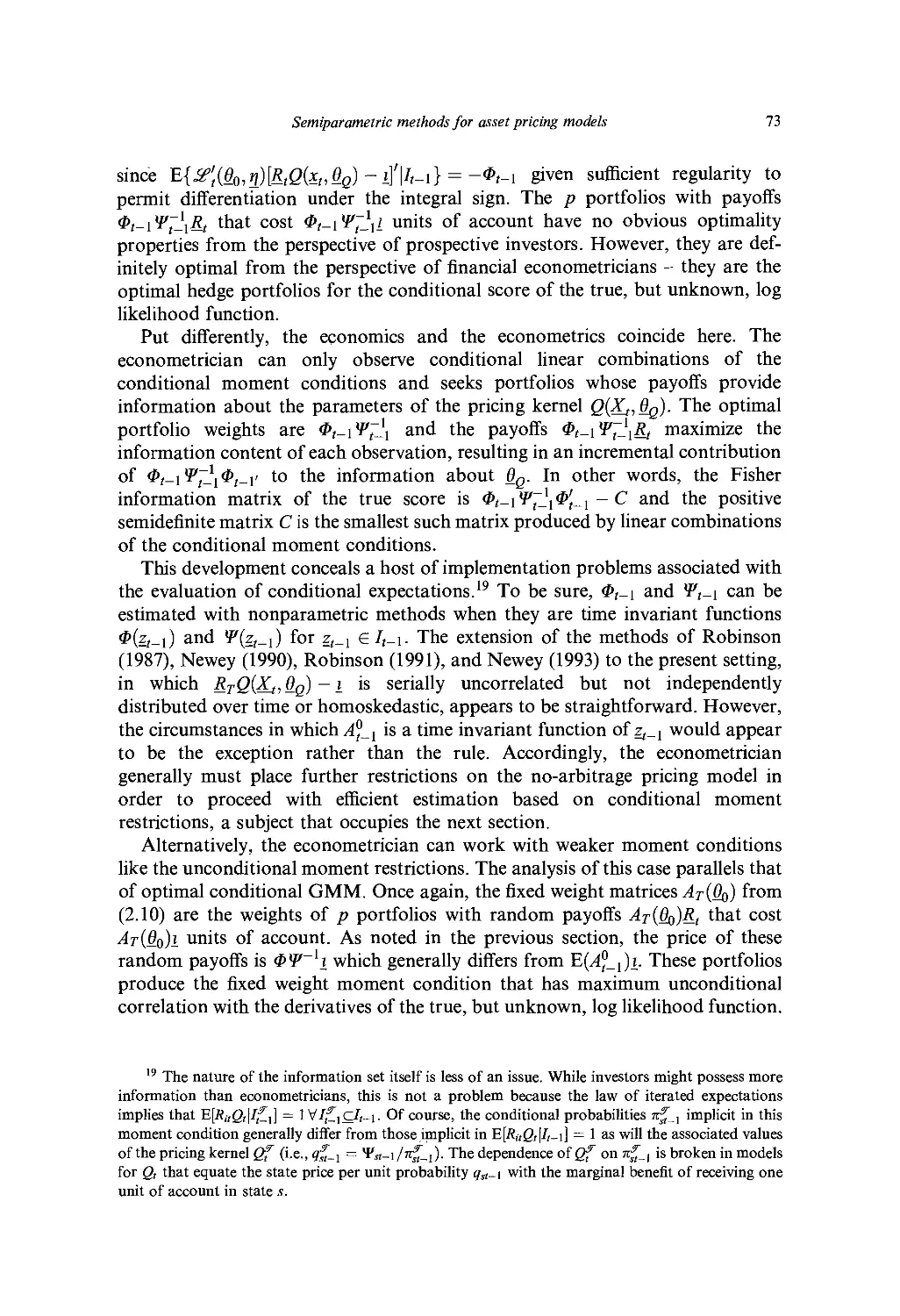

3. Asset pricing relations and their econometric implications 68

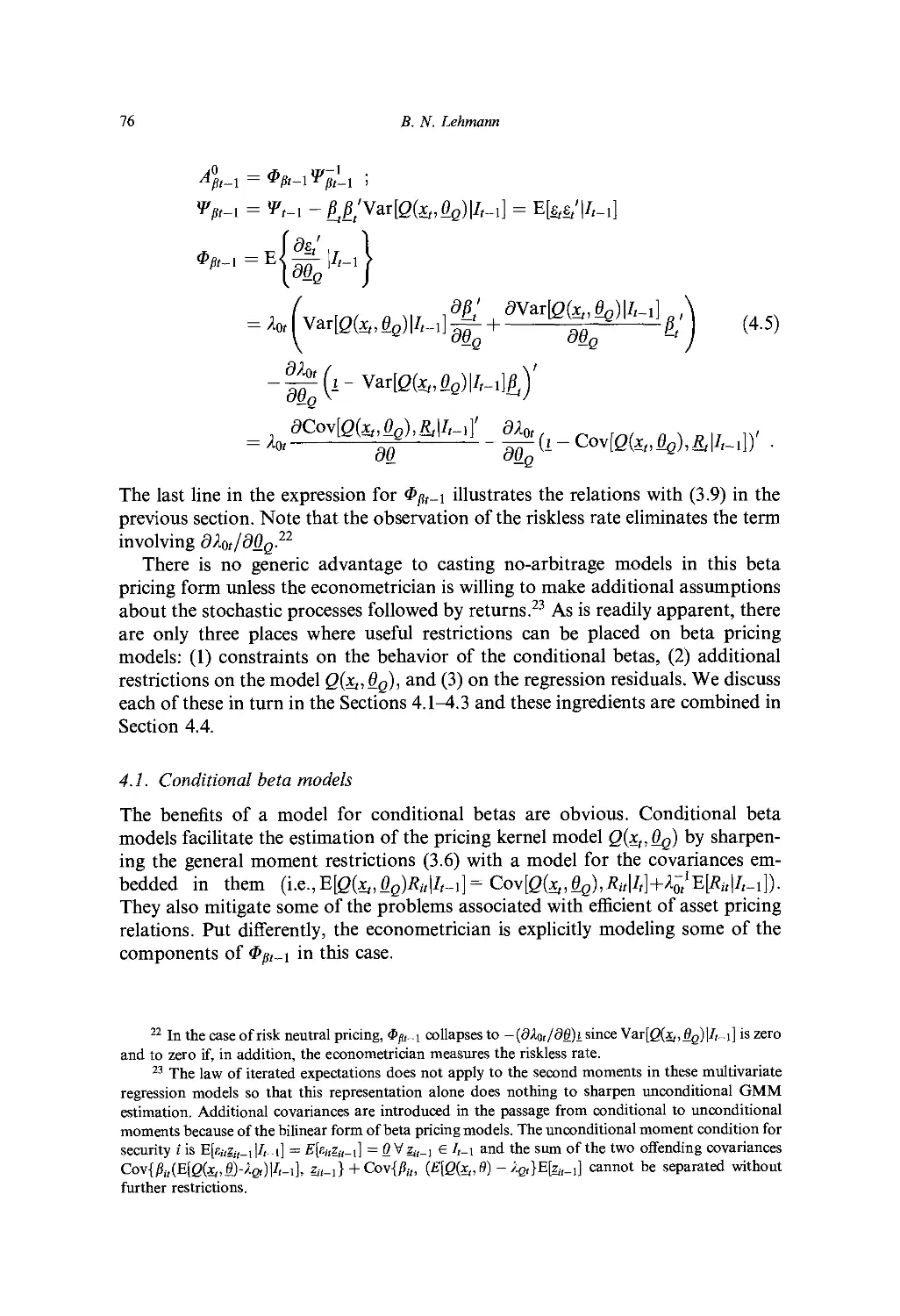

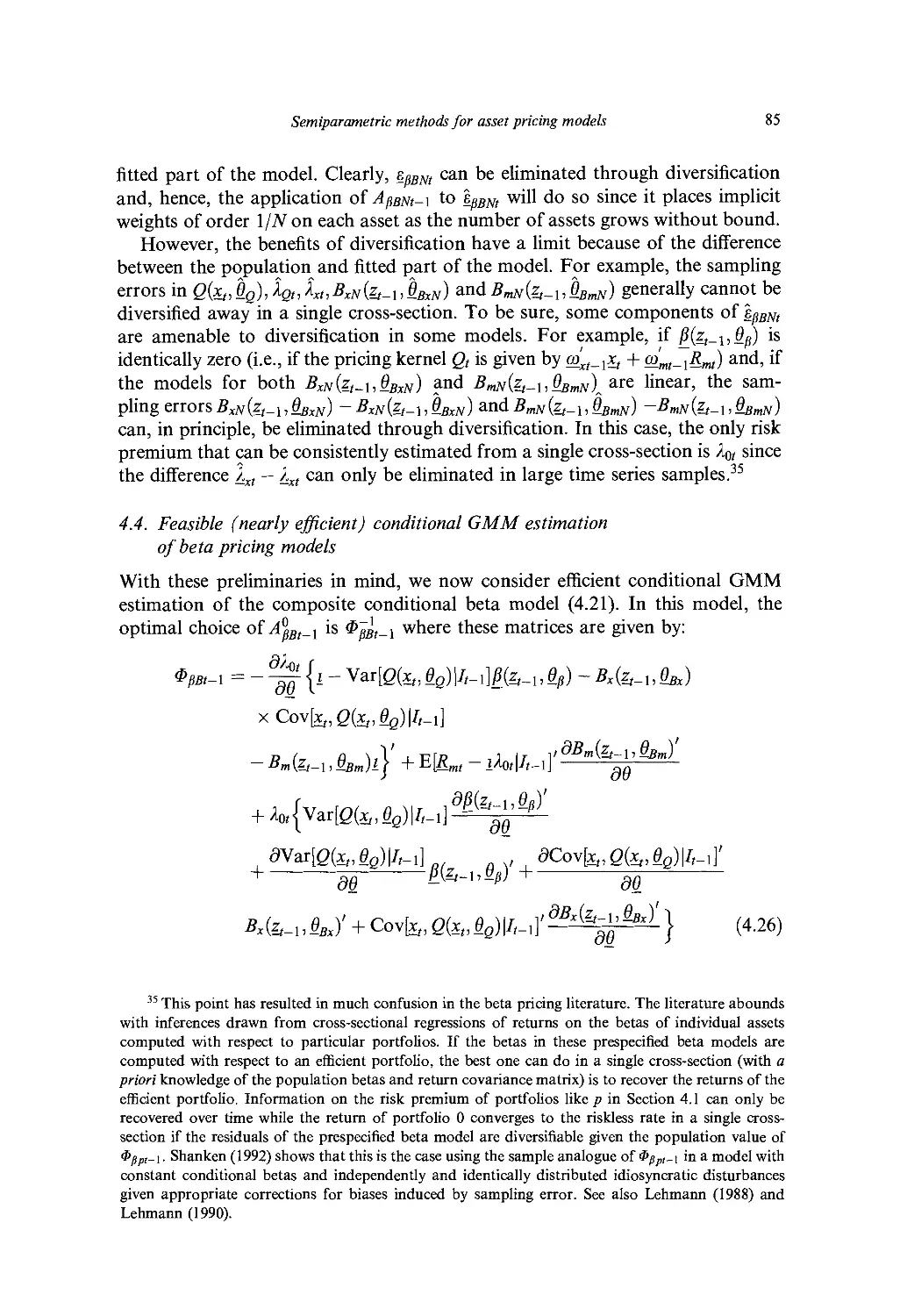

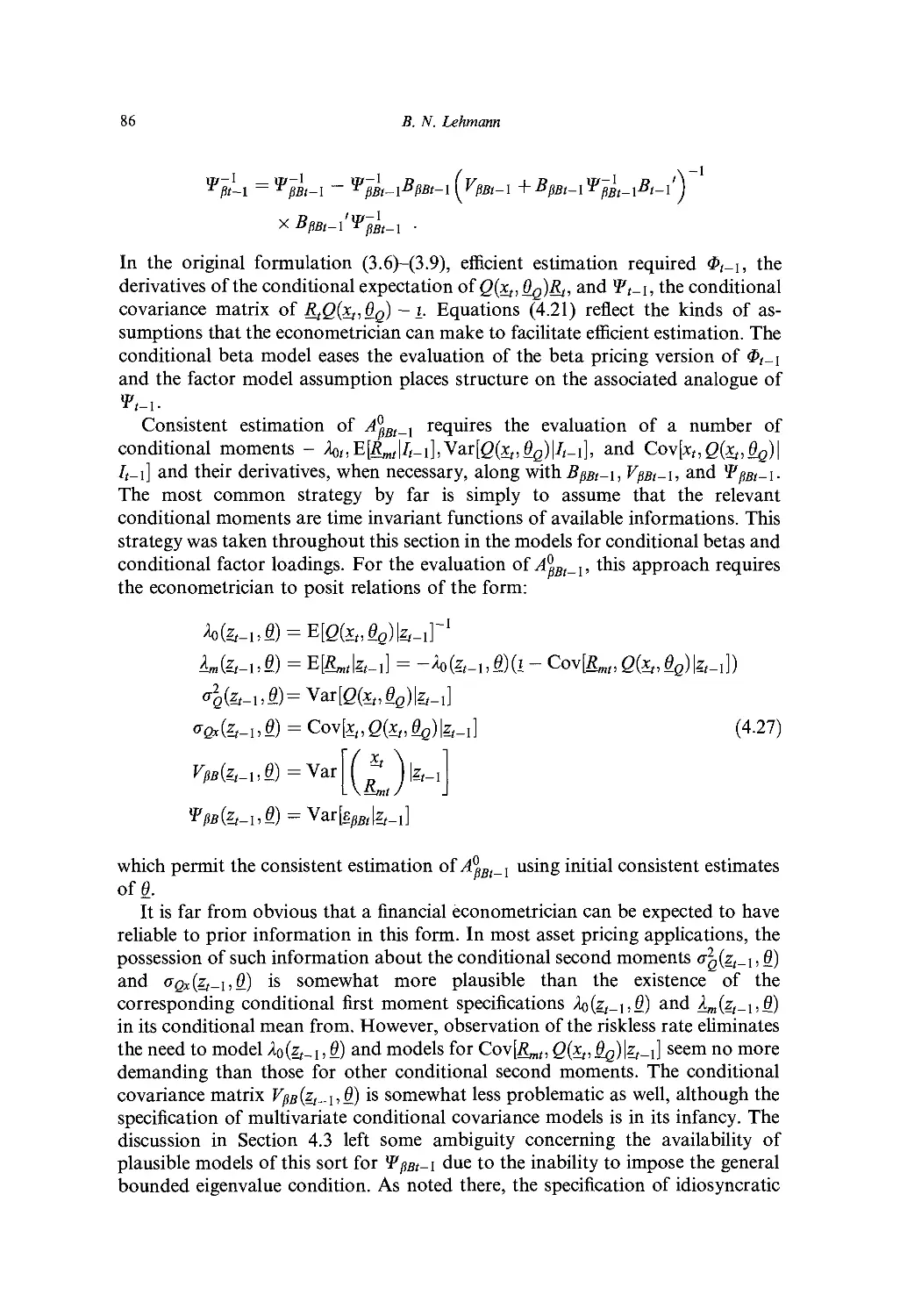

4. Efficiency gains within alternative beta pricing formulations 74

5. Concluding remarks 87

References 88

PART II. TERM STRUCTURES OF INTEREST RATES

Ch. 4. Modeling the Term Structure 91

A. R. Pagan, A. D. Hall, and V. Martin

1. Introduction 91

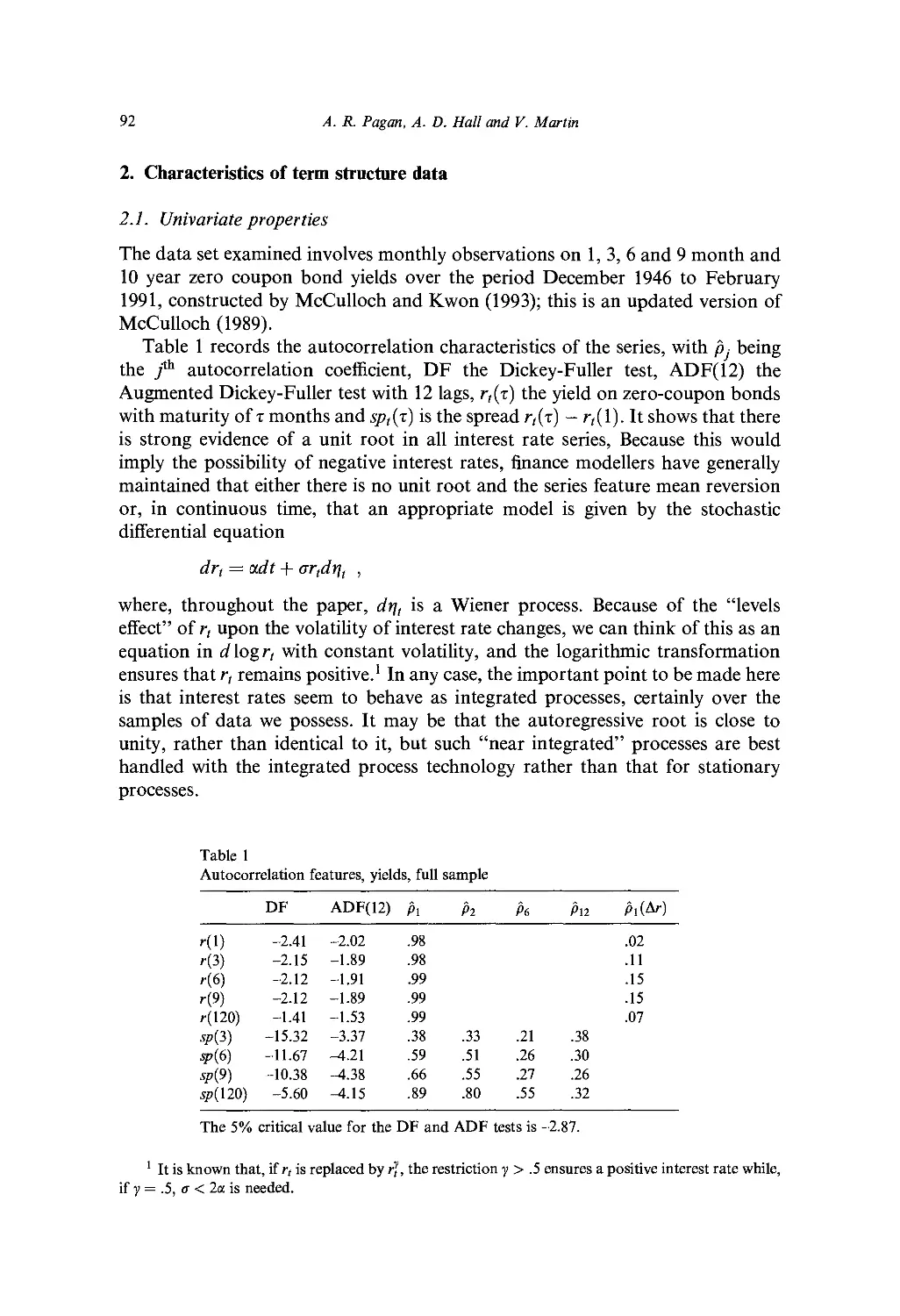

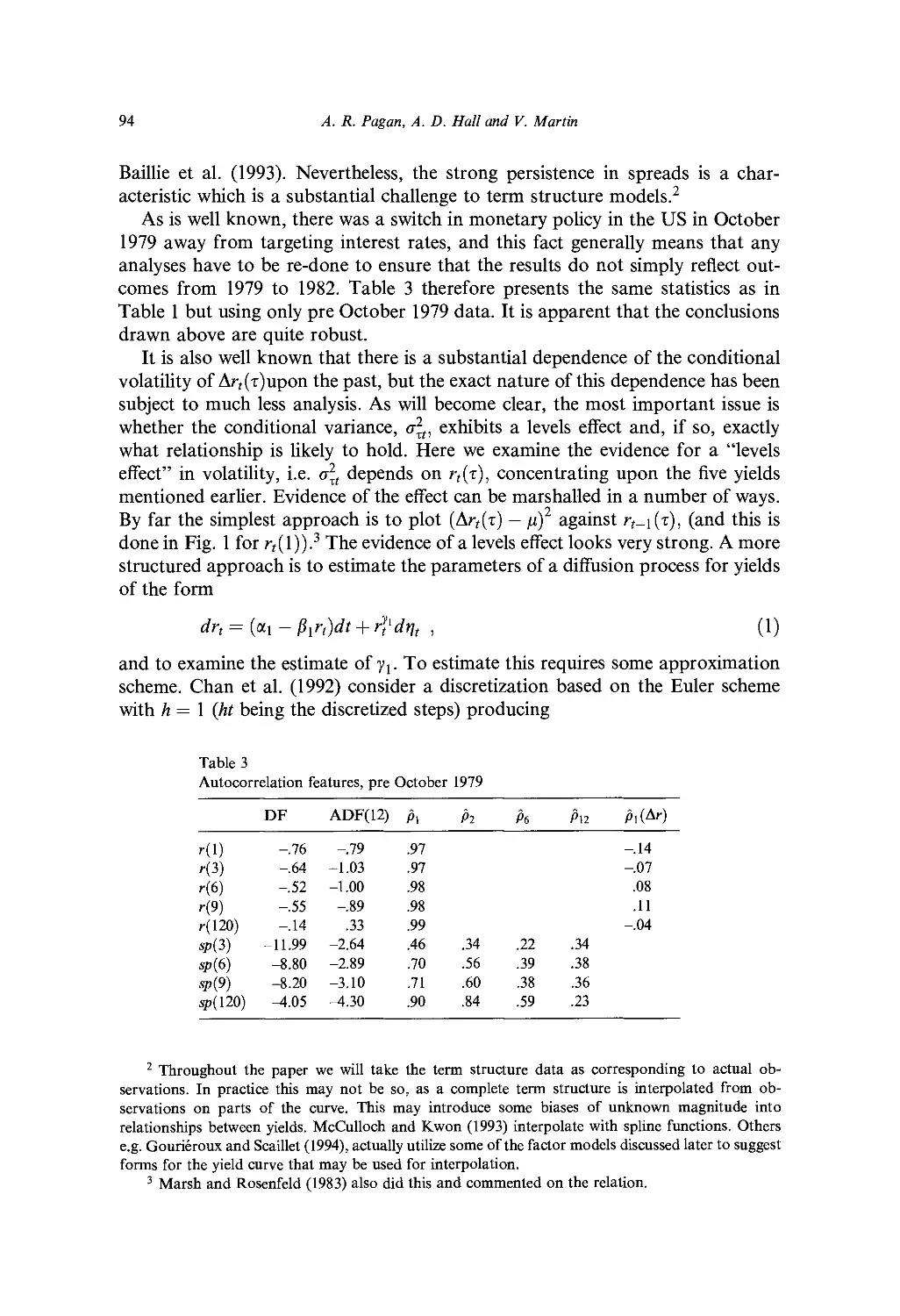

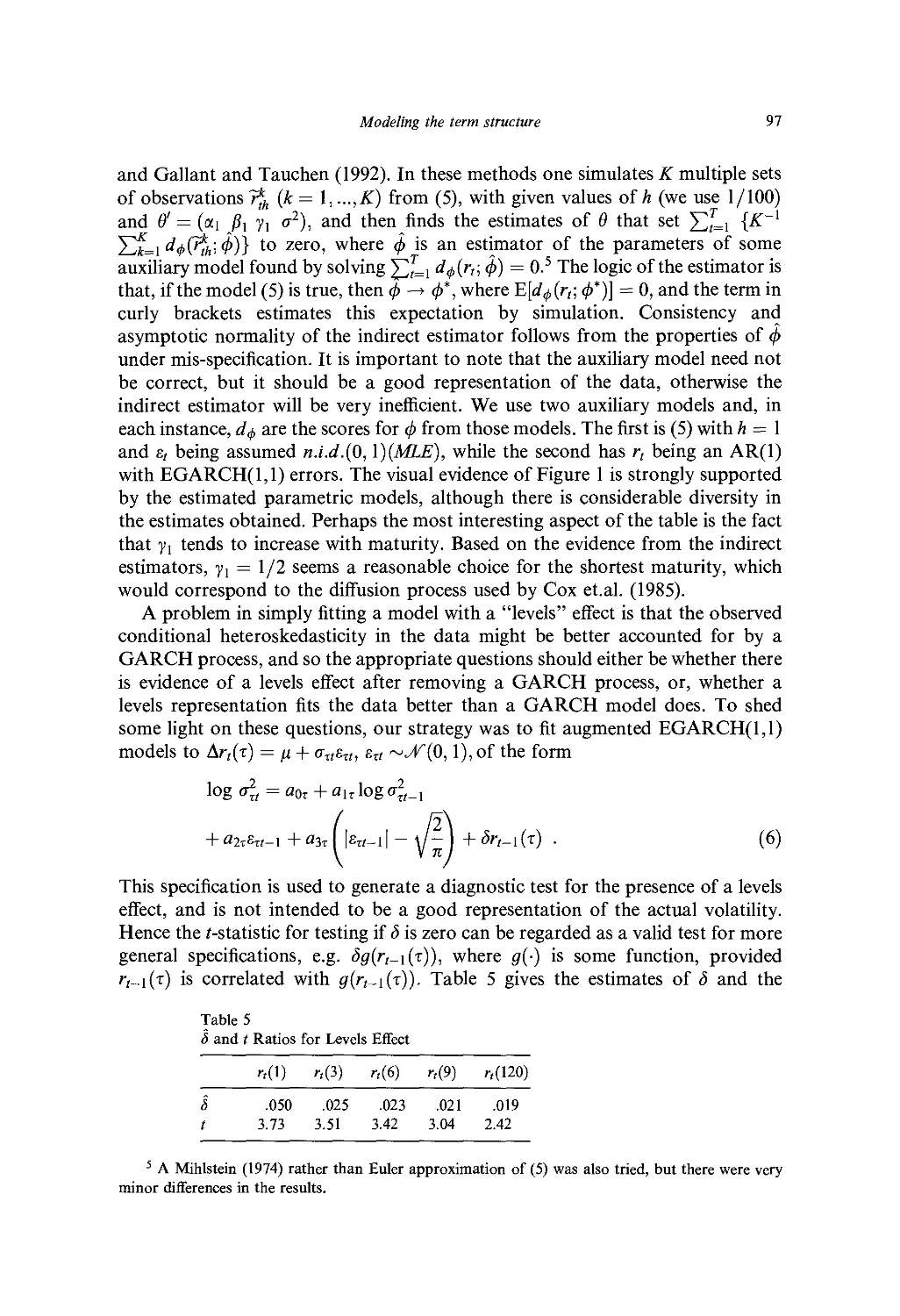

2. Characteristics of term structure data 92

3. Models of the term structure 104

4. Conclusion 116

References 116

PART III. VOLATILITY

Ch. 5. Stochastic Volatility 119

E. Ghysels, A. C. Harvey and E. Renault

1. Introduction 119

2. Volatility in financial markets 120

3. Discrete lime models 139

4. Continuous time models 153

5. Statistical inference 167

6. Conclusions 182

References 183

Ch. 6. Slock Price Volatility 193

£ E. LeRoy

1. Introduction 193

2. Statistical issues 195

3. Dividend-smoothing and non stationarity 198

4. Bubbles 201

5. Time-varying discount rates 203

Table of contents

ix

6. Interpretation 204

7. Conclusion 206

References 207

Ch. 7. GARCH Models of Volatility 209

1. Introduction 209

2. GARCH models 210

3. Statistical inference 224

4. Statistical properties 229

5. Conclusions 234

References 235

PART IV. PREDICTION

Ch. 8. Forecast Evaluation and Combination 241

F. X. Diebold and J. A. Lopez

1. Evaluating a single forecast 242

2. Comparing the accuracy of multiple forecasts 247

3. Combining forecasts 252

4. Special topics in evaluating economic and financial forecasts 256

5. Concluding remarks 264

References 265

Ch. 9. Predictable Components in Stock Returns 269

G. Kaul

I Introduction 269

2. Why predictability 270

3. Predictability of stock returns: The methodology 273

4. Power comparisons 287

5. Conclusion 291

References 292

Ch. 10. Interest Rate Spreads as Predictors of Business Cycles 297

K. Lahiri and 7. G. Wang

1. Introduction 297

2. Hamilton's non-linear filter 299

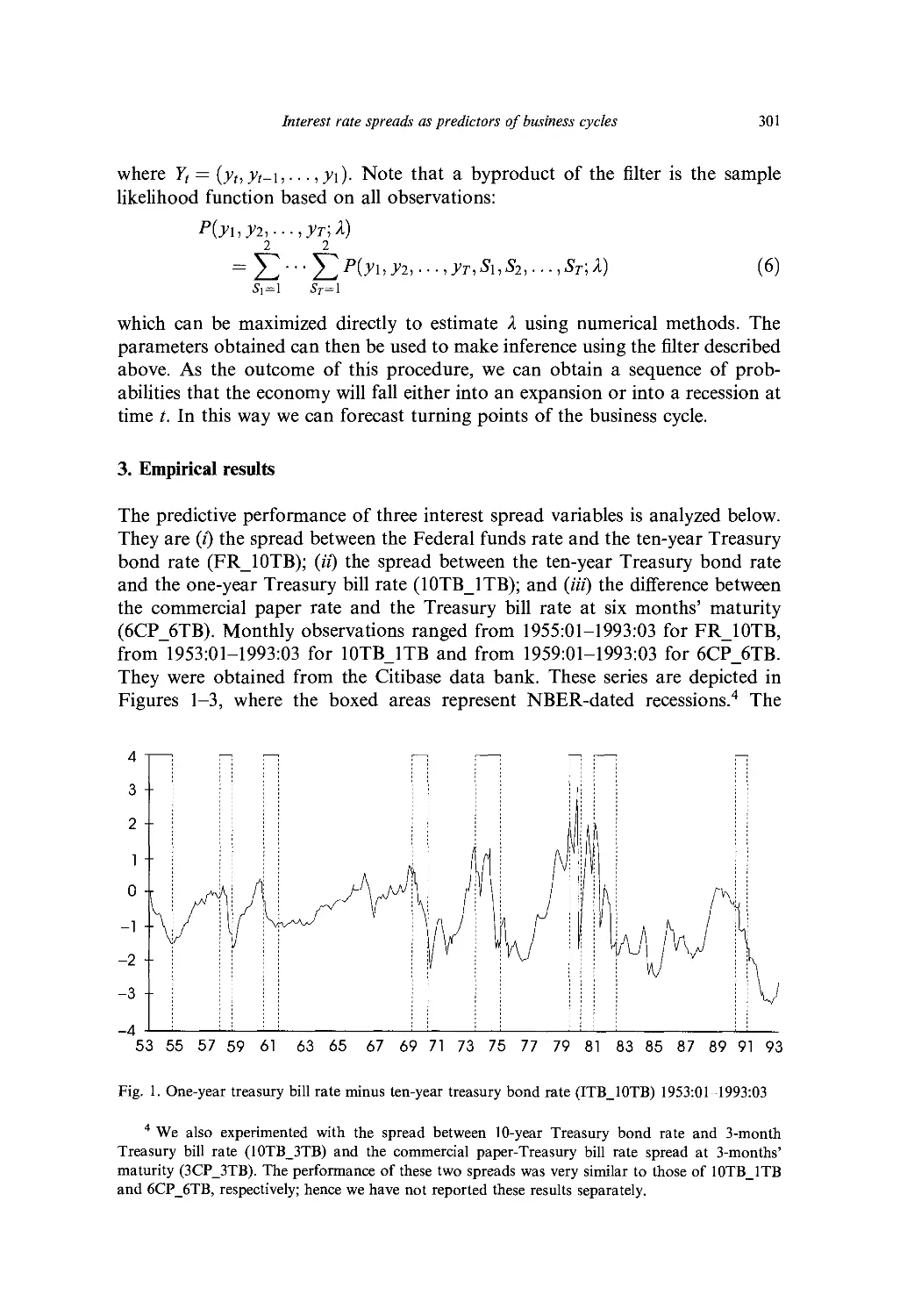

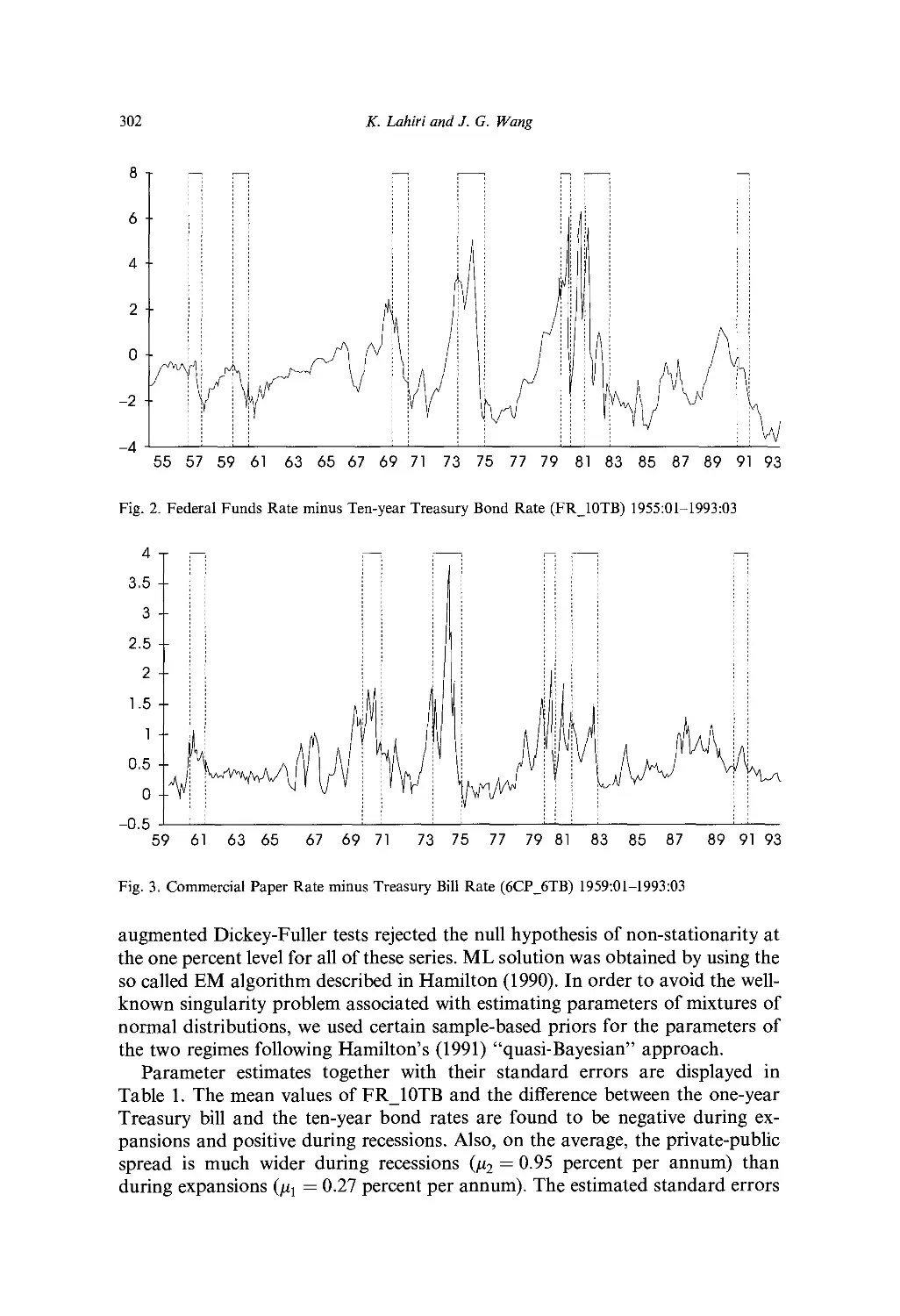

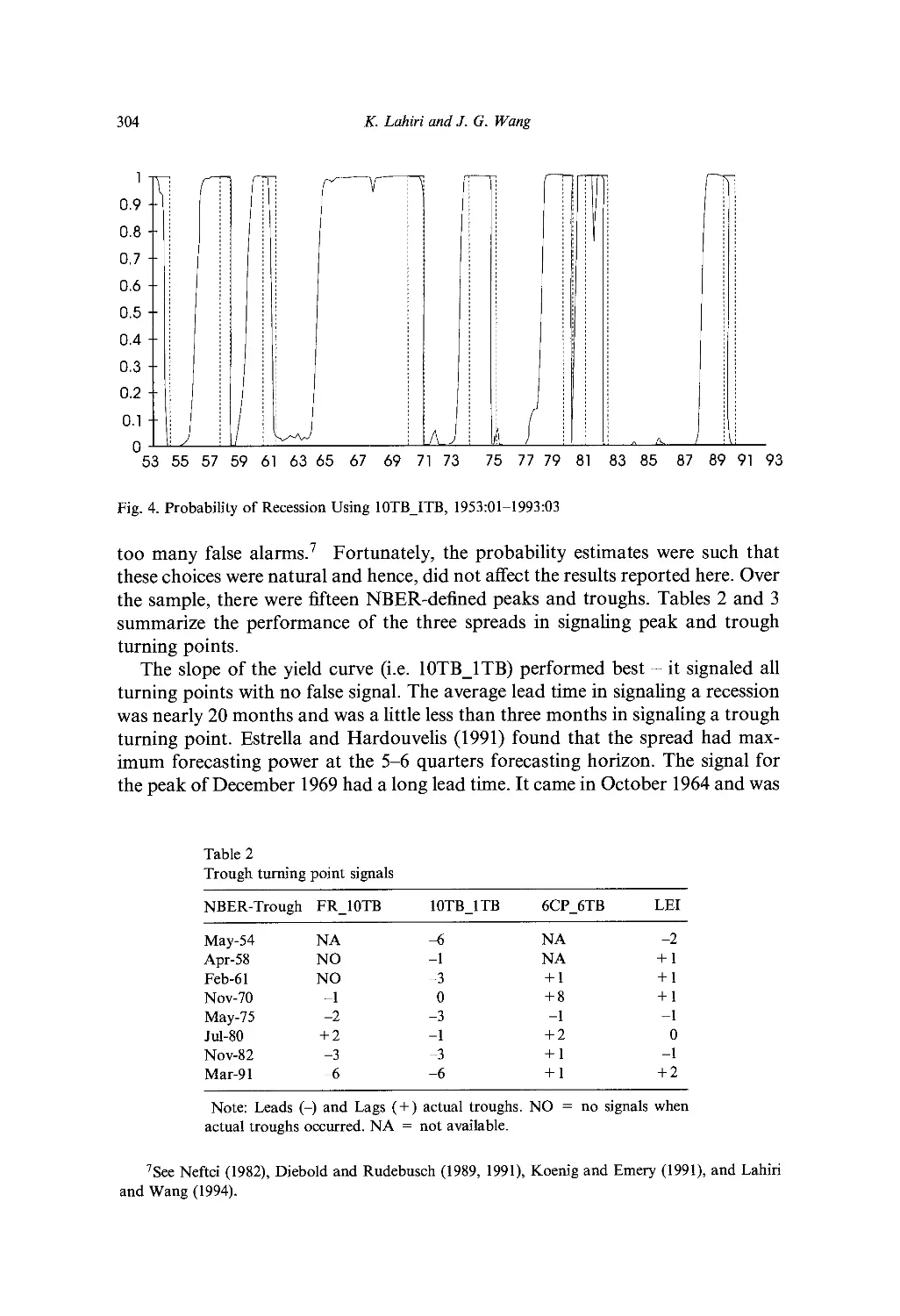

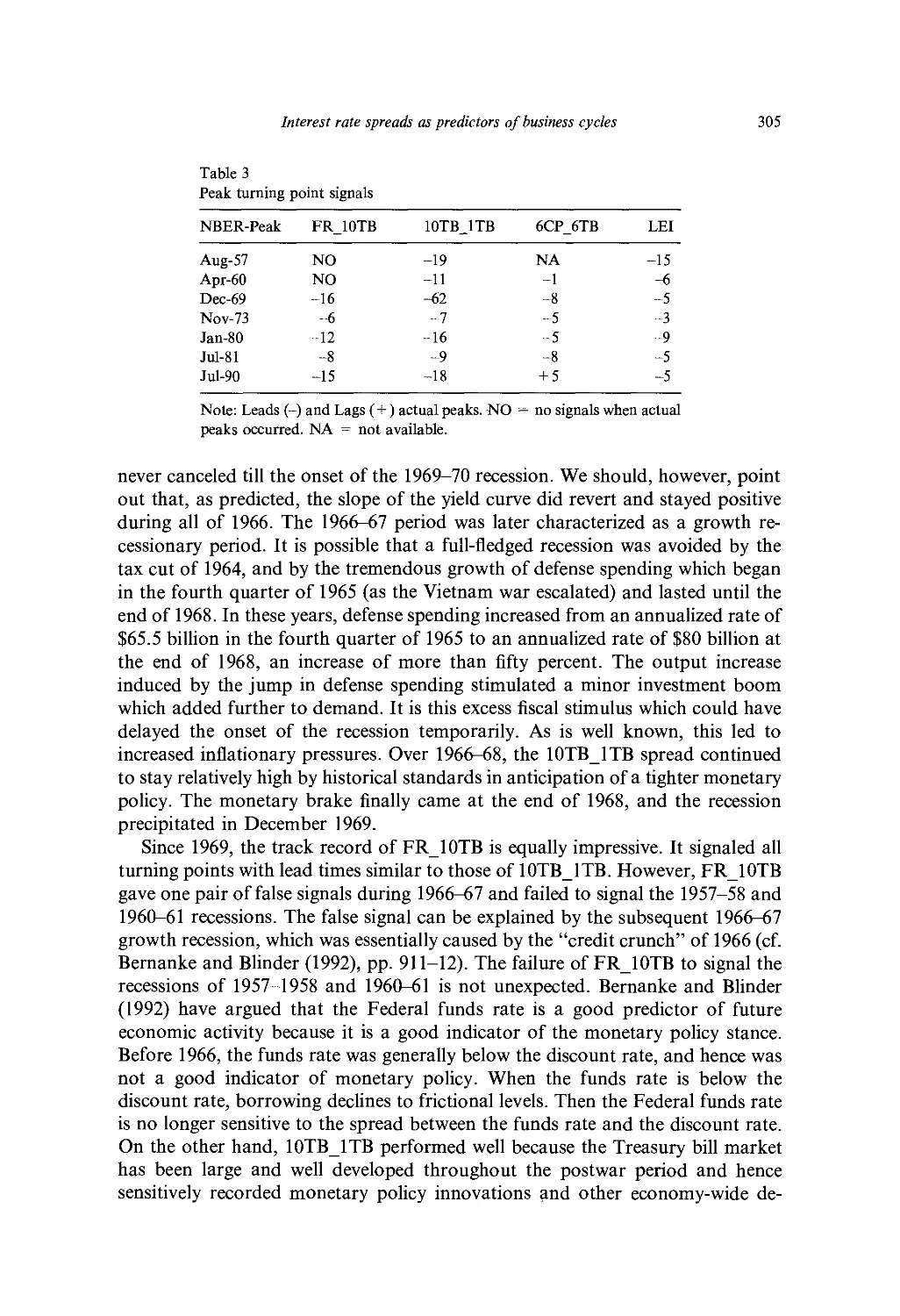

3. Empirical results 301

4. Implications for the monetary transmission mechanism 308

X

Table of contents

5. Conclusion 311

Acknowledgement 313

References 313

PART V. ALTERNATIVE PROBABILISTIC MODELS

Ch. 11. Nonlinear Time Series, Complexity Theory, and Finance 317

W. A. Brock and P. J. F. de Lima

1. Introduction 317

2. Nonlinearity in stock returns 326

3. Long memory in stock returns 337

4. Asymmetric information structural models and stylized features of stock returns 349

5. Concluding remarks 353

References 353

Ch. 12. Count Data Models for Financial Data 363

A. C. Cameron and P. K. Trivedi

1. Introduction 363

2. Stochastic process models for count and duration data 366

3. Econometric models of counts 371

4. Concluding remarks 388

Acknowledgement 389

References 390

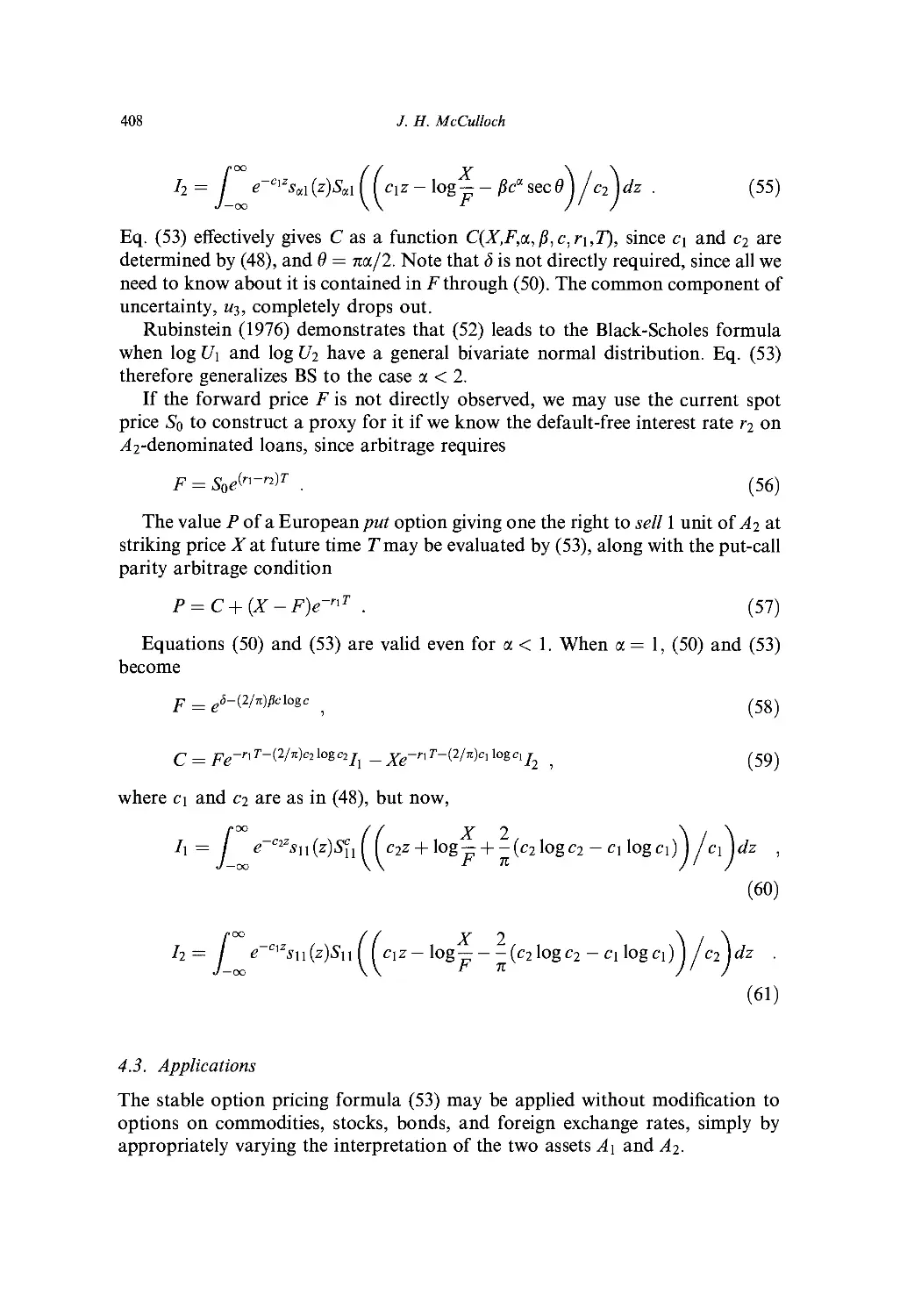

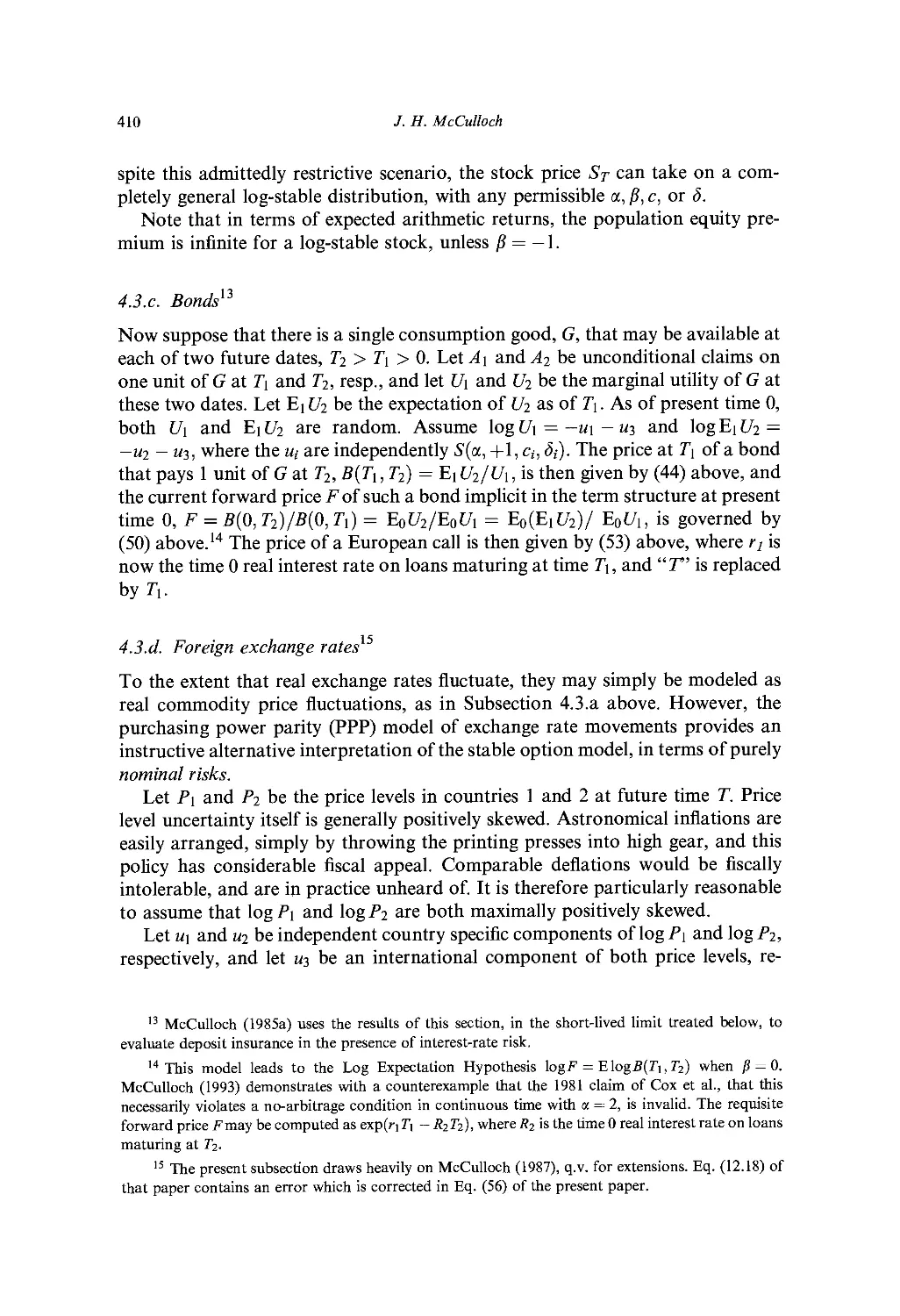

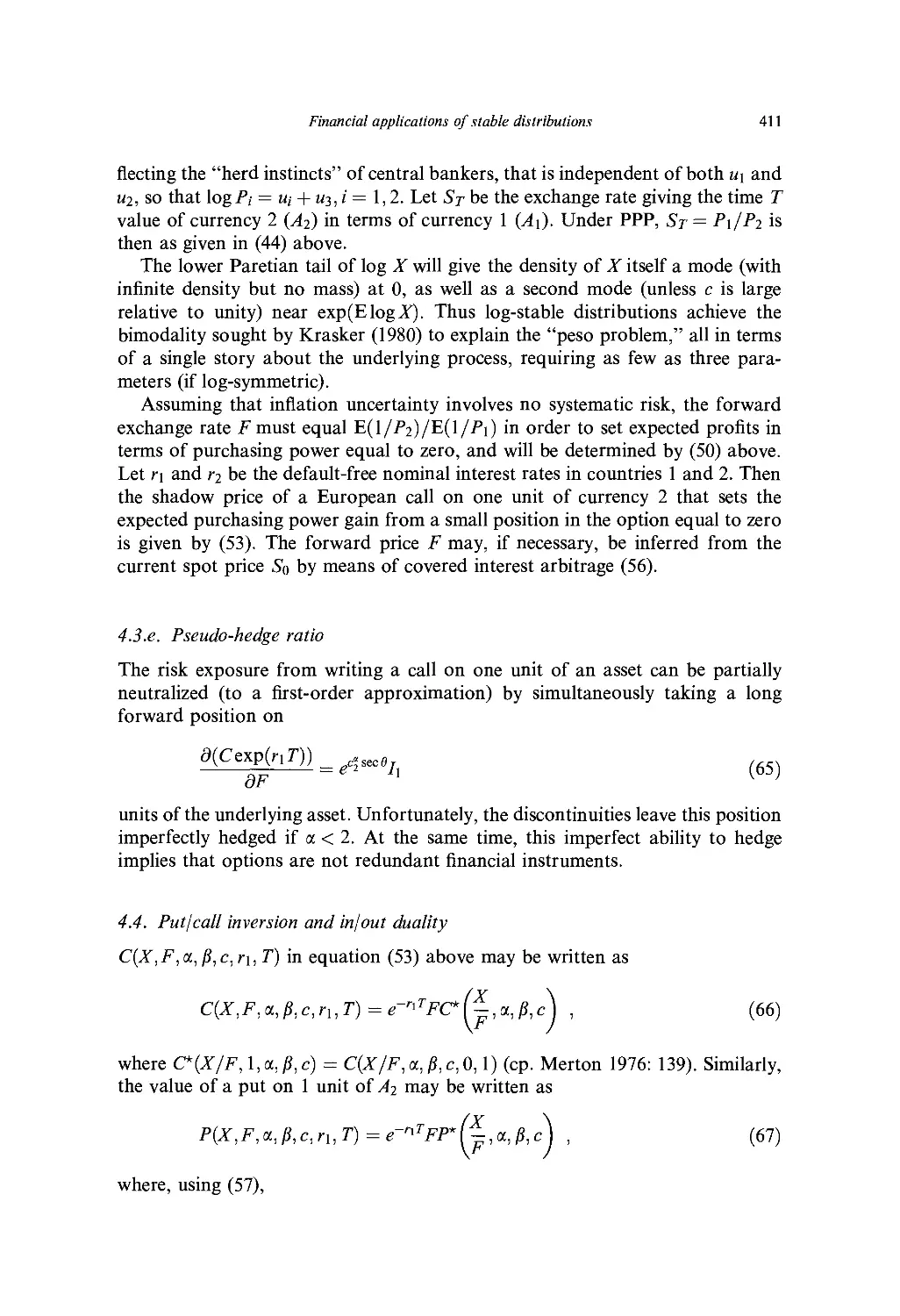

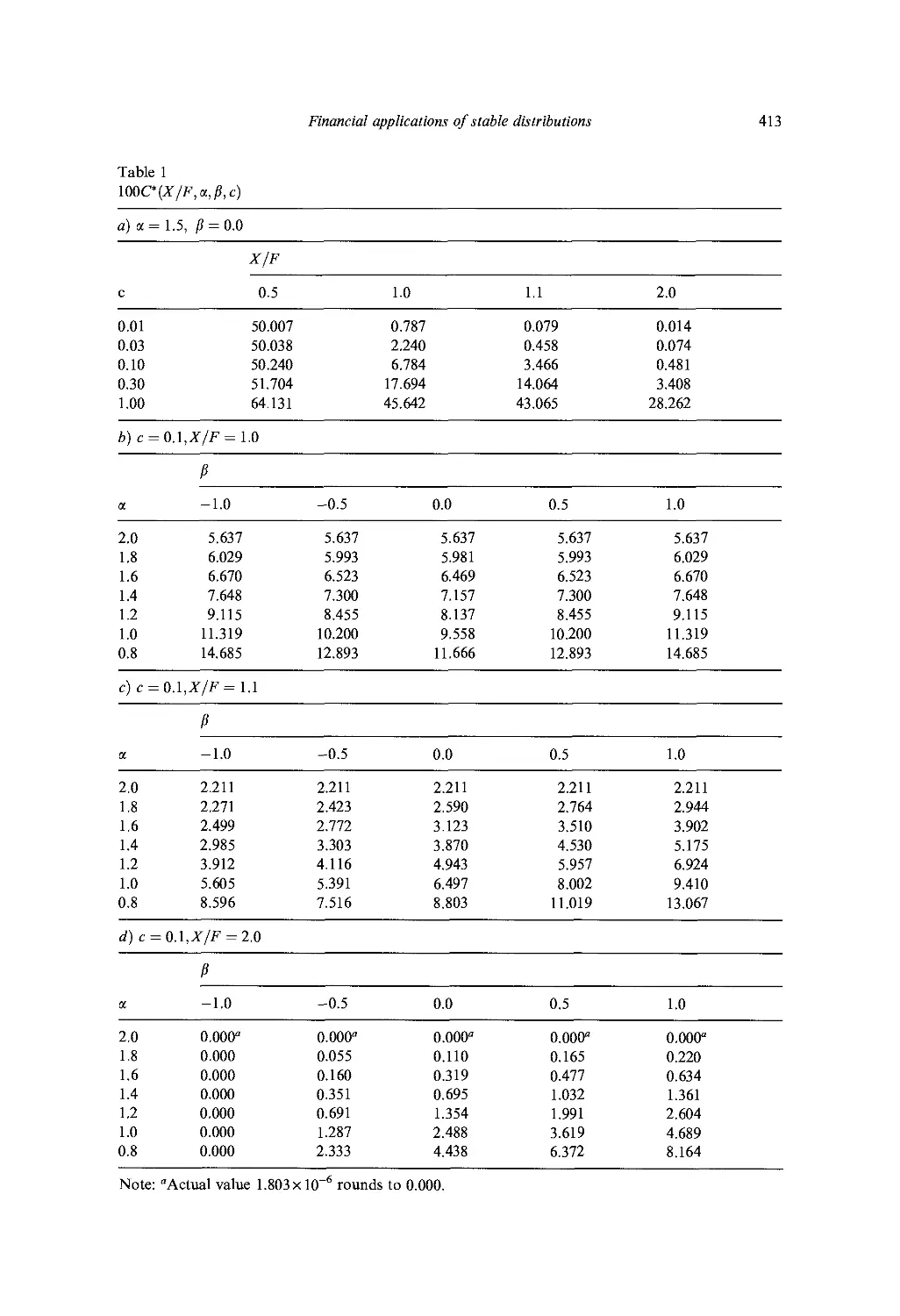

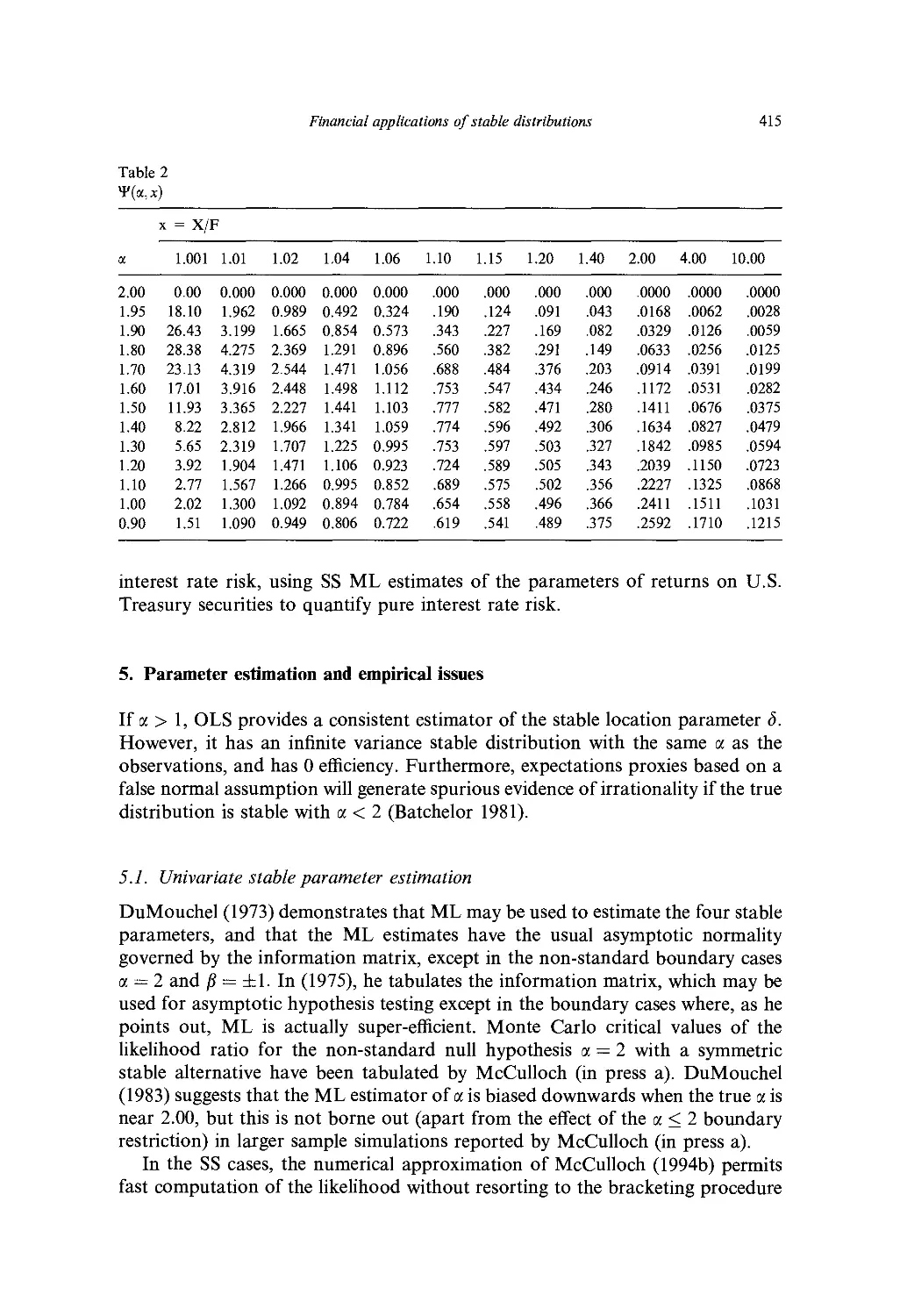

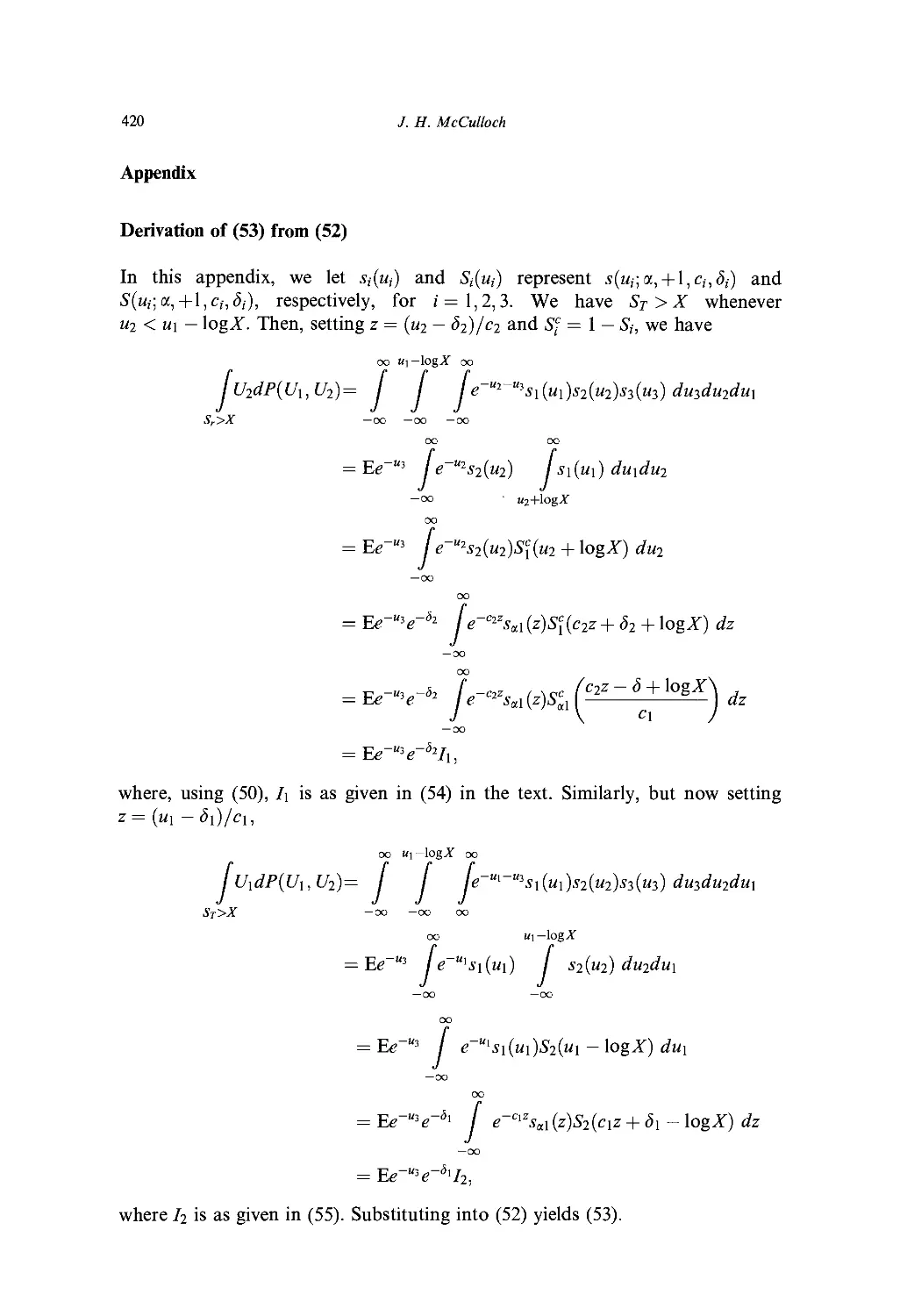

Ch. 13. Financial Applications of Stable Distributions 393

/. //. McCulloch

1. Introduction 393

2. Basic properties of stable distributions 394

3. Stable portfolio theory 401

4. Log-stable option pricing 404

5. Parameter estimation and empirical issues 415

Appendix 420

Acknowledgements 421

References 421

Ch. 14. Probability Distributions for Financial Models 427

./. B. McDonald

1. Introduction 427

2. Alternative models 428

Table of contents

xi

Applicalions in finance 437

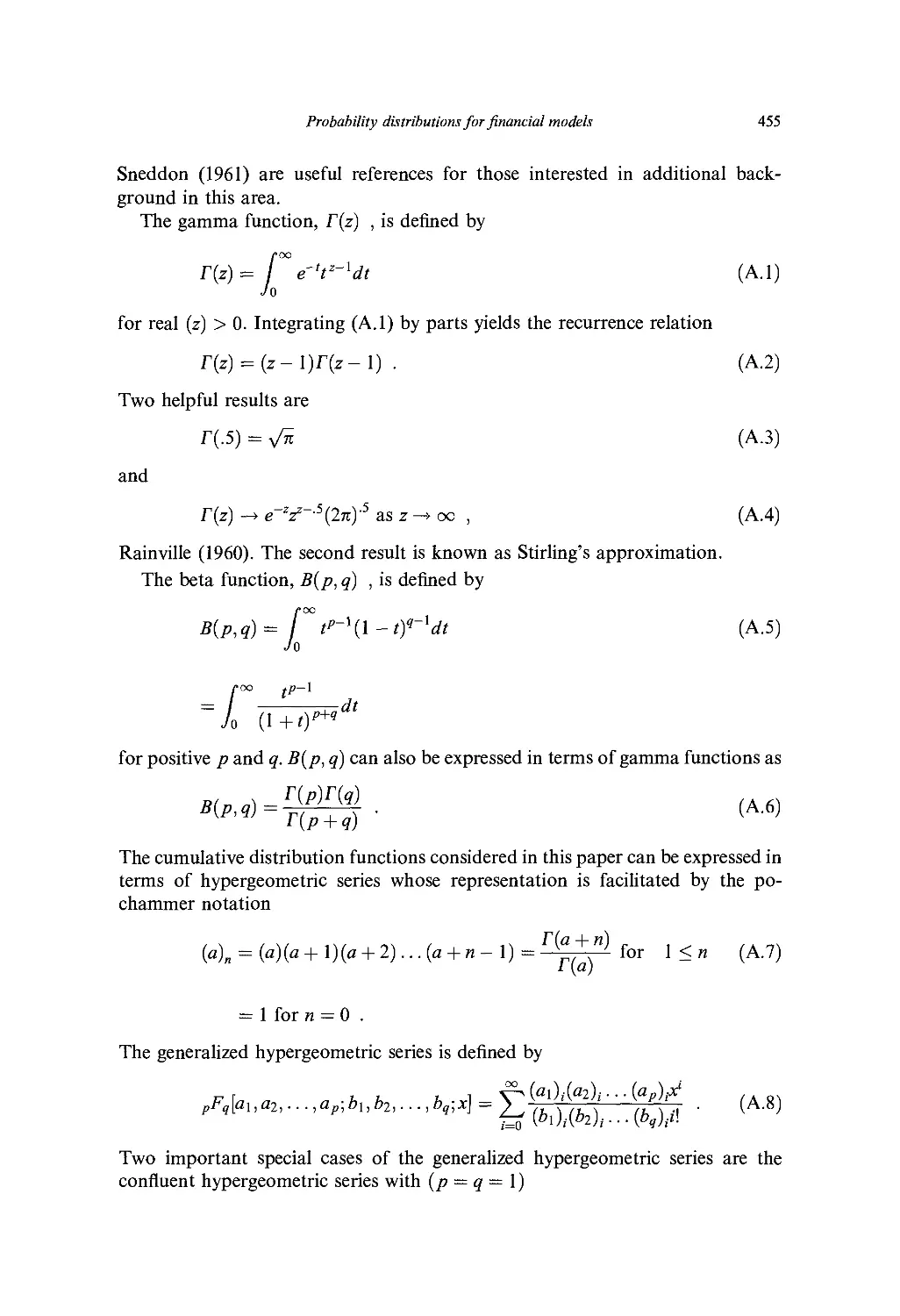

Appendix A: Special functions 454

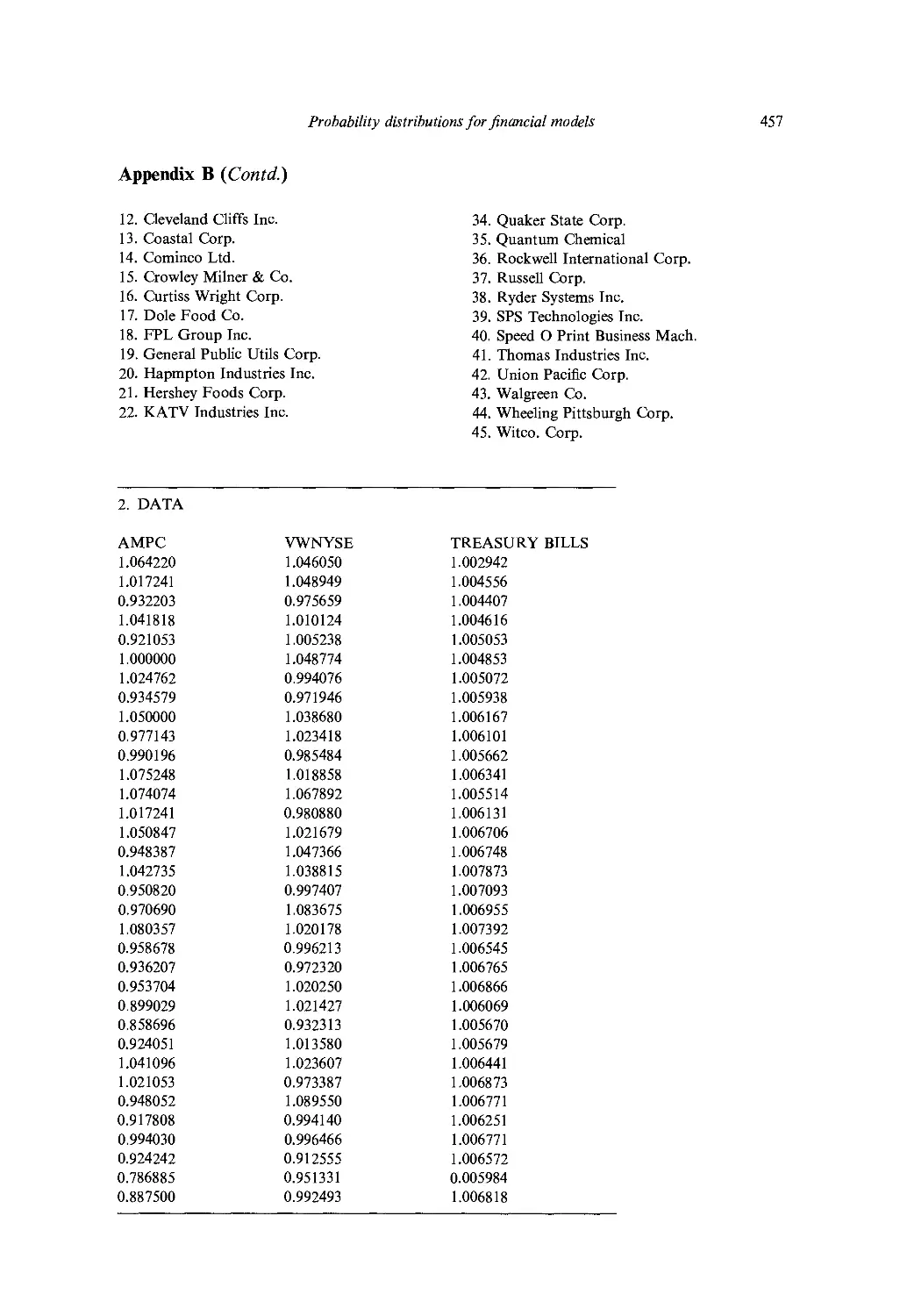

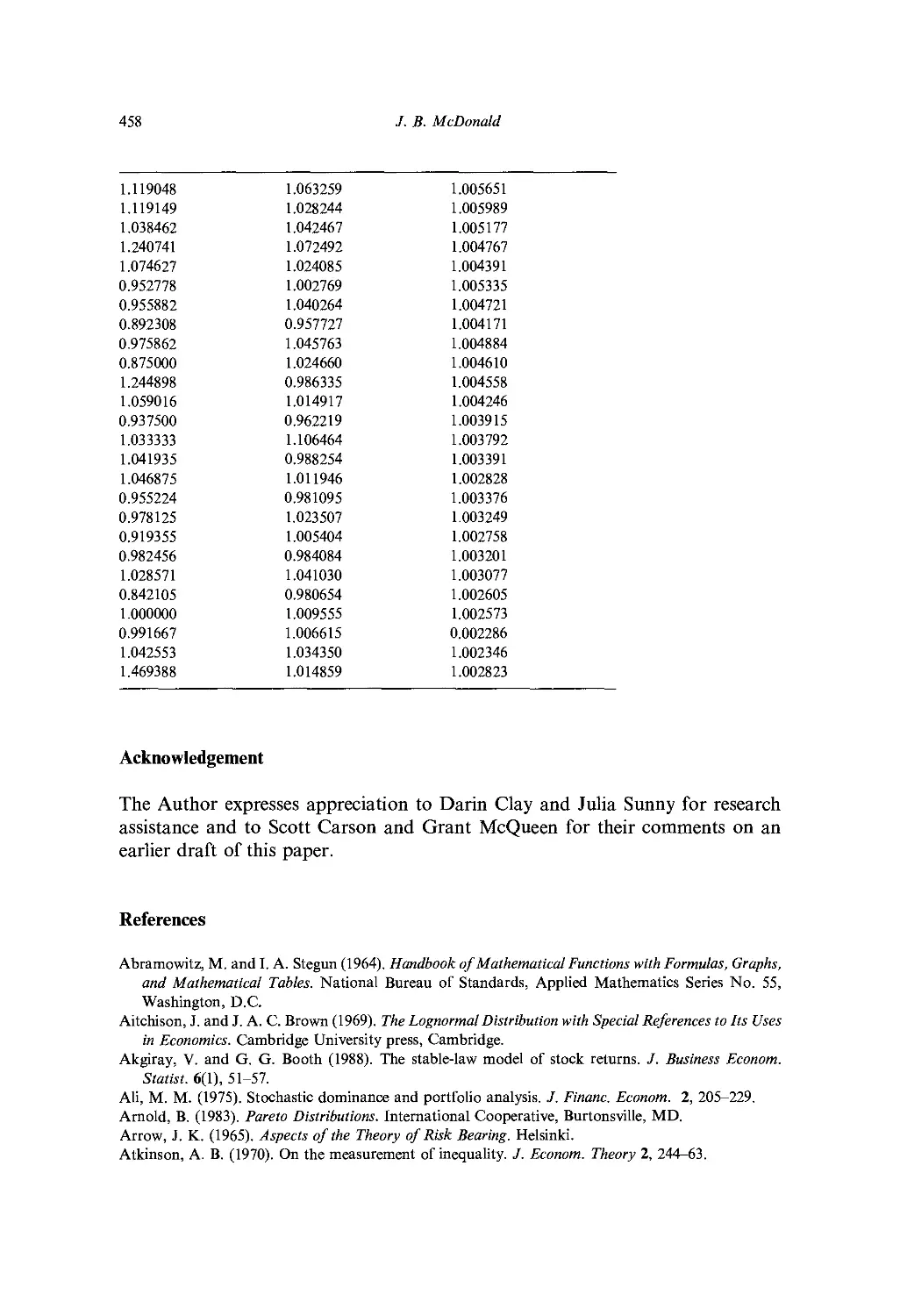

Appendix B: Data 456

Acknowledgement 458

References 45H

PART VI. APPLICATIONS OF SPECIALIZED STATISTICAL METHODS

Ch. 15. Bootstrap Based Tests in Financial Models 463

(7. S, Maddala and H. Li

I. Introduction 463

2

3

4

5

6

7

8

A review of different bootstrap methods 464

Issues in the generation of bootstrap samples and the test statistics 466

A critique of the application of bootstrap methods in financial models 469

Bootstrap methods for model selection using trading rules 476

Bootstrap methods in long-horizon regressions 478

Impulse response analysis in nonlinear models 483

Conclusions 484

References 485

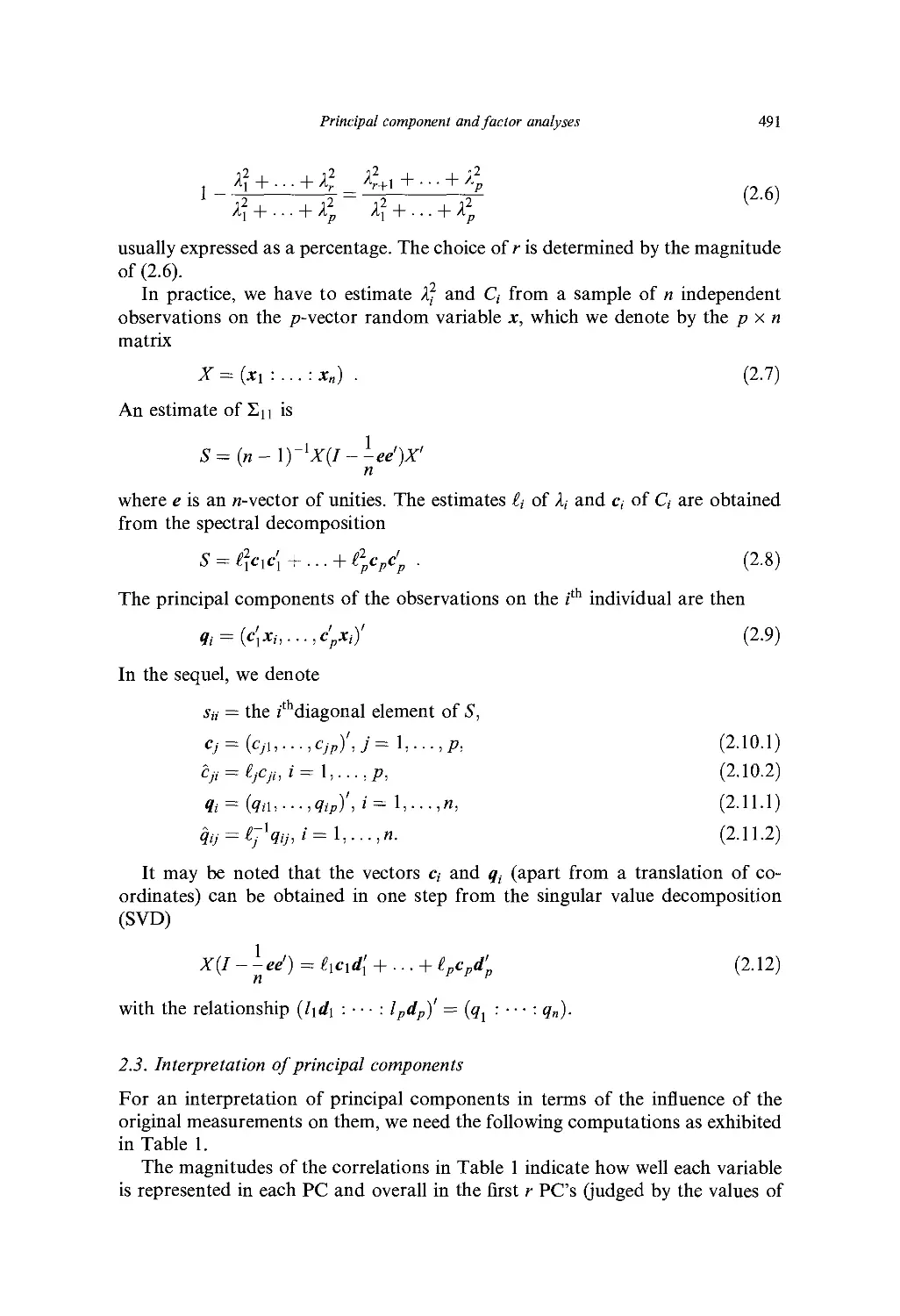

Ch 16. Principal Components and Factor Analyses 489

C, R. Rao

1. Introduction 489

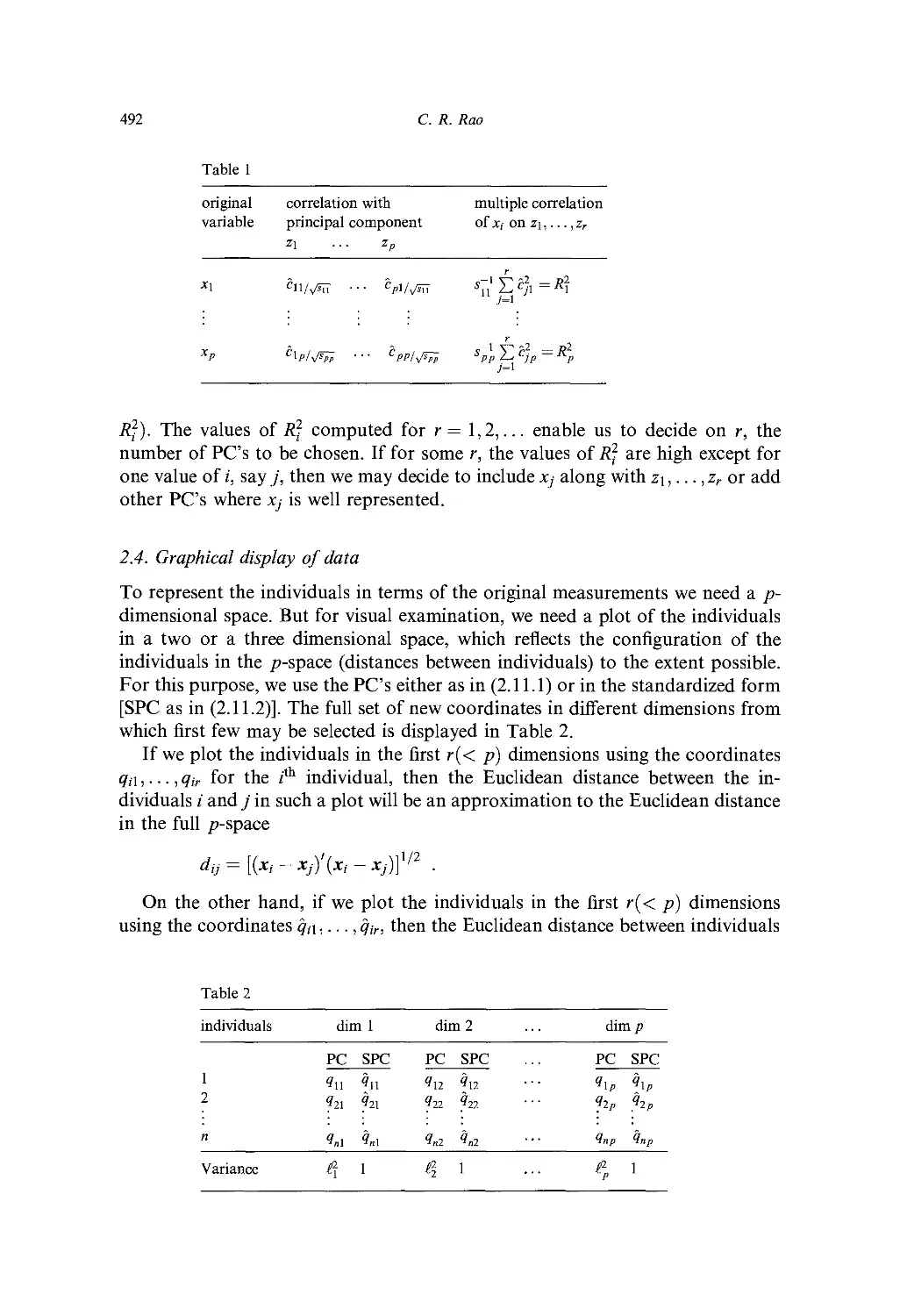

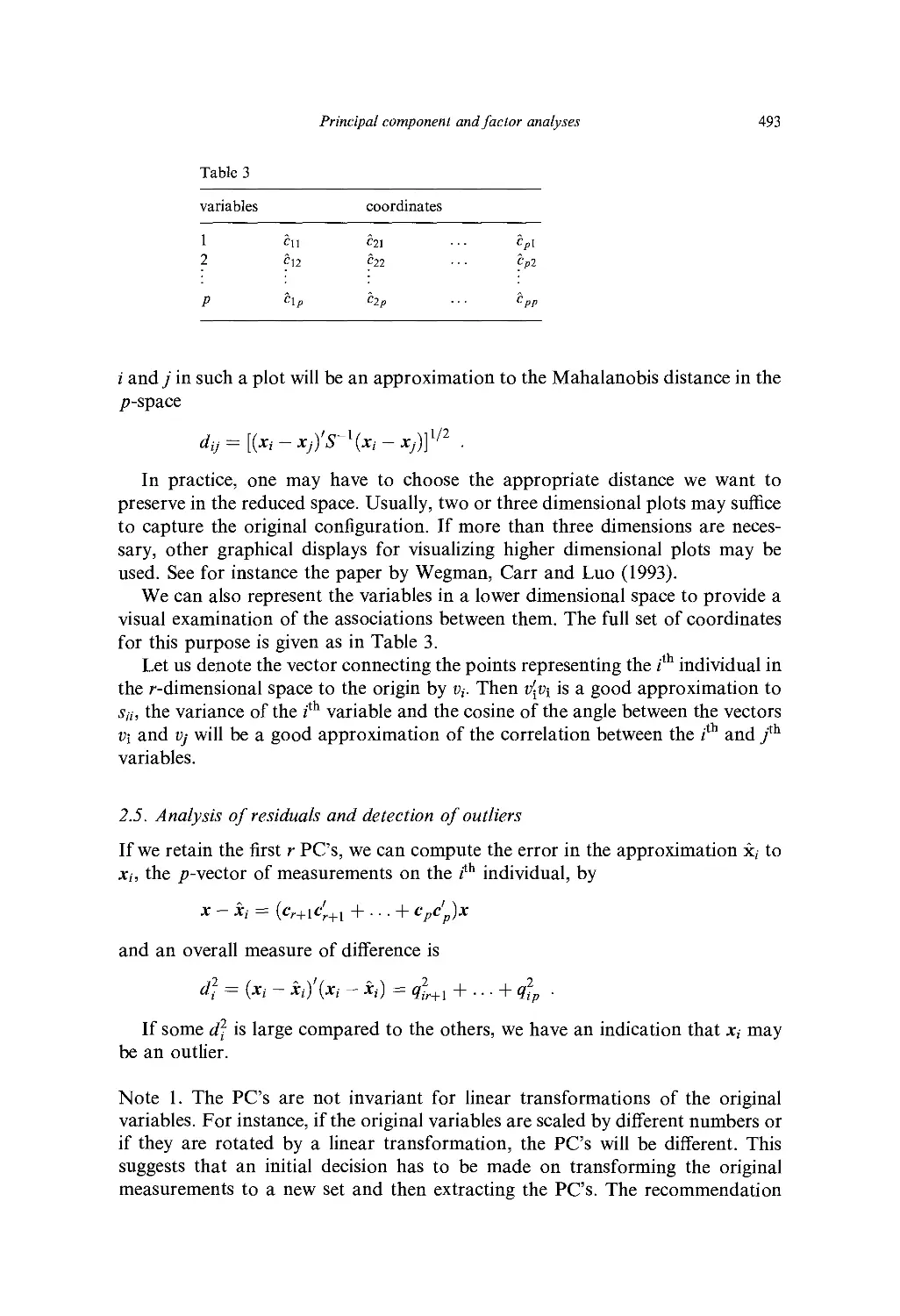

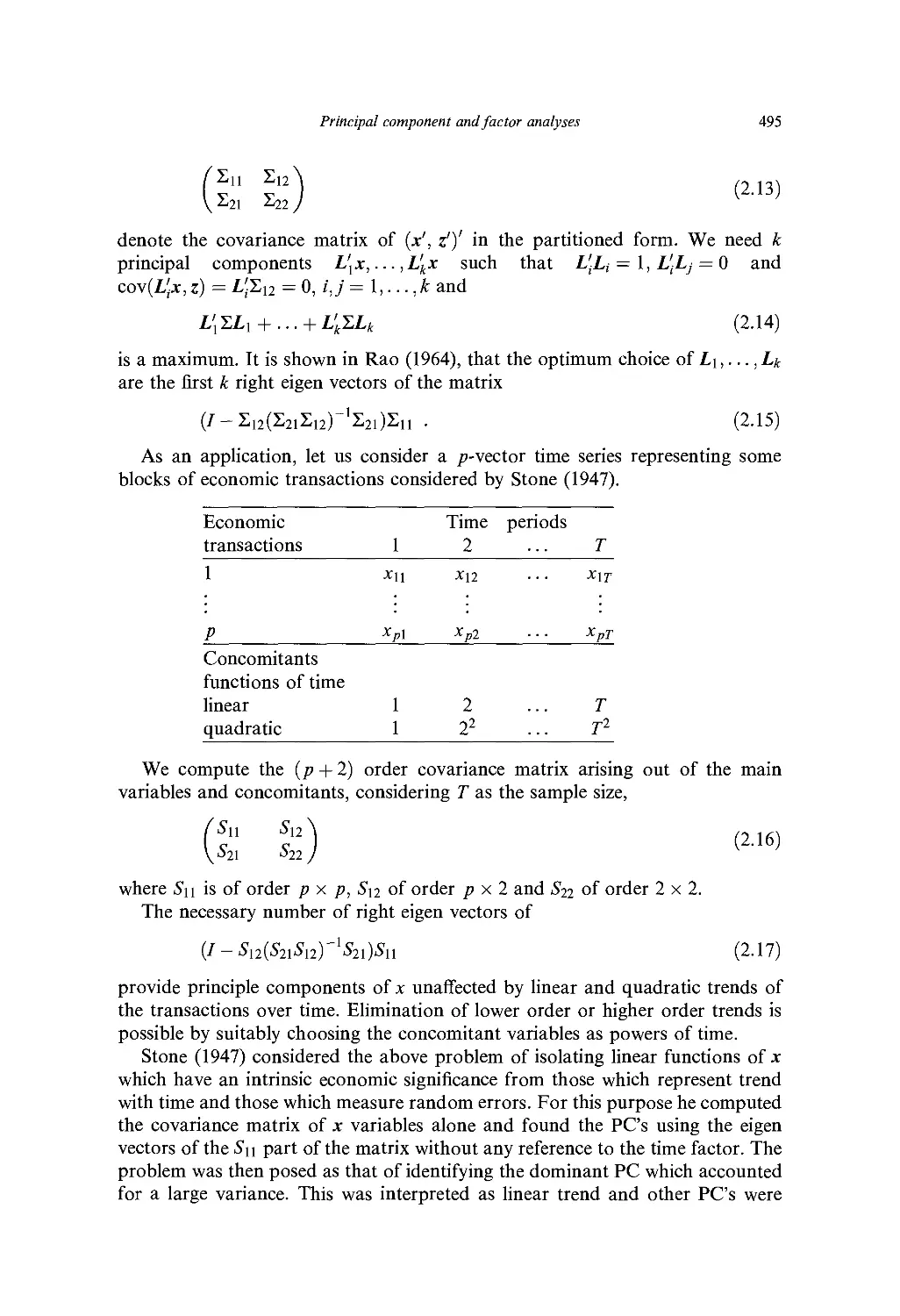

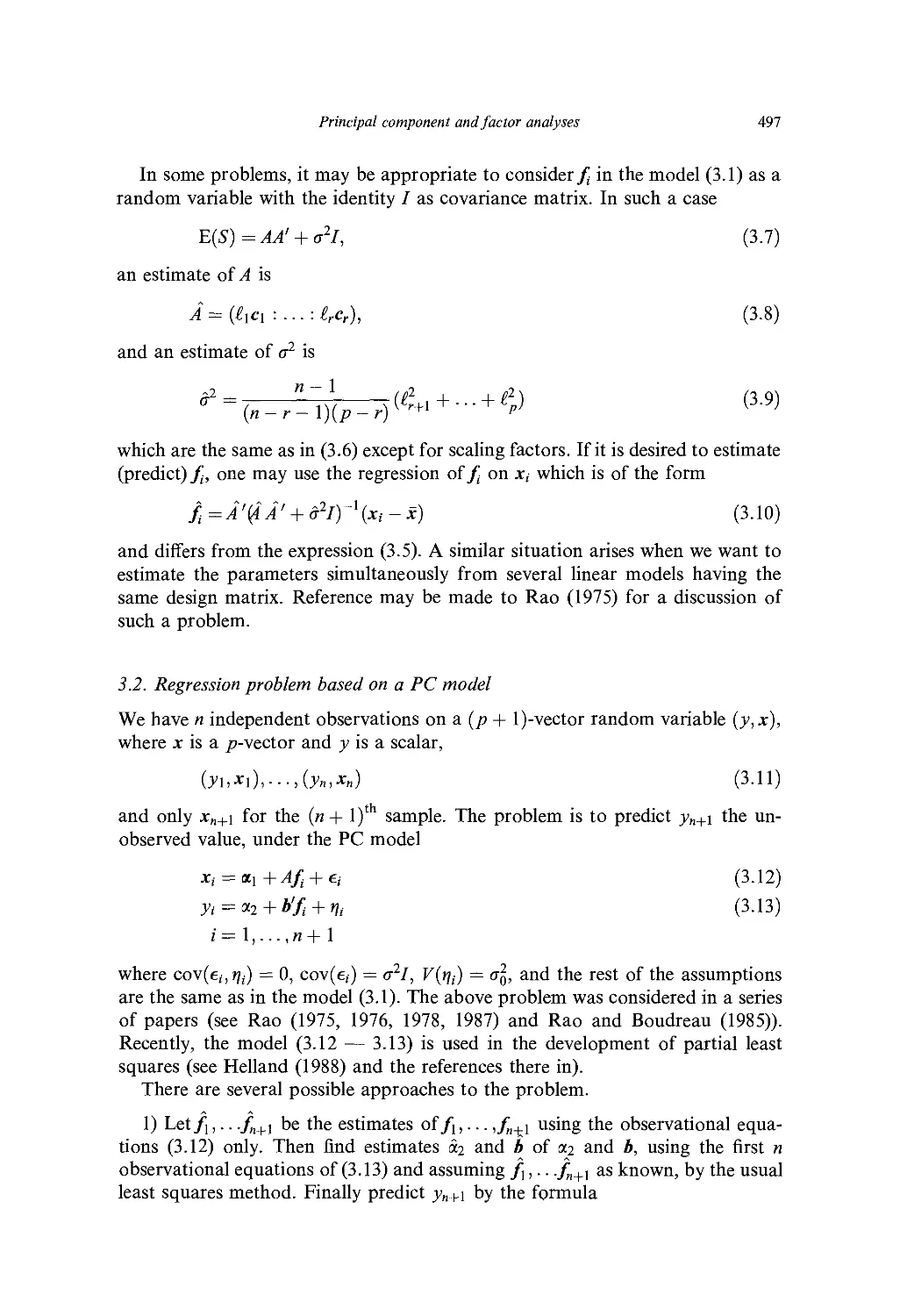

2. Principal components 490

3. Model based principal components 496

4. Factor analysis 498

5. Conclusions 503

References 5(M

Ch. 17. Errors-in-Variables Problems in Financial Models 507

G. S. Maddala and M'. Nimalendran

1. Introduction 507

2. Grouping methods 508

3. Alternatives to the two-pass estimation method 513

4. Direct and reverse regression methods 514

5. Latent variables / structural equation models with measurement 515

6. Artificial neural networks (ANN) as alternatives to MIMIC models 522

7. Signal extraction methods and tests for rationality 523

8. Qualitative and limited dependent variable models 523

9. Factor analysis with measurement errors 524

10. Conclusion 525

References 525

Ml

Table of contents

Ch. 18. Financial Applications of Artificial Neural Networks 529

M. Qi

\. Introduction 529

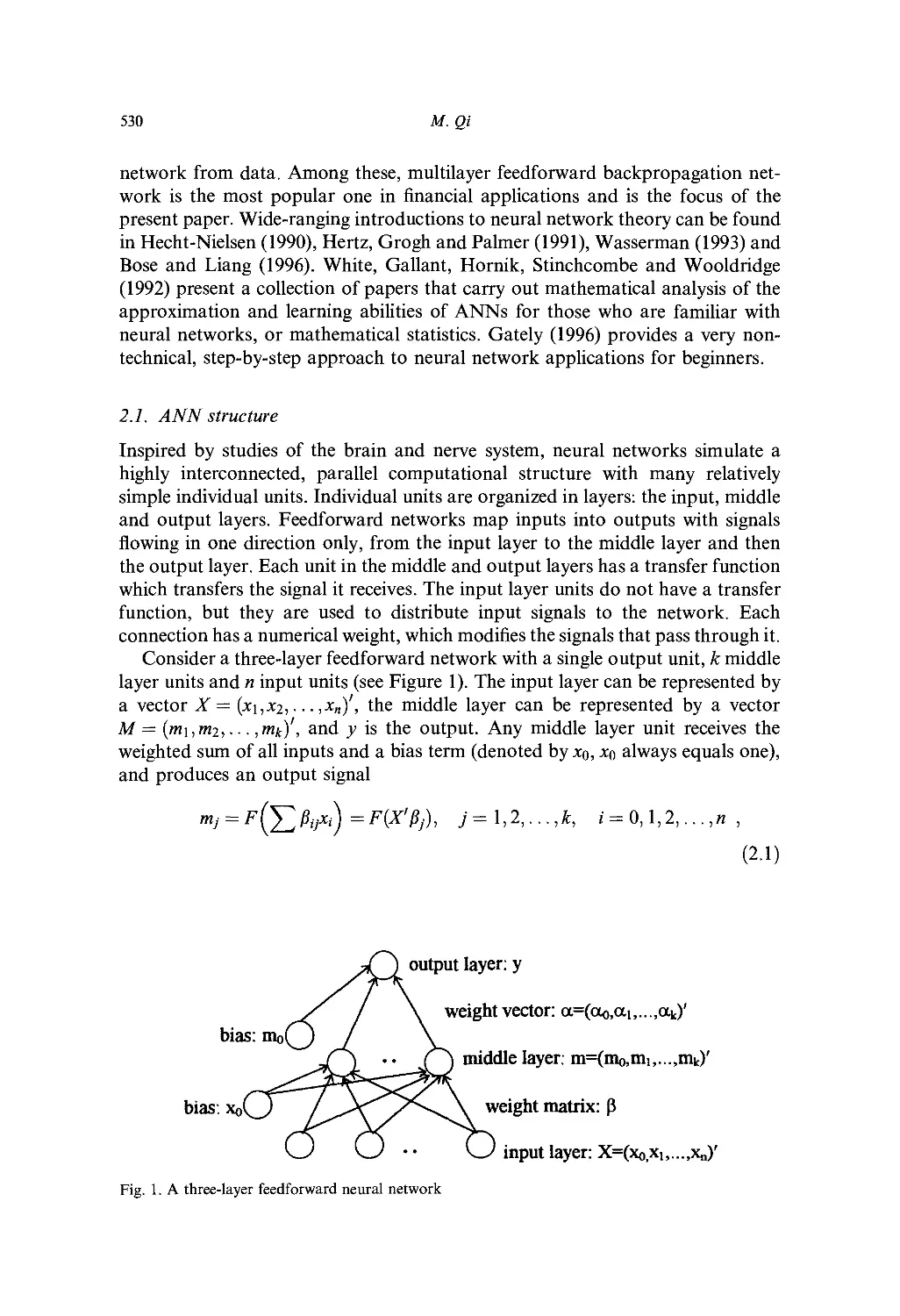

2. Artificial Neural Networks 529

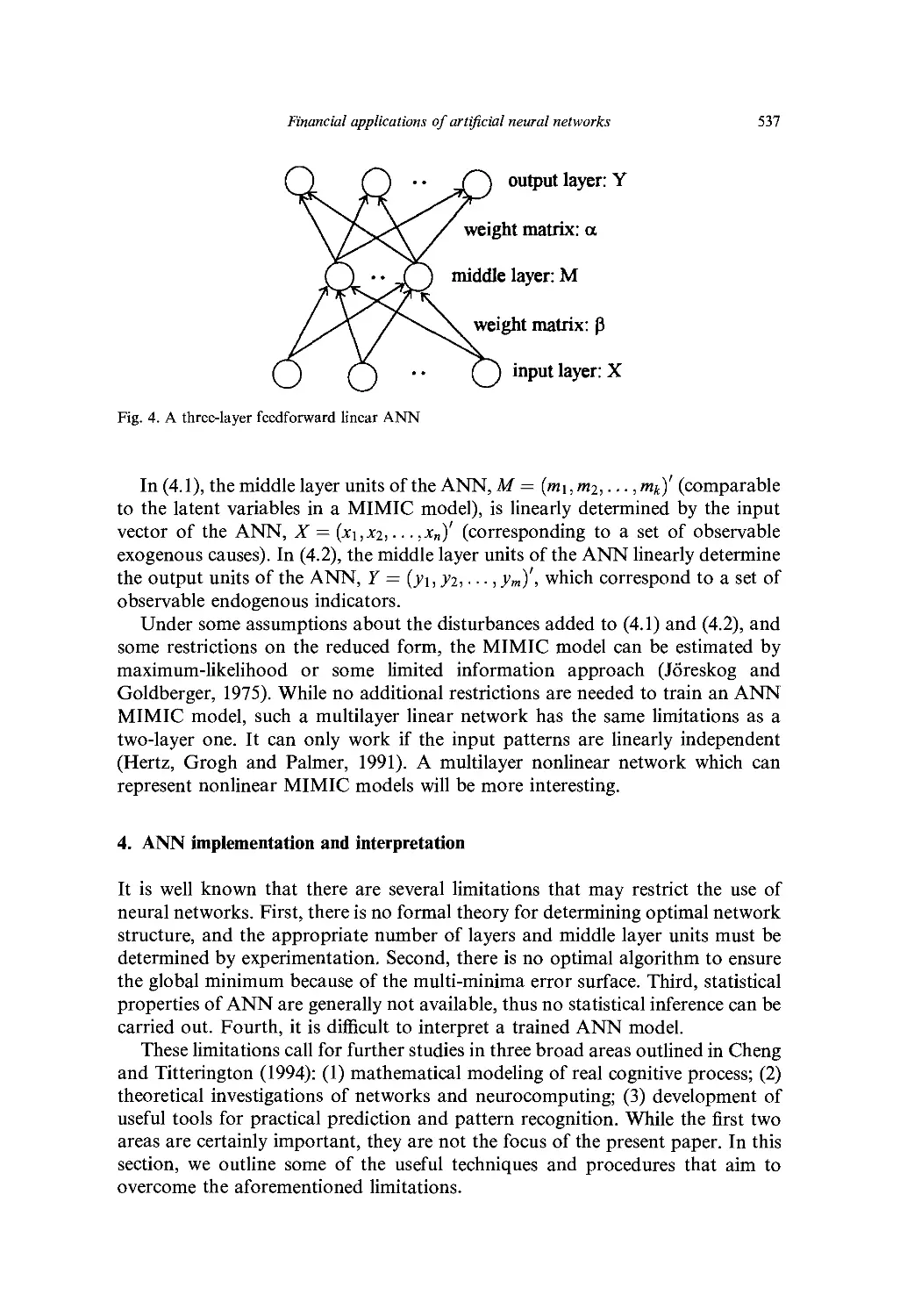

3. Relationship between ANN and interpretational statistical models 533

4. ANN implementation and interpretation 537

5. Financial applications 544

6. Conclusions 547

Acknowledgement 548

References 548

Ch. 19. Applications of Limited Dependent Variable Models in Finance 553

G. S. Maddala

1. Introduction 553

2. Studies on loan discrimination and default 553

3. Studies on bond ratings and bond yields 555

4. Event studies 557

5. Savings and loan and bank failures 559

6. Miscellaneous other applications 562

7. Suggestions for future research 564

References 565

PART VII. MISCELLANEOUS OTHER PROBLEMS

Ch. 20. Testing Option Pricing Models 567

D. S. Bates

1. Introduction 367

2. Option pricing fundamentals 568

3. Time series-based tests of option pricing models 574

4. Implicit parameter estimation 587

5. Implicit parameter tests of alternate distributional hypotheses 600

6. Summary and conclusions 604

References 605

Ch. 21. Peso Problems: Their Theoretical and Empirical Implications 613

M. D. D. Evans

1. Introduction 613

2. Peso problems and forecast errors 615

3. Peso problems, asset prices and fundamentals 626

4. Risk aversion and peso problems 634

Table of contents

5. Econometric issues 641

6. Conclusion 644

References 645

Ch. 22. Modeling Market Microstructure Time Series 647

J. Ilasbrouck

1. Introduction 647

2. Simple univariate models of prices 651

3. Simple bivariate models of prices and trades 657

4. General specifications 667

5. Time 673

6. Discreteness 677

7. Nonlinearity 679

8. Multiple mechanisms and markets 680

9. Summary and directions for further work 685

References 687

Ch. 23. Statistical Methods in Tests of Portfolio Efficiency: A Synthesis

J. Shanken

1. Introduction 693

2. Testing efficiency with a riskless asset 695

3. Testing efficiency without a riskless asset 701

4. Related work 708

References 709

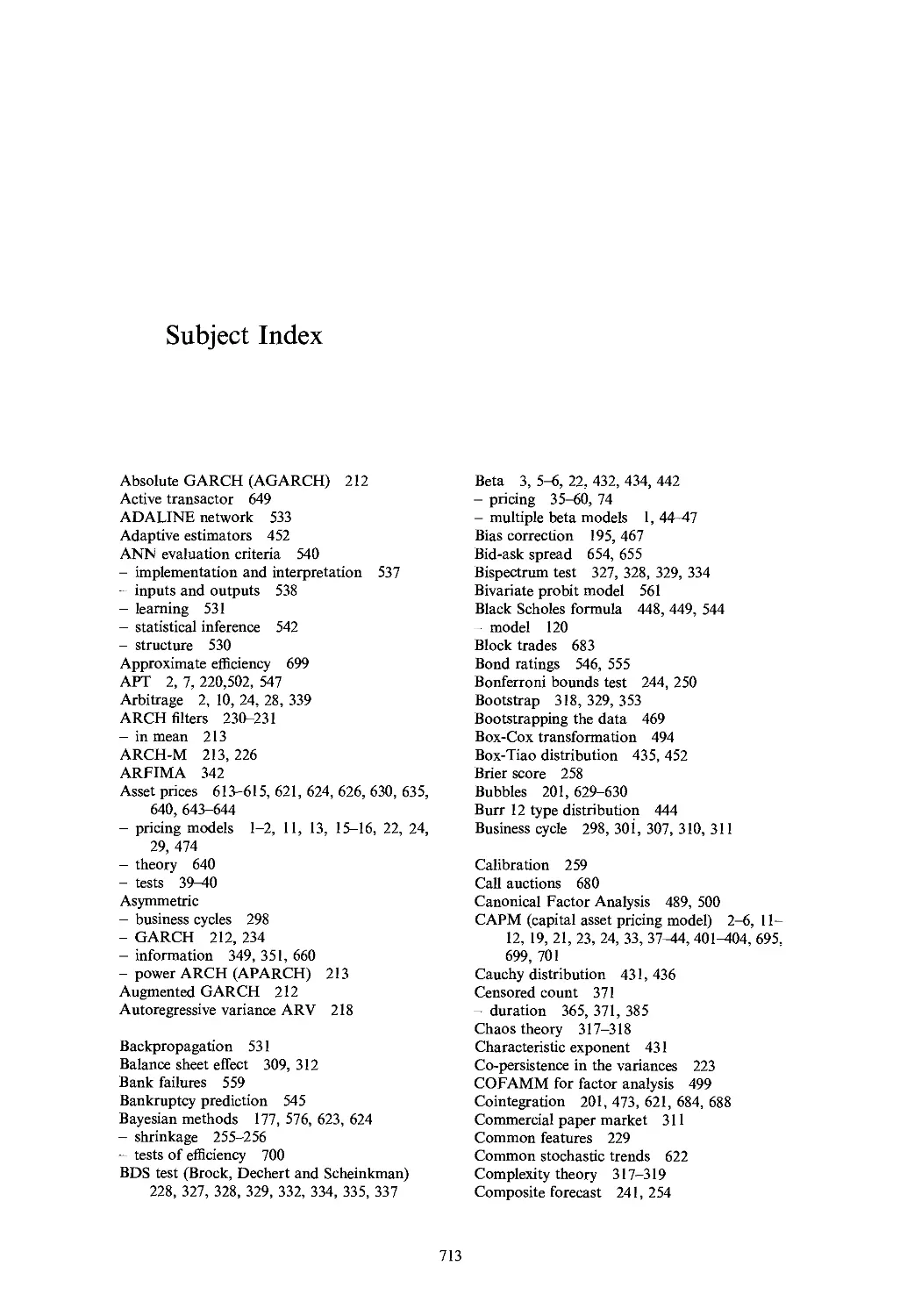

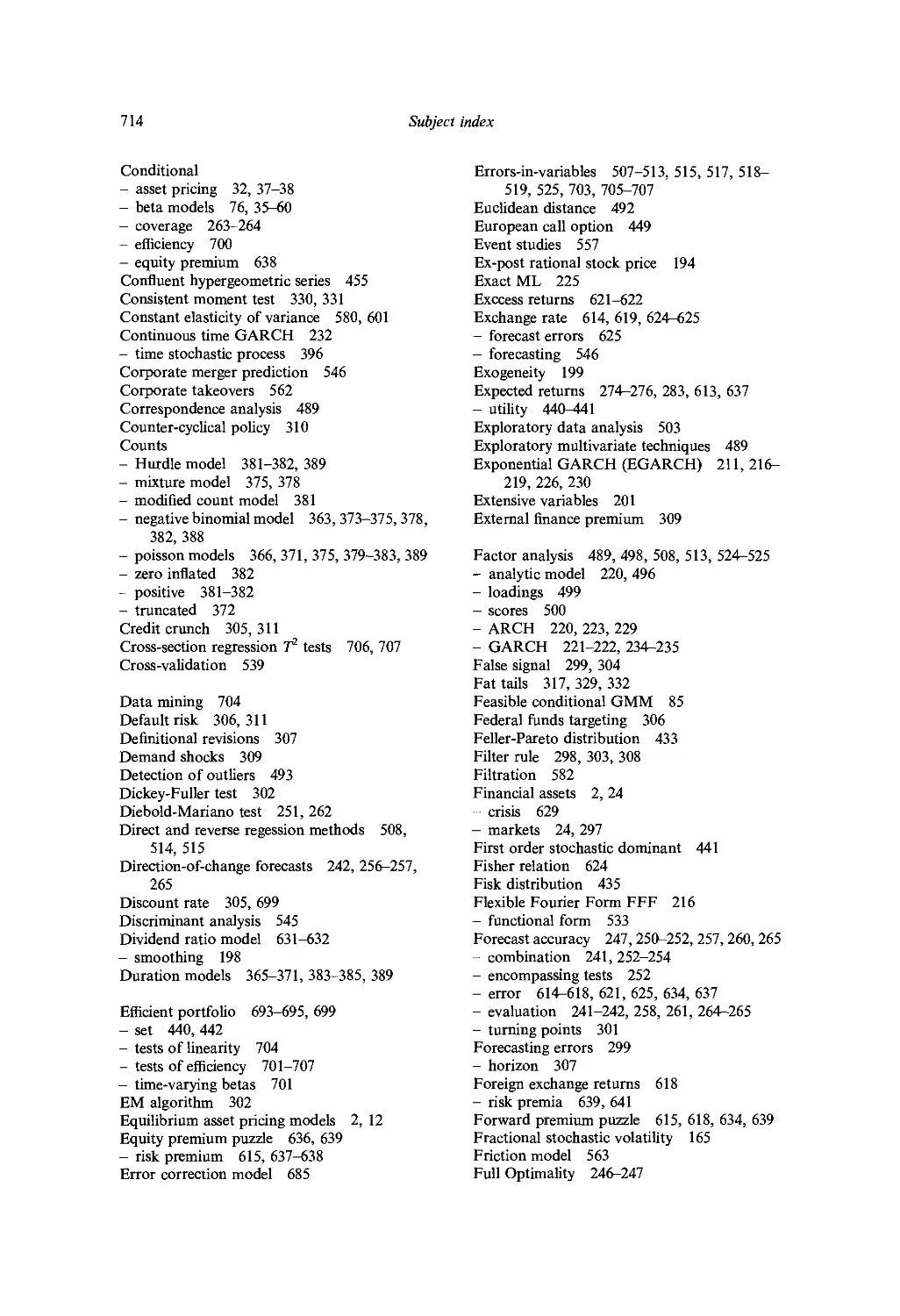

Subject Index 713

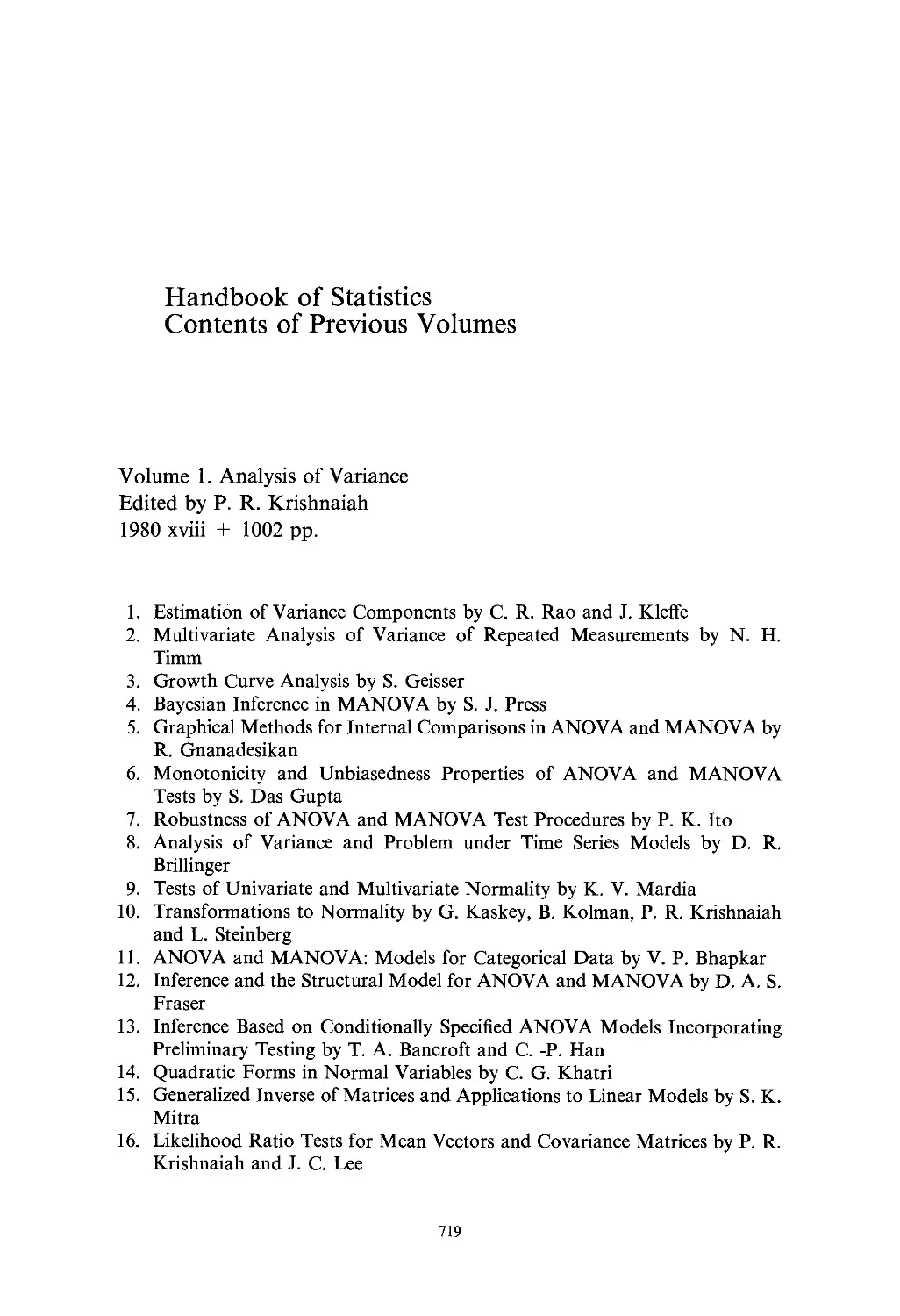

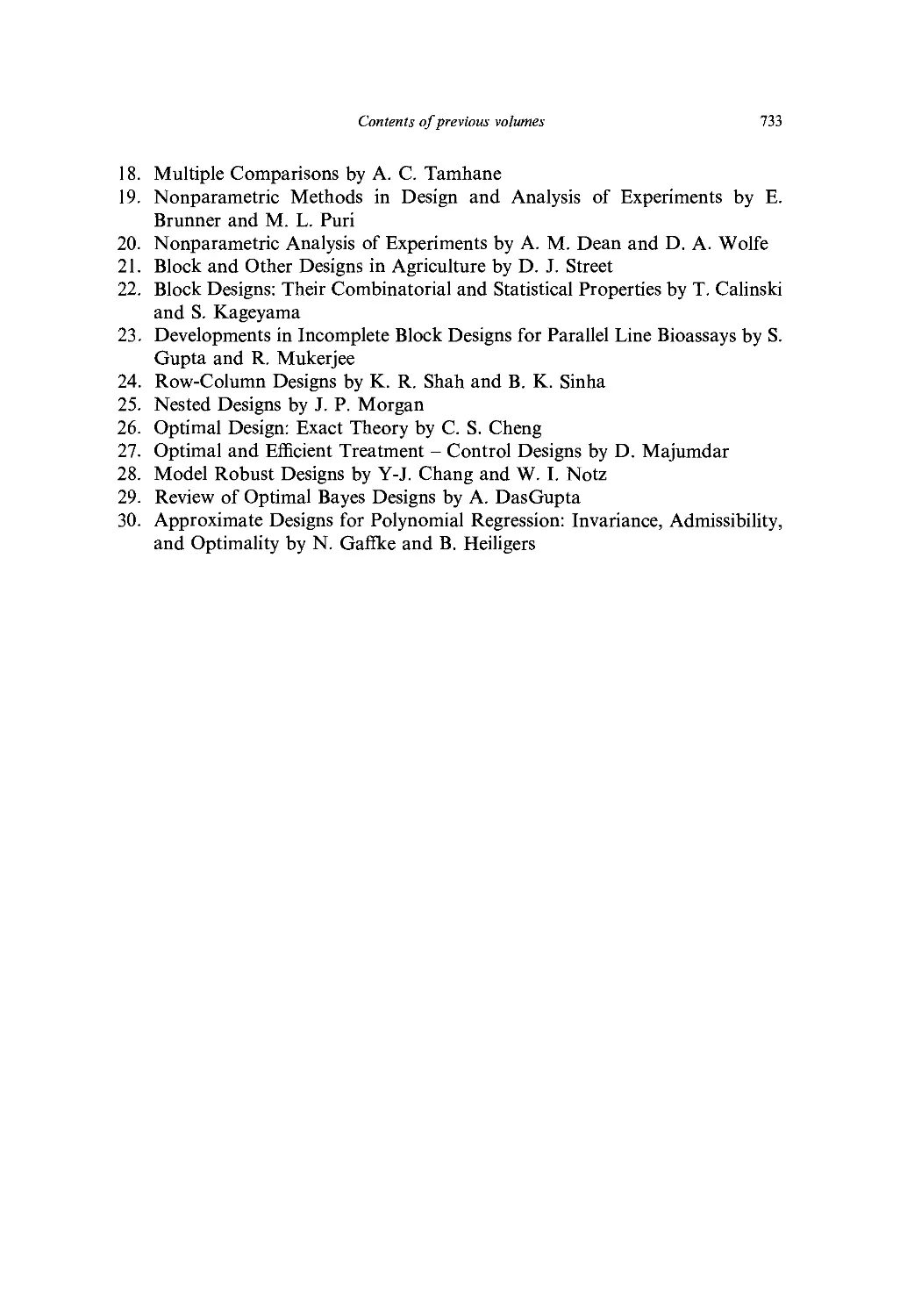

Contents of Previous Volumes 719

Preface

As with the earlier volumes in this series, the main purpose of this volume of

the Handbook of Statistics is to serve as a source reference and teaching

supplement to courses in empirical finance. Many graduate students and researchers in

the finance area today use sophisticated statistical methods but there is as yet no

comprehensive reference volume on this subject. The present volume is intended

to fill this gap.

The first part of the volume covers the area of asset pricing. In the first paper,

Ferson and Jagannathan present a comprehensive survey of the literature on

econometric evaluation of asset pricing models. The next paper by Harvey and

Kirby discusses the problems of instrumental variable estimation in latent

variable models of asset pricing. The next paper by Lehman reviews semi-parametric

methods in asset pricing models. Chapter 23 by Shanken also falls in the category

of asset pricing.

Part II of the volume on term structure of interest rates consists of only one

paper by Pagan, Hall and Martin. The paper surveys both the econometric and

finance literature in this area, and shows some similarities and divergences

between the two approaches. The paper also documents several stylized facts in the

data that prove useful in assessing the adequacy of the different models.

Part III of the volume deals with different aspects of volatility. The first paper

by Ghysels, Harvey and Renault present a comprehensive survey on the

important topic of stochastic volatility models. These models have their roots both

in mathematical finance and financial econometrics and are an attractive

alternative to the popular ARCH models. The next paper by LeRoy presents a critical

review of the literature on variance-bounds tests for market efficiency. The third

paper by Palm on GARCH models of stock price volatility, surveys some more

recent developments in this area. Several surveys on the ARCH models have

appeared in the literature and these are cited in the paper. The paper surveys

developments since the appearance of these surveys.

Part IV of the volume deals with prediction problems. The first paper by Diebold

and Lopez deals with the statistical methods of evaluation of forecasts. The second

paper by Kaul, reviews the literature on the predictability of stock returns. This area

has always fascinated those involved in making money in financial markets as well

as academics who presumably are interested in studying whether one can, in fact,

make money in the financial markets. The third paper by Lahiri reviews statistical

VI

Preface

evidence on interest rate spreads as predictors of business cycles. Since there is not

much of a literature to survey in this area, Lahiri presents some new results.

Part V of the volume deals with alternative probabilistic models in finance. The

first paper by Brock and deLima surveys several areas subsumed under the rubic

"complexity theory." This includes chaos theory, nonlinear time series models,

long memory models and models with asymmetric information. The next paper

by Cameron and Trivedi surveys the area of count data models in finance. In

some financial studies, the dependent variable is a count, taking non-negative

integer values. The next paper by McCulloch surveys the literature on stable

distributions. This area was very active in finance in the early 60's due to the work

by Mandelbrot but since then has not received much attention until recently when

interest in stable distributions has revived. The last paper by McDonald reviews

the variety of probability distributions which have been and can be used in the

statistical analysis of financial data.

Part VI deals with application of specialized statistical methods in finance. This

part covers important statistical methods that are of general applicability (to all

the models considered in the previous sections) and not covered adequately in the

other chapters. The first paper by Maddala and Li covers the area of bootstrap

methods. The second paper by Rao covers the area of principal component and

factor analyses which has, during recent years, been widely used in financial

research particularly in arbitrage pricing theory (APT). The third paper by

Maddala and Nimalendran reviews the area of errors in variables models as

applied to finance. Almost all variables in finance suffer from the errors in

variables problems. The fourth paper by Qi surveys the applications of artificial

neutral networks in financial research. These are general nonparametric nonlinear

models. The final paper by Maddala reviews the applications of limited dependent

variable models in financial research.

Part VII of the volume contains surveys of miscellaneous other problems. The

first paper by Bates surveys the literature on testing option pricing models. The

next paper by Evans discusses what are known in the financial literature as "peso

problems." The next paper by Hasbrouck covers market microstructure, which is

an active area of research in finance. The paper discusses the fime series work in

this area. The final paper by Shanken gives a comprehensive survey of tests of

portfolio efficiency.

One important area left out has been the use of Bayesian methods in finance.

In principle, all the problems discussed in the several chapters of the volume can

be analyzed from the Bayesian point of view. Much of this work remains to be

done.

Finally, we would like to thank Ms. Jo Ducey for her invaluable help at several

stages in the preparation of this volume and patient assistance in seeing the

manuscript through to publication.

G. S. Maddala

C. R. Rao

Contributors

D. S. Bates, Department of Finance, Wharton School, University of Pennsylvania,

Philadelphia, PA 19104, USA (Ch. 20)

W. A. Brock, Department of Economics, University of Wisconsin, Madison, WI

53706, USA (Ch. 11)

A. C. Cameron, Department of Economics, University of California at Davis,

Davis, CA 95616-8578, USA (Ch. 12)

P. J. F. de Lima, Department of Economics, The Johns Hopkins University,

Baltimore, MD 21218, USA (Ch. 11)

F. X. Diebold, Department of Economics, University of Pennsylvania,

Philadelphia, PA 19104, USA (Ch. 8)

M. D. D. Evans, Department of Economics, Georgetown University, Washington

DC 20057-1045, USA (Ch. 21)

W. E. Ferson, Department of Finance, University of Washington, Seattle, WA

98195, USA (Ch. 1)

E. Ghysels, Department of Economics, The Pennsylvania State University,

University Park, PA 16802 and CIRANO (Centre interuniversitaire de

recherche en analyse des organisations), Universite de Montreal, Montreal,

Quebec, Canada H3A2A5 (Ch. 5)

A. D. Hall, School of Business, Bond University, Gold Coast, QLD 4229, Australia

(Ch. 4)

A. C. Harvey, Department of Statistics, London School of Economics, Houghton

Street, London WC2A 2AE, UK (Ch. 5)

C. R. Harvey, Department of Finance, Fuqua School of Business, Box 90120, Duke

University, Durham, NC 27708-0120, USA (Ch. 2)

J. Hasbrouck, Department of Finance, Stern School of Business, 44 West 4th

Street, New York, NY 10012-1126, USA (Ch. 22)

R. Jagannathan, Finance Department, School of Business and Management, The

Hong Kong University of Science and Technology, Clear Water Bay, Kowloon,

Hong Kong (Ch. 1)

G. Kaul, University of Michigan Business School, Ann Harbor, MZ 48109-

1234 (Ch. 9)

C. M. Kirby, Department of Finance, College of Business & Mgm., University of

Maryland, College Park, MD 20742, USA (Ch. 2)

K. Lahiri, Department of Economics, State University of New York at Albany,

Albany, NY 12222 USA (Ch. 10)

XV

XVI

Contributors

B. N. Lehmann, Graduate School of International Relations, University of

California at San Diego, 9500 Gilman Drive, LaJolla, CA 92093-0519, USA

(Ch. 3)

S. F. LeRoy, Department of Economics, University of California at Santa

Barbara, Santa Barbara, CA 93106-9210 (Ch. 6)

H. Li, Department of Management Science, The Chinese University of Hongkong,

302 Leung Kau Kui Building, Shatin, NT, Hong Kong (Ch. 15)

J. A. Lopez, Department of Economics, University of Pennsylvania, Philadelphia,

PA 19104, USA (Ch. 8)

G. S. Maddala, Department of Economics, Ohio State University, 1945 N. High

Street, Columbus, OH 43210-1172, USA (Chs. 15, 17, 19)

V. Martin, Department of Economics, University of Melbourne, Parkville, VIC

3052, Australia (Ch. 4)

J. H. McCulloch, Department of Economics and Finance, 410 Arps Hall, 1945 N.

High Street, Columbus, OH 43210-1172, USA (Ch. 13)

J. B. McDonald, Department of Economics, Brigham Young University, Provo, UT

84602, USA (Ch. 14)

M. Nimalendran, Department of Finance, College of Business, University of

Florida, Gainesville, FL 32611, USA (Ch. 17)

A. R. Pagan, Economics Program, RSSS, Australian National University,

Canberra, ACT 0200, Australia (Ch. 4)

F. C. Palm, Department of Quantitative Economics, University of Limburg, P.O.

Box 616, 6200 MD Maastricht, The Netherlands (Ch. 7)

M. Qi, Department of Economics, College of Business Administration, Kent State

University, P.O. Box 5190, Kent, OH 44242 (Ch. 18)

C. R. Rao, The Pennsylvania State University, Center for Multivariate Analysis,

Department of Statistics, 325 Classroom Bldg., University park, PA 16802-

6105, USA (Ch. 16)

E. Renault, Institut D'Economie Industrielle, Universite des Sciences Sociales,

Place Anatole France, F-31042 Toulouse Cedex, France (Ch. 5)

J. Shanken, Department of Finance, Simon School of Business, University of

Rochester, Rochester, NY 14627, USA (Ch. 23)

P. K. Trivedi, Department of Economics, Indiana University, Bloomington, IN

47405-6620, USA (Ch. 12)

J. G. Wang, AT&T, Rm. N460-WOS, 412 Mt. Kemble Avenue, Morristown, NJ

07960, USA (Ch. 10)

G. S. Maddala and C. R. Rao, eds., Handbook of Statistics, Vol. 14

© 1996 Elsevier Science B.V. All rights reserved.

Econometric Evaluation of Asset Pricing Models

Wayne E. Ferson and Ravi Jagannathan

We provide a brief review of the techniques that are based on the generalized

method of moments (GMM) and used for evaluating capital asset pricing models.

We first develop the CAPM and multi-beta models and discuss the classical two-

stage regression method originally used to evaluate them. We then describe the

pricing kernel representation of a generic asset pricing model; this representation

facilitates use of the GMM in a natural way for evaluating the conditional and

unconditional versions of most asset pricing models. We also discuss diagnostic

methods that provide additional insights.

1. Introduction

A major part of the research effort in finance is directed toward understanding

why we observe a variety of financial assets with different expected rates of return.

For example, the U.S. stock market as a whole earned an average annual return

of 11.94% during the period from January of 1926 to the end of 1991. U.S.

Treasury bills, in contrast, earned only 3.64%. The inflation rate during the same

period was 3.11% (see Ibbotson Associates 1992).

To appreciate the magnitude of these differences, note that in 1926 a nice

dinner for two in New York would have cost about $10. If the same $10 had been

invested in Treasury bills, by the end of 1991 it would have grown to $110, still

enough for a nice dinner for two. Yet $10 invested in stocks would have grown to

$6,756. The point is that the average return differentials among financial assets

are both substantial and economically important.

A variety of asset pricing models have been proposed to explain this

phenomenon. Asset pricing models describe how the price of a claim to a future

payoff is determined in securities markets. Alternatively, we may view asset pri-

* Ferson acknowledges financial support from the Pigott-PACCAR Professorship at the

University of Washington. Jagannathan acknowledges financial support from the National Science

Foundation, grant SBR-9409824. The views expressed herein are those of the authors and not

necessarily those of the Federal Reserve Bank of Minneapolis or the Federal Reserve System.

1

2

W. E. Ferson and R. Jagannathan

cing models as describing the expected rates of return on financial assets, such as

stocks, bonds, futures, options, and other securities. Differences among the

various asset pricing models arise from differences in their assumptions that restrict

investors' preferences, endowments, production, and information sets; the

stochastic process governing the arrival of news in the financial markets; and the

type of frictions allowed in the markets for real and financial assets.

While there are differences among asset pricing models, there are also

important commonalities. All asset pricing models are based on one or more of three

central concepts. The first is the law of one price, according to which the prices of

any two claims which promise the same future payoff must be the same. The law

of one price arises as an implication of the second concept, the no-arbitrage

principle. The no-arbitrage principle states that market forces tend to align the

prices of financial assets to eliminate arbitrage opportunities. Arbitrage

opportunities arise when assets can be combined, by buying and selling, to form

portfolios that have zero net cost, no chance of producing a loss, and a positive

probability of gain. Arbitrage opportunities tend to be eliminated by trading in

financial markets, because prices adjust as investors attempt to exploit them. For

example, if there is an arbitrage opportunity because the price of security A is too

low, then traders' efforts to purchase security A will tend to drive up its price. The

law of one price follows from the no-arbitrage principle, when it is possible to buy

or sell two claims to the same future payoff. If the two claims do not have the

same price, and if transaction costs are smaller than the difference between their

prices, then an arbitrage opportunity is created. The arbitrage pricing theory

(APT, Ross 1976) is one of the most well-known asset pricing model based on

arbitrage principles.

The third central concept behind asset pricing models is financial market

equilibrium. Investors' desired holdings of financial assets are derived from an

optimization problem. A necessary condition for financial market equilibrium in

a market with no frictions is that the first-order conditions of the investors'

optimization problem be satisfied. This requires that investors be indifferent at the

margin to small changes in their asset holdings. Equilibrium asset pricing models

follow from the first-order conditions for the investors' portfolio choice problem

and from a market-clearing condition. The market-clearing condition states that

the aggregate of investors' desired asset holdings must equal the aggregate

"market portfolio" of securities in supply.

The earliest of the equilibrium asset pricing models is the Sharpe-Lintner-

Mossin-Black capital asset pricing model (CAPM), developed in the early

1960s. The CAPM states that expected asset returns are given by a linear function

of the assets' betas, which are their regression coefficients against the market

portfolio. Merton (1973) extended the CAPM, which is a single-period model, to

an economic environment where investors make consumption, savings, and

investment decisions repetitively over time. Econometrically, Merton's model

generalizes the CAPM from a model with a single beta to one with multiple betas.

A multiple-beta model states that assets' expected returns are linear functions of a

number of betas. The APT of Ross (1976) is another example of a multiple-beta

Econometric evaluation of asset pricing models

3

asset pricing model, although in the APT the expected returns are only

approximately a linear function of the relevant betas.

In this paper we emphasize (but not exclusively) the econometric evaluation of

asset pricing models using the generalized method of moments (GMM, Hansen

1982). We focus on the GMM because, in our opinion, it is the most important

innovation in empirical methods in finance within the past fifteen years. The

approach is simple, flexible, valid under general statistical assumptions, and often

powerful in financial applications. One reason the GMM is "general" is that

many empirical methods used in finance and other areas can be viewed as special

cases of the GMM.

The rest of this paper is organized as follows. In Section 2 we develop the

CAPM and multiple-beta models and discuss the classical two-stage regression

procedure that was originally used to evaluate these models. This material

provides an introduction to the various statistical issues involved in the empirical

study of the models; it also motivates the need for multivariate estimation

methods. In Section 3 we describe an alternative representation of the asset

pricing models which facilitates the use of the GMM. We show that most asset

pricing models can be represented in this stochastic discount factor form. In

Section 4 we describe the GMM procedure and illustrate how to use it to estimate

and test conditional and unconditional versions of asset pricing models. In

Section 5 we discuss model diagnostics that provide additional insight into the causes

for statistical rejections and that help assess specification errors in the models. In

order to avoid a proliferation of symbols, we sometimes use the same symbols to

mean different things in different subsections. The definitions should be clear from

the context. We conclude with a summary in Section 6.

2. Cross-sectional regression methods for testing beta pricing models

In this section we first derive the CAPM and generalize its empirical specification

to include multiple-beta models. We then describe the intuitively appealing cross-

sectional regression method that was first employed by Black, Jensen, and Scholes

(1972, abbreviated here as BJS) and discuss its shortcomings.

2.1. The capital asset pricing model

The CAPM was the first equilibrium asset pricing model, and it remains one of

the foundations of financial economics. The model was developed by Sharpe

(1964), Lintner (1965), Mossin (1966), and Black (1972). There are a huge number

of theoretical gapers which refine the necessary assumptions and provide

derivations of the CAPM. HeTewe provide-a-brief-review of the theory.

Let Rit denote one plus the return on asset i during period t, i = 1,2,..., N. Let

Rmt denote the corresponding gross return for the market portfolio of all assets in

the economy. The return on the market portfolio envisioned by the theory is not

observable. In view of this, empirical studies of the CAPM commonly assume

4

W. E. Ferson and R. Jagannathan

that the market return is an exact linear function of the return on an observable

portfolio of common stocks.1 Then, according to the CAPM,

E(Ru) = So + SiPt (2.1)

where

ft = Cov(tf;„tfm,)/Var(tfm,).

According to the CAPM, the market portfolio with return Rmt is on the

minimum-variance frontier of returns. A return is said to be on the minimum-

variance frontier if there is no other portfolio with the same expected return but

lower variance. If investors are risk averse, the CAPM implies that Rmt is on the

positively sloped portion of the minimum-variance frontier, which implies that

the coefficient b\ > 0. In equation (2.1), d0 = E(Ro,), where the return i?0i is

referred to as a zero-beta asset to Rmt because of the condition Cov(Rot,Rmt) = 0.

To derive the CAPM, assume that investors choose asset holdings at each date

t — 1 so as to maximize the following one-period objective function:

V[E(Rpt\I),Var(Rpl\I)} (2.2)

where Rpt denotes the date t return on the optimally chosen portfolio and E(-\I)

and Var(-|7) denote the expectation and variance of return, conditional on the

information set / of the investor as of time t-\. We assume that the function V[- > •]

is increasing and concave in its first argument, decreasing in its second argument,

and time-invariant. For the moment we assume that the information set /includes

only the unconditional moments of asset returns, and we drop the symbol / to

simplify the notation. The first-order conditions for the optimization problem

given above can be manipulated to show that the following must hold:

E(RU) = E(R0t) + pipE(Rpt - R0I) (2.3)

for every asset / = 1, 2, ..., N, where Rpt is the return on the optimally chosen

portfolio, Rot is the return on the asset that has zero covariance with Rpt, and pip

= Cov(Rit,Rpt)IVar(Rpt).

To get from the first-order condition for an investor's optimization problem, as

stated in equation (2.3), to the CAPM, it is useful to understand some of the

properties of the minimum-variance frontier, that is, the set of portfolio returns

with the minimum variance, given their expected returns. It can be readily verified

that the optimally chosen portfolio of the investor is on the minimum-variance

frontier.

One property of the minimum-variance frontier is that it is closed to portfolio

formation. That is, portfolios of frontier portfolios are also on the frontier.

1 When this assumption fails, it introduces market proxy error. This source of error is studied by

Roll (1977), Stambaugh (1982), Kandel (1984), Kandel and Stambaugh (1987), Shanken (1987),

Hansen and Jagannathan (1994), and Jagannathan and Wang (1996), among others. We will ignore

proxy error in our discussion.

Econometric evaluation of asset pricing models

5

Suppose that all investors have the same beliefs. Then every investor's optimally

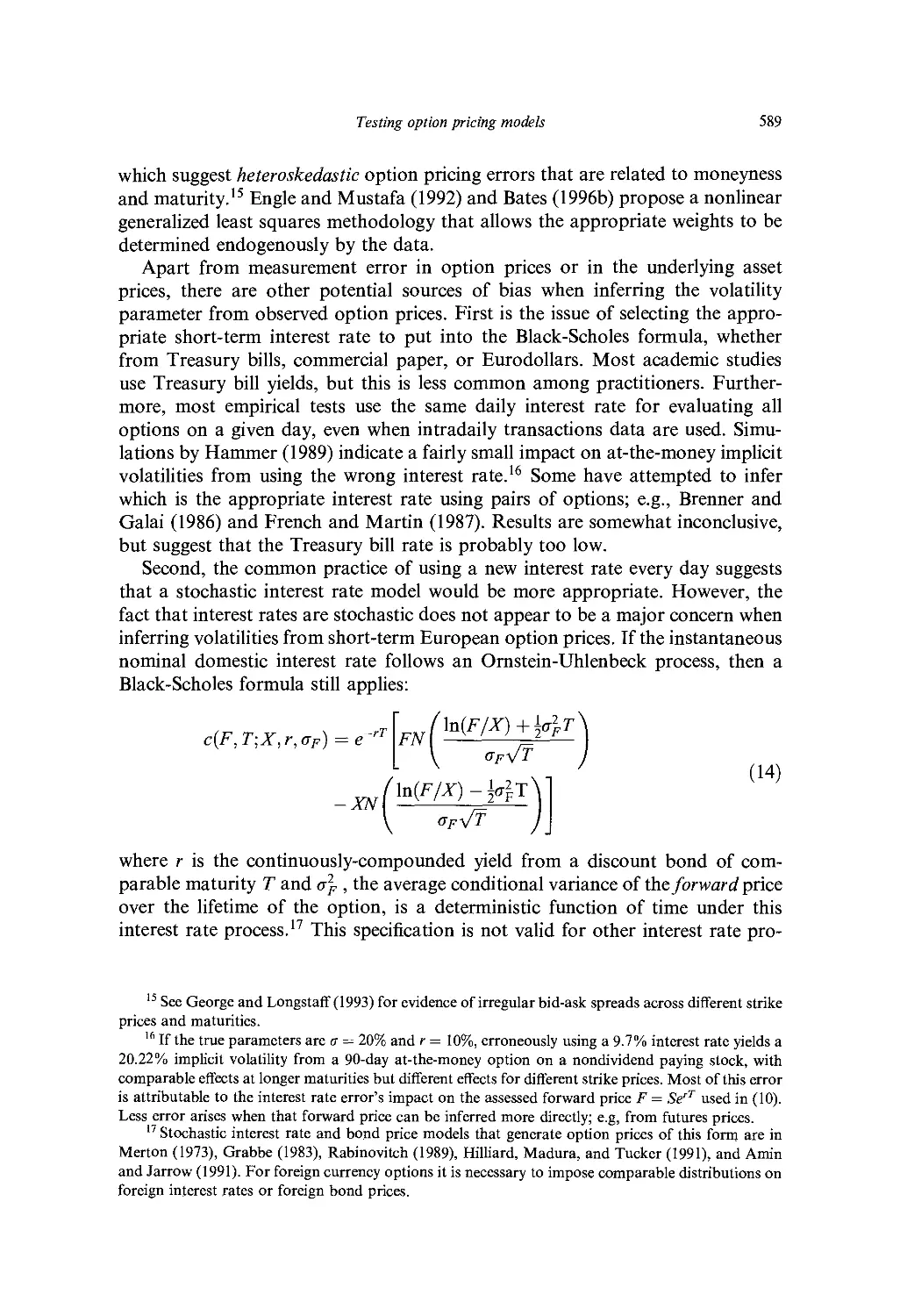

chosen portfolio will be on the same frontier, and hence the market portfolio of

all assets in the economy - which is a portfolio of every investor's optimally

chosen portfolio - will also be on the frontier. It can be shown (Roll 1977) that

equation (2.3) will hold if Rpt is replaced by the return of any portfolio on the

frontier and RQt is replaced by its corresponding zero-beta return. Hence we can

replace an investor's optimal portfolio in equation (2.3) with the return on the

market portfolio to get the CAPM, as given by equation (2.1).

2.2. Testable implications of the CAPM

Given an interesting collection of assets, and if their expected returns and market-

portfolio betas Pi are known, a natural way to examine the CAPM would be

to estimate the empirical relation between the expected returns and the betas and

see if that relation is linear. However, neither betas nor expected returns are

observed by the econometrician. Both must be estimated. The finance literature

first attacked this problem by using a two-step, time-series, cross-sectional

approach.

Consider the following sample analogue of the population relation given in

(2.1):

Rt = 50 + Sibi + <?,-, i = 1,... ,JV (2.4)

which is a cross-sectional regression of i?; on bt, with regression coefficients equal

to S0 and ^i- IQ equation (2.4), i?, denotes the sample average return of the asset, i,

and bt is the (OLS) slope coefficient estimate from a regression of the return, Rit,

over time on the market index return, Rmt; bt is a constant. Let ut = Ri—E(Rit)

and vt = Pf-bi. Substituting these relations for E(RU) and /?, in (2.1) leads to (2.4)

and specifies the composite error as et = Ut + d^t- This gives rise to a classic

errors-in-variables problem, as the regressor bt in the cross-sectional regression

model (2.4) is measured with error. Using finite time-series samples for the

estimate of bh the regression (2.4) will deliver inconsistent estimates of d0 and di, even

with an infinite cross-sectional sample. However, the cross-sectional regression

will provide consistent estimates of the coefficients as the time-series sample size T

(which is used in the first step to estimate the beta coefficient /},) becomes very

large. This is because the first-step estimate of pt is consistent, so as T becomes

large, the errors-in-variables problem of the second-stage regression vanishes.

The measurement error in beta may be large for individual securities, but it is

smaller for portfolios. In view of this fact, early research focused on creating

portfolios of securities in such a way that the betas of the portfolios could be

estimated precisely. Hence one solution to the errors-in-variables problem is to

work with portfolios instead of individual securities. This creates another

problem. Arbitrarily chosen portfolios tend to exhibit little dispersion in their betas. If

all the portfolios available to the econometrician have the same betas, then

equation (2.1) has no empirical content as a cross-sectional relation. Black,

Jensen, and Scholes (BJS, 1972) came up with an innovative solution to overcome

6

W. E. Ferson and R. Jagannathan

this difficulty. At every point in time for which a cross-sectional regression is run,

they estimate betas on individual securities based on past history, sort the

securities based on the estimated values of beta, and assign individual securities to

beta groups. This results in portfolios with a substantial dispersion in their betas.

Similar portfolio formation techniques have become standard practice in the

empirical finance literature.

Suppose that we can create portfolios in such a way that we can view the

errors-in-variables problem as being of second-order importance. We still have to

determine how to assess whether there is empirical support for the CAPM. A

standard approach in the literature is to consider specific alternative hypotheses

about the variables which determine expected asset returns. According to the

CAPM, the expected return for any asset is a linear function of its beta only.

Therefore, one natural test would be to examine if any other cross-sectional

variable has the ability to explain the deviations from equation (2.1). This is the

strategy that Fama and MacBeth (1973) followed by incorporating the square of

beta and measures of nonmarket (or residual time-series) variance as additional

variables in the cross-sectional regressions. More recent empirical studies have

used the relative size of firms, measured by the market value of their equity, the

ratio of book-to-market-equity, and related variables.2 For example, the

following model may be specified:

E(Ru) = d0 + SiP, + <5size LMEt (2.5)

where LMEt is the natural logarithm of the total market value of the equity

capital of firm i. In what follows we will first show that these ideas extend easily to

the general multiple-beta model. We will then develop a sampling theory for the

cross-sectional regression estimators.

2.3. Multiple-beta pricing models and cross-sectional regression methods

According to the CAPM, the expected return on an asset is a linear function of its

market beta. A multiple-beta model asserts that the expected return is a linear

function of several betas, i.e.,

E(Rit)=50+ J2 W* (2-6)

k=\,...JC

where fiik, k — 1,... ,K, are the multiple regression coefficients of the return of

asset i on K economy-wide pervasive risk factors, fk,k — \,...,K. The coefficient

(5o is the expected return on an asset that has p0k = 0, for k = 1,..., K; i.e., it is the

expected return on a zero- (multiple-) beta asset. The coefficient bk, corresponding

to the £th factor, has the following interpretation: it is the expected return

differential, or premium, for a portfolio that has Pik = 1 and ptJ = 0 for all j =£ k,

2 Fama and French (1992) is a prominent recent example of this approach. Berk (1995) provides a

justification for using relative market value and book-to-price ratios as measures of expected returns.

Econometric evaluation of asset pricing models

7

measured in excess of the zero-beta asset's expected return. In other words, it is

the expected return premium per unit of beta risk for the risk factor, k. Ross

(1976) showed that an approximate version of (2.6) will hold in an arbitrage-free

economy. Connor (1984) provided sufficient conditions for (2.6) to hold exactly in

an economy with an infinite number of assets in general equilibrium. This version

of the multiple-beta model, the exact APT, has received wide attention in the

finance literature. When the factors, /&, are observed by the econometrician, the

cross-sectional regression method can be used to empirically evaluate the

multiple-beta model.3 For example, the alternative hypothesis that the size of the firm

is related to expected returns, given the factor betas, may be examined by using

cross-sectional regressions of returns on the K factor betas and the LMEh similar

to equation (2.5), and by examining whether the coefficient Ssize is different from

zero.

2.4. Sampling distributions for coefficient estimators: The two-stage,

cross-sectional regression method

In this section we follow Shanken (1992) and Jagannathan and Wang (1993, 1996)

in deriving the asymptotic distribution of the coefficients that are estimated using

the cross-sectional regression method. For the purposes of developing the

sampling theory, we will work with the following generalization of equation (2.6):

E(**)=f>,^* + f>2*fl* (2.7)

fc=0 k=\

where {Aik} are observable characteristics of firm /, which are assumed to be

measured without error (the first "characteristic," when k = 0, is the constant

1.0). One of the attributes may be the size variable LMEt. The ft are regression

betas on a set of A^ economic risk factors, which may include the market index

return. Equation (2.7) can be written more compactly using matrix notation as

li=Xy (2.8)

where Rt = [Rlt,... ,Rm], A* = E(Rt),X = [A : ft1, and the definition of the matrices

A and ji and the vector y follow from (2.7).

The cross-sectional method proceeds in two stages. First, ft is estimated by

time-series regressions of Ru on the risk factors and a constant. The estimates are

denoted by b. Let x = [A : b], and let R denote the time-series average of the return

vector Rt. Let g denote the estimator of the coefficient vector obtained from the

following cross-sectional regression:

g = (x'x)~xx!R (2.9)

3 See Chen (1983), Connor and Korajczyk (1986), Lehmann and Modest (1987), and McElroy and

Burmeister (1988) for discussions on estimating and testing the model when the factor realizations are

not observable under some additional auxiliary assumptions.

8

W. E. Ferson and R. Jagannathan

where we assume that x is of rank I + K\ + K2. If b and R converge respectively

to fi and E(Rt) in probability, then g will converge in probability to y.

Black, Jensen, and Scholes (1972) suggest estimating the sampling errors

associated with the estimator, g, as follows. Regress Rt on x at each date / to obtain

gt, where

g, = {x'x)~lx'Rt . (2.10)

The BJS estimate of the covariance matrix of Tl! (g — y) is given by

v = T-1'£(gt-g)(gt-g)' (2.11)

t

which uses the fact that g is the sample mean of the gt's. Substituting the

expression for gt given in (2.10) into the expression for v given in (2.11) gives

v = (x'x)-lx'[T-l^2(Rt - R)(Rt - R)'}x{x'XyX . (2.12)

t

To analyze the BJS covariance matrix estimator, we write the average return

vector, R, as

R=xy + (R-n) -{x-X)y . (2.13)

Substitute this expression for R into the expression for g in (2.9) to obtain

g-y = (x'xylx'[(R - ») - (b - fi)y2] . (2.14)

Assume that b is a consistent estimate of ft and that TXI2{R — fi) —>d u and

Txl2{b — fi) —>,/ h, where u and h are random variables with well-defined

distributions and —></ indicates convergence in distribution. We then have

Tl/2(g - y) -^d (x'x)~lx'u - (x'x)~lx'hy2 . (2.15)

In (2.15) the first term on the right side is that component of the sampling error

that arises from replacing u by the sample average R. The second term is the

component of the sampling error that arises due to replacing fi by its estimate b.

The usual consistent estimate of the asymptotic variance of u is given by

T-lJ2(Rt-R)(Rt-R)' . (2.16)

t

Therefore, a consistent estimate of variance of the first term in (2.15) is given by

(x'x)-lx'[T-1 J2(Rt -R)(Rt -R)']x{x'xYx

t

which is the same as the expression for the BJS estimate for the covariance matrix

of the estimated coefficients v, given in (2.12). Hence if we ignore the sampling

error that arises from using estimated betas, then the BJS covariance estimator

Econometric evaluation of asset pricing models

9

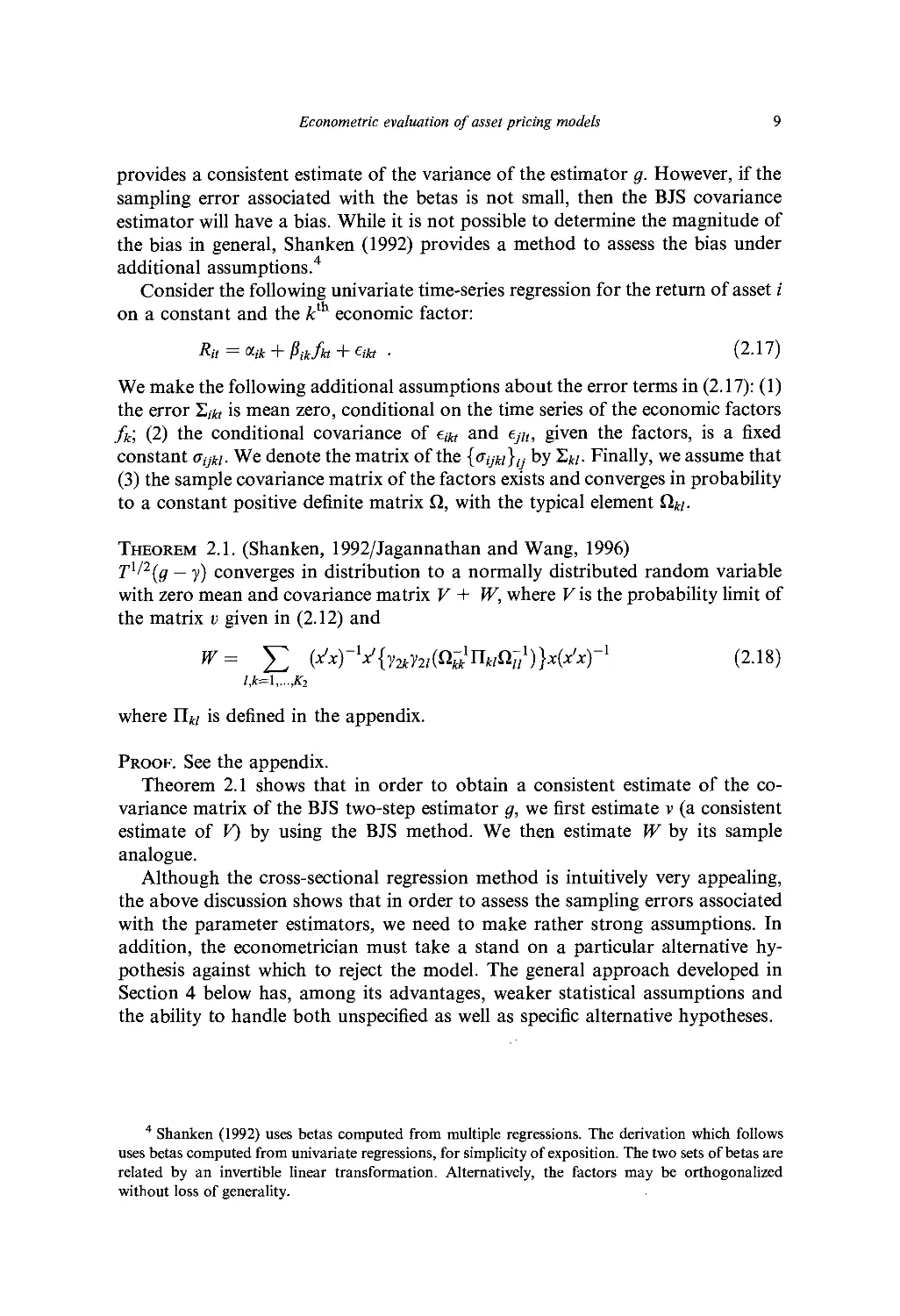

provides a consistent estimate of the variance of the estimator g. However, if the

sampling error associated with the betas is not small, then the BJS covariance

estimator will have a bias. While it is not possible to determine the magnitude of

the bias in general, Shanken (1992) provides a method to assess the bias under

additional assumptions.4

Consider the following univariate time-series regression for the return of asset i

on a constant and the kth economic factor:

Ru = ocik + Pikfkt + eat . (2.17)

We make the following additional assumptions about the error terms in (2.17): (1)

the error £,& is mean zero, conditional on the time series of the economic factors

fk; (2) the conditional covariance of eikt and £/&, given the factors, is a fixed

constant <7y«. We denote the matrix of the {ffywjy by Z«. Finally, we assume that

(3) the sample covariance matrix of the factors exists and converges in probability

to a constant positive definite matrix Q, with the typical element Q«.

Theorem 2.1. (Shanken, 1992/Jagannathan and Wang, 1996)

Txl2(g — y) converges in distribution to a normally distributed random variable

with zero mean and covariance matrix V + W, where V is the probability limit of

the matrix v given in (2.12) and

W= Y. {^x)-xX'{y2ky2l{Qr^Ilkl^)}x{xlx)-x (2.18)

l,k=\,...fa

where JJW is defined in the appendix.

Proof. See the appendix.

Theorem 2.1 shows that in order to obtain a consistent estimate of the co-

variance matrix of the BJS two-step estimator g, we first estimate v (a consistent

estimate of V) by using the BJS method. We then estimate W by its sample

analogue.

Although the cross-sectional regression method is intuitively very appealing,

the above discussion shows that in order to assess the sampling errors associated

with the parameter estimators, we need to make rather strong assumptions. In

addition, the econometrician must take a stand on a particular alternative

hypothesis against which to reject the model. The general approach developed in

Section 4 below has, among its advantages, weaker statistical assumptions and

the ability to handle both unspecified as well as specific alternative hypotheses.

4 Shanken (1992) uses betas computed from multiple regressions. The derivation which follows

uses betas computed from univariate regressions, for simplicity of exposition. The two sets of betas are

related by an invertible linear transformation. Alternatively, the factors may be orthogonalized

without loss of generality.

10

W. E. Ferson and R. Jagannathan

3. Asset pricing models and stochastic discount factors

Virtually all financial asset pricing models imply that any gross asset return

Ri,t+i, multiplied by some market-wide random variable mt+1, has a constant

conditional expectation:

E, {mt+iRitt+i} = l,all i. (3.1)

The notation Et{} will be used to denote the conditional expectation, given a

market-wide information set. Sometimes it will be convenient to refer to

expectations conditional on a subset Z, of the market information, which are

denoted as E(-1 Zt). For example, Zt can represent a vector of instrumental variables

for the public information set which are available to the econometrician. When Zt

is the null information set, the unconditional expectation is denoted as E(-). If we

take the expected values of equation (3.1), it follows that versions of the same

equation must hold for the expectations E(-1Zt) and E().

The random variable mt+\ has various names in the literature. It is known as a

stochastic discount factor, an equivalent martingale measure, a Radon-Nicodym

derivative, or an intertemporal marginal rate of substitution. We will refer to an

mt + i which satisfies (3.1) as a valid stochastic discount factor. The motivation for

use of this term arises from the following observation. Write equation (3.1) as

Pit = E,{m,+iXij+i}

where Xiyt+1 is the payoff of asset i at time t + 1 (the market value plus any cash

payments) and R^t+i = Xif+\jPit. Equation (3.1) says that if we multiply a future

payoff Xtj+i by the stochastic discount factor mt+\ and take the expected value,

we obtain the present value of the future payoff.

The existence of an mt+\ that satisfies (3.1) says that all assets with the same

payoffs have the same price (i.e., the law of one price). With the restriction that

mt+1 is a strictly positive random variable, equation (3.1) becomes equivalent to a

no-arbitrage condition. The condition is that all portfolios of assets with payoffs

that can never be negative, but are positive with positive probability, must have

positive prices.

The no-arbitrage condition does not uniquely identify mt+\ unless markets are

complete, which means that there are as many linearly independent payoffs

available in the securities markets as there are states of nature at date t + 1. To

obtain additional insights about the stochastic discount factor and the

no-arbitrage condition, assume for the moment that the markets are complete. Given

complete markets, positive state prices are required to rule out arbitrage

opportunities.5 Let qts denote the time t price of a security that pays one unit at date

t + 1 if, and only if, the state of nature at t + 1 is s. Then the time t price of a

5 See Debreu (1959) and Arrow (1970) for models of complete markets. See Beja (1971),

Rubinstein (1976), Ross (1977), Harrison and Kreps (1979), and Hansen and Richard (1987) for further

theoretical discussions.

Econometric evaluation of asset pricing models

11

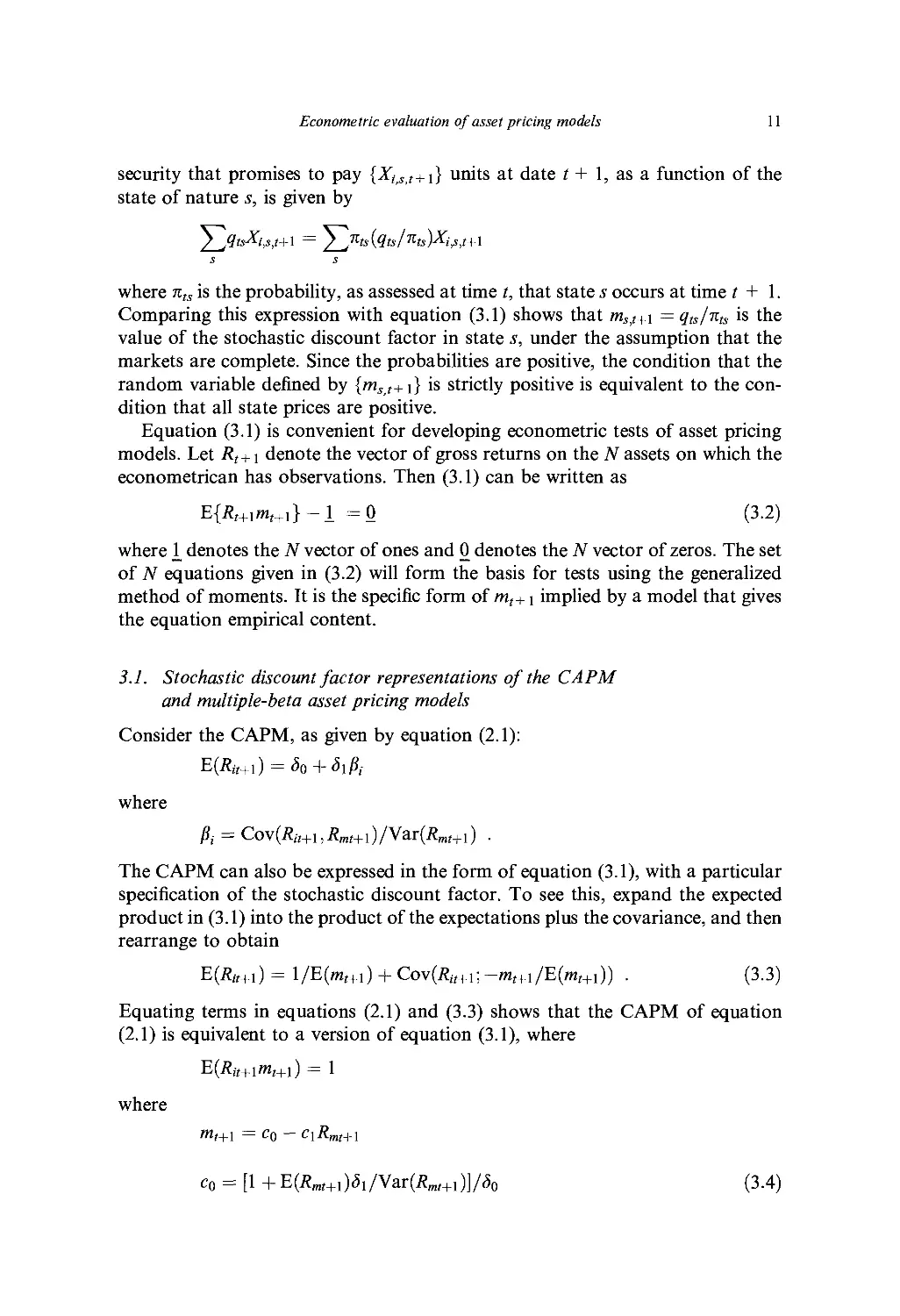

security that promises to pay {XiiS>l+l} units at date t + 1, as a function of the

state of nature s, is given by

2_^1ts^i,s,t+\ = / Kts{<ltslftts)Xi,s,t+\

s s

where nts is the probability, as assessed at time t, that state s occurs at time t + 1.

Comparing this expression with equation (3.1) shows that mStt+\ = qts/nts is the

value of the stochastic discount factor in state s, under the assumption that the

markets are complete. Since the probabilities are positive, the condition that the

random variable defined by {mst+\} is strictly positive is equivalent to the

condition that all state prices are positive.

Equation (3.1) is convenient for developing econometric tests of asset pricing

models. Let Rt+\ denote the vector of gross returns on the N assets on which the

econometrican has observations. Then (3.1) can be written as

E{Rt+lmt+l} - I =0 (3.2)

where 1_ denotes the N vector of ones and 0 denotes the N vector of zeros. The set

of N equations given in (3.2) will form the basis for tests using the generalized

method of moments. It is the specific form of mt+ \ implied by a model that gives

the equation empirical content.

3.1. Stochastic discount factor representations of the CAPM

and multiple-beta asset pricing models

Consider the CAPM, as given by equation (2.1):

E(Rit+l) = d0 + dlpi

where

ft = Cov(Ri!+uRmt+i)/Yar(Rmt+i) .

The CAPM can also be expressed in the form of equation (3.1), with a particular

specification of the stochastic discount factor. To see this, expand the expected

product in (3.1) into the product of the expectations plus the covariance, and then

rearrange to obtain

E(Ri[+l) = l/E(i»r+i) + Cov(Rit+i;-mt+i/E(mt+l)) . (3.3)

Equating terms in equations (2.1) and (3.3) shows that the CAPM of equation

(2.1) is equivalent to a version of equation (3.1), where

E(Rit+iml+i) = 1

where

mt+\ = cq - c\Rmt+\

c0 = [1 +E(Rmt+l)dl/Yar(Rml+l)}/So

(3.4)

12

W. E. Ferson and R. Jagannathan

and

ci = <5i/[<50Var(tfm(+i)].

Equation (3.4) was originally derived by Dybvig and Ingersoll (1982).

Now consider the following multiple-beta model which was given in equation

(2.6):

E(Rlt+i) = S0 + ]T dkpik .

k=\,...,K

It can be readily verified by substitution that this model implies the following

stochastic discount factor representation:

E(Rit+lmit+l) = 1

where

mu+i = Co + ci/h+i + ■ • • + cKfKt+i

with

co = [1 + £{4E(/*)/Var(/t)}]/«5o (3.5)

k

and

cj = - {8j/50Var(fj)}, j=l,...,K .

The preceding results apply to the CAPM and multiple-beta models, interpreted

as statements about the unconditional expected returns of the assets. These

models are also interpreted as statements about conditional expected returns in

some tests where the expectations are conditioned on predetermined, publicly

available information. All of the analysis of this section can be interpreted as

applying to conditional expectations, with the appropriate changes in notation. In

this case, the parameters c0, cu S0, Su etc., will be functions of the time /

information set.

3.2. Other examples of stochastic discount factors

In equilibrium asset pricing models, equation (3.1) arises as a first-order condition

for a consumer-investor's optimization problem. The agent maximizes a lifetime

utility function of consumption (including possibly a bequest to heirs). Denote

this function by V(-). If the allocation of resources to consumption and to

investment assets is optimal, it is not possible to obtain higher utility by changing

the allocation. Suppose that an investor considers reducing consumption at time /

to purchase more of (any) asset. The utility cost at time / of the forgone

consumption is the marginal utility of consumption expenditures Ct, denoted

by (dV jdCt) > 0, multiplied by the price Pu of the asset, measured in the same

units as the consumption expenditures. The expected utility gain of selling the

share and consuming the proceeds at time / + 1 is

Econometric evaluation of asset pricing models

13

Et{{Pif+l+Dtt+l){dVldCt+l)}

where A,*+i is the cash flow or dividend paid at time t+l. If the allocation

maximizes expected utility, the following must hold:

p^Midv/dc,)} = e,{(/Vh +Dit!+l)(dv/dct+l)}.

This intertemporal Euler equation is equivalent to equation (3.1), with

mt+l = (dv/dct+l)/Et{(dv/dct)} . (3.6)

The mt+i in equation (3.6) is the intertemporal marginal rate of substitution

(IMRS) of the representative consumer. The rest of this section shows how many

models in the asset pricing literature are special cases of (3.1), where mt+\ is

defined by equation (3.6).6

If a representative consumer's lifetime utility function V(-) is time-separable,

the marginal utility of consumption at time t, (dV/dCt), depends only on variables

dated at time t. Lucas (1978) and Breeden (1979) derived consumption-based

asset pricing models of the following type, assuming that the preferences are time-

separable and additive:

V = ^P'u(Ct)

t

where ft is a time discount parameter and «(■) is increasing and concave in current

consumption Ct. A convenient specification for w() is

u(C) = [Cl-« - 1]/(1 - a) . (3.7)

In equation (3.7), a > 0 is the concavity parameter of the period utility function.

This function displays constant relative risk aversion equal to a.7 Based on these

assumptions and using aggregate consumption data, a number of empirical

studies test the consumption-based asset pricing model.8

Dunn and Singleton (1986) and Eichenbaum, Hansen, and Singleton (1988),

among others, model consumption expenditures that may be durable in nature.

Durability introduces nonseparability over time, since the flow of consumption

services depends on the consumer's previous expenditures, and the utility is de-

6 Asset pricing models typically focus on the relation of security returns to aggregate quantities. It

is therefore necessary to aggregate the Euler equations of individuals to obtain equilibrium expressions

in terms of aggregate quantities. Theoretical conditions which justify the use of aggregate quantities

are discussed by Gorman (1953), Wilson (1968), Rubinstein (1974), Constantinides (1982), Lewbel

(1989), Luttmer (1993), and Constantinides and Duffle (1994).

7 Relative risk aversion in consumption is defined as -Cw"(C)/w'(C). Absolute risk aversion is

—u"(C)/u'(C), where a prime denotes a derivative. Ferson (1983) studies a consumption-based asset

pricing model with constant absolute risk aversion.

8 Substituting (3.7) into (3.6) shows that m,+1 = /3(C,+i/'C,)~a:. Empirical studies of this model

include Hansen and Singleton (1982, 1983), Ferson (1983), Brown and Gibbons (1985), Jagannathan

(1985), Ferson and Merrick (1987), and Wheatley (1988).

14

W. E. Ferson and R. Jagannathan

fined over the services. Current expenditures increase the consumer's future

utility of services if the expenditures are durable. The consumer optimizes over the

expenditures Ct; thus, durability implies that the marginal utility, (dV/dCt),

depends on variables dated other than date t.

Another form of time-nonseparability arises if the utility function exhibits

habit persistence. Habit persistence means that consumption at two points in time

are complements. For example, the utility of current consumption is evaluated

relative to what was consumed in the past. Such models are derived by Ryder and

Heal (1973), Becker and Murphy (1988), Sundaresan (1989), Constantinides

(1990), Detemple and Zapatero (1991), and Novales (1992), among others.

Ferson and Constantinides (1991) model both the durability of consumption

expenditures and habit persistence in consumption services. They show that the

two combine as opposing effects. In an example where the effect is truncated at a

single lag, the derived utility of expenditures is

V=(l~arlJ2P'(Ct + bCt^0i . (3.8)

t

The marginal utility at time t is

(dv/dct) = p{c, + bc-x)-" + pt+lbEt {(ct+y + bctya} . (3.9)

The coefficient b is positive and measures the rate of depreciation if the good is

durable and there is no habit persistence. If habit persistence is present and the

good is nondurable, this implies that the lagged expenditures enter with a negative

effect (b < 0).

Ferson and Harvey (1992) and Heaton (1995) consider a form of

time-nonseparability which emphasizes seasonality. The utility function is

(l-ay^PiQ + bQ-rf-"

t

where the consumption expenditure decisions are assumed to be quarterly. The

subsistence level (in the case of habit persistence) or the flow of services (in the

case of durability) is assumed to depend only on the consumption expenditure in

the same quarter of the previous year.

Abel (1990) studies a form of habit persistence in which the consumer evaluates

current consumption relative to the aggregate consumption in the previous

period, consumption that he or she takes as exogenous. The utility function is like

equation (3.8), except that the "habit stock," bCt-\, refers to the aggregate

consumption. The idea is that people care about "keeping up with the Joneses."

Campbell and Cochrane (1995) also develop a model in which the habit stock is

taken as exogenous by the consumer. This approach results in a simpler and more

tractable model, since the consumer's optimization does not have to take account

of the effects of current decisions on the future habit stock.

Epstein and Zin (1989, 1991) consider a class of recursive preferences which

can be written as Vt =F(Ct,CEQt{Vt+i)). CEQt{-) is a time t "certainty equiva-

Econometric evaluation of asset pricing models

15

lent" for the future lifetime utility Vt+\. The function F(-,CEQt(-)) generalizes the

usual expected utility function of lifetime consumption and may be time-non-

separable.

Epstein and Zin (1989) study a special case of the recursive preference model in

which the preferences are

V, = [(1 - P)C? + pEt(V?-nP/(l~x)]l/p ■ (3-10)

They show that when p =£ 0 and 1 - a =£ 0, the IMRS for a representative agent

becomes

[P(Ct+ilCt)p-l}(l-*)lp{Rm,t+x}({l-a-p)lp) (3.11)

where Rm>t+\ is the gross market portfolio return. The coefficient of relative risk

aversion for timeless consumption gambles is a, and the elasticity of substitution

for deterministic consumption is (1 — p)~ . If a = 1 — p, the model reduces to the

time-separable, power utility model. If a = 1, the log utility model of Rubinstein

(1976) is obtained.

In summary, many asset pricing models are special cases of the equation (3.1).

Each model specifies that a particular function of the data and the model

parameters is a valid stochastic discount factor. We now turn to the issue of estimating

the models stated in this form.

4. The generalized method of moments

In this section we provide an overview of the generalized method of moments and

a brief review of the associated asymptotic test statistics. We then show how the

GMM is used to estimate and test various specifications of asset pricing models.

4.1. An overview of the generalized method of moments in asset pricing models

Let xt+\ be a vector of observable variables. Given a model which specifies

mt+\ = m(8,xt+\), estimation of the parameters 8 and tests of the model can then

proceed under weak assumptions, using the GMM as developed by Hansen

(1982) and illustrated by Hansen and Singleton (1982) and Brown and Gibbons

(1985). Define the following model error term:

Uij+i =m{e,xt+])Rut+i - 1 . (4.1)

The equation (3.1) implies that Et{uiit+i} = 0 for all i. Given a sample of TV assets

and T time periods, combine the error terms from (4.1) into a T x N matrix u,

with typical row u't+1. By the law of iterated expectations, the model implies that

E(iijj+i \Zt) = 0 for all / and t (for any Zt in the information set at time t), and

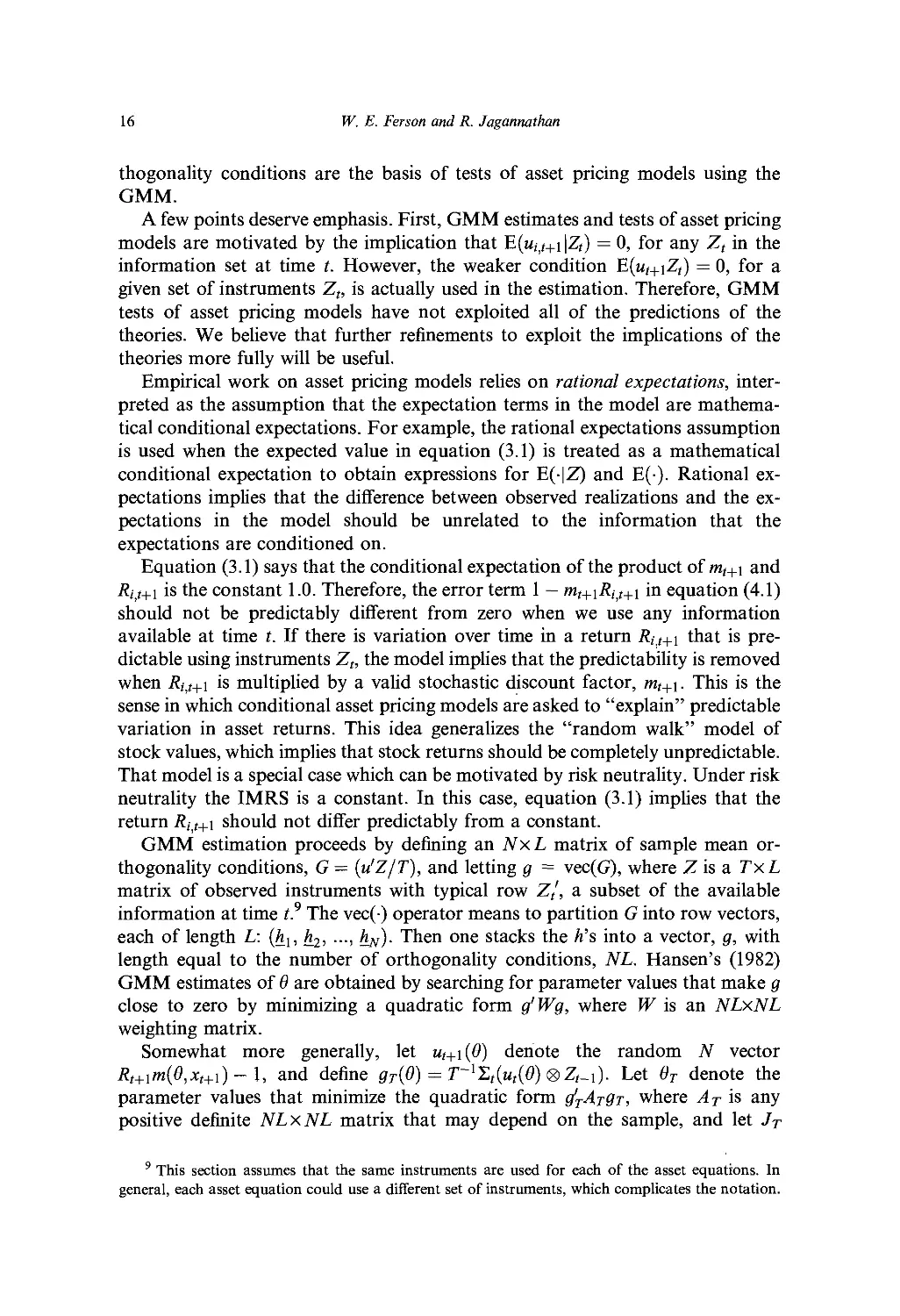

therefore E(ut+\Zt) = 0 for all t. The condition E(ut+\Zt) = 0 says that ut+\ is

orthogonal to Zt and is therefore called an orthogonality condition. These or-

16

W. E. Ferson and R. Jagannathan

thogonality conditions are the basis of tests of asset pricing models using the

GMM.

A few points deserve emphasis. First, GMM estimates and tests of asset pricing

models are motivated by the implication that E(«,-]t+i \Zt) = 0, for any Zt in the

information set at time t. However, the weaker condition H(ut+\Zt) = 0, for a

given set of instruments Zt, is actually used in the estimation. Therefore, GMM

tests of asset pricing models have not exploited all of the predictions of the

theories. We believe that further refinements to exploit the implications of the

theories more fully will be useful.

Empirical work on asset pricing models relies on rational expectations,

interpreted as the assumption that the expectation terms in the model are

mathematical conditional expectations. For example, the rational expectations assumption

is used when the expected value in equation (3.1) is treated as a mathematical

conditional expectation to obtain expressions for E(|Z) and E(). Rational

expectations implies that the difference between observed realizations and the

expectations in the model should be unrelated to the information that the

expectations are conditioned on.

Equation (3.1) says that the conditional expectation of the product of mt+\ and

Ritt+i is the constant 1.0. Therefore, the error term 1 — mt+\Riit+\ in equation (4.1)

should not be predictably different from zero when we use any information

available at time t. If there is variation over time in a return Ri^+\ that is

predictable using instruments Z„ the model implies that the predictability is removed

when Ri,t+\ is multiplied by a valid stochastic discount factor, mt+\. This is the

sense in which conditional asset pricing models are asked to "explain" predictable

variation in asset returns. This idea generalizes the "random walk" model of

stock values, which implies that stock returns should be completely unpredictable.

That model is a special case which can be motivated by risk neutrality. Under risk

neutrality the IMRS is a constant. In this case, equation (3.1) implies that the

return Riit+\ should not differ predictably from a constant.

GMM estimation proceeds by defining an NxL matrix of sample mean

orthogonality conditions, G — (u'Z/T), and letting g = vec(G), where Z is a TxL

matrix of observed instruments with typical row Z/, a subset of the available

information at time t? The vec(-) operator means to partition G into row vectors,

each of length L: (hu h2, ..., hN). Then one stacks the h's into a vector, g, with

length equal to the number of orthogonality conditions, NL. Hansen's (1982)

GMM estimates of 8 are obtained by searching for parameter values that make g

close to zero by minimizing a quadratic form g'Wg, where W is an NLxNL

weighting matrix.

Somewhat more generally, let ut+\{&) denote the random TV vector

Rt+xm(0,x,+\)-\, and define gT(0) = T~%(u,(8) <8>Zt_i). Let 8T denote the

parameter values that minimize the quadratic form c/tAt9t, where AT is any

positive definite NLxNL matrix that may depend on the sample, and let JT

9 This section assumes that the same instruments are used for each of the asset equations. In

general, each asset equation could use a different set of instruments, which complicates the notation.

Econometric evaluation of asset pricing models

17

denote the minimized value of the quadratic form g'TArgT- Jagannathan and

Wang (1993) show that JT will have a weighted chi-square distribution which can

be used for testing the hypothesis that (3.1) holds.

Theorem 4.1. (Jagannathan and Wang, 1993). Suppose that the matrix AT

converges in probability to a constant positive definite matrix A. Assume also that

VTgriOo) -+d N(0,S), where iV(-> •) denotes the multivariate normal distribution,

do are the true parameter values, and S is a positive definite matrix. Let

D = E[dgT/de}\g=9o

and let

Q= (Sl'2)(Al?2)[I - {All2)'D{D'AD)-lD'{All2)\(All2){Sll2)

where A1'2 and S1'2 are the upper triangular matrices from the Cholesky

decompositions of A and S. Then the matrix Q has NL-d\m(Q) nonzero, positive

eigenvalues. Denote these eigenvalues by 1;, i = 1,2,..., NL-dim(6). Then JT

converges to

^lZl + • • • + ^NL-dim(9)XNL-dim(e)

where Xi, i = 1,2,..., NL-dim(6) independent random variables, each with a Chi-

Square distribution with one degree of freedom.

Proof. See Jagannathan and Wang (1993).

Notice that when the matrix A is W = S~l, the matrix Q is idempotent of rank

NL-dim(6). Hence the nonzero eigenvalues of Q are unity. In this case, the

asymptotic distribution reduces to a simple chi-square distribution with NL-

dim(0) degrees of freedom. This is the special case considered by Hansen (1982),

who originally derived the asymptotic distribution of the /r-statistic. The JT-

statistic and its extension, as provided in Theorem 4.1, provide a goodness-of-fit

test for models estimated by the GMM.

Hansen (1982) shows that the estimators of 6 that minimize g'Wg are consistent

and asymptotically normal, for any fixed W. If the weighting matrix Wis chosen

to be the inverse of a consistent estimate of the covariance matrix of the

orthogonality conditions S, the estimators are asymptotically efficient in the class of

estimators that minimize g'Wg for fixed Ws. The asymptotic variance matrix of

this optimal GMM estimator of the parameter vector is given as

Cov(0) = [E(dg/de)'WE(dg/de)]-1 (4.2)

where dg/86 is an NLxdim(6) matrix of derivatives. A consistent estimator for

the asymptotic covariance of the sample mean of the orthogonality conditions is

used in practice. That is, we replace W in (4.2) with Cov(#)-1 and replace

E(dg/d6) with its sample analogue. An example of a consistent estimator for the

optimal weighting matrix is given by Hansen (1982) as

18

W. E. Ferson and R. Jagannathan

Cov(gr) = [(l/r)£ 5>n-i«;+w) ® (Z^)] (4.3)

where <g> denotes the Kronecker product. A special case that often proves useful

arises when the orthogonality conditions are not serially correlated. In that

special case, the optimal weighting matrix is the inverse of the matrix Cov(gr), where

Cov(gr) = [(1/T) 5>,+i«;+1) ® (ZtZ't)} . (4.4)

t

The GMM weighting matrices originally proposed by Hansen (1982) have some

drawbacks. The estimators are not guaranteed to be positive definite, and they

may have poor finite sample properties in some applications. A number of studies

have explored alternative estimators for the GMM weighting matrix. A

prominent example by Newey and West (1987a) suggests weighting the autocovariance

terms in (4.3) with Bartlett weights to achieve a positive semi-definite matrix.

Additional refinements to improve the finite sample properties are proposed by

Andrews (1991), Andrews and Monahan (1992), and Ferson and Foerster (1994).

4.2. Testing hypotheses with the GMM

As we noted above, the /^-statistic provides a goodness-of-fit test for a model

that is estimated by the GMM, when the model is overidentified. Hansen's JT-

statistic is the most commonly used test in the finance literature that has used the

GMM. Other standard statistical tests based on the GMM are also used in the

finance literature for testing asset pricing models. One is a generalization of the

Wald test, and a second is analogous to a likelihood ratio test statistic. Additional

test statistics based on the GMM are reviewed by Newey (1985) and Newey and

West (1987b).

For the Wald test, consider the hypothesis to be tested as expressed in the M-

vector valued function H{9) = 0, where M < dim(0). The GMM estimates of 9 are

asymptotically normal, with mean 9 and variance matrix Cov(0). Given standard

regularity conditions, it follows that the estimates of H are asymptotically

normal, with mean zero and variance matrix HeCov(9)H'e, where subscripts denote

partial derivatives, and that the quadratic form

TH>[HeCov(9)H'e]~lH

is asymptotically chi-square, providing a standard Wald test.

A likelihood ratio type test is described by Newey and West (1987b), Eichen-

baum, Hansen, and Singleton (1988, appendix C), and Gallant (1987). Newey and

West (1987b) call this the D test. Assume that the null hypothesis implies that the

orthogonality conditions E(gr*) — 0 hold, while, under the alternative, a subset

E(gr) — 0 hold. For example, g* = (g, h). When we estimate the model under the

null hypothesis, the quadratic form g*'W*g* is minimized. Let W\x be the upper

left block of W; that is, let it be the estimate of Cov (g)'1 under the null. When we

Econometric evaluation of asset pricing models

19

hold this matrix fixed the model can be estimated under the alternative by

minimizing (/W^ g. The difference of the two quadratic forms

T[g*'Wg* - g'W*ng]

is asymptotically chi-square, with degrees of freedom equal to M if the null

hypothesis is true. Newey and West (1987b) describe additional variations on

these tests.

4.3. Illustrations: Using the GMM to test the conditional CAPM

The CAPM imposes nonlinear overidentifying restrictions on the first and second

moments of asset returns. These restrictions can form a basis for econometric

tests. To see these restrictions more clearly, notice that if an econometrician

knows or can estimate Cov(Rit,Rmt), E(Rmt), Var(i?mr), and E(R0t), it is possible to

compute E(Rit) from the CAPM, using equation (2.1). Given a direct sample

estimate of E(Rit), the expected return is overidentified. It is possible to use the

overidentification to construct a test of the CAPM by asking if the expected

return on the asset is different from the expected return assigned by the model. In

this section we illustrate such tests by using both the traditional, return-beta

formulation and the stochastic discount factor representation of the CAPM.

These examples extend easily to the multiple-beta models.

4.3.1. Static or unconditional CAPMs

If we make the assumption that all the expectation terms in the CAPM refer to

the unconditional expectations, we have an unconditional version of the CAPM. It

is straightforward to estimate and then test an unconditional version of the

CAPM, using equation (3.1) and the stochastic discount factor representation

given in equation (3.4). The stochastic discount factor is

mt+\ = co + c\Rmt+\

where c0 and c\ are fixed parameters. Using only the unconditional expectations,

the model implies that

E{(c0+ci^mr+i)^+i-l} = 0

where Rt+i is the vector of gross asset returns. The vector of sample

orthogonality conditions is

gr = gT(co,ci) = (l/T)^2{(c0 + ciRmt+l)Rt+l - 1} .

t

With assets N > 2, the number of orthogonality conditions is N and the number

of parameters is 2, so the /^-statistic has N - 2 degrees of freedom. Tests of the

unconditional CAPM using the stochastic discount factor representation are

conducted by Carhart et al. (1995) and Jagannathan and Wang (1996), who reject

the model using monthly data for the postwar United States.

20

W. E. Ferson and R. Jagannathan

Tests of the unconditional CAPM may also be conducted using the linear,

return-beta formulation of equation (2.1) and the GMM. Let rt = Rt-.%! be the

vector of excess returns, where i?or is the gross return on some reference asset and

1 is an N vector of ones; also let ut = rt- f5rmt, where /? is the N vector of the betas

of the excess returns, relative to the market, and rmt = Rmt - Rot is the excess

return on the market portfolio. The model implies that

E(ut) = E(utrmt) = 0 .

Let the instruments be Zt = (l,rmt)'. The sample orthogonality condition is then

gT(P) = T-lJ2(rt-Prmt)®Zt .

t

The number of orthogonality conditions is 2N and the number of parameters is

N, so the model is overidentified and may be tested using the /r-statistic.

An alternative approach to testing the model using the return-beta formulation

is to estimate the model under the hypothesis that expected returns depart from

the predictions of the CAPM by a vector of parameters a, which are called

Jensen's alphas. Redefining ut = rt — a — firmt, the model has 2N parameters and

2N orthogonality conditions, so it is exactly identified. It is easy to show that the

GMM estimators of a and /? are the same as the OLS estimators, and equation

(4.4) delivers White's (1980) heteroskedasticity-consistent standard errors. The

CAPM may be tested using a Wald test or the D-statistic, as described above.

Tests of the unconditional CAPM using the linear return-beta formulation are

conducted with the GMM by MacKinlay and Richardson (1991), who reject the

model for monthly U.S. data.

4.3.2. Conditional CAPMs

Empirical studies that rejected the unconditional CAPM, as well as mounting

evidence of predictable variation in the distribution of security rates of return, led

to empirical work on conditional versions of the CAPM starting in the early

1980s. In a conditional asset pricing model it is assumed that the expectation terms

in the model are conditional expectations, given a public information set that is

represented by a vector of predetermined instrumental variables Zt. The multiple-

beta models of Merton (1973) and Cox, Ingersoll, and Ross (1985) are intended to

accommodate conditional expectations. Merton (1973, 1980) and Cox-Ingersoll-

Ross also showed how a conditional version of the CAPM may be derived as a

special case of their intertemporal models. Hansen and Richard (1987) describe

theoretical relations between conditional and unconditional versions of mean-

variance efficiency.

The earliest empirical formulations of conditional asset pricing models were

the latent variable models developed by Hansen and Hodrick (1983) and Gibbons

and Ferson (1985) and later refined by Campbell (1987) and Ferson, Foerster,

and Keim (1993). These models allow time-varying expected returns, but

maintain the assumption that the conditional betas are fixed parameters. Consider the

Econometric evaluation of asset pricing models

21

linear, return-beta representation of the CAPM under these assumptions, writing

E(r(|Z(_i) = /}E(r„,f|Zf_i). The returns are measured in excess of a risk-free asset.

Let r1( be some reference asset with nonzero /?i, so that

E(r1(|Z(_1)=jS1E(rm(|Z(_1) .

Solving this expression for E(rmt\Zt-i) and substituting, we have

E(r,|Zi_i) = CE(n,|£_i)

where C= (j3.//?i) and ./ denotes element-by-element division. With this

substitution, the expected market risk premium is the latent variable in the model,

and C is the N vector of the model parameters. When we form the error term