
Realistic Ray Tracing

PETER SHIRLEY


A K Peters

Natick, Massachusetts

Editorial, Sales, and Customer Service Office

A K Peters, Ltd.

63 South Avenue

Natick, MA 01760

Copyright © 2000 by A K Peters, Ltd.

All rights reserved. No part of the material protected by this copyright notice may

be reproduced or utilized in any form, electronic or mechanical, including

photocopying, recording, or by any information storage and retrieval system, without

written permission from the copyright owner.

Library of Congress Cataloging-in-Publication Data

Shirley, P. (Peter), 1963-

Realistic ray tracing / Peter Shirley.

p. cm.

Includes bibliographical references and index.

ISBN 1-56881-110-1 (alk. paper)

1. Computer graphics. I. Title.

T385 .S438 2000

006.6'2—dc21 00-039987

Printed in Canada

04 03 02 01 00 10 9 8 7 6 5 4 3 2 1

Contents

Preface ix

Introduction xi

I The Basic Ray Tracing Program 1

1 Getting Started 3

1.1 RGB Colors 3

1.2 Images 4

1.3 Vectors 4

1.4 Rays 7

1.5 Intervals 7

1.6 Orthonormal Bases and Frames 7

1.7 Transformation Matrices 9

2 Ray-Object Intersections 17

2.1 Parametric Lines 17

2.2 General Ray-Object Intersections 18

2.3 Ray-Sphere Intersection 21

2.4 Ray-Box Intersection 22

2.5 Ray-Triangle Intersection 24

2.6 More Than One Object 28

2.7 A Simple Ray Tracer 29

3 Lighting and Shadows 31

3.1 Implementing Lighting 31

3.2 Adding Shadows 32

3.3 Outward Normal Vectors 34


4 Viewing 37

4.1 Axis-aligned viewing 37

4.2 Setting View Parameters 39

5 Basic Materials 43

5.1 Smooth Metal 43

5.2 Smooth Dielectrics 44

5.3 Polished Surfaces 47

II Bells and Whistles 51

6 Solid Texture Mapping 53

6.1 Stripe Textures 53

6.2 Solid Noise 54

6.3 Turbulence 56

6.4 Bump Textures 56

7 Image Texture Mapping 59

8 Triangle Meshes 63

9 Instancing 67

9.1 Intersecting Rays with Transformed Objects 68

9.2 Lattices 69

10 Grids for Acceleration of Intersection 71

10.1 Grid Traversal 71

10.2 Grid Creation 76

III Advanced Ray Tracing 79

11 Monte Carlo Integration 81

11.1 Monte Carlo Overview 81

11.2 A Little Probability 83

11.3 Estimating Definite Integrals 87

11.4 Quasi-Monte Carlo Integration 88

11.5 Multidimensional Monte Carlo Integration 89

11.6 Weighted Averages 91

11.7 Multidimensional Quasi-Monte Carlo Integration 92

12 Choosing Sample Points 95

12.1 Overview 95

12.2 Generating 1D Random Numbers With Nonuniform Densities 96

12.3 Generating 2D Random Numbers With Nonuniform Densities 97

12.4 Sampling Triangles 98

12.5 Sampling Disks 100

13 Antialiasing 105

13.1 Rasters 105

13.2 Display Devices 106

13.3 Filtering 107

13.4 Sampling 110

14 Cameras 113

14.1 Thin-lens cameras 113

14.2 Real Cameras 113

14.3 Multidimensional sampling 116

15 Soft Shadows 119

15.1 Mathematical Framework 119

15.2 Sampling a Spherical Luminaire 122

15.3 Nondiffuse Luminaires 124

15.4 Direct Lighting from Many Luminaires 124

16 Path Tracing 129

16.1 Simple Path Tracing 129

16.2 Adding Shadow Rays 133

16.3 Adding Specularity 135

17 General Light Reflection 137

17.1 Scattering functions 137

17.2 An Advanced Model 139

17.3 Implementing the Model 142

17.4 Including Indirect Light 145

18 Spectral Color and Tone Reproduction 147

18.1 Spectral Operations 147

18.2 Luminance 148

18.3 CIE Tristimulus Values 148

18.4 Tone Mapping 151

19 Going Further 153

Bibliography 155

Index 163

Preface

The main code I use to generate images is a ray tracer. The basic ray tracing concepts were developed and popularized primarily by Turner Whitted in the late 1970s and early 1980s. These were extended to probabilistic ray tracing

by Rob Cook and Jim Kajiya in the mid 1980s. Most of the book's content is

due to these pioneers. Of course, many other people dealt with tracking the

movement of rays around an environment, such as Appel and Kay, and with

luck a full history of computer graphics will get written up someday.

I have taught people about ray tracers in several classes and seminars at

Indiana University, Cornell University, and the University of Utah. The notes

from these classes have grown into this book. This book is intended to fill in

missing details that I am always wishing were in the books I read. It was never

meant to be a survey, and I hope that it doesn't read like one. Its subtitle could

be "how Pete Shirley implements a ray tracer," and it should be read in that

light. There are many things I don't cover due to my priorities and sometimes

ignorance, but if you find you are missing something important, or have a better

way to do something, especially if it is simpler, I'd love to hear about it.

If you want to learn everything about ray tracing, there is a variety of books

that you should read, but the most complete collection is the online Ray Tracing

News, edited by Eric Haines. This is a remarkably complete discussion of ray

tracing issues by hard-core ray tracing geeks. Eric also runs the annual ray

tracing meeting at the SIGGRAPH annual conference. I hope you will make

it there! Eric deserves special praise for his many selfless contributions to this

field.

ABOUT THE COVER

The cover image was modeled by Bill Martin at the University of Utah. It uses

the "Utah Teapot" developed by Martin Newell in the 1970s. In the reflections


are one of the first ray traced images by Turner Whitted, and one of the first

bump-mapped images by Jim Blinn. The ray tracer that generated the image was

written by Steve Parker at the University of Utah with a little help from Peter-Pike Sloan, Bill Martin, and myself. It can run interactively on medium-sized

images on a parallel computer. The serial portion of that code is essentially what

is presented in the first two parts of this book.

ACKNOWLEDGEMENTS

This book would not have been possible without the many students who have

helped me determine which presentation methods work best. In addition, much

of the material in the book has been taught to me by my teachers, students, and co-workers. In particular, Teresa Hughey taught me many fundamentals of ray tracing; Don Hearn taught me how to make transform matrices simple; Greg Rogers and Kelvin Sung helped me understand how to use object-oriented programming without becoming a zealot; Don Mitchell provided many e-mail answers to sampling questions I never would have worked out on my own; Jim Arvo helped me understand much of the mathematics behind rendering algorithms; Steve Marschner helped me with many subtle coding and algorithmic issues. Brian Smits and Steve Parker have given me many details of

implementation and optimization that have made my code simpler and faster.

Michael Herf, Steve Marschner, Peter-Pike Sloan, and Mike Stark were extremely helpful in improving drafts of the book. Michael Ashikhmin developed the

reflection model presented in Chapter 17 and graciously allowed me to include it

before its publication. My parents, Bob Shirley and Molly Lind, provided some crucial baby-sitting that allowed the book to get done. My wife Jean did all the

work in our household for a year, or the book never would have happened.

Alice and Klaus Peters deserve special credit for encouraging me to write

this book, and I am very grateful to the publisher of this book, A K Peters, for

their great dedication to quality publications.

Peter Shirley

Salt Lake City, USA

April, 2000

Introduction

This book will show you how to implement a ray tracer. A ray tracer uses simple local algorithms to make pictures that are realistic. Ray tracing is becoming more and more popular because of increases in computing power and because of its ability to cleanly deal with effects such as shadows and transparency. It has even been used in feature animations, and it is just a matter of time before it is used in video games. Most importantly, I think writing ray tracing code is fun, and it is a great way to learn many graphics concepts.

An overview of a ray tracing program is shown in Figure 1. Here, the eye is at point e and it is looking through a 3D "window" at two shaded spheres. For each point on the window we send a 3D "ray" to that point and extend it into the scene as shown by the arrow and dotted line. Whatever this ray hits first is what is seen in that pixel. Each pixel can be computed independently by simple computations to form the image shown in the lower-left of the figure. The two main tasks of the program are determining what point on what object is hit, and what color that point is. The first task will require intersecting 3D lines with surfaces such as spheres, which will require some mathematics. Determining

the color will require some simple physics and the generation of rays from the

intersection point to see how the world "looks" to that point. All of this will turn

out to be quite elegant, which is why people usually enjoy writing ray tracing

programs.

The book is divided into three parts:

• Part I, The Basic Ray Tracing Program, shows how to write a program to make simple images.

• Part II, Bells and Whistles, gives several independent extensions which can be added to your program. These chapters can be read in any order.

• Part III, Advanced Ray Tracing, shows you how to add special effects such as soft shadows, depth-of-field, motion-blur, and indirect lighting.

Figure 1. An overview of the ray tracing concept.

My general philosophy is that clean mathematics maps directly to clean code. The text will be very detail-oriented. Nothing will be left as an exercise to the reader. I also assume some comfort with high-school mathematics in general. If you are math-phobic, now is the time to cure yourself; take it slowly, and keep the algebra firmly mapped to the geometry. Read every section carefully, and go on to the next section only when you are ready. Just don't give up! A firm grasp of the fundamentals is hard to get, but will last a lifetime.

Throughout the book, I assume that light obeys the rules of geometric optics and that polarization does not exist. This is of course not true (Können's book [28] contains many examples where polarization is very important, and diffraction is visible on many animals' exteriors), but for at least the next couple of decades, a lack of polarization or physical optics effects will not be the biggest limitation of rendering codes. Fluorescence is another issue I do not deal with. This is an important effect, especially in man-made environments where almost every bright color is partially due to fluorescence. Glassner [15] developed a method to include fluorescence in a renderer, which interested readers should consult.

I do not talk about radiosity, which already has three good books devoted to it [5, 9, 36]. There are also many topics related to Monte Carlo sampling, variants of path tracing, and hybrid algorithms which will be skipped entirely. For a broader treatment of image synthesis algorithms, the reader should consult the two volumes by Glassner [16] and the book by Watt and Watt [70]. For an excellent treatment of interactive rendering, see the book by Möller and Haines [35].


I build up the ray tracer one step at a time, and your program will expand with each chapter. There will be images in each chapter you should duplicate. I emphasize a clean program and leave efficiency as a second priority. Clean programs are usually fast programs, so this won't cause as many problems as you might expect. At the end, you will have a really powerful program without a lot of code. This program can be improved in the many ways discussed in the last chapter, but it will be very useful as is. Let's begin!

Part I

The Basic Ray Tracing Program

1

Getting Started

Underlying any graphics program is utility code that allows us to think and

program at a higher level. In ray tracing programs, there are certain pieces of

utility code that pop up repeatedly and are worth coding up front. This chapter

describes what code you can write before getting started. It uses the vocabulary

of an object-oriented language such as C++ or Java, but the principles apply to

any language.

1.1 RGB COLORS

Your output image will be in a "red-green-blue" color space, referred to for the

rest of the book as RGB. You should have three real numbers to represent the

three components.

You will want to make all the "normal" arithmetic operations work with

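RGBs. Given RGBs a, b, and scalar k, the following operators should be implemented:

$$\begin{aligned}
+\mathbf{a} &= (a_r, a_g, a_b), \\
-\mathbf{a} &= (-a_r, -a_g, -a_b), \\
k * \mathbf{a} &= (k a_r, k a_g, k a_b), \\
\mathbf{a} * k &= (k a_r, k a_g, k a_b), \\
\mathbf{a} / k &= (a_r / k, a_g / k, a_b / k), \\
\mathbf{a} + \mathbf{b} &= (a_r + b_r, a_g + b_g, a_b + b_b), \\
\mathbf{a} - \mathbf{b} &= (a_r - b_r, a_g - b_g, a_b - b_b), \\
\mathbf{a} * \mathbf{b} &= (a_r b_r, a_g b_g, a_b b_b), \\
\mathbf{a} / \mathbf{b} &= (a_r / b_r, a_g / b_g, a_b / b_b).
\end{aligned}$$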
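A minimal C++ sketch of such a color type follows. The class name rgb, the use of double components, and the choice of free-function operators are assumptions made for this illustration, not the book's own code.

```cpp
// Hypothetical RGB color type; components are plain doubles.
class rgb {
public:
    double r, g, b;
    rgb() : r(0), g(0), b(0) {}
    rgb(double red, double green, double blue) : r(red), g(green), b(blue) {}

    rgb operator+() const { return *this; }
    rgb operator-() const { return rgb(-r, -g, -b); }
};

// Scalar operations (a scalar on either side for multiplication).
inline rgb operator*(double k, const rgb& a) { return rgb(k * a.r, k * a.g, k * a.b); }
inline rgb operator*(const rgb& a, double k) { return k * a; }
inline rgb operator/(const rgb& a, double k) { return rgb(a.r / k, a.g / k, a.b / k); }

// Component-wise operations between two colors.
inline rgb operator+(const rgb& a, const rgb& b) { return rgb(a.r + b.r, a.g + b.g, a.b + b.b); }
inline rgb operator-(const rgb& a, const rgb& b) { return rgb(a.r - b.r, a.g - b.g, a.b - b.b); }
inline rgb operator*(const rgb& a, const rgb& b) { return rgb(a.r * b.r, a.g * b.g, a.b * b.b); }
inline rgb operator/(const rgb& a, const rgb& b) { return rgb(a.r / b.r, a.g / b.g, a.b / b.b); }
```

Writing the operators as free functions is what lets both k * a and a * k compile; a member operator alone could not accept a scalar on its left.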


1.2 IMAGES

You will want to use simple 2D arrays of RGB colors both for storing your

computed image and for texture-mapping.

You will want to write a few utility functions to output images, either as

they are computed or as they are finished. If you output to a file, use whatever

format is easiest to implement. You can always convert the files you want to

save to a compact or portable format offline.

For now, assume the convention that colors whose components are all zero

are black, and colors whose components are all one are white. That is, if

RGB = (1, 1, 1) that represents the color white. You must usually convert each of these numbers $f \in [0, 1)$ into a one-byte quantity $i \in \{0, 1, \ldots, 255\}$, where $[0, 1)$ indicates the interval of numbers from zero to one, including zero but not one. This is accomplished with $i = \operatorname{int}(256 f)$. If you are not sure that $f$ is in that range, an "if" should be added to force $i$ within the range. On most systems, the intensity on the screen is nonlinear; $i = 128$ is significantly less than half as bright as $i = 255$. The standard way to handle this is to assume the screen or printer has a "power curve" described by a parameter gamma ($\gamma$):

$$\text{brightness} \propto \left(\frac{i}{255}\right)^{\gamma}.$$

Thus the "gamma-corrected" image that you output should be

$$i = \operatorname{int}\left(256 f^{1/\gamma}\right).$$

The values of gamma for most systems range from 1.7 to 2.3. Most PCs are 2.2 and most Macs are 1.8. The emerging standard "sRGB" uses gamma 2.2. See www.srgb.com for details. A more complete discussion of this topic is given in Glassner's two-volume text [16] and in Chapter 13.
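A sketch of the gamma-corrected conversion, assuming intensities are stored as double and gamma is passed in (2.2 by default here, the typical PC value). The function name gamma_corrected_byte is hypothetical.

```cpp
#include <cmath>

// i = int(256 * f^(1/gamma)), with the range-forcing "if"s for f outside [0,1).
inline int gamma_corrected_byte(double f, double gamma = 2.2) {
    if (!(f > 0.0)) return 0;    // also catches NaN
    if (f >= 1.0) return 255;
    int i = static_cast<int>(256.0 * std::pow(f, 1.0 / gamma));
    return i > 255 ? 255 : i;    // guard in case pow rounds up to exactly 1.0
}
```

For example, gamma_corrected_byte(0.5) yields 186, whereas without correction (gamma 1) the same intensity maps to 128.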

1.3 VECTORS

A vector describes an n-dimensional offset or location. For our purposes, we

need 2D and 3D vectors. Rather than trying to write an n-dimensional class,

you should write two classes vector2 and vector3.

For the actual storage of the (x, y, z) coordinates you should use an array. For example, you should have data[3] rather than x, y, z. This allows a member function element(i) which returns the ith data element without using any if statements. For example, v.element(1) should return the y coordinate of v. This is most efficient if an array is used.


You may want to make a separate point class to store locations, but my

current belief is that although this improves type checking and aids implicit

documentation, it makes coding more awkward in practice. So I advocate just using vectors to store points; the value of the vector is the displacement from the origin to the point.

The length of a vector a = (a_x, a_y, a_z) is given by

$$\|\mathbf{a}\| = \sqrt{a_x^2 + a_y^2 + a_z^2}.$$

You should have member functions that return ||a|| and ||a||². If the length of a is one, it is called a unit vector. You may want a special class for that, and you may not. It strengthens type checking, but can interfere with efficiency and increases the number of possibly nonobvious type conversions.

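You will want to make all the "normal" arithmetic operations work for vectors. Given vectors a, b, and scalar k, the following operators should be implemented:

$$\begin{aligned}
+\mathbf{a} &= (a_x, a_y, a_z), \\
-\mathbf{a} &= (-a_x, -a_y, -a_z), \\
k * \mathbf{a} &= (k a_x, k a_y, k a_z), \\
\mathbf{a} * k &= (k a_x, k a_y, k a_z), \\
\mathbf{a} / k &= (a_x / k, a_y / k, a_z / k), \\
\mathbf{a} + \mathbf{b} &= (a_x + b_x, a_y + b_y, a_z + b_z), \\
\mathbf{a} - \mathbf{b} &= (a_x - b_x, a_y - b_y, a_z - b_z).
\end{aligned}$$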

There are two important operators for 3D vectors: the dot product (·) and the cross product (×). To compute these use the following formulae:

$$\begin{aligned}
\mathbf{a} \cdot \mathbf{b} &= a_x b_x + a_y b_y + a_z b_z, \\
\mathbf{a} \times \mathbf{b} &= (a_y b_z - a_z b_y,\; a_z b_x - a_x b_z,\; a_x b_y - a_y b_x).
\end{aligned}$$

Note that the dot product returns a scalar and the cross product returns a 3D vector. The dot product has a property that is used in an amazing number of ways in various graphics programs:

$$\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \, \|\mathbf{b}\| \cos\theta,$$

where θ is the angle between a and b. The most common use of the dot product is to compute the cosine of the angle between two vectors. If a and b are unit vectors, this is accomplished with three multiplications and two additions.

The cross product a × b has a different, but equally interesting, geometric property. First, the length of the resulting vector is related to sin θ:

$$\|\mathbf{a} \times \mathbf{b}\| = \|\mathbf{a}\| \, \|\mathbf{b}\| \sin\theta.$$
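The vector operations can be collected into a small C++ class. This sketch stores the coordinates in an array so that element(i) needs no if statements; the accessor names x(), y(), z(), the free functions dot and cross, and the use of double are assumptions of this illustration rather than the book's code.

```cpp
#include <cmath>

class vector3 {
public:
    vector3() : data{0, 0, 0} {}
    vector3(double x, double y, double z) : data{x, y, z} {}

    double x() const { return data[0]; }
    double y() const { return data[1]; }
    double z() const { return data[2]; }
    double element(int i) const { return data[i]; }  // i must be 0, 1, or 2

    double squared_length() const {
        return data[0] * data[0] + data[1] * data[1] + data[2] * data[2];
    }
    double length() const { return std::sqrt(squared_length()); }

    vector3 operator+() const { return *this; }
    vector3 operator-() const { return vector3(-data[0], -data[1], -data[2]); }

private:
    double data[3];
};

inline vector3 operator+(const vector3& a, const vector3& b) {
    return vector3(a.x() + b.x(), a.y() + b.y(), a.z() + b.z());
}
inline vector3 operator-(const vector3& a, const vector3& b) {
    return vector3(a.x() - b.x(), a.y() - b.y(), a.z() - b.z());
}
inline vector3 operator*(double k, const vector3& a) {
    return vector3(k * a.x(), k * a.y(), k * a.z());
}
inline vector3 operator*(const vector3& a, double k) { return k * a; }
inline vector3 operator/(const vector3& a, double k) {
    return vector3(a.x() / k, a.y() / k, a.z() / k);
}

// a . b = ax bx + ay by + az bz
inline double dot(const vector3& a, const vector3& b) {
    return a.x() * b.x() + a.y() * b.y() + a.z() * b.z();
}

// a x b = (ay bz - az by, az bx - ax bz, ax by - ay bx)
inline vector3 cross(const vector3& a, const vector3& b) {
    return vector3(a.y() * b.z() - a.z() * b.y(),
                   a.z() * b.x() - a.x() * b.z(),
                   a.x() * b.y() - a.y() * b.x());
}
```

A quick sanity check is that cross(a, b) is perpendicular to both inputs, so its dot product with either one is zero.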


Figure 1.1. To establish whether a × b points "up" or "down," place your right hand in a way that your wrist is at the bases of a and b and your palm will push the arrow of a toward the arrow of b. Your thumb will point in the direction of the resulting cross product. Since x, y, and z obey this, they are referred to as a "right-handed" coordinate system.

In addition, a × b is perpendicular to both a and b. Note that there are only two possible directions for such a vector. For example, if the vectors in the direction of the x-, y-, and z-axes are given by

$$\mathbf{x} = (1, 0, 0), \qquad \mathbf{y} = (0, 1, 0), \qquad \mathbf{z} = (0, 0, 1),$$

then x × y must be in the plus or minus z direction. You can compute this to determine that, in fact, x × y = z. But how do we visualize what the geometric configuration of these vectors is? To settle this, a convention is used: the right-hand rule. This rule is illustrated in Figure 1.1. Note that this implies the useful properties:

$$\begin{aligned}
\mathbf{x} \times \mathbf{y} &= +\mathbf{z}, & \mathbf{y} \times \mathbf{x} &= -\mathbf{z}, \\
\mathbf{y} \times \mathbf{z} &= +\mathbf{x}, & \mathbf{z} \times \mathbf{y} &= -\mathbf{x}, \\
\mathbf{z} \times \mathbf{x} &= +\mathbf{y}, & \mathbf{x} \times \mathbf{z} &= -\mathbf{y}.
\end{aligned}$$


1.4 RAYS

A 3D ray is really a 3D parametric line. It is usually called a ray by graphics

programmers for reasons discussed in the next chapter. A ray is defined by an

origin location o and a direction vector d. A ray class is very simple; it has these data members and one function: point-at-parameter(t), which returns the point o + td for scalar t. There should be no restriction that d be a unit vector, as will be discussed in Chapter 9.
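A minimal sketch of the ray class follows. A bare three-component struct (hypothetical, named vec3 here) stands in for the vector class so the example compiles on its own, and the function is spelled point_at_parameter because C++ identifiers cannot contain hyphens.

```cpp
// Stand-in for the vector class; illustrative only.
struct vec3 { double x, y, z; };

class ray {
public:
    ray(const vec3& origin, const vec3& direction) : o(origin), d(direction) {}
    const vec3& origin() const { return o; }
    const vec3& direction() const { return d; }

    // Returns o + t d; d is deliberately not required to be unit length.
    vec3 point_at_parameter(double t) const {
        return vec3{o.x + t * d.x, o.y + t * d.y, o.z + t * d.z};
    }

private:
    vec3 o, d;
};
```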

1.5 INTERVALS

An interval is a set of points bounded by an ordered pair [a, b] where b > a.

Intervals are useful in a variety of contexts. You will want to implement a

boolean function that tells whether a scalar t is within the interval, a boolean

function that tells whether two intervals overlap, and a function that computes

the (possibly empty) interval of overlap between two intervals. For example, the

overlap between [1, 3] and [2, 7] is [2, 3].
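One way to sketch these interval operations in C++. How to represent an empty overlap is a design choice the text leaves open; here the hypothetical overlap function returns false for disjoint intervals instead of producing an empty interval object.

```cpp
#include <algorithm>

// Closed interval [a, b]; this sketch assumes a <= b.
struct interval {
    double a, b;

    bool contains(double t) const { return a <= t && t <= b; }
    bool overlaps(const interval& other) const {
        return a <= other.b && other.a <= b;
    }
};

// Stores the overlap of i and j in result and returns true, or returns
// false (leaving result untouched) when the overlap is empty.
inline bool overlap(const interval& i, const interval& j, interval& result) {
    if (!i.overlaps(j)) return false;
    result = interval{std::max(i.a, j.a), std::min(i.b, j.b)};
    return true;
}
```

With the example from the text, overlap of [1, 3] and [2, 7] stores [2, 3].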

1.6 ORTHONORMAL BASES AND FRAMES

Graphics programs usually manage many coordinate systems. For example, in a

flight simulator the airplane is stored in the coordinate system aligned with the

fuselage, wings, and tail. The orientation part of such a coordinate system is

stored as three orthonormal vectors u, v, and w, which are unit length, mutually perpendicular, and right-handed (u × v = w). These three vectors are called the

basis vectors of the coordinate system. As a set they are an orthonormal basis

(ONB).

The x, y, and z vectors form a special ONB called the canonical basis. This is the natural basis of our program, and all other bases are stored in terms of it. For example, any vector can be stored in xyz coordinates:

$$\mathbf{a} = (a_x, a_y, a_z) = a_x \mathbf{x} + a_y \mathbf{y} + a_z \mathbf{z}.$$

We are so familiar with this that we sometimes forget what these coordinates mean. The coordinates (a_x, a_y, a_z) are computed by dot product as

$$a_x = \mathbf{a} \cdot \mathbf{x}, \qquad a_y = \mathbf{a} \cdot \mathbf{y}, \qquad a_z = \mathbf{a} \cdot \mathbf{z}.$$


The same would apply for any ONB. For example,

a = (a • u)u + (a • v)v + (a • w)w.

In programs we would often store these coordinates as (a_u, a_v, a_w). You might have two different vectors declared a and a-uvw that have different numbers in them, but represent the same direction. Note that I do not say the first vector is a-xyz; the default coordinate system is the canonical one. These vectors are the same geometrically even though they have different components. You would multiply them by different basis vectors before you would compare their coordinates. As you will see, we will use a noncanonical coordinate system when it is convenient, and that will make a-uvw have elements like (7, 0, 0) or some other simple set of numbers because we are in a "natural" coordinate system.

This may all sound like a pain, but stick with it because your program will be

simpler and more efficient.

You should add some constructors or utility functions for ONBs. For

example, you should make one construct-from-uv that takes two vectors a and b and produces an ONB where u is parallel to a, v is in the plane of a and b, and w is parallel to a × b. To accomplish this proceed with the following assignments:

$$\mathbf{u} = \frac{\mathbf{a}}{\|\mathbf{a}\|}, \qquad \mathbf{w} = \frac{\mathbf{a} \times \mathbf{b}}{\|\mathbf{a} \times \mathbf{b}\|}, \qquad \mathbf{v} = \mathbf{w} \times \mathbf{u}.$$

Note that neither a nor b need be unit length, but neither can be zero length, and they cannot be parallel. Also note that the order of these operations must be as above. You should make five other constructors. The first is construct-from-vu, which makes v parallel to the first vector. The other four are for vw, wv, uw, and wu.
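A C++ sketch of construct-from-uv, assuming a minimal vec3 struct and helper functions defined only for this example (they are not the book's classes). The assertion catches the degenerate inputs the text warns about, since for zero-length or parallel a and b the cross product a × b is the zero vector.

```cpp
#include <cassert>
#include <cmath>

struct vec3 { double x, y, z; };

inline vec3 operator*(double k, const vec3& a) { return {k * a.x, k * a.y, k * a.z}; }
inline double dot(const vec3& a, const vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline vec3 cross(const vec3& a, const vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline vec3 unit_vector(const vec3& a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

// Orthonormal basis with u x v = w.
struct onb { vec3 u, v, w; };

// u parallel to a, v in the plane of a and b, w parallel to a x b.
// The order matters: w is computed from a and b before v = w x u.
inline onb construct_from_uv(const vec3& a, const vec3& b) {
    onb basis;
    basis.u = unit_vector(a);
    const vec3 axb = cross(a, b);
    assert(dot(axb, axb) > 0.0);  // a, b zero length or parallel
    basis.w = unit_vector(axb);
    basis.v = cross(basis.w, basis.u);
    return basis;
}
```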

Sometimes you want a coordinate system where one of the vectors has a

particular direction, and the other two basis vectors can be arbitrary as long as

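they make a valid ONB. So implement a function construct-from-w which makes w parallel to a. Unfortunately, this requires an if:

$$\mathbf{w} = \frac{\mathbf{a}}{\|\mathbf{a}\|},$$

$$\mathbf{v} = \begin{cases}
\dfrac{(0,\ w_z,\ -w_y)}{\|(0,\ w_z,\ -w_y)\|} & \text{if } |w_x| < |w_y| \text{ and } |w_x| < |w_z|, \\[2ex]
\dfrac{(w_z,\ 0,\ -w_x)}{\|(w_z,\ 0,\ -w_x)\|} & \text{else if } |w_y| < |w_z|, \\[2ex]
\dfrac{(w_y,\ -w_x,\ 0)}{\|(w_y,\ -w_x,\ 0)\|} & \text{otherwise,}
\end{cases}$$

$$\mathbf{u} = \mathbf{v} \times \mathbf{w}.$$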


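This is all so complicated because some components of a could be zero. Zeroing out the component of w with the smallest magnitude guarantees that the two remaining components are not both near zero, so v never becomes (nearly) zero length. You may want to clean this up using utility functions of vectors such as perpendicular-vector, unit-vector, and index-of-min-abs-component.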
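A sketch of construct-from-w under the same kind of assumptions (a minimal, hypothetical vec3 struct and onb struct defined just for this example). It follows the if-else chain exactly, zeroing the smallest-magnitude component of w to build a perpendicular v.

```cpp
#include <cmath>

struct vec3 { double x, y, z; };

inline vec3 operator*(double k, const vec3& a) { return {k * a.x, k * a.y, k * a.z}; }
inline double dot(const vec3& a, const vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline vec3 cross(const vec3& a, const vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline vec3 unit_vector(const vec3& a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

struct onb { vec3 u, v, w; };

// w parallel to a; u and v are any vectors completing a right-handed ONB.
inline onb construct_from_w(const vec3& a) {
    onb b;
    b.w = unit_vector(a);
    const double ax = std::fabs(b.w.x), ay = std::fabs(b.w.y), az = std::fabs(b.w.z);
    if (ax < ay && ax < az)
        b.v = unit_vector(vec3{0.0, b.w.z, -b.w.y});   // x component smallest
    else if (ay < az)
        b.v = unit_vector(vec3{b.w.z, 0.0, -b.w.x});   // y component smallest
    else
        b.v = unit_vector(vec3{b.w.y, -b.w.x, 0.0});   // z component smallest
    b.u = cross(b.v, b.w);                            // so that u x v = w
    return b;
}
```

Inputs with ties, such as a = (0, 0, 1), simply fall through to a later branch, which still yields a valid, nonzero v.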

Once you have an ONB defined by u, v, and w, it is very easy to convert from a = (a_x, a_y, a_z) back and forth to a-uvw = (a_u, a_v, a_w):

$$a_u = \mathbf{a} \cdot \mathbf{u}, \qquad a_v = \mathbf{a} \cdot \mathbf{v}, \qquad a_w = \mathbf{a} \cdot \mathbf{w},$$

$$\mathbf{a} = a_u \mathbf{u} + a_v \mathbf{v} + a_w \mathbf{w}.$$

Note that the asymmetry of these equations arises because a has components stored in the canonical coordinate system while a-uvw does not.
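In code the two conversions reduce to three dot products one way and a weighted sum of the basis vectors the other way. The struct layout and the function names to_uvw and from_uvw are hypothetical.

```cpp
struct vec3 { double x, y, z; };
struct onb { vec3 u, v, w; };

inline double dot(const vec3& a, const vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Canonical components -> (a.u, a.v, a.w).
inline vec3 to_uvw(const onb& b, const vec3& a) {
    return {dot(a, b.u), dot(a, b.v), dot(a, b.w)};
}

// (a_u, a_v, a_w) -> a_u u + a_v v + a_w w, in canonical components.
inline vec3 from_uvw(const onb& b, const vec3& c) {
    return {c.x * b.u.x + c.y * b.v.x + c.z * b.w.x,
            c.x * b.u.y + c.y * b.v.y + c.z * b.w.y,
            c.x * b.u.z + c.y * b.v.z + c.z * b.w.z};
}
```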

To define a whole coordinate system, you will also need an origin point. This

origin combined with an orthonormal basis can be called a frame of reference

or frame. Define a class frame which is just an ONB and a point. It needs no

member functions other than for access and construction.

1.7 TRANSFORMATION MATRICES

You should implement a class transform-matrix which stores a 4 × 4 matrix of scalars. So a matrix has values

$$M = \begin{bmatrix}
m_{00} & m_{01} & m_{02} & m_{03} \\
m_{10} & m_{11} & m_{12} & m_{13} \\
m_{20} & m_{21} & m_{22} & m_{23} \\
0 & 0 & 0 & 1
\end{bmatrix}.$$

This matrix is used to transform vectors to other vectors. For convenience, I advocate storing the inverse matrix N = M⁻¹ along with M. Also, you can of course not bother to store the bottom row of the matrix. Note that points, displacements, and normal vectors must each be transformed differently. I will not go over the details of why these matrices work the way they do here; for more information see any introductory computer graphics book.

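Suppose we are given a transform matrix M and a point (location) p; then the transform rule is

$$M\mathbf{p} = \begin{bmatrix}
m_{00} & m_{01} & m_{02} & m_{03} \\
m_{10} & m_{11} & m_{12} & m_{13} \\
m_{20} & m_{21} & m_{22} & m_{23} \\
m_{30} & m_{31} & m_{32} & m_{33}
\end{bmatrix}
\begin{bmatrix} p_x \\ p_y \\ p_z \\ 1 \end{bmatrix}
= \begin{bmatrix}
m_{00} p_x + m_{01} p_y + m_{02} p_z + m_{03} \\
m_{10} p_x + m_{11} p_y + m_{12} p_z + m_{13} \\
m_{20} p_x + m_{21} p_y + m_{22} p_z + m_{23} \\
m_{30} p_x + m_{31} p_y + m_{32} p_z + m_{33}
\end{bmatrix}.$$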


Figure 1.2. When the normal vector in local coordinates is transformed as a conventional vector, you get a nonnormal vector (dotted). A different transformation rule is needed for normals to get the solid (not dotted) vector.

In practice, all the matrices we deal with will have a bottom row of (0, 0, 0, 1), so the transformed point will have a one for its last coordinate.

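Because vectors indicate a direction, they do not change when translated (e.g., "North" is the same everywhere). But we do want scales and rotations to affect them. Fortunately, we can use the fourth coordinate, which is one for points, to manage this: for the transform of a vector v we set it to zero:

$$M\mathbf{v} = \begin{bmatrix}
m_{00} & m_{01} & m_{02} & m_{03} \\
m_{10} & m_{11} & m_{12} & m_{13} \\
m_{20} & m_{21} & m_{22} & m_{23} \\
m_{30} & m_{31} & m_{32} & m_{33}
\end{bmatrix}
\begin{bmatrix} v_x \\ v_y \\ v_z \\ 0 \end{bmatrix}
= \begin{bmatrix}
m_{00} v_x + m_{01} v_y + m_{02} v_z \\
m_{10} v_x + m_{11} v_y + m_{12} v_z \\
m_{20} v_x + m_{21} v_y + m_{22} v_z \\
m_{30} v_x + m_{31} v_y + m_{32} v_z
\end{bmatrix}.$$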

So when we transform a ray p(t) = o + tv, we need to make sure we transform o and v according to the location and displacement rules, respectively. In your code this implies you should have separate functions such as "transformAsLocation," "transformAsOffset," and "transformAsNormal."

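Surface normal vectors transform a different way still (Figure 1.2). A nice discussion of this is available in the OpenGL Programming Guide [72], which has a wonderful discussion of transformation matrices in general. Instead of Mn, as it would be for a conventional vector, it is (M⁻¹)ᵀn, where T indicates transpose. If you follow my recommendation and store N = M⁻¹, this gives

$$(M^{-1})^T \mathbf{n} = N^T \mathbf{n} = \begin{bmatrix}
n_{00} & n_{10} & n_{20} & n_{30} \\
n_{01} & n_{11} & n_{21} & n_{31} \\
n_{02} & n_{12} & n_{22} & n_{32} \\
n_{03} & n_{13} & n_{23} & n_{33}
\end{bmatrix}
\begin{bmatrix} n_x \\ n_y \\ n_z \\ 0 \end{bmatrix}
= \begin{bmatrix}
n_{00} n_x + n_{10} n_y + n_{20} n_z \\
n_{01} n_x + n_{11} n_y + n_{21} n_z \\
n_{02} n_x + n_{12} n_y + n_{22} n_z \\
n_{03} n_x + n_{13} n_y + n_{23} n_z
\end{bmatrix}.$$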


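The first operator the matrix should have is transform-location, which takes the location o to another location o′:

$$\begin{bmatrix} o'_x \\ o'_y \\ o'_z \\ 1 \end{bmatrix}
= \begin{bmatrix}
m_{00} & m_{01} & m_{02} & m_{03} \\
m_{10} & m_{11} & m_{12} & m_{13} \\
m_{20} & m_{21} & m_{22} & m_{23} \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} o_x \\ o_y \\ o_z \\ 1 \end{bmatrix}
= \begin{bmatrix}
m_{00} o_x + m_{01} o_y + m_{02} o_z + m_{03} \\
m_{10} o_x + m_{11} o_y + m_{12} o_z + m_{13} \\
m_{20} o_x + m_{21} o_y + m_{22} o_z + m_{23} \\
1
\end{bmatrix}.$$

The same matrix is also used to transform vectors to other vectors. Since vectors do not get changed when translated, we "turn off" the last column of the matrix for a vector v by giving it a fourth coordinate of zero:

$$\begin{bmatrix} v'_x \\ v'_y \\ v'_z \\ 0 \end{bmatrix}
= \begin{bmatrix}
m_{00} & m_{01} & m_{02} & m_{03} \\
m_{10} & m_{11} & m_{12} & m_{13} \\
m_{20} & m_{21} & m_{22} & m_{23} \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} v_x \\ v_y \\ v_z \\ 0 \end{bmatrix}
= \begin{bmatrix}
m_{00} v_x + m_{01} v_y + m_{02} v_z \\
m_{10} v_x + m_{11} v_y + m_{12} v_z \\
m_{20} v_x + m_{21} v_y + m_{22} v_z \\
0
\end{bmatrix}.$$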

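Finally, the normal vector n′ to a surface transformed by M is

$$\mathbf{n}' = (M^{-1})^T \mathbf{n} = \begin{bmatrix}
n_{00} & n_{10} & n_{20} & 0 \\
n_{01} & n_{11} & n_{21} & 0 \\
n_{02} & n_{12} & n_{22} & 0 \\
n_{03} & n_{13} & n_{23} & 1
\end{bmatrix} \mathbf{n},$$

where n_ij are the elements of N = M⁻¹. Here n is treated as a direction (fourth coordinate zero), and only the first three components of the result are used.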

Your class should contain both M and N, and should have functions to transform points, vectors, and surface normal vectors. This is a total of six functions: three for each of the two matrices.
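A sketch of those six functions, assuming the caller supplies both M and its inverse N (a general 4 × 4 inversion is left out) and that both bottom rows are (0, 0, 0, 1). The type names mat4 and transform_matrix and the method names are illustrative adaptations of the text's transformAsLocation, transformAsOffset, and transformAsNormal.

```cpp
struct vec3 { double x, y, z; };
struct mat4 { double e[4][4]; };   // e[row][column]

class transform_matrix {
public:
    transform_matrix(const mat4& m, const mat4& n) : M(m), N(n) {}

    // Points: fourth coordinate 1, so the translation column applies.
    vec3 transform_location(const vec3& p) const { return apply(M, p, 1.0); }
    // Displacements: fourth coordinate 0, so translation is ignored.
    vec3 transform_offset(const vec3& v) const { return apply(M, v, 0.0); }
    // Normals: multiply by (M^-1)^T = N^T.
    vec3 transform_normal(const vec3& n) const { return apply_transpose(N, n); }

    // The same three operations for the inverse transform (N, with N^-1 = M).
    vec3 inverse_transform_location(const vec3& p) const { return apply(N, p, 1.0); }
    vec3 inverse_transform_offset(const vec3& v) const { return apply(N, v, 0.0); }
    vec3 inverse_transform_normal(const vec3& n) const { return apply_transpose(M, n); }

private:
    // Upper three rows of A times (p, w).
    static vec3 apply(const mat4& A, const vec3& p, double w) {
        return {A.e[0][0] * p.x + A.e[0][1] * p.y + A.e[0][2] * p.z + A.e[0][3] * w,
                A.e[1][0] * p.x + A.e[1][1] * p.y + A.e[1][2] * p.z + A.e[1][3] * w,
                A.e[2][0] * p.x + A.e[2][1] * p.y + A.e[2][2] * p.z + A.e[2][3] * w};
    }
    // Upper-left 3x3 of A^T times n; the fourth component of the result is dropped.
    static vec3 apply_transpose(const mat4& A, const vec3& n) {
        return {A.e[0][0] * n.x + A.e[1][0] * n.y + A.e[2][0] * n.z,
                A.e[0][1] * n.x + A.e[1][1] * n.y + A.e[2][1] * n.z,
                A.e[0][2] * n.x + A.e[1][2] * n.y + A.e[2][2] * n.z};
    }
    mat4 M, N;
};
```

With a nonuniform scale such as x ↦ 2x + 1, transforming the normal (1, −1, 0) of the plane x = y with transform_offset would give a vector that is no longer perpendicular to the transformed plane, while transform_normal gives (0.5, −1, 0), which is.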

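You will also need functions or constructors for common matrix types. First is an identity that leaves points unchanged:

$$\text{identity} = \begin{bmatrix}
1 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}.$$

To translate (move) by a vector t = (t_x, t_y, t_z):

$$\text{translate}(\mathbf{t}) = \begin{bmatrix}
1 & 0 & 0 & t_x \\
0 & 1 & 0 & t_y \\
0 & 0 & 1 & t_z \\
0 & 0 & 0 & 1
\end{bmatrix}.$$

To scale by coefficients (s_x, s_y, s_z):

$$\text{scale}(s_x, s_y, s_z) = \begin{bmatrix}
s_x & 0 & 0 & 0 \\
0 & s_y & 0 & 0 \\
0 & 0 & s_z & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}.$$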


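To rotate counterclockwise around the x, y, or z axis by angle θ:

$$\text{rotate-x}(\theta) = \begin{bmatrix}
1 & 0 & 0 & 0 \\
0 & \cos\theta & -\sin\theta & 0 \\
0 & \sin\theta & \cos\theta & 0 \\
0 & 0 & 0 & 1
\end{bmatrix},$$

$$\text{rotate-y}(\theta) = \begin{bmatrix}
\cos\theta & 0 & \sin\theta & 0 \\
0 & 1 & 0 & 0 \\
-\sin\theta & 0 & \cos\theta & 0 \\
0 & 0 & 0 & 1
\end{bmatrix},$$

$$\text{rotate-z}(\theta) = \begin{bmatrix}
\cos\theta & -\sin\theta & 0 & 0 \\
\sin\theta & \cos\theta & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}.$$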
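Because each of these basic matrices has a simple closed-form inverse, a constructor can build M and N together without any general matrix inversion. The sketch below shows translate, scale, and rotate-z; rotate-x and rotate-y follow the same pattern with the cosine and sine entries placed as in their matrices. The struct names mat4 and matrix_pair and the multiply helper are hypothetical.

```cpp
#include <cmath>

struct mat4 { double e[4][4]; };   // e[row][column]
struct matrix_pair { mat4 M, N; }; // a matrix and its inverse

inline mat4 identity4() {
    return mat4{{{1, 0, 0, 0}, {0, 1, 0, 0}, {0, 0, 1, 0}, {0, 0, 0, 1}}};
}

inline matrix_pair translate(double tx, double ty, double tz) {
    mat4 m = identity4(), n = identity4();
    m.e[0][3] = tx;  m.e[1][3] = ty;  m.e[2][3] = tz;
    n.e[0][3] = -tx; n.e[1][3] = -ty; n.e[2][3] = -tz;   // inverse: translate(-t)
    return {m, n};
}

// Assumes sx, sy, sz are all nonzero.
inline matrix_pair scale(double sx, double sy, double sz) {
    mat4 m = identity4(), n = identity4();
    m.e[0][0] = sx;       m.e[1][1] = sy;       m.e[2][2] = sz;
    n.e[0][0] = 1.0 / sx; n.e[1][1] = 1.0 / sy; n.e[2][2] = 1.0 / sz;
    return {m, n};
}

// Counterclockwise rotation about z; the inverse is the transpose.
inline matrix_pair rotate_z(double theta) {
    const double c = std::cos(theta), s = std::sin(theta);
    mat4 m = identity4(), n = identity4();
    m.e[0][0] = c; m.e[0][1] = -s; m.e[1][0] = s;  m.e[1][1] = c;
    n.e[0][0] = c; n.e[0][1] = s;  n.e[1][0] = -s; n.e[1][1] = c;
    return {m, n};
}

inline mat4 multiply(const mat4& a, const mat4& b) {
    mat4 r{};   // zero-initialized
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r.e[i][j] += a.e[i][k] * b.e[k][j];
    return r;
}
```

Multiplying M by N should give the identity, which makes a cheap consistency check for every new constructor you add.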

For more arbitrary rotations, we employ ONBs. This may be the most important measure to avoid ugly programs, so make sure you absorb this part of the material if you aren't familiar with it.

Suppose we want to find the unique rotation matrix which takes the ONB defined by u, v, w to the canonical ONB defined by x, y, z (Figure 1.3). A nice way to work out what matrix is required is to start from the reverse transform. Whatever this matrix is, its inverse will take the canonical basis in the other direction, i.e., it will take x to u, y to v, and z to w. So if we assume Mx = u for that inverse matrix M, we can see what the first column of the matrix is. The following matrix accomplishes exactly that:

$$\begin{bmatrix}
u_x & & & 0 \\
u_y & & & 0 \\
u_z & & & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}
= \begin{bmatrix} u_x \\ u_y \\ u_z \\ 0 \end{bmatrix}.$$

You can verify that there are no other possibilities for these numbers in the first column, and that the numbers in the blank fields don't matter. We can observe similar behavior by transforming the y and z axes to determine

$$\text{rotate-xyz-to-uvw} = \begin{bmatrix}
u_x & v_x & w_x & 0 \\
u_y & v_y & w_y & 0 \\
u_z & v_z & w_z & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}.$$

Figure 1.3. Some rotation matrix takes an ONB defined by u, v, w to the canonical ONB defined by x, y, z.

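Because the algebraic inverse of a transform matrix is always the matrix that performs the opposite geometric transform, and the algebraic inverse of a rotation matrix is its transpose, we know

$$\text{rotate-uvw-to-xyz} = \begin{bmatrix}
u_x & u_y & u_z & 0 \\
v_x & v_y & v_z & 0 \\
w_x & w_y & w_z & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}.$$

Note that this transpose property assumes the matrix is orthonormal, which all rotation matrices are. If we apply this matrix to u we get

$$\begin{bmatrix}
u_x & u_y & u_z & 0 \\
v_x & v_y & v_z & 0 \\
w_x & w_y & w_z & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} u_x \\ u_y \\ u_z \\ 0 \end{bmatrix}
= \begin{bmatrix} \mathbf{u} \cdot \mathbf{u} \\ \mathbf{v} \cdot \mathbf{u} \\ \mathbf{w} \cdot \mathbf{u} \\ 0 \end{bmatrix}
= \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}.$$

The dot products v · u and w · u are zero because u, v, and w are mutually orthogonal. Similar behavior is true for transforming v and w.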

1.7.1 Using Transformation Matrices

Transform matrices are usually applied in series. So, for example, to rotate a

sphere centered at c about its own center by an angle θ, where the "north pole" is an axis in direction a, we

1. move the sphere to the origin ($M_1$),

2. rotate a to align with z ($M_2$),

3. rotate by θ about z ($M_3$),

4. rotate z back to a ($M_2^{-1}$),

5. move the sphere back to c ($M_1^{-1}$).

Algebraically, this composite transform M is given by

$$M = M_1^{-1} M_2^{-1} M_3 M_2 M_1.$$

Because the vector is multiplied on the right of the matrix, the transforms that are applied first are on the right. The only nonobvious matrix is M2, which aligns a with z. This is surprisingly easy: just construct an ONB where w is aligned to a as described earlier, and then create a rotate-uvw-to-xyz matrix as described earlier.
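The five steps can be sketched as a single function. This assumes the hypothetical vec3 and mat4 types below, builds M2 as rotate-uvw-to-xyz from an ONB whose w is parallel to a (choosing v with the same if-else rule as construct-from-w), and uses the transpose of M2 as its inverse.

```cpp
#include <cmath>

struct vec3 { double x, y, z; };
struct mat4 { double e[4][4]; };   // e[row][column]

inline vec3 cross(const vec3& a, const vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline vec3 normalize(const vec3& a) {
    const double len = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
    return {a.x / len, a.y / len, a.z / len};
}
inline mat4 identity4() {
    return mat4{{{1, 0, 0, 0}, {0, 1, 0, 0}, {0, 0, 1, 0}, {0, 0, 0, 1}}};
}
inline mat4 multiply(const mat4& a, const mat4& b) {
    mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r.e[i][j] += a.e[i][k] * b.e[k][j];
    return r;
}
inline mat4 translate(const vec3& t) {
    mat4 m = identity4();
    m.e[0][3] = t.x; m.e[1][3] = t.y; m.e[2][3] = t.z;
    return m;
}
inline mat4 rotate_z(double theta) {
    mat4 m = identity4();
    const double c = std::cos(theta), s = std::sin(theta);
    m.e[0][0] = c; m.e[0][1] = -s; m.e[1][0] = s; m.e[1][1] = c;
    return m;
}

// M2 = rotate-uvw-to-xyz for an ONB with w parallel to a; M2^-1 is its transpose.
inline void align_with_z(const vec3& a, mat4& m2, mat4& m2_inverse) {
    const vec3 w = normalize(a);
    vec3 v;
    if (std::fabs(w.x) < std::fabs(w.y) && std::fabs(w.x) < std::fabs(w.z))
        v = normalize(vec3{0.0, w.z, -w.y});
    else if (std::fabs(w.y) < std::fabs(w.z))
        v = normalize(vec3{w.z, 0.0, -w.x});
    else
        v = normalize(vec3{w.y, -w.x, 0.0});
    const vec3 u = cross(v, w);
    const vec3 rows[3] = {u, v, w};
    m2 = identity4();
    m2_inverse = identity4();
    for (int i = 0; i < 3; ++i) {
        const double c[3] = {rows[i].x, rows[i].y, rows[i].z};
        for (int j = 0; j < 3; ++j) {
            m2.e[i][j] = c[j];          // row i of M2 is u, v, or w
            m2_inverse.e[j][i] = c[j];  // column i of the transpose
        }
    }
}

// M = M1^-1 M2^-1 M3 M2 M1: rotate by theta about the axis through c with direction a.
inline mat4 rotate_about_axis(const vec3& c, const vec3& a, double theta) {
    mat4 m2, m2_inverse;
    align_with_z(a, m2, m2_inverse);
    const mat4 m1 = translate(vec3{-c.x, -c.y, -c.z});
    const mat4 m1_inverse = translate(c);
    return multiply(m1_inverse,
                    multiply(m2_inverse, multiply(rotate_z(theta), multiply(m2, m1))));
}

inline vec3 transform_location(const mat4& m, const vec3& p) {
    return {m.e[0][0] * p.x + m.e[0][1] * p.y + m.e[0][2] * p.z + m.e[0][3],
            m.e[1][0] * p.x + m.e[1][1] * p.y + m.e[1][2] * p.z + m.e[1][3],
            m.e[2][0] * p.x + m.e[2][1] * p.y + m.e[2][2] * p.z + m.e[2][3]};
}
```

A useful check is that the center c itself is left unchanged by the composite matrix for any axis and angle.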

1.7.2 Coordinate Changes

Coordinate transforms are a pain because it's easy to get things backwards. Suppose we have a canonical coordinate system, which is the implicit one that forms the default frame of reference in our program. This is defined by an origin location o and basis vectors x, y, and z. Note that o, x, y, and z are not really written down anywhere; they are our universal reference and are thus not expressible numerically. They do, however, allow us to set up geometric information that relates locations to other locations. For example, we know that point (3, 4, 2) is one unit away from (3, 4, 3). We sometimes forget all the coordinate machinery that tells us this is true. The point (3, 4, 2) is "really" shorthand for $\mathbf{o} + 3\mathbf{x} + 4\mathbf{y} + 2\mathbf{z}$. Some grinding tells us the difference vector between the two points is 1z, so they are one unit apart.

Suppose I don't like the origin at o. For convenience I wish it were at o′ = (2, 0, 0). If I were to write down the coordinates of a point p = (x, y, z) in this new coordinate system, they would be (x - 2, y, z). Recall that this is really shorthand for $\mathbf{o}' + (x - 2)\mathbf{x} + y\mathbf{y} + z\mathbf{z}$. If we want to generalize this, and the new origin is o′ = (o′_x, o′_y, o′_z), the canonical representation of the point is (x, y, z), and the representation in the new coordinate system is (x′, y′, z′), then we know (x′, y′, z′) = (x - o′_x, y - o′_y, z - o′_z). In matrix form this is expressed as

$$\begin{bmatrix} x' \\ y' \\ z' \\ 1 \end{bmatrix}
= \begin{bmatrix}
1 & 0 & 0 & -o'_x \\
0 & 1 & 0 & -o'_y \\
0 & 0 & 1 & -o'_z \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}.$$

And of course going from an (x′, y′, z′) in our new coordinate system to canonical coordinates is achieved by

$$\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
= \begin{bmatrix}
1 & 0 & 0 & o'_x \\
0 & 1 & 0 & o'_y \\
0 & 0 & 1 & o'_z \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x' \\ y' \\ z' \\ 1 \end{bmatrix}.$$

Note that in all of the above, nothing moved; we are only changing how the

same location is described in two different coordinate systems.
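As a concrete check with the numbers from the earlier example, take the point (3, 4, 2) and the new origin o′ = (2, 0, 0). The translation matrix that subtracts o′ gives the expected coordinates (1, 4, 2) in the new system, and the matrix that adds o′ back recovers the canonical coordinates:

$$\begin{bmatrix}
1 & 0 & 0 & -2 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} 3 \\ 4 \\ 2 \\ 1 \end{bmatrix}
= \begin{bmatrix} 1 \\ 4 \\ 2 \\ 1 \end{bmatrix},
\qquad
\begin{bmatrix}
1 & 0 & 0 & 2 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} 1 \\ 4 \\ 2 \\ 1 \end{bmatrix}
= \begin{bmatrix} 3 \\ 4 \\ 2 \\ 1 \end{bmatrix}.$$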

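The same reasoning applies if we want to express the location (x, y, z) in a coordinate system with origin o and an ONB u, v, w. We know that if (u, v, w) represents the point in the new coordinate system, then

$$\mathbf{o} + x\mathbf{x} + y\mathbf{y} + z\mathbf{z} = \mathbf{o} + u\mathbf{u} + v\mathbf{v} + w\mathbf{w}.$$

Since the canonical basis vectors are linearly independent, we can expand this equation in each dimension:

$$\begin{aligned} x &= u\,u_x + v\,v_x + w\,w_x, \\ y &= u\,u_y + v\,v_y + w\,w_y, \\ z &= u\,u_z + v\,v_z + w\,w_z. \end{aligned}$$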

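In matrix form this becomes

$$\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \begin{bmatrix} u_x & v_x & w_x & 0 \\ u_y & v_y & w_y & 0 \\ u_z & v_z & w_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} u \\ v \\ w \\ 1 \end{bmatrix}.$$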

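And to go from a point in canonical coordinates to the new coordinates, we can take advantage of the matrix above being orthonormal (its inverse is its transpose):

$$\begin{bmatrix} u \\ v \\ w \\ 1 \end{bmatrix} = \begin{bmatrix} u_x & u_y & u_z & 0 \\ v_x & v_y & v_z & 0 \\ w_x & w_y & w_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}.$$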

Note that these are just the same matrices we used to actually rotate the coordinate systems to each other!
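The orthonormal case can be checked the same way. This Python sketch drops the trivial fourth row and column and uses a hypothetical right-handed basis (u = y, v = −x, w = z, a 90-degree rotation about z chosen only for illustration). It confirms that the transpose undoes the matrix whose columns are u, v, and w.

```python
def columns_matrix(u, v, w):
    """3x3 matrix whose columns are u, v, w: maps (u, v, w) coords to (x, y, z)."""
    return [[u[0], v[0], w[0]],
            [u[1], v[1], w[1]],
            [u[2], v[2], w[2]]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def mat_vec3(m, p):
    return tuple(sum(m[r][c] * p[c] for c in range(3)) for r in range(3))


# Hypothetical right-handed ONB: the canonical axes rotated 90 degrees about z.
u, v, w = (0, 1, 0), (-1, 0, 0), (0, 0, 1)

to_canonical = columns_matrix(u, v, w)
to_uvw = transpose(to_canonical)          # inverse = transpose for an ONB

xyz = mat_vec3(to_canonical, (1, 2, 3))   # 1u + 2v + 3w = (-2, 1, 3)
uvw = mat_vec3(to_uvw, xyz)               # back to (1, 2, 3)
```

Because the rows of `to_uvw` are u, v, and w, each uvw coordinate is simply a dot product of the point with one basis vector.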

Now suppose we want a coordinate system with a new origin o' and basis vectors u, v, and w. We can move from one coordinate system to another by setting up a third coordinate system with canonical basis vectors and origin o'. This implies

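$$\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & o'_x \\ 0 & 1 & 0 & o'_y \\ 0 & 0 & 1 & o'_z \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} u_x & v_x & w_x & 0 \\ u_y & v_y & w_y & 0 \\ u_z & v_z & w_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} u \\ v \\ w \\ 1 \end{bmatrix}.$$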

And of course the reverse.

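$$\begin{bmatrix} u \\ v \\ w \\ 1 \end{bmatrix} = \begin{bmatrix} u_x & u_y & u_z & 0 \\ v_x & v_y & v_z & 0 \\ w_x & w_y & w_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 & -o'_x \\ 0 & 1 & 0 & -o'_y \\ 0 & 0 & 1 & -o'_z \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}.$$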

Now let's apply this to a problem: change FROM a point written in terms of a coordinate system with origin at (a, b, c) and basis vectors $\mathbf{u} = (u_x, u_y, u_z)$, $\mathbf{v} = (v_x, v_y, v_z)$, $\mathbf{w} = (w_x, w_y, w_z)$ (i.e., the point (u, v, w) is location $\mathbf{o} + a\mathbf{x} + b\mathbf{y} + c\mathbf{z} + u\mathbf{u} + v\mathbf{v} + w\mathbf{w}$) TO a point written in terms of a coordinate system with origin at (d, e, f) and basis vectors $\mathbf{r} = (r_x, r_y, r_z)$, $\mathbf{s} = (s_x, s_y, s_z)$, $\mathbf{t} = (t_x, t_y, t_z)$.

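This is just switching from one frame to the canonical frame and from the canonical frame to a third frame. Writing the point's coordinates in the second frame as (r, s, t):

$$\begin{bmatrix} r \\ s \\ t \\ 1 \end{bmatrix} = \begin{bmatrix} r_x & r_y & r_z & 0 \\ s_x & s_y & s_z & 0 \\ t_x & t_y & t_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 & -d \\ 0 & 1 & 0 & -e \\ 0 & 0 & 1 & -f \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 & a \\ 0 & 1 & 0 & b \\ 0 & 0 & 1 & c \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} u_x & v_x & w_x & 0 \\ u_y & v_y & w_y & 0 \\ u_z & v_z & w_z & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} u \\ v \\ w \\ 1 \end{bmatrix}.$$

All of that may seem confusing, but it will get easier as you use it more. And if you are serious about graphics, read it and reread it, playing around with 2D examples, until you are comfortable with it. Almost all graphics programs, ray tracers or otherwise, use these concepts. The matrix stacks and transform nodes of graphics APIs are built assuming the programmer is familiar with these concepts.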
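The frame-to-frame product above can be composed in code. Below is a minimal Python sketch of the four-matrix product; the function names are hypothetical, and the test frames are made up for illustration: frame 1 has origin (1, 0, 0) and the rotated basis u = y, v = −x, w = z, and frame 2 has origin (0, 0, 5) and the canonical basis.

```python
def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(offset):
    return [[1, 0, 0, offset[0]],
            [0, 1, 0, offset[1]],
            [0, 0, 1, offset[2]],
            [0, 0, 0, 1]]


def basis_columns(u, v, w):
    """Frame coordinates -> canonical directions (basis vectors as columns)."""
    return [[u[0], v[0], w[0], 0],
            [u[1], v[1], w[1], 0],
            [u[2], v[2], w[2], 0],
            [0, 0, 0, 1]]


def basis_rows(r, s, t):
    """Canonical directions -> frame coordinates (basis vectors as rows)."""
    return [[r[0], r[1], r[2], 0],
            [s[0], s[1], s[2], 0],
            [t[0], t[1], t[2], 0],
            [0, 0, 0, 1]]


def frame_to_frame(origin1, uvw, origin2, rst):
    """Matrix taking (u, v, w) coordinates in frame 1 to (r, s, t) in frame 2."""
    m = basis_rows(*rst)
    m = matmul(m, translation([-c for c in origin2]))
    m = matmul(m, translation(origin1))
    return matmul(m, basis_columns(*uvw))


def transform_point(m, p):
    h = (p[0], p[1], p[2], 1)
    return tuple(sum(m[i][j] * h[j] for j in range(4)) for i in range(3))


m = frame_to_frame((1, 0, 0), ((0, 1, 0), (-1, 0, 0), (0, 0, 1)),
                   (0, 0, 5), ((1, 0, 0), (0, 1, 0), (0, 0, 1)))
result = transform_point(m, (1, 2, 3))   # (-1, 1, -2)
```

By hand: (1, 2, 3) in frame 1 is (1, 0, 0) + (−2, 1, 3) = (−1, 1, 3) canonically, and subtracting frame 2's origin gives (−1, 1, −2).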

Ray-Object Intersections

This chapter describes how to compute intersections between rays and objects,

and how to produce a simple ray traced image.

The general problem we are trying to solve is at what point, if any, a 3D line intersects a 3D surface. The line is always given in parametric form because that is the most convenient for ray tracing. The surface is sometimes given in implicit form and sometimes in parametric form. Solving for intersection is usually a mechanical algebraic exercise.

2.1 PARAMETRIC LINES

A line in 2D can be given in implicit form, usually $y - mx - b = 0$, or in parametric form. Parametric form uses some real parameter to mark position on the line. For example, a parametric line in 2D might be written as

$$x(t) = 2 + 7t, \quad y(t) = 1 + 7t.$$

This would be equivalent to the explicit form $y = x - 1$.

In 3D, a parametric line might be

$$x(t) = 2 + 7t, \quad y(t) = 1 + 2t, \quad z(t) = 3 - 5t.$$

This is a cumbersome thing to write, and does not translate well to code variables, so we will write it in vector form:

$$\mathbf{p}(t) = \mathbf{o} + t\mathbf{d},$$

where, for this example, o and d are given by

$$\mathbf{o} = (2, 1, 3), \quad \mathbf{d} = (7, 2, -5).$$

Figure 2.1. A parametric line. Note that the point o can be expressed as p(0) = o + 0d, and the point labeled t = 2.5 at the right can be expressed as p(2.5) = o + 2.5d. Every point along the line has a corresponding t value, and every value of t has a corresponding point.

The way to visualize this is to imagine that the line passes through o and is parallel to d. Given any value of t, you get some point p(t) on the line. For example, at t = 2, $\mathbf{p}(2) = (2, 1, 3) + 2(7, 2, -5) = (16, 5, -7)$. This general concept is illustrated in Figure 2.1.

A line segment can be described by a 3D parametric line and an interval $t \in [t_a, t_b]$. The line segment between two points a and b is given by $\mathbf{p}(t) = \mathbf{a} + t(\mathbf{b} - \mathbf{a})$ with $t \in [0, 1]$. Here p(0) = a, p(1) = b, and p(0.5) = (a + b)/2, the midpoint between a and b.

A ray, or half-line, is a 3D parametric line with a half-open interval, usually $[0, \infty)$. From now on we will refer to all lines, line segments, and rays as "rays." This is sloppy, but corresponds to common usage, and makes the discussion simpler.
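In code, a ray is usually just a pair of vectors with a method that evaluates p(t). Here is a minimal Python sketch; the class name `Ray` and the method name `at` are our own choices. It reproduces the t = 2 example and the segment midpoint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ray:
    """Parametric line p(t) = o + t d (a 'ray' once t is limited to [0, inf))."""
    o: tuple
    d: tuple

    def at(self, t):
        return tuple(oi + t * di for oi, di in zip(self.o, self.d))


ray = Ray(o=(2, 1, 3), d=(7, 2, -5))
p2 = ray.at(2)                     # (16, 5, -7)

# The segment from a to b as p(t) = a + t (b - a), t in [0, 1].
a, b = (0.0, 0.0, 0.0), (2.0, 4.0, 6.0)
segment = Ray(o=a, d=tuple(bi - ai for ai, bi in zip(a, b)))
midpoint = segment.at(0.5)         # (1.0, 2.0, 3.0)
```

The same class serves for lines, segments, and rays; only the interval of t that the caller accepts differs.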

2.2 GENERAL RAY-OBJECT INTERSECTIONS

Surfaces are described in one of two ways: either as implicit equations or as parametric equations. This section describes standard ways to intersect rays with each type of object.

2.2.1 Implicit Surfaces

Implicit equations implicitly define a set of points that are on the surface:

$$f(x, y, z) = 0.$$

Any point (x, y, z) that is on the surface returns zero when given as an argument to f. Any point not on the surface returns some number other than zero. This is called implicit rather than explicit because you can check whether a point is on the surface by evaluating f, but you cannot always explicitly construct a set of points on the surface. As a convenient shorthand, I will write such functions of p = (x, y, z) as

$$f(\mathbf{p}) = 0.$$

Given a ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$ and an implicit surface $f(\mathbf{p}) = 0$, we'd like to know where they intersect. The intersection points occur when points on the ray satisfy the implicit equation

$$f(\mathbf{p}(t)) = 0.$$

This is just

$$f(\mathbf{o} + t\mathbf{d}) = 0.$$

As an example, consider the infinite plane through point a with surface normal n. The implicit equation to describe this plane is given by

$$(\mathbf{p} - \mathbf{a}) \cdot \mathbf{n} = 0.$$

Note that a and n are known quantities. The point p is any unknown point that satisfies the equation. In geometric terms, this equation says "the vector from a to p is perpendicular to the plane normal." If p were not in the plane, then (p − a) would not make a right angle with n. Plugging in the ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$, we get

$$(\mathbf{o} + t\mathbf{d} - \mathbf{a}) \cdot \mathbf{n} = 0.$$

Note that the only unknown here is t. The rest of the variables are known properties of the ray or plane. If you expand the plane equation in terms of the components (x, y, z) of p, you will get the familiar form $Ax + By + Cz + D = 0$. If we find a t that satisfies the equation, the corresponding point p(t) will be a point where the line intersects the plane. Solving for t, we get

$$t = \frac{(\mathbf{a} - \mathbf{o}) \cdot \mathbf{n}}{\mathbf{d} \cdot \mathbf{n}}.$$

If we are interested only in intersections on some interval on the line, then this t must be tested to see if it is in that range. Note that there is at most one solution to the above equation, which is good because a line should hit the plane at most once. The case where the denominator on the right is zero corresponds to when the ray is parallel to the plane, and thus perpendicular to n, and there is no defined intersection. When both numerator and denominator are zero, the ray is in the plane. Note that finding the intersection with implicit surfaces is often not this easy algebraically.
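The plane formula translates directly into a small function. In this Python sketch (hypothetical names), the parallel case is detected by an exact zero test on d · n; a production tracer would more likely compare against a small tolerance. The in-plane case is simply reported as no hit.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_plane(o, d, a, n, t0=0.0, t1=math.inf):
    """Return t where o + t d meets the plane (p - a) . n = 0, or None.

    None is returned when d . n == 0 (ray parallel to, or lying in, the
    plane) or when the solution falls outside [t0, t1].
    """
    denom = dot(d, n)
    if denom == 0:
        return None
    t = dot([ai - oi for ai, oi in zip(a, o)], n) / denom
    return t if t0 <= t <= t1 else None
```

For example, the plane z = −5 (a = (0, 0, −5), n = (0, 0, 1)) is hit at t = 5 by a ray from the origin along −z, while a ray along +z has t = −5, outside the default interval [0, ∞).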

A surface normal, which is needed for lighting computations, is a vector perpendicular to the surface. Each point on the surface may have a different normal vector. The surface normal at the intersection point p is given by the gradient of the implicit function:

$$\mathbf{n} = \nabla f(\mathbf{p}) = \left( \frac{\partial f(\mathbf{p})}{\partial x}, \frac{\partial f(\mathbf{p})}{\partial y}, \frac{\partial f(\mathbf{p})}{\partial z} \right).$$

The gradient vector may point "into" the surface or may point "out" from the surface.

2.2.2 Parametric Surfaces

Another way to specify 3D surfaces is with 2D parameters. These surfaces have the form

$$x = f(u, v), \quad y = g(u, v), \quad z = h(u, v).$$

For example, a point on the surface of the earth is given by the two parameters longitude and latitude. Similarly, if we put a polar coordinate system on a unit sphere with center at the origin (see Figure 2.2), we get the parametric equations

$$x = \cos\phi \sin\theta, \quad y = \sin\phi \sin\theta, \quad z = \cos\theta.$$

Ideally, we'd like to write this in vector form like the plane equation, but it isn't possible for this particular parametric form. We will return to this equation when we texture map a sphere.

To intersect a ray with a parametric surface, we set up a system of equations where the Cartesian coordinates all match:

$$\begin{aligned} o_x + t d_x &= f(u, v), \\ o_y + t d_y &= g(u, v), \\ o_z + t d_z &= h(u, v). \end{aligned}$$

Figure 2.2. Polar coordinates on the sphere. These coordinates will be used in several places later in the book.

Here we have three equations and three unknowns (t, u, and v), so we can numerically solve for the unknowns. If we are lucky, we can solve for them analytically.

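The normal vector for a parametric surface is given by

$$\mathbf{n}(u, v) = \left( \frac{\partial f}{\partial u}, \frac{\partial g}{\partial u}, \frac{\partial h}{\partial u} \right) \times \left( \frac{\partial f}{\partial v}, \frac{\partial g}{\partial v}, \frac{\partial h}{\partial v} \right).$$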
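To see the cross-product formula at work without deriving the partial derivatives by hand, this sketch estimates them by central differences. That is a numerical shortcut of our own, not something the text prescribes. It applies the formula to the polar-coordinate sphere above, taking u = θ and v = φ as an assumption about parameter order. At θ = π/2, φ = 0 the surface point is (1, 0, 0), so the normal should point along +x.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sphere_point(theta, phi):
    """Unit sphere: x = cos(phi) sin(theta), y = sin(phi) sin(theta), z = cos(theta)."""
    return (math.cos(phi) * math.sin(theta),
            math.sin(phi) * math.sin(theta),
            math.cos(theta))


def parametric_normal(surface, u, v, h=1e-6):
    """n = dp/du x dp/dv, with both partials estimated by central differences."""
    dpdu = tuple((p - q) / (2 * h) for p, q in zip(surface(u + h, v), surface(u - h, v)))
    dpdv = tuple((p - q) / (2 * h) for p, q in zip(surface(u, v + h), surface(u, v - h)))
    return cross(dpdu, dpdv)


n = parametric_normal(sphere_point, math.pi / 2, 0.0)   # close to (1, 0, 0)
```

For this parameterization the exact result is sin θ times the surface point, so the normal points outward wherever sin θ > 0; it is not unit length in general.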

2.3 RAY-SPHERE INTERSECTION

A sphere with center $\mathbf{c} = (c_x, c_y, c_z)$ and radius R can be represented by the implicit equation

$$(x - c_x)^2 + (y - c_y)^2 + (z - c_z)^2 - R^2 = 0.$$

We can write this same equation in vector form:

$$(\mathbf{p} - \mathbf{c}) \cdot (\mathbf{p} - \mathbf{c}) - R^2 = 0.$$

Again, any point p that satisfies this equation is on the sphere. If we plug points on the ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$ into this equation, we can solve for the values of t on the ray that yield points on the sphere:

$$(\mathbf{o} + t\mathbf{d} - \mathbf{c}) \cdot (\mathbf{o} + t\mathbf{d} - \mathbf{c}) - R^2 = 0.$$

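Moving terms around yields

$$(\mathbf{d} \cdot \mathbf{d})t^2 + 2\mathbf{d} \cdot (\mathbf{o} - \mathbf{c})\,t + (\mathbf{o} - \mathbf{c}) \cdot (\mathbf{o} - \mathbf{c}) - R^2 = 0.$$

Here, everything is known but the parameter t, so this is a classic quadratic equation in t, meaning it has the form

$$At^2 + Bt + C = 0.$$

The solution to this equation is

$$t = \frac{-B \pm \sqrt{B^2 - 4AC}}{2A}.$$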

Here, the term under the square root sign, $B^2 - 4AC$, is called the discriminant and tells us how many real solutions there are. If the discriminant is negative, its square root is imaginary and there are no intersections between the sphere and the line. If the discriminant is positive, there are two solutions: one where the ray enters the sphere, and one where it leaves. If the discriminant is zero, the ray grazes the sphere, touching it at exactly one point. Plugging in the actual terms for the sphere and eliminating the common factors of two, we get

$$t = \frac{-\mathbf{d} \cdot (\mathbf{o} - \mathbf{c}) \pm \sqrt{(\mathbf{d} \cdot (\mathbf{o} - \mathbf{c}))^2 - (\mathbf{d} \cdot \mathbf{d})\left((\mathbf{o} - \mathbf{c}) \cdot (\mathbf{o} - \mathbf{c}) - R^2\right)}}{\mathbf{d} \cdot \mathbf{d}}.$$

In an actual implementation, you should first check the value of the discriminant before computing other terms. If the sphere is used just as a bounding object for more complex objects, then we need only determine whether we hit it, and checking the discriminant suffices.

The normal vector at point p is given by the gradient $\mathbf{n} = 2(\mathbf{p} - \mathbf{c})$. The unit normal is $(\mathbf{p} - \mathbf{c})/R$.
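Here is the quadratic solved in Python, returning the roots in increasing order. This is a minimal sketch and the names are ours. Because A = d · d is positive, the minus root is always the smaller one.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_sphere(o, d, c, R):
    """Sorted t values where o + t d meets the sphere (p - c).(p - c) = R^2."""
    oc = [oi - ci for oi, ci in zip(o, c)]
    A = dot(d, d)
    B = 2.0 * dot(d, oc)
    C = dot(oc, oc) - R * R
    disc = B * B - 4.0 * A * C
    if disc < 0:
        return []                      # the line misses the sphere
    if disc == 0:
        return [-B / (2.0 * A)]        # the line grazes the sphere
    root = math.sqrt(disc)
    return [(-B - root) / (2.0 * A), (-B + root) / (2.0 * A)]


def unit_normal(p, c, R):
    """(p - c) / R, valid for a point p on the sphere."""
    return tuple((pi - ci) / R for pi, ci in zip(p, c))
```

A ray from (0, 0, 5) along −z enters the unit sphere at the origin at t = 4 and leaves at t = 6; the unit normal at the entry point (0, 0, 1) is (0, 0, 1).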

2.4 RAY-BOX INTERSECTION

When you have an axis-aligned box with corners $\mathbf{p}_0 = (x_0, y_0, z_0)$ and $\mathbf{p}_1 = (x_1, y_1, z_1)$, one could do an intersection with all six rectangular sides, but there is a standard trick that is faster. Instead, think of three infinite slabs of space: $x \in [x_0, x_1]$, $y \in [y_0, y_1]$, and $z \in [z_0, z_1]$. We can compute the intersection of the ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$ and the slab $x \in [x_0, x_1]$:

$$x_0 = o_x + t_{x0}\, d_x, \qquad x_1 = o_x + t_{x1}\, d_x.$$

Figure 2.3. A 2D ray intersecting a 2D box. Left: The intersections with the x slab are given between the solid circles, while the intersections with the y slab are given between the open circles. Right: The intersection of the two intervals in ray parameter (t) space is represented by the bold line. Points within this ray parameter interval are in the box.

The unknowns here are $t_{x0}$ and $t_{x1}$, so the line segment on the ray is given by

$$t \in \left[ \frac{x_0 - o_x}{d_x}, \frac{x_1 - o_x}{d_x} \right].$$

Note that it would be nice computationally if a line segment $t \in [a, b]$ were a valid increasing interval, that is, $b > a$, but we don't know this for the slab because the ray is going "backwards" in x if $d_x < 0$. So we can convert the interval $t \in [t_{x0}, t_{x1}]$ to $t \in [t_{x\min}, t_{x\max}]$ by setting it to $[t_{x0}, t_{x1}]$ if $d_x \ge 0$ and to $[t_{x1}, t_{x0}]$ otherwise. Similarly, we can get the intersections with the y and z slabs, $t \in [t_{y\min}, t_{y\max}]$ and $t \in [t_{z\min}, t_{z\max}]$.

These three intervals can then be intersected to find where they overlap. For example, if the x slab interval is $t \in [2, 4]$ and the y interval is $t \in [3, 5.5]$, then the overlap of these two intervals is $t \in [3, 4]$. The intersection of all three x, y, and z intervals is where the ray is inside the box. This principle is illustrated in Figure 2.3. If the intersection of the three intervals is empty, the ray misses the box.

There is a nice way to code this, described by Smits and discussed further in his article on efficient ray tracing [57]. It avoids tests for degenerate cases by taking advantage of IEEE floating-point properties: comparing anything against NaN returns false, everything is less than infinity, and everything is greater than minus infinity.

if d_x ≥ 0 then
    t_xmin ← (x_0 − o_x)/d_x
    t_xmax ← (x_1 − o_x)/d_x
else
    t_xmin ← (x_1 − o_x)/d_x
    t_xmax ← (x_0 − o_x)/d_x
if d_y ≥ 0 then
    t_ymin ← (y_0 − o_y)/d_y
    t_ymax ← (y_1 − o_y)/d_y
else
    t_ymin ← (y_1 − o_y)/d_y
    t_ymax ← (y_0 − o_y)/d_y
if d_z ≥ 0 then
    t_zmin ← (z_0 − o_z)/d_z
    t_zmax ← (z_1 − o_z)/d_z
else
    t_zmin ← (z_1 − o_z)/d_z
    t_zmax ← (z_0 − o_z)/d_z
{find the biggest of t_xmin, t_ymin, and t_zmin}
if t_xmin > t_ymin then
    t_0 ← t_xmin
else
    t_0 ← t_ymin
if t_zmin > t_0 then
    t_0 ← t_zmin
{find the smallest of t_xmax, t_ymax, and t_zmax}
if t_xmax < t_ymax then
    t_1 ← t_xmax
else
    t_1 ← t_ymax
if t_zmax < t_1 then
    t_1 ← t_zmax
{the ray is inside the box for t in [t_0, t_1] if t_0 < t_1}
hit ← (t_0 < t_1)
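A Python version of the slab test follows. One caveat: Python raises ZeroDivisionError for float division by zero instead of returning ±∞, so this sketch cannot lean on the IEEE trick above and handles a zero direction component explicitly. It returns the overlap interval for the whole line, or None on a miss; clipping that interval to the ray's own [t0, t1] is left to the caller.

```python
import math


def ray_box(o, d, p0, p1):
    """Slab test against the box [p0, p1] (p0 <= p1 componentwise).

    Returns (t_enter, t_exit) for the line o + t d, or None on a miss.
    """
    t_enter, t_exit = -math.inf, math.inf
    for k in range(3):
        if d[k] == 0:
            # Parallel to this slab: inside it for all t, or never.
            if o[k] < p0[k] or o[k] > p1[k]:
                return None
            continue
        ta = (p0[k] - o[k]) / d[k]
        tb = (p1[k] - o[k]) / d[k]
        if ta > tb:                 # ray runs "backwards" along this axis
            ta, tb = tb, ta
        t_enter = max(t_enter, ta)  # biggest of the slab minimums
        t_exit = min(t_exit, tb)    # smallest of the slab maximums
    return (t_enter, t_exit) if t_enter < t_exit else None
```

A ray from (−1, 0.5, 0.5) along +x crosses the unit box for t in [1, 2]; moving the origin to y = 2 makes it miss because it never enters the y slab.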

2.5 RAY-TRIANGLE INTERSECTION

There are a variety of ray-polygon and ray-triangle intersection routines. These are discussed extensively by Eric Haines in various Ray Tracing News articles that you should read once you get your basic ray tracer working. I will present only one simple routine for triangles.

A triangle is defined by three points a, b, and c. If they are not collinear, these points define a plane. A common way to describe this plane is with barycentric coordinates:

$$\mathbf{p}(\alpha, \beta, \gamma) = \alpha\mathbf{a} + \beta\mathbf{b} + \gamma\mathbf{c} \qquad (2.1)$$

with the constraint

$$\alpha + \beta + \gamma = 1.$$

Figure 2.4. Barycentric coordinates can be found by computing the areas of subtriangles.

Barycentric coordinates seem like an abstract and unintuitive construct at first, but they turn out to be powerful and convenient. Barycentric coordinates are defined for all points on the plane. The point p is inside the triangle if and only if

$$0 < \alpha < 1, \quad 0 < \beta < 1, \quad 0 < \gamma < 1.$$

If one of the coordinates is zero, you are on an edge. If two are zero, you are at a vertex.

One way to compute barycentric coordinates is to compute the areas $A_a$, $A_b$, and $A_c$ of subtriangles as shown in Figure 2.4. Barycentric coordinates obey the rule

$$\alpha = A_a/A, \quad \beta = A_b/A, \quad \gamma = A_c/A,$$

where A is the area of the triangle. This rule still holds for points outside the triangle if the areas are allowed to be signed.
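The signed-area rule is easy to try in 2D. In this Python sketch (hypothetical helper names), each subtriangle replaces one vertex with p, and the signed area of a 2D triangle comes from the cross product of two edge vectors. The point (1, 1) lies outside the unit right triangle, which shows up as a negative α.

```python
def signed_area(p, q, r):
    """Signed area of the 2D triangle pqr (positive if counterclockwise)."""
    return 0.5 * ((q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1]))


def barycentric(p, a, b, c):
    """(alpha, beta, gamma) of p via signed subtriangle areas over the full area."""
    area = signed_area(a, b, c)
    alpha = signed_area(p, b, c) / area   # A_a: subtriangle opposite a
    beta = signed_area(a, p, c) / area    # A_b: subtriangle opposite b
    gamma = signed_area(a, b, p) / area   # A_c: subtriangle opposite c
    return alpha, beta, gamma


a, b, c = (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)
inside = barycentric((0.25, 0.25), a, b, c)   # (0.5, 0.25, 0.25)
outside = barycentric((1.0, 1.0), a, b, c)    # (-1.0, 1.0, 1.0)
```

In both cases the three coordinates sum to one, and αa + βb + γc reproduces the query point.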

We can eliminate one of the variables by plugging $\alpha = 1 - \beta - \gamma$ into Equation 2.1:

$$\mathbf{p}(\beta, \gamma) = \mathbf{a} + \beta(\mathbf{b} - \mathbf{a}) + \gamma(\mathbf{c} - \mathbf{a}).$$

Figure 2.5. An intersection of a ray and the plane containing the triangle.

Figure 2.6. Barycentric coordinates on a plane intersected by a ray.

Now points are in the triangle if and only if

$$\beta + \gamma < 1, \quad 0 < \beta, \quad 0 < \gamma.$$

Together, β and γ parameterize a nonorthogonal coordinate system on the plane, as shown in Figure 2.5.

The ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$ hits the plane when

$$\mathbf{o} + t\mathbf{d} = \mathbf{a} + \beta(\mathbf{b} - \mathbf{a}) + \gamma(\mathbf{c} - \mathbf{a}). \qquad (2.2)$$

This hitpoint is in the triangle if and only if β > 0, γ > 0, and β + γ < 1. A configuration where the ray hits at (β, γ) = (1.2, 0.8) is shown in Figure 2.6; it is not inside the triangle, as predicted by the sum of β and γ being two.

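To solve for t, β, and γ in Equation 2.2, we expand it from its vector form into the three equations for the three coordinates:

$$\begin{aligned} o_x + t d_x &= a_x + \beta(b_x - a_x) + \gamma(c_x - a_x), \\ o_y + t d_y &= a_y + \beta(b_y - a_y) + \gamma(c_y - a_y), \\ o_z + t d_z &= a_z + \beta(b_z - a_z) + \gamma(c_z - a_z). \end{aligned}$$

This can be rewritten as a standard linear equation:

$$\begin{bmatrix} a_x - b_x & a_x - c_x & d_x \\ a_y - b_y & a_y - c_y & d_y \\ a_z - b_z & a_z - c_z & d_z \end{bmatrix} \begin{bmatrix} \beta \\ \gamma \\ t \end{bmatrix} = \begin{bmatrix} a_x - o_x \\ a_y - o_y \\ a_z - o_z \end{bmatrix}.$$

The fastest classic method to solve this 3 × 3 linear system is Cramer's rule. This gives us the solutions

$$\beta = \frac{\begin{vmatrix} a_x - o_x & a_x - c_x & d_x \\ a_y - o_y & a_y - c_y & d_y \\ a_z - o_z & a_z - c_z & d_z \end{vmatrix}}{|A|}, \qquad \gamma = \frac{\begin{vmatrix} a_x - b_x & a_x - o_x & d_x \\ a_y - b_y & a_y - o_y & d_y \\ a_z - b_z & a_z - o_z & d_z \end{vmatrix}}{|A|}, \qquad t = \frac{\begin{vmatrix} a_x - b_x & a_x - c_x & a_x - o_x \\ a_y - b_y & a_y - c_y & a_y - o_y \\ a_z - b_z & a_z - c_z & a_z - o_z \end{vmatrix}}{|A|},$$

where the matrix A is

$$A = \begin{bmatrix} a_x - b_x & a_x - c_x & d_x \\ a_y - b_y & a_y - c_y & d_y \\ a_z - b_z & a_z - c_z & d_z \end{bmatrix}$$

and |A| denotes the determinant of A. The 3 × 3 determinants have common subterms that can be exploited. Looking at the linear system with dummy variables

$$\begin{bmatrix} a & d & g \\ b & e & h \\ c & f & i \end{bmatrix} \begin{bmatrix} \beta \\ \gamma \\ t \end{bmatrix} = \begin{bmatrix} j \\ k \\ l \end{bmatrix},$$

Cramer's rule gives us

$$\begin{aligned} \beta &= \frac{j(ei - hf) + k(gf - di) + l(dh - eg)}{M}, \\ \gamma &= \frac{i(ak - jb) + h(jc - al) + g(bl - kc)}{M}, \\ t &= -\frac{f(ak - jb) + e(jc - al) + d(bl - kc)}{M}, \end{aligned}$$

where

$$M = a(ei - hf) + b(gf - di) + c(dh - eg).$$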

These equations can be put into code mechanically by creating variables such as a and eiminushf and assuming that the compiler will (at least eventually) do its job.

There are faster ways to compute triangle intersection (e.g., [36]), but this way is straightforward and requires no global storage besides the triangle vertices.
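Following the suggestion above, here is a Python sketch that names the shared subterms and evaluates Cramer's rule directly. Function and variable names are ours; the vertices are called va, vb, vc so that a through l can keep their meaning as the dummy variables. Points exactly on an edge are counted as hits here, which differs slightly from the strict inequalities in the text.

```python
import math


def ray_triangle(ro, rd, va, vb, vc, t0=0.0, t1=math.inf):
    """Intersect ray ro + t rd with triangle (va, vb, vc) using Cramer's rule.

    Returns (t, beta, gamma) for a hit with t0 < t < t1, else None.
    """
    # Dummy variables of [a d g; b e h; c f i] [beta gamma t]^T = [j k l]^T.
    a, b, c = va[0] - vb[0], va[1] - vb[1], va[2] - vb[2]
    d, e, f = va[0] - vc[0], va[1] - vc[1], va[2] - vc[2]
    g, h, i = rd[0], rd[1], rd[2]
    j, k, l = va[0] - ro[0], va[1] - ro[1], va[2] - ro[2]

    # Subterms shared by the numerators and the determinant M.
    ei_minus_hf = e * i - h * f
    gf_minus_di = g * f - d * i
    dh_minus_eg = d * h - e * g
    ak_minus_jb = a * k - j * b
    jc_minus_al = j * c - a * l
    bl_minus_kc = b * l - k * c

    m = a * ei_minus_hf + b * gf_minus_di + c * dh_minus_eg
    if m == 0:
        return None  # ray parallel to the plane, or a degenerate triangle

    # Early-out tests: t first, then gamma, then beta.
    t = -(f * ak_minus_jb + e * jc_minus_al + d * bl_minus_kc) / m
    if t <= t0 or t >= t1:
        return None
    gamma = (i * ak_minus_jb + h * jc_minus_al + g * bl_minus_kc) / m
    if gamma < 0 or gamma > 1:
        return None
    beta = (j * ei_minus_hf + k * gf_minus_di + l * dh_minus_eg) / m
    if beta < 0 or beta > 1 - gamma:
        return None
    return t, beta, gamma
```

For the triangle (0, 0, −1), (1, 0, −1), (0, 1, −1), a ray from (0.25, 0.25, 0) along −z hits at t = 1 with β = γ = 0.25, whereas starting at (0.75, 0.75, 0) gives β + γ = 1.5 and a miss.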

2.6 MORE THAN ONE OBJECT

Once you have multiple objects, you will need a routine to return the first object hit by a ray. Usually you will be interested only in hits for $t \in [t_0, t_1]$ for a ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$. For example, if you are interested only in hit points "in front" of o, that corresponds to $t \in [0, \infty)$. If you are using an object-oriented language, you should make an abstract class, interface, or superclass (whatever your language calls a class you inherit from). This class is for anything you can hit, which I will call surface. This class needs a routine hit that returns true or false for whether a ray hits it, and fills in some data (like the t value) if it returns true. Since a list of objects can be hit, the list is also a surface and has a hit function:

function surfacelist.hit(ray o + td, t_0, t_1, prim)
    {prim is a pointer to the object hit; t and prim are filled in if hit returns true}
    bool hitone ← false
    t ← t_1
    for all surf in list do
        if surf->hit(o + td, t_0, t, prim) then
            hitone ← true
    return hitone

Note a trick in this code: the interval passed to each object is $[t_0, t]$, where t is the smallest t of any object hit so far. This uses the interval to sort for you. With this interface, the code for a sphere intersection is as follows:

function sphere.hit(ray o + td, t_0, t_1, prim)
    {sphere has center c and radius R; t and prim are filled in only if hit returns true}
    float A ← dot(d, d)
    float B ← 2*dot(d, o − c)
    float C ← dot(o − c, o − c) − R*R
    float discriminant ← B*B − 4*A*C
    if discriminant > epsilon then
        float sqrtd ← sqrt(discriminant)
        float troot ← (−B − sqrtd) / (2*A)
        if troot > t_0 and troot < t_1 then
            t ← troot
            prim ← this
            return true
        troot ← (−B + sqrtd) / (2*A)
        if troot > t_0 and troot < t_1 then
            t ← troot
            prim ← this
            return true
    return false

The epsilon in the code above is to avoid degenerate cases when the ray is tangent to the sphere. You may want to also return the hitpoint p = o + td and the surface normal at the hitpoint. I usually have a class that contains all the "returned" variables I compute, which I pass in as an argument, and I think you will find you like it if you do the same.
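The surface, list, and sphere routines might look like this in Python. This is a sketch under our own naming: the `HitRecord` class plays the role of the "returned" variables just mentioned, and each hit method writes into it only when it returns true, so a failed test never disturbs the closest hit found so far.

```python
import math
from dataclasses import dataclass


@dataclass
class HitRecord:
    """Bundle of 'returned' values: the hit parameter and the object hit."""
    t: float = math.inf
    prim: object = None


class Surface:
    """Anything a ray can hit."""

    def hit(self, o, d, t0, t1, rec):
        raise NotImplementedError


class Sphere(Surface):
    def __init__(self, center, radius, epsilon=1e-9):
        self.c, self.R, self.epsilon = center, radius, epsilon

    def hit(self, o, d, t0, t1, rec):
        oc = [oi - ci for oi, ci in zip(o, self.c)]
        A = sum(x * x for x in d)
        B = 2.0 * sum(x * y for x, y in zip(d, oc))
        C = sum(x * x for x in oc) - self.R * self.R
        disc = B * B - 4.0 * A * C
        if disc <= self.epsilon:        # miss, or a near-tangent graze
            return False
        root = math.sqrt(disc)
        for t in ((-B - root) / (2.0 * A), (-B + root) / (2.0 * A)):
            if t0 < t < t1:
                rec.t, rec.prim = t, self   # written only on success
                return True
        return False


class SurfaceList(Surface):
    def __init__(self, surfaces):
        self.surfaces = list(surfaces)

    def hit(self, o, d, t0, t1, rec):
        hit_one = False
        closest = t1
        for surf in self.surfaces:
            # Shrinking the upper bound to the closest hit so far does the sorting.
            if surf.hit(o, d, t0, closest, rec):
                hit_one = True
                closest = rec.t
        return hit_one


near, far = Sphere((0, 0, -5), 1), Sphere((0, 0, -10), 1)
scene = SurfaceList([far, near])
rec = HitRecord()
found = scene.hit((0, 0, 0), (0, 0, -1), 0.0, math.inf, rec)
# found is True, rec.prim is near, and rec.t == 4.0
```

The far sphere is listed first on purpose: it is hit at t = 9, and the near sphere then wins at t = 4 because it is tested against the shrunken interval (0, 9).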

2.7 A SIMPLE RAY TRACER

We can now generate a simple image using spheres and triangles with an orthographic camera. Let's assume we have 101 by 101 pixels (i, j) numbered (0, 0) through (100, 100). For each pixel, generate a ray $\mathbf{p}(t) = \mathbf{o} + t\mathbf{d}$ such that

$$\mathbf{o} = (i, j, 0), \quad \mathbf{d} = (0, 0, -1),$$

where the negative z direction is chosen for d so that, with x to the right and y up, the coordinate system stays right-handed rather than left-handed.

Let's create a sphere with center (0, 0, −150) and radius 100.1, and a triangle with vertices (0, 0, −100), (100.1, 0, −100), and (50, 100.1, −100). Now put these two objects into a list and for each ray find the closest hitpoint. If you hit the sphere, color pixel (i, j) light grey. If you hit the triangle, color it dark grey. If you hit neither, color it black. You should get an image that looks like Figure 2.7.

Figure 2.7. Sample image with sphere and triangle.
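For reference, here is a compact, self-contained Python sketch of this test scene. It keeps only the nearest positive t per pixel and stores grey levels in a nested list instead of writing an image file. The intersection helpers are condensed versions of the routines earlier in this chapter, and the grey values 0.75 and 0.35 are arbitrary choices.

```python
import math

SPHERE = ((0.0, 0.0, -150.0), 100.1)
TRIANGLE = ((0.0, 0.0, -100.0), (100.1, 0.0, -100.0), (50.0, 100.1, -100.0))
LIGHT_GREY, DARK_GREY, BLACK = 0.75, 0.35, 0.0   # arbitrary grey levels


def hit_sphere(o, d, c, R):
    """Smallest t > 0 with |o + t d - c| = R, or None."""
    oc = [o[k] - c[k] for k in range(3)]
    A = sum(x * x for x in d)
    B = 2.0 * sum(d[k] * oc[k] for k in range(3))
    C = sum(x * x for x in oc) - R * R
    disc = B * B - 4.0 * A * C
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in ((-B - root) / (2.0 * A), (-B + root) / (2.0 * A)):
        if t > 0:
            return t
    return None


def det3(c0, c1, c2):
    """Determinant of the 3x3 matrix with columns c0, c1, c2."""
    return (c0[0] * (c1[1] * c2[2] - c2[1] * c1[2])
            + c0[1] * (c2[0] * c1[2] - c1[0] * c2[2])
            + c0[2] * (c1[0] * c2[1] - c1[1] * c2[0]))


def hit_triangle(o, d, va, vb, vc):
    """Solve o + t d = va + beta (vb - va) + gamma (vc - va) by Cramer's rule."""
    col_b = [va[k] - vb[k] for k in range(3)]
    col_g = [va[k] - vc[k] for k in range(3)]
    rhs = [va[k] - o[k] for k in range(3)]
    m = det3(col_b, col_g, d)
    if m == 0:
        return None
    beta = det3(rhs, col_g, d) / m
    gamma = det3(col_b, rhs, d) / m
    t = det3(col_b, col_g, rhs) / m
    if t > 0 and beta >= 0 and gamma >= 0 and beta + gamma <= 1:
        return t
    return None


def pixel_color(i, j):
    o, d = (float(i), float(j), 0.0), (0.0, 0.0, -1.0)
    hits = []
    t = hit_sphere(o, d, *SPHERE)
    if t is not None:
        hits.append((t, LIGHT_GREY))
    t = hit_triangle(o, d, *TRIANGLE)
    if t is not None:
        hits.append((t, DARK_GREY))
    return min(hits)[1] if hits else BLACK   # color of the closest hit


image = [[pixel_color(i, j) for i in range(101)] for j in range(101)]   # image[j][i]
```

Near the origin the sphere bulges in front of the triangle's plane (at pixel (0, 0) it is hit at t ≈ 49.9, versus t = 100 for the triangle), which is why the sphere covers part of the triangle in the image.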

Lighting and Shadows

To make your images more realistic, the shading on objects should depend on both surface orientation and lighting direction. For matte (nonshiny) materials, a good approximation to how surfaces react to lighting is given by Lambert's Law:

$$L = ER\cos\theta,$$

where E is the color of the light source (RGB), R is the reflectance of the surface (RGB), and θ is the angle between the surface normal at the illuminated point and the direction from which the light comes.

3.1 IMPLEMENTING LIGHTING

Recall that the viewing ray is coming into the illuminated point. Let's define a unit-length vector e pointing in the direction d comes from (it points away from the surface):

$$\mathbf{e} = -\frac{\mathbf{d}}{\|\mathbf{d}\|}.$$

Figure 3.1. Geometry for lighting computation.

Figure 3.2. Two images illustrating lighting. These images have the same viewing rays used at the end of the last chapter, and two spheres with centers (50.0, −80.0, −1000) and (50.0, 50.0, −1000), and radii 100 and 30. The lighting vector l is (0, 1, 0). Left: Using absolute value when L is negative. Right: Clamping negative L to zero.

You should define l to be a unit vector toward the direction from which the light comes (it also points away from the surface). In this context, with n the unit surface normal, Lambert's Law becomes

$$L = ER(\mathbf{n} \cdot \mathbf{l}).$$

The thing to note is that there is no e in this equation! This means the surface does not change brightness as you move your eye. You should observe that many "matte" surfaces in the real world don't change brightness much as you move your viewpoint, so this is not a bad property. A problem with this formula is that L can be negative. There are two potential solutions to this: take the absolute value, or clamp answers below zero. These alternatives are shown in Figure 3.2.
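Both remedies for a negative L are one-liners. This Python sketch (hypothetical function name) applies L = ER(n · l) per RGB channel and assumes n and l are already unit vectors.

```python
def lambert(E, R, n, l, mode="clamp"):
    """L = E R (n . l) per RGB channel, for unit vectors n and l.

    mode="clamp" sets negative cosines to zero; mode="abs" uses |n . l|.
    """
    cos_term = sum(a * b for a, b in zip(n, l))
    if mode == "clamp":
        cos_term = max(0.0, cos_term)
    elif mode == "abs":
        cos_term = abs(cos_term)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return tuple(e * r * cos_term for e, r in zip(E, R))
```

With a white light, a 50% grey surface, and the light directly behind the surface (n · l = −1), clamping gives black while the absolute value lights the back side as if it faced the light.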

3.2 ADDING SHADOWS

Shadows should occur whenever a point "sees" another object in direction l. This can be accomplished as follows:

1. When the viewing ray hits an object at point q, create a shadow ray $\mathbf{p}(t) = \mathbf{q} + t\mathbf{l}$.

2. If the shadow ray hits no objects, then apply the lighting equation. Otherwise, set L to zero.

Figure 3.3. Shadow rays originate at q and propagate in the direction from which the light comes. Intersections at or behind q (t ≤ 0) are not of interest.

An important thing to realize is that "hits no objects" above does not include any hits with t ≤ 0. This can be seen in Figure 3.3, where the shadow ray hits the lower object at both t = 0 (where p(0) = q + 0l) and some negative t. Instead, we are only interested in positive values of t.

A problem to keep in mind is that because of finite-precision arithmetic, the shadow ray may intersect the surface at q at some t value other than zero. If this number is positive, we will detect a false shadow, as shown in Figure 3.4 (left).

Figure 3.4. Left: Using an interval $t \in (0, \infty)$ causes some rays to hit the surface at q, causing black pixels. Right: Using $t \in (\epsilon, \infty)$ avoids these artifacts.

Figure 3.5. The three classes of models (panels: unclosed model; arbitrary normals; outward normals).

Sometimes these dark pixels are more irregular and resemble a moiré pattern. To avoid them, we instead pick some small ε that t must be bigger than. This is an inelegant hack, but it does usually solve the problem. For a more principled discussion of such problems, see the paper by Amanatides and Mitchell [1].
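The shadow test with an ε can be sketched as follows, using spheres as the only primitive for brevity (function names are ours). The test point sits 10⁻⁹ below the top of a unit sphere, mimicking rounding error in a computed hit point q. With ε = 0 the shadow ray re-hits the sphere at t ≈ 10⁻⁹ and reports a false shadow, while ε = 10⁻⁴ ignores that hit.

```python
import math


def sphere_roots(o, d, c, R):
    """Both t values where o + t d meets the sphere (empty list on a miss)."""
    oc = [o[k] - c[k] for k in range(3)]
    A = sum(x * x for x in d)
    B = 2.0 * sum(d[k] * oc[k] for k in range(3))
    C = sum(x * x for x in oc) - R * R
    disc = B * B - 4.0 * A * C
    if disc < 0:
        return []
    root = math.sqrt(disc)
    return [(-B - root) / (2.0 * A), (-B + root) / (2.0 * A)]


def in_shadow(q, l, spheres, eps=1e-4):
    """True if the shadow ray q + t l hits any sphere with t in (eps, inf)."""
    return any(t > eps for c, R in spheres for t in sphere_roots(q, l, c, R))


spheres = [((0.0, 0.0, 0.0), 1.0)]
light = (0.0, 1.0, 0.0)
q = (0.0, 1.0 - 1e-9, 0.0)   # top of the sphere, nudged inside by "rounding"
false_shadow = in_shadow(q, light, spheres, eps=0.0)   # True
no_shadow = in_shadow(q, light, spheres, eps=1e-4)     # False
```

The right ε depends on the scale of the scene; 10⁻⁴ here is an arbitrary value that suits unit-sized objects.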

3.3 OUTWARD NORMAL VECTORS

When you implement the simple lighting in this chapter, an important issue will arise: the normal vector n for a polygon may point into the surface. This matters because it changes how your code computes cosines. If rays can come from either side, this has to happen for one side of the polygon or the other. You must now decide on a convention for your ray tracer: does it assume outward-facing surface normals? This problem is shown in Figure 3.5, where three classes of models are shown. In the first, surfaces can be hanging in space, so normal vectors are arbitrary. In the second, surfaces must be closed, meaning they have a well-defined inside and outside, but the normals can face in or out. The third has outward-facing normals.

Outward-facing normals certainly make the job of writing your program easier, so I would suggest you assume your models will have outward-facing normals. This is in the long tradition of leaving any hard work to the modeling program. A convention that is usually followed is that polygonal models will obey the right-hand rule, meaning that a polygon that contains three vertices $\mathbf{p}_0$, $\mathbf{p}_1$, and $\mathbf{p}_2$ (in that order) will generate a normal

$$\mathbf{n} = (\mathbf{p}_1 - \mathbf{p}_0) \times (\mathbf{p}_2 - \mathbf{p}_0).$$

If you do assume outward-facing normals, you can eliminate the epsilon hack from shadow ray testing. When testing for shadow ray intersections, disregard any intersections where $\mathbf{n} \cdot \mathbf{l} > 0$ at the intersection points. This counts only intersections where the ray is entering the object, rather than leaving it, as is true at q. Alternatively, you can flip normals when they point "into" the surface, i.e., if $\mathbf{d} \cdot \mathbf{n} > 0$.
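Both conventions are short in code. In this Python sketch with hypothetical names, `triangle_normal` applies the right-hand rule, and `face_forward` flips a normal that faces away from the incoming ray, the alternative mentioned last.

```python
def triangle_normal(p0, p1, p2):
    """Right-hand-rule normal (p1 - p0) x (p2 - p0), not normalized."""
    a = [p1[k] - p0[k] for k in range(3)]
    b = [p2[k] - p0[k] for k in range(3)]
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def face_forward(n, d):
    """Return n flipped if it points 'into' the surface for ray direction d."""
    if sum(x * y for x, y in zip(n, d)) > 0:
        return tuple(-x for x in n)
    return tuple(n)


n = triangle_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))   # (0, 0, 1)
```

Listing the same three vertices counterclockwise as seen from +z gives a normal along +z; a ray traveling in +z arrives from the back side, so `face_forward` flips it.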

Viewing

The simplest image acquisition device we can make is a pinhole camera: we just take a box painted black on the inside, poke a hole in one side, and tape film to the inside of the opposite side (Figure 4.1). In this chapter, we simulate such a device. As shown in the figure, we can compute light passing through some rectangle in front of the camera. In effect, this is computing the light coming through a "window" in front of the "viewer." The only reason to do this is that it may be more intuitive; it is for most people.

Figure 4.1. A real pinhole camera. Light enters the pinhole from a specific direction and exposes a particular part of the film. The film will capture a scene in sharp focus, but it requires significant exposure time. The color of light at point s in the direction shown will stay the same as it passes through the pinhole, and as it hits the film at f.

4.1 AXIS-ALIGNED VIEWING

We can implement a synthetic version of a pinhole camera. A nice thing is that we can create a virtual film plane in front of the camera and forget that the film is behind the pinhole. To do this, we need to set up a projection of the film out in space. This is shown in Figure 4.2. Note that the selection of the distance s is arbitrary: if we make it bigger, the rectangle and the image grow, and the same image will be saved.

Figure 4.2. An image passing through a rectangle at distance s will be mapped onto the film.

To implement the camera, we pick a specific rectangle aligned to our viewing system, as shown in Figure 4.3. The viewing system has center o, which is defined to be the origin in uvw coordinates, and is aligned to the uvw basis. Points a and b are the corner points of this rectangle. The rectangle need not be centered on the gaze direction. The rectangle is in the negative w direction because we want both to maintain a right-handed coordinate system and to have u go to the right on the rectangle. The points we sample on the image are mapped onto that rectangle as shown in Figure 4.4. The equations for mapping a pixel (i, j) on an $n_x$ by $n_y$ pixel image to the correct (u, v) are

$$u = a_u + (b_u - a_u)\frac{i}{n_x - 1}, \qquad v = a_v + (b_v - a_v)\frac{j}{n_y - 1}.$$

This means the ray in uvw coordinates is

$$\mathbf{p}(t) = (0, 0, 0) + t\left(a_u + (b_u - a_u)\frac{i}{n_x - 1},\; a_v + (b_v - a_v)\frac{j}{n_y - 1},\; -s\right).$$
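The pixel-to-window mapping is just two linear interpolations. Here is a minimal Python sketch (our naming), checked against the corners and center of a 101 × 101 image with a = (−2, −2), b = (2, 2), and s = 2.

```python
def window_point(i, j, nx, ny, a, b, s):
    """uvw direction of the ray through pixel (i, j); the ray starts at (0, 0, 0).

    a = (a_u, a_v) and b = (b_u, b_v) are the window corners on the plane w = -s.
    """
    u = a[0] + (b[0] - a[0]) * i / (nx - 1)
    v = a[1] + (b[1] - a[1]) * j / (ny - 1)
    return (u, v, -s)
```

Pixel (0, 0) maps exactly onto corner a and pixel (n_x − 1, n_y − 1) onto corner b, so the outermost samples sit on the window's edges.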

Figure 4.3. The viewing rectangle is a distance s from o along the negative w axis, and is parallel to the uv plane.

Figure 4.4. Pixel coordinates are mapped to the 3D window in uvw coordinates. The window has corners a = (a_u, a_v, −s) and b = (b_u, b_v, −s), and o is at (0, 0, 0).

4.2 SETTING VIEW PARAMETERS

If we choose to have an axis-aligned view from the origin then we can use just

the ray above as xyz coordinates. However, we would usually like to be able

to set view location and orientation. There are many ways to do this, but I will

describe only my favorite, shown in Figure 4.5.

Figure 4.5. Viewing from an arbitrary position.

The variables that are specified are:

• e, the xyz eyepoint (pinhole);

• g, the xyz direction of gaze (this is also the normal to the viewing rectangle, so it is sometimes called the "view-plane normal");

• $\mathbf{v}_{up}$, the view-up vector. This is any vector in the plane of the gaze direction g and the vector pointing out of the top of the head;

• s, the distance from e to the viewing rectangle;

• $a_u, a_v, b_u, b_v$, the uv coordinates of the rectangle corners.

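From this information we can establish an aligned uvw frame where e is the origin and the basis vectors are

$$\mathbf{w} = -\frac{\mathbf{g}}{\|\mathbf{g}\|}, \qquad \mathbf{u} = \frac{\mathbf{v}_{up} \times \mathbf{w}}{\|\mathbf{v}_{up} \times \mathbf{w}\|}, \qquad \mathbf{v} = \mathbf{w} \times \mathbf{u}.$$

Note that you could also just make a call to make-ONB-from-WV(−g, v_up) if you have such a function implemented.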

So we have the ray in uvw coordinates, and we have the xyz coordinates of the uvw basis vectors and origin. We could construct a coordinate transform matrix as described in Chapter 1, or we can just proceed from the definition of coordinates:

$$\mathbf{p}(t) = \mathbf{e} + t\left( \left(a_u + (b_u - a_u)\frac{i}{n_x - 1}\right)\mathbf{u} + \left(a_v + (b_v - a_v)\frac{j}{n_y - 1}\right)\mathbf{v} - s\mathbf{w} \right).$$
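Putting the frame construction and the ray formula together, a minimal Python sketch might look like this. The function names are ours, and `camera_frame` simply implements the three formulas for w, u, and v given above. The check uses the Figure 4.6 parameters: the center pixel should look straight down −w.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def camera_frame(g, vup):
    """w = -g/|g|, u = (vup x w)/|vup x w|, v = w x u."""
    w = normalize(tuple(-x for x in g))
    u = normalize(cross(vup, w))
    v = cross(w, u)
    return u, v, w


def camera_ray(e, g, vup, s, a, b, nx, ny, i, j):
    """Origin and direction of p(t) = e + t(us u + vs v - s w) for pixel (i, j)."""
    u, v, w = camera_frame(g, vup)
    us = a[0] + (b[0] - a[0]) * i / (nx - 1)
    vs = a[1] + (b[1] - a[1]) * j / (ny - 1)
    direction = tuple(us * u[k] + vs * v[k] - s * w[k] for k in range(3))
    return e, direction


# Figure 4.6 parameters: the center pixel looks straight down -w = (0, 0, -1).
origin, direction = camera_ray((0, 0, 2), (0, 0, -2), (0, 1, 0), 2,
                               (-2, -2), (2, 2), 101, 101, 50, 50)
```

The direction is left unnormalized, which matches the ray formula above; normalize it only if your intersection code expects unit directions.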

Note that this is a pretty general viewing system, so some care must be taken in setting parameters. If you want the viewing rectangle centered around the gaze direction, make sure $a_u = -b_u$ and $a_v = -b_v$. If you want to have square pixels, make sure that the horizontal and vertical pixel spacings implied by the mapping above, $(b_u - a_u)/(n_x - 1)$ and $(b_v - a_v)/(n_y - 1)$, are equal:

$$\frac{b_u - a_u}{b_v - a_v} = \frac{n_x - 1}{n_y - 1}.$$

Finally, note that although s is arbitrary as long as it is positive, the bigger it is, the bigger $(a_u, a_v, b_u, b_v)$ have to be to get the same image. For a centered window, the ratio of $b_v$ to s is the tangent of half of the "vertical field of view." If you are more comfortable with that concept, set s to one and input the vertical field of view.
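If you prefer to specify a vertical field of view, the window can be derived from it. This Python helper is our own, not from the text. It assumes a centered window, uses b_v/s = tan(fov/2), and picks b_u so that the horizontal and vertical pixel spacings (b_u − a_u)/(n_x − 1) and (b_v − a_v)/(n_y − 1) match.

```python
import math


def window_from_fov(vfov_degrees, nx, ny, s=1.0):
    """Centered window corners (a, b) for a vertical field of view.

    b_v / s = tan(vfov / 2); b_u is chosen so pixels come out square.
    """
    half_height = s * math.tan(math.radians(vfov_degrees) / 2.0)
    half_width = half_height * (nx - 1) / (ny - 1)
    return (-half_width, -half_height), (half_width, half_height)


a, b = window_from_fov(90.0, 101, 101, s=2.0)   # approximately (-2, -2), (2, 2)
```

With s = 2 and a 90-degree vertical field of view this gives, up to rounding, the window a = (−2, −2), b = (2, 2) used in the example that follows.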

One nice thing about not requiring the viewing rectangle to be centered around the gaze direction is that you can run different "tiles" of a single image in concurrently running programs and then join them together for a lazy programmer's parallel code. You can also generate stereo images with proper eye separation; neither eye is centered on the screen if the nose is.

An example is shown in Figure 4.6 with parameters e = (0, 0, 2), $\mathbf{v}_{up}$ = (0, 1, 0), g = (0, 0, −2), $(a_u, a_v) = (-2, -2)$, $(b_u, b_v) = (2, 2)$, s = 2, $n_x = n_y = 101$, and a sphere with center (0, 0, 0), radius $\sqrt{2}$, and the light vector l = (0, 1, 0). An ambient factor of 0.1 is added, and the sphere reflectance is 0.9, so colors range from 0.1 to 1.0.

Figure 4.6. A sample use of viewing parameters.

Note that you could change $\mathbf{v}_{up}$ to anything with a positive y component and a zero x component and the image won't change. Change $\mathbf{v}_{up}$ to point in the x direction and the image should rotate 90 degrees. Decrease s and the sphere should look smaller. Change e to be any point in the xz plane where $\sqrt{x^2 + z^2} = 2$ (keeping g = −e so that the view still points at the sphere's center) and the image should not change.

Basic Materials

Materials such as glass and metal which show mirror-like reflection and

refraction are collectively known as specular surfaces. The physics of these surfaces

is well-understood and is straightforward to approximate in an implementation.

Surfaces that show some diffuse and some specular behavior, such as smooth

plastic, are more complex, but the approximation I like to use works reasonably

well and is not too difficult to implement.

5.1 SMOOTH METAL

Smooth metals are the simplest materials to describe, and they are easy to

implement in a ray tracer. Smooth metals obey two principles:

• The law of reflection, which states that the angle of incident light relative to the surface normal is the same as the angle of reflected light, and that the incident direction, surface normal, and reflected direction are coplanar;

• The Fresnel equations, which describe how much of the light is reflected, and by complement how much is absorbed.

Let's assume we have functions to compute the lighting on a diffuse surface,

the reflected vector, and the reflectance. The function for computing a ray color

that "sees" a diffuse or metal surface is

function color(ray o + t d)
    if ray hits object at point p with normal n then
        if p is diffuse then
            return R(p) * direct-light(p, n)
        else
            r ← reflect(d, n)
            return R(p) * color(p + t r)
    else
        return background
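For readers who want something executable, here is a minimal Python sketch of the pseudocode above, with vectors and colors as 3-tuples. The scene query `hit`, the `direct_light` callable, and the `max_depth` recursion limit are hypothetical additions: the pseudocode does not say how the scene is queried or when recursion stops. The reflection formula is the one derived in the discussion of Figure 5.1.

```python
def dot(a, b):
    return a[0]*b[0] + a[1]*b[1] + a[2]*b[2]

def reflect(d, n):
    # law of reflection: r = d - 2 (d.n / n.n) n
    k = 2.0 * dot(d, n) / dot(n, n)
    return tuple(d[i] - k * n[i] for i in range(3))

def color(o, d, hit, direct_light, background, depth=0, max_depth=5):
    """Color seen along the ray o + t d, for diffuse and metal surfaces.

    hit(o, d) returns None or (p, n, kind, R): hit point, normal,
    "diffuse" or "metal", and RGB reflectance. It should ignore hits at
    t near zero so a reflected ray does not re-hit its own starting point.
    """
    if depth > max_depth:                 # guard against endless mirror bounces
        return (0.0, 0.0, 0.0)
    record = hit(o, d)
    if record is None:
        return background
    p, n, kind, R = record
    if kind == "diffuse":
        light = direct_light(p, n)
        return tuple(R[i] * light[i] for i in range(3))
    r = reflect(d, n)                     # metal: follow the mirror direction
    c = color(p, r, hit, direct_light, background, depth + 1, max_depth)
    return tuple(R[i] * c[i] for i in range(3))
```

Returning black once the depth limit is reached is one common choice; returning the background would also be reasonable.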


Figure 5.1. The reflection from a smooth surface.

To implement the law of reflection, observe Figure 5.1, where the incident direction is d, the surface normal vector is n, and the reflected vector is r. As can be seen in the figure, r = d + 2a. The vector a is parallel to n, and its length is the length of d scaled by the magnitude of the cosine of the angle between d and n. This yields

$$\mathbf{r} = \mathbf{d} + 2\mathbf{a} = \mathbf{d} - 2\,\frac{\mathbf{d}\cdot\mathbf{n}}{\|\mathbf{n}\|^2}\,\mathbf{n}.$$

Note that if n is a unit vector, dividing by the square of its length is not needed.
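A direct transcription of the reflection formula as a Python sketch, with vectors as 3-tuples (the helper names are illustrative). It does not assume n is unit length, which is why it divides by n · n.

```python
def dot(a, b):
    """Dot product of two 3-tuples."""
    return a[0]*b[0] + a[1]*b[1] + a[2]*b[2]

def reflect(d, n):
    """Mirror direction for incident d and normal n: r = d - 2 (d.n / n.n) n."""
    k = 2.0 * dot(d, n) / dot(n, n)   # n.n == 1 when n is a unit vector
    return (d[0] - k*n[0], d[1] - k*n[1], d[2] - k*n[2])
```

For example, a ray travelling down and to the right, d = (1, −1, 0), hitting a floor with n = (0, 1, 0) leaves as (1, 1, 0).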

To compute the reflectance, the simplest approximation is to set it to some constant RGB value. This works well enough for most images. However, the reflectance of real metals increases as the angle of incidence θ (the angle between −d and n) increases. Schlick developed an accurate approximation to the rather ugly Fresnel equations [48] to simulate the change in reflectance:

$$R(\theta) = R_0 + (1 - R_0)(1 - \cos\theta)^5 \qquad (5.1)$$

where $R_0$ is the reflectance at normal incidence, when d is parallel to n. Try setting $R_0$ to 0.8 for all three RGB channels. This will make an object that behaves similarly to a modern "stainless" alloy. Although there is a small error implicit in Schlick's approximation, it is no bigger than the error we imposed when we decided not to use polarization, so you shouldn't worry about it.
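A small sketch of Equation 5.1 applied per RGB channel, assuming unit vectors and d pointing toward the surface so that cos θ = −d · n; the function names are illustrative.

```python
def schlick(R0, cos_theta):
    """Schlick's approximation (5.1): R0 + (1 - R0)(1 - cos theta)^5."""
    return R0 + (1.0 - R0) * (1.0 - cos_theta) ** 5

def metal_reflectance(R0_rgb, d, n):
    """Per-channel reflectance for unit incident direction d and unit normal n."""
    cos_theta = -(d[0]*n[0] + d[1]*n[1] + d[2]*n[2])   # d points into the surface
    return tuple(schlick(R0, cos_theta) for R0 in R0_rgb)
```

At normal incidence this returns $R_0$ unchanged, and it rises toward 1 as the ray approaches grazing.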

5.2 SMOOTH DIELECTRICS

A dielectric is a transparent material that refracts light. Diamonds, glass, water, and air are dielectrics. Dielectrics also filter light; some glass filters out more


red and blue than green light, so the glass takes on a green tint. When a ray travels from a medium with refractive index $n$ into one with refractive index $n_t$, some of the light is transmitted, and it bends. This is shown for $n_t > n$ in Figure 5.2. Snell's law tells us that

$$n \sin\theta = n_t \sin\theta'.$$

From this and the equation $\sin^2\theta + \cos^2\theta = 1$, we can derive a relationship for cosines:

$$\cos^2\theta' = 1 - \frac{n^2\left(1 - \cos^2\theta\right)}{n_t^2}.$$

From the figure, we can see that n and b form a basis for the plane of refraction. Important: the following discussion assumes d and n are unit vectors, so when you implement this, be sure to make unit-length variables in this routine. By definition, we can describe t in this basis:

$$\mathbf{t} = \sin\theta'\,\mathbf{b} - \cos\theta'\,\mathbf{n}.$$

Since we can describe d in the same basis, and d is known, we can solve for b:

$$\mathbf{d} = \sin\theta\,\mathbf{b} - \cos\theta\,\mathbf{n},$$

$$\mathbf{b} = \frac{\mathbf{d} + \mathbf{n}\cos\theta}{\sin\theta}.$$

Figure 5.2. The refraction of light at a smooth surface.


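This means we can solve for t with known variables, using $\cos\theta = -\mathbf{d}\cdot\mathbf{n}$ for the second form:

$$\mathbf{t} = \frac{n\left(\mathbf{d} + \mathbf{n}\cos\theta\right)}{n_t} - \mathbf{n}\cos\theta' = \frac{n\left(\mathbf{d} - \mathbf{n}(\mathbf{d}\cdot\mathbf{n})\right)}{n_t} - \mathbf{n}\sqrt{1 - \frac{n^2\left(1 - (\mathbf{d}\cdot\mathbf{n})^2\right)}{n_t^2}}.$$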

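Note that this equation works regardless of which of $n$ and $n_t$ is bigger, provided the quantity under the square root is non-negative; if it is negative, there is no transmitted ray (total internal reflection), and all of the light is reflected.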
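The final expression for t translates into a short Python sketch. It assumes unit vectors, d pointing toward the surface, and n on the incident side (so d · n < 0), and it takes the single ratio `eta` $= n_t/n$, since only $n/n_t$ appears in the formula. Returning `None` when the square-root argument is negative is an added convention for total internal reflection; the function name is illustrative.

```python
import math

def refract(d, n, eta):
    """Refracted direction for unit d crossing a surface with unit normal n.

    eta = n_t / n. Implements
    t = (d - n (d.n)) / eta - n sqrt(1 - (1 - (d.n)^2) / eta^2).
    Returns None on total internal reflection.
    """
    dn = d[0]*n[0] + d[1]*n[1] + d[2]*n[2]
    k = 1.0 - (1.0 - dn*dn) / (eta*eta)    # this is cos^2 of the refracted angle
    if k < 0.0:
        return None
    root = math.sqrt(k)
    return tuple((d[i] - n[i]*dn) / eta - n[i]*root for i in range(3))
```

A ray leaving glass ($n = 1.5$) into air at 60 degrees exceeds the critical angle of about 41.8 degrees, so the sketch returns `None` there.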

For homogeneous impurities, as are found in typical glass, a light-carrying ray's intensity will be attenuated according to Beer's law. As the ray travels through the medium, it loses intensity according to $dI = -C I\,dx$, where $dx$ is distance. This means $dI/dx = -CI$. This is solved by the exponential $I = k \exp(-Cx)$. The strength of attenuation is described by the RGB attenuation constant $a$, which is the attenuation after one unit of distance. Putting in boundary conditions, we know that $I(0) = I_0$ and $I(1) = a I(0)$. The first implies $I(x) = I_0 \exp(-Cx)$. The second implies $a I_0 = I_0 \exp(-C)$, so $-C = \ln(a)$. So the final formula is

$$I(s) = I(0)\,e^{\ln(a)\,s} = I(0)\,a^{s},$$

where $I(s)$ is the intensity of the beam at distance $s$ from the interface. In practice, we reverse-engineer $a$ by eye because such data is rarely easy to find.
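The final formula, sketched per RGB channel. Here `a_rgb` holds the fraction of light surviving one unit of distance in each channel (each must be positive for the logarithm), and the function name is hypothetical.

```python
import math

def beer_attenuation(a_rgb, s):
    """Per-channel factor I(s)/I(0) = exp(ln(a) * s) = a ** s."""
    return tuple(math.exp(math.log(a) * s) for a in a_rgb)
```

For example, glass with $a = (0.5, 1.0, 0.8)$ passes a quarter of its red light after two units of distance, all of its green light, and 64% of its blue light.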

To add transparent materials in our code, we need a way to determine when

a ray is going "into" an object. The simplest way to do this is to assume that

all objects are embedded in air with refractive index very close to 1.0, and

that surface normals point "out" (toward the air). This concept is illustrated in

Figure 5.3.

The code segment for rays and dielectrics with these assumptions is:

if p is dielectric then
    r ← reflect(d, n)
    if d · n < 0 then
        t ← refract(d, n, n)
        c ← −d · n
        k_r ← k_g ← k_b ← 1
    else
        k_r ← exp(−a_r t)
        k_g ← exp(−a_g t)
        k_b ← exp(−a_b t)
        if total internal reflection occurs (no refracted ray) then
            return k * color(p + t r)
        t ← refract(d, −n, 1/n)
        c ← t · n
    R_0 ← (n − 1)^2 / (n + 1)^2
    R ← R_0 + (1 − R_0)(1 − c)^5
    return k(R * color(p + t r) + (1 − R) * color(p + t t))


Figure 5.3. If a direction and the surface normal have a negative dot product, the direction points

into the object. This is useful for deciding whether the ray bends in or out.

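The code above assumes that the natural log has been folded into the constants $(a_r, a_g, a_b)$, so that each stores $-\ln(a)$ for its channel; the $t$ in $\exp(-a_r t)$ is the ray parameter, which equals the distance traveled inside the object when d has unit length. An image that uses both Fresnel equations and Beer's law is shown in Figure 5.4.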
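To see how the pieces combine, here is a Python sketch of the dielectric branch that computes the Fresnel weight R, the Beer's law factor k, and the transmitted direction, leaving the two recursive color calls to the caller. The names `dielectric_terms`, `C_rgb`, and `dist` are hypothetical, and treating total internal reflection as R = 1 with no transmitted ray is an added assumption.

```python
import math

def dot(a, b):
    return a[0]*b[0] + a[1]*b[1] + a[2]*b[2]

def refract(d, n, eta):
    """Refract unit d through unit n (d.n < 0) with eta = n_t / n; None on TIR."""
    dn = dot(d, n)
    k = 1.0 - (1.0 - dn*dn) / (eta*eta)
    if k < 0.0:
        return None
    root = math.sqrt(k)
    return tuple((d[i] - n[i]*dn) / eta - n[i]*root for i in range(3))

def dielectric_terms(d, n, ior, C_rgb, dist):
    """Return (R, k, t) for a ray hitting a dielectric.

    d, n: unit vectors, n pointing out of the object; ior: the object's index
    (the outside is taken to be 1.0); C_rgb: per-channel constants with the
    natural log folded in (C = -ln a); dist: distance traveled to this hit.
    The caller combines k * (R * color(reflected) + (1 - R) * color(t)).
    """
    if dot(d, n) < 0.0:                       # entering: no absorption yet
        t = refract(d, n, ior)
        c = -dot(d, n)
        k = (1.0, 1.0, 1.0)
    else:                                     # leaving: absorb over the path inside
        k = tuple(math.exp(-C * dist) for C in C_rgb)
        t = refract(d, tuple(-x for x in n), 1.0 / ior)
        if t is None:                         # total internal reflection
            return 1.0, k, None
        c = dot(t, n)                         # cosine on the air side
    R0 = (ior - 1.0) ** 2 / (ior + 1.0) ** 2
    R = R0 + (1.0 - R0) * (1.0 - c) ** 5
    return R, k, t
```

For glass with index 1.5 at normal incidence, this gives $R = R_0 = 0.04$, so about 4% of the light is reflected.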

5.3 POLISHED SURFACES

Many surfaces are dielectrics, but have smali reflecting particles embedded which

diffusely scatter light (e.g., plastic), or coat an underlying diffuse surface (e-g-

finished wood). Such surfaces act as combinations of specular and diffuse

surfaces. An interesting aspect of this behavior is that when the incoming light is

"grazing" (9 is near 90 degrees), the specular component dominates, and when

the incoming light is near-normal (0 is small), the diffuse interaction dominates.

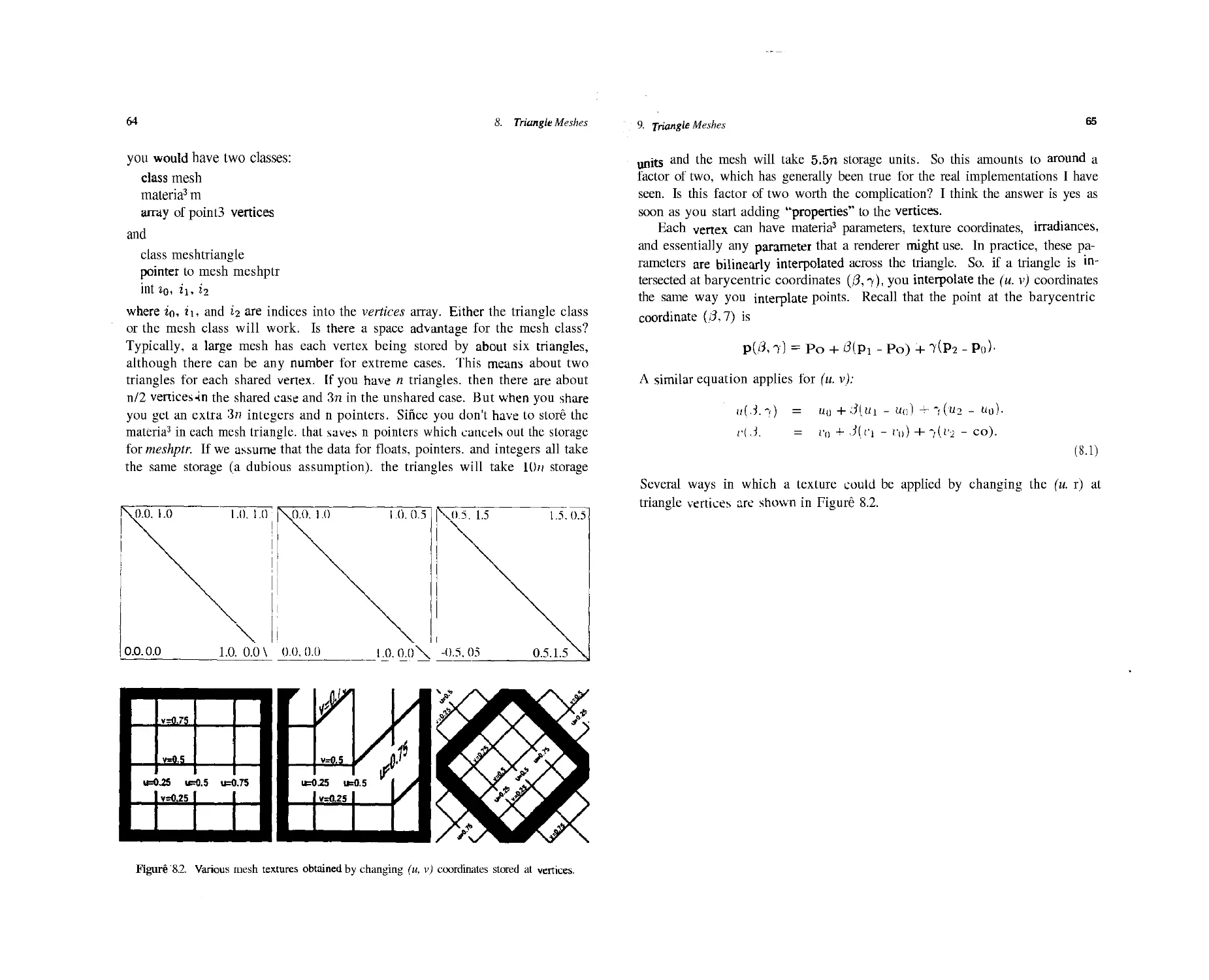

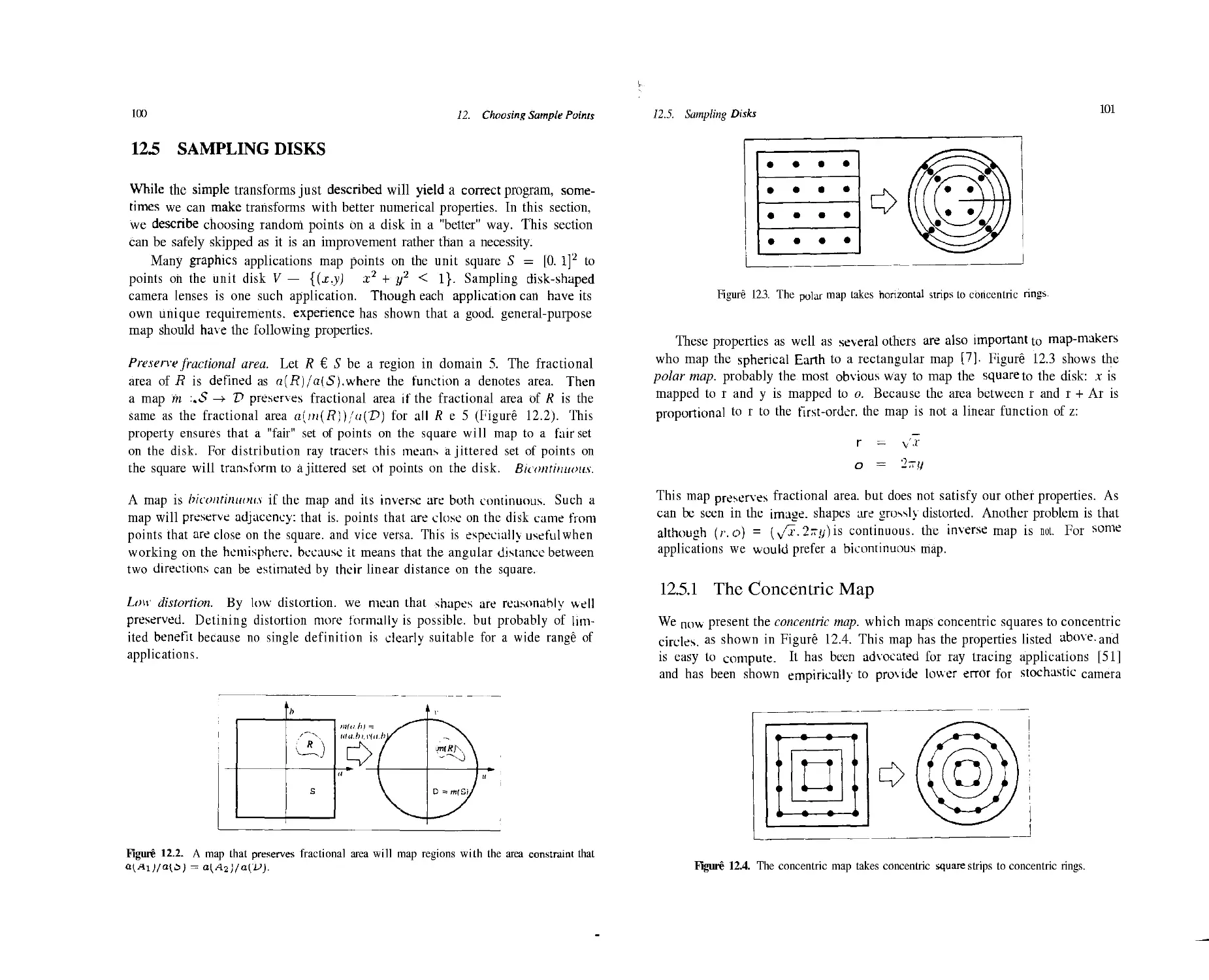

This is actually not surprising; the specular reflectance approximated by