This is not a for-profit project but a collective effort by students and professors of the UNAM to facilitate access to the materials needed for the education of as many people as possible. We plan to publish in digital format books that are not accessible to everyone, either because of their high cost or because they can no longer be found in libraries and bookstores.

We invite everyone interested in taking part in this project to suggest titles, to lend us texts for digitization, and to help us with all the technical work that their reproduction involves.

Ours is a collective project open to the participation of anyone, and all contributions are welcome.

You can find us at the Talleres Estudiantiles of the Facultad de Ciencias, and you can contact us at the following e-mail address:

eduktodos@hotmail.com

http://eduktodos.dvndns.org

MATHEMATICAL

METHODS

FOR PHYSICISTS

Third Edition

GEORGE ARFKEN

Miami University

Oxford, Ohio

ACADEMIC PRESS, INC.

Harcourt Brace Jovanovich, Publishers

San Diego New York Berkeley Boston

London Sydney Tokyo Toronto

Copyright ©1985 by Academic Press, Inc.

All rights reserved.

No part of this publication may be reproduced or transmitted in any form or by any

means, electronic or mechanical, including photocopy, recording, or any information

storage and retrieval system, without permission in writing from the publisher.

ACADEMIC PRESS, INC.

San Diego, California 92101

United Kingdom Edition Published by Academic Press, Inc. (London) Ltd.,

24/28 Oval Road, London NW1 7DX

ISBN: 0-12-059820-5

ISBN: 0-12-059810-8 (paper)

Library of Congress Catalog Card Number: 84-71328

PRINTED IN THE UNITED STATES OF AMERICA

87 88 89 9 8 7 6 5 4 3

To Carolyn

CONTENTS

Chapter 1 VECTOR ANALYSIS 1

1.1 Definitions, Elementary Approach 1

1.2 Advanced Definitions 7

1.3 Scalar or Dot Product 13

1.4 Vector or Cross Product 18

1.5 Triple Scalar Product, Triple Vector Product 26

1.6 Gradient 33

1.7 Divergence 37

1.8 Curl 42

1.9 Successive Applications of ∇ 47

1.10 Vector Integration 51

1.11 Gauss's Theorem 57

1.12 Stokes's Theorem 61

1.13 Potential Theory 64

1.14 Gauss's Law, Poisson's Equation 74

1.15 Helmholtz's Theorem 78

Chapter 2 COORDINATE SYSTEMS 85

2.1 Curvilinear Coordinates 86

2.2 Differential Vector Operations 90

2.3 Special Coordinate Systems—Rectangular Cartesian

Coordinates 94

2.4 Circular Cylindrical Coordinates (ρ, φ, z) 95

2.5 Spherical Polar Coordinates (r, θ, φ) 102

2.6 Separation of Variables 111

Chapter 3 TENSOR ANALYSIS 118

3.1 Introduction, Definitions 118

3.2 Contraction, Direct Product 124


3.3 Quotient Rule 126

3.4 Pseudotensors, Dual Tensors 128

3.5 Dyadics 137

3.6 Theory of Elasticity 140

3.7 Lorentz Covariance of Maxwell's Equations 150

3.8 Noncartesian Tensors, Covariant Differentiation 158

3.9 Tensor Differential Operations 164

Chapter 4 DETERMINANTS, MATRICES, AND GROUP

THEORY 168

4.1 Determinants 168

4.2 Matrices 176

4.3 Orthogonal Matrices 191

4.4 Oblique Coordinates 206

4.5 Hermitian Matrices, Unitary Matrices 209

4.6 Diagonalization of Matrices 217

4.7 Eigenvectors, Eigenvalues 229

4.8 Introduction to Group Theory 237

4.9 Discrete Groups 243

4.10 Continuous Groups 251

4.11 Generators 261

4.12 SU(2), SU(3), and Nuclear Particles 267

4.13 Homogeneous Lorentz Group 271

Chapter 5 INFINITE SERIES 277

5.1 Fundamental Concepts 277

5.2 Convergence Tests 280

5.3 Alternating Series 293

5.4 Algebra of Series 295

5.5 Series of Functions 299

5.6 Taylor's Expansion 303

5.7 Power Series 313

5.8 Elliptic Integrals 321

5.9 Bernoulli Numbers, Euler-Maclaurin Formula

5.10 Asymptotic or Semiconvergent Series 339

5.11 Infinite Products 346

327

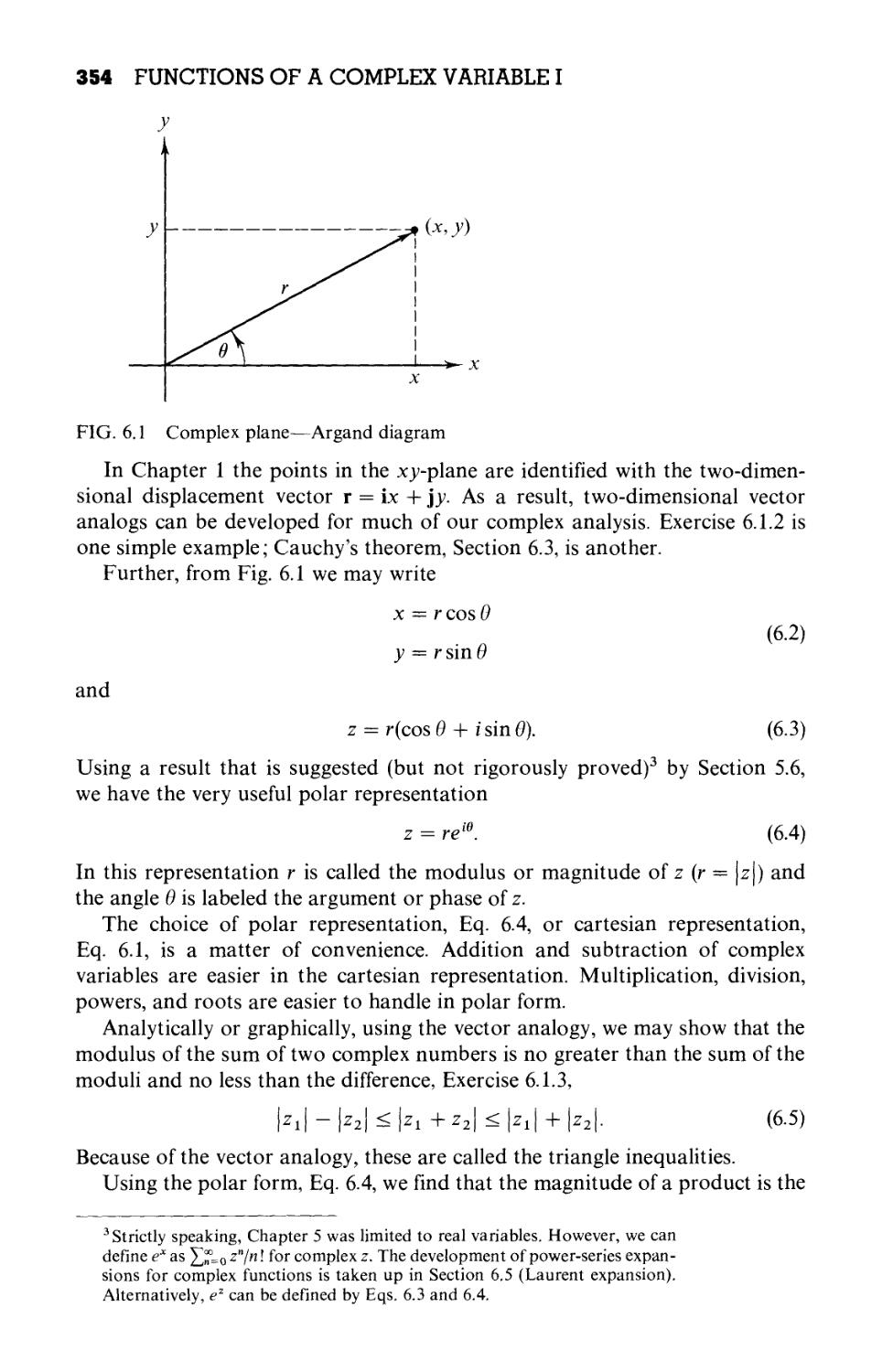

Chapter 6 FUNCTIONS OF A COMPLEX VARIABLE I 352

6.1 Complex Algebra 353

6.2 Cauchy-Riemann Conditions 360

Chapter 7

Chapter 8

6.3

6.4

6.5

6.6

6.7

CONTENTS ix

Cauchy's Integral Theorem 365

Cauchy's Integral Formula 371

Laurent Expansion 376

Mapping 384

Conformal Mapping 392

FUNCTIONS OF A COMPLEX VARIABLE II: Calculus

of Residues 396

7.1

7.2

7.3

7.4

Singularities 396

Calculus of Residues 400

Dispersion Relations 421

The Method of Steepest Descents 428

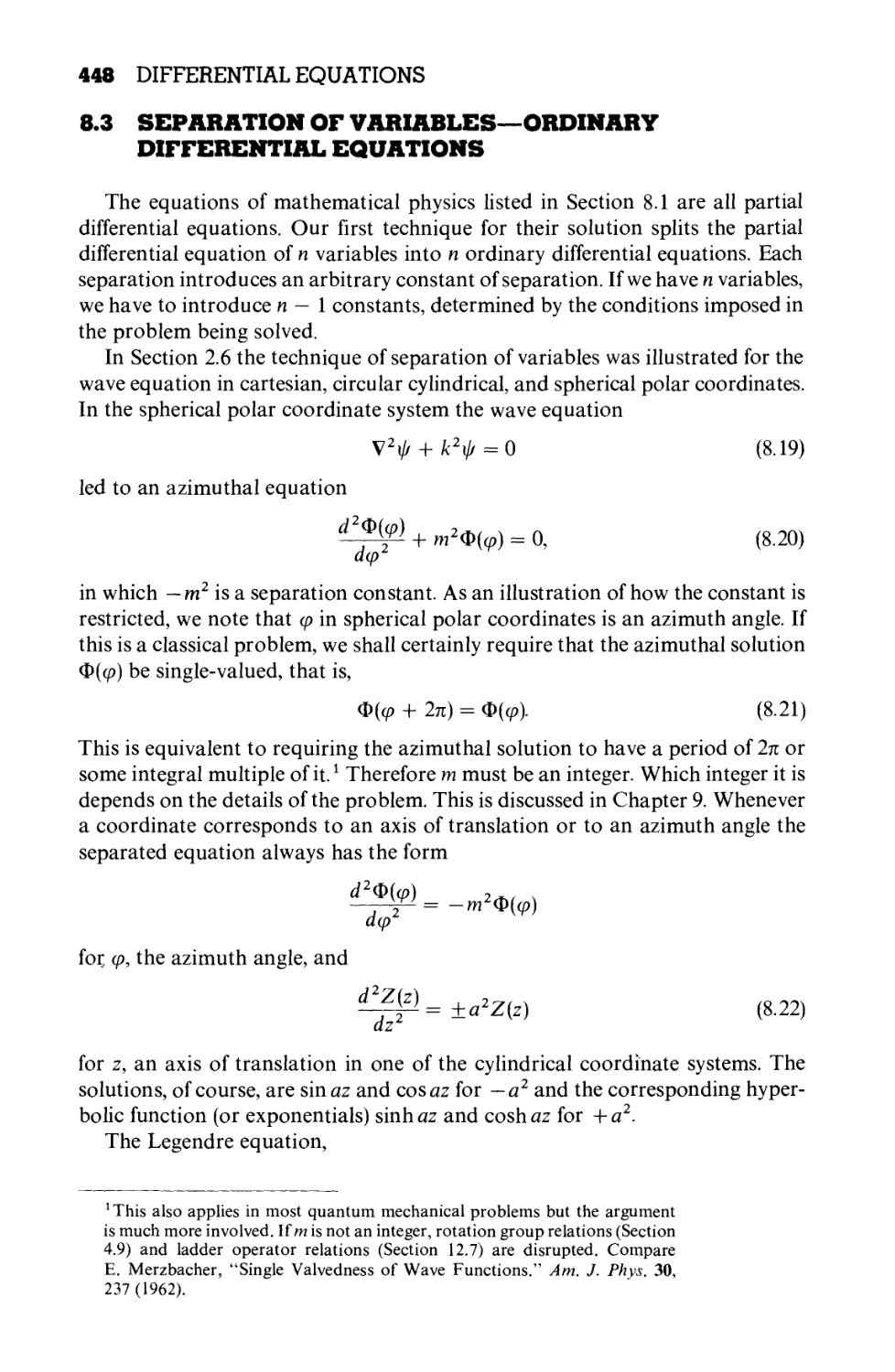

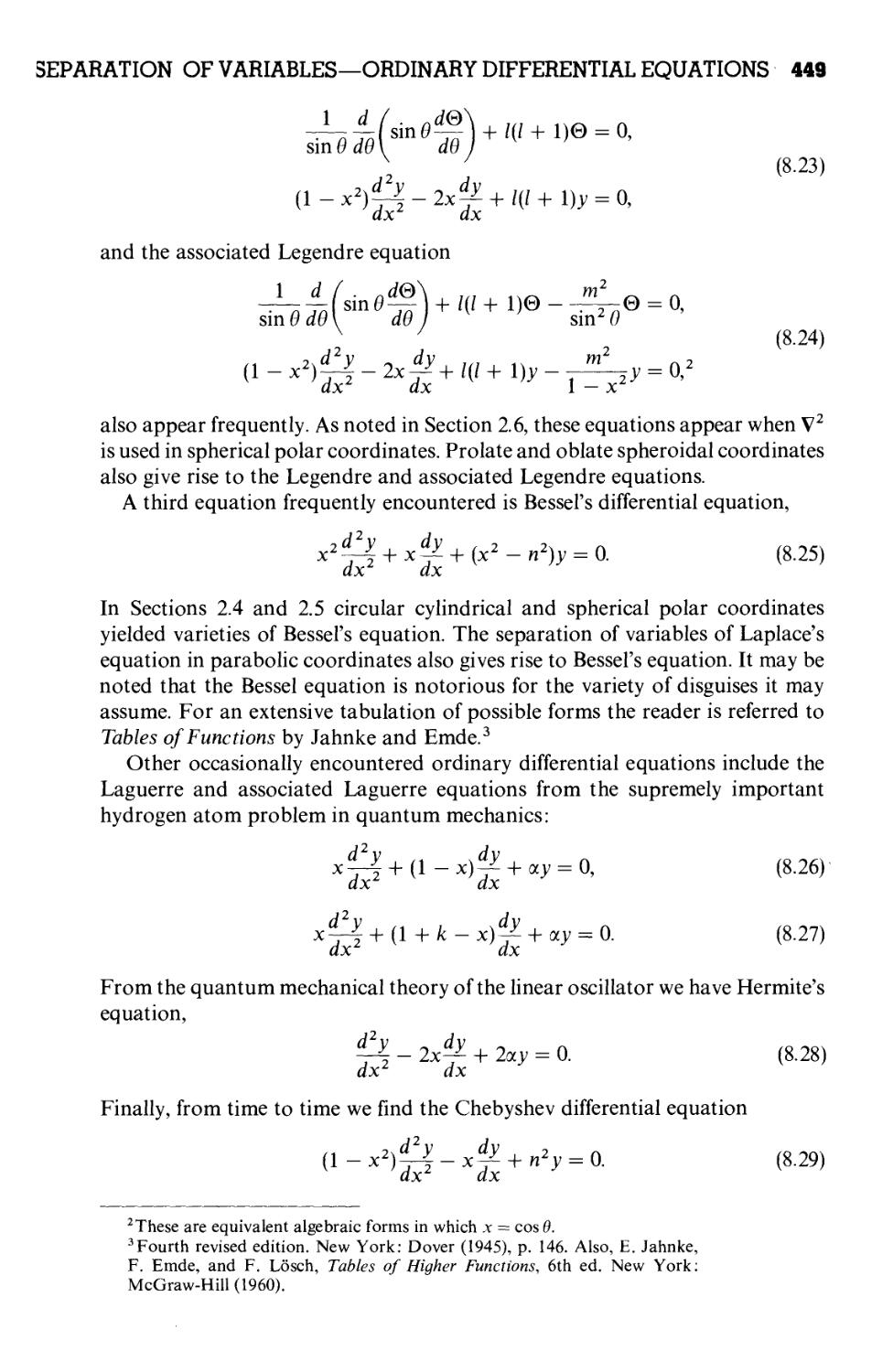

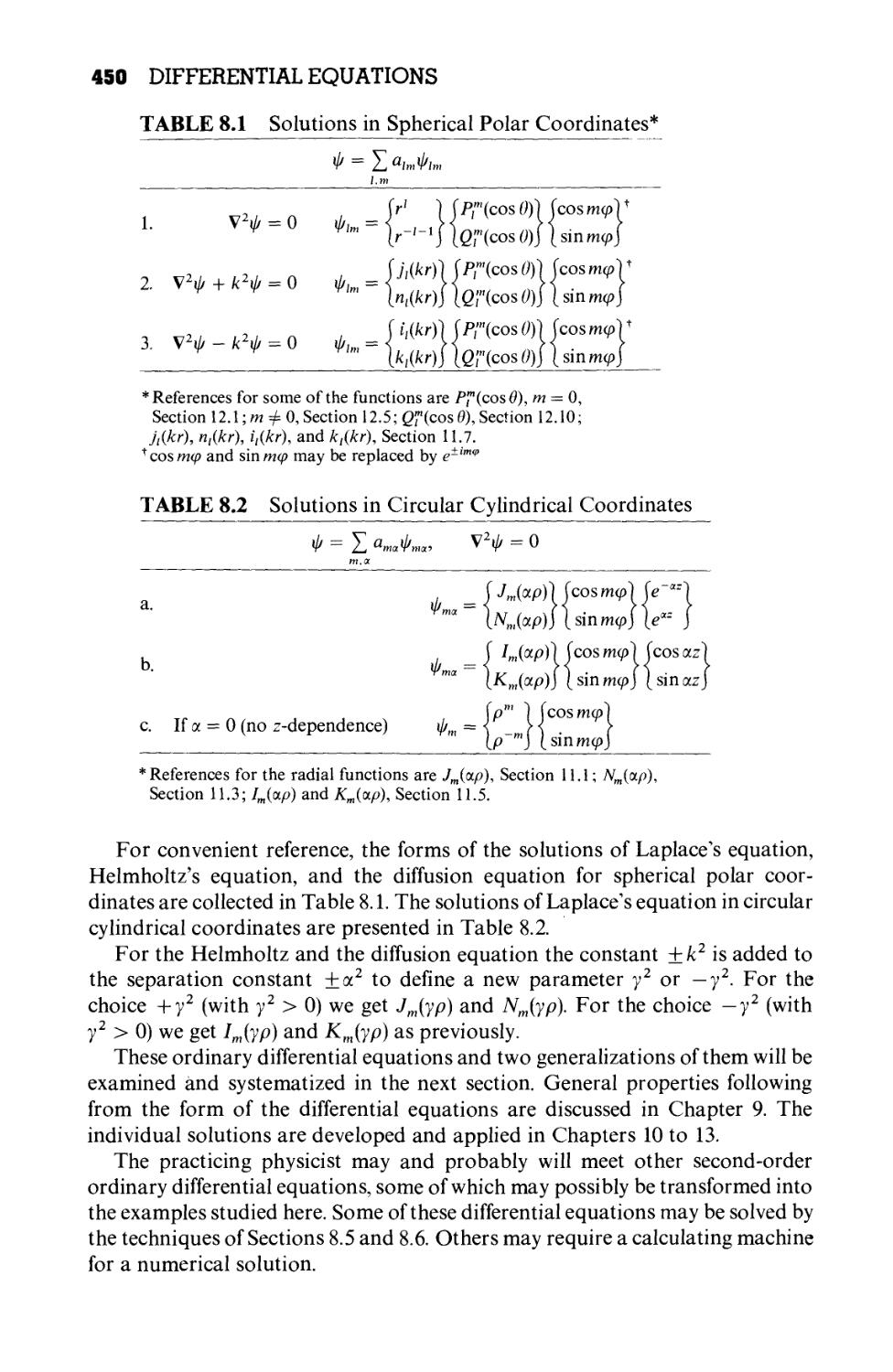

DIFFERENTIAL EQUATIONS 437

8.1

8.2

8.3

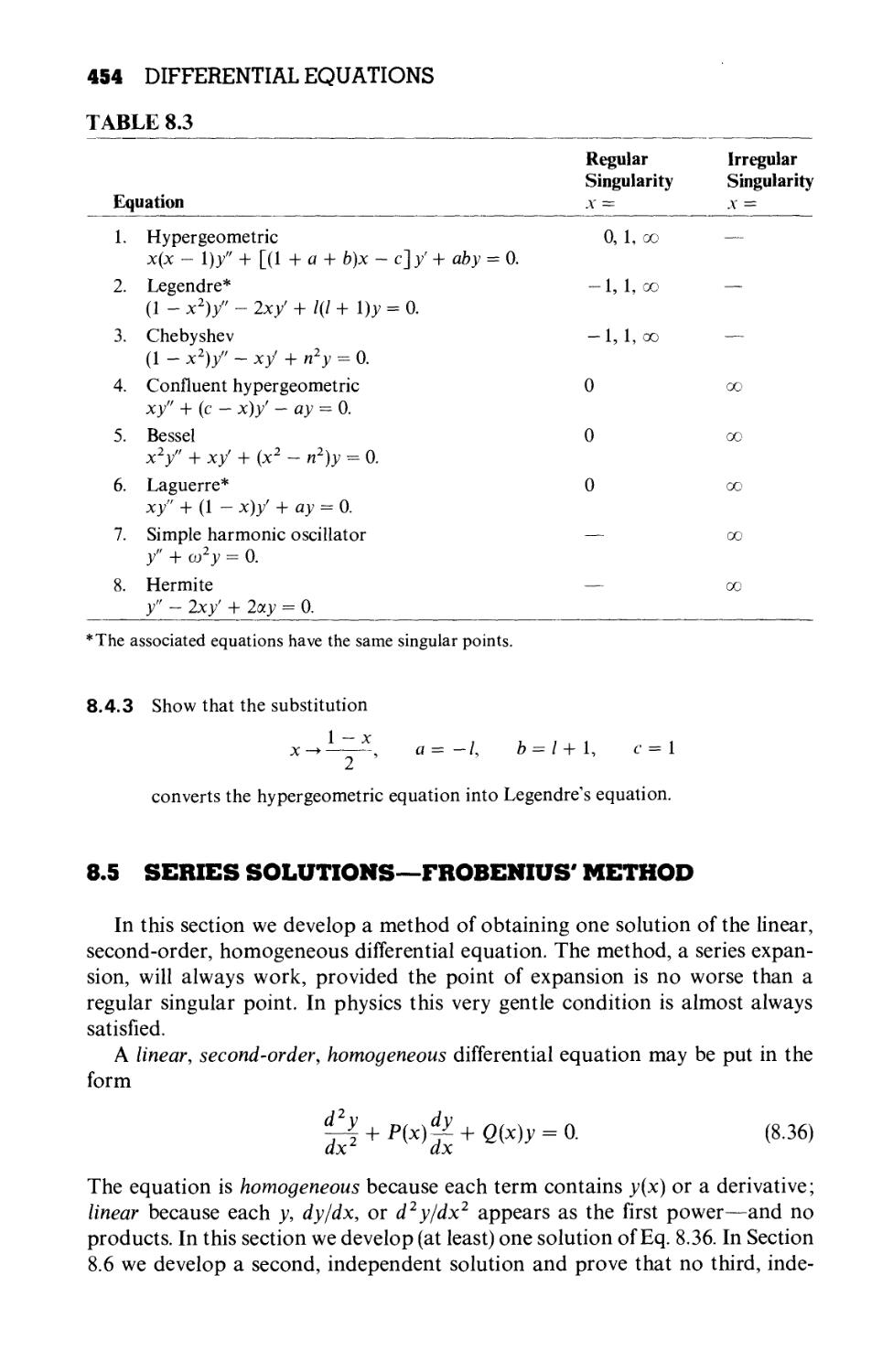

8.4

8.5

8.6

8.7

8.8

Partial Differential Equations of Theoretical

Physics 437

First-Order Differential Equations 440

Separation of Variables—Ordinary Differential

Equations 448

Singular Points 451

Series Solutions—Frobenius' Method 454

A Second Solution 467

Nonhomogeneous Equation—Green's Function 480

Numerical Solutions 491

Chapter 9

STURM-LIOUVILLE THEORY—ORTHOGONAL

FUNCTIONS 497

9.1 Self-Adjoint Differential Equations 497

9.2 Hermitian (Self-Adjoint) Operators 510

9.3 Gram-Schmidt Orthogonalization 516

9.4 Completeness of Eigenfunctions 523

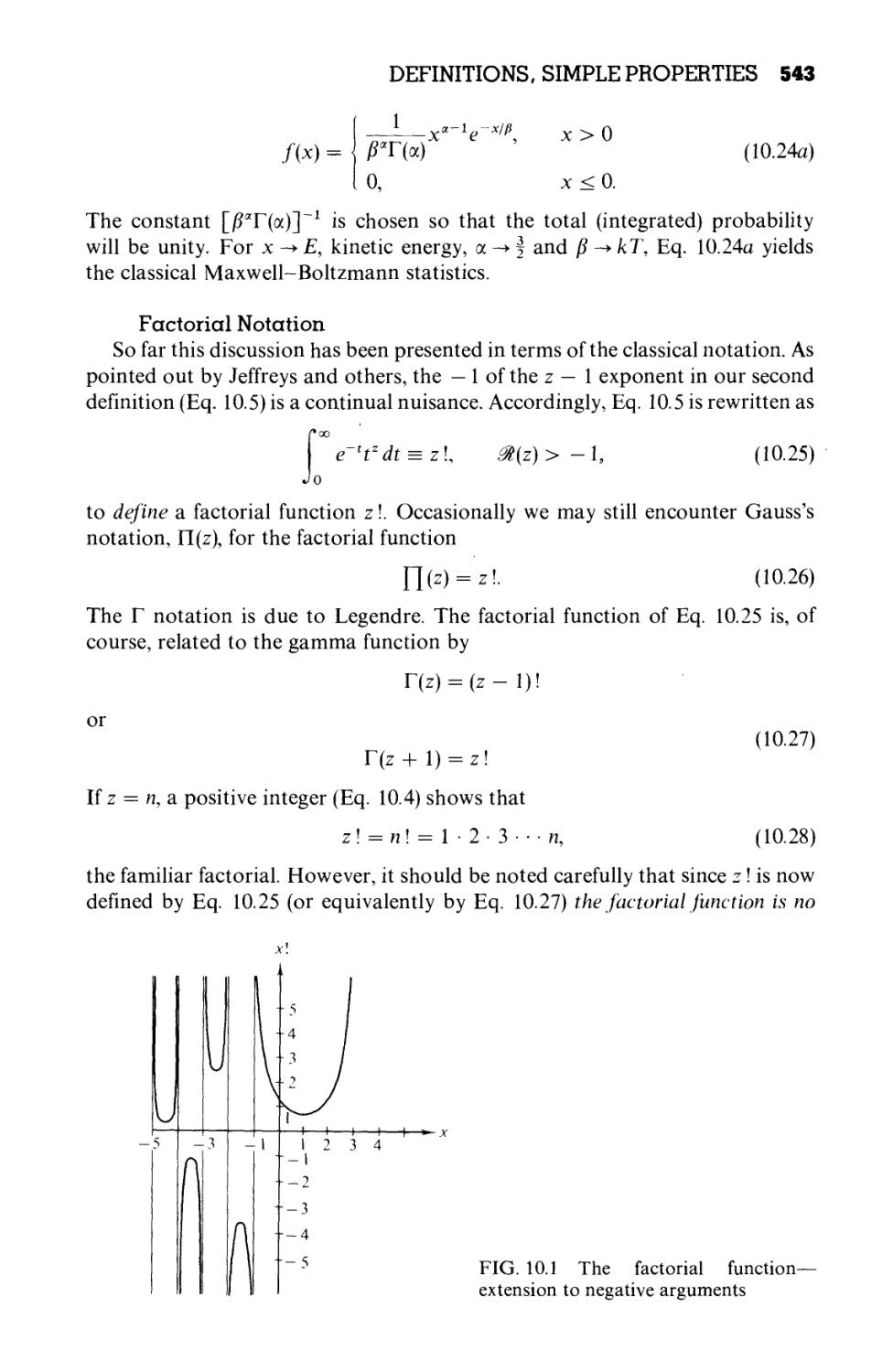

Chapter 10 THE GAMMA FUNCTION (FACTORIAL

FUNCTION) 539

10.1 Definitions, Simple Properties 539

10.2 Digamma and Polygamma Functions 549

10.3 Stirling's Series 555

10.4 The Beta Function 560

10.5 The Incomplete Gamma Functions and Related

Functions 565


Chapter 11 BESSEL FUNCTIONS 573

11.1 Bessel Functions of the First Kind, Jν(x) 573

11.2 Orthogonality 591

11.3 Neumann Functions, Bessel Functions of the Second

Kind, Nν(x) 596

11.4 Hankel Functions 603

11.5 Modified Bessel Functions, Iν(x) and Kν(x) 610

11.6 Asymptotic Expansions 616

11.7 Spherical Bessel Functions 622

Chapter 12 LEGENDRE FUNCTIONS 637

12.1 Generating Function 637

12.2 Recurrence Relations and Special Properties 645

12.3 Orthogonality 652

12.4 Alternate Definitions of Legendre Polynomials 663

12.5 Associated Legendre Functions 666

12.6 Spherical Harmonics 680

12.7 Angular Momentum Ladder Operators 685

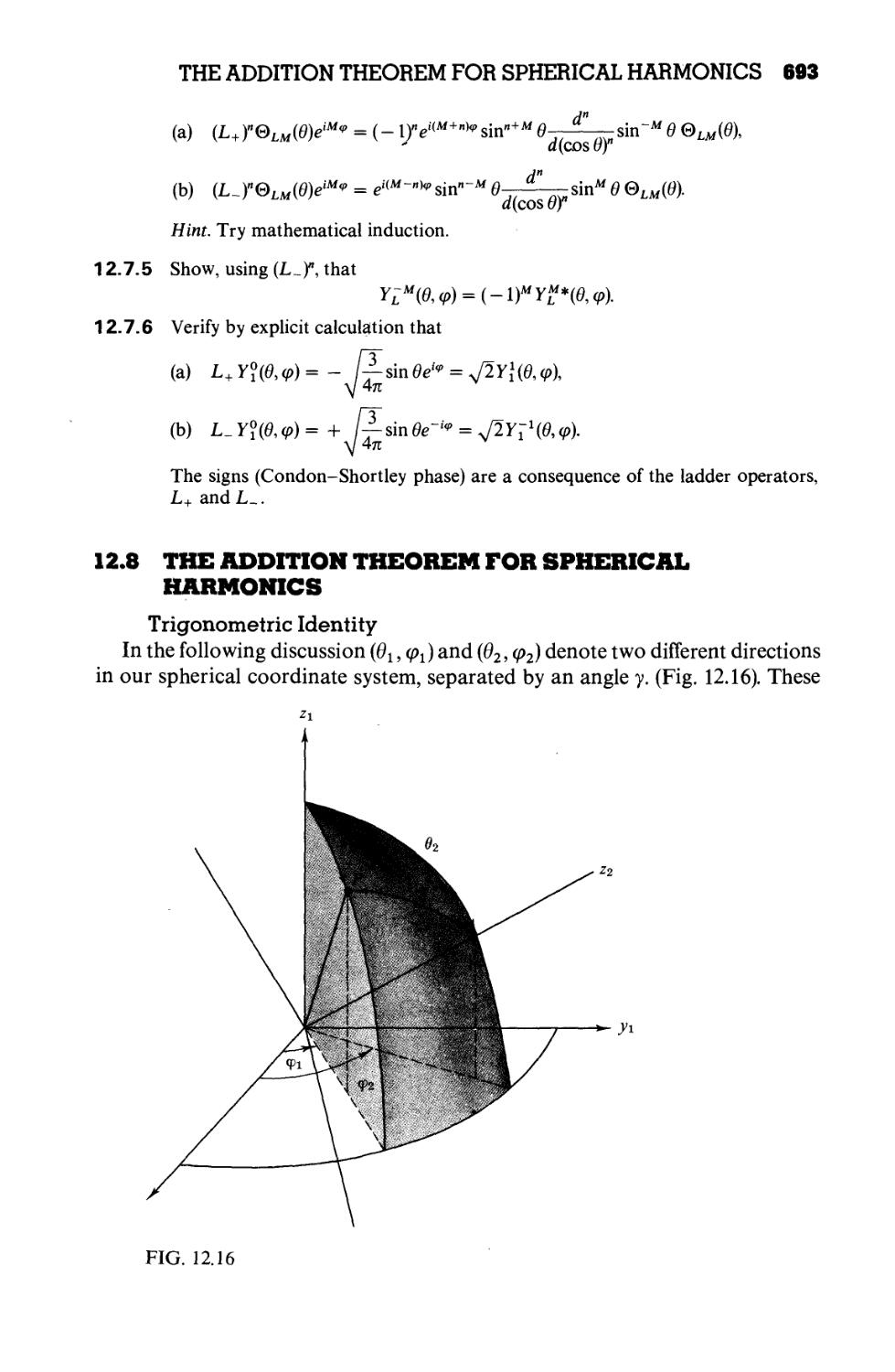

12.8 The Addition Theorem for Spherical Harmonics 693

12.9 Integrals of the Product of Three Spherical

Harmonics 698

12.10 Legendre Functions of the Second Kind, Qn(x) 701

12.11 Vector Spherical Harmonics 707

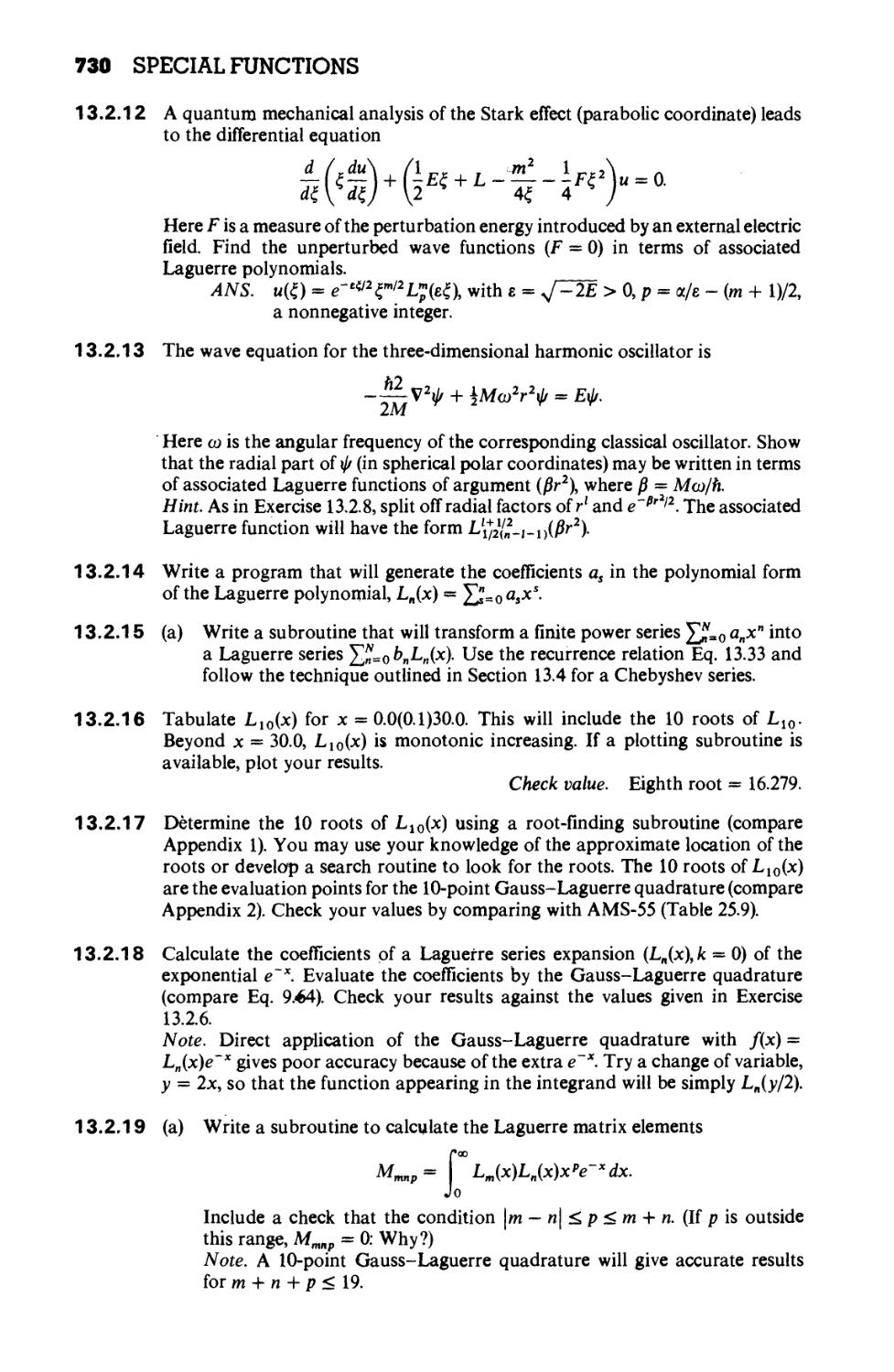

Chapter 13 SPECIAL FUNCTIONS 712

13.1 Hermite Functions 712

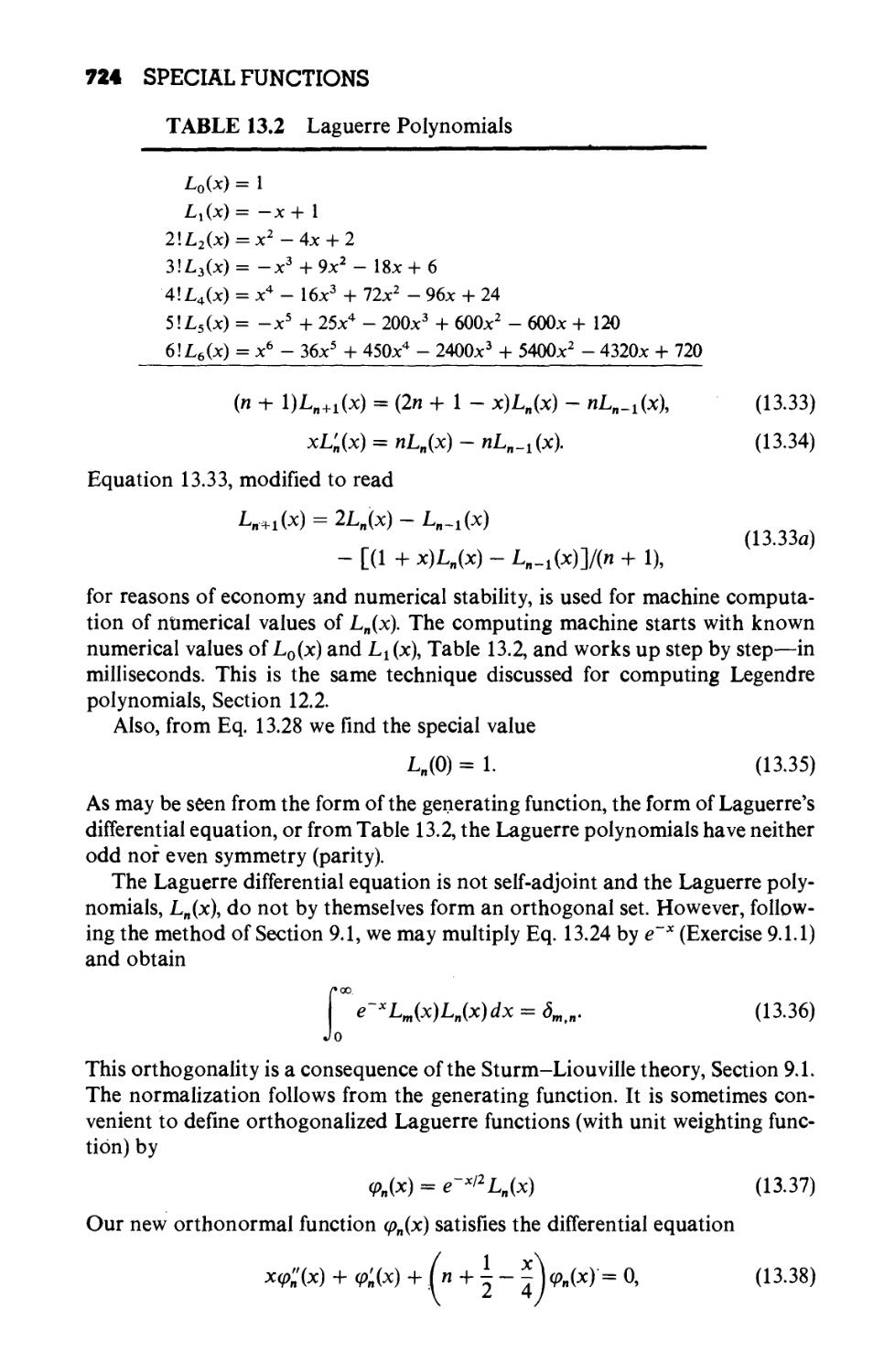

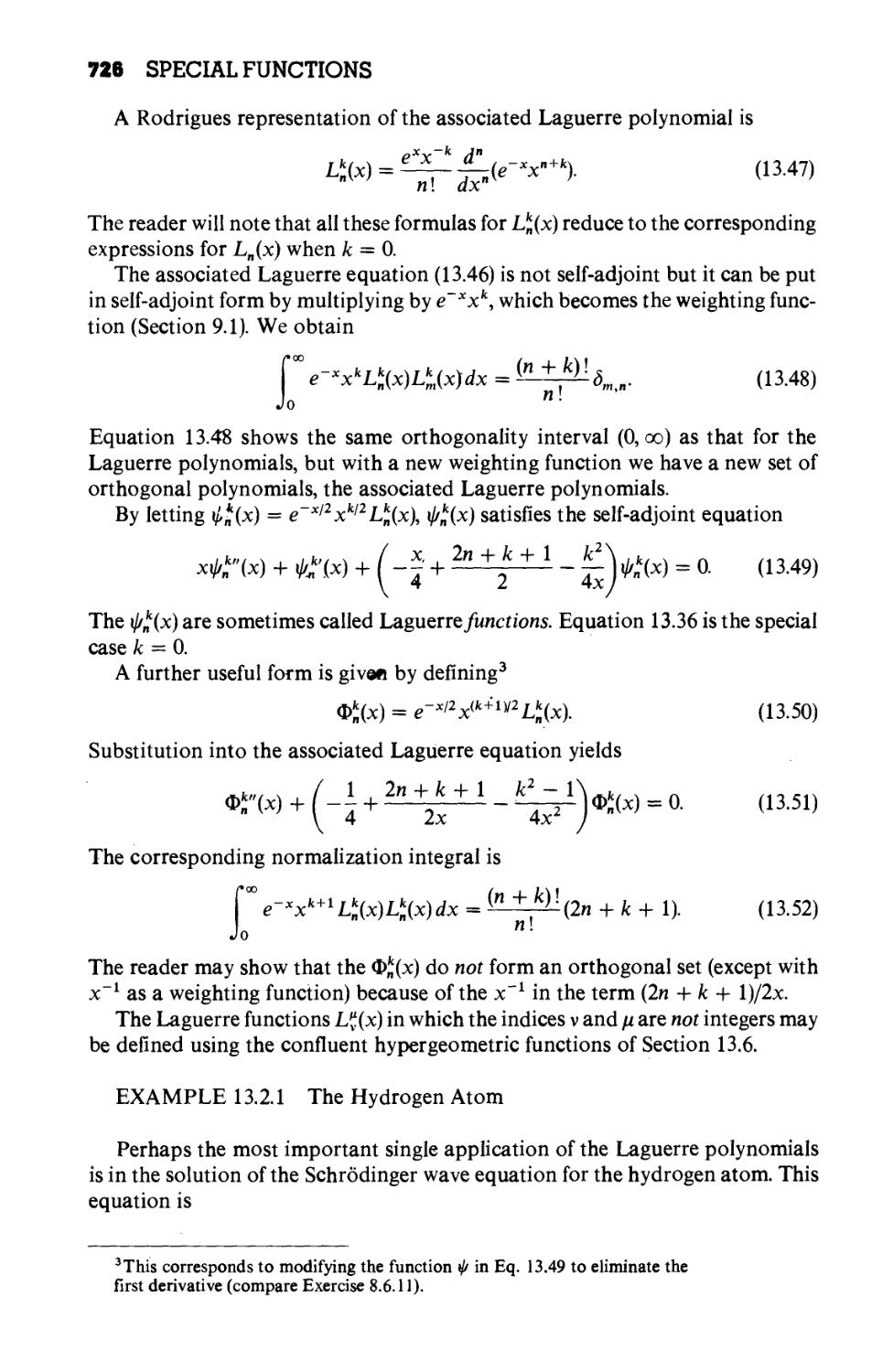

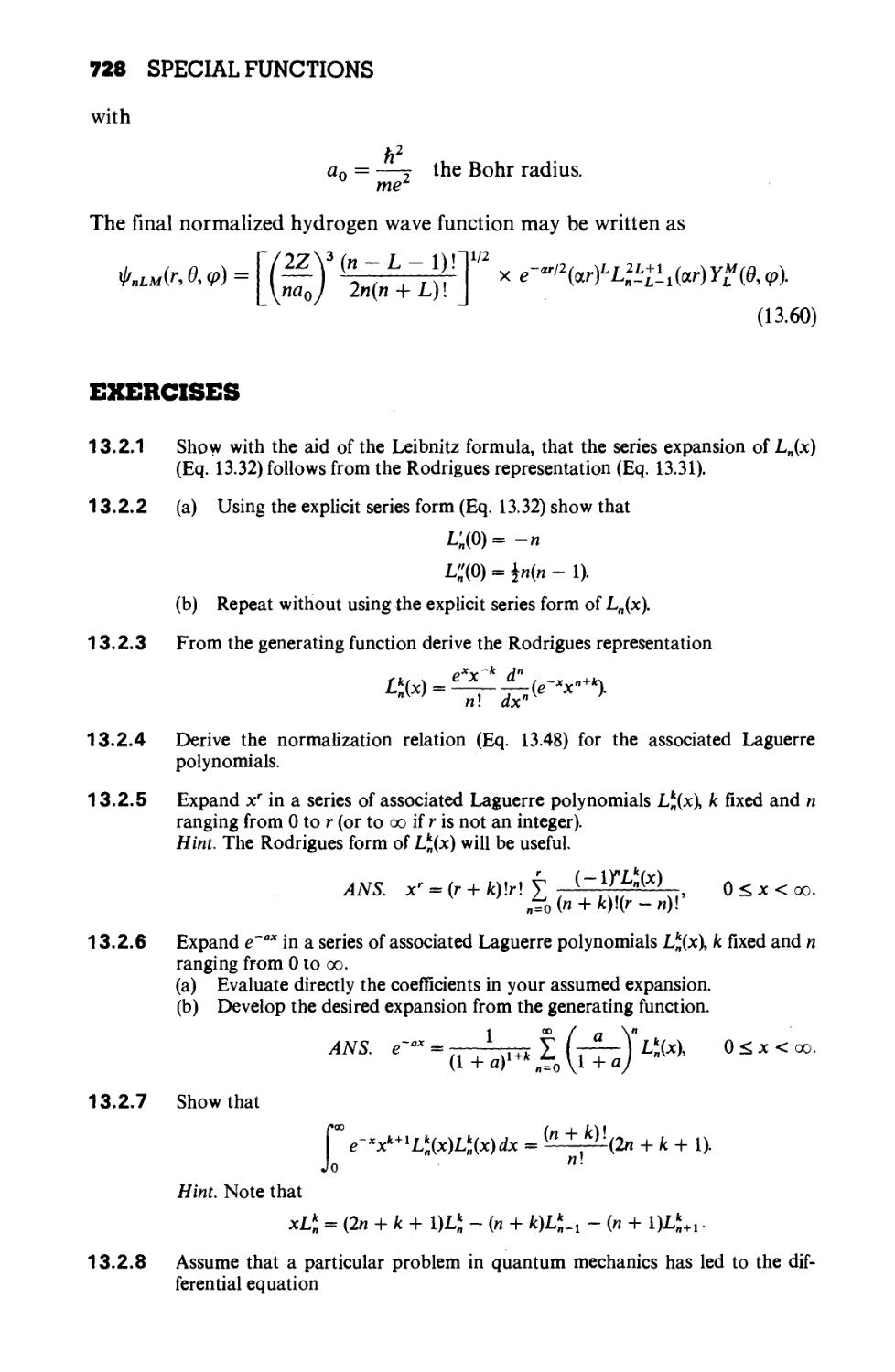

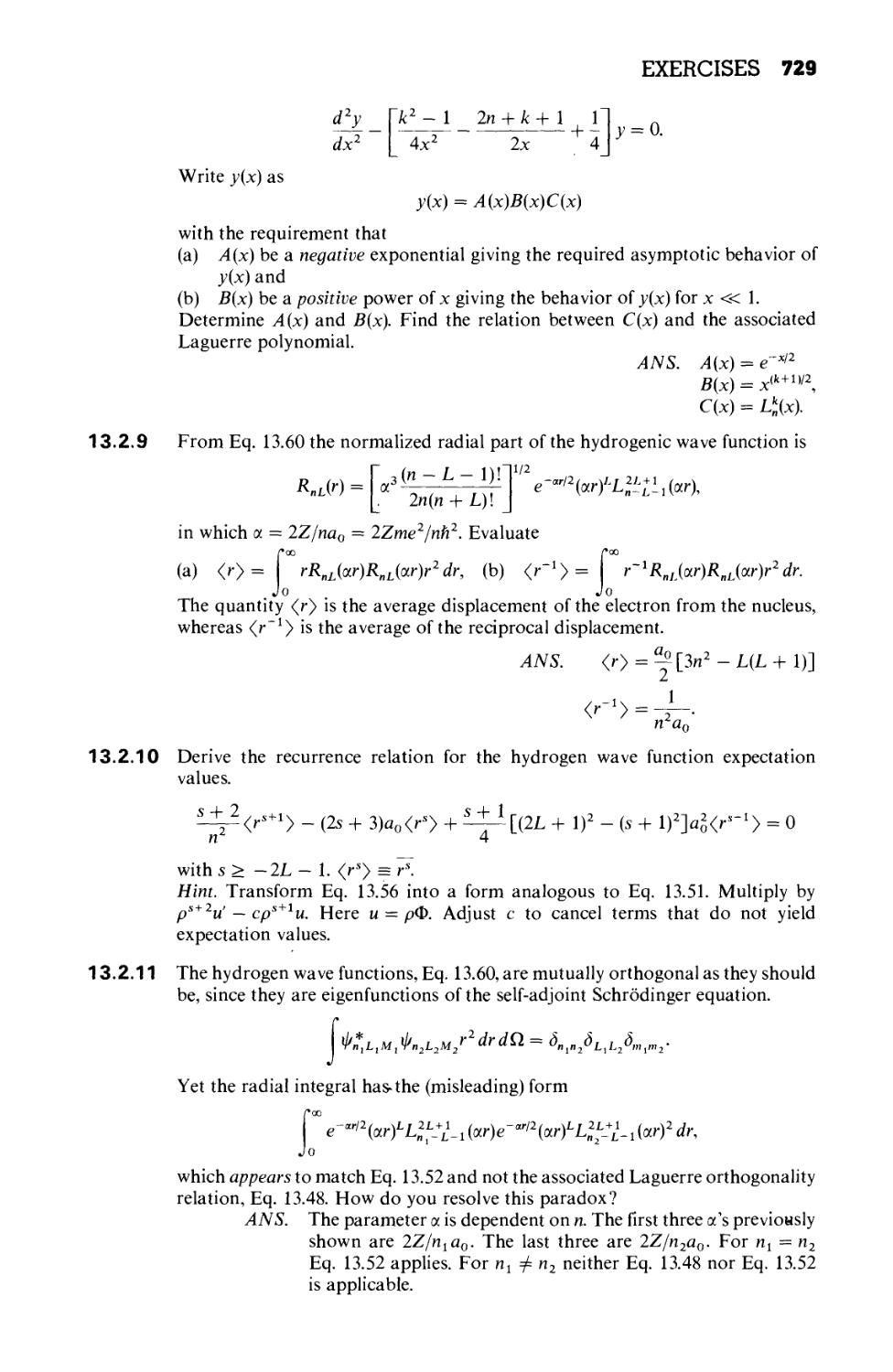

13.2 Laguerre Functions 721

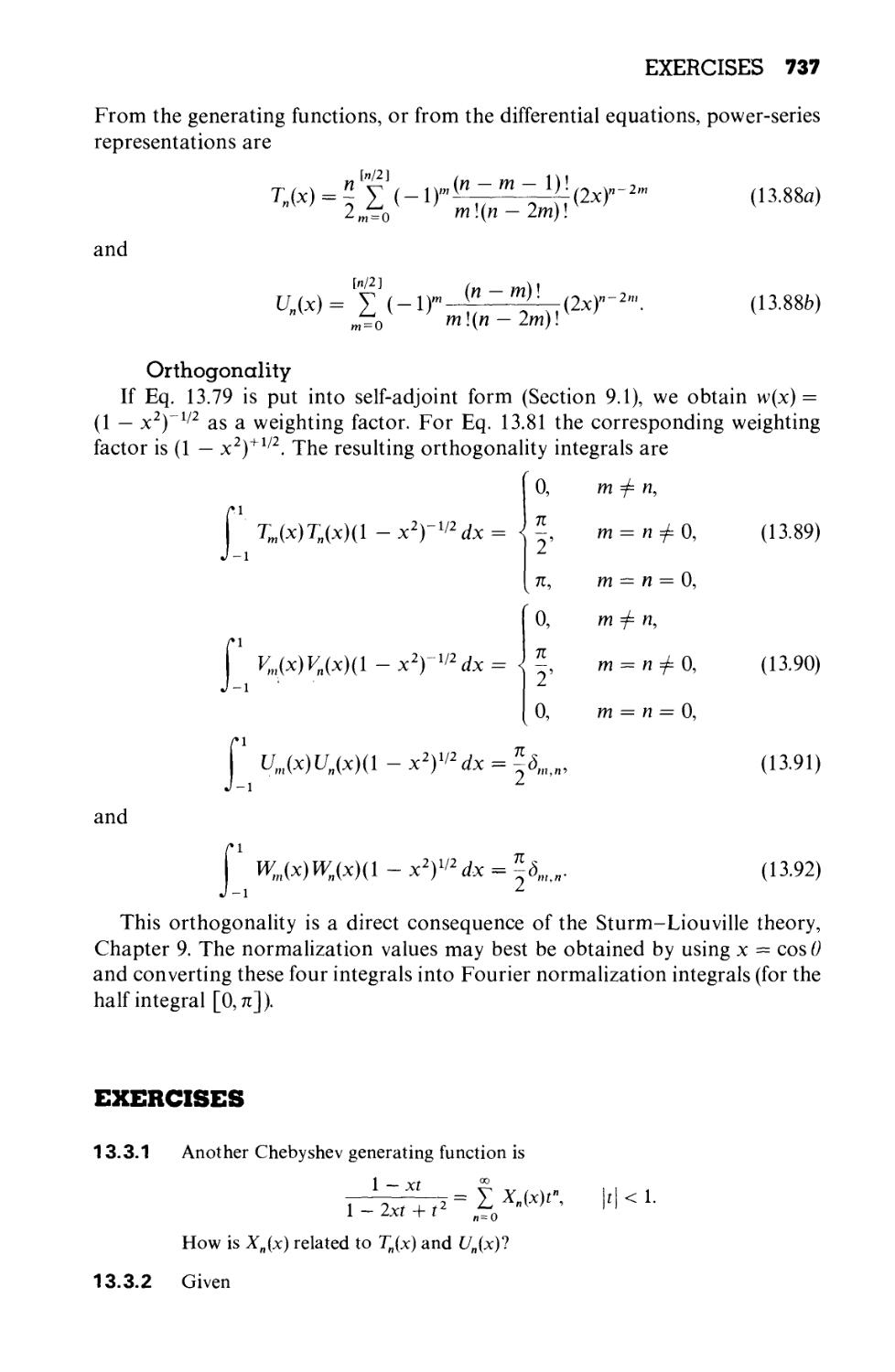

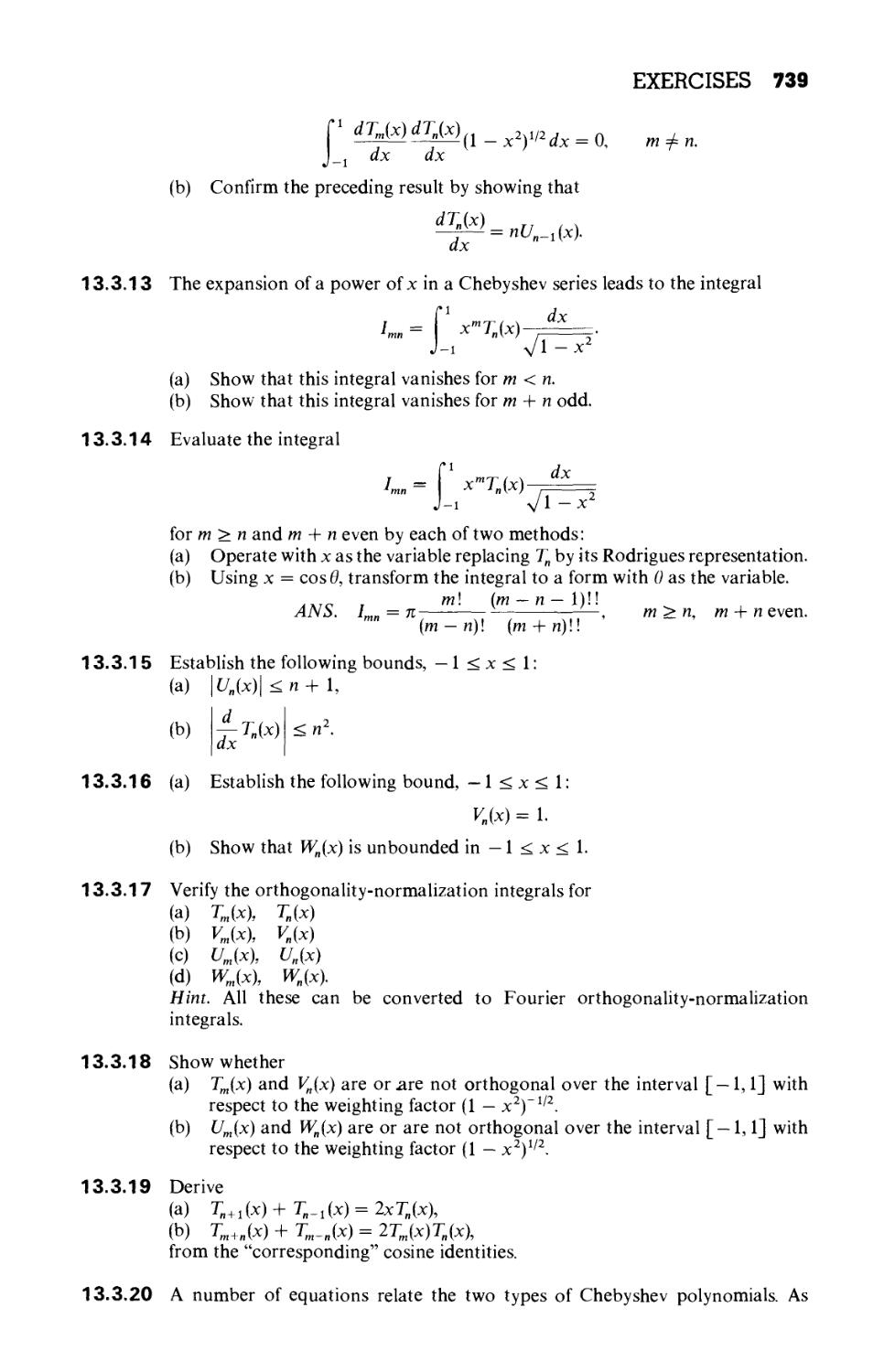

13.3 Chebyshev (Tschebyscheff) Polynomials 731

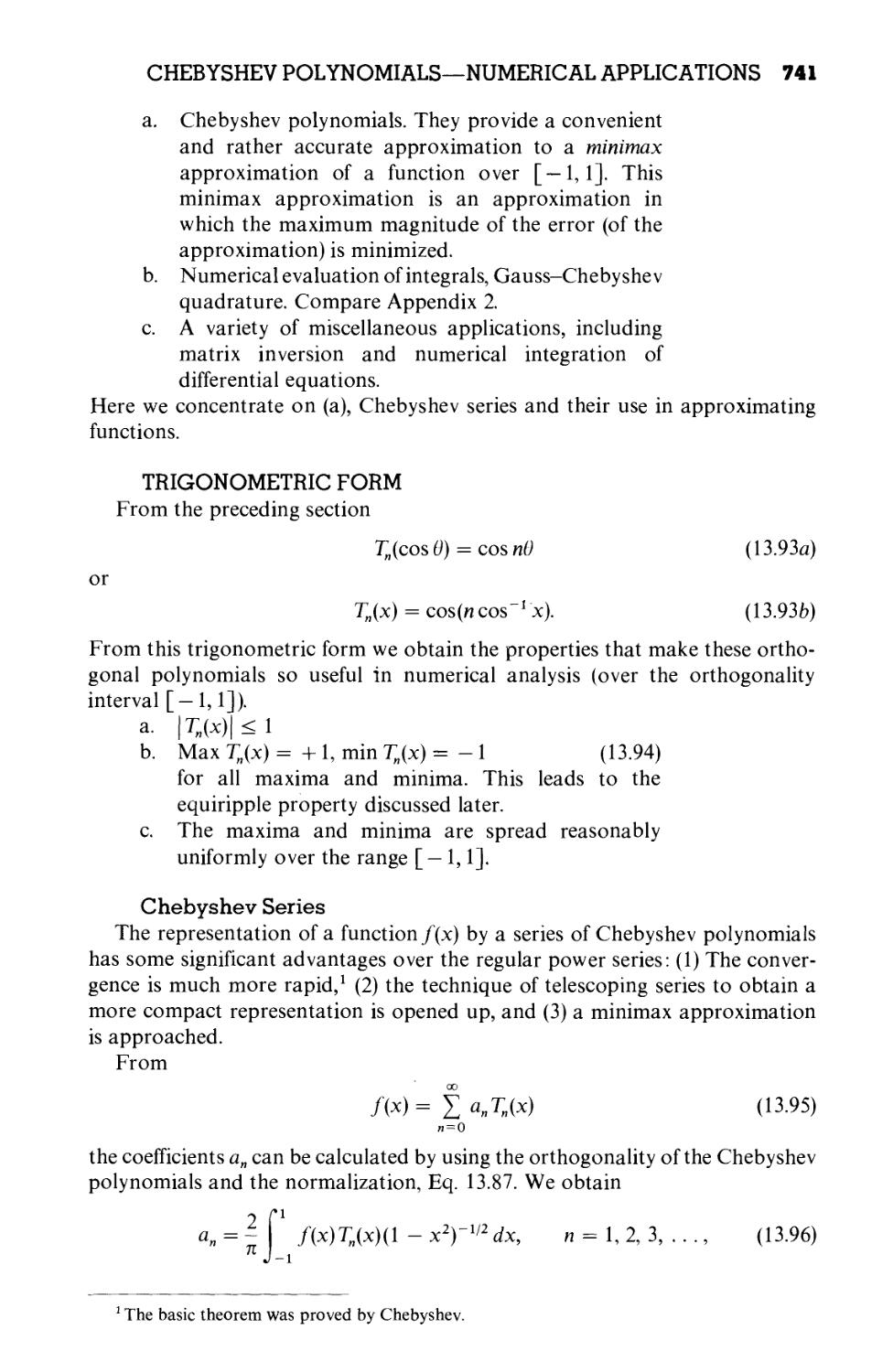

13.4 Chebyshev Polynomials—Numerical

Applications 740

13.5 Hypergeometric Functions 748

13.6 Confluent Hypergeometric Functions 753

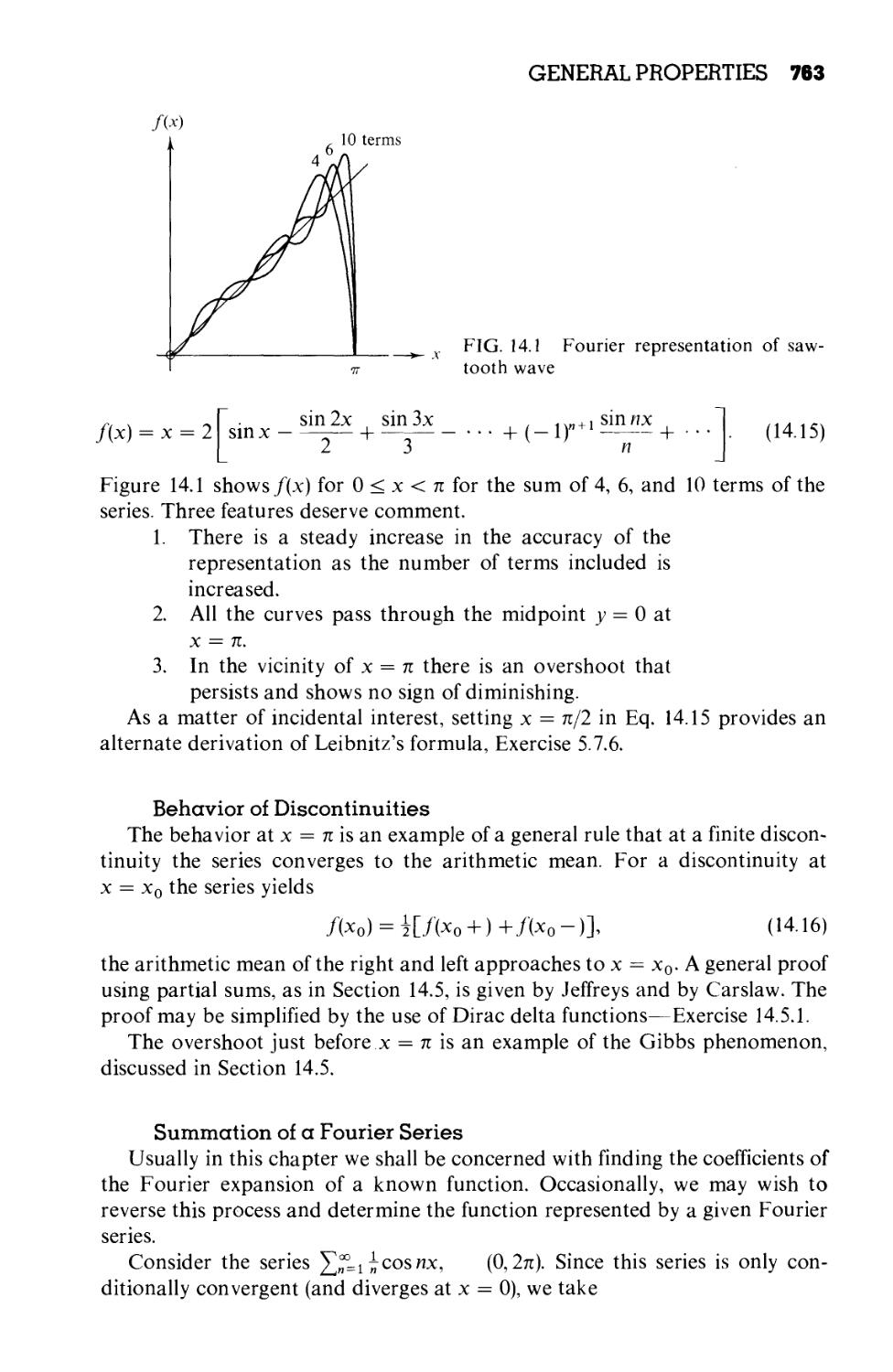

Chapter 14 FOURIER SERIES 760

14.1 General Properties 760

14.2 Advantages, Uses of Fourier Series 766

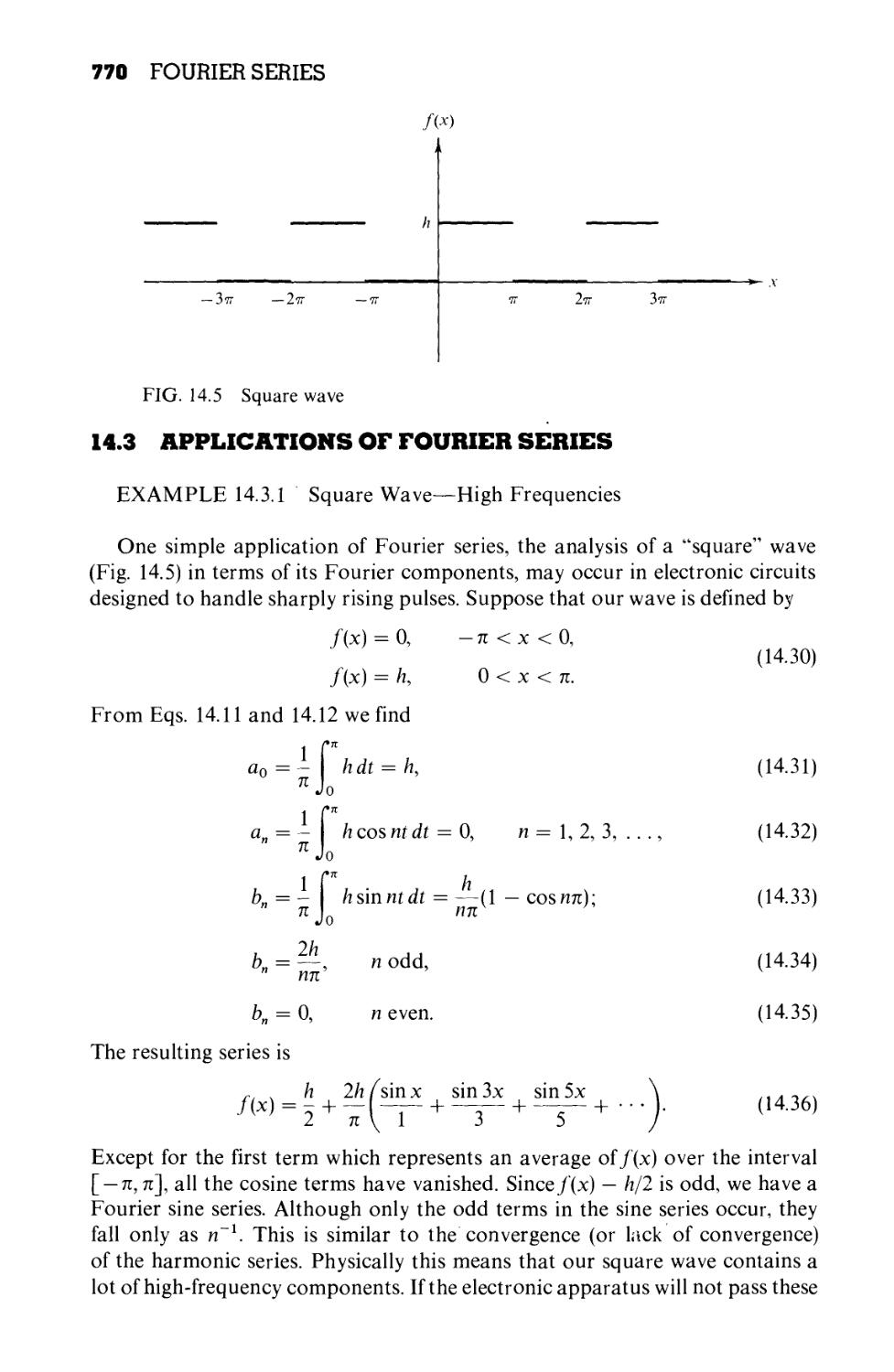

14.3 Applications of Fourier Series 770

14.4 Properties of Fourier Series 778

14.5 Gibbs Phenomenon 783

14.6 Discrete Orthogonality—Discrete Fourier

Transform 787

CONTENTS xi

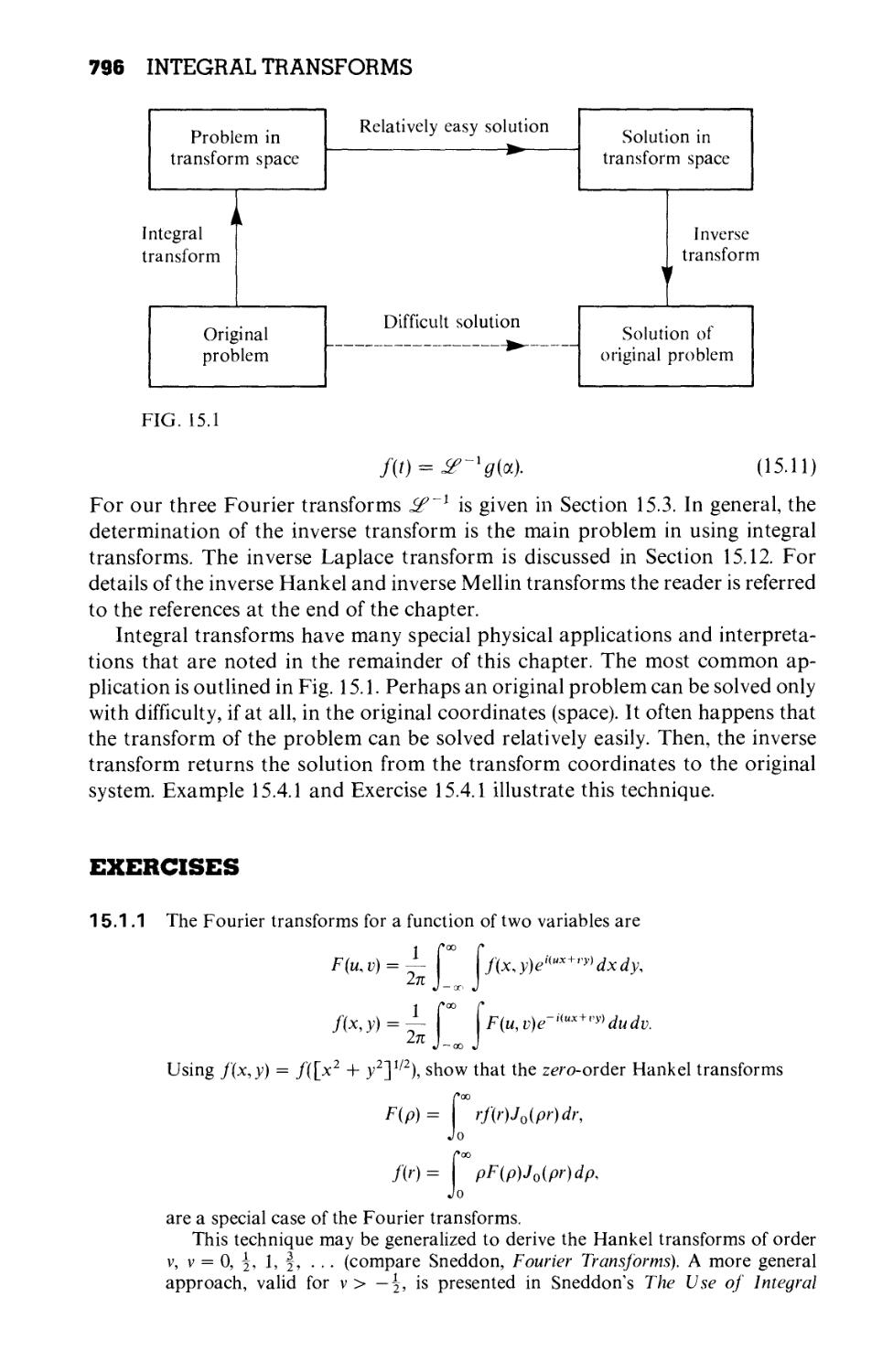

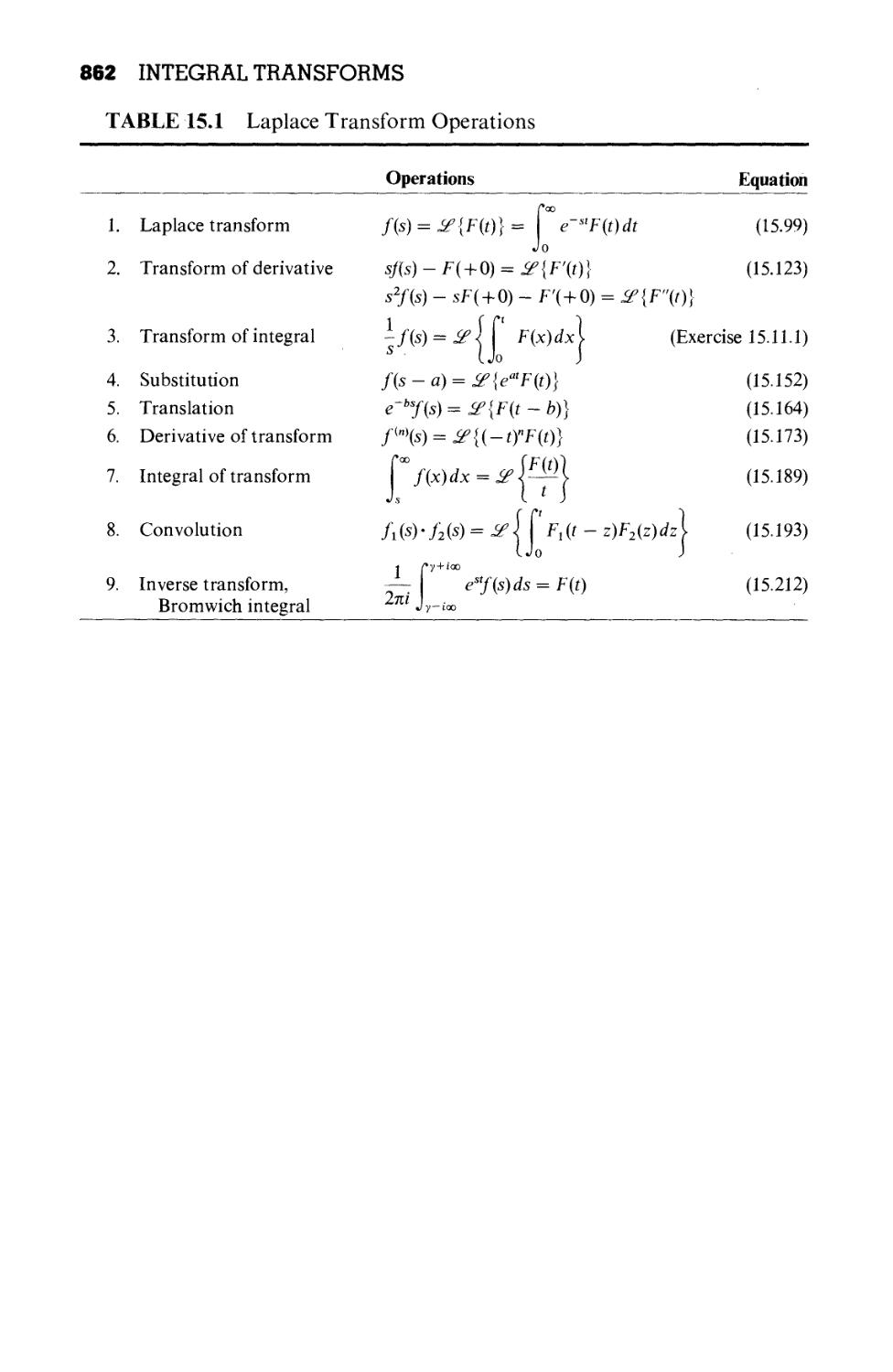

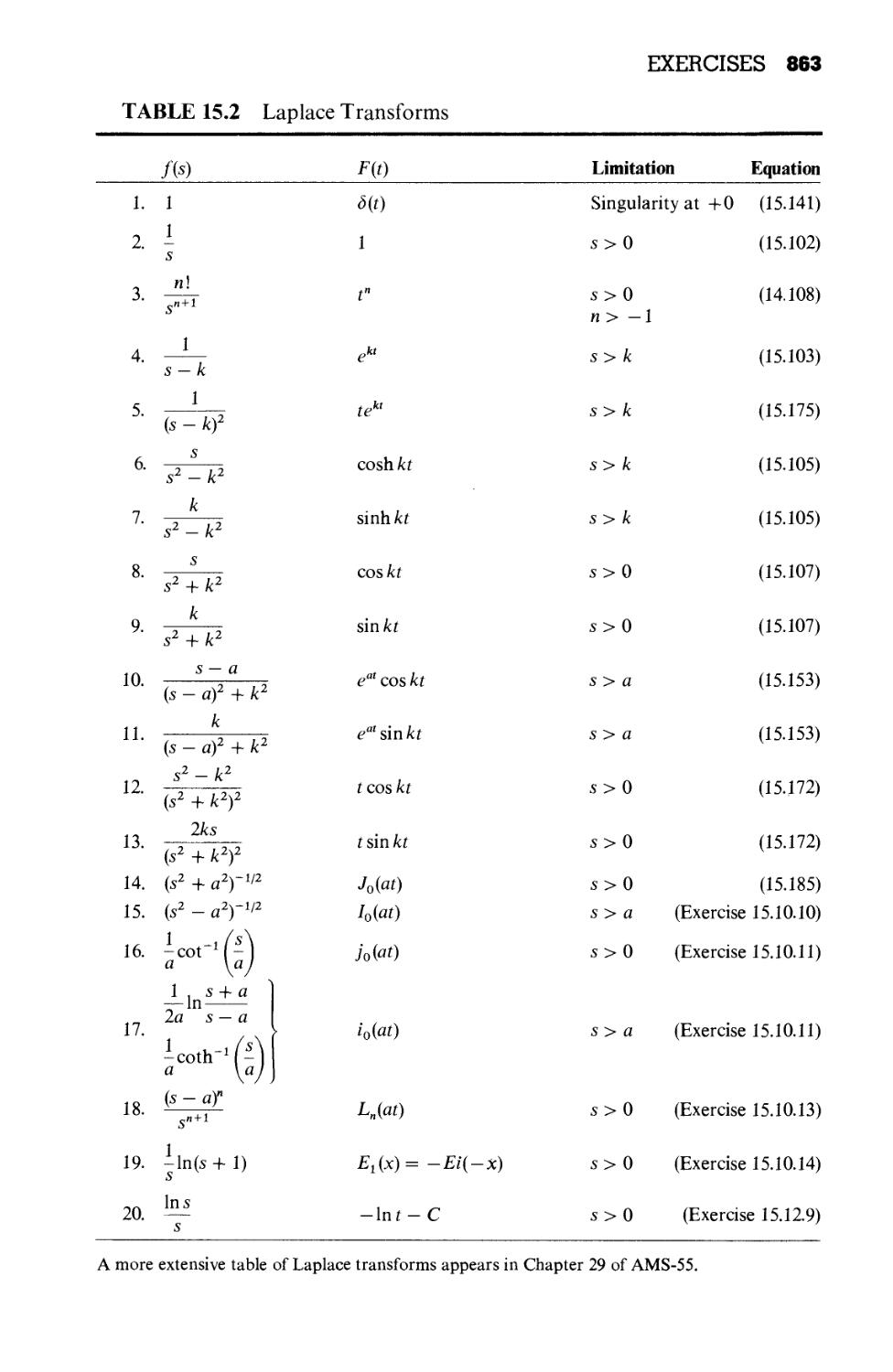

Chapter 15 INTEGRAL TRANSFORMS 794

15.1 Integral Transforms 794

15.2 Development of the Fourier Integral 797

15.3 Fourier Transforms—Inversion Theorem

15.4 Fourier Transform of Derivatives 807

15.5 Convolution Theorem 810

15.6 Momentum Representation 814

15.7 Transfer Functions 820

15.8 Elementary Laplace Transforms 824

15.9 Laplace Transform of Derivatives 831

15.10 Other Properties 838

15.11 Convolution or Faltung Theorem 849

15.12 Inverse Laplace Transformation 853

800

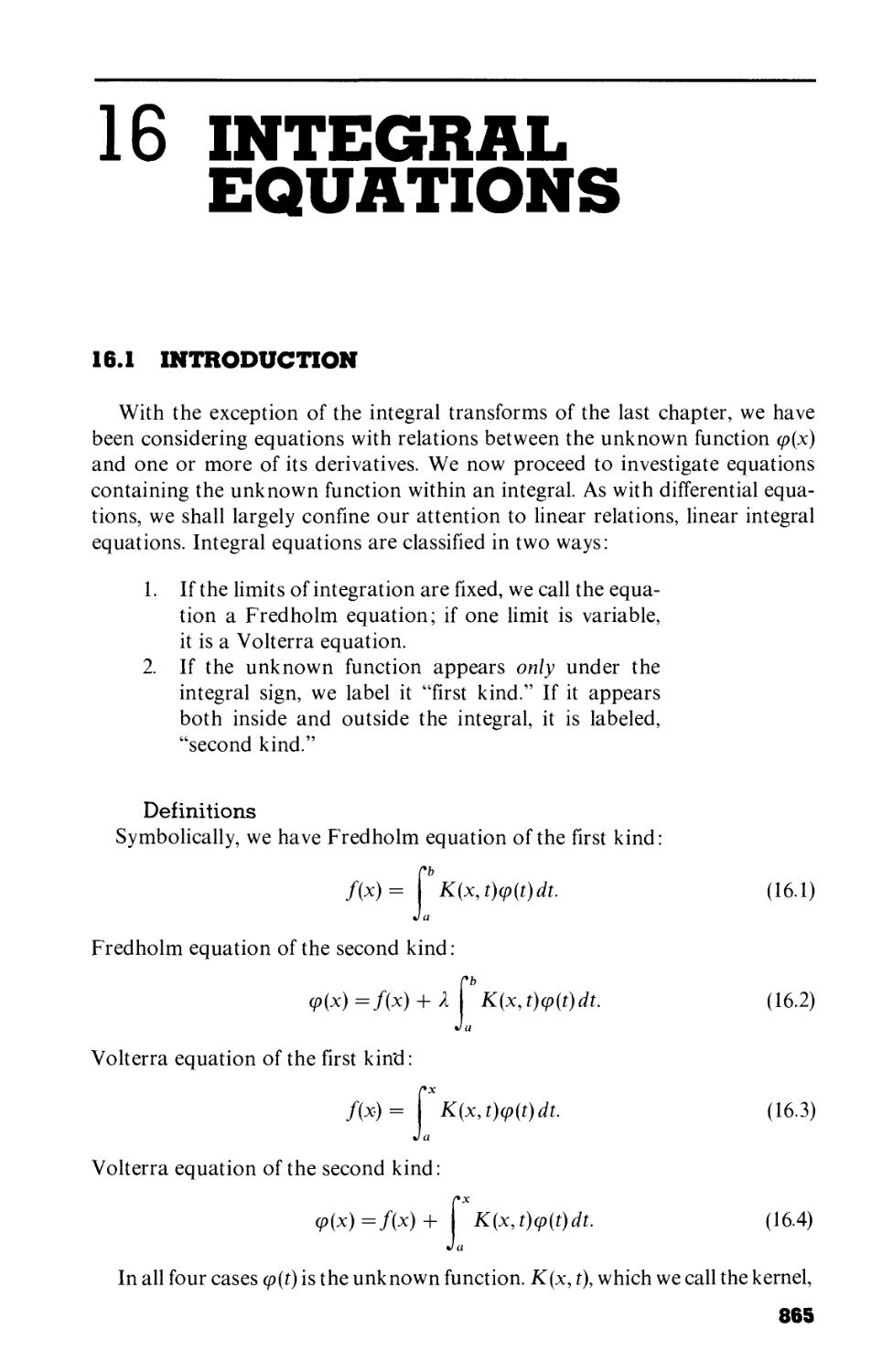

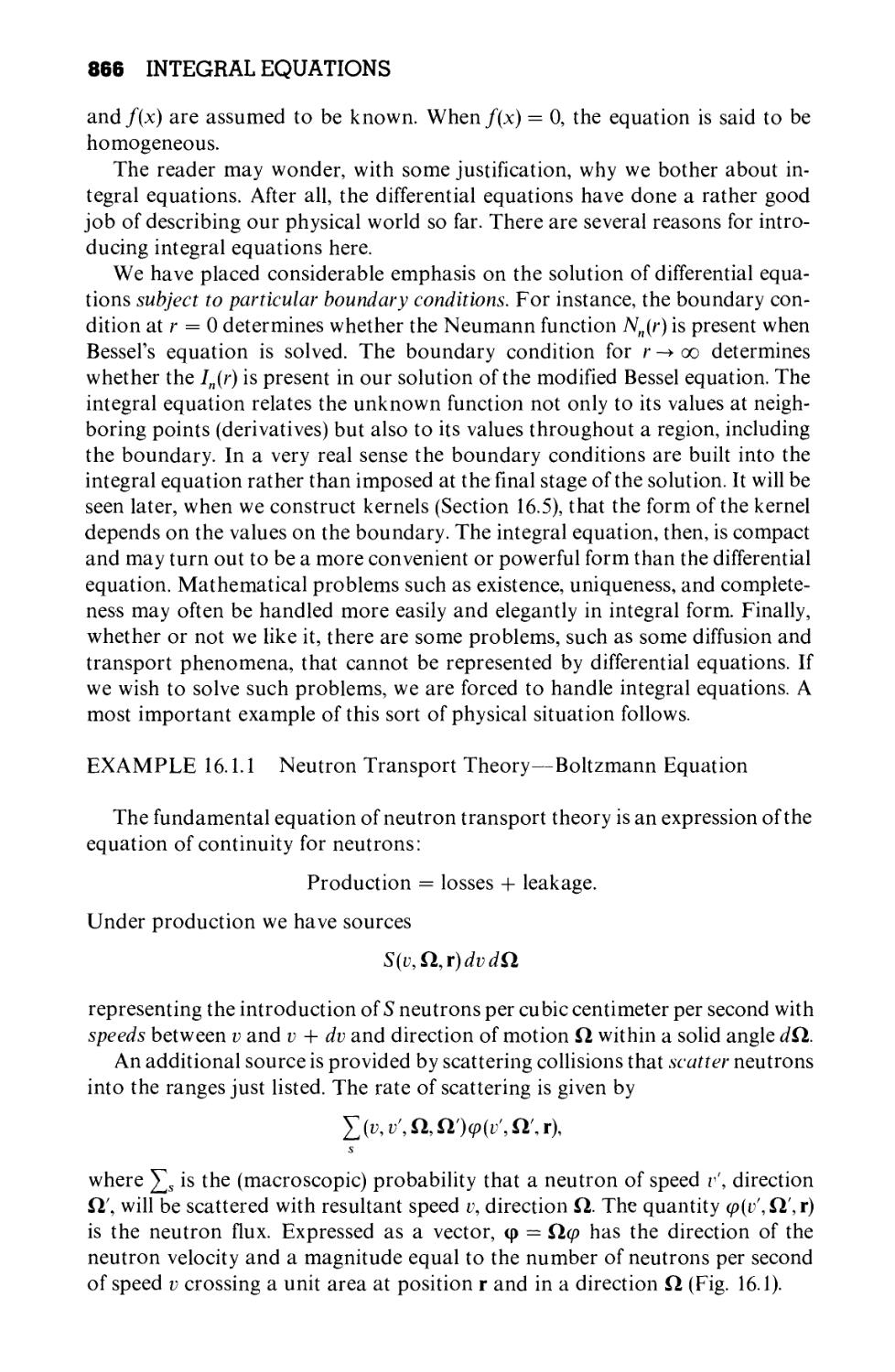

Chapter 16 INTEGRAL EQUATIONS 865

16.1 Introduction 865

16.2 Integral Transforms, Generating Functions 873

16.3 Neumann Series, Separable (Degenerate) Kernels

16.4 Hilbert-Schmidt Theory 890

16.5 Green's Functions—One Dimension 897

16.6 Green's Functions—Two and Three Dimensions

879

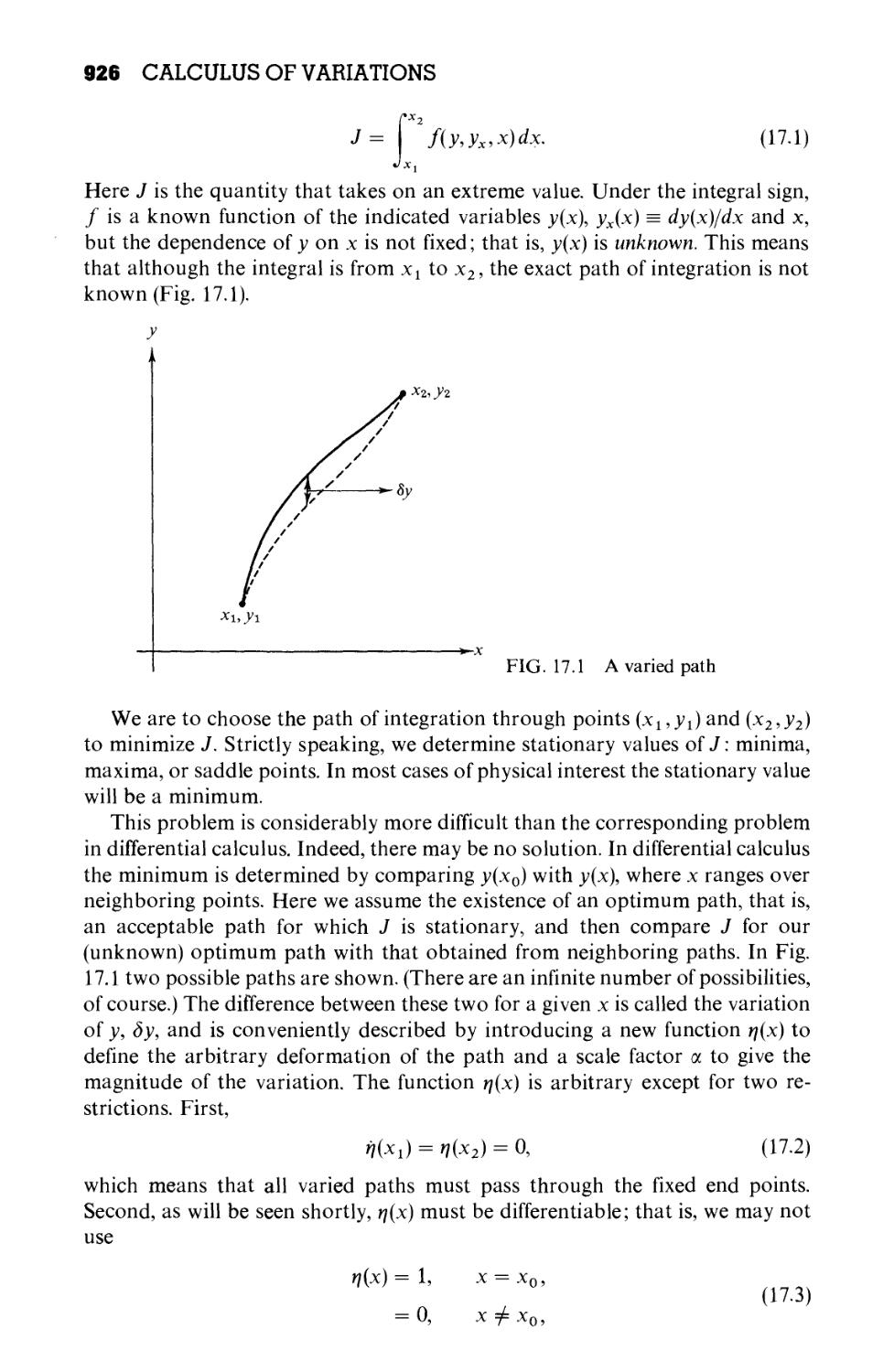

Chapter 17 CALCULUS OF VARIATIONS 925

17.1 One-Dependent and One-Independent Variable 925

17.2 Applications of the Euler Equation 930

17.3 Generalizations, Several Dependent Variables 937

17.4 Several Independent Variables 942

17.5 More Than One Dependent, More Than One

Independent Variable 944

17.6 Lagrangian Multipliers 945

17.7 Variation Subject to Constraints 950

17.8 Rayleigh-Ritz Variational Technique 957

Appendix 1 REAL ZEROS OF A FUNCTION 963

Appendix 2 GAUSSIAN QUADRATURE 968

GENERAL REFERENCES 974

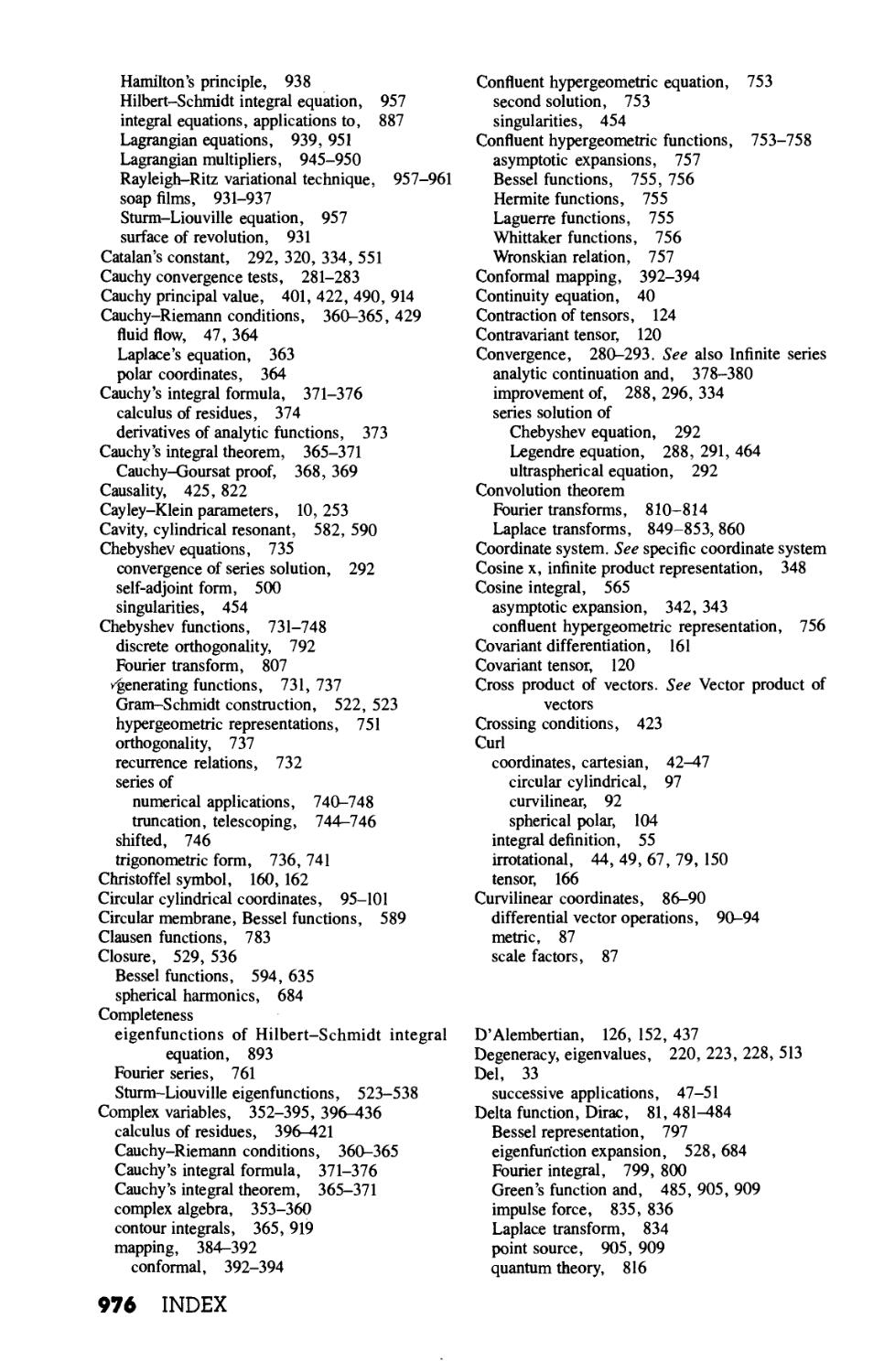

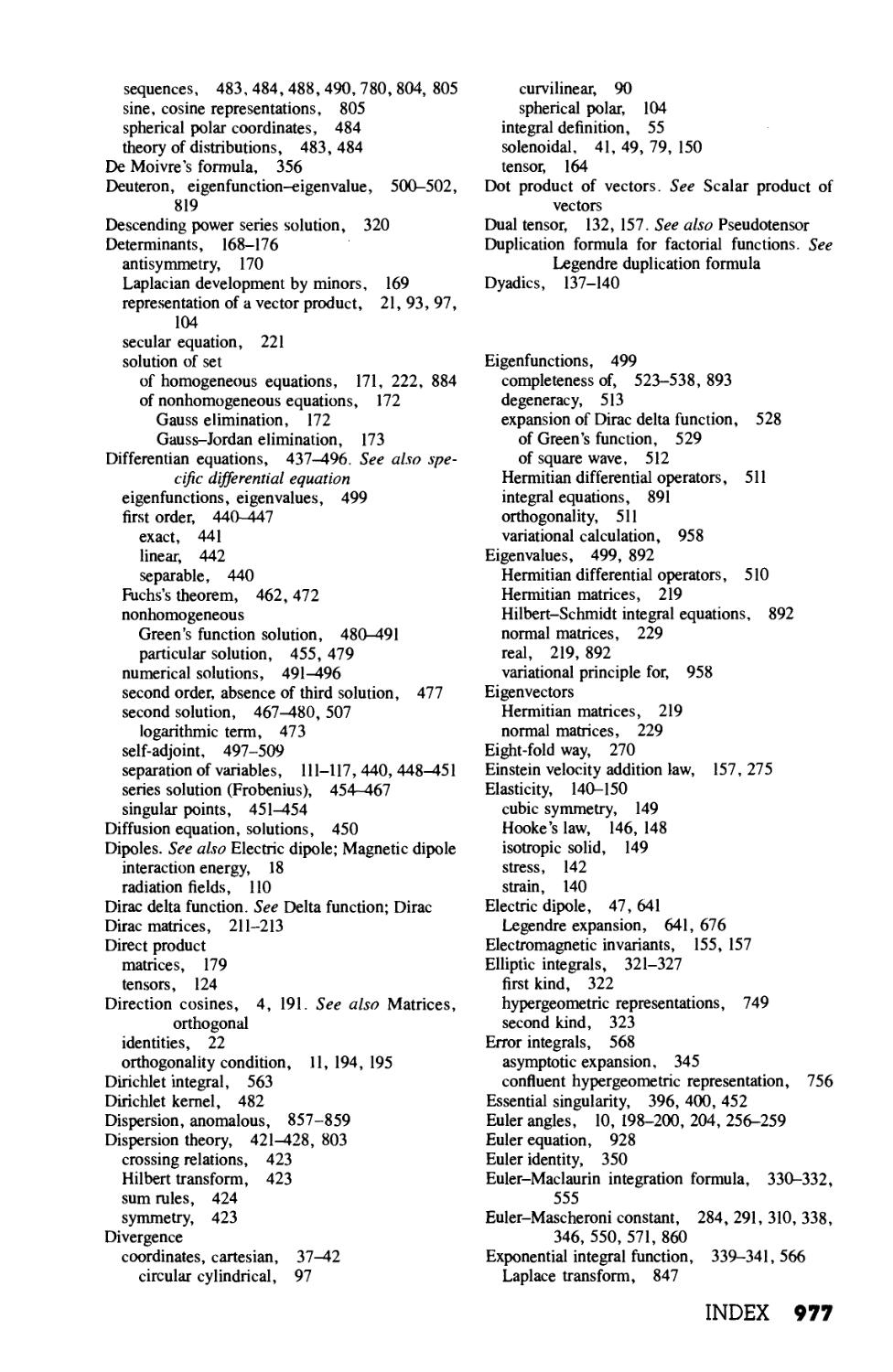

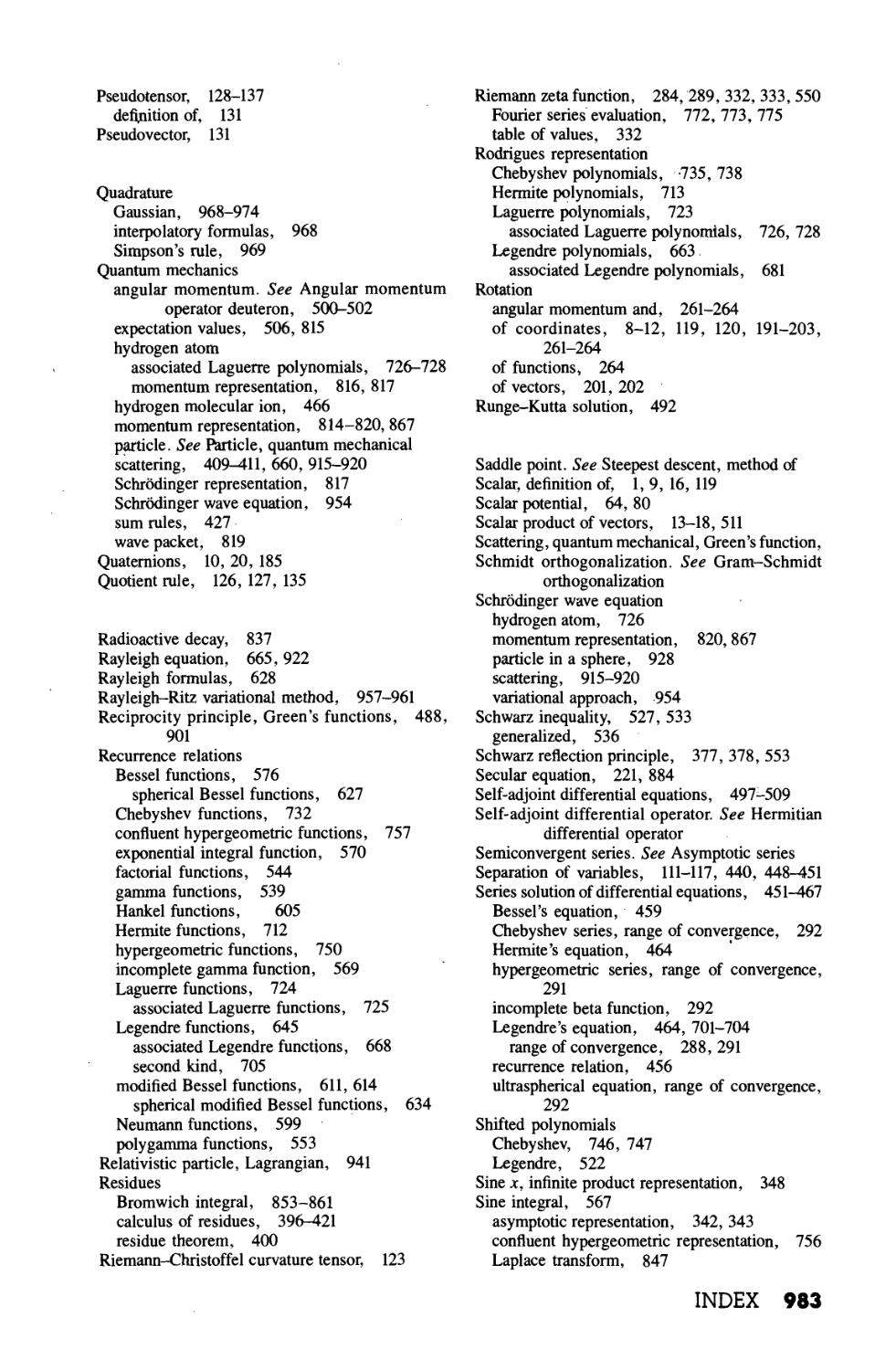

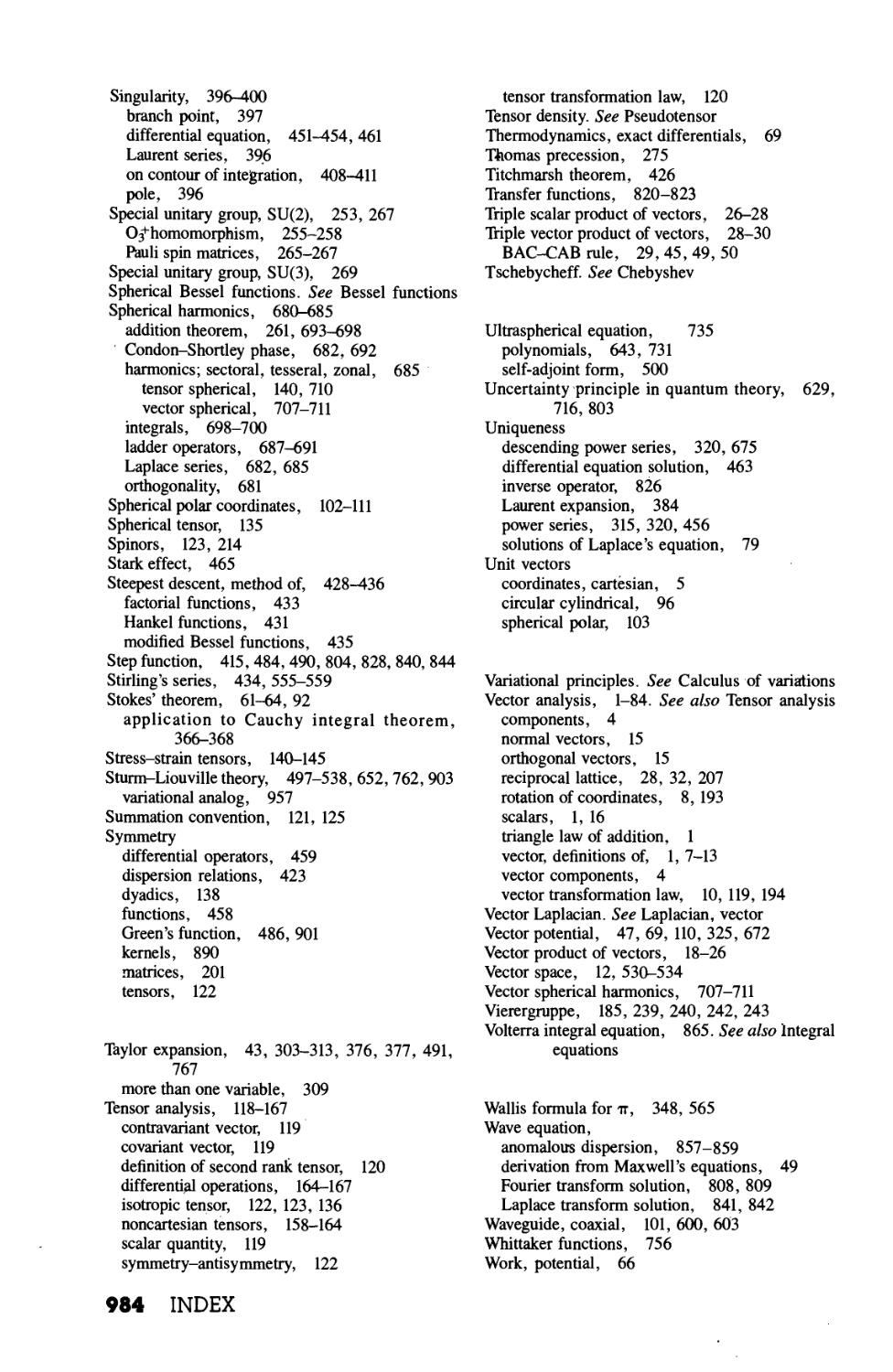

Index 975

PREFACE TO THE

THIRD EDITION

The many additions and revisions in this third edition of Mathematical

Methods for Physicists are based on 15 years of teaching from the second edition,

on the questions from current students, and on the advice of colleagues, reviewers,

and former students. Almost every section has been revised; many of the sections

have been completely rewritten. In most sections, there are new exercises, all class

tested. New sections have been added on non-Cartesian tensors, dispersion

theory, first-order differential equations, numerical application of Chebyshev

polynomials, the fast Fourier transform, and on transfer functions.

Throughout the text, I have placed significant additional emphasis on

numerical applications and on the relation of these mathematical methods to

computing and to numerical analysis.

For students studying graduate level physics, particularly theoretical physics,

a number of topics including Hermitian operators, Hilbert space, and the concept

of completeness have been expanded.


PREFACE TO THE

SECOND EDITION

This second edition of Mathematical Methods for Physicists incorporates a

number of changes, additions, and improvements made on the basis of experience

with the first edition and the helpful suggestions of a number of people. Major

revisions have been made in the sections on complex variables, Dirac delta

function, and Green's functions. New sections have been included on oblique

coordinates, Fourier-Bessel series, and angular momentum ladder operators. The

major addition is a series of sections on group theory. While these could have

been presented as a separate group theory chapter, there seemed to be several

advantages to include them in Chapter 4, Matrices. Since the group theory is

developed in terms of matrices the arrangement seems a reasonable one.


PREFACE TO THE

FIRST EDITION

Mathematical Methods for Physicists is based upon two courses in mathematics

for physicists given by the author over the past fourteen years, one at the junior

level and one at the beginning graduate level. This book is intended to provide

the student with the mathematics he needs for advanced undergraduate and

beginning graduate study in physical science and to develop a strong background

for those who will continue into the mathematics of advanced theoretical physics.

A mastery of calculus and a willingness to build on this mathematical foundation

are assumed.

This text has been organized with two basic principles in view. First, it has been

written in a form that it is hoped will encourage independent study. There are

frequent cross references but no fixed, rigid page-by-page or chapter-by-chapter

sequence is demanded.

The reader will see that mathematics as a language is beautiful and elegant.

Unfortunately, elegance all too often means elegance for the expert and obscurity

for the beginner. While still attempting to point out the intrinsic beauty of

mathematics, elegance has occasionally been reluctantly but deliberately sacrificed in

the hope of achieving greater flexibility and greater clarity for the student.

Mathematical rigor has been treated in a similar spirit. It is not stressed to the

point of becoming a mental block to the use of mathematics. Limitations are

explained, however, and warnings given against blind, uncomprehending

application of mathematical relations.

The second basic principle has been to emphasize and re-emphasize physical

examples in the text and in the exercises to help motivate the student, to illustrate

the relevance of mathematics to his science and engineering.

This principle has also played a decisive role in the selection and development

of material. The subject of differential equations, for example, is no longer a

series of trick solutions of abstract, relatively meaningless puzzles but the

solutions and general properties of the differential equations the student will most

frequently encounter in a description of our real physical world.


ACKNOWLEDGMENTS

A major revision of this sort necessarily represents the influence and help of

many people. Many of the revisions resulted from current students' requests for

clarification. Many of the additions were a response to the comments and advice

of former students. To all my students, my thanks for their help. Professor P. A.

Macklin has been most helpful with his suggestions and corrections. The final

form of this text owes much to the talents of Senior Editor Jeff Holtmeier of

Academic Press, Inc. and of Carol Kosik of Editing, Design & Production, Inc. A

special acknowledgment is owed Mrs. Jane Kelly for so patiently and

conscientiously typing this manuscript.

INTRODUCTION

Many of the physical examples used to illustrate the applications of

mathematics are taken from the fields of electromagnetic theory and quantum mechanics.

For convenience the main equations are listed below and the symbols identified.

References in these fields are also given.

ELECTROMAGNETIC THEORY

MAXWELL'S EQUATIONS (MKS UNITS—VACUUM)

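$$\nabla \cdot \mathbf{D} = \rho, \qquad \nabla \times \mathbf{E} = -\frac{\partial \mathbf{B}}{\partial t},$$

$$\nabla \cdot \mathbf{B} = 0, \qquad \nabla \times \mathbf{H} = \frac{\partial \mathbf{D}}{\partial t} + \mathbf{J}.$$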

Here E is the electric field defined in terms of force on a static charge and B the

magnetic induction defined in terms of force on a moving charge. The related

fields D and H are given (in vacuum) by

$$\mathbf{D} = \varepsilon_0 \mathbf{E} \qquad \text{and} \qquad \mathbf{B} = \mu_0 \mathbf{H}.$$

The quantity $\rho$ represents free charge density while J is the corresponding

current. The electric field E and the magnetic induction B are often expressed in

terms of the scalar potential $\varphi$ and the magnetic vector potential A.

$$\mathbf{E} = -\nabla \varphi - \frac{\partial \mathbf{A}}{\partial t}, \qquad \mathbf{B} = \nabla \times \mathbf{A}.$$

For additional details see: J. M. Marion, Classical Electromagnetic Radiation,

New York: Academic Press (1965); J. D. Jackson, Classical Electrodynamics, 2nd

ed. New York: Wiley (1975).

Note that Marion and Jackson prefer Gaussian units. A glance at the last two

texts and the great demands they make upon the student's mathematical

competence should provide considerable motivation for the study of this book.

QUANTUM MECHANICS

SCHRÖDINGER WAVE EQUATION (TIME INDEPENDENT)

$$-\frac{\hbar^2}{2m} \nabla^2 \psi + V\psi = E\psi$$

$\psi$ is the (unknown) wave function. The potential energy, often a function of

position, is denoted by V while E is the total energy of the system. The mass of the

particle being described by $\psi$ is m. $\hbar$ is Planck's constant h divided by $2\pi$. Among

the extremely large number of beginning or intermediate texts we might note: A.

Messiah, Quantum Mechanics (2 vols), New York: Wiley (1961); R. H. Dicke and J.

P. Wittke, Introduction to Quantum Mechanics, Reading, Mass.: Addison-Wesley

(1960); E. Merzbacher, Quantum Mechanics, 2nd ed. New York: Wiley (1970).

1 VECTOR

ANALYSIS

1.1 DEFINITIONS, ELEMENTARY APPROACH

In science and engineering we frequently encounter quantities that have

magnitude and magnitude only: mass, time, and temperature. These we label

scalar quantities. In contrast, many interesting physical quantities have

magnitude and, in addition, an associated direction. This second group includes

displacement, velocity, acceleration, force, momentum, and angular

momentum. Quantities with magnitude and direction are labeled vector quantities.

Usually, in elementary treatments, a vector is defined as a quantity having

magnitude and direction. To distinguish vectors from scalars, we identify vector

quantities with boldface type, that is, V.

As an historical sidelight, it is interesting to note that the vector quantities

listed are all taken from mechanics but that vector analysis was not used in the

development of mechanics and, indeed, had not been created. The need for

vector analysis became apparent only with the development of Maxwell's

electromagnetic theory and in appreciation of the inherent vector nature of

quantities such as the electric field and magnetic field.

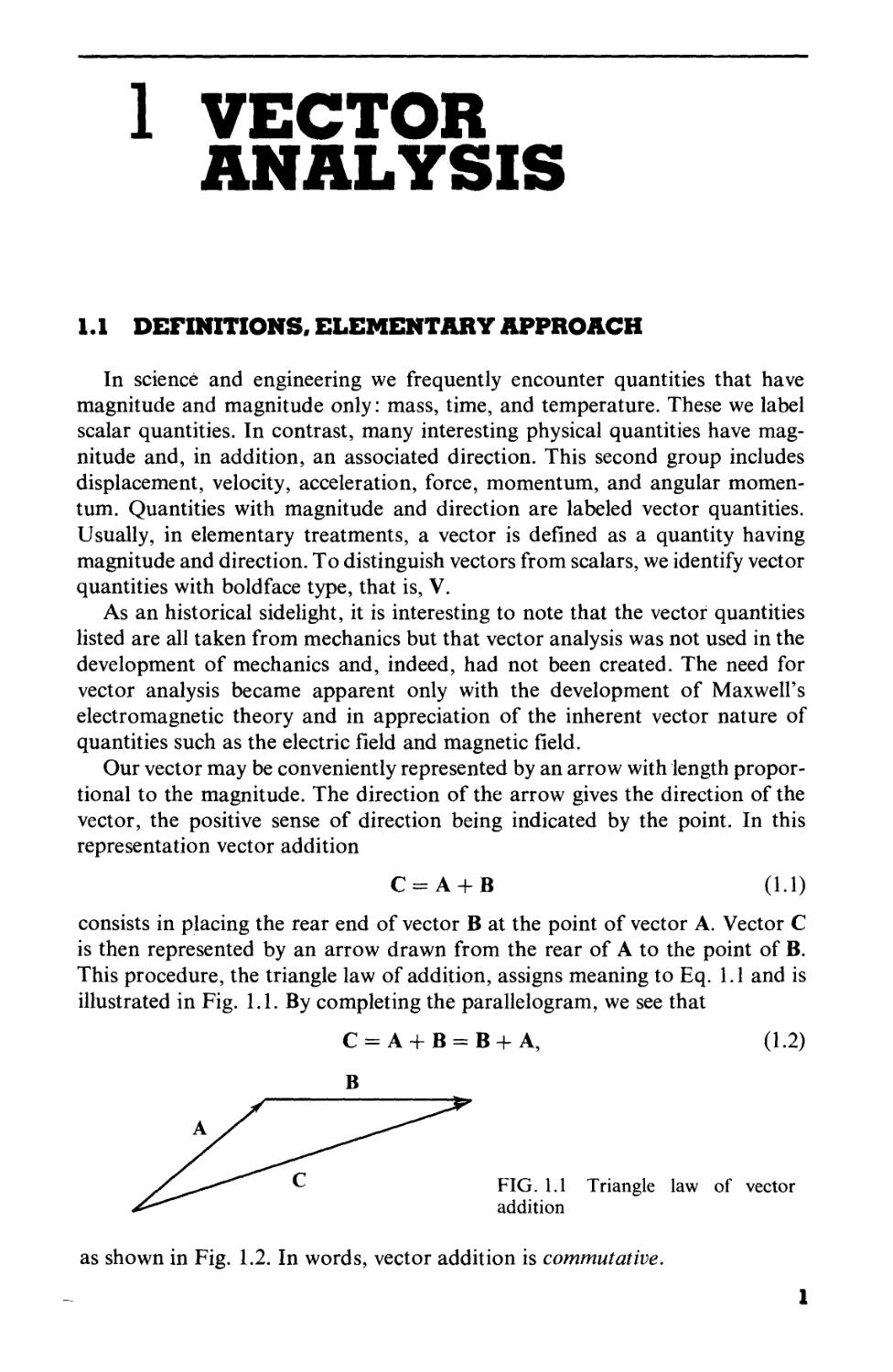

Our vector may be conveniently represented by an arrow with length

proportional to the magnitude. The direction of the arrow gives the direction of the

vector, the positive sense of direction being indicated by the point. In this

representation vector addition

$$\mathbf{C} = \mathbf{A} + \mathbf{B} \tag{1.1}$$

consists in placing the rear end of vector B at the point of vector A. Vector C

is then represented by an arrow drawn from the rear of A to the point of B.

This procedure, the triangle law of addition, assigns meaning to Eq. 1.1 and is

illustrated in Fig. 1.1. By completing the parallelogram, we see that

$$\mathbf{C} = \mathbf{A} + \mathbf{B} = \mathbf{B} + \mathbf{A}, \tag{1.2}$$

as shown in Fig. 1.2. In words, vector addition is commutative.

FIG. 1.1 Triangle law of vector addition

FIG. 1.2 Parallelogram law of vector addition

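For the sum of three vectors

$$\mathbf{D} = \mathbf{A} + \mathbf{B} + \mathbf{C},$$

Fig. 1.3, we may first add A and B

$$\mathbf{A} + \mathbf{B} = \mathbf{E}.$$

Then this sum is added to C

$$\mathbf{D} = \mathbf{E} + \mathbf{C}.$$

Similarly, we may first add B and C

$$\mathbf{B} + \mathbf{C} = \mathbf{F}.$$

Then

$$\mathbf{D} = \mathbf{A} + \mathbf{F}.$$

In terms of the original expression,

$$(\mathbf{A} + \mathbf{B}) + \mathbf{C} = \mathbf{A} + (\mathbf{B} + \mathbf{C}).$$

Vector addition is associative.

FIG. 1.3 Vector addition is associative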

A direct physical example of the parallelogram addition law is provided by

a weight suspended by two cords. If the junction point (O in Fig. 1.4) is in

equilibrium, the vector sum of the two forces F1 and F2 must just cancel the

downward force of gravity, F3. Here the parallelogram addition law is subject

to immediate experimental verification.¹

¹ Strictly speaking the parallelogram addition was introduced as a definition.

Experiments show that if we assume that the forces are vector quantities and

we combine them by parallelogram addition the equilibrium condition of

zero resultant force is satisfied.

FIG. 1.4 Equilibrium of forces. F1 + F2 = -F3

Subtraction may be handled by defining the negative of a vector as a vector

of the same magnitude but with reversed direction. Then

$$\mathbf{A} - \mathbf{B} = \mathbf{A} + (-\mathbf{B}).$$

In Fig. 1.3

$$\mathbf{A} = \mathbf{E} - \mathbf{B}.$$

Note that the vectors are treated as geometrical objects that are independent

of any coordinate system. Indeed, we have not yet introduced a coordinate

system. This concept of independence of a preferred coordinate system is

developed in considerable detail in the next section.

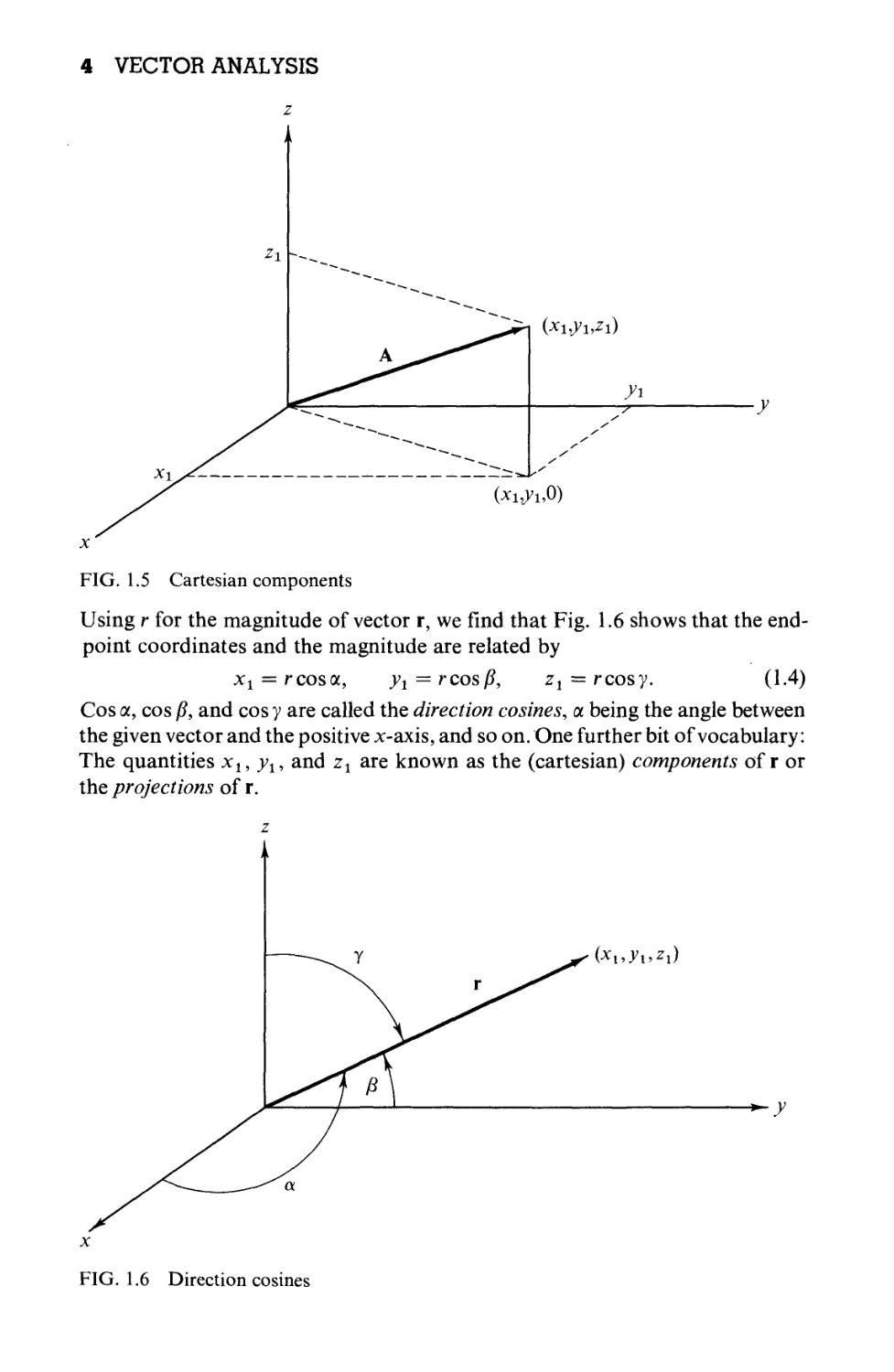

The representation of vector A by an arrow suggests a second possibility.

Arrow A (Fig. 1.5), starting from the origin,² terminates at the point $(x_1, y_1, z_1)$.

Thus, if we agree that the vector is to start at the origin, the positive end may

be specified by giving the cartesian coordinates $(x_1, y_1, z_1)$ of the arrow head.

Although A could have represented any vector quantity (momentum,

electric field, etc.), one particularly important vector quantity, the displacement

from the origin to the point $(x_1, y_1, z_1)$, is denoted by the special symbol r. We

then have a choice of referring to the displacement as either the vector r or the

collection $(x_1, y_1, z_1)$, the coordinates of its end point.

$$\mathbf{r} \leftrightarrow (x_1, y_1, z_1). \tag{1.3}$$

² The reader will see that we could start from any point in our cartesian

reference frame; we choose the origin for simplicity.

FIG. 1.5 Cartesian components

Using r for the magnitude of vector r, Fig. 1.6 shows that the endpoint

coordinates and the magnitude are related by

$$x_1 = r\cos\alpha, \qquad y_1 = r\cos\beta, \qquad z_1 = r\cos\gamma. \tag{1.4}$$

Cos $\alpha$, cos $\beta$, and cos $\gamma$ are called the direction cosines, $\alpha$ being the angle between

the given vector and the positive x-axis, and so on. One further bit of vocabulary:

The quantities $x_1$, $y_1$, and $z_1$ are known as the (cartesian) components of r or

the projections of r.
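As a brief numerical illustration of Eq. 1.4, the following Python sketch computes the direction cosines of a displacement vector and checks that their squares sum to one, as the Pythagorean theorem requires. The function name `direction_cosines` and the sample end point (1, 2, 2) are assumptions chosen only for illustration; they do not come from the text.

```python
import math

def direction_cosines(x, y, z):
    """Return (cos alpha, cos beta, cos gamma) for the vector (x, y, z)."""
    r = math.sqrt(x * x + y * y + z * z)  # magnitude of the vector
    if r == 0.0:
        raise ValueError("the zero vector has no direction")
    # Eq. 1.4 solved for the cosines: cos(alpha) = x / r, and so on.
    return (x / r, y / r, z / r)

# Hypothetical end point, chosen so that r = 3.
cos_a, cos_b, cos_g = direction_cosines(1.0, 2.0, 2.0)
sum_of_squares = cos_a**2 + cos_b**2 + cos_g**2
print(cos_a, cos_b, cos_g)
print(sum_of_squares)  # equals 1 up to rounding error
```

Only two of the three direction cosines are independent, since the third is fixed (up to sign) by the requirement that the squares sum to one.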

FIG. 1.6 Direction cosines


If we proceed in the same manner, any vector A may be resolved into its

components (or projected onto the coordinate axes) to yield

$$A_x = A\cos\alpha, \tag{1.5}$$

in which a is the angle between A and the positive x-axis. Again, we may choose

to refer to the vector as a single quantity A or to its components (Ax,Ay,Az).

Note that the subscript x in Ax denotes the x component and not a dependence

on the variable x. Ax may be a function of x, y, and z as Ax(x,y,z). The choice

between using A or its components (Ax,Ay,Az) is essentially a choice between

a geometric or an algebraic representation. In the language of group theory

(Chapter 4), the two representations are isomorphic.

Use either representation at your convenience. The geometric "arrow in

space" may aid in visualization. The algebraic set of components is usually

much more suitable for precise numerical or algebraic calculations.

Vectors enter physics in two distinct forms. (1) Vector A may represent a

single force acting at a single point. The force of gravity acting at the center of

gravity illustrates this form. (2) Vector A may be defined over some extended

region; that is, A and its components may be functions of position: Ax =

Ax(x,y,z), and so on. Examples of this sort include the velocity of a fluid

varying from point to point over a given volume and electric and magnetic

fields. Some writers distinguish these two cases by referring to the vector

defined over a region as a vector field. The concept of the vector defined over a

region and being a function of position will be extremely important in Section

1.2 and in later sections where we differentiate and integrate vectors.

At this stage it is convenient to introduce unit vectors along each of the

coordinate axes. Let i be a vector of unit magnitude pointing in the positive

x-direction, j, a vector of unit magnitude in the positive y-direction, and k, a

vector of unit magnitude in the positive z-direction. Then $\mathbf{i}A_x$ is a vector with

magnitude equal to $A_x$ and in the positive x-direction. By vector addition

$$\mathbf{A} = \mathbf{i}A_x + \mathbf{j}A_y + \mathbf{k}A_z, \tag{1.6}$$

which states that a vector equals the vector sum of its components. Note that if

A vanishes, all of its components must vanish individually; that is, if

$$\mathbf{A} = 0, \quad \text{then} \quad A_x = A_y = A_z = 0.$$

Finally, by the Pythagorean theorem, the magnitude of vector A is

$$A = (A_x^2 + A_y^2 + A_z^2)^{1/2}. \tag{1.7a}$$

This resolution of a vector into its components can be carried out in a variety

of coordinate systems, as shown in Chapter 2. Here we restrict ourselves to

cartesian coordinates.

Equation 1.6 is actually an assertion that the three unit vectors i, j, and k

span our real three-dimensional space: Any constant vector may be written as

a linear combination of i, j, and k. Since i, j, and k are linearly independent

(no one is a linear combination of the other two), they form a basis for the real

three-dimensional space.

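As a replacement of the graphical technique, addition and subtraction of

vectors may now be carried out in terms of their components. For $\mathbf{A} = \mathbf{i}A_x + \mathbf{j}A_y + \mathbf{k}A_z$

and $\mathbf{B} = \mathbf{i}B_x + \mathbf{j}B_y + \mathbf{k}B_z$,

$$\mathbf{A} \pm \mathbf{B} = \mathbf{i}(A_x \pm B_x) + \mathbf{j}(A_y \pm B_y) + \mathbf{k}(A_z \pm B_z). \tag{1.7b}$$

EXAMPLE 1.1.1

Let

$$\mathbf{A} = 6\mathbf{i} + 4\mathbf{j} + 3\mathbf{k}$$

$$\mathbf{B} = 2\mathbf{i} - 3\mathbf{j} - 3\mathbf{k}.$$

Then by Eq. 1.7b

$$\mathbf{A} + \mathbf{B} = 8\mathbf{i} + \mathbf{j}$$

and

$$\mathbf{A} - \mathbf{B} = 4\mathbf{i} + 7\mathbf{j} + 6\mathbf{k}.$$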
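The component rule used in Example 1.1.1 can be sketched in a few lines of Python. Vectors are stored here as plain tuples of their (x, y, z) components; the helper names `add` and `sub` are assumptions made for this illustration and are not notation from the text.

```python
def add(a, b):
    """Componentwise sum of two vectors given as tuples."""
    return tuple(ai + bi for ai, bi in zip(a, b))

def sub(a, b):
    """Componentwise difference of two vectors given as tuples."""
    return tuple(ai - bi for ai, bi in zip(a, b))

# Components of A = 6i + 4j + 3k and B = 2i - 3j - 3k from Example 1.1.1.
A = (6, 4, 3)
B = (2, -3, -3)

print(add(A, B))  # (8, 1, 0), that is, 8i + j
print(sub(A, B))  # (4, 7, 6), that is, 4i + 7j + 6k

# Commutativity of vector addition shows up as equality of the component tuples.
print(add(A, B) == add(B, A))  # True
```

Working with components turns each vector equation into three scalar equations, one per coordinate axis, which is why the sum and difference can be computed independently along x, y, and z.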

It should be emphasized here that the unit vectors i, j, and k are used for

convenience. They are not essential; we can describe vectors and use them

entirely in terms of their components: $\mathbf{A} \leftrightarrow (A_x, A_y, A_z)$. This is the approach of

the two more powerful, more sophisticated definitions of vector discussed in

the next section. However, i, j, and k emphasize the direction, which will be

useful in Chapter 2.

So far we have defined the operations of addition and subtraction of vectors.

Three varieties of multiplication are defined on the basis of their applicability:

a scalar or inner product in Section 1.3, a vector product peculiar to

three-dimensional space in Section 1.4, and a direct or outer product yielding a

second-rank tensor in Section 3.2. Division by a vector is not defined. See

Exercises 4.2.21 and 22.

EXERCISES

1.1.1 Show how to find A and B, given A + B and A - B.

1.1.2 The vector A whose magnitude is 10 units makes equal angles with the coordinate

axes. Find $A_x$, $A_y$, and $A_z$.

1.1.3 Calculate the components of a unit vector that lies in the xy-plane and makes

equal angles with the positive directions of the x- and y-axes.

1.1.4 The velocity of sailboat A relative to sailboat B, $\mathbf{v}_{rel}$, is defined by the equation

$\mathbf{v}_{rel} = \mathbf{v}_A - \mathbf{v}_B$, where $\mathbf{v}_A$ is the velocity of A and $\mathbf{v}_B$ is the velocity of B. Determine

the velocity of A relative to B if

$\mathbf{v}_A$ = 30 km/hr east

$\mathbf{v}_B$ = 40 km/hr north

ANS. $v_{rel}$ = 50 km/hr, 53.1° south of east.

1.1.5 A sailboat sails for 1 hr at 4 km/hr (relative to the water) on a steady compass

heading of 40° east of north. The sailboat is simultaneously carried along by a

current. At the end of the hour the boat is 6.12 km from its starting point. The

line from its starting point to its location lies 60° east of north. Find the x

(easterly) and y (northerly) components of the water's velocity.

ANS. $v_{east}$ = 2.73 km/hr, $v_{north} \approx 0$ km/hr.

1.1.6 A vector equation can be reduced to the form A = B. From this show that the

one vector equation is equivalent to three scalar equations.

Assuming the validity of Newton's second law F = ma as a vector equation,

this means that ax depends only on Fx and is independent of Fy and Fz.

1.1.7 The vertices of a triangle A, B, and C are given by the points (-1, 0, 2), (0, 1, 0),

and (1, -1, 0), respectively. Find point D so that the figure ABDC forms a plane

parallelogram.

ANS. (2, 0, -2).

1.1.8 A triangle is defined by the vertices of three vectors, A, B, and C that extend

from the origin. In terms of A, B, and C show that the vector sum of the successive

sides of the triangle (AB + BC + CA) is zero.

1.1.9 A sphere of radius a is centered at a point $\mathbf{r}_1$.

(a) Write out the algebraic equation for the sphere.

(b) Write out a vector equation for the sphere.

ANS. (a) $(x - x_1)^2 + (y - y_1)^2 + (z - z_1)^2 = a^2$.

(b) $\mathbf{r} = \mathbf{r}_1 + \mathbf{a}$.

(a takes on all directions but has a fixed magnitude, a.)

1.1.10 A corner reflector is formed by three mutually perpendicular reflecting surfaces.

Show that a ray of light incident upon the corner reflector (striking all three

surfaces) is reflected back along a line parallel to the line of incidence.

Hint. Consider the effect of a reflection on the components of a vector describing

the direction of the light ray.

1.1.11 Hubble's law. Hubble found that distant galaxies are receding with a velocity

proportional to their distance from where we are on Earth. For the ith galaxy

$$\mathbf{v}_i = H_0 \mathbf{r}_i$$

with us at the origin. Show that this recession of the galaxies from us does not

imply that we are at the center of the universe. Specifically, take the galaxy

at $\mathbf{r}_1$ as a new origin and show that Hubble's law is still obeyed.
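The answers quoted for the exercises can be checked numerically once each velocity is resolved into components. The sketch below does this for Exercise 1.1.5 only, taking x as east and y as north as the exercise states. The helper name `from_heading` is an assumption made for this illustration, and the code is a check on the stated answer rather than a worked solution from the text.

```python
import math

def from_heading(speed, degrees_east_of_north):
    """Return (east, north) components for a heading measured from north toward east."""
    theta = math.radians(degrees_east_of_north)
    return (speed * math.sin(theta), speed * math.cos(theta))

# Boat velocity relative to the water: 4 km/hr, 40 degrees east of north.
boat = from_heading(4.0, 40.0)
# Net velocity relative to the ground: 6.12 km covered in 1 hr, 60 degrees east of north.
total = from_heading(6.12, 60.0)

# The water's velocity is the vector difference, taken component by component.
water = (total[0] - boat[0], total[1] - boat[1])
print(water)  # east component near 2.73 km/hr, north component near zero
```

The same pattern (resolve, then subtract componentwise) applies to the relative velocity in Exercise 1.1.4.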

1.2 ADVANCED DEFINITIONS*

*This section is optional. It is not essential for the remaining sections of this

chapter.

In the preceding section vectors were defined or represented in two

equivalent ways: (1) geometrically by specifying magnitude and direction, as with an

arrow, and (2) algebraically by specifying the components relative to cartesian

coordinate axes. The second definition is adequate for the vector analysis of

this chapter. In this section two more refined, sophisticated, and powerful

definitions are presented. First, the vector field is defined in terms of the

behavior of its components under rotation of the coordinate axes. This

transformation theory approach leads into the tensor analysis of Chapter 3. Second,

the component definition of Section 1.1 is refined and generalized according to

the mathematician's concepts of vector and vector space. This approach leads

to function spaces including the Hilbert space (Section 9.4).

ROTATION OF THE COORDINATE AXES

The definition of vector as a quantity with magnitude and direction breaks

down in advanced work. On the one hand, we encounter quantities, such as

elastic constants and index of refraction in anisotropic crystals, that have

magnitude and direction but which are not vectors. On the other hand, our

naive approach is awkward to generalize, to extend to more complex quantities.

We seek a new definition of vector field, using our displacement vector r as a

prototype.

There is an important physical basis for our development of a new definition.

We describe our physical world by mathematics, but it and any physical

predictions we may make must be independent of our mathematical analysis.

Some writers compare the physical system to a building and the mathematical

analysis to the scaffolding used to construct the building. In the end the

scaffolding is stripped off and the building stands.

In our specific case we assume that space is isotropic; that is, there is no

preferred direction or all directions are equivalent. Then the physical system

being analyzed or the physical law being enunciated cannot and must not

depend on our choice or orientation of the coordinate axes.

Now we return to the concept of vector r as a geometric object independent

of the coordinate system. Let us look at r in two different systems, one rotated

in relation to the other.

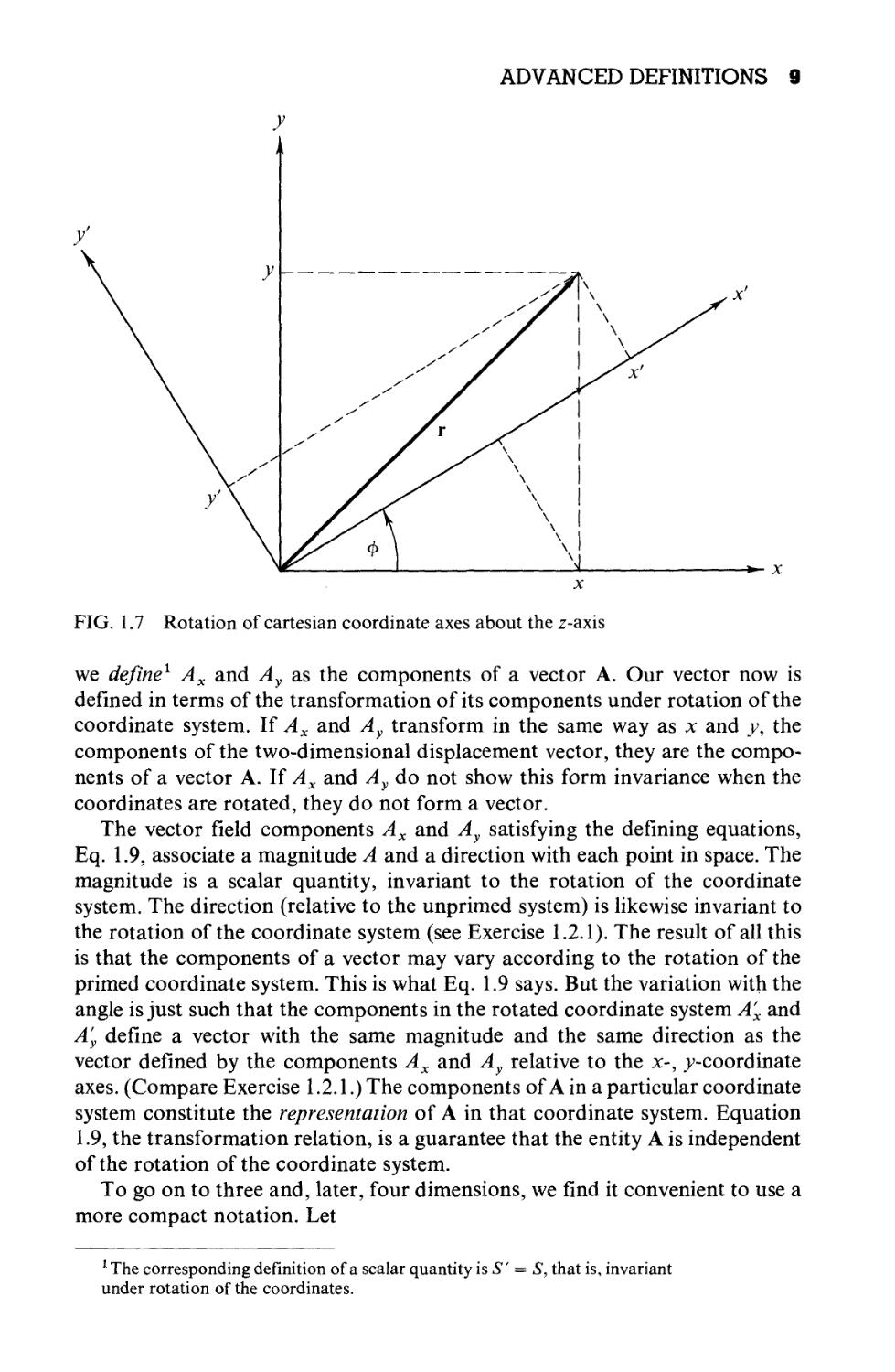

FIG. 1.7 Rotation of cartesian coordinate axes about the z-axis

For simplicity we consider first the two-dimensional case. If the x-, y-

coordinates are rotated counterclockwise through an angle $\varphi$, keeping r fixed

(Fig. 1.7), we get the following relations between the components resolved in

the original system (unprimed) and those resolved in the new rotated system

(primed):

$$\begin{aligned} x' &= x\cos\varphi + y\sin\varphi, \\ y' &= -x\sin\varphi + y\cos\varphi. \end{aligned} \tag{1.8}$$

We saw in Section 1.1 that a vector could be represented by the coordinates

of a point; that is, the coordinates were proportional to the vector components.

Hence the components of a vector must transform under rotation as coordinates

of a point (such as r). Therefore whenever any pair of quantities $A_x(x, y)$ and

$A_y(x, y)$ in the xy-coordinate system is transformed into $(A'_x, A'_y)$ by this rotation

of the coordinate system with

$$\begin{aligned} A'_x &= A_x\cos\varphi + A_y\sin\varphi, \\ A'_y &= -A_x\sin\varphi + A_y\cos\varphi, \end{aligned} \tag{1.9}$$

we define¹ $A_x$ and $A_y$ as the components of a vector A. Our vector now is

defined in terms of the transformation of its components under rotation of the

coordinate system. If Ax and Ay transform in the same way as x and y, the

components of the two-dimensional displacement vector, they are the

components of a vector A. If Ax and Ay do not show this form invariance when the

coordinates are rotated, they do not form a vector.

The vector field components Ax and Ay satisfying the defining equations,

Eq. 1.9, associate a magnitude A and a direction with each point in space. The

magnitude is a scalar quantity, invariant to the rotation of the coordinate

system. The direction (relative to the unprimed system) is likewise invariant to

the rotation of the coordinate system (see Exercise 1.2.1). The result of all this

is that the components of a vector may vary according to the rotation of the

primed coordinate system. This is what Eq. 1.9 says. But the variation with the

angle is just such that the components in the rotated coordinate system A'x and

A'y define a vector with the same magnitude and the same direction as the

vector defined by the components Ax and Ay relative to the x-, y-coordinate

axes. (Compare Exercise 1.2.1.) The components of A in a particular coordinate

system constitute the representation of A in that coordinate system. Equation

1.9, the transformation relation, is a guarantee that the entity A is independent

of the rotation of the coordinate system.
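The invariance described above can be checked numerically. The following is a minimal sketch, assuming an arbitrary illustrative vector (3, 4) and a rotation angle of 30°; the function name `rotate_components` is hypothetical and simply applies Eq. 1.9.

```python
import math

def rotate_components(ax, ay, phi):
    """Return (A'x, A'y), the components in axes rotated by phi (Eq. 1.9)."""
    ax_p = ax * math.cos(phi) + ay * math.sin(phi)
    ay_p = -ax * math.sin(phi) + ay * math.cos(phi)
    return ax_p, ay_p

# Illustrative values only (not taken from the text).
ax, ay = 3.0, 4.0
phi = math.radians(30.0)
ax_p, ay_p = rotate_components(ax, ay, phi)

# The magnitude should be the same in both coordinate systems.
magnitude = math.hypot(ax, ay)
magnitude_p = math.hypot(ax_p, ay_p)

# The angle measured from the rotated x'-axis should be smaller by phi.
alpha = math.atan2(ay, ax)
alpha_p = math.atan2(ay_p, ax_p)

print(magnitude, magnitude_p)
print(alpha - alpha_p, phi)
```

The components change with the angle, but the magnitude does not, and the angle relative to the new axis shifts by exactly the rotation angle, so the direction in space is unchanged.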

To go on to three and, later, four dimensions, we find it convenient to use a

more compact notation. Let

1 The corresponding definition of a scalar quantity is S' = S, that is, invariant

under rotation of the coordinates.

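$$
x \to x_1, \qquad y \to x_2, \tag{1.10}
$$

$$
\begin{aligned}
a_{11} &= \cos\varphi, & a_{12} &= \sin\varphi, \\
a_{21} &= -\sin\varphi, & a_{22} &= \cos\varphi.
\end{aligned}
\tag{1.11}
$$

Then Eq. 1.8 becomes

$$
\begin{aligned}
x'_1 &= a_{11}x_1 + a_{12}x_2, \\
x'_2 &= a_{21}x_1 + a_{22}x_2.
\end{aligned}
\tag{1.12}
$$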

The coefficient $a_{ij}$ may be interpreted as a direction cosine, the cosine of the

angle between $x'_i$ and $x_j$; that is,

$$
\begin{aligned}
a_{12} &= \cos(x'_1, x_2) = \sin\varphi, \\
a_{21} &= \cos(x'_2, x_1) = \cos\left(\varphi + \frac{\pi}{2}\right) = -\sin\varphi.
\end{aligned}
\tag{1.13}
$$

The advantage of the new notation² is that it permits us to use the summation

symbol $\sum$ and to rewrite Eqs. 1.12 as

$$
x'_i = \sum_{j=1}^{2} a_{ij}x_j, \qquad i = 1, 2. \tag{1.14}
$$

Note that $i$ remains as a parameter that gives rise to one equation when it is

set equal to 1 and to a second equation when it is set equal to 2. The index j,

of course, is a summation index, a dummy index, and as with a variable of

integration, j may be replaced by any other convenient symbol.

The generalization to three, four, or N dimensions is now very simple. The

set of N quantities, $V_j$, is said to be the components of an N-dimensional vector,

V, if and only if their values relative to the rotated coordinate axes are given by

$$
V'_i = \sum_{j=1}^{N} a_{ij}V_j, \qquad i = 1, 2, \ldots, N. \tag{1.15}
$$

As before, $a_{ij}$ is the cosine of the angle between $x'_i$ and $x_j$. Often the upper limit

N and the corresponding range of i will not be indicated. It is taken for granted

that the reader knows how many dimensions his or her space has.

From the definition of $a_{ij}$ as the cosine of the angle between the positive $x'_i$ direction and the positive $x_j$ direction we may write (cartesian coordinates)³

$$
a_{ij} = \frac{\partial x'_i}{\partial x_j} = \frac{\partial x_j}{\partial x'_i}. \tag{1.16}
$$

Note carefully that these are partial derivatives. By use of Eq. 1.16, Eq. 1.15 becomes

$$
V'_i = \sum_{j=1}^{N} \frac{\partial x'_i}{\partial x_j} V_j = \sum_{j=1}^{N} \frac{\partial x_j}{\partial x'_i} V_j. \tag{1.17}
$$

2 The reader may wonder at the replacement of one parameter $\varphi$ by four parameters $a_{ij}$. Clearly, the $a_{ij}$ do not constitute a minimum set of parameters. For two dimensions the four $a_{ij}$ are subject to the three constraints given in Eq. 1.18. The justification for the redundant set of direction cosines is the convenience it provides. Hopefully, this convenience will become more apparent in Chapters 3 and 4. For three-dimensional rotations (9 $a_{ij}$ but only three independent) alternate descriptions are provided by: (1) the Euler angles discussed in Section 4.3, (2) quaternions, and (3) the Cayley-Klein parameters. These alternatives have their respective advantages and disadvantages.

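The direction cosines $a_{ij}$ satisfy an orthogonality condition

$$
\sum_i a_{ij}a_{ik} = \delta_{jk} \tag{1.18}
$$

or, equivalently,

$$
\sum_i a_{ji}a_{ki} = \delta_{jk}. \tag{1.19}
$$

The symbol $\delta_{jk}$ is the Kronecker delta defined by

$$
\delta_{jk} =
\begin{cases}
1 & \text{for } j = k, \\
0 & \text{for } j \neq k.
\end{cases}
\tag{1.20}
$$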

The reader may easily verify that Eqs. 1.18 and 1.19 hold in the two-dimensional case by substituting in the specific $a_{ij}$ from Eq. 1.11. The result is the well-known identity $\sin^2\varphi + \cos^2\varphi = 1$ for the nonvanishing case. To verify Eq. 1.18 in general form, we may use the partial derivative forms of Eqs. 1.16 to obtain

$$
\sum_i \frac{\partial x_j}{\partial x'_i}\frac{\partial x_k}{\partial x'_i} = \sum_i \frac{\partial x_j}{\partial x'_i}\frac{\partial x'_i}{\partial x_k} = \frac{\partial x_j}{\partial x_k}. \tag{1.21}
$$

The last step follows by the standard rules for partial differentiation, assuming that $x_j$ is a function of $x'_1$, $x'_2$, $x'_3$, and so on. The final result, $\partial x_j/\partial x_k$, is equal to $\delta_{jk}$, since $x_j$ and $x_k$ as coordinate lines ($j \neq k$) are assumed to be perpendicular (two or three dimensions) or orthogonal (for any number of dimensions). Equivalently, we may assume that $x_j$ and $x_k$ ($j \neq k$) are totally independent variables. If $j = k$, the partial derivative is clearly equal to 1.
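As a numerical illustration of the orthogonality condition, Eq. 1.18, the following sketch builds the direction cosines for a rotation about the z-axis and forms the sums over i. The angle of 25° and the function names are assumptions chosen only for the example.

```python
import math

def direction_cosines_z(phi):
    """Direction cosines a[i][j] for a rotation by phi about the z-axis."""
    c, s = math.cos(phi), math.sin(phi)
    return [[c, s, 0.0],
            [-s, c, 0.0],
            [0.0, 0.0, 1.0]]

def orthogonality_sums(a):
    """Return the matrix of sums over i of a[i][j] * a[i][k] (left side of Eq. 1.18)."""
    n = len(a)
    return [[sum(a[i][j] * a[i][k] for i in range(n)) for k in range(n)]
            for j in range(n)]

a = direction_cosines_z(math.radians(25.0))
sums = orthogonality_sums(a)

# Each entry should reproduce the Kronecker delta: 1 on the diagonal, 0 elsewhere.
for row in sums:
    print([round(entry, 12) for entry in row])
```

The diagonal entries reduce to $\sin^2\varphi + \cos^2\varphi = 1$, and the off-diagonal entries cancel.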

In redefining a vector in terms of how its components transform under a

rotation of the coordinate system, we should emphasize two points:

1. This definition is developed because it is useful and appropriate in

describing our physical world. Our vector equations will be independent of

any particular coordinate system. (The coordinate system need not even be

cartesian.) The vector equation can always be expressed in some particular

coordinate system and, to obtain numerical results, we must ultimately express

the equation in some specific coordinate system.

3 Differentiate $x'_i = \sum_k a_{ik}x_k$ with respect to $x_j$. See the discussion following

Eq. 1.21. Section 4.3 provides an alternate approach.

2. This definition is subject to a generalization that will open up the branch

of mathematics known as tensor analysis (Chapter 3).

A qualification is also in order. The behavior of the vector components

under rotation of the coordinates is used in Section 1.3 to prove that a scalar

product is a scalar, in Section 1.4 to prove that a vector product is a vector,

and in Section 1.6 to show that the gradient of a scalar, $\nabla\psi$, is a vector. The

remainder of this chapter proceeds on the basis of the less restrictive definitions

of the vector given in Section 1.1.

Vectors and Vector Space

It is customary in mathematics to label an ordered triple of real numbers

$(x_1, x_2, x_3)$ a vector x. The number $x_n$ is called the nth component of vector x.

The collection of all such vectors (obeying the properties that follow) form a

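three-dimensional real vector space. We ascribe five properties to our vectors:

If $\mathbf{x} = (x_1, x_2, x_3)$ and $\mathbf{y} = (y_1, y_2, y_3)$,

1. Vector equality: $\mathbf{x} = \mathbf{y}$ means $x_i = y_i$, $i = 1, 2, 3$.

2. Vector addition: $\mathbf{x} + \mathbf{y} = \mathbf{z}$ means $x_i + y_i = z_i$, $i = 1, 2, 3$.

3. Scalar multiplication: $a\mathbf{x} \leftrightarrow (ax_1, ax_2, ax_3)$ (with a real).

4. Negative of a vector: $-\mathbf{x} = (-1)\mathbf{x} \leftrightarrow (-x_1, -x_2, -x_3)$.

5. Null vector: There exists a null vector $\mathbf{0} \leftrightarrow (0, 0, 0)$.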

Since our vector components are simply numbers, the following properties

also hold:

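1. Addition of vectors is commutative: $\mathbf{x} + \mathbf{y} = \mathbf{y} + \mathbf{x}$.

2. Addition of vectors is associative: $(\mathbf{x} + \mathbf{y}) + \mathbf{z} = \mathbf{x} + (\mathbf{y} + \mathbf{z})$.

3. Scalar multiplication is distributive: $a(\mathbf{x} + \mathbf{y}) = a\mathbf{x} + a\mathbf{y}$, also $(a + b)\mathbf{x} = a\mathbf{x} + b\mathbf{x}$.

4. Scalar multiplication is associative: $(ab)\mathbf{x} = a(b\mathbf{x})$.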

Further, the null vector 0 is unique as is the negative of a given vector x.

So far as the vectors themselves are concerned this approach merely

formalizes the component discussion of Section 1.1. The importance lies in the

extensions which will be considered in later chapters. In Chapter 4, we show that

vectors form both an Abelian group under addition and a linear space with

the transformations in the linear space described by matrices. Finally, and

perhaps most important, for advanced physics the concept of vectors presented

here may be generalized to (1) complex quantities,⁴ (2) functions, and (3) an infinite number of components. This leads to infinite-dimensional function spaces, the Hilbert spaces, which are important in modern quantum theory. A brief introduction to function expansions and Hilbert space appears in Section 9.4.

4 The n-dimensional vector space of real n-tuples is often labeled $R^n$ and the n-dimensional vector space of complex n-tuples is labeled $C^n$.

EXERCISES

1.2.1 (a) Show that the magnitude of a vector A, $A = (A_x^2 + A_y^2)^{1/2}$, is independent of the orientation of the rotated coordinate system,

$$
(A_x^2 + A_y^2)^{1/2} = (A_x'^2 + A_y'^2)^{1/2},
$$

independent of the rotation angle $\varphi$.

This independence of angle is expressed by saying that A is invariant under

rotations.

(b) At a given point (x, y) A defines an angle $\alpha$ relative to the positive x-axis and $\alpha'$ relative to the positive x'-axis. The angle from x to x' is $\varphi$. Show that A = A' defines the same direction in space when expressed in terms of its primed components, as in terms of its unprimed components; that is,

$$
\alpha' = \alpha - \varphi.
$$

1.2.2 Prove the orthogonality condition $\sum_i a_{ji}a_{ki} = \delta_{jk}$. As a special case of this the

direction cosines of Section 1.1 satisfy the relation

$$
\cos^2\alpha + \cos^2\beta + \cos^2\gamma = 1,
$$

a result that also follows from Eq. 1.7a.

1.3 SCALAR OR DOT PRODUCT

Having defined vectors, we now proceed to combine them. The laws for

combining vectors must be mathematically consistent. From the possibilities

that are consistent we select two that are both mathematically and physically

interesting. A third possibility is introduced in Chapter 3, in which we form

tensors.

The combination $AB\cos\theta$, in which A and B are the magnitudes of two vectors and $\theta$ is the angle between them, occurs frequently in physics (Fig. 1.8).

FIG. 1.8 Scalar product $\mathbf{A}\cdot\mathbf{B} = AB\cos\theta$

For instance,

work = force × displacement × $\cos\theta$

is usually interpreted as displacement times the projection of the force along

the displacement.

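With such applications in mind, we define

$$
\mathbf{A}\cdot\mathbf{B} = A_xB_x + A_yB_y + A_zB_z = \sum_i A_iB_i \tag{1.22}
$$

as the scalar, dot, or inner product of A and B. The scalar product of two vectors is a scalar quantity. We note that from this definition $\mathbf{A}\cdot\mathbf{B} = \mathbf{B}\cdot\mathbf{A}$; the scalar product is commutative. The unit vectors i, j, and k satisfy the relations

$$
\mathbf{i}\cdot\mathbf{i} = \mathbf{j}\cdot\mathbf{j} = \mathbf{k}\cdot\mathbf{k} = 1, \tag{1.22a}
$$

whereas

$$
\begin{aligned}
\mathbf{i}\cdot\mathbf{j} &= \mathbf{i}\cdot\mathbf{k} = \mathbf{j}\cdot\mathbf{k} = 0, \\
\mathbf{j}\cdot\mathbf{i} &= \mathbf{k}\cdot\mathbf{i} = \mathbf{k}\cdot\mathbf{j} = 0.
\end{aligned}
\tag{1.22b}
$$

If we reorient our axes and let A define a new x-axis,¹ then

$$
A_x = A, \qquad A_y = A_z = 0
$$

and

$$
B_x = B\cos\theta.
$$

Then by Eq. 1.22

$$
\mathbf{A}\cdot\mathbf{B} = AB\cos\theta, \tag{1.23}
$$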

which may be taken as a second definition of scalar product. The component

definition, Eq. 1.22, might be labeled an algebraic definition. Then Eq. 1.23

would be a geometric definition. One of the most common applications of the

scalar product in physics is in the calculation of work, $W = \mathbf{F}\cdot\mathbf{s}$, the scalar

product of force and displacement.

EXAMPLE 1.3.1

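For the two vectors A and B of Example 1.1.1, $\mathbf{A} = 6\mathbf{i} + 4\mathbf{j} + 3\mathbf{k}$, $\mathbf{B} = 2\mathbf{i} - 3\mathbf{j} - 3\mathbf{k}$,

$$
\mathbf{A}\cdot\mathbf{B} = (12 - 12 - 9) = -9
$$

by Eq. 1.22. In this case the projection of A on B (or B on A) is negative. Actually,

$$
\begin{aligned}
|\mathbf{A}| &= (36 + 16 + 9)^{1/2} = (61)^{1/2} = 7.81, \\
|\mathbf{B}| &= (4 + 9 + 9)^{1/2} = (22)^{1/2} = 4.69,
\end{aligned}
$$

and $\cos\theta = -0.246$, $\theta = 104.2°$.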
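The arithmetic of Example 1.3.1 can be reproduced in a few lines. This is a minimal sketch in plain Python; the helper name `dot` is an illustrative choice, and the vectors are the ones given in the example.

```python
import math

def dot(a, b):
    """Scalar product by the component definition, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

A = (6.0, 4.0, 3.0)
B = (2.0, -3.0, -3.0)

a_dot_b = dot(A, B)
mag_a = math.sqrt(dot(A, A))
mag_b = math.sqrt(dot(B, B))

# Geometric definition, Eq. 1.23, solved for the angle.
cos_theta = a_dot_b / (mag_a * mag_b)
theta_deg = math.degrees(math.acos(cos_theta))

print(a_dot_b, mag_a, mag_b, cos_theta, theta_deg)
```

The algebraic definition supplies the product and the magnitudes; the geometric definition then fixes the angle between the two vectors.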

1 The invariance of A ■ В under rotation of the coordinate axes is proved later

in this section.

If $\mathbf{A}\cdot\mathbf{B} = 0$ and we know that $\mathbf{A} \neq 0$ and $\mathbf{B} \neq 0$, then from Eq. 1.23 $\cos\theta = 0$ or $\theta = 90°$, $270°$, and so on. The vectors A and B must be perpendicular. Alternately, we may say A and B are orthogonal. The unit vectors i, j, and k are mutually orthogonal. To develop this notion of orthogonality one more step, suppose that n is a unit vector and r is a nonzero vector in the xy-plane; that is, $\mathbf{r} = \mathbf{i}x + \mathbf{j}y$ (Fig. 1.9). If

n-r = 0

for all choices of r, then n must be perpendicular (orthogonal) to the xy-plane.

FIG. 1.9 A normal vector

Often it is convenient to replace i, j, and k by subscripted unit vectors $\mathbf{e}_m$, m = 1, 2, 3, with $\mathbf{i} = \mathbf{e}_1$, and so on. Then Eqs. 1.22a and 1.22b become

$$
\mathbf{e}_m\cdot\mathbf{e}_n = \delta_{mn}. \tag{1.22c}
$$

For $m \neq n$ the unit vectors $\mathbf{e}_m$ and $\mathbf{e}_n$ are orthogonal. For m = n each vector is normalized to unity, that is, has unit magnitude. The set $\mathbf{e}_m$ is said to be orthonormal. A major advantage of Eq. 1.22c over Eqs. 1.22a and 1.22b is that Eq. 1.22c may readily be generalized to N-dimensional space: m, n = 1, 2, ..., N. Finally, we are picking sets of unit vectors $\mathbf{e}_m$ that are orthonormal for convenience, a very great convenience. The nonorthogonal situation is explored

in Section 4.4, "Oblique Coordinates."

SCALAR PROPERTY

We have not yet shown that the word scalar is justified or that the scalar

product is indeed a scalar quantity. To do this, we investigate the behavior of

A-B under a rotation of the coordinate system. By use of Eq. 1.15

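$$
\begin{aligned}
A'_xB'_x + A'_yB'_y + A'_zB'_z = {} & \sum_i a_{xi}A_i \sum_j a_{xj}B_j + \sum_i a_{yi}A_i \sum_j a_{yj}B_j \\
& + \sum_i a_{zi}A_i \sum_j a_{zj}B_j.
\end{aligned}
\tag{1.24}
$$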

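Using the indices k and l to sum over x, y, and z, we obtain

$$
\sum_k A'_kB'_k = \sum_l \sum_i \sum_j a_{li}A_i\, a_{lj}B_j, \tag{1.25}
$$

and, by rearranging the terms on the right-hand side, we have

$$
\sum_k A'_kB'_k = \sum_l \sum_i \sum_j (a_{li}a_{lj})A_iB_j = \sum_i \sum_j \delta_{ij}A_iB_j = \sum_i A_iB_i. \tag{1.26}
$$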

The last two steps follow by using Eq. 1.18, the orthogonality condition of the

direction cosines, and Eq. 1.20, which defines the Kronecker delta. The effect

of the Kronecker delta is to cancel all terms in a summation over either index

except the term for which the indices are equal. In Eq. 1.26 its effect is to set

j = i and to eliminate the summation over j. Of course, we could equally well

set i = j and eliminate the summation over i. Equation 1.26 gives us

$$
\sum_k A'_kB'_k = \sum_i A_iB_i, \tag{1.27}
$$

which is just our definition of a scalar quantity, one that remains invariant under

the rotation of the coordinate system.
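The scalar property can also be observed numerically. The sketch below, under the assumption of a rotation about the z-axis by an arbitrary angle of 0.7 rad, transforms two vectors by Eq. 1.15 and compares the dot product before and after; the function names are illustrative only.

```python
import math

def dot(a, b):
    """Scalar product by components, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

def rotate_about_z(v, phi):
    """Components of v in axes rotated by phi about the z-axis (Eq. 1.15)."""
    x, y, z = v
    c, s = math.cos(phi), math.sin(phi)
    return (c * x + s * y, -s * x + c * y, z)

A = (6.0, 4.0, 3.0)
B = (2.0, -3.0, -3.0)
phi = 0.7  # arbitrary rotation angle in radians

A_p = rotate_about_z(A, phi)
B_p = rotate_about_z(B, phi)

# The individual components change, but the sum of products does not.
print(dot(A, B), dot(A_p, B_p))
```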

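In a similar approach which exploits this concept of invariance, we take $\mathbf{C} = \mathbf{A} + \mathbf{B}$ and dot it into itself.

$$
\begin{aligned}
\mathbf{C}\cdot\mathbf{C} &= (\mathbf{A} + \mathbf{B})\cdot(\mathbf{A} + \mathbf{B}) \\
&= \mathbf{A}\cdot\mathbf{A} + \mathbf{B}\cdot\mathbf{B} + 2\mathbf{A}\cdot\mathbf{B}.
\end{aligned}
\tag{1.28}
$$

Since

$$
\mathbf{C}\cdot\mathbf{C} = C^2, \tag{1.29}
$$

the square of the magnitude of vector C and thus an invariant quantity, we see that

$$
\mathbf{A}\cdot\mathbf{B} = \tfrac{1}{2}(C^2 - A^2 - B^2), \quad \text{invariant.} \tag{1.30}
$$

Since the right-hand side of Eq. 1.30 is invariant, that is, a scalar quantity, the left-hand side, $\mathbf{A}\cdot\mathbf{B}$, must also be invariant under rotation of the coordinate system. Hence $\mathbf{A}\cdot\mathbf{B}$ is a scalar.

Equation 1.28 is really another form of the law of cosines which is

$$
C^2 = A^2 + B^2 + 2AB\cos\theta. \tag{1.31}
$$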

Comparing Eqs. 1.28 and 1.31, we have another verification of Eq. 1.23, or,

if preferred, a vector derivation of the law of cosines (Fig. 1.10).

FIG. 1.10 The law of cosines

An interesting illustration of the geometric interpretation of the scalar

product is provided by an example from a branch of general relativity. Consider

a four-dimensional sphere

x2 + y2 + z2 + w2 = 1

in x, y, z, w space. The surface of this four-dimensional sphere may be described

by the vector $\mathbf{r} = (x, y, z, w)$ with the restriction that $|\mathbf{r}| = 1$. It is possible to construct a unit vector t that is tangential to this four-dimensional sphere over its entire surface. As one possible example,

$$
\mathbf{t} = (y, -x, w, -z).
$$

The reader may verify that

$$
\mathbf{t}\cdot\mathbf{t} = y^2 + x^2 + w^2 + z^2 = 1,
$$

therefore unit magnitude, and

$$
\mathbf{t}\cdot\mathbf{r} = yx - xy + wz - zw = 0,
$$

therefore tangential, over the entire sphere.

The two-dimensional analog exists but there is no three-dimensional analog.

Hair growing out of a sphere cannot be combed down all over. There will be

a cowlick.
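The two properties claimed for t can be spot-checked at a few points of the sphere. The following sketch assumes randomly chosen sample points (a fixed seed is used only for repeatability); the names `tangent` and `samples` are hypothetical.

```python
import math
import random

def dot(a, b):
    """Scalar product in any number of dimensions."""
    return sum(p * q for p, q in zip(a, b))

def tangent(r):
    """The tangent vector t = (y, -x, w, -z) for a point r = (x, y, z, w)."""
    x, y, z, w = r
    return (y, -x, w, -z)

random.seed(0)  # fixed seed, chosen arbitrarily
samples = []
for _ in range(5):
    raw = [random.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(dot(raw, raw))
    r = tuple(c / norm for c in raw)   # a point on the unit sphere, |r| = 1
    t = tangent(r)
    samples.append((dot(t, t), dot(t, r)))

for tt, tr in samples:
    print(round(tt, 12), round(tr, 12))
```

Each sample should give t·t equal to 1 and t·r equal to 0, up to rounding. A numerical spot check is of course no substitute for the two-line algebraic verification.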

The dot product, given by Eq. 1.22, may be generalized in two ways. The

space need not be restricted to three dimensions. In n-dimensional space,

Eq. 1.22 applies with the sum running from 1 to n. Here n may be infinity, with the

sum then a convergent infinite series (Section 5.2). The other generalization

extends the concept of vector to embrace functions. The function analog of a

dot or inner product appears in Section 9.4.

EXERCISES

1.3.1 What is the cosine of the angle between the vectors

$$
\mathbf{A} = 3\mathbf{i} + 4\mathbf{j} + \mathbf{k}
$$

and

$$
\mathbf{B} = \mathbf{i} - \mathbf{j} + \mathbf{k}?
$$

ANS. $\cos\theta = 0$, $\theta = \pi/2$.

1.3.2 Two unit magnitude vectors $\mathbf{e}_i$ and $\mathbf{e}_j$ are required to be either parallel or perpendicular to each other. Show that $\mathbf{e}_i\cdot\mathbf{e}_j$ provides an interpretation of Eq. 1.18, the direction cosine orthogonality relation.

1.3.3 Given that (1) the dot product of a unit vector with itself is unity and (2) this relation is valid in all (rotated) coordinate systems, show that $\mathbf{i}'\cdot\mathbf{i}' = 1$ (with the primed system rotated 45° about the z-axis relative to the unprimed) implies that $\mathbf{i}\cdot\mathbf{j} = 0$.

1.3.4 The vector r, starting at the origin, terminates at and specifies the point in space (x, y, z). Find the surface swept out by the tip of r if

(a) $(\mathbf{r} - \mathbf{a})\cdot\mathbf{a} = 0$,

(b) $(\mathbf{r} - \mathbf{a})\cdot\mathbf{r} = 0$.

The vector a is a constant (constant in magnitude and direction).

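1.3.5 The interaction energy between two dipoles of moments $\boldsymbol{\mu}_1$ and $\boldsymbol{\mu}_2$ may be written in the vector form

$$
V = -\frac{\boldsymbol{\mu}_1\cdot\boldsymbol{\mu}_2}{r^3} + \frac{3(\boldsymbol{\mu}_1\cdot\mathbf{r})(\boldsymbol{\mu}_2\cdot\mathbf{r})}{r^5}
$$

and in the scalar form

$$
V = \frac{\mu_1\mu_2}{r^3}\left(2\cos\theta_1\cos\theta_2 - \sin\theta_1\sin\theta_2\cos\varphi\right).
$$

Here $\theta_1$ and $\theta_2$ are the angles of $\boldsymbol{\mu}_1$ and $\boldsymbol{\mu}_2$ relative to r, while $\varphi$ is the azimuth of $\boldsymbol{\mu}_2$ relative to the $\boldsymbol{\mu}_1$-r plane. Show that these two forms are equivalent.

Hint. Eq. 12.198 will be helpful.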

1.3.6 A pipe comes diagonally down the south wall of a building, making an angle

of 45° with the horizontal. Coming into a corner, the pipe turns and continues

diagonally down a west-facing wall, still making an angle of 45° with the horizontal.

What is the angle between the south-wall and west-wall sections of the pipe?

ANS. 120°.

1.4 VECTOR OR CROSS PRODUCT

A second form of vector multiplication employs the sine of the included

angle instead of the cosine. For instance, the angular momentum of a body is

defined as

angular momentum = radius arm × linear momentum

= distance × linear momentum × $\sin\theta$.

For convenience in treating problems relating to quantities such as angular

momentum, torque, and angular velocity, we define the vector or cross product

as

$$
\mathbf{C} = \mathbf{A}\times\mathbf{B}, \tag{1.32}
$$

with

$$
C = AB\sin\theta.
$$

FIG. 1.11 Angular momentum

Unlike the preceding case of the scalar product, С is now a vector, and we assign

it a direction perpendicular to the plane of A and В such that A, B, and С form a

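right-handed system. With this choice of direction we have

$$
\mathbf{A}\times\mathbf{B} = -\mathbf{B}\times\mathbf{A}, \quad \text{anticommutation.} \tag{1.32a}
$$

From this definition of cross product we have

$$
\mathbf{i}\times\mathbf{i} = \mathbf{j}\times\mathbf{j} = \mathbf{k}\times\mathbf{k} = 0, \tag{1.32b}
$$

whereas

$$
\mathbf{i}\times\mathbf{j} = \mathbf{k}, \qquad \mathbf{j}\times\mathbf{k} = \mathbf{i}, \qquad \mathbf{k}\times\mathbf{i} = \mathbf{j}
$$

and

$$
\mathbf{j}\times\mathbf{i} = -\mathbf{k}, \qquad \mathbf{k}\times\mathbf{j} = -\mathbf{i}, \qquad \mathbf{i}\times\mathbf{k} = -\mathbf{j}. \tag{1.32c}
$$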

Among the examples of the cross product in mathematical physics are the relation between linear momentum p and angular momentum L (defining angular momentum),

$$
\mathbf{L} = \mathbf{r}\times\mathbf{p}
$$

and the relation between linear velocity v and angular velocity $\boldsymbol{\omega}$,

$$
\mathbf{v} = \boldsymbol{\omega}\times\mathbf{r}.
$$

Vectors v and p describe properties of the particle or physical system. However, the position vector r is determined by the choice of the origin of the coordinates. This means that $\boldsymbol{\omega}$ and L depend on the choice of the origin.

The familiar magnetic induction В is usually defined by the vector product

force equation¹

$$
\mathbf{F}_M = q\,\mathbf{v}\times\mathbf{B}.
$$

Here v is the velocity of the electric charge q and $\mathbf{F}_M$ is the resulting force on

the moving charge.

The cross product has an important geometrical interpretation which we

shall use in subsequent sections. In the parallelogram defined by A and B (Fig. 1.12) $B\sin\theta$ is the height if A is taken as the length of the base. Then $|\mathbf{A}\times\mathbf{B}| = AB\sin\theta$ is the area of the parallelogram. As a vector, $\mathbf{A}\times\mathbf{B}$ is the area of the parallelogram defined by A and B, with the area vector normal to the plane of the parallelogram. This suggests that area may be treated as a vector quantity.

FIG. 1.12 Parallelogram representation of the vector product

Parenthetically, it might be noted that Eq. 1.32c and a modified Eq. 1.32b form the starting point for the development of quaternions. Equation 1.32b is replaced by $\mathbf{i}\times\mathbf{i} = \mathbf{j}\times\mathbf{j} = \mathbf{k}\times\mathbf{k} = -1$.

An alternate definition of the vector product $\mathbf{C} = \mathbf{A}\times\mathbf{B}$ consists in specifying the components of C:

$$
\begin{aligned}
C_x &= A_yB_z - A_zB_y, \\
C_y &= A_zB_x - A_xB_z, \\
C_z &= A_xB_y - A_yB_x,
\end{aligned}
\tag{1.33}
$$

or

$$
C_i = A_jB_k - A_kB_j, \qquad i, j, k \text{ all different,} \tag{1.34}
$$

and with cyclic permutation of the indices i, j, and k. The vector product C may be conveniently represented by a determinant²

$$
\mathbf{C} =
\begin{vmatrix}
\mathbf{i} & \mathbf{j} & \mathbf{k} \\
A_x & A_y & A_z \\
B_x & B_y & B_z
\end{vmatrix}.
\tag{1.35}
$$

Expansion of the determinant across the top row reproduces the three components of C listed in Eq. 1.33.

1 The electric field E is assumed here to be zero.

Equation 1.32 might be called a geometric definition of the vector product.

Then Eq. 1.33 would be an algebraic definition.

EXAMPLE 1.4.1

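With A and B given in Example 1.1.1,

$$
\mathbf{A} = 6\mathbf{i} + 4\mathbf{j} + 3\mathbf{k}, \qquad \mathbf{B} = 2\mathbf{i} - 3\mathbf{j} - 3\mathbf{k},
$$

$$
\begin{aligned}
\mathbf{A}\times\mathbf{B} &=
\begin{vmatrix}
\mathbf{i} & \mathbf{j} & \mathbf{k} \\
6 & 4 & 3 \\
2 & -3 & -3
\end{vmatrix} \\
&= \mathbf{i}(-12 + 9) - \mathbf{j}(-18 - 6) + \mathbf{k}(-18 - 8) \\
&= -3\mathbf{i} + 24\mathbf{j} - 26\mathbf{k}.
\end{aligned}
$$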
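The component definition, Eq. 1.33, translates directly into code. The sketch below is illustrative (the function name `cross` is an assumption, not notation from the text) and reproduces the result of Example 1.4.1.

```python
def cross(a, b):
    """Vector product by the component definition, Eq. 1.33."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by,
            az * bx - ax * bz,
            ax * by - ay * bx)

A = (6, 4, 3)
B = (2, -3, -3)

C = cross(A, B)
C_reversed = cross(B, A)  # anticommutation, Eq. 1.32a

print(C)
print(C_reversed)
```

Reversing the order of the factors changes the sign of every component, in agreement with Eq. 1.32a.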

To show the equivalence of Eq. 1.32 and the component definition, Eq. 1.33,

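let us form $\mathbf{A}\cdot\mathbf{C}$ and $\mathbf{B}\cdot\mathbf{C}$, using Eq. 1.33. We have

$$
\begin{aligned}
\mathbf{A}\cdot\mathbf{C} &= \mathbf{A}\cdot(\mathbf{A}\times\mathbf{B}) \\
&= A_x(A_yB_z - A_zB_y) + A_y(A_zB_x - A_xB_z) + A_z(A_xB_y - A_yB_x) \\
&= 0.
\end{aligned}
\tag{1.36}
$$

Similarly,

$$
\mathbf{B}\cdot\mathbf{C} = \mathbf{B}\cdot(\mathbf{A}\times\mathbf{B}) = 0. \tag{1.37}
$$

Equations 1.36 and 1.37 show that C is perpendicular to both A and B ($\cos\theta = 0$, $\theta = \pm 90°$) and therefore perpendicular to the plane they determine. The positive direction is determined by considering special cases such as the unit vectors $\mathbf{i}\times\mathbf{j} = \mathbf{k}$ ($C_z = +A_xB_y$).

2 See Section 4.1 for a summary of determinants.

The magnitude is obtained from

$$
\begin{aligned}
(\mathbf{A}\times\mathbf{B})\cdot(\mathbf{A}\times\mathbf{B}) &= A^2B^2 - (\mathbf{A}\cdot\mathbf{B})^2 \\
&= A^2B^2 - A^2B^2\cos^2\theta \\
&= A^2B^2\sin^2\theta.
\end{aligned}
\tag{1.38}
$$

Hence

$$
C = AB\sin\theta. \tag{1.39}
$$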

The big first step in Eq. 1.38 may be verified by expanding out in component

form, using Eq. 1.33 for A x В and Eq. 1.22 for the dot product. From Eqs.

1.36, 1.37, and 1.39 we see the equivalence of Eqs. 1.32 and 1.33, the two

definitions of vector product.
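The steps leading to Eq. 1.39 can be followed numerically for the vectors of Example 1.4.1. This is a sketch under the assumption that those vectors are a fair test case; the helper names are illustrative.

```python
import math

def dot(a, b):
    """Scalar product, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Vector product, Eq. 1.33."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

A = (6, 4, 3)
B = (2, -3, -3)
C = cross(A, B)

# Eqs. 1.36 and 1.37: C is perpendicular to both A and B.
a_dot_c = dot(A, C)
b_dot_c = dot(B, C)

# First step of Eq. 1.38, evaluated exactly with integers.
lhs = dot(C, C)
rhs = dot(A, A) * dot(B, B) - dot(A, B) ** 2

# Eq. 1.39: |C| = AB sin(theta), with theta taken from Eq. 1.23.
mag_a, mag_b = math.sqrt(dot(A, A)), math.sqrt(dot(B, B))
theta = math.acos(dot(A, B) / (mag_a * mag_b))
geometric_magnitude = mag_a * mag_b * math.sin(theta)

print(a_dot_c, b_dot_c, lhs, rhs, math.sqrt(lhs), geometric_magnitude)
```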

There still remains the problem of verifying that С = А х В is indeed a

vector; that is, it obeys Eq. 1.15, the vector transformation law. Starting in a

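rotated (primed) system,

$$
\begin{aligned}
C'_i &= A'_jB'_k - A'_kB'_j, \qquad i, j, \text{ and } k \text{ in cyclic order,} \\
&= \sum_l a_{jl}A_l \sum_m a_{km}B_m - \sum_l a_{kl}A_l \sum_m a_{jm}B_m \\
&= \sum_{l,m} (a_{jl}a_{km} - a_{kl}a_{jm})A_lB_m.
\end{aligned}
\tag{1.40}
$$

The combination of direction cosines in parentheses vanishes for m = l. We therefore have j and k taking on fixed values, dependent on the choice of i, and six combinations of l and m. If i = 3, then j = 1, k = 2 (cyclic order), and we have the following direction cosine combinations

$$
\begin{aligned}
a_{11}a_{22} - a_{21}a_{12} &= a_{33}, \\
a_{13}a_{21} - a_{23}a_{11} &= a_{32}, \\
a_{12}a_{23} - a_{22}a_{13} &= a_{31}
\end{aligned}
\tag{1.41}
$$

and their negatives. Equations 1.41 are identities satisfied by the direction cosines. They may be verified with the use of determinants and matrices (see Exercise 4.3.3). Substituting back into Eq. 1.40,

$$
\begin{aligned}
C'_3 &= a_{33}A_1B_2 + a_{32}A_3B_1 + a_{31}A_2B_3 - a_{33}A_2B_1 - a_{32}A_1B_3 - a_{31}A_3B_2 \\
&= a_{31}C_1 + a_{32}C_2 + a_{33}C_3 \\
&= \sum_n a_{3n}C_n.
\end{aligned}
\tag{1.42}
$$

By permuting indices to pick up $C'_1$ and $C'_2$, we see that Eq. 1.15 is satisfied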

and С is indeed a vector. It should be mentioned here that this vector nature of

the cross product is an accident associated with the three-dimensional nature


of ordinary space.3 It will be seen in Chapter 3 that the cross product may also

be treated as a second-rank antisymmetric tensor!

If we define a vector as an ordered triple of numbers (or functions) as in the

latter part of Section 1.2, then there is no problem identifying the cross product

as a vector. The cross-product operation maps the two triples A and В into a

third triple С which by definition is a vector.

We now have two ways of multiplying vectors; a third form appears in

Chapter 3. But what about division by a vector? It turns out that the ratio

B/A is not uniquely specified (Exercise 4.2.19) unless A and В are also required

to be parallel. Hence division of one vector by another is not defined.

EXERCISES

1.4.1 Two vectors A and В are given by

A = 2i + 4j + 6k,

В = 3i - 3j - 5k.

Compute the scalar and vector products A • В and A x B.

1.4.2 Show the equivalence of Eq. 1.32 and the component definition Eq. 1.33 by

expanding A, B, and C in $\mathbf{C} = \mathbf{A}\times\mathbf{B}$ in cartesian components.

1.4.3 Starting with С = A + B, show that С х С leads to

A x B= -B x A.

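1.4.4 Show that

(a) $(\mathbf{A} - \mathbf{B})\cdot(\mathbf{A} + \mathbf{B}) = A^2 - B^2$,

(b) $(\mathbf{A} - \mathbf{B})\times(\mathbf{A} + \mathbf{B}) = 2\mathbf{A}\times\mathbf{B}$.

The distributive laws needed here,

$$
\mathbf{A}\cdot(\mathbf{B} + \mathbf{C}) = \mathbf{A}\cdot\mathbf{B} + \mathbf{A}\cdot\mathbf{C}
$$

and

$$
\mathbf{A}\times(\mathbf{B} + \mathbf{C}) = \mathbf{A}\times\mathbf{B} + \mathbf{A}\times\mathbf{C},
$$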

may easily be verified (if desired) by expansion in cartesian components.

1.4.5 Given the three vectors,

P = 3i + 2j - k,

Q = -6i - 4j + 2k,

R = i - 2j - k,

find two that are perpendicular and two that are parallel or antiparallel.

3 Specifically Eq. 1.41 holds only for three-dimensional space. Technically, it is also possible to define a cross product in $R^7$, seven-dimensional space, but the cross product turns out to have unacceptable (pathological) properties.

1.4.6 If $\mathbf{P} = \mathbf{i}P_x + \mathbf{j}P_y$ and $\mathbf{Q} = \mathbf{i}Q_x + \mathbf{j}Q_y$ are any two nonparallel (also nonantiparallel) vectors in the xy-plane, show that $\mathbf{P}\times\mathbf{Q}$ is in the z-direction.

1.4.7 Prove that $(\mathbf{A}\times\mathbf{B})\cdot(\mathbf{A}\times\mathbf{B}) = (AB)^2 - (\mathbf{A}\cdot\mathbf{B})^2$.

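1.4.8 Using the vectors

$$
\begin{aligned}
\mathbf{P} &= \mathbf{i}\cos\theta + \mathbf{j}\sin\theta, \\
\mathbf{Q} &= \mathbf{i}\cos\varphi - \mathbf{j}\sin\varphi, \\
\mathbf{R} &= \mathbf{i}\cos\varphi + \mathbf{j}\sin\varphi,
\end{aligned}
$$

prove the familiar trigonometric identities

$$
\begin{aligned}
\sin(\theta + \varphi) &= \sin\theta\cos\varphi + \cos\theta\sin\varphi, \\
\cos(\theta + \varphi) &= \cos\theta\cos\varphi - \sin\theta\sin\varphi.
\end{aligned}
$$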

1.4.9 (a) Find a vector A that is perpendicular to

(b) What is A if, in addition to this requirement, we also demand that it have

unit magnitude?

1.4.10 If four vectors a, b, c, and d all lie in the same plane, show that

(a x b) x (c x d) = 0.

Hint. Consider the directions of the cross-product vectors.

1.4.11 The coordinates of the three vertices of a triangle are (2, 1, 5), (5, 2, 8), and (4, 8, 2). Compute its area by vector methods.

1.4.12 The vertices of parallelogram ABCD are (1, 0, 0), (2, -1, 0), (0, -1, 1), and (-1, 0, 1) in order. Calculate the vector areas of triangle ABD and of triangle BCD. Are the two vector areas equal?

ANS. $\text{Area}_{ABD} = -\tfrac{1}{2}(\mathbf{i} + \mathbf{j} + 2\mathbf{k})$.

1.4.13 The origin and the three vectors A, B, and С (all of which start at the origin)

define a tetrahedron. Taking the outward direction as positive, calculate the total

vector area of the four tetrahedral surfaces.

Note. In Section 1.11 this result is generalized to any closed surface.

1.4.14 Find the sides and angles of the spherical triangle ABC defined by the three vectors

A = (1, 0, 0),

and

Each vector starts from the origin (Fig. 1.13).

FIG. 1.13 Spherical triangle

1.4.15 Derive the law of sines:

$$
\frac{\sin\alpha}{|\mathbf{A}|} = \frac{\sin\beta}{|\mathbf{B}|} = \frac{\sin\gamma}{|\mathbf{C}|}.
$$

1.4.16 The magnetic induction В is defined by the Lorentz force equation

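$$
\mathbf{F} = q(\mathbf{v}\times\mathbf{B}).
$$

Carrying out three experiments, we find that if

$$
\mathbf{v} = \mathbf{i}, \qquad \frac{\mathbf{F}}{q} = 2\mathbf{k} - 4\mathbf{j},
$$

$$
\mathbf{v} = \mathbf{j}, \qquad \frac{\mathbf{F}}{q} = 4\mathbf{i} - \mathbf{k},
$$

and

$$
\mathbf{v} = \mathbf{k}, \qquad \frac{\mathbf{F}}{q} = \mathbf{j} - 2\mathbf{i}.
$$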

From the results of these three separate experiments calculate the magnetic

induction B.

1.5 TRIPLE SCALAR PRODUCT, TRIPLE VECTOR

PRODUCT

TRIPLE SCALAR PRODUCT

Sections 1.3 and 1.4 cover the two types of multiplication of interest here.

However, there are combinations of three vectors, $\mathbf{A}\cdot(\mathbf{B}\times\mathbf{C})$ and $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$, which occur with sufficient frequency to deserve further attention. The combination

$$
\mathbf{A}\cdot(\mathbf{B}\times\mathbf{C})
$$

is known as the triple scalar product. $\mathbf{B}\times\mathbf{C}$ yields a vector which, dotted into A, gives a scalar. We note that $(\mathbf{A}\cdot\mathbf{B})\times\mathbf{C}$ represents a scalar crossed into a vector, an operation that is not defined. Hence, if we agree to exclude this undefined interpretation, the parentheses may be omitted and the triple scalar product written $\mathbf{A}\cdot\mathbf{B}\times\mathbf{C}$.

Using Eq. 1.33 for the cross product and Eq. 1.22 for the dot product, we

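obtain

$$
\begin{aligned}
\mathbf{A}\cdot\mathbf{B}\times\mathbf{C} &= A_x(B_yC_z - B_zC_y) + A_y(B_zC_x - B_xC_z) + A_z(B_xC_y - B_yC_x) \\
&= \mathbf{B}\cdot\mathbf{C}\times\mathbf{A} = \mathbf{C}\cdot\mathbf{A}\times\mathbf{B} \\
&= -\mathbf{A}\cdot\mathbf{C}\times\mathbf{B} = -\mathbf{C}\cdot\mathbf{B}\times\mathbf{A} = -\mathbf{B}\cdot\mathbf{A}\times\mathbf{C}, \text{ and so on.}
\end{aligned}
\tag{1.43}
$$

The high degree of symmetry present in the component expansion should be noted. Every term contains the factors $A_i$, $B_j$, and $C_k$. If i, j, and k are in cyclic order (x, y, z), the sign is positive. If the order is anticyclic, the sign is negative. Further, the dot and the cross may be interchanged,

$$
\mathbf{A}\cdot\mathbf{B}\times\mathbf{C} = \mathbf{A}\times\mathbf{B}\cdot\mathbf{C}. \tag{1.44}
$$

A convenient representation of the component expansion of Eq. 1.43 is provided by the determinant

$$
\mathbf{A}\cdot\mathbf{B}\times\mathbf{C} =
\begin{vmatrix}
A_x & A_y & A_z \\
B_x & B_y & B_z \\
C_x & C_y & C_z
\end{vmatrix}.
\tag{1.45}
$$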

The rules for interchanging rows and columns of a determinant1 provide an

immediate verification of the permutations listed in Eq. 1.43, whereas the

symmetry of A, B, and С in the determinant form suggests the relation given in

Eq. 1.44.

The triple products encountered in Section 1.4, which showed that A x В

was perpendicular to both A and B, were special cases of the general result

(Eq. 1.43).

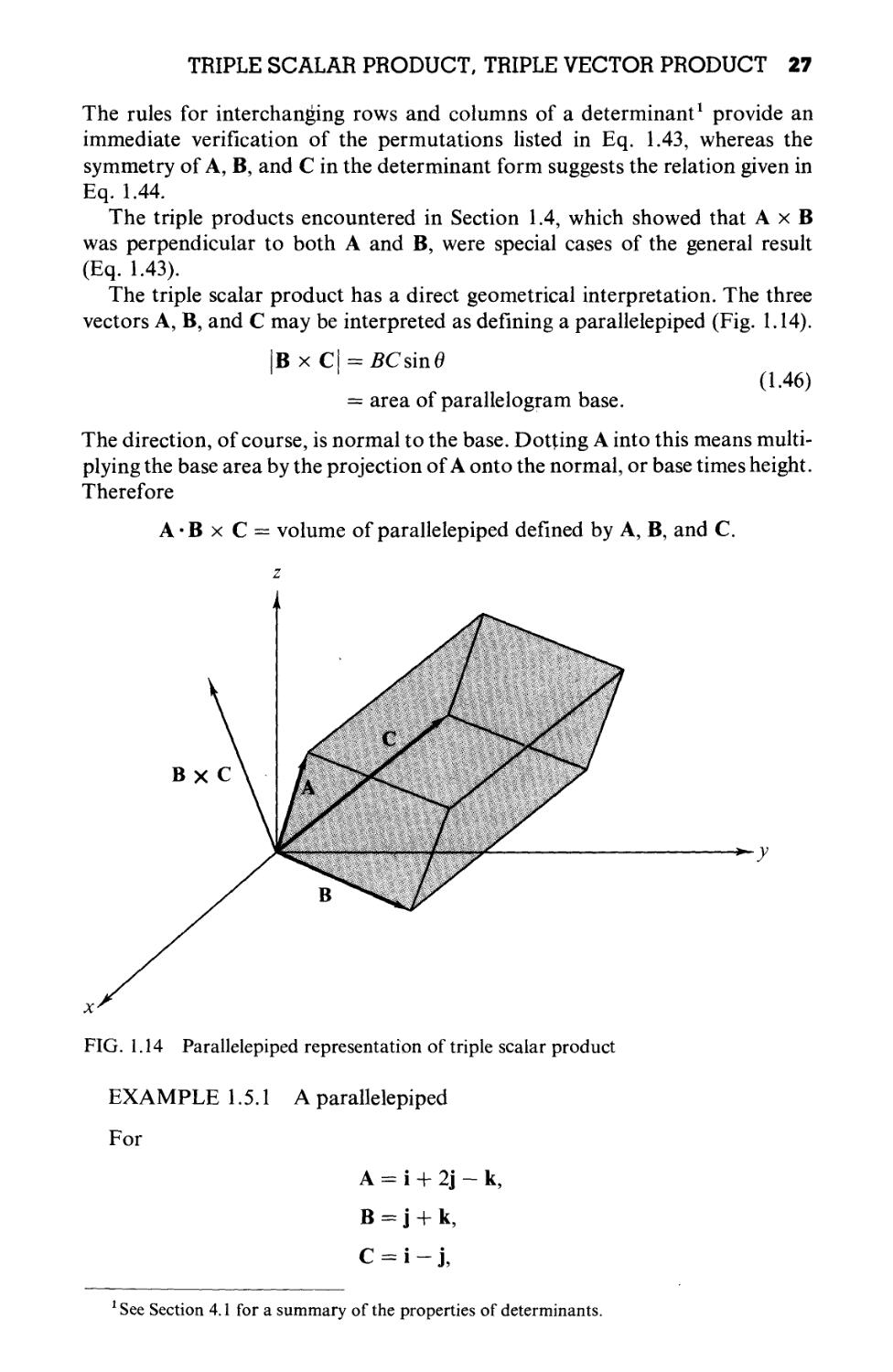

The triple scalar product has a direct geometrical interpretation. The three

vectors A, B, and С may be interpreted as defining a parallelepiped (Fig. 1.14).

В x

= area of parallelogram base.

A.46)

The direction, of course, is normal to the base. Dotting A into this means multi-

multiplying the base area by the projection of A onto the normal, or base times height.

Therefore

A'BxC = volume of parallelepiped defined by A, B, and C.

FIG. 1.14 Parallelepiped representation of triple scalar product

EXAMPLE 1.5.1 A parallelepiped

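For

$$
\mathbf{A} = \mathbf{i} + 2\mathbf{j} - \mathbf{k}, \qquad \mathbf{B} = \mathbf{j} + \mathbf{k}, \qquad \mathbf{C} = \mathbf{i} - \mathbf{j},
$$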

1 See Section 4.1 for a summary of the properties of determinants.

$$
\mathbf{A}\cdot\mathbf{B}\times\mathbf{C} =
\begin{vmatrix}
1 & 2 & -1 \\
0 & 1 & 1 \\
1 & -1 & 0
\end{vmatrix}.
\tag{1.47}
$$

By expansion by minors across the top row the determinant equals

$$
1(0 + 1) - 2(0 - 1) - 1(0 - 1) = 4.
$$

This is the volume of the parallelepiped defined by A, B, and C. The reader

should note that A-BxC may sometimes turn out to be negative! This

problem and its interpretation are considered in Chapter 3.
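The value found in Example 1.5.1 and the permutation rules of Eq. 1.43 can be checked together. The sketch below uses the rows of the determinant in Eq. 1.47 as the three vectors; the function names are illustrative assumptions.

```python
def dot(a, b):
    """Scalar product, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Vector product, Eq. 1.33."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def triple_scalar(a, b, c):
    """Triple scalar product a . (b x c), Eq. 1.43."""
    return dot(a, cross(b, c))

# Rows of the determinant in Eq. 1.47.
A = (1, 2, -1)
B = (0, 1, 1)
C = (1, -1, 0)

volume = triple_scalar(A, B, C)
cyclic = (triple_scalar(B, C, A), triple_scalar(C, A, B))
anticyclic = (triple_scalar(A, C, B), triple_scalar(C, B, A), triple_scalar(B, A, C))

print(volume, cyclic, anticyclic)
```

Cyclic permutations leave the value unchanged, while interchanging any two vectors reverses the sign, which is how a negative "volume" can arise.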

The triple scalar product finds an interesting and important application

in the construction of a reciprocal crystal lattice. Let a, b, and c (not necessarily mutually perpendicular) represent the vectors that define a crystal lattice. The distance from one lattice point to another may then be written

$$
\mathbf{r} = n_a\mathbf{a} + n_b\mathbf{b} + n_c\mathbf{c}, \tag{1.48}
$$

with $n_a$, $n_b$, and $n_c$ taking on integral values. With these vectors we may form

$$
\mathbf{a}' = \frac{\mathbf{b}\times\mathbf{c}}{\mathbf{a}\cdot\mathbf{b}\times\mathbf{c}}, \qquad
\mathbf{b}' = \frac{\mathbf{c}\times\mathbf{a}}{\mathbf{a}\cdot\mathbf{b}\times\mathbf{c}}, \qquad
\mathbf{c}' = \frac{\mathbf{a}\times\mathbf{b}}{\mathbf{a}\cdot\mathbf{b}\times\mathbf{c}}. \tag{1.48a}
$$

We see that a' is perpendicular to the plane containing b and c and has a magnitude proportional to $a^{-1}$. In fact, we can readily show that

$$
\mathbf{a}'\cdot\mathbf{a} = \mathbf{b}'\cdot\mathbf{b} = \mathbf{c}'\cdot\mathbf{c} = 1, \tag{1.48b}
$$

whereas

$$
\mathbf{a}'\cdot\mathbf{b} = \mathbf{a}'\cdot\mathbf{c} = \mathbf{b}'\cdot\mathbf{a} = \mathbf{b}'\cdot\mathbf{c} = \mathbf{c}'\cdot\mathbf{a} = \mathbf{c}'\cdot\mathbf{b} = 0. \tag{1.48c}
$$

It is from Eqs. 1.48b and 1.48c that the name reciprocal lattice is derived. The mathematical space in which this reciprocal lattice exists is sometimes called a Fourier space, on the basis of relations to the Fourier analysis of Chapters 14 and 15. This reciprocal lattice is useful in problems involving the scattering of waves from the various planes in a crystal. Further details may be found in R. B. Leighton's Principles of Modern Physics, pp. 440-448 [New York: McGraw-Hill (1959)]. We encounter the reciprocal lattice again in an analysis

of oblique coordinate systems, Section 4.4.
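The construction of Eq. 1.48a and the relations of Eqs. 1.48b and 1.48c can be illustrated with a small computation. The lattice vectors below are hypothetical values chosen only to be nonorthogonal; they do not come from the text, and the function names are likewise assumptions.

```python
import math

def dot(a, b):
    """Scalar product, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Vector product, Eq. 1.33."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def reciprocal_vectors(a, b, c):
    """Return (a', b', c') as defined in Eq. 1.48a."""
    volume = dot(a, cross(b, c))  # triple scalar product a . b x c
    scale = lambda v: tuple(component / volume for component in v)
    return scale(cross(b, c)), scale(cross(c, a)), scale(cross(a, b))

# Hypothetical lattice: a and b at 60 degrees in the xy-plane, c along z.
a = (1.0, 0.0, 0.0)
b = (0.5, math.sqrt(3.0) / 2.0, 0.0)
c = (0.0, 0.0, 2.0)

direct = (a, b, c)
reciprocal = reciprocal_vectors(a, b, c)

# Table of dot products: rows are a', b', c'; columns are a, b, c.
table = [[dot(r, d) for d in direct] for r in reciprocal]
for row in table:
    print([round(entry, 12) for entry in row])
```

The table of dot products should come out as the unit matrix: ones on the diagonal (Eq. 1.48b) and zeros elsewhere (Eq. 1.48c).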

TRIPLE VECTOR PRODUCT

The second triple product of interest is $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$. Here the parentheses must be retained, as may be seen by considering the special case

$$
\mathbf{i}\times(\mathbf{i}\times\mathbf{j}) = \mathbf{i}\times\mathbf{k} = -\mathbf{j} \tag{1.49}
$$

but

$$
(\mathbf{i}\times\mathbf{i})\times\mathbf{j} = 0.
$$

The fact that the triple vector product is a vector follows from our discussion of vector product. Also, we see that the direction of the resulting vector is perpendicular to A and to $\mathbf{B}\times\mathbf{C}$. The plane defined by B and C is perpendicular to $\mathbf{B}\times\mathbf{C}$ and so $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$ lies in this plane. Specifically, if B and C lie in the xy-plane, then $\mathbf{B}\times\mathbf{C}$ is in the z-direction and $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$ is back in the xy-plane (Fig. 1.15). This means that $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$ will be a linear combination of B and C. We find that

$$
\mathbf{A}\times(\mathbf{B}\times\mathbf{C}) = \mathbf{B}(\mathbf{A}\cdot\mathbf{C}) - \mathbf{C}(\mathbf{A}\cdot\mathbf{B}), \tag{1.50}
$$

a relation sometimes known as the BAC-CAB rule. This result may be verified by the direct though not very elegant method of expanding into cartesian components (see Exercise 1.5.2).

FIG. 1.15 B and C are in the xy-plane. $\mathbf{B}\times\mathbf{C}$ is perpendicular to the xy-plane and is shown here along the z-axis. Then $\mathbf{A}\times(\mathbf{B}\times\mathbf{C})$ is perpendicular to the z-axis and therefore is back in the xy-plane.

An alternate derivation using the Levi-Civita eijk of Section 3.4 is the topic

of Exercise 3.4.8.

The BAC-CAB rule is probably the single most important vector identity.

Because of its frequent use in problems and in future derivations, the rule

probably should be memorized.

It might be noted here that as vectors are independent of the coordinates

so a vector equation is independent of the particular coordinate system. The

coordinate system only determines the components. If the vector equation

can be established in cartesian coordinates, it is established and valid in any

of the coordinate systems to be introduced in Chapter 2.

EXAMPLE 1.5.2 A triple vector product

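By using the three vectors given in Example 1.5.1, we obtain

$$
\begin{aligned}
\mathbf{A}\times(\mathbf{B}\times\mathbf{C}) &= (\mathbf{j} + \mathbf{k})(1 - 2) - (\mathbf{i} - \mathbf{j})(2 - 1) \\
&= -\mathbf{i} - \mathbf{k}
\end{aligned}
$$

by Eq. 1.50. In detail,

$$
\mathbf{B}\times\mathbf{C} =
\begin{vmatrix}
\mathbf{i} & \mathbf{j} & \mathbf{k} \\
0 & 1 & 1 \\
1 & -1 & 0
\end{vmatrix}
= \mathbf{i} + \mathbf{j} - \mathbf{k}
$$

and

$$
\mathbf{A}\times(\mathbf{B}\times\mathbf{C}) =
\begin{vmatrix}
\mathbf{i} & \mathbf{j} & \mathbf{k} \\
1 & 2 & -1 \\
1 & 1 & -1
\end{vmatrix}
= -\mathbf{i} - \mathbf{k}.
$$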

Other, more complicated, products may be simplified by using these forms

of the triple scalar and triple vector products.
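Both routes of Example 1.5.2 can be compared directly. The following sketch evaluates the nested cross product and the right-hand side of Eq. 1.50 for the vectors of Example 1.5.1; the function `bac_cab` is a hypothetical name for that right-hand side.

```python
def dot(a, b):
    """Scalar product, Eq. 1.22."""
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Vector product, Eq. 1.33."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def bac_cab(a, b, c):
    """Right-hand side of Eq. 1.50: B(A.C) - C(A.B)."""
    a_dot_c = dot(a, c)
    a_dot_b = dot(a, b)
    return tuple(a_dot_c * bi - a_dot_b * ci for bi, ci in zip(b, c))

A = (1, 2, -1)
B = (0, 1, 1)
C = (1, -1, 0)

direct = cross(A, cross(B, C))       # nested cross products
by_rule = bac_cab(A, B, C)           # BAC-CAB expansion
wrong_grouping = cross(cross(A, B), C)  # parentheses moved

print(direct, by_rule, wrong_grouping)
```

The first two results agree, while moving the parentheses gives a different vector, which is why the parentheses in a triple vector product must be retained.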

EXERCISES

1.5.1 One vertex of a glass parallelepiped is at the origin. The three adjacent vertices are at (3,0,0), (0,0,2), and (0,3,1). All lengths are in centimeters. Calculate the number of cubic centimeters of glass in the parallelepiped by using the triple scalar product.

1.5.2 Verify the expansion of the triple vector product

$$\mathbf{A} \times (\mathbf{B} \times \mathbf{C}) = \mathbf{B}(\mathbf{A} \cdot \mathbf{C}) - \mathbf{C}(\mathbf{A} \cdot \mathbf{B})$$

by direct expansion in cartesian coordinates.

1.5.3 Show that the first step in Eq. 1.38, which is

$$(\mathbf{A} \times \mathbf{B}) \cdot (\mathbf{A} \times \mathbf{B}) = A^2 B^2 - (\mathbf{A} \cdot \mathbf{B})^2,$$

is consistent with the BAC-CAB rule for a triple vector product.


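1.5.4 Given the three vectors $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$,

$$\mathbf{A} = \mathbf{i} + \mathbf{j}, \qquad \mathbf{B} = \mathbf{j} + \mathbf{k}, \qquad \mathbf{C} = \mathbf{i} - \mathbf{k}.$$

(a) Compute the triple scalar product, $\mathbf{A} \cdot \mathbf{B} \times \mathbf{C}$. Noting that $\mathbf{A} = \mathbf{B} + \mathbf{C}$, give a geometric interpretation of your result for the triple scalar product.

(b) Compute $\mathbf{A} \times (\mathbf{B} \times \mathbf{C})$.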

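1.5.5 The angular momentum $\mathbf{L}$ of a particle is given by $\mathbf{L} = \mathbf{r} \times \mathbf{p} = m\mathbf{r} \times \mathbf{v}$, where $\mathbf{p}$ is the linear momentum. With linear and angular velocity related by $\mathbf{v} = \boldsymbol{\omega} \times \mathbf{r}$, show that

$$\mathbf{L} = m r^2 [\boldsymbol{\omega} - \mathbf{r}_0(\mathbf{r}_0 \cdot \boldsymbol{\omega})].$$

Here $\mathbf{r}_0$ is a unit vector in the $\mathbf{r}$ direction. For $\mathbf{r} \cdot \boldsymbol{\omega} = 0$ this reduces to $\mathbf{L} = I\boldsymbol{\omega}$, with the moment of inertia $I$ given by $mr^2$. In Section 4.6 this result is generalized to form an inertia tensor.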

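1.5.6 The kinetic energy of a single particle is given by $T = \tfrac{1}{2}mv^2$. For rotational motion this becomes $\tfrac{1}{2}m(\boldsymbol{\omega} \times \mathbf{r})^2$. Show that

$$T = \tfrac{1}{2} m [r^2 \omega^2 - (\mathbf{r} \cdot \boldsymbol{\omega})^2].$$

For $\mathbf{r} \cdot \boldsymbol{\omega} = 0$ this reduces to $T = \tfrac{1}{2} I \omega^2$ with the moment of inertia $I$ given by $mr^2$.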

1.5.7 Show that

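$$\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) + \mathbf{b} \times (\mathbf{c} \times \mathbf{a}) + \mathbf{c} \times (\mathbf{a} \times \mathbf{b}) = 0.$$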

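1.5.8 A vector $\mathbf{A}$ is decomposed into a radial vector $\mathbf{A}_r$ and a tangential vector $\mathbf{A}_t$. If $\mathbf{r}_0$ is a unit vector in the radial direction, show that

(a) $\mathbf{A}_r = \mathbf{r}_0(\mathbf{A} \cdot \mathbf{r}_0)$

and

(b) $\mathbf{A}_t = -\mathbf{r}_0 \times (\mathbf{r}_0 \times \mathbf{A})$.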

1.5.9 Prove that a necessary and sufficient condition for the three (nonvanishing)

vectors $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ to be coplanar is the vanishing of the triple scalar product

$$\mathbf{A} \cdot \mathbf{B} \times \mathbf{C} = 0.$$

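1.5.10 Three vectors $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ are given by

$$\mathbf{A} = 3\mathbf{i} - 2\mathbf{j} + 2\mathbf{k}, \qquad \mathbf{B} = 6\mathbf{i} + 4\mathbf{j} - 2\mathbf{k}, \qquad \mathbf{C} = -3\mathbf{i} - 2\mathbf{j} - 4\mathbf{k}.$$

Compute the values of $\mathbf{A} \cdot \mathbf{B} \times \mathbf{C}$ and $\mathbf{A} \times (\mathbf{B} \times \mathbf{C})$, $\mathbf{C} \times (\mathbf{A} \times \mathbf{B})$ and $\mathbf{B} \times (\mathbf{C} \times \mathbf{A})$.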

1.5.11 Vector D is a linear combination of three noncoplanar (and nonorthogonal)

vectors:

D = aA + bB + cC.

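Show that the coefficients are given by a ratio of triple scalar products,

$$a = \frac{\mathbf{D} \cdot \mathbf{B} \times \mathbf{C}}{\mathbf{A} \cdot \mathbf{B} \times \mathbf{C}}, \quad \text{and so on.}$$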


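1.5.12 Show that

$$(\mathbf{A} \times \mathbf{B}) \cdot (\mathbf{C} \times \mathbf{D}) = (\mathbf{A} \cdot \mathbf{C})(\mathbf{B} \cdot \mathbf{D}) - (\mathbf{A} \cdot \mathbf{D})(\mathbf{B} \cdot \mathbf{C}).$$

1.5.13 Show that

$$(\mathbf{A} \times \mathbf{B}) \times (\mathbf{C} \times \mathbf{D}) = (\mathbf{A} \cdot \mathbf{B} \times \mathbf{D})\mathbf{C} - (\mathbf{A} \cdot \mathbf{B} \times \mathbf{C})\mathbf{D}.$$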

1.5.14 For a spherical triangle such as pictured in Fig. 1.13 show that

$$\frac{\sin A}{\sin \overline{BC}} = \frac{\sin B}{\sin \overline{CA}} = \frac{\sin C}{\sin \overline{AB}}.$$

Here $\sin A$ is the sine of the included angle at $A$ while $\overline{BC}$ is the side opposite (in radians).

Hint. Exercise 1.5.13 will be useful.

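1.5.15 Given

$$\mathbf{a}' = \frac{\mathbf{b} \times \mathbf{c}}{\mathbf{a} \cdot \mathbf{b} \times \mathbf{c}}, \qquad \mathbf{b}' = \frac{\mathbf{c} \times \mathbf{a}}{\mathbf{a} \cdot \mathbf{b} \times \mathbf{c}}, \qquad \mathbf{c}' = \frac{\mathbf{a} \times \mathbf{b}}{\mathbf{a} \cdot \mathbf{b} \times \mathbf{c}}, \qquad \text{and} \quad \mathbf{a} \cdot \mathbf{b} \times \mathbf{c} \neq 0,$$

show that

(a) $\mathbf{x}' \cdot \mathbf{y} = \delta_{xy}$, $(\mathbf{x}, \mathbf{y} = \mathbf{a}, \mathbf{b}, \mathbf{c})$,

(b) $\mathbf{a}' \cdot \mathbf{b}' \times \mathbf{c}' = (\mathbf{a} \cdot \mathbf{b} \times \mathbf{c})^{-1}$,

(c) $\mathbf{a} = \dfrac{\mathbf{b}' \times \mathbf{c}'}{\mathbf{a}' \cdot \mathbf{b}' \times \mathbf{c}'}$.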

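1.5.16 If $\mathbf{x}' \cdot \mathbf{y} = \delta_{xy}$, $(\mathbf{x}, \mathbf{y} = \mathbf{a}, \mathbf{b}, \mathbf{c})$, prove that

$$\mathbf{a}' = \frac{\mathbf{b} \times \mathbf{c}}{\mathbf{a} \cdot \mathbf{b} \times \mathbf{c}}.$$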

(This is the converse of Problem 1.5.15.)

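1.5.17 Show that any vector $\mathbf{V}$ may be expressed in terms of the reciprocal vectors $\mathbf{a}'$, $\mathbf{b}'$, $\mathbf{c}'$ by

$$\mathbf{V} = (\mathbf{V} \cdot \mathbf{a})\mathbf{a}' + (\mathbf{V} \cdot \mathbf{b})\mathbf{b}' + (\mathbf{V} \cdot \mathbf{c})\mathbf{c}'.$$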

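1.5.18 An electric charge $q_1$ moving with velocity $\mathbf{v}_1$ produces a magnetic induction $\mathbf{B}$ given by

$$\mathbf{B} = \frac{\mu_0}{4\pi} q_1 \frac{\mathbf{v}_1 \times \mathbf{r}_0}{r^2} \quad \text{(mks units)},$$

where $\mathbf{r}_0$ points from $q_1$ to the point at which $\mathbf{B}$ is measured (Biot and Savart law).

(a) Show that the magnetic force on a second charge $q_2$, velocity $\mathbf{v}_2$, is given by the triple vector product

$$\mathbf{F}_2 = \frac{\mu_0}{4\pi} \frac{q_1 q_2}{r^2} \mathbf{v}_2 \times (\mathbf{v}_1 \times \mathbf{r}_0).$$

(b) Write out the corresponding magnetic force $\mathbf{F}_1$ that $q_2$ exerts on $q_1$. Define your unit radial vector. How do $\mathbf{F}_1$ and $\mathbf{F}_2$ compare?

(c) Calculate $\mathbf{F}_1$ and $\mathbf{F}_2$ for the case of $q_1$ and $q_2$ moving along parallel trajectories side by side.

ANS. (b) $\mathbf{F}_1 = -\dfrac{\mu_0}{4\pi} \dfrac{q_1 q_2}{r^2} \mathbf{v}_1 \times (\mathbf{v}_2 \times \mathbf{r}_0)$. In general, there is no simple relation between $\mathbf{F}_1$ and $\mathbf{F}_2$. Specifically, Newton's third law, $\mathbf{F}_1 = -\mathbf{F}_2$, does not hold.

(c) $\mathbf{F}_1 = \dfrac{\mu_0}{4\pi} \dfrac{q_1 q_2}{r^2} v^2 \mathbf{r}_0 = -\mathbf{F}_2$. Mutual attraction.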

1.6 GRADIENT, $\nabla$

Suppose that $\varphi(x, y, z)$ is a scalar point function, that is, a function whose value depends on the values of the coordinates $(x, y, z)$. As a scalar, it must have the same value at a given fixed point in space, independent of the rotation of our coordinate system, or

$$\varphi'(x_1', x_2', x_3') = \varphi(x_1, x_2, x_3). \tag{1.51}$$

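By differentiating with respect to $x_i'$ we obtain

$$\frac{\partial \varphi'(x_1', x_2', x_3')}{\partial x_i'} = \frac{\partial \varphi(x_1, x_2, x_3)}{\partial x_i'} = \sum_j \frac{\partial \varphi}{\partial x_j} \frac{\partial x_j}{\partial x_i'} = \sum_j a_{ij} \frac{\partial \varphi}{\partial x_j} \tag{1.52}$$

by the rules of partial differentiation and Eq. 1.16. But comparison with Eq. 1.17, the vector transformation law, now shows that we have constructed a vector with components $\partial \varphi / \partial x_j$. This vector we label the gradient of $\varphi$.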

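A convenient symbolism is

$$\nabla \varphi = \mathbf{i} \frac{\partial \varphi}{\partial x} + \mathbf{j} \frac{\partial \varphi}{\partial y} + \mathbf{k} \frac{\partial \varphi}{\partial z} \tag{1.53}$$

or

$$\nabla = \mathbf{i} \frac{\partial}{\partial x} + \mathbf{j} \frac{\partial}{\partial y} + \mathbf{k} \frac{\partial}{\partial z}. \tag{1.54}$$

$\nabla \varphi$ (or del $\varphi$) is our gradient of the scalar $\varphi$, whereas $\nabla$ (del) itself is a vector differential operator (available to operate on or to differentiate a scalar $\varphi$). It should be emphasized that this operator is a hybrid creature that must satisfy both the laws for handling vectors and the laws of partial differentiation.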
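The transformation property behind the gradient can be checked numerically. The sketch below is only an illustration under stated assumptions: the scalar field `phi`, the rotation angle, and the sample point are arbitrary choices, and the derivatives are estimated by central differences rather than taken analytically. It compares the derivatives of the rotated function with respect to the primed coordinates against the rotated components of the unprimed gradient.

```python
import math

def phi(x, y, z):
    # Hypothetical scalar field, chosen only for illustration.
    return x * x * y + math.sin(z)

def grad(f, p, h=1e-5):
    """Central-difference estimate of (df/dx1, df/dx2, df/dx3) at point p."""
    g = []
    for i in range(3):
        up, dn = list(p), list(p)
        up[i] += h
        dn[i] -= h
        g.append((f(*up) - f(*dn)) / (2.0 * h))
    return g

def rotation_z(angle):
    """Matrix a_ij for a rotation of the axes about z: x'_i = sum_j a_ij x_j."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]

def apply(a, v):
    return [sum(a[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]

a = rotation_z(0.7)            # arbitrary rotation angle (radians)
p = [0.3, -1.2, 0.5]           # arbitrary point, unprimed coordinates
p_prime = apply(a, p)          # same point, primed coordinates

def phi_prime(x1, x2, x3):
    # phi'(x') = phi(x): same value at the same physical point (Eq. 1.51).
    # The inverse of an orthogonal rotation matrix is its transpose.
    return phi(*apply(transpose(a), [x1, x2, x3]))

grad_in_primed = grad(phi_prime, p_prime)   # d(phi')/d(x'_i)
rotated_grad = apply(a, grad(phi, p))       # sum_j a_ij d(phi)/d(x_j)
print(grad_in_primed)
print(rotated_grad)
```

The two printed triples should agree to within the finite-difference error, which is the content of Eq. 1.52.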

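EXAMPLE 1.6.1 The Gradient of a Function of r

Let us calculate the gradient of $f(r) = f(\sqrt{x^2 + y^2 + z^2})$.

$$\nabla f(r) = \mathbf{i} \frac{\partial f(r)}{\partial x} + \mathbf{j} \frac{\partial f(r)}{\partial y} + \mathbf{k} \frac{\partial f(r)}{\partial z}.$$

Now $f(r)$ depends on $x$ through the dependence of $r$ on $x$. Therefore$^1$

$$\frac{\partial f(r)}{\partial x} = \frac{d f(r)}{d r} \cdot \frac{\partial r}{\partial x}.$$

$^1$ This is a special case of the chain rule of partial differentiation:

$$\frac{\partial f(r, \theta, \varphi)}{\partial x} = \frac{\partial f}{\partial r} \frac{\partial r}{\partial x} + \frac{\partial f}{\partial \theta} \frac{\partial \theta}{\partial x} + \frac{\partial f}{\partial \varphi} \frac{\partial \varphi}{\partial x}.$$

Here $\partial f / \partial \theta = \partial f / \partial \varphi = 0$, $\partial f / \partial r \to df/dr$.

From $r$ as a function of $x$, $y$, $z$

$$\frac{\partial r}{\partial x} = \frac{\partial (x^2 + y^2 + z^2)^{1/2}}{\partial x} = \frac{x}{(x^2 + y^2 + z^2)^{1/2}} = \frac{x}{r}.$$

Therefore

$$\frac{\partial f(r)}{\partial x} = \frac{d f(r)}{d r} \cdot \frac{x}{r}.$$

Permuting coordinates ($x \to y$, $y \to z$, $z \to x$) to obtain the $y$ and $z$ derivatives, we get

$$\nabla f(r) = (\mathbf{i}x + \mathbf{j}y + \mathbf{k}z) \frac{1}{r} \frac{df}{dr} = \frac{\mathbf{r}}{r} \frac{df}{dr} = \mathbf{r}_0 \frac{df}{dr}.$$

Here $\mathbf{r}_0$ is a unit vector ($\mathbf{r}/r$) in the positive radial direction. The gradient of a function of $r$ is a vector in the (positive or negative) radial direction. In Section 2.5 $\mathbf{r}_0$ is seen as one of the three orthonormal unit vectors of spherical polar coordinates.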
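As an illustration of the result of Example 1.6.1 (the particular functions here are chosen for this note and are not taken from the text), consider the power $f(r) = r^n$, for which $df/dr = n r^{n-1}$:

$$\nabla r^n = \mathbf{r}_0 \frac{d r^n}{dr} = n r^{n-1} \mathbf{r}_0, \qquad \text{so that for } n = -1, \quad \nabla \frac{1}{r} = -\frac{\mathbf{r}_0}{r^2}.$$

For $n = -1$ the gradient points in the negative radial direction, which is the "(positive or negative)" case mentioned above.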

A GEOMETRICAL INTERPRETATION

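One immediate application of $\nabla \varphi$ is to dot it into an increment of length

$$d\mathbf{r} = \mathbf{i}\,dx + \mathbf{j}\,dy + \mathbf{k}\,dz. \tag{1.55}$$

Thus we obtain

$$(\nabla \varphi) \cdot d\mathbf{r} = \frac{\partial \varphi}{\partial x} dx + \frac{\partial \varphi}{\partial y} dy + \frac{\partial \varphi}{\partial z} dz = d\varphi, \tag{1.56}$$

the change in the scalar function $\varphi$ corresponding to a change in position $d\mathbf{r}$.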

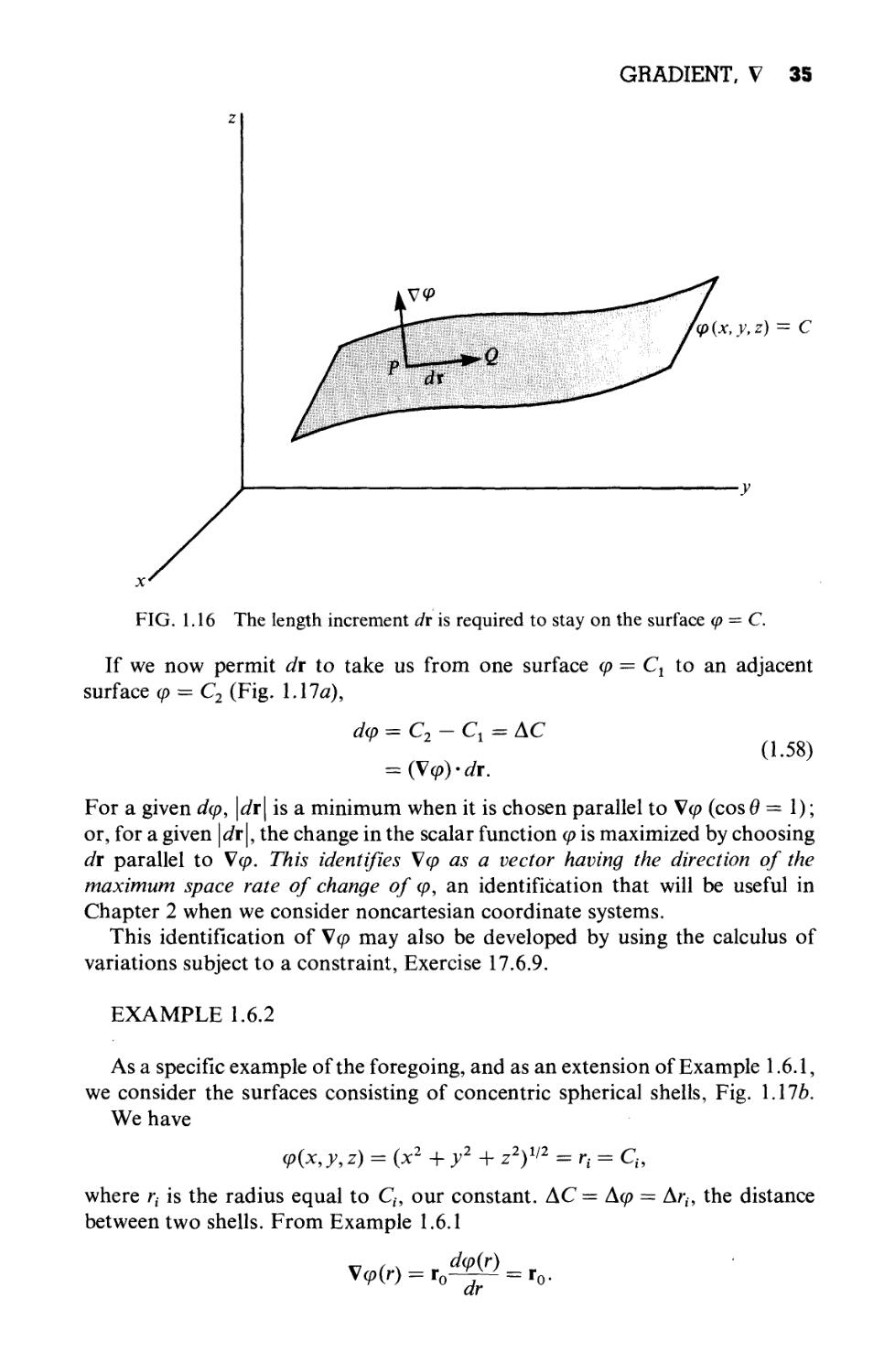

Now consider $P$ and $Q$ to be two points on a surface $\varphi(x, y, z) = C$, a constant. These points are chosen so that $Q$ is a distance $d\mathbf{r}$ from $P$. Then moving from $P$ to $Q$, the change in $\varphi(x, y, z) = C$ is given by

$$d\varphi = (\nabla \varphi) \cdot d\mathbf{r} = 0, \tag{1.57}$$

since we stay on the surface $\varphi(x, y, z) = C$. This shows that $\nabla \varphi$ is perpendicular to $d\mathbf{r}$. Since $d\mathbf{r}$ may have any direction from $P$ as long as it stays in the surface $\varphi$, point $Q$ being restricted to the surface, but having arbitrary direction, $\nabla \varphi$ is seen as normal to the surface $\varphi$ = constant (Fig. 1.16).
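The perpendicularity argument can be tried out numerically. In the sketch below the surface is a hypothetical ellipsoid, $x^2 + 2y^2 + 3z^2 = C$, chosen only because it has a simple parametrization; the constant `C`, the parameter values, and the step `eps` are likewise illustrative assumptions. Points P and Q are both placed on the surface, and the gradient at P is dotted into the small displacement from P to Q.

```python
import math

C = 6.0  # hypothetical constant labelling the surface phi = C

def phi(x, y, z):
    return x * x + 2.0 * y * y + 3.0 * z * z

def grad_phi(x, y, z):
    # Analytic gradient of phi above.
    return (2.0 * x, 4.0 * y, 6.0 * z)

def point_on_surface(u, v):
    """Parametrization of the ellipsoid phi(x, y, z) = C."""
    return (math.sqrt(C) * math.cos(u) * math.cos(v),
            math.sqrt(C / 2.0) * math.sin(u) * math.cos(v),
            math.sqrt(C / 3.0) * math.sin(v))

def dot(a, b):
    return sum(s * t for s, t in zip(a, b))

u0, v0 = 0.4, 0.3
P = point_on_surface(u0, v0)
g = grad_phi(*P)

eps = 1e-4
d_phi_values = []
for du, dv in [(eps, 0.0), (0.0, eps), (eps, -eps)]:
    Q = point_on_surface(u0 + du, v0 + dv)       # neighbouring point on the surface
    dr = tuple(q - p for p, q in zip(P, Q))      # displacement from P to Q
    d_phi_values.append(dot(g, dr))              # (grad phi) . dr, cf. Eq. 1.57
print(d_phi_values)
```

Each entry is of second order in `eps`, far smaller than the product of the lengths of the gradient and of the displacement, consistent with the gradient being normal to the surface.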


FIG. 1.16 The length increment $d\mathbf{r}$ is required to stay on the surface $\varphi(x, y, z) = C$.

If we now permit dr to take us from one surface cp — C2 to an adjacent

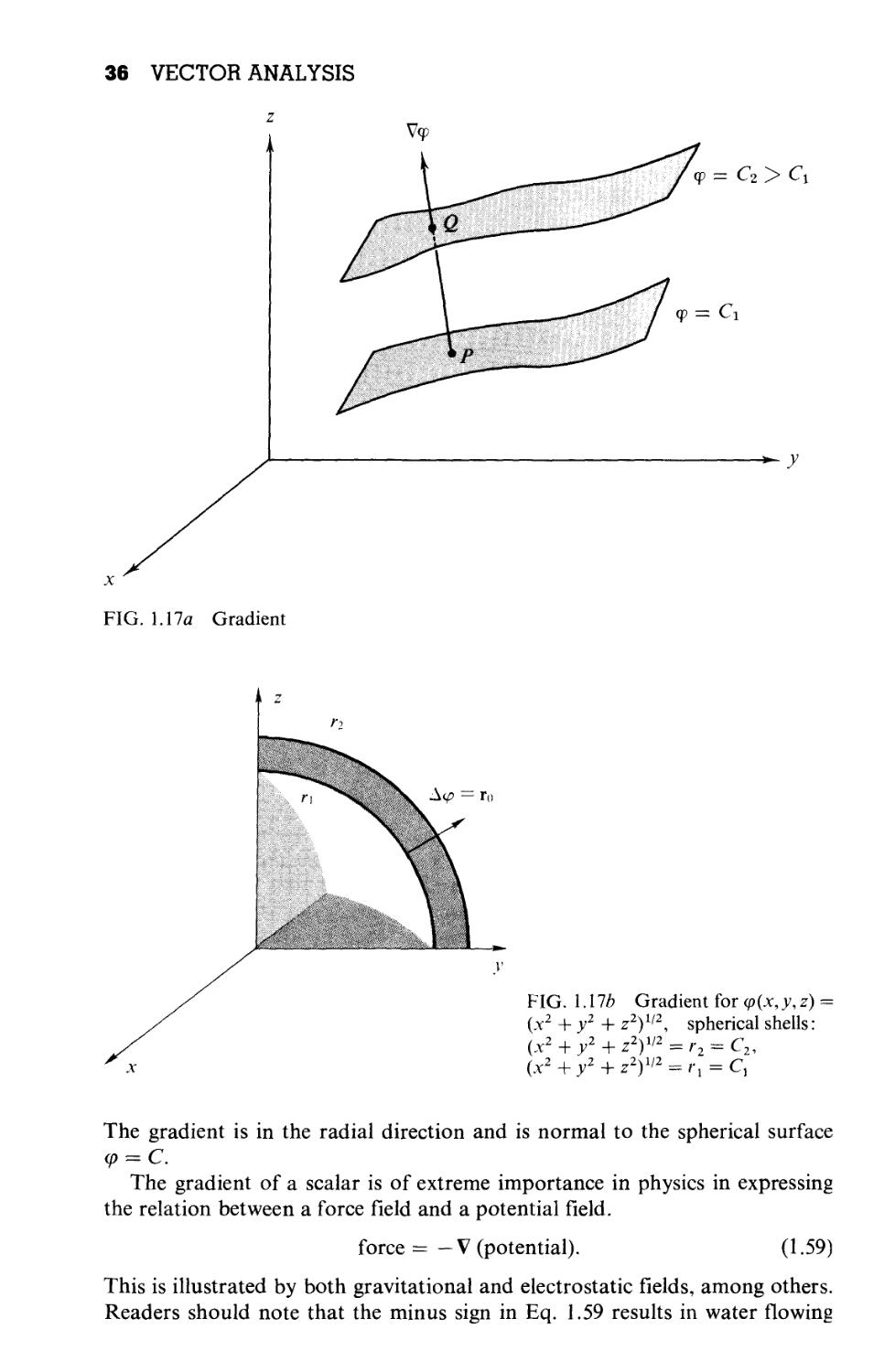

surface cp = C2 (Fig. 1.17л),

dcp = C2 — Cl = AC

A.58)

For a given $d\varphi$, $|d\mathbf{r}|$ is a minimum when it is chosen parallel to $\nabla \varphi$ ($\cos \theta = 1$); or, for a given $|d\mathbf{r}|$, the change in the scalar function $\varphi$ is maximized by choosing $d\mathbf{r}$ parallel to $\nabla \varphi$. This identifies $\nabla \varphi$ as a vector having the direction of the maximum space rate of change of $\varphi$, an identification that will be useful in Chapter 2 when we consider noncartesian coordinate systems.

This identification of $\nabla \varphi$ may also be developed by using the calculus of variations subject to a constraint, Exercise 17.6.9.
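The statement that, for fixed $|d\mathbf{r}|$, the change $d\varphi$ is largest when $d\mathbf{r}$ is parallel to $\nabla \varphi$ can be illustrated by a brute-force scan over directions. This is only a sketch: the field $\varphi = xy + z^2$, the sample point, the step length, and the 2-degree angular grid are all assumptions made for the illustration.

```python
import math

def grad_phi(x, y, z):
    # Analytic gradient of the hypothetical field phi = x*y + z**2.
    return (y, x, 2.0 * z)

def dot(a, b):
    return sum(s * t for s, t in zip(a, b))

g = grad_phi(1.0, 2.0, 0.5)                 # gradient at the point (1, 2, 0.5)
g_norm = math.sqrt(dot(g, g))
g_hat = tuple(c / g_norm for c in g)        # unit vector along grad phi

step = 1e-3                                 # fixed length |dr|
best_dphi, best_dir = -math.inf, None
for i in range(0, 181, 2):                  # polar angle, degrees
    for j in range(0, 360, 2):              # azimuthal angle, degrees
        th, ph = math.radians(i), math.radians(j)
        n = (math.sin(th) * math.cos(ph),
             math.sin(th) * math.sin(ph),
             math.cos(th))                  # unit vector giving the direction of dr
        dphi = step * dot(g, n)             # d(phi) = (grad phi) . dr
        if dphi > best_dphi:
            best_dphi, best_dir = dphi, n

print(best_dir, g_hat)
print(best_dphi, step * g_norm)
```

Within the resolution of the grid, the direction that maximizes $d\varphi$ coincides with the direction of the gradient, and the maximum change approaches $|\nabla \varphi|\,|d\mathbf{r}|$.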

EXAMPLE 1.6.2

As a specific example of the foregoing, and as an extension of Example 1.6.1, we consider the surfaces consisting of concentric spherical shells, Fig. 1.17b. We have

$$\varphi(x, y, z) = (x^2 + y^2 + z^2)^{1/2} = r_i = C_i,$$

where $r_i$ is the radius equal to $C_i$, our constant. $\Delta C = \Delta \varphi = \Delta r_i$, the distance between two shells. From Example 1.6.1

$$\nabla \varphi(r) = \mathbf{r}_0 \frac{d\varphi(r)}{dr} = \mathbf{r}_0.$$

FIG. 1.17a Gradient

FIG. 1.17b Gradient for $\varphi(x, y, z) = (x^2 + y^2 + z^2)^{1/2}$, spherical shells: $(x^2 + y^2 + z^2)^{1/2} = r_2 = C_2$, $(x^2 + y^2 + z^2)^{1/2} = r_1 = C_1$

The gradient is in the radial direction and is normal to the spherical surface $\varphi = C$.

The gradient of a scalar is of extreme importance in physics in expressing the relation between a force field and a potential field:

$$\text{force} = -\nabla(\text{potential}). \tag{1.59}$$

This is illustrated by both gravitational and electrostatic fields, among others.

Readers should note that the minus sign in Eq. 1.59 results in water flowing

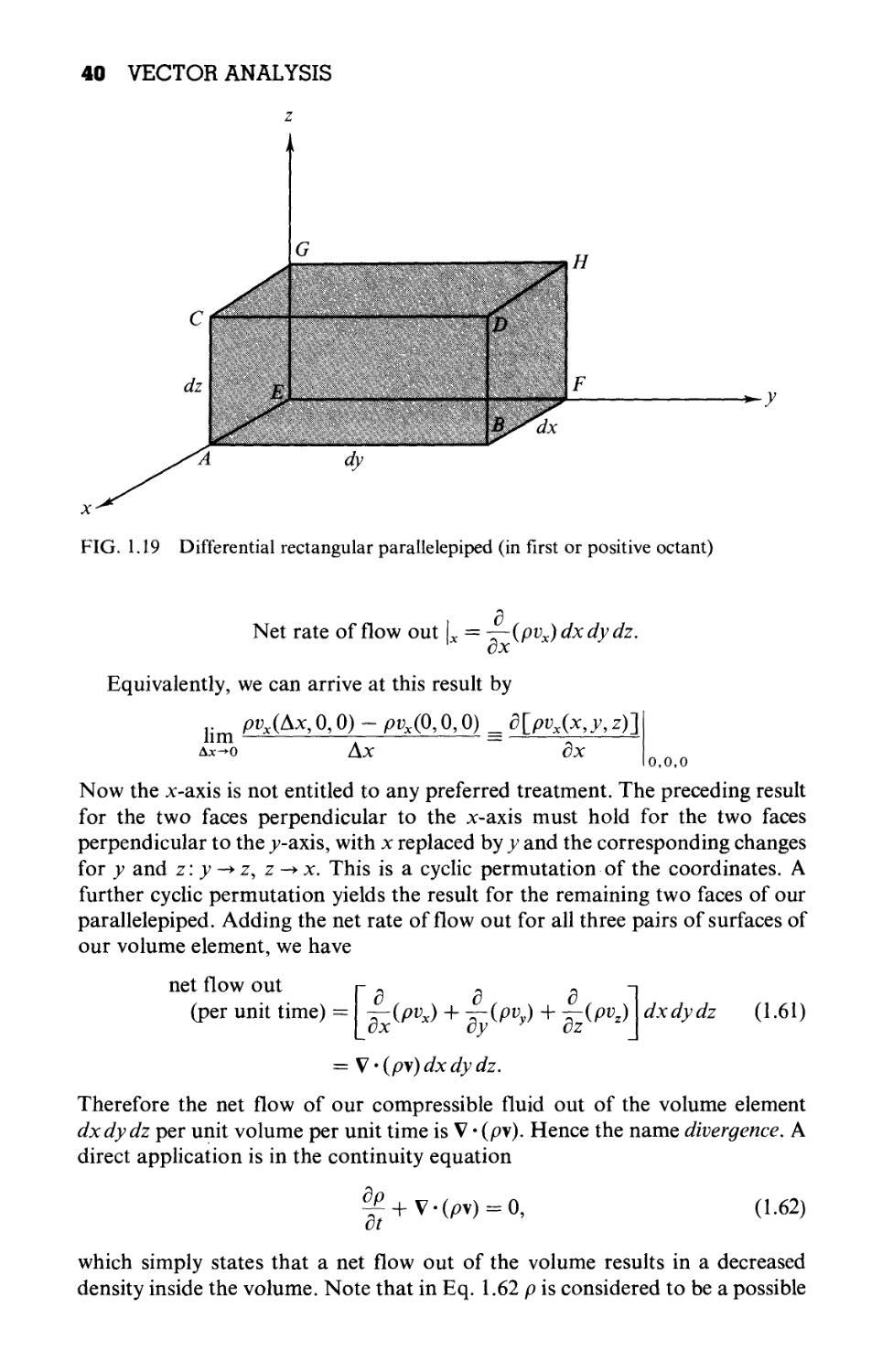

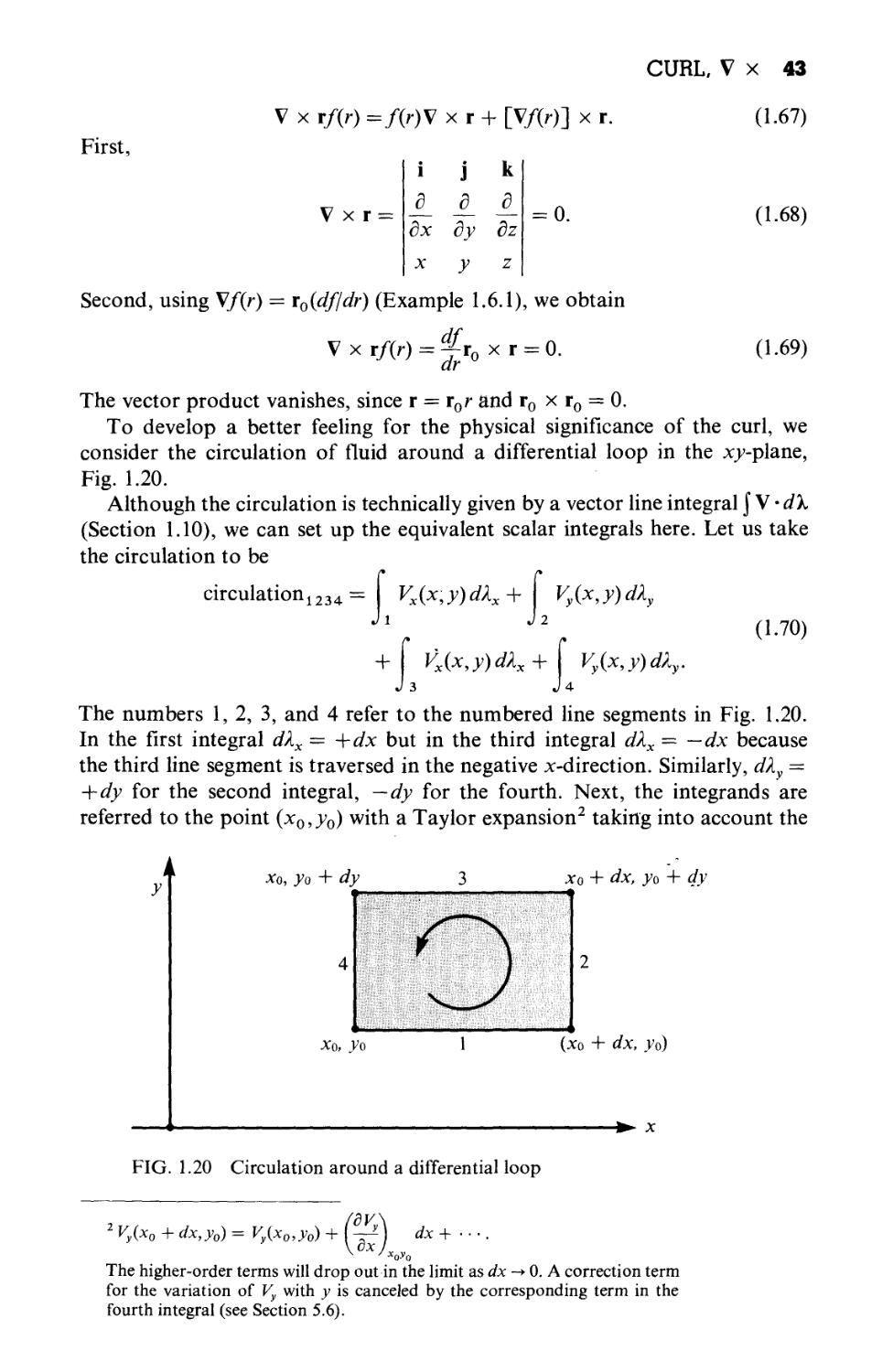

DIVERGENCE, V • 37

downhill rather than uphill! We reconsider Eq. 1.59 in a broader context in

Section 1.13.
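To make the relation force = -(gradient of potential) concrete, the sketch below applies it to an inverse-distance potential of the gravitational or electrostatic type. The constant `K`, the sample position, and the finite-difference step are hypothetical values picked for the illustration; the gradient is estimated numerically and compared with the closed form that follows from Example 1.6.1.

```python
import math

K = 2.0  # hypothetical strength constant (for example G*M*m); illustrative only

def potential(x, y, z):
    # Attractive inverse-distance potential, -K / r.
    return -K / math.sqrt(x * x + y * y + z * z)

def force(x, y, z, h=1e-6):
    """force = -grad(potential), with the gradient taken by central differences."""
    p = (x, y, z)
    f = []
    for i in range(3):
        up, dn = list(p), list(p)
        up[i] += h
        dn[i] -= h
        f.append(-(potential(*up) - potential(*dn)) / (2.0 * h))
    return tuple(f)

pos = (1.0, 2.0, 2.0)                           # sample position, r = 3
F = force(*pos)
r = math.sqrt(sum(c * c for c in pos))
expected = tuple(-K * c / r**3 for c in pos)    # closed form: -K r0 / r^2
print(F)
print(expected)
```

The computed force points back toward the origin, that is, toward lower potential, which is the "downhill" direction fixed by the minus sign in Eq. 1.59.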

EXERCISES

1.6.1 If $S(x, y, z) = (x^2 + y^2 + z^2)^{-3/2}$, find

(a) $\nabla S$ at the point (1,2,3);

(b) the magnitude of the gradient of $S$, $|\nabla S|$ at (1,2,3);

and

(c) the direction cosines of $\nabla S$ at (1,2,3).

1.6.2 (a) Find a unit vector perpendicular to the surface

$$x^2 + y^2 + z^2 = 3$$

at the point (1,1,1).

(b) Derive the equation of the plane tangent to the surface at (1,1,1).

ANS. (a) $(\mathbf{i} + \mathbf{j} + \mathbf{k})/\sqrt{3}$,

(b) $x + y + z = 3$.

1.6.3 Given a vector $\mathbf{r}_{12} = \mathbf{i}(x_1 - x_2) + \mathbf{j}(y_1 - y_2) + \mathbf{k}(z_1 - z_2)$, show that $\nabla_1 r_{12}$ (gradient with respect to $x_1$, $y_1$, and $z_1$ of the magnitude $r_{12}$) is a unit vector in the direction of $\mathbf{r}_{12}$.

1.6.4 If a vector function F depends on both space coordinates (x, y, z) and time t, show