HACKER’S MANUAL

>2021

EXPERT TIPS & ADVICE

ADVANCE YOUR LINUX SKILLS

• THE KERNEL • NETWORKS

• SERVERS • HARDWARE • SECURITY

Digital

Edition

164 PAGES OF TUTORIALS

ENHANCE YOUR KNOWLEDGE WITH IN-DEPTH PROJECTS AND GUIDES

Welcome to the 2021 edition of the Hacker's Manual! You hold in your hands 164 pages of Linux hacking tutorials, guides and features from the experts at Linux Format magazine - the home of open source software. In this edition we've gone in hard for security. You’ll find guides to securing servers, getting a grounding in hacking, using security tools such as Kali and Fedora Security Lab, alongside solid features explaining how to protect your privacy online using established tools like Tails. But we shouldn't live in fear! Hacking is monumental fun. Never mind setting up and playing with Linux, we take a look at hacking tablets, media servers, virtual machines, cloud servers, multi-booting with Grub and much more. If you're a little timid when it comes to the Terminal we even have a meaty reference section at the back.

So dive in and enjoy!

FUTURE

Future PLC Quay House, The Ambury, Bath BA1 1UA

Editorial

Editor Dan Peel, Designer Steve Dacombe, Senior Art Editor Andy Downes, Head of Art & Design Greg Whitaker, Editorial Director Jon White

Photography

All copyrights and trademarks are recognised and respected

Advertising

Media packs are available on request. Commercial Director Clare Dove

International

Head of Print Licensing Rachel Shaw

Circulation

Head of Newstrade Tim Mathers

Production

Head of Production Mark Constance, Production Project Manager Matthew Eglinton, Advertising Production Manager Joanne Crosby, Digital Editions Controller Jason Hudson, Production Managers Keely Miller, Nola Cokely, Vivienne Calvert, Fran Twentyman

Printed by William Gibbons, 26 Planetary Road, Willenhall, West Midlands, WV13 3XT

Distributed by Marketforce, 5 Churchill Place, Canary Wharf, London, E14 www.marketforce.co.uk Tel: 0203 787 9001

Distros

The distro is the core of Linux, so make sure you get the right one.

Ubuntu 20.04

Get the lowdown on the latest Ubuntu release and discover its secrets.

The best distros

We look back at how distros came to be and the best distros in history!

Inside the Linux kernel

How did Linux come to be? What makes it tick? We answer all this and more.

Compile the kernel

It's the ultimate nerd credential: compile your own custom kernel. Here’s how...

The ultimate home server

We guide you through building, configuring and using an all-singing home server.

IPFire firewall

Build a wall, not of tacos, but of fire! Keep out hackers with a dedicated firewall.

Rescatux repair

Explore one of the most famous rescue and repair systems powered by Linux.

Security

The best defence is a good offence, but also a good defence.

Protect your privacy

Leverage Linux to fight off the invasion of your privacy using Tails and more.

Kali Linux

We take you inside the ultimate hacking toolkit and explain how to use it in anger.

Secure chat clients

Chat online without anyone snooping in on what you have to say.

Lock down Linux

We outline the essentials of locking down your Linux boxes for secure networking.

Fedora security lab

There's more than one way to skin a cat, so try out the Fedora hacking lab.

Key management

Learn how to create a good GnuPG key and keep it safe from online thieves.

Software

Discover the most powerful Linux software and get using it.

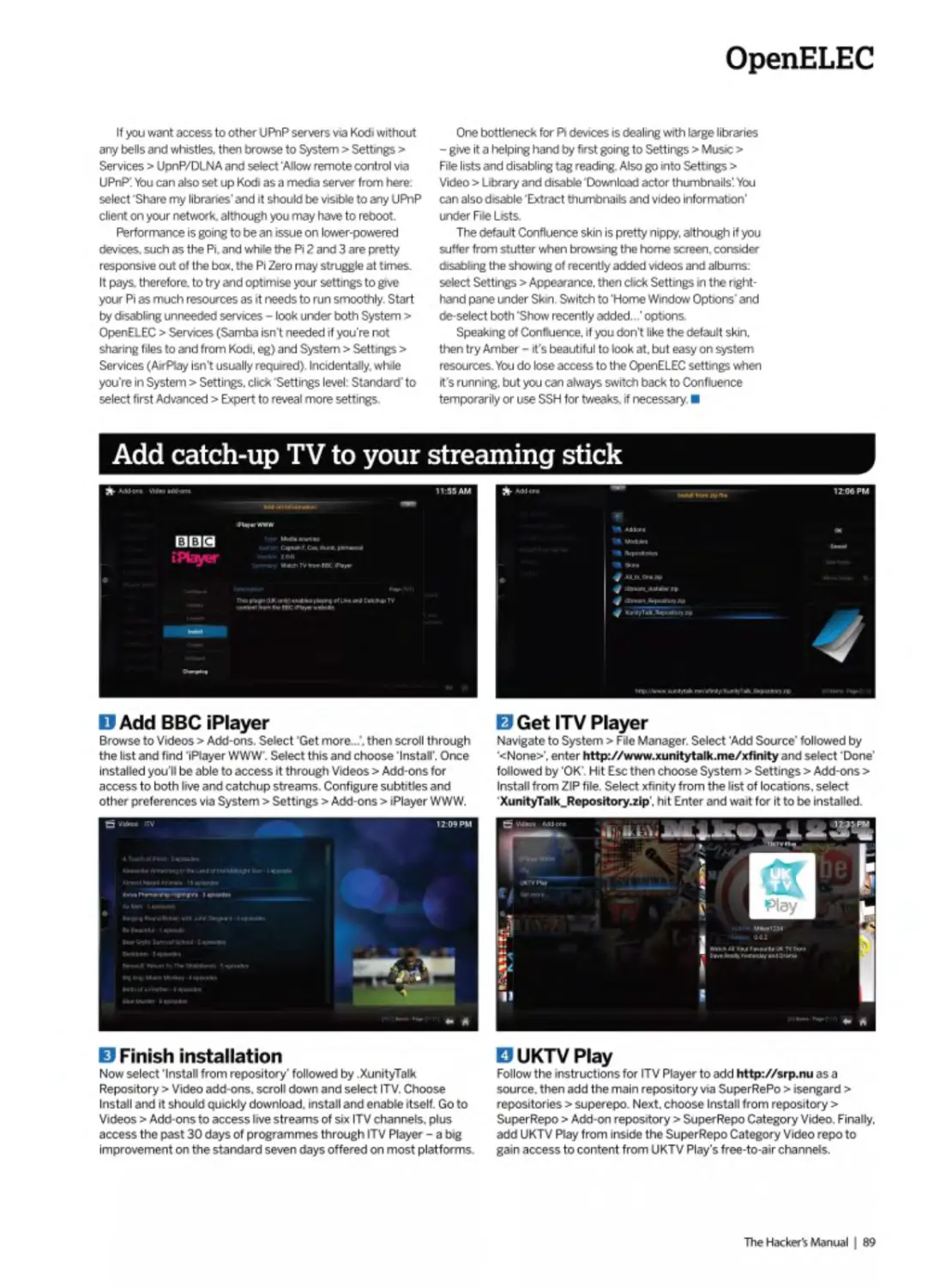

OpenELEC

Get to grips with the media system for desktops and embedded systems.

VirtualBox

Ensure you get the best out of your virtual systems with our essential guide.

NextCloud

The breakaway, all-new cloud storage and document system is live for all.

Nagios

Industry-level system monitoring so you can track all your Linux PCs.

Octave

Get to grips with the high-end scientific and mathematical language.

Inside KDE 5

Discover the building blocks that help build the prettiest desktop and apps around.

Hacking

Take your Linux skills to the next level and beyond.

Learn to hack

Discover the basics of hacking to help keep your systems safe.

Linux on a Linx tablet

Get Linux up and running on a low-cost Windows tablet without the hassle.

Multi-boot Linux

Discover the inner workings of Grub and boot lots of OSes from one PC.

Libreboot laptop

Kick 'the man' out of your hardware and install the Libreboot firmware.

LTTng monitoring

Get to know what all your programs are up to by tracing Linux app activity.

USB multi-boot

We explain how you can carry multiple distros on a single USB drive.

The terminal

Feel like a 1337 hacker and get to grips with the powerful terminal.

Get started

The best way to use the terminal is to dive in with both feet and start using it.

Files and folders

We explain how you can navigate the file system and start manipulating things.

Edit config files

Discover how you can edit configuration files from within the text terminal.

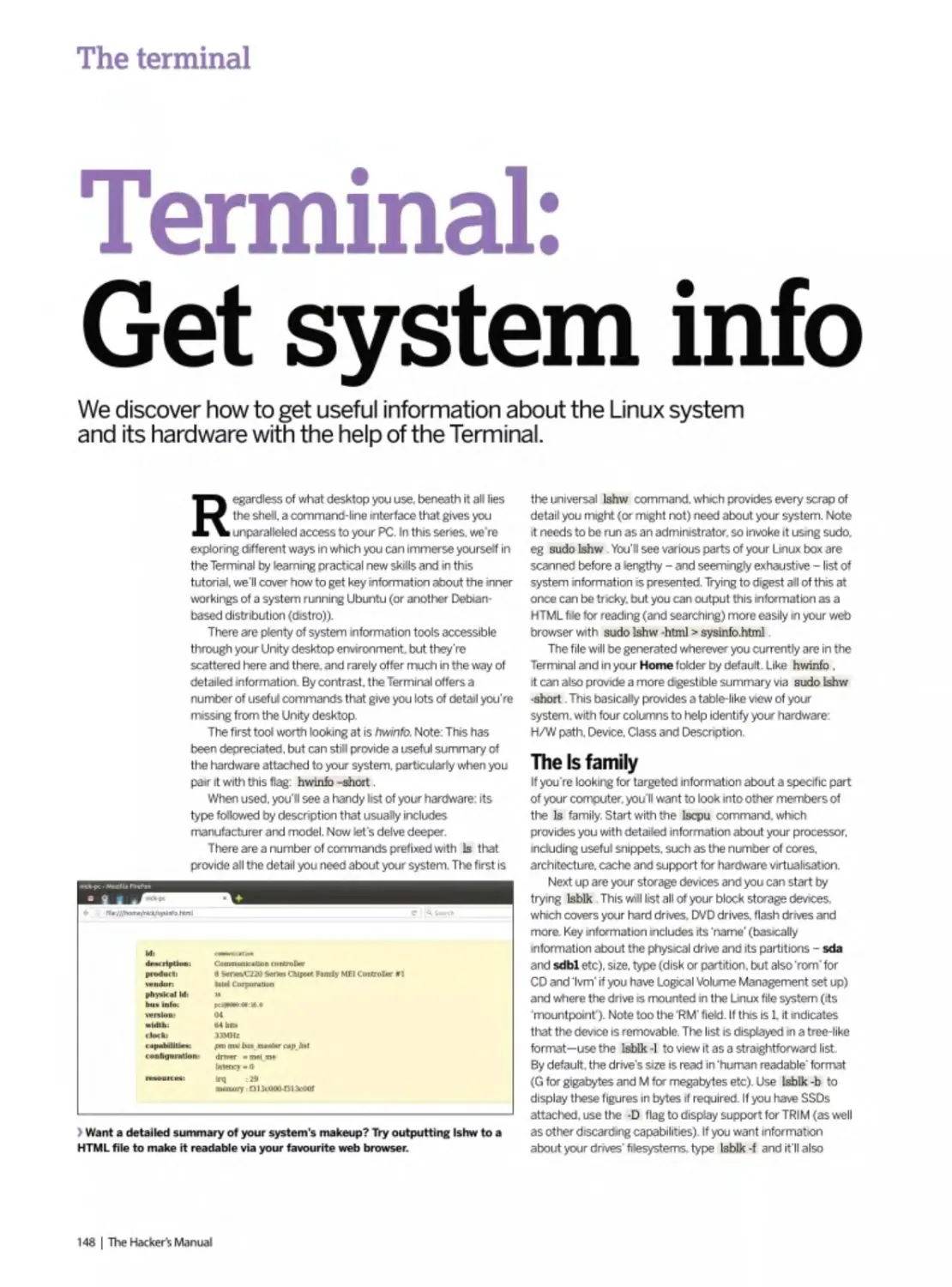

System information

Interrogate the local system to discover all of its dirty little secrets.

Drive partitions

Control, edit and create hard drive partitions and permissions.

Remote X access

Set up and access remote GUI applications using X11.

Display control

Sticking with the world of X11 we take some randr for resolution control.

Core commands

20 essential terminal commands that all Linux web server admins should know.

Distros

Because if there was only one form of Linux, we’d be bored

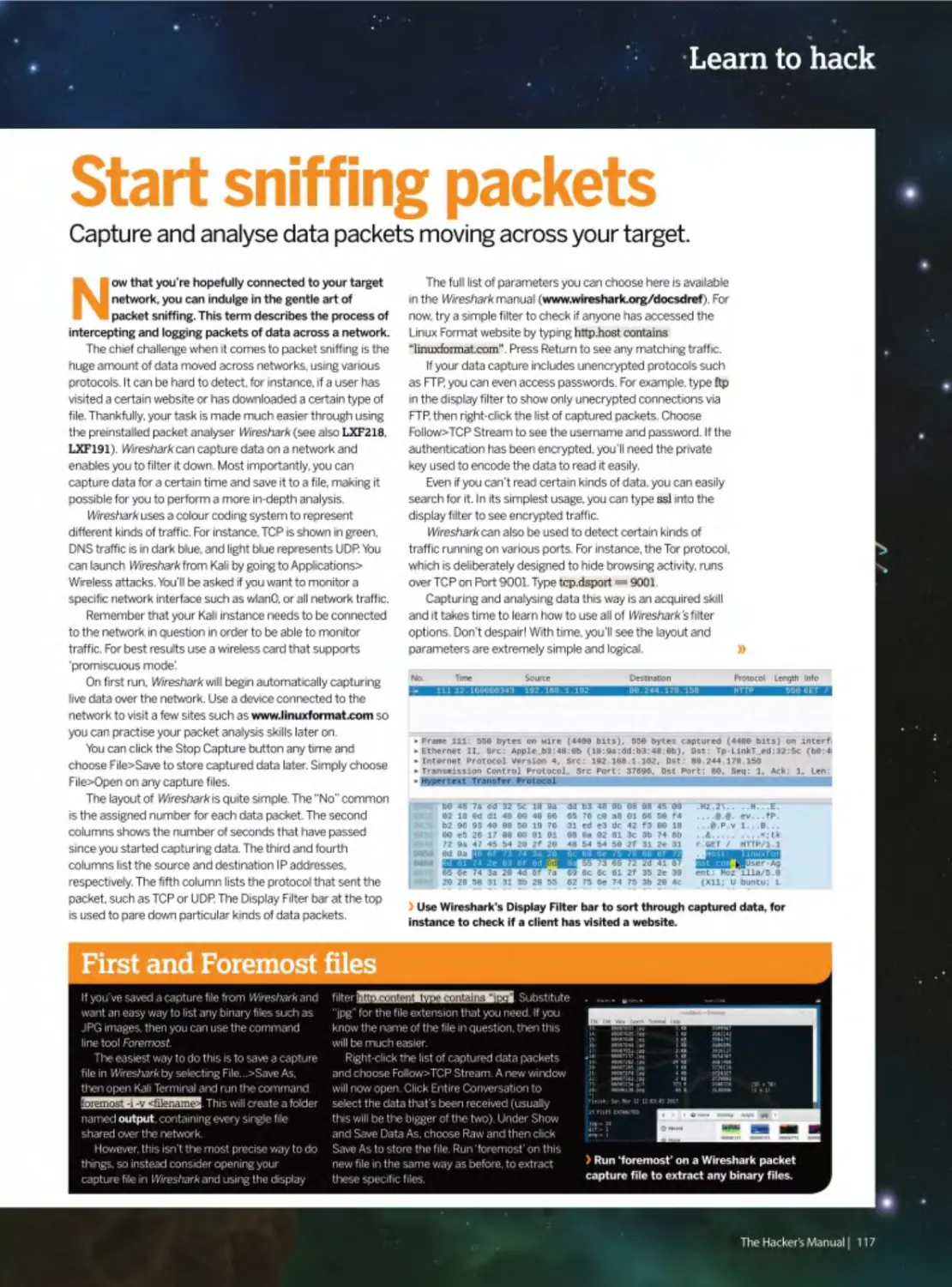

Ubuntu blasts off!

Ubuntu is back and it's better than ever. Read on to find out why Jonni Bidwell is out of this world over this new release.

Canonical's latest Ubuntu release, “Focal Fossa”, hit the mirrors at the end of April 2020. So by the time you read this thousands of people will have downloaded it, installed it and (we'll wager) been downright impressed with what it has to offer.

If you haven't yet tried it, then you're in for a treat. We'll show you what's new and what you can do with Canonical's finest. And if you've never tried Linux before, Ubuntu 20.04 LTS is a great place to start.

You can try it right now (well, in the time it takes you to download and write it to a USB stick) without interfering with your current setup. We've got guides to trying Ubuntu in a virtual machine and, when you're ready, installing it on to bare metal.

There are all kinds of reasons to switch to Linux, and with the new Ubuntu release comes one more. Be the envy of your proprietary-OS-using friends. Never see your computer crippled by updates again. Enjoy a desktop that's not an advertising space. Explore the phenomenal selection of free software, which more often than not is at least as good as commercial offerings, and in many cases is superior.

Video editing, 3D modelling, high-powered number crunching and even AAA gaming are all just a few clicks away. If your desktop habits are more sedentary, Ubuntu's got you covered too. Fast web browsing, easy email and fuss-free media playing are all yours out of the box. Read on to see just how powerful it really is.

Focal fossa bossa nova

It's hard to know what to focus on with this new release, so here's a quick summary of Focal Fossa’s highlights.

Naturally everything under the Ubuntu hood has been refreshed. The new 5.4 kernel brings support for new hardware and fixes for old. What most home users will be interested in is the desktop, and you'll find Gnome 3.36 looks and behaves better than ever. It seems like there's been a two-year game of bug whack-a-mole, both within Gnome and Ubuntu's take on it, to address niggling performance and memory issues. But with this release, it's smooth like the inferior type of peanut butter.

“The darkening” has finally come to Ubuntu. Like so many desktops and programs it now offers a dark mode, which some people find easier on the eyes. Light or dark, we think you'll love the new bold icons and Yaru theme. Gnome's application folders feature, by which you can drag icons in the Applications menu on top of one another to make a folder, is less clumsy now. And if you remove all but one item from a folder, then the singleton is automatically promoted back to the main menu and the folder removed. Tidy.

If you have a HiDPI display you'll be pleased to hear that fractional scaling is finally here. And (unlike in 19.10) you don't need to invoke Gsettings from the command line to use it. Previously, only integer scaling was available, which meant users often had to choose between tiny text and giant text. Now the Goldilocks Zone can be enabled through a simple switch in Settings>Screen Display, but do note the small print warning about increased power usage and decreased sharpness. At time of writing, there seem to be issues using this with the proprietary Nvidia driver, so keep an eye on this bug: https://bugs.launchpad.net/ubuntu/+source/gnome-control-center/+bug/1870736.

Speaking of Nvidia, its drivers are included on the install medium. No need to resort to a PPA or (shudder) downloading them from Nvidia's website. Gamers will be pleased to hear that Feral's GameMode is part of a standard install. This can rejig CPU frequency governors, schedulers and other frobs to boost framerates. And in case you're concerned about the 32-bit library palaver, don't be: everything needed by Steam, Wine and Lutris is still in the repos. If you're running old 32-bit applications you might experience difficulties, so test these before getting rid of your 18.04 install. There's an opportunity to help the community here if you find one that doesn't work - do your bit and package it up as a Snap. Learn more from this blog post: https://snapcraft.io/blog/how-to-preserve-old-software-with-snaps.

> Ubuntu's delightful wallpapers all have a 'focal' theme goin' on.

The Ubuntu Software tool now serves Snaps as the default. Indeed, most applications we searched for were only available in this format, but some packages can be installed from the traditional Ubuntu repos. Of course, you can still install packages from the command line with apt if you're not sold on the 'app store' mentality. We predict Snaps (and Flatpaks) are going to gain serious traction in the coming months and years.

As you'll see from the Software application, a number of tools are now packaged as Snaps. And this number will increase as developers side-step the old method of relying on distros to package up their offerings.

Downloading the files

Usually, at this point we'd be telling people to fire up our DVD and get started with Ubuntu right now. But there is no physical DVD with the bookazine, and the 'free download' we’re offering features Fedora and Manjaro. The thinking (there was thinking behind this decision, honest!) was that since we're telling people to download something anyway, they may as well download Ubuntu straight from the official site: https://ubuntu.com.

Of course, we're sorry for those people who don't have the connectivity to go downloading a couple of gigabytes on a whim. But these are a small minority of users, and these are exceptional times. This whole kerfuffle might serve to illustrate an important point: the distros we would normally put on our DVD are exactly the same as the ones we're telling you to download. They might hide behind a retro, VGA-resolution menu, and sometimes there are sneaky hacks to make them boot that way, but once that menu is gone they behave exactly as if you had downloaded the ISO in question and written it to a DVD yourself.

We tell you this not because we don't want to make DVDs in the future - it's the closest thing Jonni gets to a break after all - but rather to empower you to go forth in the spirit of enquiry, download distros and seek out desktop perfection.

Installing Ubuntu

You’ve got to try it to love it, but you’ve got to install it to really feel its power.

Just uttering the phrase 'Installing Linux' will send a shiver down the spines of most humans, but it's not as terrifying as it used to be. Don't get overzealous, though: it's still possible to wipe out your existing OS by pushing the wrong button, and you should spend a couple of hours trying out Ubuntu before committing to installing it.

Running the live environment, or installing to a virtual machine, will give you a handle on the look 'n' feel of this pinnacle of free software. There are a couple of hoops to jump through before we get there though. You can download the Ubuntu install image from whatever operating system you're comfortable with. Fire up your browser and head to https://ubuntu.com/download/desktop and press the Download button. If you’re feeling charitable you can also make a donation to Canonical and choose how the company invests it, but this is optional. We'd recommend making a cup of tea while the download completes. You should now have a 2.5GB disk image (ISO) file which you can write to a DVD using the software of your choice (for example, Toast on macOS, CDBurnerXP on Windows, Brasero on Linux). We know that many PCs and most laptops don’t have optical drives nowadays, but that's okay - the image can be written to a USB stick, which will probably boot much faster and certainly will be much quieter than booting from DVD. There are programs like Unetbootin that can automatically download distros and set them up in all kinds of fancy ways, but we prefer to keep things simple so here we're recommending the cross-platform Etcher tool. Grab it from https://etcher.io, start it up, plug in a USB drive (make sure there’s nothing precious on it because its contents will all be destroyed), and away you go.

USING THE ISO IMAGE “We know that many PCs and most laptops don’t have optical drives nowadays, but that’s okay - the image can be written to a USB stick.”

Boot from your ISO file

You’ll need to figure out how to make your PC boot from USB or optical media before you can enjoy Ubuntu. You have two options. One is to open any provided Boot Menu. Not all devices offer this, and the key used varies: HP systems use F9, Dell and Lenovo use F12, older AMIBIOS-based systems use F8 and Award-based systems use F11. You need to slowly tap the key just after switching on the system. Select any CD-ROM/Optical drive option and you're good to go.

If no boot menu is available, the other option is to select the order of boot devices within the BIOS/UEFI settings. A message should appear during the system start saying which key to press. Just as with the boot menu, pressing either Del (the most common), F1, F2, Esc or a 'special' maintenance key should give access. In the BIOS locate the Boot Device menu and ensure the DVD/optical drive is first in the list. Save and reboot!

You can explore the live environment risk free, but for maximum pleasure and performance install Ubuntu to your hard drive or SSD using the handy six-step guide below. If you have one we’d recommend installing Ubuntu to its own device, rather than resizing the Windows partition per steps one and two. It should all work fine, and in fact it's possible to carry out those steps from the Ubuntu installer. But we've been around long enough to know things don't always go as they should. So back up any important data before proceeding. If you have a spare device, just select it and use the Erase disk and install Ubuntu option in step four. Molto semplice. If you want to play it safe, another option is to install Ubuntu to a virtual machine using Oracle's VirtualBox and the guide below.

Installing in VirtualBox

1

Get VirtualBox

Head to www.virtualbox.org and download VirtualBox 6 for your operating system, be that Windows or OS X. Install it and be aware you’ll need at least 20GB of drive space to store the virtual OS file. You’ll also need the Ubuntu ISO file from www.ubuntu.com/desktop/download. Once VirtualBox is installed, start it, click the New button and call the new machine Ubuntu.

2

Create a machine

Choose Ubuntu and the bits should match the ISO you downloaded, then click Next. Under Memory we'd recommend 2,048, but if you have an 8GB PC 4,096 is best. You can leave all the rest as default settings, apart from the dynamic hard drive size. The default is 8GB, but we'd suggest at least 32GB if you can spare it. Finish and click Start to get going.

3

Starting virtual Ubuntu

A prompt will appear asking for a disc - locate the Ubuntu ISO file and click Start. Ubuntu will start, and once loaded you're free to try out Ubuntu or use the Install icon to properly install it to the virtual machine. For extended use, in the virtual machine’s settings under Display, you'll want to enable 3D acceleration and allocate 16MB.
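The VirtualBox walkthrough's sizing advice boils down to a couple of simple rules, sketched here in Python. This is only an illustration: the function name is hypothetical, and treating any host with 8GB or more as an '8GB PC' is our assumption rather than something the guide spells out.

```python
def suggested_vm_settings(host_ram_gb, roomy_disk=True):
    """Return memory (MB) and disk (GB) figures following the walkthrough's advice.

    Assumption: hosts with 8GB of RAM or more get the larger 4,096MB allocation.
    """
    # 2,048MB is the baseline recommendation; 4,096MB suits an 8GB host.
    memory_mb = 4096 if host_ram_gb >= 8 else 2048
    # VirtualBox defaults to an 8GB dynamic disk; the guide suggests 32GB if you can spare it.
    disk_gb = 32 if roomy_disk else 8
    return {"memory_mb": memory_mb, "disk_gb": disk_gb}


if __name__ == "__main__":
    print(suggested_vm_settings(8))
```

Whatever figures you settle on are typed into the Memory and hard drive size fields of the New machine wizard.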

> Modern UEFI interfaces should make it easy to change the boot device. This one even makes it possible for you to take screenshots, which is frankly witchcraft.

Install Ubuntu with Windows

1

Make room

To create an empty partition for your Ubuntu installation, you’ll first have to squeeze your existing Windows partition. Fire up the Disk Management tool in Windows, and right-click your main partition, which is typically assigned the drive letter C. Then select the Shrink Volume option from the pop-up menu.

2

Shrink Windows

This brings up the Shrink dialog that shows you the total size of the hard drive and the maximum amount of space that you can squeeze out of the selected partition. To create a new partition, specify the size of the partition in the space provided in megabytes and click Shrink to start the process.

3

Updates and plugins

After your computer boots from the Ubuntu installation medium, it'll display a checklist. Toggle the two available checkboxes on this screen. The first checkbox option will fetch any available updates from the Internet, and the other will install the plugin required to play MP3 content, along with Wi-Fi firmware.

4

Use free space

In the screen labelled Installation type, toggle the 'Something else' radio button to manually partition the disk. Ubuntu will now show you a list of partitions on the hard drive. Select the one labelled Free Space and click the plus sign (+) to create a partition out of this space you freed up in Windows.

5

Define partitions

In the Create partition box enter the size for the Ubuntu partition. Then use the 'Mount point' pull-down menu to select the / option. If you like, you can create a separate home partition (if you want to keep user files and system files separate) in exactly the same way. Just select /home from the menu.

6

Localise and personalise

And that's it. The installer will now start the process of installing Ubuntu. While the files are being copied to the hard drive in the background, it'll ask about your locale. In the last screen you'll be asked to enter your desired login and password details, as well as a name for your computer.
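The Shrink dialog in step two wants its figure in megabytes, which is easy to fumble when you're thinking in gigabytes. Here's a minimal Python sketch of the arithmetic, assuming the binary convention of 1,024MB to the gigabyte that Windows' Disk Management uses. The function name is our own invention, and the 32GB figure is purely illustrative.

```python
MB_PER_GB = 1024  # assumption: binary gigabytes, as Windows reports them


def shrink_amount_mb(gigabytes):
    """Return the number of megabytes to enter in the Shrink dialog."""
    if gigabytes <= 0:
        raise ValueError("partition size must be positive")
    return int(gigabytes * MB_PER_GB)


if __name__ == "__main__":
    # Freeing up 32GB for Ubuntu means asking Windows to shrink by this many MB.
    print(shrink_amount_mb(32))
```

Remember that Windows will refuse to shrink by more than the maximum it reports in the dialog, whatever figure you calculate.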

Desktop deep dive

Here’s a quick guide to Ubuntu’s top-notch take on the Gnome desktop.

Coming from Windows or macOS, Ubuntu at first glance appears dramatically different, and perhaps even a little daunting. But don't worry, soon it will feel just like home. Your first port of call might be the Applications menu, which you can access by clicking in the top left of the screen, or by pressing the Super (Windows) key. This shows any running applications, or if there aren’t any will show frequently used ones. So the first time you click it this view will be barren. You'll find a grid of installed applications (pictured in the annotation below, which hopefully helps you get your bearings) by clicking in the bottom right, so have a nosey around to see what interests you.

> If you can't banish Facebook, you can at least contain it thanks to this excellent Firefox plugin.

The standard install includes everything you need to get started, and tries to avoid bundling things that you don't. Perhaps we should have mentioned earlier the minimal install option if you don’t need an office suite, games or other computing fripperies. This saves you around half a gigabyte.

Sooner or later you'll want to add an application or several, and the easiest way to do this is through the Ubuntu Software shortcut in the dock (the one that looks like an orange briefcase). One of our first additions is the Gnome Tweaks tool, which makes it possible for you to customise the desktop's appearance and behaviour in lots of ways. Depending on who you believe, this either should not exist at all, or should be included by default on the Gnome desktop. We'll let you be the judge: just search for tweak in Ubuntu Software, click the green Install button, and confirm with your password.

Getting to Gnome you...

1 Activities

Clicking here (or pressing the Super key) launches the Activities view. Helpfully, it shows previews of running programs.

2 Search Box

Start typing in the Activities view (there's no need to click in the box) to search Apps, Docs and more.

3 Calendar & Notifications area

Click here to control music, see any appointments (if you've connected an appropriate service) or activate Do Not Disturb mode.

4 Status Menu

Network settings (including VPN), volume controls and application indicators can be found here. The button in the bottom left launches the all-important Settings tool.

5 Applications menu

Click here to open/close a view of frequently used applications. You can see all installed applications from the selector at the bottom of this menu.

6 Dock

Launch your favourite applications from here. Right-clicking a running application (denoted by a dot) enables you to add it to the dock for easier access.

> KDE Plasma is traditional in the sense that there's a menu in the bottom left, but it's sleek and modern looking, too.

The installer will have prompted you to add any online accounts you have, such as Facebook or Google. These will integrate with your desktop calendar and file manager as appropriate, so you can browse Google Drive or receive Facebook notifications. If you didn't add these accounts earlier you can always add them by going to Settings>Online Accounts. You'll probably find you need to log out and log back in before calendars and other things sync properly. If you have successfully connected accounts and are tired of all these notifications you can remove them from here too. Alternatively, the Do Not Disturb switch in the calendar panel will silence these and hopefully keep you focused.

Browser wars

We’re particularly enamoured with Firefox 76 and its stylish new interface. If you’re coming from the proprietary world then Google Chrome may be your browser of choice, and if you really want that then it’s straightforward enough to install (and its open source sibling Chromium is even easier). But we think you should give Firefox a chance, if only for the privacy-preserving Facebook Container add-on. And indeed the Multi-Account Containers add-on, because tracking on the web is out of control nowadays.

Often it’s the hate-ridden Windows updates that cause people to switch to Linux. Ubuntu is much more considered in its updates. There's an unattended-upgrades service that applies urgent fixes in the background, but you’ll be told (not forced) about general package updates. These can be applied at a convenient time from the Software application, or the command line. Contrary to what you might read elsewhere, it's entirely possible to use desktop Linux without memorising a bunch of strange command line incantations or having a PhD in regular expressions. It's hard to overestimate how powerful the terminal is, but for desktop use you’d be better off memorising a couple of keyboard shortcuts for Gnome instead:

> The Xubuntu flavour is great for lower-powered systems, or for those who prefer their desktops to stay minimal.

> The Gnome session lacks Ubuntu's characteristic left sidebar. And the darker colours bring looming deadlines into stark relief.

» Super (Windows key) - Bring up the Activities view

» Super-Left/Right - Tile current application left or right (so it fully occupies one half of the screen)

» Super-Up/Down - Maximise/Restore current application

» Super-PgUp/PgDown - Switch virtual desktops

» Super-Shift-PgUp/PgDown - Move current application to next/previous virtual desktop

KEEP THINGS UP TO DATE “Often it’s the hate-ridden Windows updates that cause people to switch to Linux. Ubuntu is much more considered in its updates.”

Favourite flavour

If you don't like Ubuntu’s desktop, there are other flavours available. Kubuntu, powered by the slick KDE Plasma desktop, has a slightly more Windows-like feel. Visit https://ubuntu.com/download/flavours to see the whole selection. You don't need to install a whole new distro just to try a new desktop though. For example, if you want to try pure Gnome (without Ubuntu's tweaks) then fire up the Terminal app and type the following:

$ sudo apt install ubuntu-gnome-desktop

You can then choose the Gnome session from the login screen: just click the cog icon in the bottom right and choose Gnome. Unlike the Ubuntu session, this uses the newer Wayland protocol for talking to the displays. This should work well (at least with open source drivers) by now, but if you run into difficulties try Gnome on Xorg instead. Other desktop environments can be installed the same way too, via the kubuntu-desktop, ubuntu-mate-desktop, ubuntu-budgie-desktop and other such packages. Once you've settled on your favourite desktop, you may consider reinstalling the corresponding flavour in the name of tidiness. Each desktop will install its own core applications, so there will be some duplication.
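If you find yourself hopping between desktops, it can help to keep the package names straight. The following Python sketch simply maps each desktop to the metapackage named above and prints the matching apt command. The dictionary keys and the function name are hypothetical labels of our own, and nothing is installed until you run the printed command yourself.

```python
# Desktop label -> metapackage, using only the packages named in the text.
DESKTOP_PACKAGES = {
    "gnome": "ubuntu-gnome-desktop",
    "kde": "kubuntu-desktop",
    "mate": "ubuntu-mate-desktop",
    "budgie": "ubuntu-budgie-desktop",
}


def install_command(desktop):
    """Build the apt command that installs the chosen desktop's metapackage."""
    package = DESKTOP_PACKAGES[desktop.lower()]  # KeyError for unknown desktops
    return " ".join(["sudo", "apt", "install", package])


if __name__ == "__main__":
    for name in DESKTOP_PACKAGES:
        print(install_command(name))
```

Pasting one of the printed lines into the Terminal does the same job as the Gnome example earlier.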

Under the hood

There are subtle changes and understated improvements in Ubuntu 20.04 that really deserve mentioning...

We get it. A new Ubuntu release, even an LTS, isn't the cause célèbre it once was. Even management get that now. Daubing the cover in orange and superlatives like 'must-have' and 'revolutionary new features' (those are good ideas! - Ed) isn't going to fool anyone, especially savvy Linux users.

We've always said that this lack of ground-breaking change is a good thing. It shows Ubuntu has reached such a level of maturity that refinement, and not radical rewriting of the rules, is the optimal path. We also understand that there are plenty of other distros suitable for beginners (and let's stress here that Ubuntu is popular with professionals too), and maybe some of those don't get the attention that Ubuntu does. All that aside, Ubuntu remains one of the most popular distros among our readers, and indeed Linux users in general.

In this new outing you'll find support for AMD's Navi 12 and 14 GPUs as well as their new APUs, and the open source Nouveau driver has improved colour management. There's support for Intel's Comet Lake chips and initial support for their 11th-generation Tiger Lake architecture. The Kernel Lockdown security module also debuts in this release, which limits how userspace code (even if it's running as root) can interfere with kernel operations. First proposed by security guru Matthew Garrett some years ago, this is aimed at admins who want to limit the damage a compromised root account can cause.
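If you're curious whether lockdown is active on your own machine, the state can be read from userspace. What follows is a minimal sketch, assuming the kernel exposes the module at /sys/kernel/security/lockdown (the path mainline kernels have used since 5.4); the helper name lockdown_mode is our own invention for illustration.

```shell
#!/bin/sh
# The kernel lists the available lockdown modes on one line and marks the
# active one with square brackets, e.g. "none [integrity] confidentiality".

# lockdown_mode (hypothetical helper): print the bracketed, active mode
# from a line in that format.
lockdown_mode() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)].*/\1/p'
}

# Assumed location of the lockdown interface on the running system.
LOCKDOWN_FILE=/sys/kernel/security/lockdown
if [ -r "$LOCKDOWN_FILE" ]; then
    echo "Kernel lockdown: $(lockdown_mode "$(cat "$LOCKDOWN_FILE")")"
else
    echo "No readable lockdown interface (older kernel, or securityfs not mounted)"
fi
```

A result of none means the module is present but not enforcing anything; integrity and confidentiality are the two stricter modes.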

There are a couple of noteworthy filesystem changes: support for Microsoft's newly (sort of) open sourced exFAT, as well as the speedy VirtIO-FS for sharing directories with virtual machines. Ubuntu also backports lots of features from newer kernels, and one notable addition is support for WireGuard VPNs. In an age of surveillance and dubious sponsored 'best VPN' listicles, this will surely be a boon for privacy. It's early days yet, but we foresee WireGuard being key to demystifying the VPN sphere. It's small, fast and much easier to get your head around than OpenVPN, being much more akin to setting up an SSH server.
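To give a flavour of how little configuration is involved, here is a rough sketch that prints a minimal client config in the format read by wg-quick. The section and key names ([Interface], [Peer], PrivateKey, Address, PublicKey, Endpoint, AllowedIPs) are WireGuard's own; the helper name, tunnel address, hostname and port below are placeholders we made up for illustration.

```shell
#!/bin/sh
# render_wg_conf (hypothetical helper): print a minimal wg-quick style
# config for one interface talking to one peer.
#   $1 = our private key        $2 = our tunnel address (CIDR)
#   $3 = the peer's public key  $4 = the peer's endpoint (host:port)
render_wg_conf() {
    cat <<EOF
[Interface]
PrivateKey = $1
Address = $2

[Peer]
PublicKey = $3
Endpoint = $4
AllowedIPs = 0.0.0.0/0
EOF
}

# With the WireGuard tools installed, a real key pair comes from:
#   wg genkey | tee privatekey | wg pubkey > publickey
# Placeholders are used here so the sketch runs anywhere.
render_wg_conf '<our-private-key>' 10.0.0.2/24 '<peer-public-key>' vpn.example.com:51820
```

Saved as /etc/wireguard/wg0.conf with real keys in place, a file like this would be brought up with sudo wg-quick up wg0. The AllowedIPs line shown sends all IPv4 traffic through the tunnel; a narrower range keeps only selected traffic on the VPN.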

Storage options

Moving into lower userspace, there's systemd 245, which features the new home directory regime systemd-homed. Don't worry though, Ubuntu doesn't use this by default, and to be honest we weren't able to activate it, so it looks like this feature hasn't been built into the package. Again, early days. There's also an experimental option to install using the next-generation ZFS filesystem, whose roots go back to Oracle's Solaris. Licensing conflicts have kept it out of the Linux kernel, and it's not a thing your average user will want. But if you have insane storage, lots of memory and deduplication requirements, ZFS is a mere checkbox in the installer away. Ubuntu's zsys middleware will automatically snapshot filesystems before software updates, so that these can be undone if things go south.

> Two software tools, both alike in dignity in fair Ubuntu where we lay our scene.

There's a new officially supported target to get excited about, the Raspberry Pi (models 2, 3 and 4). Not only is this great for home users, particularly those who want to take advantage of the Pi 3 and 4's Aarch64/ARMv8 hardware, but having Canonical's certification will encourage enterprises to do great things with the Pi. Read more about this on Rhys Davies' post on the official Ubuntu blog at https://ubuntu.com/blog/ubuntu-20-04-lts-is-certified-for-the-raspberry-pi

The certification only applies to Ubuntu Server, and that's the only Raspberry Pi download that's offered through Canonical's website, but it's straightforward to add a desktop, for example, via the xubuntu-desktop package. If you have a Pi 4 and want to take advantage of its extra oomph, why not install KDE Plasma? At the moment the official Gnome desktop isn't supported on the Pi, but don't forget an Ubuntu MATE SD card image is available (from https://ubuntu-mate.org) if you want a ready-to-roll desktop. The MATE desktop is ideal for lower powered devices, or just people who liked Gnome 2. MATE images are also available for UMPCs such as the GPD Pocket and MicroPC.

Flatpaks can't be installed from the Ubuntu Software app, but are available from the command line. If you want to install them GUI style you can install the vanilla Gnome Software application, add the Flatpak plugin and then add the Flathub repo with:

$ sudo apt install gnome-software gnome-software-plugin-flatpak

$ flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

Note the message about the search path: a restart is required before Flatpak apps show up in the Applications Menu. Slightly confusingly, you'll now find two app stores, Ubuntu Software and Software, in the applications menu. The latter will enable you to install from the whole gamut of package sources: Flatpaks, Snaps or good old-fashioned DEBs. So you could even uninstall the snap-store package if you want. The screenshot (left) shows the differences between the Snap and Flatpak versions of Qutebrowser.

Our security feature in LXF262 showed you a few things you can do with hardware tokens such as the Nitrokey and Yubikey. If you have a FIDO (Fast IDentity Online) or U2F (Universal Second Factor) device, then not only can you use it, as we showed in that feature, to log in locally (using the pam_u2f module), you can also use it remotely. New key types introduced in OpenSSH 8.2 can work with these devices, enhancing security when logging in to your Ubuntu device via SSH. You might already be familiar with the idea of using an SSH key instead of (or as well as) a password. Basically, generate a key on the local system with ssh-keygen then copy it to the remote one with ssh-copy-id. Well now, if you attach your hardware token and run:

$ ssh-keygen -t ecdsa-sk

then you'll be prompted to tap the authenticator as well as provide an optional password. You can then copy this key to remote systems as before. In order to log in with the new key, for now, you'll need to specify it with the following:

$ ssh -i ~/.ssh/id_ecdsa_sk myserver.com
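If typing the -i option every time gets tiresome, one alternative is to pin the key to a host in ~/.ssh/config. The sketch below only prints a suitable stanza for you to review. Host, HostName, IdentityFile and IdentitiesOnly are standard OpenSSH client options, while the helper name and the myserver alias are hypothetical.

```shell
#!/bin/sh
# ssh_host_stanza (hypothetical helper): print an ssh_config stanza that
# ties a host alias to a single identity file.
#   $1 = alias   $2 = real host name   $3 = path to the private key
ssh_host_stanza() {
    printf 'Host %s\n' "$1"
    printf '    HostName %s\n' "$2"
    printf '    IdentityFile %s\n' "$3"
    # Offer only this key rather than every key the agent knows about.
    printf '    IdentitiesOnly yes\n'
}

# Review the output, then append it to ~/.ssh/config by hand.
ssh_host_stanza myserver myserver.com '~/.ssh/id_ecdsa_sk'
```

With that stanza in place, ssh myserver should pick up the hardware-backed key without any extra flags. You'll still need to tap the token when prompted.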

> Get your hardware-secured Elliptic Curve DSA SSH key game on with OpenSSH 8.2.

That’s all folks

And with that we must go (to the printers, like now - Ed). We haven't covered the dark art of running Ubuntu on Windows via WSL yet, but with WSL 2 going mainstream soon, that certainly will be an interesting endeavour, though perhaps one that interests developers more than home users. If you're running Ubuntu 18.04 on your server, it's probably wise to hold off upgrading until the 20.04.1 point release.

There's much more to Ubuntu and we'd love to hear your opinions on it and its other flavours. There are some great Ubuntu derivatives out - be sure to check out our Regolith and Pop!_OS reviews, and there will be a slew of others in the coming weeks and months. Happy fossicking among the Focal Fossa famalam!

Out with the old...

We recently made a big deal of the fact that 32-bit PC users won't be able to enjoy this new release. There's no upgrade path from 32-bit 18.04, as there was from 16.04 - the last LTS to offer a 32-bit install medium. And that's a bit of a shame because it's great. But those users can continue to use 18.04 for another three years, so no need to panic yet. Also gone from the default install is Python 2, and the vast majority of packages in the repositories have been upgraded to use Python 3. The older version reached end-of-life at the start of this year, but the package is still available for the few projects that still rely on it.

Finally, a small thing, but one that makes us incredibly happy. That horrible Amazon link in the launcher has gone. It has irked us since it debuted in 2012. The only thing it was useful for was teaching users how to remove icons from the dock. Veterans will recall that previous Ubuntu releases included Amazon links when searching in the Unity HUD, but those days are long gone. Speaking of Unity, if you really miss that desktop, you can install it from the ubuntu-unity-desktop package. Alternatively, you can go all out and try the Unity remix. Find out more about this at https://discourse.ubuntu.com/t/ubuntu-unity-remix-20-04.

> [Screenshot] The Python 2.7 retirement countdown clock, reading zero years, months, days, hours, minutes and seconds. "Python 2, thank you for your years of faithful service. Python 3, your time is now."

RISE OF THE DISTROS

Linus released the Linux kernel over 25 years ago, but the concept of a distribution did not come about until later. We trace the journey from a humble Usenet post to Canonical's Ubuntu.

Linus Torvalds wrote "A kernel by itself gets you nowhere" in the release notes for Linux 0.01 in 1991. This statement (or understatement) was apparent to early Linux adopters who actually wanted to use the OS for something and faced a bit of a struggle doing so.

Torvalds had already ported GNU's Bash interpreter and the GCC compiler to Linux, but beyond that the operating system by itself wasn't exactly capable. In fact, the 0.01 release consisted only of source code and was only released to those that had shown an interest in Torvalds' Linux updates on Usenet. Compilation required a working copy of Minix too. Torvalds respected other people's licenses and so was loath to pinch Minix code without permission. Fortunately news of his nascent, free operating system soon spread and Linux slowly began to grow. The original Linux copyright precluded it being distributed commercially, but in February 1992 Torvalds suggested adopting the GNU GPL. This made a lot of sense: even in these early stages of Linux development it was clear that the GNU tools would be of intrinsic importance to the fledgling OS.

In December 1992, version 0.99 would be the first version released under the GPL, with Torvalds later reflecting that "making Linux GPL'd was definitely the best thing I ever did".

The first distros

The first creatures to climb out from the primordial Linux soup.

Exactly what was the first Linux distribution (distro) depends very much on your definition. H. J. Lu's 'Boot-root' floppies certainly provided a minimal working Linux environment, but these were more comparable to a primitive live CD than an actual distro.

An early attempt called MCC (Manchester Computing Centre) Interim Linux (because it was never intended to be a final product) first appeared in February 1992. It fitted on five 3.5-inch floppies, provided some classic Linux utilities and was quite luxurious in that LILO (Linux LOader) could be installed to the MBR natively. Previous Linux efforts involved manually writing some bytes to the boot sector. MCC didn't include the X Window System, but it could be added by the user later. The MCC ideology was, in a way, similar to that of Arch Linux: to provide a slim base system which can be customised according to the user's requirements.

> Getting this beast up and running was traditionally a nightmare.

Another early effort was Peter McDonald's Softlanding Linux System (SLS) in 1992. This was much more fully featured than MCC and included X-Windows. Today we take for granted being able to download and burn a DVD or USB stick with everything required on it, but remember in 1992 dual-speed CD-ROMs had only recently become available to the masses and the El Torito standard for bootable CDs was still three years away. So one either had to boot from a floppy disc or bootstrap from an existing Linux (or perhaps Minix) install. Users installing without the luxury of network access or one of these rare optical drives (ie most everyone) would have to do battle with a bunch of floppy disks (26 for SLS 1.0). These would have to have their contents dutifully copied to the right places. Then our intrepid installee would have to do something about the bootloader situation, lest they be forced to always boot using a floppy disc.

Smoke and scripts

SLS provided, relatively painlessly, what had hitherto required a Sisyphean effort to achieve: getting a working Linux installation that booted from a hard drive and featured an impressive collection of software all nicely configured and integrated. The install could be tailored for those with resource constraints - applications could be selectively installed and while a whopping 4MB of RAM was technically required for the installation, those with less could set up a swap partition to get the job done. If you had 8MB you could even enjoy the X Windows System, but it would be some time before this could be set up without significant shouting, wailing and gnashing of teeth.

SLS may not be classed as a distro today since binaries were, on the whole, collected from other sources, but the project didn't have the resources to be compiling and recompiling these with every new release. Indeed, source code for X-Windows was not freely available, and things would remain that way until XFree86 came along. Also, SLS lacked anything comparable to a modern package management system. There were packages in the sense that tarballs containing programs could be extracted to the root directory and rudimentary pre- and post-install scripts executed, but no notion of dependencies and no nice way of removing a package.

The distros that time forgot

At this juncture of the narrative we'll take a quick detour to mention some other 'pioneer' Linuxes, now consigned to the history books. Like MCC, TAMU was developed at the Texas A&M University for in-house use only. Unlike MCC it included X-Windows, although numerous reports of 'magic smoke escaping from monitors' were attributed to its haphazard configuration utility. Yggdrasil (a big ol' tree in Norse mythology) Linux has the honour of being the first near-live Linux distro: it was distributed on CD-ROM but obviously required a floppy disk from which to boot. It's noteworthy that Yggdrasil was released as a commercial product, but its $99 price tag was orders of magnitude less than that of the Unix systems available. TAMU and Yggdrasil have, like many others, largely been forgotten by history.

In 1999, Corel Linux (a Debian derivative) emerged. Its main attraction was that it was compatible with the WordPerfect Office Suite, though this compatibility relied on Wine technology and performance suffered. The eponymous word processor itself had been available natively on Linux since 1995. Having a mainstream office suite ought to have increased Corel's appeal, but after an investment by Microsoft the company changed strategy and sold its distro to Xandros. The release of OpenOffice (also in 1999) effectively undermined any value to a closed office suite such as WordPerfect.

The Slack revolution

Slackware was the first proper fork of a distro in history and is the oldest Linux distro alive today—and it probably isn't going anywhere.

For all its advances, though, SLS was considered buggy and hard to use. In 1993, a wise undergraduate named Patrick Volkerding was tasked with installing SLS on machines in an AI lab. He embarked on a mission to tidy it up, improving the install mechanism and noting post-install fixes. His hope was that his fixes would be incorporated into SLS, so he had no immediate plans to release them to the public. SLS, however, didn't do this, so Volkerding eventually made his efforts available via FTP. By 1993 Volkerding's contributions had made the resulting 'distro' unrecognisable and he decided to give it a new name, making it the first proper fork of a Linux distro in history.

That name (referencing the Slack doctrine, an obscure text called The Church of the Subgenius) was Slackware. The release proved popular with everyone except McDonald, who requested that Volkerding rewrite all the scripts he'd reappropriated from SLS. It's important to note that McDonald still allowed these scripts to be distributed, he just wanted them rewritten before the next Slackware release.


Meanwhile SLS made the unpopular decision to change the format of its binary, which further increased interest in Slackware. Determined to see his project through, Volkerding rewrote the offending scripts and Slackware 1.0, sporting version 0.99.11 of the Linux kernel, was released in July 1993.

Slackware was, and still is, run as a commercial distro - CD sets and DVDs are available for $49.95 at https://store.slackware.com and this represents the distro's only revenue stream. Of course, those with access to an internet can download it for free and this will never change. It's worth emphasising that the first few releases of Slackware were created almost entirely by Volkerding alone and that he remains Slackware's Benevolent Dictator for Life.

> Slackware is the parent of many of today's well-known distros, including the SUSE family and VectorLinux. It also inspired Arch Linux.

The Core Team now consists of a little over a dozen people, though there will be other contributors whose names don't see the light of day. Volkerding continues to have the final say on all aspects of the development process, which by the way is (and has always been) completely closed. That's right, development of the oldest living distro takes place behind closed doors; there's no public source code repository (repo) and no public bug reporting system. No one can apply to join the ranks, but they can patiently await an invite from Volkerding. Users wishing to report bugs can just email Volkerding directly.

Slackware is generally considered to be the most Unix-like of all the Linux distros. Nothing is hidden from the user, middleware layers are shunned and there's probably more work involved in setting it up than for other mainstream distros. Some software in the repos might be considered old, eg there's no official KDE Plasma 5 desktop, but custom packages are available. There have also been no official Gnome packages since 2005. The original reason for this was the amount of developer effort required to make it fit with the rest of the distro, with Volkerding noting that KDE 4 and Xfce required much less. Again, the third-party Dropline Gnome package is available, but it's a little controversial since it has to replace huge swathes of system packages. It's not all bad though. Slackware is great for installing on older hardware.

Slackage management

Package management in Slackware is done through the pkgtools suite, which includes self-explanatory commands such as installpkg and removepkg, among others.

A common complaint is that unlike almost any other modern package manager that you'd care to name, Slackware's does not automatically resolve dependencies. We've already seen that this was commonplace in the early days, but for many users this will seem like something that we shouldn't have to put up with in the 21st century. But it all depends on your philosophy: Slack users like to be in control, and they don't necessarily feel that they are in control when they are asked to install one package (presumably one they know about, because why would a Slackware user install something without knowing all about it?) and all of a sudden the package manager goes ahead and installs all manner of other things (some of which the user may not be so familiar or trusting with). The Slackware way is to figure out or look up which dependencies are required and install them manually.

Debian

Frustrations with SLS were the inspiration for another famous distro too. albeit one with a much more open development model.

Like Volkerding, Ian Murdock (who tragically died at the end of 2015) was frustrated at the buggy state of SLS. So he set about making his own distro, which he called 'the Debian Linux Release', Debian being a portmanteau of Debra (his then girlfriend) and Ian. Murdock had tried modifying SLS but decided the best approach would be to start from scratch.

The first version, 0.01, was released about one month after the initial Slackware release, though this (and the next few releases) were only available internally. The first public release was 0.91 at the beginning of 1994. Murdock realised that one of the reasons for SLS's shortcomings was that it was all the work of one individual. Why not, reasoned Murdock, use the community development model which, by this stage, had already proven itself through development of the Linux kernel?

Spirit of GNU

What made Debian special were the social and libre precepts that were baked into its make-up at the very beginning, laid out in the Debian Manifesto and (later) the Debian Social Contract. Murdock said that Debian was driven by "providing a first-class product and not on profits or returns". Murdock wanted to embrace the spirit of GNU and open development in order to create a better distro. His efforts were rewarded by the Free Software Foundation, who provided sponsorship from 1994-95 and distributed Debian CDs and manuals at very near cost. This support provided an enormous boost to Debian's early evolution.

In order to facilitate distributed development, Murdock drew inspiration from the development of the kernel itself. Each subsystem had its own maintainer so work could be naturally distributed. Murdock realised that packaging workloads in this way naturally led to the modern notion of packages. This would help cleanly divide the labour: by having clear and sane guidelines on what constituted a valid package, individuals were free to create their own and submit them to the team. The specifications ensured that they would fit in with the underlying Debian base system and not tread on each other's toes, and all of a sudden the arduous process of managing a distro becomes a little easier.

> Red Hat Linux version 8 "Psyche", with its characteristic Gnome desktop

Early on in the proceedings, the team realised that packages should consist of binaries rather than source code. This absolved users of some responsibility, but also made the process more reliable: in theory developers' toolchains should be more reliable than those of end users.

The original packaging tool, dpkg, was written in Perl by Murdock and others, but still couldn't handle complexities such as dependencies. This was rewritten in 1994 by Ian Jackson and by 1995 Debian, now with around 200 active developers, was starting to look ready for prime time. Debian packages, in the spirit of openness, had adopted a standard archive format so that packages could be examined using the standard ar and tar utilities on any Unix-like system.
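That openness is easy to check for yourself. The sketch below builds a toy archive laid out like a .deb (a debian-binary member followed by control and data tarballs) and then takes it apart again using nothing but ar and tar. The package name and file contents are invented for illustration, and a real package would normally be built with proper tooling rather than by hand.

```shell
#!/bin/sh
set -e
# ar ships with binutils; bail out politely if it isn't installed.
command -v ar >/dev/null 2>&1 || { echo "ar (binutils) is not installed"; exit 0; }

work=$(mktemp -d)
cd "$work"

# A .deb is an ar archive with three members, in this order.
echo "2.0" > debian-binary
mkdir -p control data/usr/share/doc/hello
printf 'Package: hello\nVersion: 1.0\n' > control/control
echo "hello from a toy package" > data/usr/share/doc/hello/README
tar -czf control.tar.gz -C control .
tar -czf data.tar.gz -C data .
ar rc hello.deb debian-binary control.tar.gz data.tar.gz

# Inspect the outer layer with ar...
members=$(ar t hello.deb | paste -sd ' ' -)
echo "members: $members"

# ...and the inner layers with tar: print the metadata, list the payload.
ar p hello.deb control.tar.gz | tar -xzOf - ./control
ar p hello.deb data.tar.gz | tar -tzf -
```

Pointed at a real package, the same ar t and ar p commands apply, though the inner tarballs may carry a different compression suffix and need the matching tar option.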

Red Hat

A little known fact is that in August of 1994 Murdock received an email from one Marc Ewing saying that he was starting a company called Red Hat (you may have heard of them) that was going to build a commercial product. So impressed with Debian was he that he planned to use it as the base for this new financially-motivated distro. Of course, things didn't work out, but Red Hat Linux nonetheless became the last of the Big Three grandaddy distros - those from which most others were derived. The name comes from a Cornell University lacrosse hat given him by his grandfather, which Ewing had worn during his time at Carnegie Mellon.

In 1993, Bob Young formed a business, ACC, which sold, amongst other things, Linux CDs and software. In 1995, ACC acquired Red Hat and changed its name to Red Hat, Inc. Like Debian, Red Hat realised that a robust packaging mechanism would be key to a successful Linux distro. The company envisioned users installing new software (all nicely bundled and kept up to date by a package maintainer) and having the option to uninstall it, safe in the notion that nothing of the original will be left and nothing will break. Unlike Debian though, Red Hat has always been driven by the dollar. When the company went public in 1999 it set a record for a technology initial public offering, raising $84 million and seeing the eighth largest single day gain in Wall Street history.

Running old distros

If all this talk of old Linux distributions has you yearning to see what they were really like - or how much pain you can endure - then read on.

You can find pretty much anything you like on the internet, provided you know where to look [stop looking at the internet and finish this feature - Ed], eg if you were looking for old Linux distros, then one of your first ports of call needs to be ibiblio.org (http://bit.ly/HistoricLinux). Here you'll find MCC (1992), SLS 1.03 (1993), Slackware 1.1.2 (1993), Debian 0.91 (1994) and Red Hat 2.0 (1995), among many others.

Getting these (particularly the very old distros) to work in a virtual machine is sometimes tricky, primarily because the emulated hardware that the hypervisor provides isn't understood by the primitive kernels of the 90s. IDE CD-ROMs weren't supported by Linux until much later on, so unless you can figure out some way of emulating a SCSI CD-ROM drive then old CD images are more or less useless. However, as we'll see later, other more determined people have done a lot of work here so there are preinstalled images available.

The older distros that you'll find will feature collections of floppy disk images. If you happen to have an early 90s PC (that still works) and a floppy drive (that also works) then perhaps you will derive some enjoyment from bringing it to life. Or maybe it will just end in tears - it is Linux after all. This should be obvious, but don't be tempted to run one of these older distributions on new hardware.

> Bochs - it doesn't look like much, but it's the only way we managed to fire up a 1992 distro (without jump-starting Neil's 386 which no one wanted to touch).

It will not run quicker (because distros back then weren't so full of cruft); it will likely not run at all. That hardware wasn't invented when the distro was around. If it could run (which it couldn't) you'd likely see magic smoke escaping from your monitor as you tried to run Xconfigurator. If you somehow managed to set that up (no one could set that up), then maybe you'd try and update the OS, but it wouldn't work because those repos haven't been there for 15 years. In sum, these sorts of things should only be run on virtual machines.

Virtualise the past

While some people prefer to use VirtualBox here, we've had much better luck using Qemu or Bochs. They seem to upset the older kernels significantly less. They are available for all good distros, eg on Ubuntu they can be installed with:

$ sudo apt-get install bochs bochs-x bochs-sdl

$ sudo apt-get install qemu

We'll use Bochs to run Yggdrasil 0.98.3, since someone has kindly prepared an image which you can download from http://www.oldlinux.org/Linux.old/bochs/yggdrasil-0.98.3-040515.zip. Unzip the file and enter the resulting directory. This image was made for an older version of Bochs so the following command may not work right away (Note: it didn't for us):

$ bochs -q -f bochsrc-hd.bxrc

> FTP sites like this host all manner of historical distributions. Just don't expect them to actually do anything useful.

If it doesn't work, edit the config file bochsrc-hd.bxrc and put a # in front of the lines beginning:

romimage:... vgaromimage:... vga_update_interval:... ips:...

(Those lines are spread through the file.) Try the command again and hopefully the emulation window appears. You'll need to control execution from the terminal though, which is probably currently displaying some mysterious Assembly instruction. Press c to continue execution and you should see Yggdrasil Linux spring to life in the emulation window. After some time you can log in as root and type some commands. Classics like tar and ls are all there and work much the same as they do today. Running uname -a we see: Linux linux 0.98.pl3-26 02/21/04 i386
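If you'd rather not hunt through the config file by hand, the commenting-out step can be scripted. This is only a sketch, assuming a sed that accepts the -E and -i.bak options (both GNU and BSD versions do); the helper name is made up, and a backup of the original is kept alongside in case the result needs undoing.

```shell
#!/bin/sh
# comment_out_bochs_lines (hypothetical helper): prefix the four directives
# named in the text with '#', keeping a .bak copy of the original file.
comment_out_bochs_lines() {
    sed -i.bak -E 's/^(romimage|vgaromimage|vga_update_interval|ips):/#&/' "$1"
}

# Only touch the file if we are inside the unzipped image directory.
if [ -f bochsrc-hd.bxrc ]; then
    comment_out_bochs_lines bochsrc-hd.bxrc
    # Show what changed; diff exits non-zero when the files differ.
    diff bochsrc-hd.bxrc.bak bochsrc-hd.bxrc || true
fi
```

Run it from the directory containing bochsrc-hd.bxrc, then try the bochs command again. Copying bochsrc-hd.bxrc.bak back over the edited file restores the original.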

The 2004 date comes from when the image was actually built (the install and kernel have been tweaked to work inside a virtual machine), but the actual kernel was state of the art back in October 1992. Does it chill you, dear reader, to be cavorting with an operating system that has been defunct for so long? No? Well good, there's all manner of other distros to try out. The newer ones are much easier to get going, but beyond nostalgia value, there's not much to be gained from them. Still, let us know what you manage to get running.

The Future of distros

What are they? Where are they going? Will they ever end?

The term 'distribution' didn't really become commonplace until 1995. By now we have a fairly concrete (and relatively luxurious) notion that a distro consists of an installer, a set of customised packages compiled from (modified) upstream sources; a kernel (which is just a package); a desktop environment; documentation and so forth. We've come to expect some kind of support too, generally through forums and IRC channels often provided by the community.

We have also come to expect some means of creating your own packages, either in the hope that they will be included in a future release or just so that software unavailable through the standard repos can be cleanly added. But this word, 'distribution', has meant different things at different times, and its meaning continues to evolve with the creatures it denotes. Whatever a distro is, it's unique to the open source world and acts as the magic glue that holds all the fragments together. Such a thing couldn't exist if all those fragments were proprietary - there would be at most one way to put the bits together.

Future gazing

Linux distros continue to evolve, whether it's through cosmetic things such as desktop environments (eg Cinnamon, designed for Linux Mint) or more obscure things such as packaging systems. Fedora, the community distro sponsored by Red Hat, is often at the forefront of these developments. Looking at some recent innovations hints at where the future of Linux is going. One recent development is Fedora's Copr repos. It's always been possible for anyone to make some RPM packages and a repo to put them in. But the process is complicated and the policies stringent, since they're the same policies that apply to official packages. Copr repos aim to make it much simpler for the more casual user to create packages and share them with the wider world without having to understand the ins and outs of Fedora's policies.

> We continue to be impressed with the direction Linux Mint has taken - it has evolved into something that is at once powerful and easy to use.

New in Fedora 24 is support for the Flatpak format. This enables whole applications (which may be, in the current scheme of things, a group of packages or just a part of one package) to be distributed as a single entity which runs in its own sandbox. There are no traditional dependencies (beyond some standard runtimes) to speak of - a Flatpak is entirely self-contained. Ubuntu introduced a similar technology in 16.04 called Snap packages.

Both of these formats potentially solve what has been a distro bugbear for many years: how to get newer versions of software into fixed release distros without breaking everything. Of course, there are potential downsides to this method, such as ending up with many copies of the same libraries in many snaps, and the sandboxing aspect means applications can't interface with the rest of the system so well. This is why traditional packages will be around for a while.

The End of Distros?

We've seen that In the early days, the distro was very much someth ng that wanted to make your life easier. The first ones probably ckdn't seem like they actually did that, but the huge popularity of Unux distros by the late 90s definitely suggests that trey had become much nicer to work with, either that or they were just so useful that people had no choice but to work with them Lately though, things seem to be changing. A small but interesting thing happened n 2014 when Gnome 3.12 was released Or rather it didn't happen Gnome 3.12 represented a major advance for the

desktop; all manner of problems in 3.10 had been corrected. It was generally considered to be the first of the Gnome 3 series in which the desktop could be happily used by normal people' The trouble was that none of the major distros adopted it.

The feature freeze for Ubuntu 14.04 had long passed and Fedora, a distro very much tied to Gnome, wouldn’t end up releasing version 21 for another nine months. Other distros such as Mint and OpenSUSE had other desktop preferences and (for a time at least) Gnome 3.12 was a desktop without a home. Certainly 3.12 was soon available on rolling release distros, but these aren't everyone's cup of tea.

If you'll permit some latitude here, then this hints at distros' inherent shortcomings. In this case people wanted a particular desktop that distros, acting as gatekeepers (or even obstacles), couldn't provide. If people wanted that desktop badly enough they would jump ship. Looking ahead we see a future of applications packaged in Flatpaks and Docker containers. These can run anywhere, they don't care about the underlying distro, and in time, maybe, neither will we.

Birth of the Linux kernel

We trace the origins of the kernel and recount its numerous milestones and achievements.

You wouldn't realise it, but you’ve got software worth billions of pounds powering your computer. That’s what it would cost to redevelop the Linux kernel, according to estimates by the Linux Foundation. The foundation has dubbed the Linux kernel the world's largest collaborative development project as it would take thousands of man years to rebuild from scratch.

The latest release of the kernel contains over 19 million lines of code written in about a dozen programming languages, and most of the work is paid for by hundreds of multinational corporations that have thousands of dedicated kernel developers pitching into its development.

Yet all of this work can be traced back to one solitary bespectacled developer hacking away on his home computer. Linus Torvalds' attempt at creating a free alternative to the proprietary Minix operating system might have started out as a hobby project, but today it powers everything from the tiny little Raspberry Pi to the mighty New York Stock Exchange, submarines patrolling the oceans and even earth-orbiting satellites.

“Today it powers everything from the Raspberry Pi to the New York Stock Exchange.”

The secret sauce behind the world's largest open source project is an effective system of collaboration, which is as much of a miracle as the software it has helped to foster. Unlike other open source communities, the kernel community has had to evolve its own distinct mechanism of operating in an environment where there are thousands of eyeballs and where thousands of lines of code are modified every day. To do this repeatedly without fail is an achievement in itself.

In this feature, we'll examine the journey of a piece of code that originated from the keyboard of a student in Finland and in over 25 years has permeated every aspect of computing all over the world and even beyond. We'll look at its incredible growth, examine the important contributions made by other developers and how they have all grown together into the indispensable tool that powers the internet and empowers everyone using it. This is the story of Linux and how it has made a quantum leap in computing in less than a quarter of a century.

The kernel’s architecture

The kernel is essentially a resource manager that mediates access to the resources available on a system.

The kernel is responsible for enabling multiple applications to effectively share the hardware by controlling access to the processor, memory, disk, networking and other resources.

The Linux kernel is divided into various subsystems that control various components of the system (as shown in the diagram) and all of these are tightly integrated. Each of these subsystems has a defined function and we'll explore them in detail over the next few pages. The Linux source tree also mirrors the kernel structure and these various subsystems are structured in their own subdirectory inside the kernel's source code. The subsystems interact with each other using function calls and shared data structures, such as task lists, memory maps and inodes etc. When you drill down inside a subsystem, each is composed of several modules. A module is a piece of code that's designed to be linked to the kernel at runtime.

This structure of the kernel is responsible for two major contributing factors to the popularity of Linux. It allows for a large number of independent developers to collaborate and contribute to its development while also making provisions for extensibility, eg thanks to the kernel's structure and the use of standardised software interfaces, each driver is implemented as a separate module. This allows an individual developer to write device drivers without interfering with the work of other developers.

> The subsystems of the Linux kernel.

User space: applications and tools, which reach the kernel through system calls.
Linux kernel components: process management, memory management, file systems, device drivers, network.
Functionality: multitasking; virtual memory; files and directories; device access and terminals; network functionality.
Software and hardware support: scheduler and architecture-specific code; memory manager; file system types; block devices; character devices; network protocols; network drivers.
Hardware: CPU; RAM; hard disk, CD, floppy disk; various terminal equipment; network adaptor.

Timeline

25 August 1991
Linus announces his hobby project on comp.os.minix. Linus Torvalds, a 21 year old student at the University of Helsinki, Finland, starts toying with the idea of creating his own clone of the Minix OS. He originally intended to call his kernel Freax. However, when he uploaded the first version of the kernel, the maintainer of the FTP server, Ari Lemmke, renamed the directory that housed the source to Linux.

17 September 1991 v0.01
Posted on ftp.funet.fi. This release includes Bash v1.08 and GCC v1.40. At this time, the source-only OS is free of any Minix code and has a multi-threaded file system. Torvalds also famously mentions that Linux currently isn't portable and "it probably never will support anything other than AT hard disks, as that's all I have".

5 October 1991 v0.02
The second release includes some bug fixes and a few additional programs. Torvalds successfully ran Bash, GCC, gnu-make, gnu-sed and compress etc under it. In addition to the source he also offers binaries of his OS.

November 1991 v0.03
While the exact date of this release is unknown, Torvalds was plugging his OS to Minix newbies on the comp.os.minix newsgroup, claiming that his OS was a "bit faster than minix" and that it made "most things easy to port (easier than to Minix)."

Late November 1991 v0.10
Torvalds was mainly using Linux to read email and news from the university's computer via a terminal emulator he’d written. One day he accidentally dialed his hard disk (/dev/hda1) instead of the modem (/dev/tty1) and overwrote some of the critical parts of the Minix partition. Since he couldn't boot into Minix, he decided to write the programs to compile Linux under itself.

19 December 1991 v0.11
Linux adoption got a serious bump up. Until then, Torvalds had received only small one-line bug fixes. But now, people were sending him new features. He doubled the RAM on his machine to 8MB and bought a floating point coprocessor, because people had been asking if Linux would support it.

System calls

System calls are used to enable user processes to interact with the kernel. However, to understand their importance, you must first learn how the kernel maps processes. The word length of the CPU determines the maximum size of the address space that can be managed, eg on 32-bit systems, there are 2^32 bytes while 64-bit machines can manage 2^64 bytes of address space. The maximum size of the address space isn’t related to the physical RAM on the computer and is therefore known as the virtual address space.
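To put numbers on those address-space sizes, here's a minimal Python sketch of the arithmetic. The helper names (address_space_bytes, human_readable) are our own inventions for illustration and have nothing to do with the kernel itself.

```python
def address_space_bytes(word_length_bits: int) -> int:
    """Maximum number of addressable bytes for a given CPU word length."""
    return 2 ** word_length_bits


def human_readable(num_bytes: int) -> str:
    """Express a byte count in the largest binary unit that divides it down."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = num_bytes
    for unit in units:
        # Stop once the value is small enough, or we've run out of units.
        if value < 1024 or unit == units[-1]:
            return f"{value} {unit}"
        value //= 1024


for bits in (32, 64):
    size = address_space_bytes(bits)
    print(f"{bits}-bit: {size} bytes = {human_readable(size)}")
```

A 32-bit word length works out to 4GiB of virtual address space and a 64-bit one to 16EiB, regardless of how much physical RAM is actually fitted.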

Linux divides virtual address space into two parts known as kernel space and userspace. This division is based on an architecture-specific value. The key difference between userspace and kernel space is that access to the latter is forbidden to the former. Instead, processes running in userspace are offered a set of interfaces to interact with the hardware and other components in the kernel space. These interfaces are implemented by system calls issued to the kernel.

“Linux divides virtual address space into kernel space and userspace.”

The Posix standard defines a number of system calls grouped into various categories, eg process management system calls can be used to create new tasks while the signals system calls can send signals and manage timers.

As soon as a system call is issued, the processor must change the privilege level and switch from user mode to kernel mode, in order to perform restricted actions such as accessing the physical hardware on the computer.
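You can watch a userspace program lean on system calls without writing any C. The sketch below assumes a Linux (or other Posix) system and uses functions from Python's standard os module, which are thin wrappers around the corresponding system calls. The helper echo_through_pipe is a made-up name for this example.

```python
import os


def echo_through_pipe(message: bytes) -> bytes:
    """Send bytes through a kernel pipe and read them back.

    Each os.* call here asks the kernel to do work on our behalf:
    os.pipe -> pipe(2), os.write -> write(2), os.read -> read(2),
    os.close -> close(2).
    """
    read_fd, write_fd = os.pipe()
    try:
        # Keep the message short so it fits in the pipe's buffer.
        os.write(write_fd, message)
        return os.read(read_fd, len(message))
    finally:
        os.close(read_fd)
        os.close(write_fd)


# getpid(2) asks the kernel for this process's ID.
print("Running as process", os.getpid())
print(echo_through_pipe(b"hello from userspace"))
```

The program never touches the pipe's buffer directly. It only holds file descriptors and asks the kernel to move the data, which is exactly the division of labour described above.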

5 January 1992 v0.12 GPL
Up until this point, Torvalds published Linux releases under his own licence to restrict commercial activity. After suggestions from users to make it compatible with the GNU copyleft licence, Torvalds switches to GPL with this release. In 2000, Torvalds clarified that the kernel was licensed under GPLv2. Later, after the GPLv3 licence was released in 2007, Torvalds and the other kernel developers decided against adopting it, citing issues with several clauses such as those dealing with DRM and patent provisions.

February 1992 MCC Interim Linux
Soon after v0.12 came the first Linux distribution that was designed specifically to help users who weren’t Unix experts. It featured a menu-driven installer that installed both the kernel and a set of end user and programming tools. Before MCC, there was HJ Lu’s Boot-root set of floppy disk images, which required editing the MBR with a hex editor to install Linux.

7 March 1992 v0.95 X Windows
A hacker named Orest Zborowski ports X Windows to Linux. This allows users to run multiple windows simultaneously and paves the way for future developments. The massive jump in version number came about because Torvalds felt confident that Linux had traversed 95% of the way towards a full-fledged, network-capable OS.

14 March 1994 v1.0
The first production release. Torvalds had been overly optimistic in naming v0.95 and it took about two years to get version 1.0 out the door. It was eventually released in March 1994 with great fanfare in the [...] of the Computer Sciences Department at the University of Helsinki. This release of the Linux kernel supported only single processor i386-based computer systems. Soon afterwards commercial Linux distribution makers, Red Hat and SUSE, publish version 1.0 of their Linux distributions.

Job control

The Linux kernel continues to improve and stabilise. Ted Ts'o, who will go on to become a long time kernel developer, decides to implement the new job control feature.

Virtual memory

The page-to-disk feature came about when Robert Blum from Germany asked Torvalds if Linux could be compiled with a smaller compiler that took just 2MB of RAM, which was all he had. This was the first feature that Torvalds implemented based on someone’s request. It was called page-to-disk and it led to the creation of the mkswap utility. In his autobiography, Torvalds notes that adding this page-to-disk functionality caused Linux to rise above the competition. "That's when Linux took off. Suddenly there were people switching over from Minix to Linux. At the time, Linux didn't do everything Minix did but it did most of the things people really cared about. And it had this one capability that people [...] with page-to-disk, you could run bigger programs than you had memory for."


July 1993 Slackware 1.0
The oldest distro still actively maintained today had its first release before Linux reached v1.0. Slackware was originally derived from the Softlanding Linux System (SLS), which was the first Linux distribution to offer a comprehensive software collection on top of an X11 graphical interface. However, SLS wasn't well maintained, which led Patrick Volkerding to create Slackware.

[...] started work on a derivative that he named Debian. Although it had been in development for several months, v0.91 was the first public release and included a simple package system which could install and uninstall packages. The release also included the Debian Linux Manifesto, which was Murdock's vision for the new OS.

Process management

Managing and scheduling processes is one of the most important subsystems of the Linux kernel.

Its purpose is to control access to the computer's processors. Linux can run several processes at the same time despite the fact that a single-processor system can only run one process at any given time. To be able to multitask, Linux must switch repeatedly between multiple processes running on the system in rapid intervals.

To multitask successfully, the kernel must decide how much time to devote to each process and when to switch to the next one.

Also when it switches from one process to another, it must ensure the execution environment is exactly the same as it was when it last withdrew processor resources. Both these tasks are the responsibility of the scheduler subsystem. The set of rules used to determine when and how to select a new process to execute is called the scheduling policy.
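To get a feel for what "deciding how much time to devote to each process" means, here's a toy round-robin simulation in Python. Be warned that this is not how the Linux scheduler works: it's a deliberately simple policy with a fixed time slice and no priorities, and the function name and task names are made up for the example.

```python
from collections import deque


def round_robin(tasks: dict, time_slice: int) -> list:
    """Simulate a fixed-time-slice scheduler on a single processor.

    tasks maps a task name to the units of CPU time it still needs.
    Returns the order of execution as (name, units_run) tuples.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")

    run_queue = deque(tasks.items())
    timeline = []
    while run_queue:
        name, remaining = run_queue.popleft()
        ran = min(time_slice, remaining)
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            # Save the task's state (here, just its remaining work)
            # so it can pick up where it left off on its next turn.
            run_queue.append((name, remaining))
    return timeline


for name, ran in round_robin({"editor": 3, "compiler": 5}, time_slice=2):
    print(f"{name} runs for {ran} unit(s)")
```

Only one task runs at any moment, but because the turns are short and the saved state is restored each time, both appear to make progress together. A real scheduling policy replaces the simple queue with rules about which task deserves the processor next.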

Since processes aren't all equally important, the scheduling policy ranks them according to their priority. This process priority is dynamic and the scheduler keeps track of what the processes are doing and adjusts their priorities periodically. Linux processes are