Теги: engineering random processes cambridge university press statistics production process theory of variety

ISBN: 978-1-107-10012-1

Год: 2015

Текст

>

г·

BRUCE HAJEK

Random Processes for Engineers

This engaging introduction to random processes provides students with the critical

tools needed to design and evaluate engineering systems that must operate reliably in

uncertain environments.

A brief review of probability theory and real analysis of deterministic functions sets

the stage for understanding random processes, while the underlying measure theoretic

notions are explained in an intuitive, straightforward style. Students will learn to manage

the complexity of randomness through the use of simple classes of random processes,

statistical means and correlations, asymptotic analysis, sampling, and effective

algorithms. Key topics covered include:

• Calculus of random processes in linear systems

• Kalman and Wiener filtering

• Hidden Markov models for statistical inference

• The estimation maximization (EM) algorithm

• An introduction to martingales and concentration inequalities

Understanding of the key concepts is reinforced through more than 100 worked

examples and 300 thoroughly tested homework problems (half of which are solved in detail

at the end of the book, with the remaining solutions available online for instructors at

www. Cambridge, org/hajek).

Bruce Hajek has been an avid student, instructor, and user of probability theory for his

entire career. He is the Mary Lou and Leonard C. Hoeft Chair of Engineering, Center

for Advanced Study Professor of Electrical and Computer Engineering, and Professor

in the Coordinated Science Laboratory at the University of Illinois. Among his many

awards, he is a member of the US National Academy of Engineering and a recipient of

the IEEE Koji Kobayashi Computers and Communications Award. He is co-author, with

E. Wong, of the more advanced book, Stochastic Processes in Engineering Systems, 2nd

edn, 1985.

"A comprehensive exposition of random processes ... Abstract concepts are nicely

explained through many examples ... The book will be very helpful for beginning

graduate students who want a firm foundational understanding of random processes.

It will also serve as a nice reference for the advanced reader."

Anima Anandkumar, The University of California, Irvine

"This is a fantastic book from one of the eminent experts in the field, and is the standard

text for the graduate class I teach in ECE at UT Austin. The material covered is perfect

for a first-year graduate class in Probability and Stochastic Processes."

Sanjay Shakkottai, The University of Texas at Austin

"This is an excellent introductory book on random processes and basic estimation theory

from the foremost expert and is suitable for advanced undergraduate students and/or

first-year graduate students who are interested in stochastic analysis. It covers an

extensive set of topics that are very much applicable to a wide range of engineering fields."

Richard La, University of Maryland

"Bruce Hajek has created a textbook for engineering students with interest in

control, signal processing, communications, machine learning, amongst other disciplines in

electrical engineering and computer science. Anyone who knows Bruce Hajek knows

that he cares deeply about the foundations of probability and statistics, and he is equally

engaged in applications. Bruce is a dedicated teacher and author in the spirit of Prof.

Joe Doob, formally at the statistics department at the University of Illinois.

I was fortunate to have a mature draft of his book when I introduced a stochastic

processes course to my department in the spring of 2014. The book provides an entirely

accessible introduction to the foundations of stochastic processes. I was surprised to

find that the students in my course enjoyed Hajek's introduction to measure theory, and

(at least by the end of the course) could appreciate the value of the abstract concepts

introduced at the start of the text.

It includes applications of this general theory to many topics that are of tremendous

interest to students and practitioners, such as nonlinear filtering, statistical methods

such as the EM-algorithm, and stability theory for Markov processes. Because the book

establishes strong foundations, in a course it is not difficult to substitute other

applications, such as Monte-Carlo methods or reinforcement learning. Graduate students will

be thrilled to learn these exciting techniques from an accessible source."

Sean Meyn, University of Florida

Random Processes for

Engineers

BRUCE HAJEK

University of Illinois

m

CAMBRIDGE

UNIVERSITY PRESS

Cambridge

UNIVERSITY PRESS

University Printing House, Cambridge CB2 8BS, United Kingdom

Cambridge University Press is part of the University of Cambridge.

It furthers the University's mission by disseminating knowledge in the pursuit of

education, learning and research at the highest international levels of excellence.

www.cambridge.org

Information on this title: www.cambridge.org/9781107100121

© Cambridge University Press 2015

This publication is in copyright. Subject to statutory exception

and to the provisions of relevant collective licensing agreements,

no reproduction of any part may take place without the written

permission of Cambridge University Press.

First published 2015

Printed in the United Kingdom by Clays, St Ives pic

A catalog record for this publication is available from the British Library

Library of Congress Cataloging in Publication Data

Hajek, Bruce.

Random processes for engineers / Bruce Hajek, Illinois.

Includes bibliographical references and index.

ISBN 978-1-107-10012-1 (Hardback)

1. Engineering mathematics-Study and teaching (Graduate) 2. Stochastic processes-Study

and teaching (Graduate) 3. Numbers, Random-Study and teaching (Graduate) I. Title.

TA340.H325 2015

519.2/3-dc23 2014035900

ISBN 978-1-107-10012-1 Hardback

Additional resources for this publication at www.cambridge.org/hajek

Cambridge University Press has no responsibility for the persistence or accuracy of

URLs for external or third-party internet websites referred to in this publication,

and does not guarantee that any content on such websites is, or will remain,

accurate or appropriate.

To Beth, for her loving support.

Contents

Preface

1 A selective review of basic probability

1.1 The axioms of probability theory

1.2 Independence and conditional probability

1.3 Random variables and their distribution

1.4 Functions of a random variable

1.5 Expectation of a random variable

1.6 Frequently used distributions

1.7 Failure rate functions

1.8 Jointly distributed random variables

1.9 Conditional densities

1.10 Correlation and covariance

1.11 Transformation of random vectors

2 Convergence of a sequence of random variables

2.1 Four definitions of convergence of random variables

2.2 Cauchy criteria for convergence of random variables

2.3 Limit theorems for sums of independent random variables

2.4 Convex functions and Jensen's inequality

2.5 Chernoff bound and large deviations theory

3 Random vectors and minimum mean squared error estimation

3.1 Basic definitions and properties

3.2 The orthogonality principle for minimum mean square error estimation

3.3 Conditional expectation and linear estimators

3.3.1 Conditional expectation as a projection

3.3.2 Linear estimators

3.3.3 Comparison of the estimators

3.4 Joint Gaussian distribution and Gaussian random vectors

3.5 Linear innovations sequences

3.6 Discrete-time Kalman filtering

viii Contents

Random processes 103

4.1 Definition of a random process 103

4.2 Random walks and gambler's ruin 106

4.3 Processes with independent increments and martingales 108

4.4 Brownian motion 110

4.5 Counting processes and the Poisson process 111

4.6 Stationarity 115

4.7 Joint properties of random processes 118

4.8 Conditional independence and Markov processes 118

4.9 Discrete-state Markov processes 122

4.10 Space-time structure of discrete-state Markov processes 129

Inference for Markov models 143

5.1 A bit of estimation theory 143

5.2 The expectation-maximization (EM) algorithm 148

5.3 Hidden Markov models 152

5.3.1 Posterior state probabilities and the forward-backward algorithm 153

5.3.2 Most likely state sequence - Viterbi algorithm 157

5.3.3 The Baum-Welch algorithm, or EM algorithm for HMM 158

5.4 Notes 160

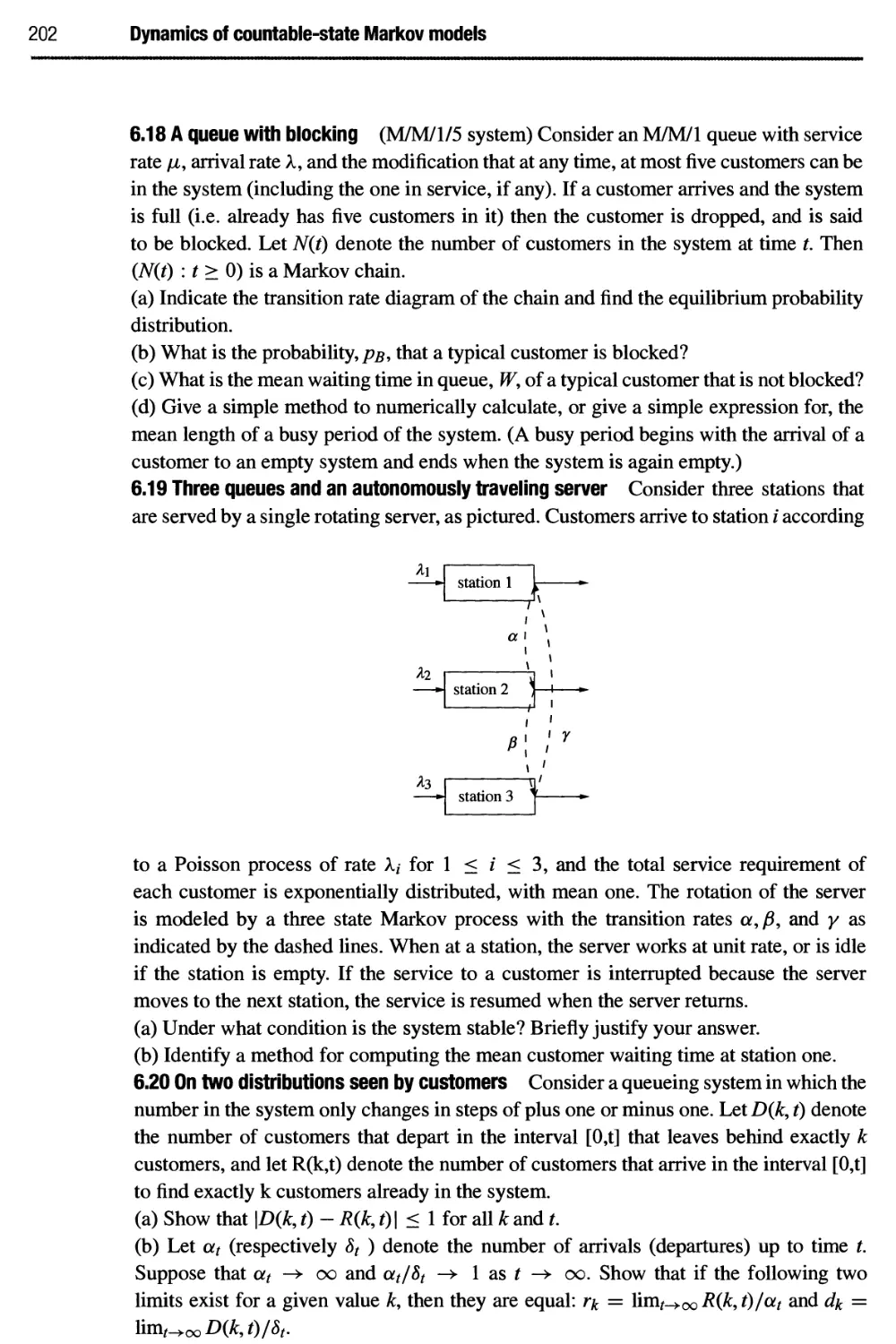

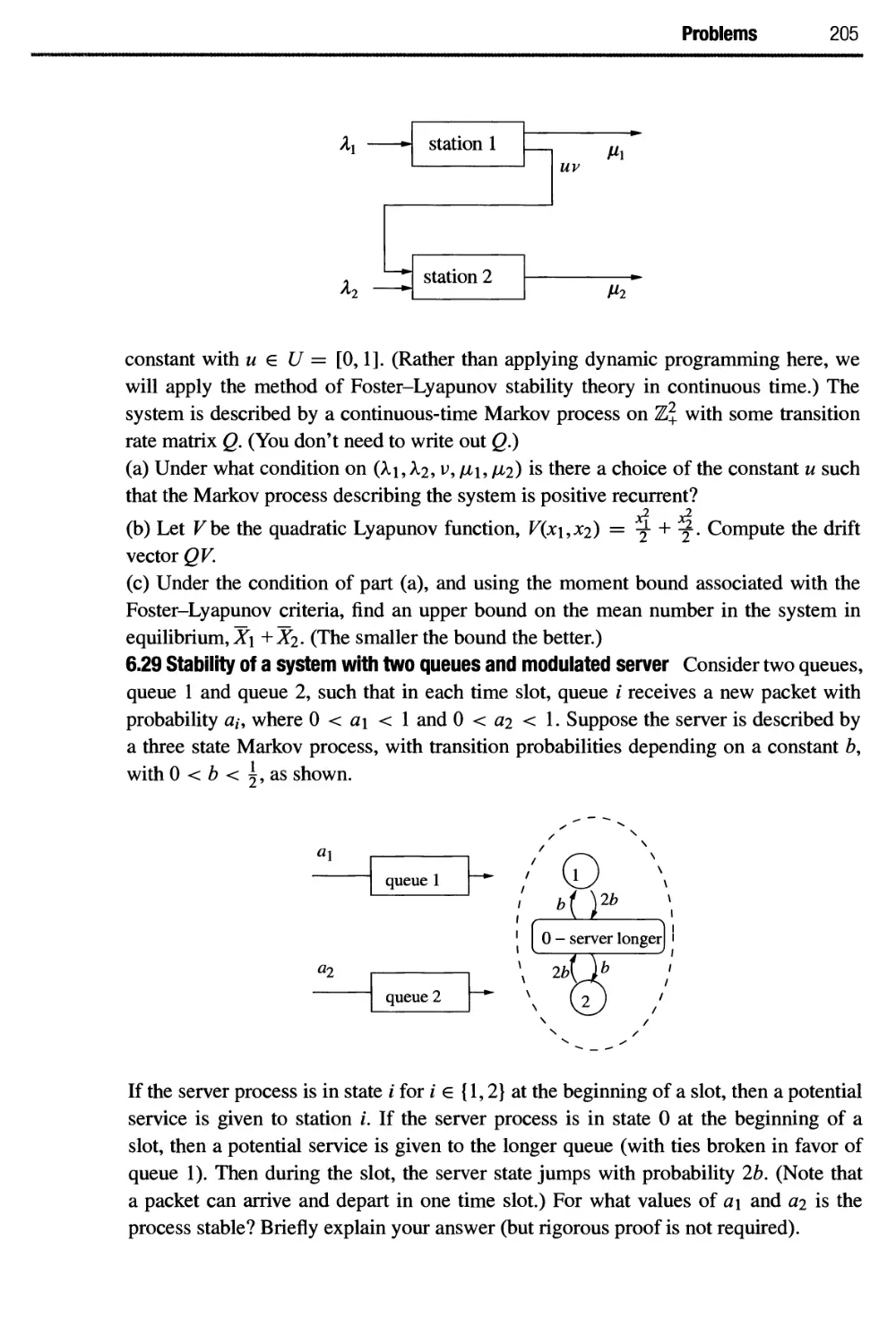

Dynamics of countable-state Markov models 167

6.1 Examples with finite state space 167

6.2 Classification and convergence of discrete-time Markov processes 169

6.3 Classification and convergence of continuous-time Markov processes 172

6.4 Classification of birth-death processes 175

6.5 Time averages vs. statistical averages 177

6.6 Queueing systems, M/M/l queue and Little's law 178

6.7 Mean arrival rate, distributions seen by arrivals, and PASTA 181

6.8 More examples of queueing systems modeled as Markov birth-death

processes 184

6.9 Foster-Lyapunov stability criterion and moment bounds 186

6.9.1 Stability criteria for discrete-time processes 186

6.9.2 Stability criteria for continuous-time processes 193

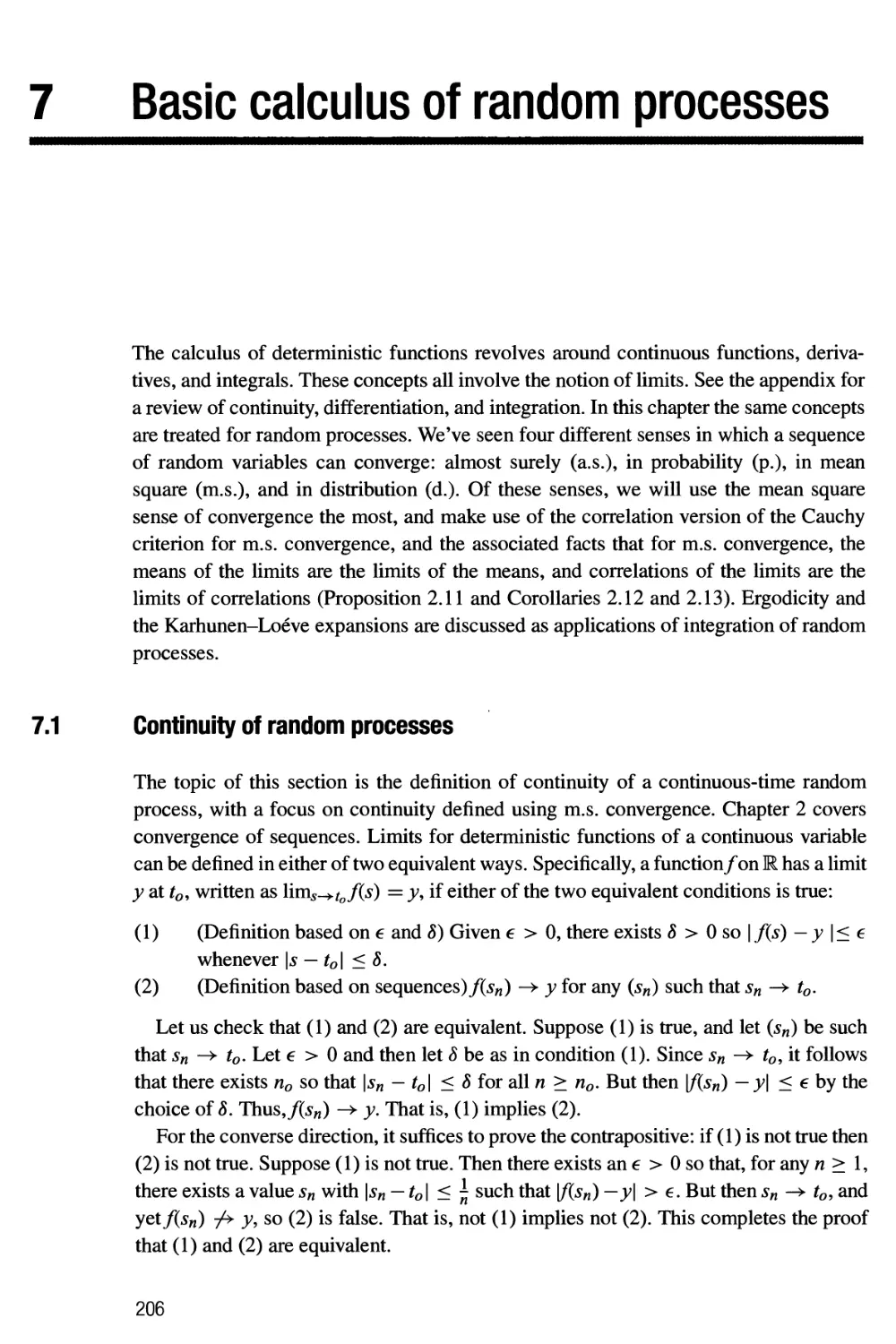

Basic calculus of random processes 206

7.1 Continuity of random processes 206

7.2 Mean square differentiation of random processes 212

7.3 Integration of random processes 216

7.4 Ergodicity 223

7.5 Complexification, Part I 229

7.6 The Karhunen-Loeve expansion 231

Contents Ix

7.7 Periodic WSS random processes 238

8 Random processes in linear systems and spectral analysis 248

8.1 Basic definitions 248

8.2 Fourier transforms, transfer functions, and power spectral densities 252

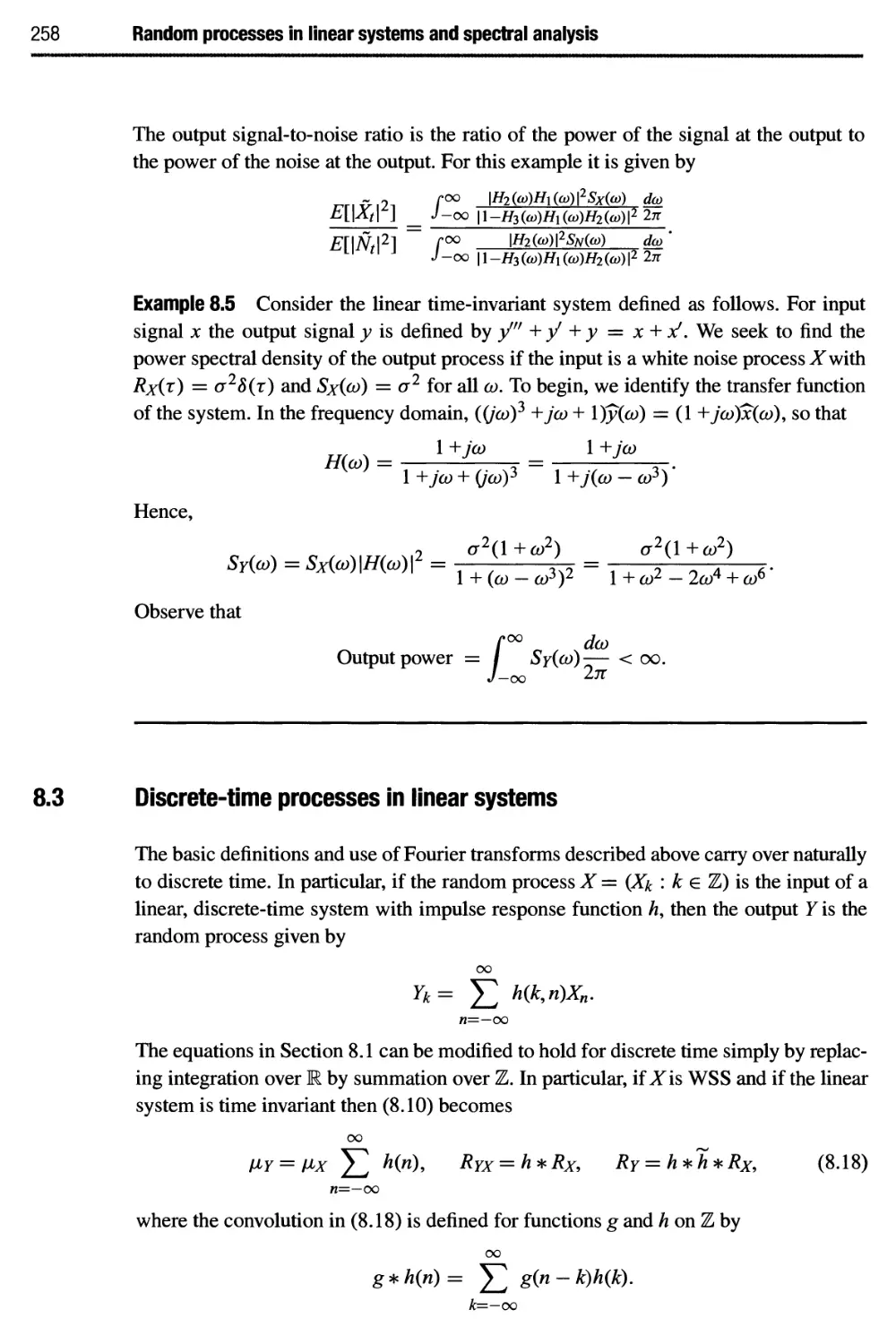

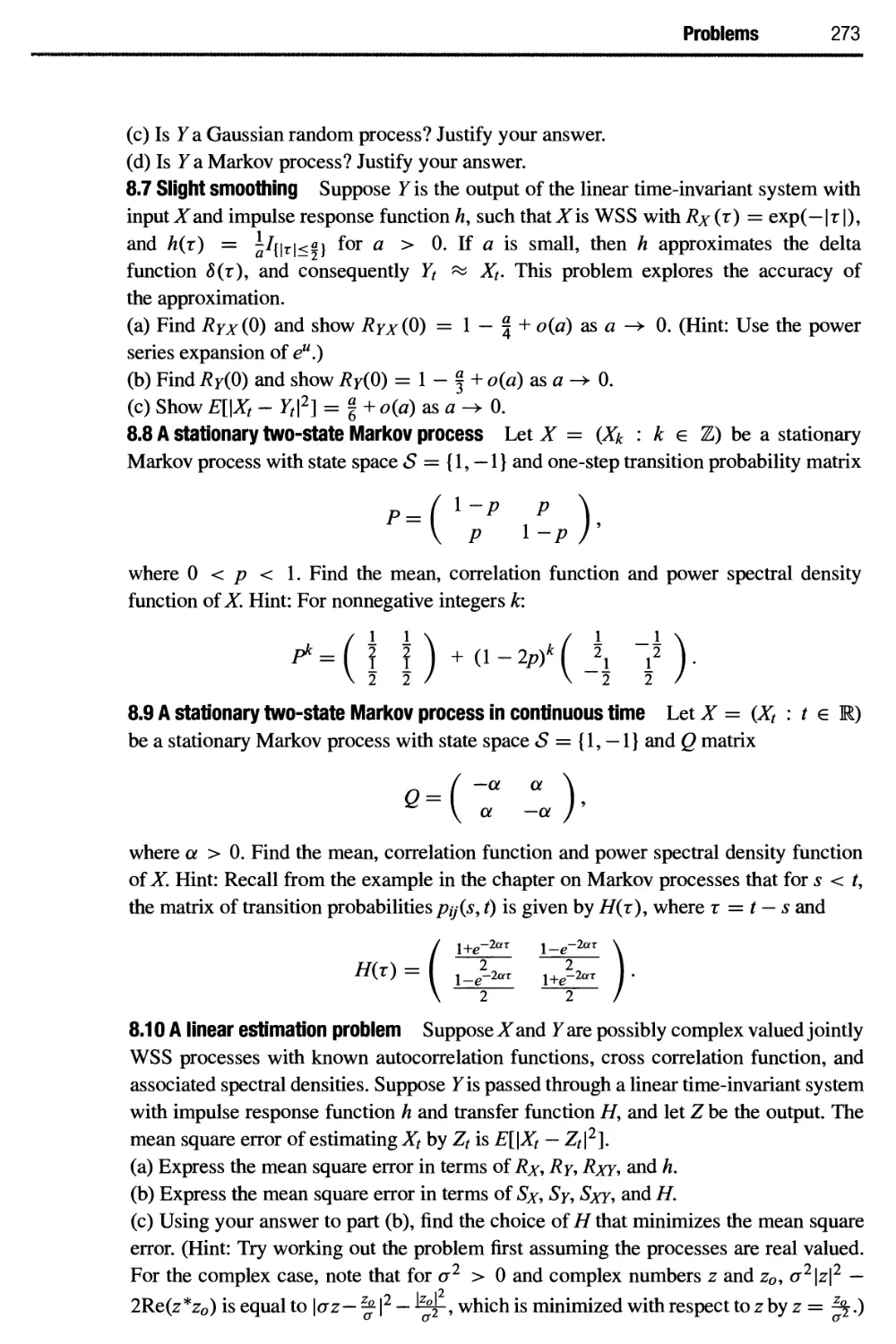

8.3 Discrete-time processes in linear systems 258

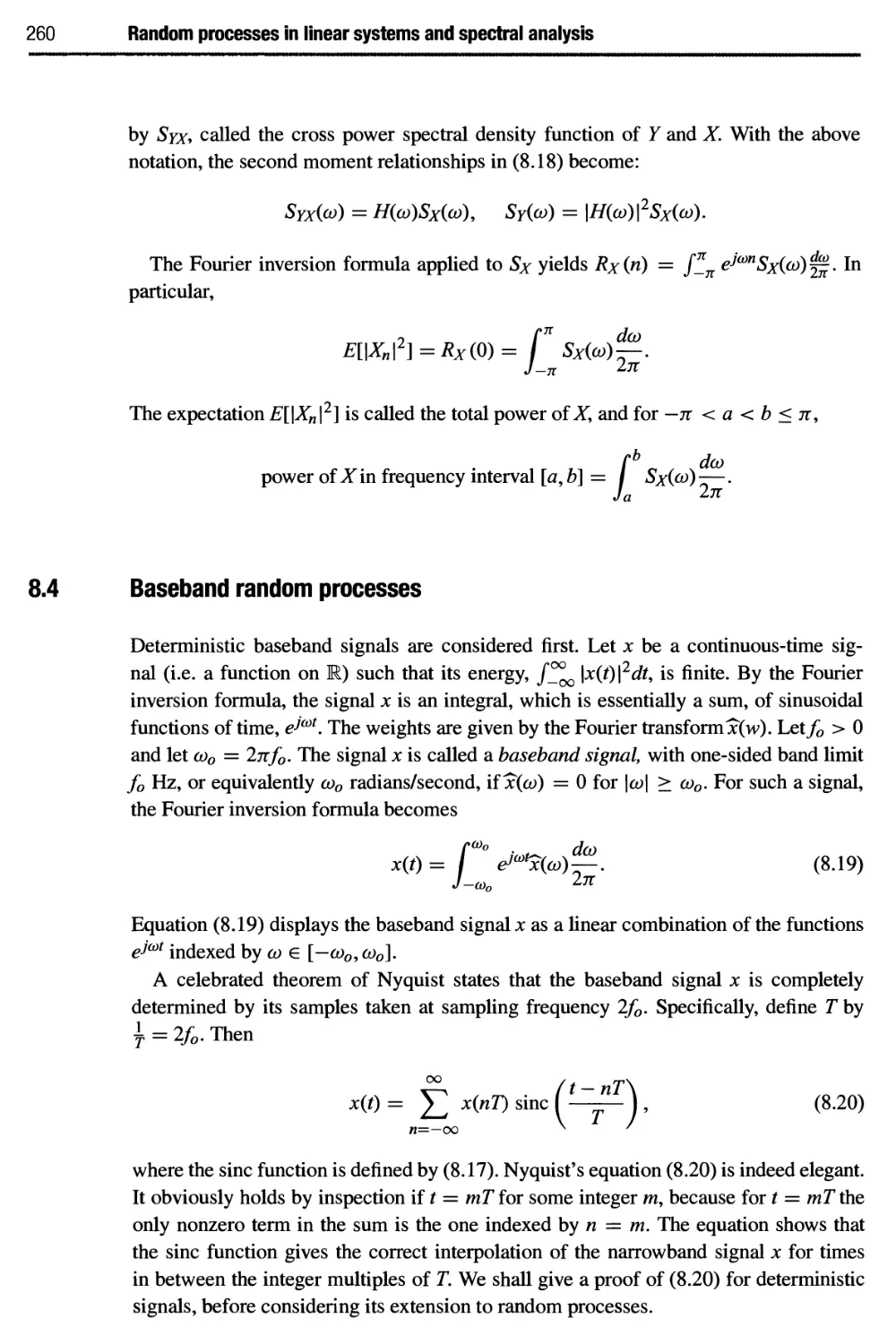

8.4 Baseband random processes 260

8.5 Narrowband random processes 263

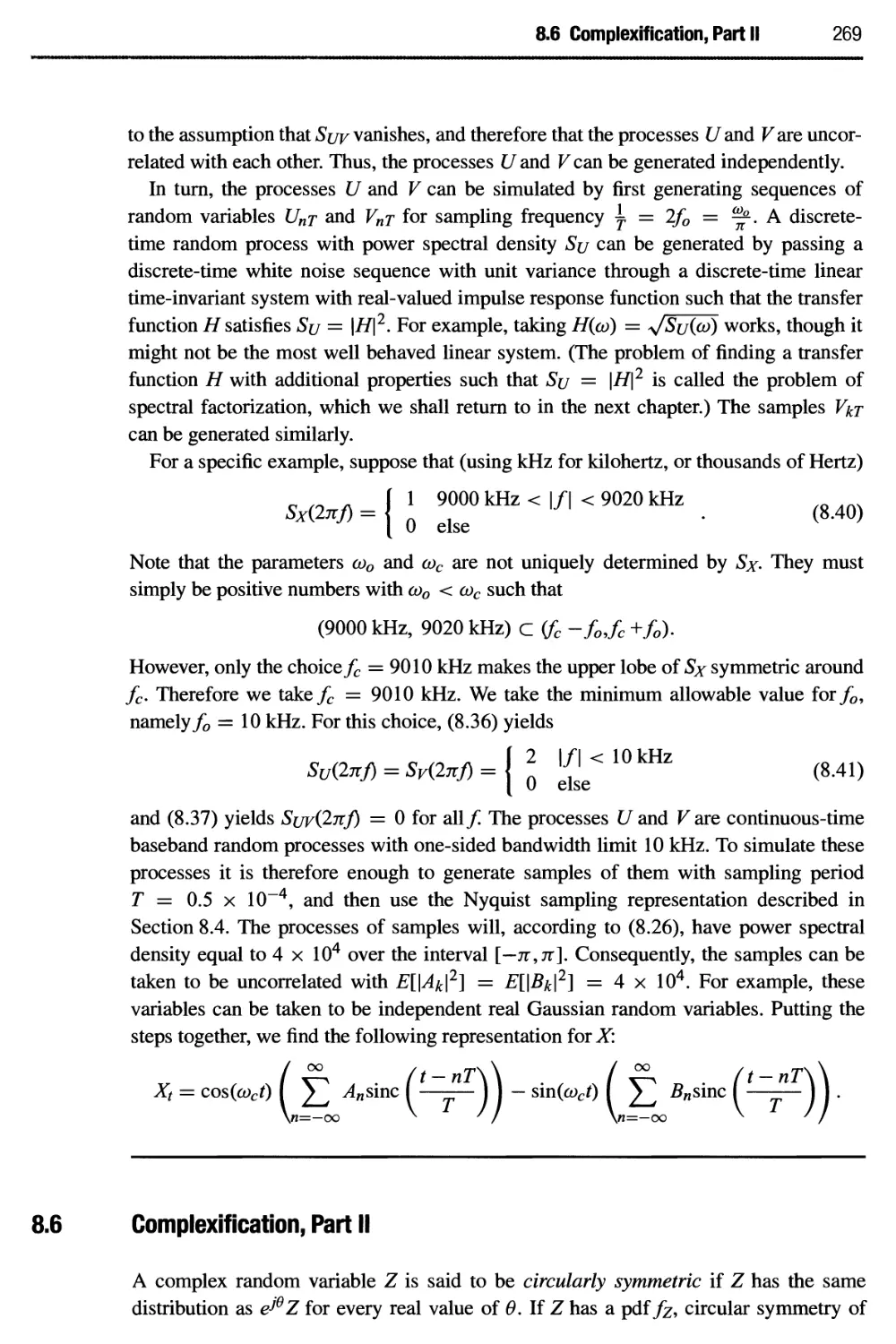

8.6 Complexification, Part II 269

9 Wiener filtering 280

9.1 Return of the orthogonality principle 280

9.2 The causal Wiener filtering problem 283

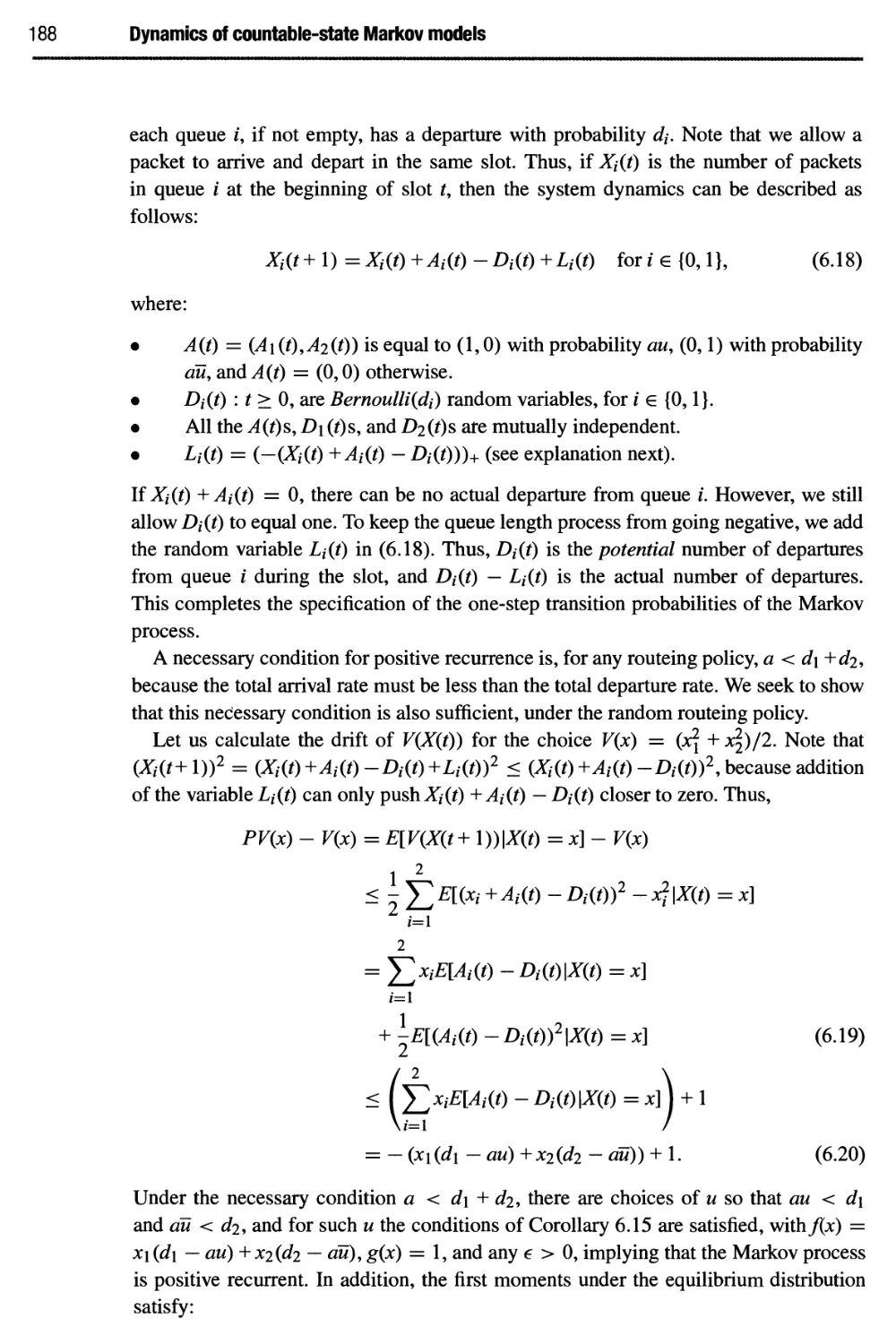

9.3 Causal functions and spectral factorization 284

9.4 Solution of the causal Wiener filtering problem for rational power

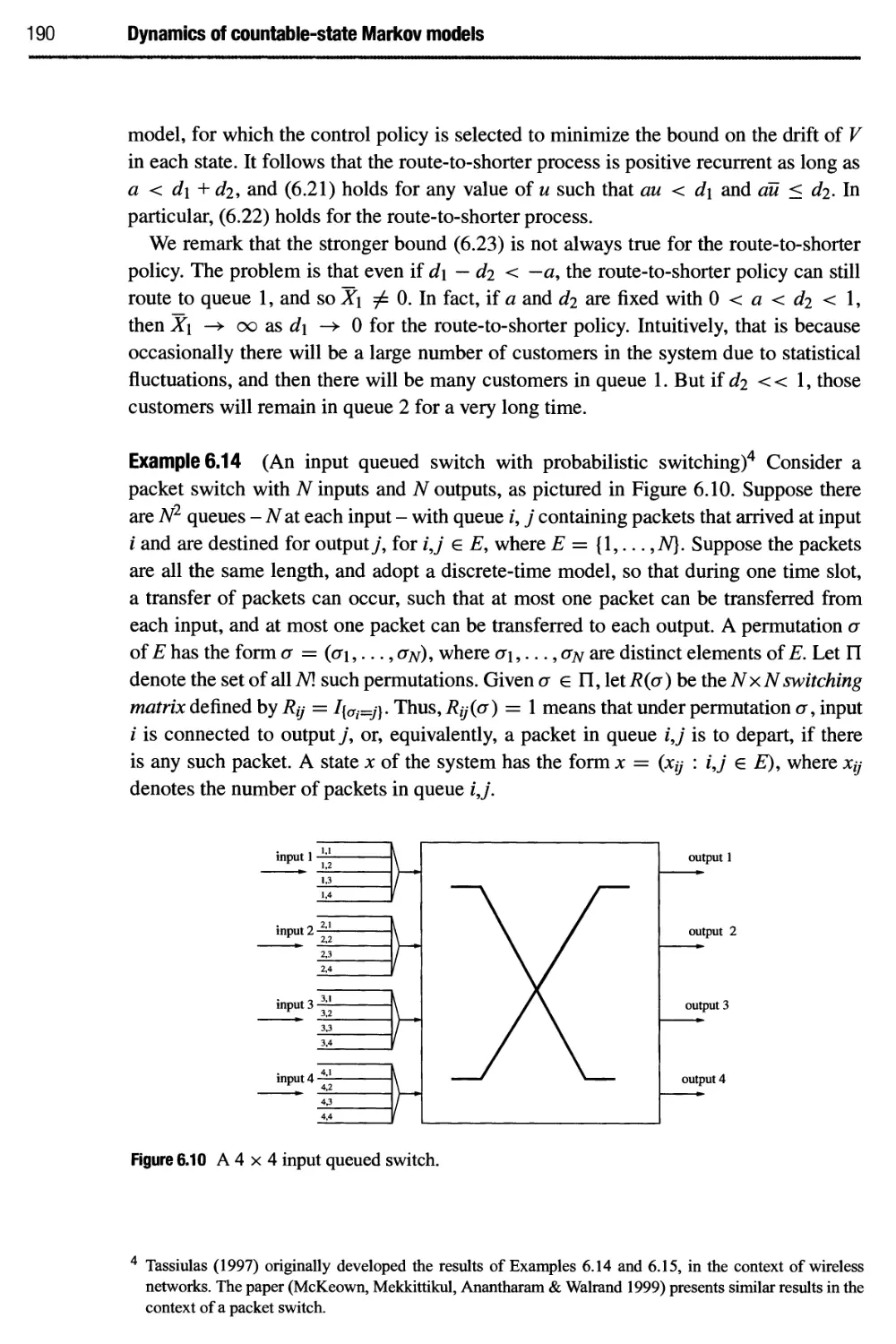

spectral densities 288

9.5 Discrete-time Wiener filtering 292

10 Martingales 304

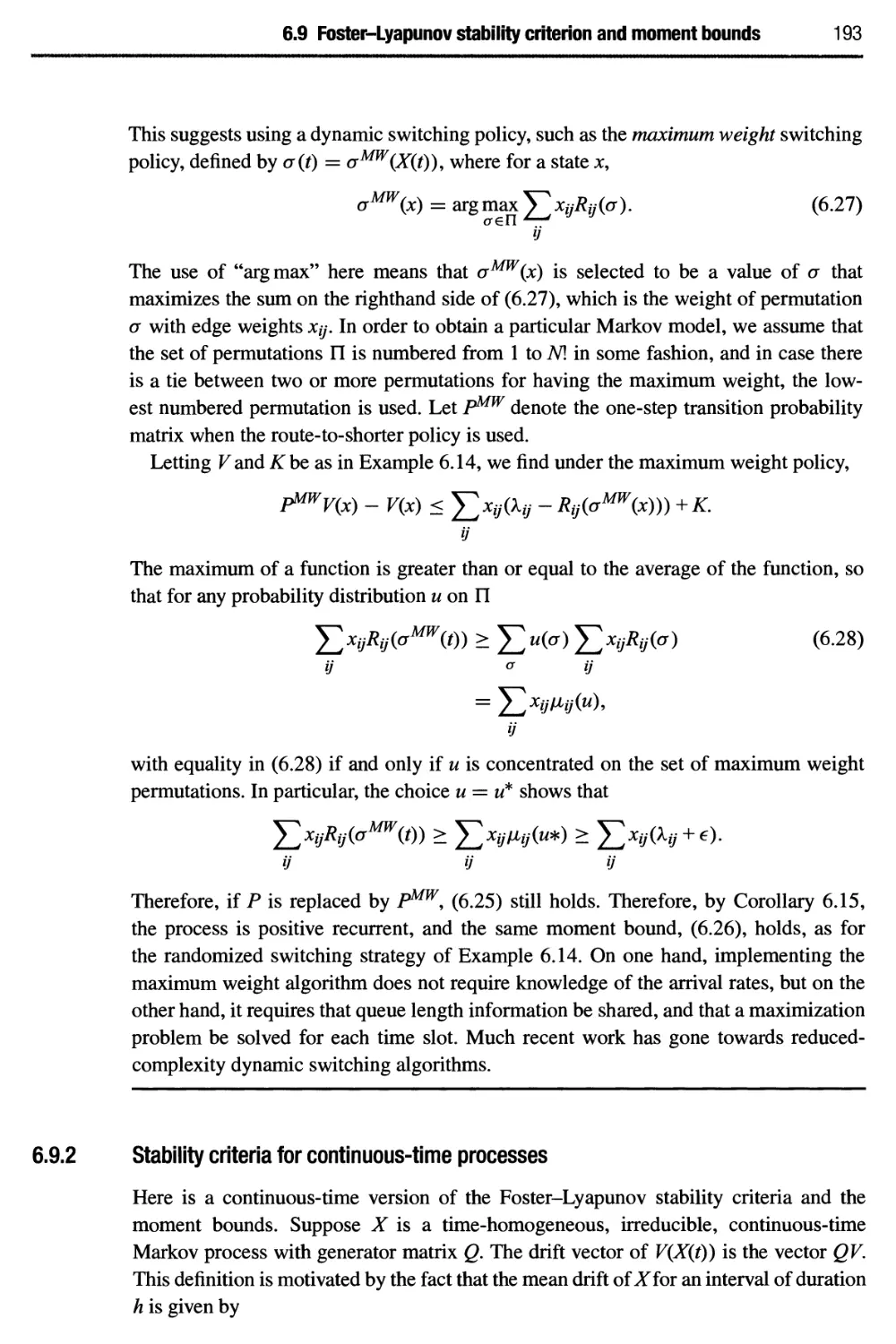

10.1 Conditional expectation revisited 304

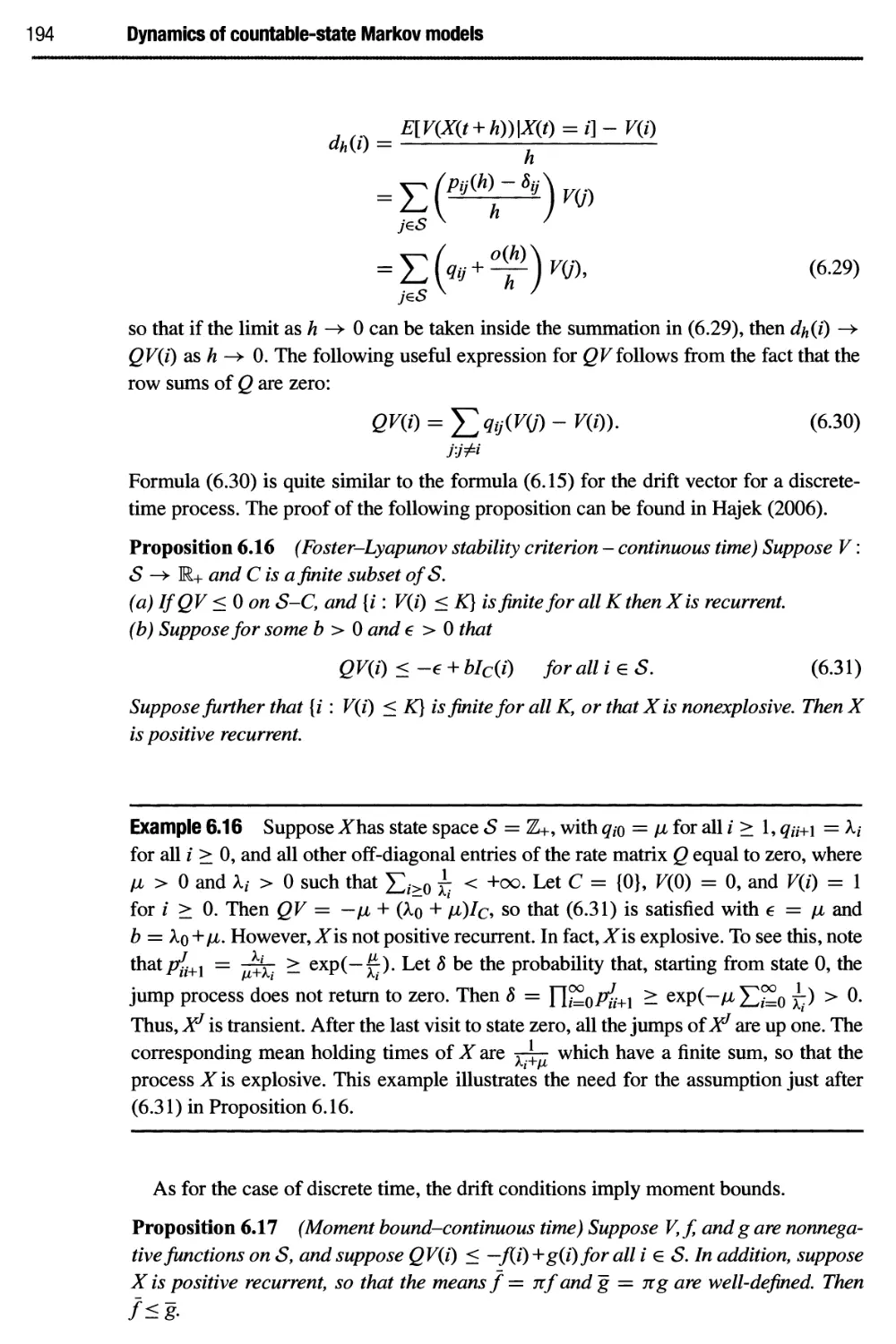

10.2 Martingales with respect to filtrations 310

10.3 Azuma-Hoeffding inequality 313

10.4 Stopping times and the optional sampling theorem 316

10.5 Notes 321

11 Appendix 325

11.1 Some notation 325

11.2 Convergence of sequences of numbers 326

11.3 Continuity of functions 330

11.4 Derivatives of functions 331

11.5 Integration 333

11.5.1 Riemann integration 333

11.5.2 Lebesgue integration 335

11.5.3 Riemann-Stieltjes integration 335

11.5.4 Lebesgue-Stieltjes integration 336

11.6 On convergence of the mean 336

11.7 Matrices 339

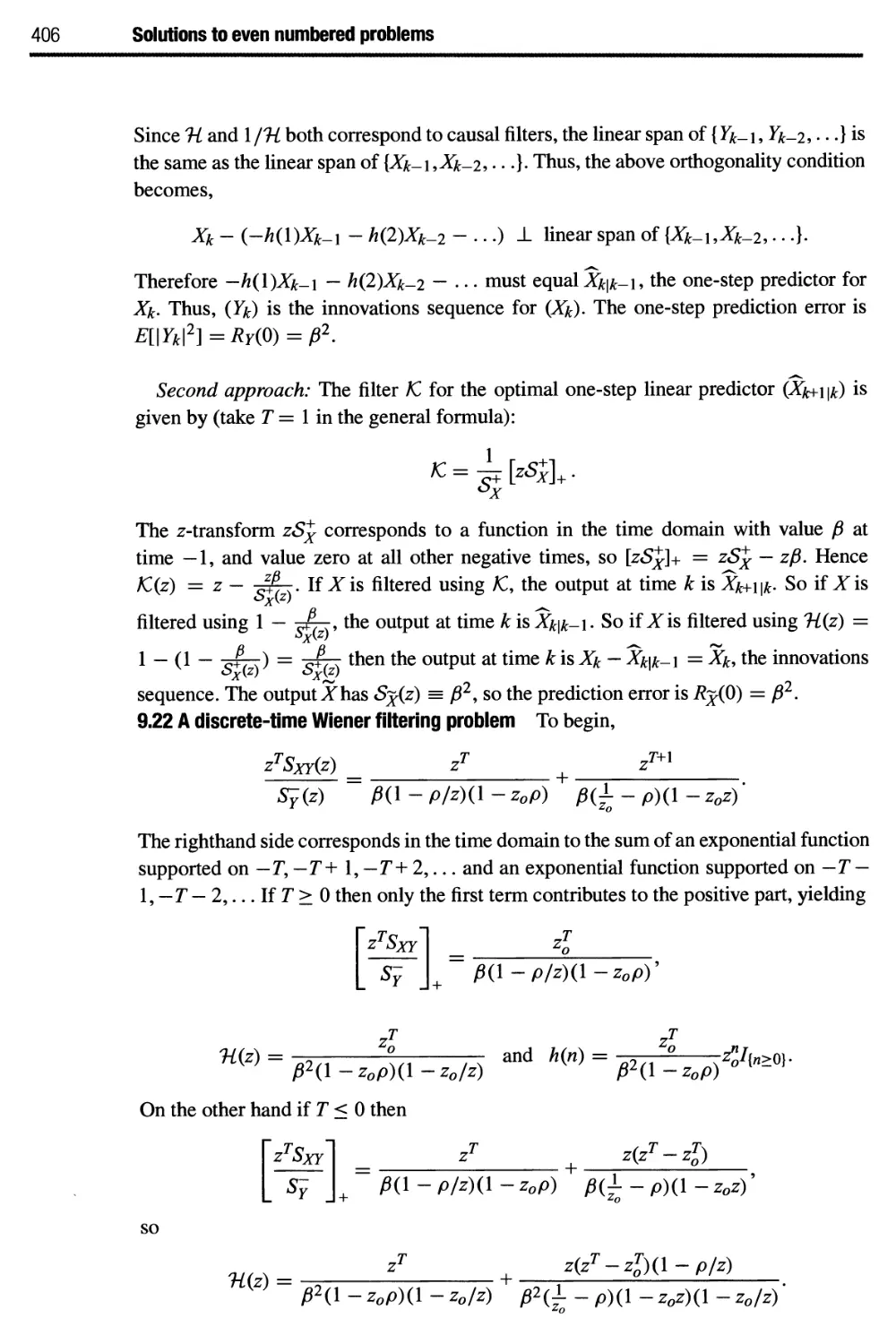

12 Solutions to even numbered problems 344

References 411

Index 412

Preface

From an applications viewpoint, the main reason to study the subject of this book is to

help deal with the complexity of describing random, time-varying functions. A random

variable can be interpreted as the result of a single measurement. The distribution of

a single random variable is fairly simple to describe. It is completely specified by

the cumulative distribution function F(x), a function of one variable. It is relatively

easy to approximately represent a cumulative distribution function on a computer. The

joint distribution of several random variables is much more complex, for in general

it is described by a joint cumulative probability distribution function, F(x\,X2,... ,xw),

which is much more complicated than η functions of one variable. A random process, for

example a model of time-varying fading in a communication channel, involves many,

possibly infinitely many (one for each time instant t within an observation interval)

random variables. Woe the complexity!

This book helps prepare the reader to understand and use the following methods for

dealing with the complexity of random processes:

• Work with moments, such as means and covariances.

• Use extensively processes with special properties. Most notably, Gaussian

processes are characterized entirely by means and covariances, Markov processes

are characterized by one-step transition probabilities or transition rates, and initial

distributions. Independent increment processes are characterized by the

distributions of single increments.

• Appeal to models or approximations based on limit theorems for reduced

complexity descriptions, especially in connection with averages of independent,

identically distributed random variables. The law of large numbers tells us, in a certain

sense, that a probability distribution can be characterized by its mean alone.

The central limit theorem similarly tells us that a probability distribution can

be characterized by its mean and variance. These limit theorems are analogous

to, and in fact examples of, perhaps the most powerful tool ever discovered for

dealing with the complexity of functions: Taylor's theorem, in which a function

in a small interval can be approximated using its value and a small number of

derivatives at a single point.

• Diagonalize. A change of coordinates reduces an arbitrary η-dimensional

Gaussian vector into a Gaussian vector with η independent coordinates. In the new

coordinates the joint probability distribution is the product of η one-dimensional

XI

xii Preface

distributions, representing a great reduction of complexity. Similarly, a random

process on an interval of time is diagonalized by the Karhunen-Loeve

representation. A periodic random process is diagonalized by a Fourier series

representation. Stationary random processes are diagonalized by Fourier transforms.

• Sample. A narrowband continuous time random process can be exactly

represented by its samples taken with sampling rate twice the highest frequency of the

random process. The samples offer a reduced complexity representation of the

original process.

• Work with baseband equivalent. The range of frequencies in a typical wireless

transmission is much smaller than the center frequency, or carrier frequency, of

the transmission. The signal could be represented directly by sampling at twice

the largest frequency component. However, the sampling frequency, and hence

the complexity, can be dramatically reduced by sampling a baseband equivalent

random process.

This book was written for the first semester graduate course on random processes

offered by the Department of Electrical and Computer Engineering at the University

of Illinois at Urbana-Champaign. Students in the class are assumed to have had a

previous course in probability, which is briefly reviewed in the first chapter. Students

are also expected to have some familiarity with real analysis and elementary linear

algebra, such as the notions of limits, definitions of derivatives, Riemann integration,

and diagonalization of symmetric matrices. These topics are reviewed in the appendix.

Finally, students are expected to have some familiarity with transform methods and

complex analysis, though the concepts used are reviewed in the relevant chapters.

Each chapter represents roughly two weeks of lectures, and includes homework

problems. Solutions to the even numbered problems without stars can be found at the end

of the book. Students are encouraged to first read a chapter, then try doing the even

numbered problems before looking at the solutions. Problems with stars, for the most

part, investigate additional theoretical issues, and solutions are not provided.

Hopefully some students reading this book will find the problems useful for

understanding the diverse technical literature on systems engineering, ranging from control

systems, signal and image processing, communication theory, and analysis of a variety

of networks and algorithms. Hopefully some students will go on to design systems, and

define and analyze stochastic models. Hopefully others will be motivated to continue

study in probability theory, going on to learn measure theory and its applications to

probability and analysis in general.

A brief comment is in order on the level of rigor and generality at which this book

is written. Engineers and scientists have great intuition and ingenuity, and routinely

use methods that are not typically taught in undergraduate mathematics courses. For

example, engineers generally have good experience and intuition about transforms, such

as Fourier transforms, Fourier series, and z-transforms, and some associated methods of

complex analysis. In addition, they routinely use generalized functions, in particular the

delta function is frequently used. The use of these concepts in this book leverages on

this knowledge, and it is consistent with mathematical definitions, but full mathematical

Preface xiii

justification is not given in every instance. The mathematical background required for

a full mathematically rigorous treatment of the material in this book is roughly at the

level of a second year graduate course in measure theoretic probability, pursued after a

course on measure theory.

The author gratefully acknowledges the many students and faculty members,

including Todd Coleman, Christoforos Hadjicostis, Jonathan Ligo, Andrew Singer,

R. Srikant, and Venu Veeravalli who gave many helpful comments and suggestions.

Organization

The first four chapters of the book are used heavily in the remaining chapters, so most

readers should cover those chapters before moving on.

Chapter 1 is meant primarily as a review of concepts found in a typical first course on

probability theory, with an emphasis on axioms and the definition of expectation.

Readers desiring a more extensive review of basic probability are referred to the

author's notes for ECE 313 at the University of Illinois.

Chapter 2 focuses on various ways in which a sequence of random variables can

converge, and the basic limit theorems of probability: law of large numbers, central

limit theorem, and the asymptotic behavior of large deviations.

Chapter 3 focuses on minimum mean square error estimation and the orthogonality

principle. Kalman filtering is explained from the geometric standpoint based on

innovations sequences.

Chapter 4 introduces the notion of a random process, and briefly covers several key

examples and classes of random processes. Markov processes and martingales are

introduced in this chapter, but are covered in greater depth in later chapters.

After Chapter 4 is covered, the following four topics can be covered independently

of each other.

Chapter 5 describes the use of Markov processes for modeling and statistical

inference. Applications include natural language processing.

Chapter 6 describes the use o/ Markov processes for modeling and analysis of

dynamical systems. Applications include modeling of queueing systems.

Chapters 7-9 develop calculus for random processes based on mean square

convergence, moving to linear filtering, orthogonal expansions, and ending with causal

and noncausal Wiener filtering.

Chapter 10 explores martingales with respect to filtrations, with emphasis on

elementary concentration inequalities, and on the optional sampling theorem.

In recent one-semester course offerings, the author covered Chapters 1-5, Sections

6.1-6.8, Chapter 7, Sections 8.1-8.4, and Section 9.1. Time did not permit covering

the Foster-Lyapunov stability criteria, noncausal Wiener filtering, and the chapter on

martingales.

A number of background topics are covered in the appendix, including basic notation.

1 A selective review of basic probability

This chapter reviews many of the main concepts in a first level course on probability

theory, with more emphasis on axioms and the definition of expectation than is typical

of a first course.

1.1 The axioms of probability theory

Random processes are widely used to model systems in engineering and scientific

applications. This book adopts the most widely used framework of probability and random

processes, namely the one based on Kolmogorov's axioms of probability. The idea is

to assume a mathematically solid definition of the model. This structure encourages a

modeler to have a consistent, if not accurate, model.

A probability space is a triplet (Ω, J7, V). The first component, Ω, is a nonempty set.

Each element ω of Ω is called an outcome and Ω is called the sample space. The second

component, J7, is a set of subsets of Ω called events. The set of events J7 is assumed

to be a σ-algebra, meaning it satisfies the following axioms (see Appendix 11.1 for set

notation):

A.1 Ω € J",

A.2 If Ле J" thence J",

A.3 If А, В e J7 then AUВ e J7. Also, if A\, Α2,... is a sequence of elements in J7

then U~i Μ e T.

lfA,Be J7, then AB e Τ by A.2, A.3 and the fact AB = (Ac U Bcf. By the same

reasoning, if A\, Α2,... is a sequence of elements in a σ-algebra J7, then Q?f j Ai e J7.

Events Ai, i e /, indexed by a set / are called mutually exclusive if the intersection

AjAj = 0 for all ij e / with i φ]. The final component, P, of the triplet (Ω, F,P) is a

probability measure on Τ satisfying the following axioms:

P.l P(A) > 0 for all A e J7,

P.2 If А, В e Τ and A and В are mutually exclusive, P(A U B) = P(A) + P(B). Also,

if A1, A2,... is a sequence of mutually exclusive events in J7 then Ρ (US 1 Ai) =

Ρ.3Ρ(Ω) = 1.

1

2 A selective review of basic probability

The axioms imply a host of properties including the following. For any subsets A, B,

С οι J·.

• If А с В then P(A) <P(B),

• P(A UB)= P(A) + P(B) - P(AB),

• P(A U В U Q = P(A) + P(B) + P(Q - P(AB) - P(AQ - P(BQ + P(ABQ,

• P(A)+P(AC) = 1,

• P(0) = 0.

Example 1.1 (Toss of a fair coin) Using "/Г for "heads" and "7" for "tails," the toss

of a fair coin is modeled by

Ω = {#,71 T= {{//}, {71, {#, 71,0}

P{//} = Ρ{Γ} = - P{H, T] = 1 P(0) = 0.

Note that, for brevity, we omitted the parentheses and wrote P{H} instead of Р({Щ).

Example 1.2 (Standard unit-interval probability space) Take Ω = {ω : 0 < ω < 1}.

Imagine an experiment in which the outcome ω is drawn from Ω with no preference

towards any subset. In particular, we want the set of events Τ to include intervals, and

the probability of an interval [a, b] with 0 < a < b < 1 to be given by:

P([a,b]) = b-a. (1.1)

Taking a = b, we see that Τ contains singleton sets {a}, and these sets have probability

zero. Since Τ is to be a σ-algebra, it must also contain all the open intervals (a, b) in Ω,

and for such an open interval, P( (a,b)) = b — a. Any open subset of Ω is the union of a

finite or countably infinite set of open intervals, so that Τ should contain all open and all

closed subsets of Ω. Thus, F must contain any set that is the intersection of countably

many open sets, the union of countably many such sets, and so on. The specification of

the probability function Ρ must be extended from intervals to all of J7. It is not a priori

clear how large Τ can be. It is tempting to take Τ to be the set of all subsets of Ω.

However, that idea doesn't work - see Problem 1.37 showing that the length of all subsets of

R can't be defined in a consistent way. The problem is resolved by taking Τ to be the

smallest σ-algebra containing all the subintervals of Ω, or equivalently, containing all

the open subsets of Ω. This σ-algebra is called the Borel σ-algebra for [0,1]» and the sets

in it are called Borel sets. While not every subset of Ω is a Borel subset, any set we are

likely to encounter in applications is a Borel set. The existence of the Borel σ-algebra is

discussed in Problem 1.38. Furthermore, extension theorems of measure theory1 imply

that Ρ can be extended from its definition (1.1) for interval sets to all Borel sets.

See, for example, (Royden 1968) or (Varadhan 2001). The σ -algebra Τ can be extended somewhat further

by requiring the following completeness property: if В с A e Τ with P(A) = 0, then В e Τ (and also

P(B) = 0).

1.1 The axioms of probability theory 3

The smallest σ-algebra, B, containing the open subsets of R is called the Borel

σ-algebra for R, and the sets in it are called Borel subsets ofR. Similarly, the Borel

σ-algebra Bn of subsets of W1 is the smallest σ-algebra containing all sets of the form

[a\,b\] χ [tf2,62] x · · · x [an, bn]. Sets in Bn are called Borel subsets of MP. The class

of Borel sets includes not only rectangle sets and countable unions of rectangle sets, but

all open sets and all closed sets. Virtually any subset of W arising in applications is a

Borel set.

Example 1.3 (Repeated binary trials) Suppose we would like to represent an infinite

sequence of binary observations, where each observation is a zero or one with equal

probability. For example, the experiment could consist of repeatedly flipping a fair coin,

and recording a one each time it shows heads and a zero each time it shows tails. Then

an outcome ω would be an infinite sequence, ω = (ω\,α>2,···), such that for each

i > 1, (Oi € {0,1}. Let Ω be the set of all such &>s. The set of events can be taken to

be large enough so that any set that can be defined in terms of only finitely many of

the observations is an event. In particular, for any binary sequence (b\,- · · , bn) of some

finite length n, the set [ω e Ω : ω,- = bi for 1 < / < n] should be in T, and the

probability of such a set is taken to be 2~n.

There are also events that don't depend on a fixed, finite number of observations. For

example, let F be the event that an even number of observations is needed until a one is

observed. Show that F is an event and then find its probability.

Solution

For к > 1, let Ek be the event that the first one occurs on the Ath observation. So Ek =

{ω : ω\ = a>2 = — - = ω#-ΐ = 0 and a>k = 1}. Then Ek depends on only a finite number

of observations, so it is an event, and P{Ek) = 2~k. Observe that F = E2 UE4 UEe U...,

so F is an event by Axiom A.3. Also, the events £2,^4,... are mutually exclusive, so

by the full version of Axiom P.2:

/>(^=№)+p(£4)+... = l(i + (i) + (I)2+...) = TiT = I.

The following lemma gives a continuity property of probability measures which is

analogous to continuity of functions on Rw, reviewed in Appendix 11.3. If B\,B2,... is

a sequence of events such that B\ С В2 С #з С ..., then we can think that Bj converges

to the set Ugji?,· as 7 -> 00. The lemma states that in this case, P{Bj) converges to the

probability of the limit set as jr —> 00.

Lemma 1.1 (Continuity of probability) Suppose B\,B2,...isa sequence of events.

(a) IfBx CB2C··· then liny-oo P(Bj) = Ρ flj~i Bi) ·

(b) IfBx DB2D··· then lim^ P(Bj) = Ρ (Π£ι Bf) .

4 A selective review of basic probability

Figure 1.1 A sequence of nested sets.

Proof Suppose 5i eft С .... Let D\ = ft, ft = ft — ft, and, in general, let

A = ft - ft_i for ι > 2, as shown in Figure 1.1. Then P(Bj) = jji=l Р(Д) for each

j > 1, so

J

lim P(Bj) = lim Г?(Д·)

—von ^ /Unn ^—-^

y-»oo

^°°£l

(a)

^ Еад

i=l

■'&*) - -&■)

where (л) is true by the definition of the sum of an infinite series, and (b) is true by

axiomP.2. This proves Lemma 1.1(a). Lemma 1.1(b) can be proved similarly, or can be

derived by applying Lemma 1.1(a) to the sets Bc-. D

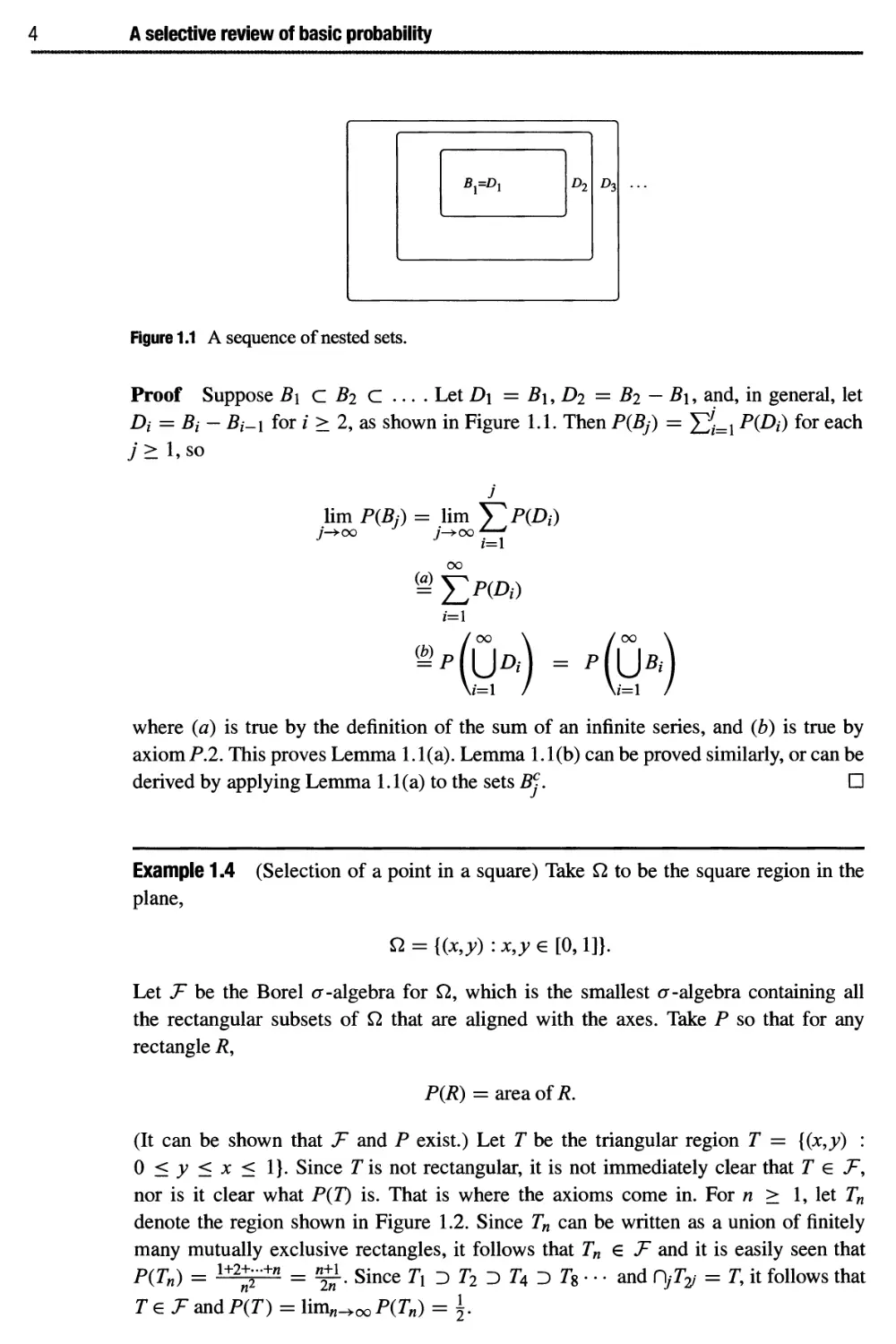

Example 1-4 (Selection of a point in a square) Take Ω to be the square region in the

plane,

Ω = {(x,y) : x,y g [0,1]}.

Let Τ be the Borel σ-algebra for Ω, which is the smallest σ-algebra containing all

the rectangular subsets of Ω that are aligned with the axes. Take Ρ so that for any

rectangle ft

P(R) = area of/?.

(It can be shown that Τ and Ρ exist.) Let Τ be the triangular region Τ = {(x,y) :

0 < у < χ < 1}. Since Г is not rectangular, it is not immediately clear that Τ e J7,

nor is it clear what P(T) is. That is where the axioms come in. For η > 1, let Tn

denote the region shown in Figure 1.2. Since Tn can be written as a union of finitely

many mutually exclusive rectangles, it follows that Tn € Τ and it is easily seen that

P(Tn) = 1+2+-"+w = *gi. Since 7Ϊ э Г2 Э Г4 Э Г8 · · · and Π,Γ^ = Γ, it follows that

Те Τ andP(T) = lim^ooP^) = {.

1.2 Independence and conditional probability 5

l·'

T„

Figure 1.2 Approximation of a triangular region.

The reader is encouraged to show that if С is the diameter one disk inscribed within

Ω then P(Q = (area of C) = f.

1.2

Independence and conditional probability

Events A\ and A2 are defined to be independent if P(A\A2) = P(A\)P(A2). More

generally, events A\ ,A2,... ,Ak are defined to be independent if

P(AhAh ... Atj) = P(Ah)P(Ah).. .Ρ(Αφ

whenever7 and /1, /2,..., ij are integers withy > 1 and 1 < /1 < /2 < · · · < ij < k. For

example, events А\,А2,Аз are independent if the following four conditions hold:

Ρ(4ιΑ2) = Ρ(Αι)Ρ(Α2)9

Ρ(4ιΑ3)=Ρ(Αι)Ρ(Α3)9

P(A2A3)=P(A2)P(A3),

P(AlA2A3)=P(Al)P(A2)P(A3).

A weaker condition is sometimes useful: Events A \,..., Ak are defined to be pairwise

independent if Ai is independent of Aj whenever 1 < / < j < k. Independence of к

events requires that 2k — к — 1 equations hold: one for each subset of {1,2,... ,k) of size

at least two. Pairwise independence only requires that (2) = -Цг-^ equations hold.

If A and В are events and P(B) φ 0, then the conditional probability oiA given В is

defined by

P(A I B) =

P(AB)

P(B) '

It is not defined if P(B) = 0, which has the following meaning. If you were to write a

computer routine to compute P(A \ B) and the inputs are P(AB) = 0 and P(B) = 0,

your routine shouldn't simply return the value 0. Rather, your routine should generate an

error message such as "input error - conditioning on event of probability zero." Such an

error message would help you or others find errors in larger computer programs which

use the routine.

6 A selective review of basic probability

As a function of A for В fixed with P(B) φ 0, the conditional probability of A given

В is itself a probability measure for Ω and J7. More explicitly, fix В with P(B) φ 0. For

each event A define P'{A) = P(A \ B). Then (Ω, F,P') is a probability space, because

P' satisfies the axioms P\ — P3. (Try showing that.)

If A and В are independent then Ac and В are independent. Indeed, if A and В are

independent then

P(ACB) = P(B) - P(AB) = (1 - P(A))P(B) = P(AC)P(B).

Similarly, if A, B, and С are independent events then А В is independent of C. More

generally, suppose E\,E2,... ,En are independent events, suppose η = щ + · · · + nk

with щ > 1 for each /, and suppose F\ is defined by Boolean operations (intersections,

complements, and unions) of the first n\ events E\,...,Eni,F2 is defined by Boolean

operations on the next «2 events, Eni+\,... ,Eni+rl2, and so on. Then F\,.. .,Fk are

independent.

Events E\,..., Ek are said to form a partition of Ω if the events are mutually exclusive

and Ω = E\ U.. .UEk. Of course for a partition, P(E\)+.. ЛР{Ек) = 1. More generally,

for any event A, the law of total probability holds because A is the union of the mutually

exclusive sets AE\,AE2,... ,AEk'.

P(A)=P(AEi) + ...+P(AEk).

If P(Ei) φ 0 for each /, this can be written as

P(A) = P(A | Ει)Ρ(Ει) + ...+P(A\ Ek)P{Ek).

Figure 1.3 illustrates the condition of the law of total probability.

Judicious use of the definition of conditional probability and the law of total

probability leads to Bayes'formula for P(E( | A) (if P(A) φ 0) in simple form

P(AEj) P(A | EdPjEj)

P(Ei A) = = ,

P(A) P(A)

or in expanded form:

P(A | ЕдР{Ед

P(Ei | A) =

P(A | EX)P{EX) + ...+P{A\ Ek)P(Ek)

The remainder of this section gives the Borel-Cantelli lemma. It is a simple result

based on continuity of probability and independence of events, but it is not typically

Figure 1.3 Partitioning a set A using a partition of Ω.

1.2 Independence and conditional probability 7

encountered in a first course on probability. Let (An : η > 0) be a sequence of events

for a probability space (Ω, J7, P).

Definition 1.2 The event [An infinitely often} is the set of ω e Ω such that ω e An for

infinitely many values of n.

Another way to describe {An infinitely often} is that it is the set of ω such that for any

k, there is an η > к such that ω e An. Therefore,

[An infinitely often} = n*>i (Un>kAn) .

For each k, the set Un>kAn is a countable union of events, so it is an event, and {An

infinitely often} is an intersection of countably many such events, so that [An infinitely

often} is also an event.

Lemma 1.3 (Borel-Cantelli lemma) Let (An : η > 1) be a sequence of events and let

pn = P{An).

(a) tfY^i Pn < oo, then P{An infinitely often] = 0.

(b) VY1^L\Pn = oo andA\,A2,... are mutually independent, then

P{An infinitely often] = 1.

Proof (a) Since {An infinitely often} is the intersection of the monotonically non-

increasing sequence of events Un>kAn, it follows from the continuity of

probability for monotone sequences of events (Lemma 1.1) that P{An infinitely often} =

\\тк-*ооР(У)п>кАп)· Lemma 1.1, the fact that the probability of a union of events

is less than or equal to the sum of the probabilities of the events, and the definition of

the sum of a sequence of numbers, yield that for any к > 1,

m oo

P(Un>kAn) = lim P(U™=kAn) < lim Υ\ρη = У>„.

n=k n=k

Therefore, P{An infinitely often} < lim^ooZ!SU/V If Σ^ιΡη < °°> tnen

lim^oo Y^LkPn = 0, which implies part (a) of the lemma.

(b) Suppose that Y^L\Pn = +oo and that the events A\,A2, · · · are mutually

independent. For any к > 1, using the fact 1 — и < exp(—u) for all w,

PQJn>kAn)

m

= lim P{U™=kAn) = lim 1 - Y\(\ -p„)

n=k

> lim 1 - exp

m—>oo

(- Σ,Ρη J = 1 - exp I - Σ?η ) = l~ exP(_0°) = L

n=k / \ n=k I

Therefore, P{An infinitely often} = lim^oo P(^n>k^n) = 1· Π

Example 1.5 Consider independent coin tosses using biased coins, such that P(An) =

pn = 1, where An is the event of getting heads on the nth toss. Since Y^L\ ^ = +oo,

δ Α selective review of basic probability

the part of the Borel-Cantelli lemma for independent events implies that P{An infinitely

often} = 1.

Example 1.6 Let (Ω,Τ,Ρ) be the standard unit-interval probability space defined

in Example 1.2, and let An = [0, ^). Then pn = £ and An+\ С An for η > 1.

The events are not independent, because for m < n, P(AmAn) = P(An) = - φ

P(Am)P(An). Of course 0 e An for all n. But for any ω e (0,1], ω g An for η > ^.

Therefore, {An infinitely often} = {0}. The single point set {0} has probability zero,

so P{An infinitely often} = 0. This conclusion holds even though Σ™=\Ρη = +oo,

illustrating the need for the independence assumption in Lemma 1.3(b).

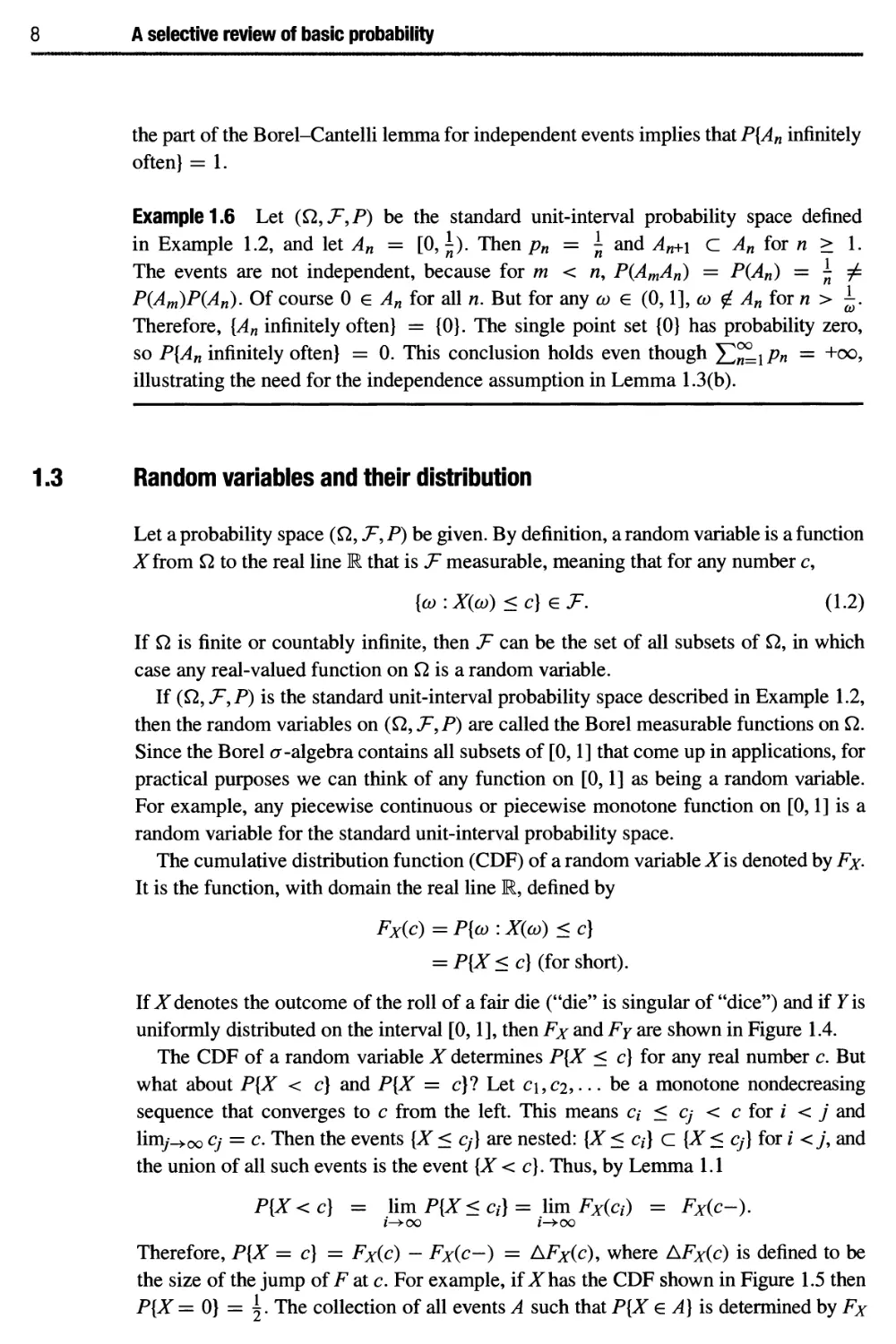

1.3 Random variables and their distribution

Let a probability space (Ω, J7, P) be given. By definition, a random variable is a function

ΛΓ from Ω to the real line R that is Τ measurable, meaning that for any number c,

{ω:Χ(ω)<ο} e J\ (1.2)

If Ω is finite or countably infinite, then Τ can be the set of all subsets of Ω, in which

case any real-valued function on Ω is a random variable.

If (Ω, Τ,Ρ) is the standard unit-interval probability space described in Example 1.2,

then the random variables on (Ω, Τ,Ρ) are called the Borel measurable functions on Ω.

Since the Borel σ -algebra contains all subsets of [0,1] that come up in applications, for

practical purposes we can think of any function on [0,1] as being a random variable.

For example, any piecewise continuous or piecewise monotone function on [0,1] is a

random variable for the standard unit-interval probability space.

The cumulative distribution function (CDF) of a random variable X'\$ denoted by Fx.

It is the function, with domain the real line R, defined by

Fx(c) = Ρ{ω : Χ{ω) < с]

= P{X < с] (for Short).

If ^f denotes the outcome of the roll of a fair die ("die" is singular of "dice") and if У is

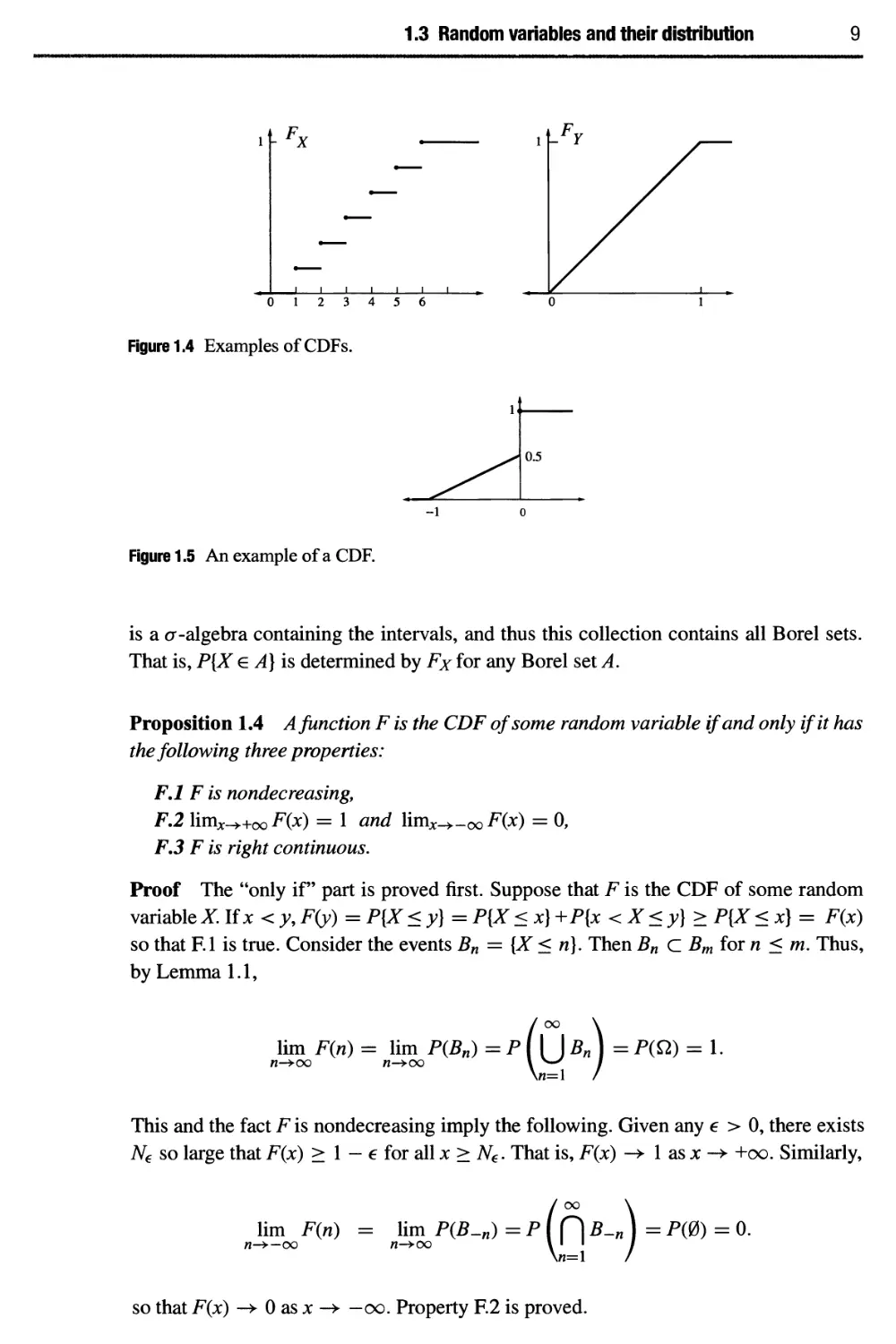

uniformly distributed on the interval [0,1]» then Fx and Fy are shown in Figure 1.4.

The CDF of a random variable X determines P{X < c] for any real number c. But

what about P{X < c] and P{X = c}? Let c\,C2, · · · be a monotone nondecreasing

sequence that converges to с from the left. This means a < Cj < с for i < j and

limy^oo Cj = c. Then the events [X < Cj] are nested: [X < q} С {X < Cj] for / <j, and

the union of all such events is the event {X < c]. Thus, by Lemma 1.1

P{X<c] = \imP{X<a}= limFxia) = Fx(c-).

Therefore, P{X = c] = Fx(c) - Fx(c-) = AFx(c), where AFx(c) is defined to be

the size of the jump of F at c. For example, if X has the CDF shown in Figure 1.5 then

P{X = 0} = \. The collection of all events A such that P[X e A] is determined by Fx

1.3 Random variables and their distribution

9

YFx

2 3 4 5 6

Figure 1.4 Examples of CDFs.

Figure 1.5 An example of a CDF.

is a σ-algebra containing the intervals, and thus this collection contains all Borel sets.

That is, P{X e A] is determined by Fx for any Borel set A.

Proposition 1.4 A function F is the CDF of some random variable if and only if it has

the following three properties:

F.l F is nondecreasing,

F.2 \imx-++<X) F(x) = 1 and lim^-oo^C*) = 0,

F.3 F is right continuous.

Proof The "only if" part is proved first. Suppose that F is the CDF of some random

variable^. If χ < у, F(y) = P{X <y} = P{X < x] +P{x < X < y] > P{X < x] = F(x)

so that F.l is true. Consider the events Bn = [X < n]. Then Bn с Bm for η < m. Thus,

by Lemma 1.1,

lim F(n) = lim P(Bt

w-»oo w-»oo

n)=pl[JBn\ =

Ρ(Ω) = 1.

This and the fact F is nondecreasing imply the following. Given any e > 0, there exists

N€ so large that F(x) > 1 - 6 for all χ > N€. That is, F(x) -> 1 as χ -> +oo. Similarly,

lim F(n) = lim P(B

л->—oo л->оо

-n)=p([\B-") =

P№ = o.

so that F(x) -¥■ 0 as χ -> —oo. Property F.2 is proved.

10 A selective review of basic probability

The proof of F.3 is similar. Fix an arbitrary real number x. Define the sequence of

events An for η > 1 by An = [X < χ + ^}. Then An cAmfovn>m so

lim F

w-»oo

(χ+λΛ=\\^Ρ{Αη)=ρΙ^^

Convergence along the sequence χ + ^, together with the fact that F is nondecreasing,

implies that F(x+) = F(x). Property F.3 is thus proved. The proof of the "only if

portion of Proposition 1.4 is complete.

To prove the "if part of Proposition 1.4, let F be a function satisfying properties

F.1-F.3. It must be shown that there exists a random variable with CDF F. Let Ω = R

and let Τ be the set В of Borel subsets of R. Define Ρ on intervals of the form (a, b] by

P((a, b]) = F(b) — F(a). It can be shown by an extension theorem of measure theory

that Ρ can be extended to all of Τ so that the axioms of probability are satisfied. Finally,

let Χ(ω) = ω for all ω e Ω. Then

P(Xe(a,b])=P((a,b]) = F(b) - F(a).

Therefore, ^has CDF F. So F is a CDF, as was to be proved. D

The vast majority of random variables described in applications are one of two types,

to be described next. A random variable X is a discrete random variable if there is a

finite or countably infinite set of values {xj : / e 1} such that P{X e {*,· : ί e 7}} = 1.

The probability mass function (pmf) of a discrete random variable X, denoted px(x), is

defined by px(x) = P[X = x]. Typically the pmf of a discrete random variable is much

more useful than the CDF. However, the pmf and CDF of a discrete random variable are

related by ρχ(χ) = AFx(x) and conversely,

Fx(x)= Σρχψ\ (1.3)

y:y<x

where the sum in (1.3) is taken only over>> such thatpxiy) Φ 0. If ^f is a discrete random

variable with only finitely many mass points in any finite interval, then Fj^is a piecewise

constant function.

A random variable Xis a continuous random variable if the CDF is the integral of a

function:

FX{x) = Γ fxiy)dy.

«/—oo

The function/^ is called the probability density function (pdf). If the pdf/Hs continuous

at a point x, then the value fx{x) has the following nice interpretation:

1 Γχ+ε

fx(x) = lim - / fxiy)dy

ε^ο ε Jx

= lim -P{x < X < χ + ε].

ε^Ο ε

1.4 Functions of a random variable 11

If A is any Borel subset of R, then

P{XeA}= ffx(x)dx. (1.4)

Ja

The integral in (1.4) can be understood as a Riemann integral if A is a finite union of

intervals and/is piecewise continuous or monotone. In general,/^ is required to be

Borel measurable and the integral is defined by Lebesgue integration.2

Any random variable Jonan arbitrary probability space has a CDF Fx. As noted in

the proof of Proposition 1.4 there exists a probability measure Ρχ (called Ρ in the proof)

on the Borel subsets of R such that for any interval (я, b],

Px((a,b])=P{Xe(a,b]}.

We define the probability distribution of Xto be the probability measure Ρχ. The

distribution Ρχ is determined uniquely by the CDF Fx. The distribution is also determined by

the pdf/Hf -^is continuous type, or the pmf ρχϊί Xis discrete type. In common usage,

the response to the question "What is the distribution of ΧΊ" is answered by giving one

or more of Fx, fx, or ρχ, or possibly a transform of one of these, whichever is most

convenient.

1.4 Functions of a random variable

Recall that a random variable X on a probability space (Ω, F,P) is a function mapping

Ω to the real line R, satisfying the condition [ω : Χ(ω) < a] e Τ for all agI. Suppose

g is a function mapping R to R that is not too bizarre. Specifically, suppose for any

constant с that \x : g(x) < c] is a Borel subset of R. Let Υ(ω) = g{X{a))). Then Υ maps

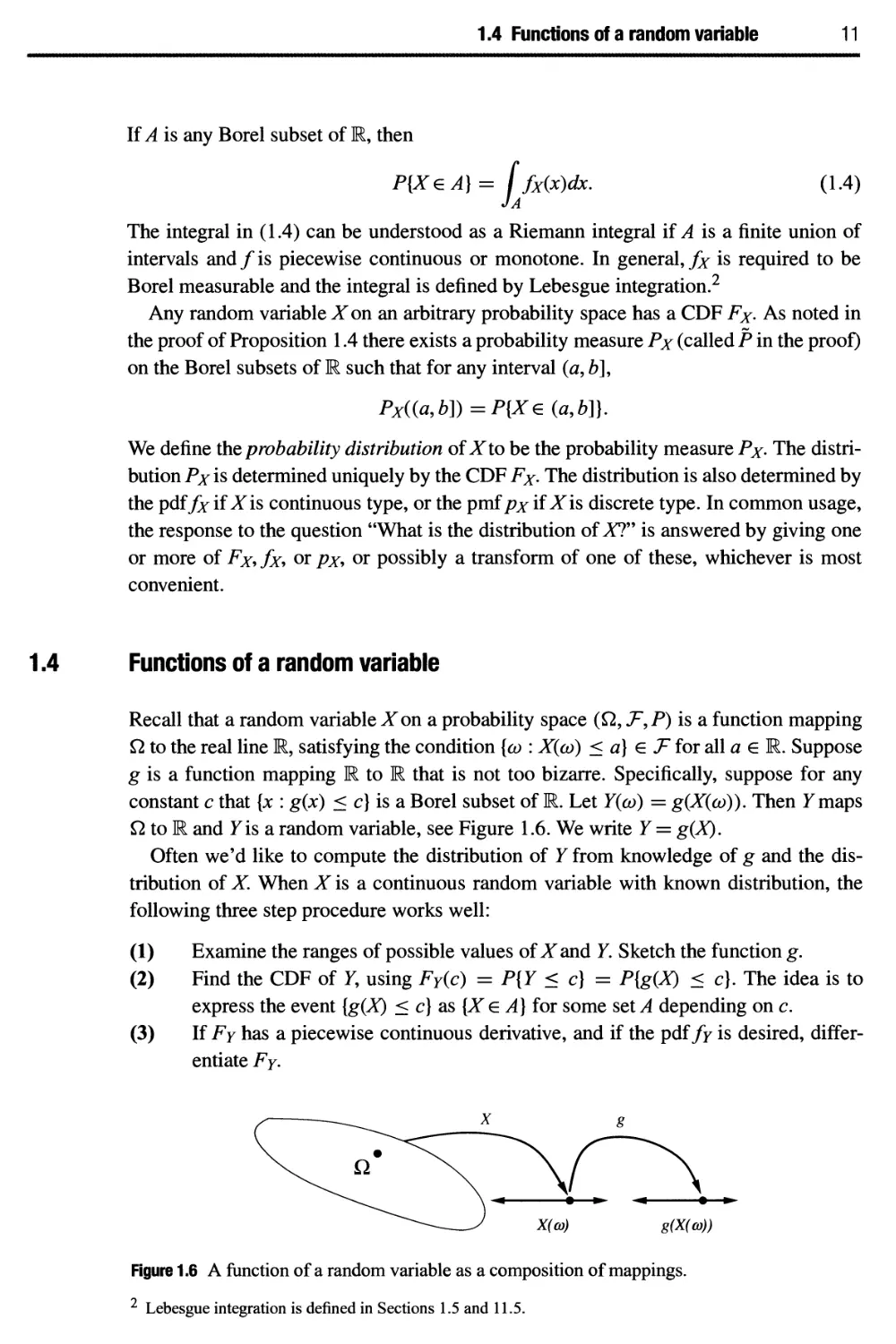

Ω to R and Υ is a random variable, see Figure 1.6. We write Υ = g(X).

Often we'd like to compute the distribution of Υ from knowledge of g and the

distribution of X. When X is a continuous random variable with known distribution, the

following three step procedure works well:

(1) Examine the ranges of possible values of X and Y. Sketch the function g.

(2) Find the CDF of 7, using FY(c) = P{Y < c] = P{g(X) < c]. The idea is to

express the event [g(X) < c] as [X e A] for some set A depending on с

(3) If Fy has a piecewise continuous derivative, and if the puffy is desired,

differentiate Fy.

Figure 1.6 A function of a random variable as a composition of mappings.

2 Lebesgue integration is defined in Sections 1.5 and 11.5.

12 A selective review of basic probability

If instead Xis a discrete random variable then step 1 should be followed. After that

the pmf of Υ can be found from the pmf of fusing

pr(y)

P{g(X)=y}= Σ Ρ**)-

x:g(x)=y

Example 1.7 Suppose Xis an Ν(μ = 2, σ2 = 3) random variable (see Section 1.6 for

the definition) and Υ = X2. Let us describe the density of Y. Note that Υ = g(X) where

g(x) = χ2. The support of the distribution of ΛΓis the whole real line, and the range of g

over this support is R+. Next we find the CDF, FY. Since P{Y > 0} = 1, FY(c) = 0 for

с < 0. For с > 0,

FY(c) = P{X2 <c}=P{-yfi<X<yfi]

= P

-y/c ■

■2 X-2 Jc-2

— < < v

л/3 л/3 л/3

f-j~c-2

}

Differentiate with respect to c, using the chain rule and O'(s) = —=exp(— y), to

)

obtain

fr(c) =

/247ГС

«К-[^П+ехр(-№Г)1 lfci0

0

ifc<0

Example 1.8 Suppose a vehicle is traveling in a straight line at speed a, and that a

random direction is selected, subtending an angle Θ from the direction of travel which

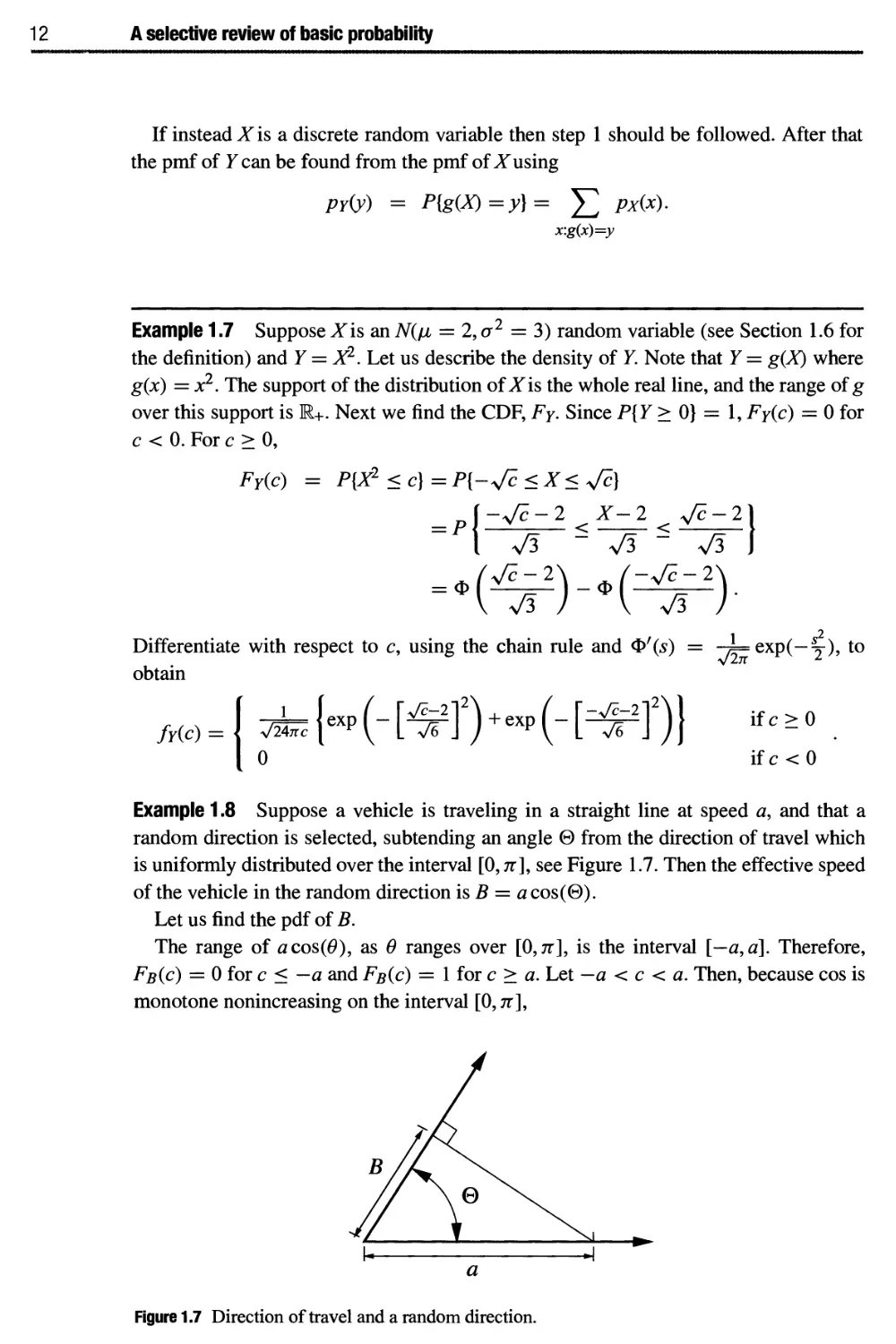

is uniformly distributed over the interval [0, π], see Figure 1.7. Then the effective speed

of the vehicle in the random direction is В = a cos(0).

Let us find the pdf of B.

The range of я cos(#), as θ ranges over [Ο,π], is the interval [—a, a]. Therefore,

Fb(c) = 0 for с < —a and Fb(c) = 1 for с > a. Let — a < с < a. Then, because cos is

monotone nonincreasing on the interval [0, π],

Figure 1.7 Direction of travel and a random direction.

1.4 Functions of a random variable 13

FB(c) = P{tfcos(0) < c] =PJcos(0) < -j

= p{0>cos-'Q)

= 1

cos

-1 (c

(h)

π

Therefore, because cos l (y) has derivative, -(1 - y2) 2,

I —/ \ >> \ с \< а

Mc)=\ *JF=?

О \ с \> a

A sketch of the density is given in Figure 1.8.

Example 1-9 Suppose Υ = tan(0), as illustrated in Figure 1.9, where Θ is uniformly

distributed over the interval (-f, f) · Let us find the pdf of Y. The function tan(#)

increases from -00 to 00 as θ ranges over the interval (-f, f )·

For any real c,

FY(c)=P{Y<c]

= P{tan(0) < c]

λ tan-4c) + f

= Ρ{Θ < tan-1 (с)} = ——2..

π

Differentiating the CDF with respect to с yields that Υ has the Cauchy pdf:

1

fY(c)

n(l+c2)

00 < с < oo.

-a 0 a

Figure 1.8 The pdf of the effective speed in a uniformly distributed direction in two dimensions.

Figure 1.9 A horizontal line, a fixed point at unit distance, and a line through the point with

random direction.

14 A selective review of basic probability

Example 1.10 Given an angle θ expressed in radians, let (Θ mod 2π) denote the

equivalent angle in the interval [0,2π]. Thus, (Θ mod 2π) is equal to θ+2πη, where the

integer η is such that 0 < θ + 2πη < 2π.

Let Θ be uniformly distributed over [0,2π], let h be a constant, and let

Θ = (0 + umod27T).

Let us find the distribution of Θ.

Clearly Θ takes values in the interval [0,2π], so fix с with 0 < с < 2π and seek to

findP{0 < cJ.LeM denote the interval [h,h+2n].Thus9 Θ+h is uniformly distributed

over A. Let В = \Jn[2nn, 2πη + с]. Thus Θ < с if and only if Θ + h e B. Therefore,

P{® <c}= f —(ΙΘ.

JApiB 2π

By sketching the set B, it is easy to see that A f] В is either a single interval of length c,

or the union of two intervals with lengths adding to c. Therefore, Ρ{Θ < с] = ^, so

that Θ is itself uniformly distributed over [0,2π].

Example 1.11 Let Xbc an exponentially distributed random variable with parameter λ.

Let Υ = \X\, which is the integer part of X, and let R = X— \X\, which is the remainder.

We shall describe the distributions of Υ and R.

Clearly Υ is a discrete random variable with possible values 0,1,2,..., so it is

sufficient to find the pmf of Y. For integers к > 0,

pY{k) =P{k<X<k+\] = / Xe'^dx = e~xk( 1 - e~x)

and /?Η&) = 0 for other к.

Turn next to the distribution of R. Clearly R takes values in the interval [0,1]. So let

0 < с < 1 and find Fr(c):

ί oo Λ

FR(c)=P{X-[X}<c} = p\xe\J[k,k + c]\

ι k=o \

oo oo 1 _^c

= £>{* <z< fc+cj = £>-ud - <τλ<) = т^г,

fc=0 fc=0

where we used the fact 1 + a + a2 + · · · = y^ for | a | < 1. Differentiating Fr yields

the pmf:

,,, ί ^ 0<c<l

I 0 otherwise

What happens to the density of R as λ -> 0 or as λ -> oo? By l'Hopital's rule,

1 0<c<1

lim fp(c) = . л

λ^ο 0 otherwise

1.4 Functions of a random variable 15

That is, in the limit as λ -> 0, the density of ^becomes more and more evenly spread

out, and R becomes uniformly distributed over the interval [0,1]. If λ is very large

then the factor 1 — e~k is nearly one , and the density of R is nearly the same as the

exponential density with parameter λ.

An important step in many computer simulations of random systems is to generate a

random variable with a specified CDF, by applying a function to a random variable that

is uniformly distributed on the interval [0,1]. Let F be a function satisfying the three

properties required of a CDF, and let C/be uniformly distributed over the interval [0,1].

The problem is to find a function g so that Fis the CDF of g(U). An appropriate function

g is given by the inverse function of F. Although F may not be strictly increasing, a

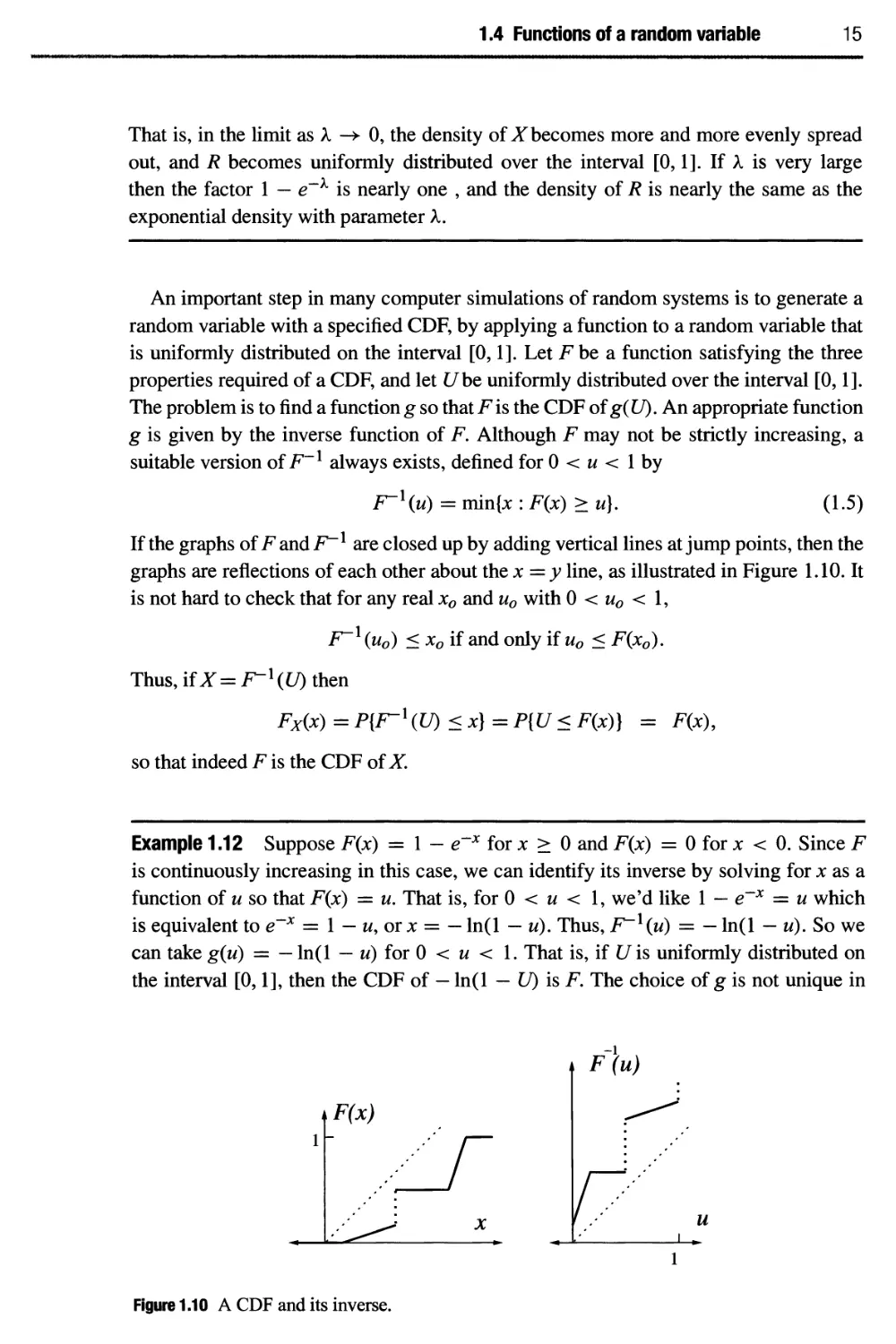

suitable version of F-1 always exists, defined for 0 < и < 1 by

F"1^) = min{x : F(x) > u]. (1.5)

If the graphs of Fandi^1 are closed up by adding vertical lines at jump points, then the

graphs are reflections of each other about the χ = у line, as illustrated in Figure 1.10. It

is not hard to check that for any real x0 and u0 with 0 < u0 < 1,

F~l (u0) < x0 if and only if uQ < F(x0).

Thus, if X=F-\U) then

Fx(x)=P{F-l(U)<x}=P{U<F(x)} = F(x),

so that indeed F is the CDF of X.

Example 1.12 Suppose F(x) = 1 - e~x for χ > 0 and F(x) = 0 for χ < 0. Since F

is continuously increasing in this case, we can identify its inverse by solving for χ as a

function of и so that F(x) = u. That is, for 0 < и < 1, we'd like 1 — e~x = и which

is equivalent to e~x = 1 — w, or χ = — ln(l — u). Thus, F~l(u) = — ln(l — u). So we

can take g(w) = — ln(l — u) for 0 < и < 1. That is, if Uis uniformly distributed on

the interval [0,1], then the CDF of — ln(l — £/) is F. The choice of g is not unique in

F(u)

F(x)

Figure 1.10 A CDF and its inverse.

1

16 A selective review of basic probability

general. For example, 1 — C/has the same distribution as t/, so the CDF of — ln(L0 is

also F. To double check the answer, note that if χ > 0, then

P{- ln(l - U) < x] = P{ln(l -U)> -x]

= P{1 - U> e~x}=P{U< l-e-x}=F(x).

Example 1.13 Suppose F is the CDF for the experiment of rolling a fair die, shown

on the left half of Figure 1.4. One way to generate a random variable with CDF F is to

actually roll a die. To simulate that on a computer, we'd seek a function g so that g(U)

has the same CDF. Using g = F~l and using (1.5) or the graphical method illustrated

in Figure 1.10 to find F-1, we get that for 0 < и < 1, g(w) = / for ^ < и < | for

1 < i < 6. To double check the answer, note that if 1 < / < 6, then

ί ι - 1 ι 1 1

p{g(t/) = ;}=p{—<t/<-J = -

so that g(U) has the correct pmf, and hence the correct CDF.

1.5 Expectation of a random variable

The expectation, alternatively called the mean, of a random variable ΛΓcan be defined in

several different ways. Before giving a general definition, we shall consider a

straightforward case. A random variable X is called simple if there is a finite set {x\,... ,xm]

such that Χ(ω) e {x\,... ,xm] for all ω. The expectation of such a random variable is

defined by

m

E[X\ = Y^XiP{X=Xi}. (1.6)

i=l

The definition (1.6) clearly shows that E[X\ for a simple random variable ^f depends

only on the pmf of X.

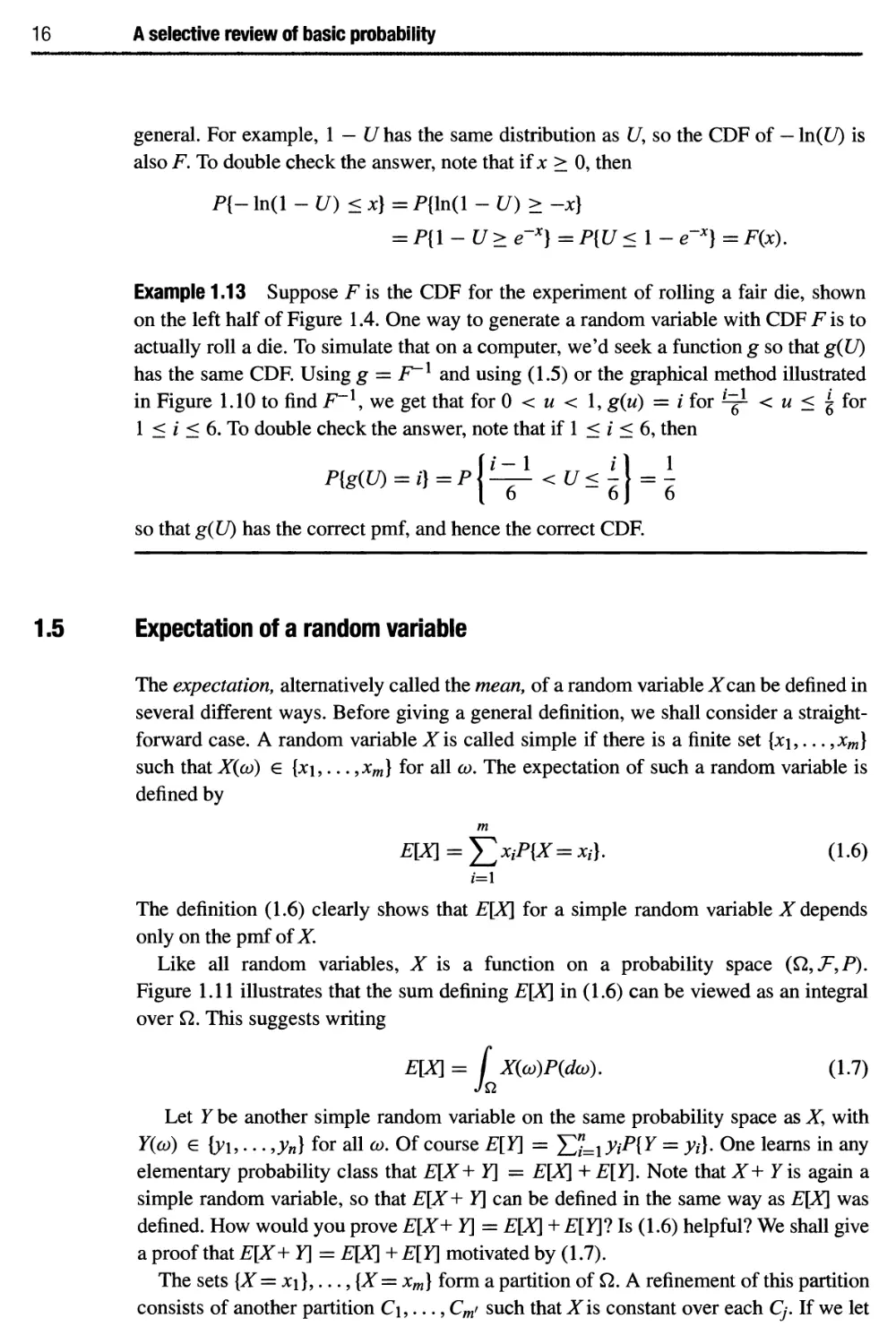

Like all random variables, X is a function on a probability space (Ω,Τ,Ρ).

Figure 1.11 illustrates that the sum defining E[X\ in (1.6) can be viewed as an integral

over Ω. This suggests writing

E[X\ = f Χ(ώ)Ρ(άώ). (1.7)

Let Υ be another simple random variable on the same probability space as X, with

Υ(ω) g {y\,... ,yn] for all ω. Of course E[Y] = Σ"=1 yiP{Y = }>/}· One learns in any

elementary probability class that E[X+ Y] = E[X\ + E[Y]. Note that X+ Υ is again a

simple random variable, so that E[X+ Y] can be defined in the same way as E[X\ was

defined. How would you prove E[X+ Y] = E[X\ + Ε[Υ]Ί Is (1.6) helpful? We shall give

a proof that E[X+ Y] = E[X\ + E[Y] motivated by (1.7).

The sets [X = x\},..., {X = xm] form a partition of Ω. A refinement of this partition

consists of another partition C\,..., Cm> such that Xis constant over each Cj. If we let

1.5 Expectation of a random variable 17

Χ(ω)=Χι

Χ(ω)=χ2

Χ(ω)=χ3

Figure 1.11 A simple random variable with three possible values.

Xj denote the value of ΛΓon Cy, then clearly

E[X\ = Y^xfi(Cj].

J

Now, it is possible to select the partition C\,..., Cm> so that both X and Υ are constant

over each C/. For example, each Cy could have the form {X = χ/} Π {7 = ^} for some

/, &. Let jc|. denote the value of X and j/. denote the value of Υ on Cj. Then У. +>/■ is the

value of X+ Υ on Cy. Therefore,

ДДГ+Ч = Y^ix'j+^PiC^^^jPiC^+Y^y^PiC^^Em+Ein

While the expression (1.7) is rather indicative, it would be overly restrictive to interpret

it as a Riemann integral over Ω. For example, if X is a random variable for the standard

unit-interval probability space denned in Example 1.2, then it is tempting to define E[X\

by Riemann integration (see the Appendix):

E[X]

Jo

Χ(ώ)άω.

(1.8)

However, suppose X is the simple random variable such that X(w) = 1 for rational

values of ω andX(cD) = 0 otherwise. Since the set of rational numbers in Ω is countably

infinite, such X satisfies P{X = 0} = 1. Clearly we'd like E[X\ = 0, but the Riemann

integral (1.8) is not convergent for this choice of X.

The expression (1.7) can be used to define E[X\ in great generality if it is interpreted

as a Lebesgue integral, defined as follows: suppose ΛΓis an arbitrary nonnegative random

variable. Then there exists a sequence of simple random variables Χχ,Χζ,... such that

for every ω e Ω, Χ\(ω) < Xzdco) < ... οηάΧη(ω) -> Χ(ω) as η -> oo. Then E[Xn]

is well defined for each η and is nondecreasing in n, so the limit of E[X„] as η -> oo

1 δ Α selective review of basic probability

exists with values in [0,+oo]. Furthermore it can be shown that the value of the limit

depends only on (Ω,Τ,Ρ) and X, not on the particular choice of the approximating

simple sequence. We thus define E[X\ = limw_>oo£Ki]· Thus, E[X\ is always well

defined in this way, with possible value +00, if X is a nonnegative random variable.

Suppose X is an arbitrary random variable. Define the positive part of X to be the

random variable X+ defined by Χ+(ω) = max{0,^f(u/)} for each value of ω. Similarly

define the negative part of ^f to be the random variable Χ- (ω) = max{0, —Χ(ω)}. Then

Χ(ω) = Χ+(ω) —Χ- (ω) for all ω, andXf. andX_ are both nonnegative random variables.

As long as at least one of E[X+] or E[X-] is finite, define E[X\ = E[X+] - E[X-]. The

expectation E[X\ is undefined if E[X+] = E[X-] = +00. This completes the definition

of E[X\ using (1.7) interpreted as a Lebesgue integral.

We will prove that E[X\ defined by the Lebesgue integral (1.7) depends only on the

CDF of X. It suffices to show this for a nonnegative random variable X. For such a

random variable, and η > 1, define the simple random variable Xn by

v/ ч ί k2~n if k2~n <Χ(ω) <(к+\)2~п, k= 0,1,.. .,22w - 1

ΧΛω) = J 0 else

Then

22n-\

E[Xn\ = Σ k2-n(Fx({k+ \)2-n)-Fx(k2-n)),

so that E[Xn] is determined by the CDF Fx for each n. Furthermore, the ^„s are nonde-

creasing in η and converge to X. Thus, E[X\ = limw_>oo E[Xn], and therefore the limit

E[X] is determined by Fx.

In Section 1.3 we defined the probability distribution Ρ χ of a random variable such

that the canonical random variable Χ(ω) = ω on (R, Β,Ρχ) has the same CDF as X.

Therefore E[X\ = E[X\, or

/00

xPx(dx) (Lebesgue). (1.9)

-00

By definition, the integral (1.9) is the Lebesgue-Stieltjes integral of χ with respect to

Fx, so that

/00

xdFx{x) (Lebesgue-Stieltjes). (1.10)

-00

Expectation has the following properties. Let Χ, Υ be random variables and с be a

constant.

E.l (Linearity) E[cX\ = cE[X\. lfE[X\, E[Y], and E[X\ +E[Y] are well defined, then

E[X+ Y] is well defined and E[X+ Y] = E[X\ + E[Y].

E.2 (Preservation of order) If P{X > Y) = 1 and E[Y] > -00, then E[X\ is well

defined and E[X\ > E[Y].

E.3If^haspdf/*rthen

/00

xfx(x)dx (Lebesgue).

-00

1.5 Expectation of a random variable 19

E.4 If ^fhas pmf/^then

E[X] = ^χρχ(χ) + Σχρχ(χ).

χ>0 χ<0

E.5 (Law of the unconscious statistician (LOTUS)) If g is Borel measurable,

E[g(X)] = [ g(X(aj))P(daj) (Lebesgue)

-Γ

g(x)dFx(x) (Lebesgue-Stieltjes),

and when X is a continuous type random variable

/00

g(x)fxix)dx

-00

E.6 (Integration by parts formula)

E[X]= [

Jo

(Lebesgue).

oo /»0

(l - Fx(x))dx - Fx(x)dx,

J — OQ

(1.11)

which is well denned whenever at least one of the two integrals in (1.11) is finite.

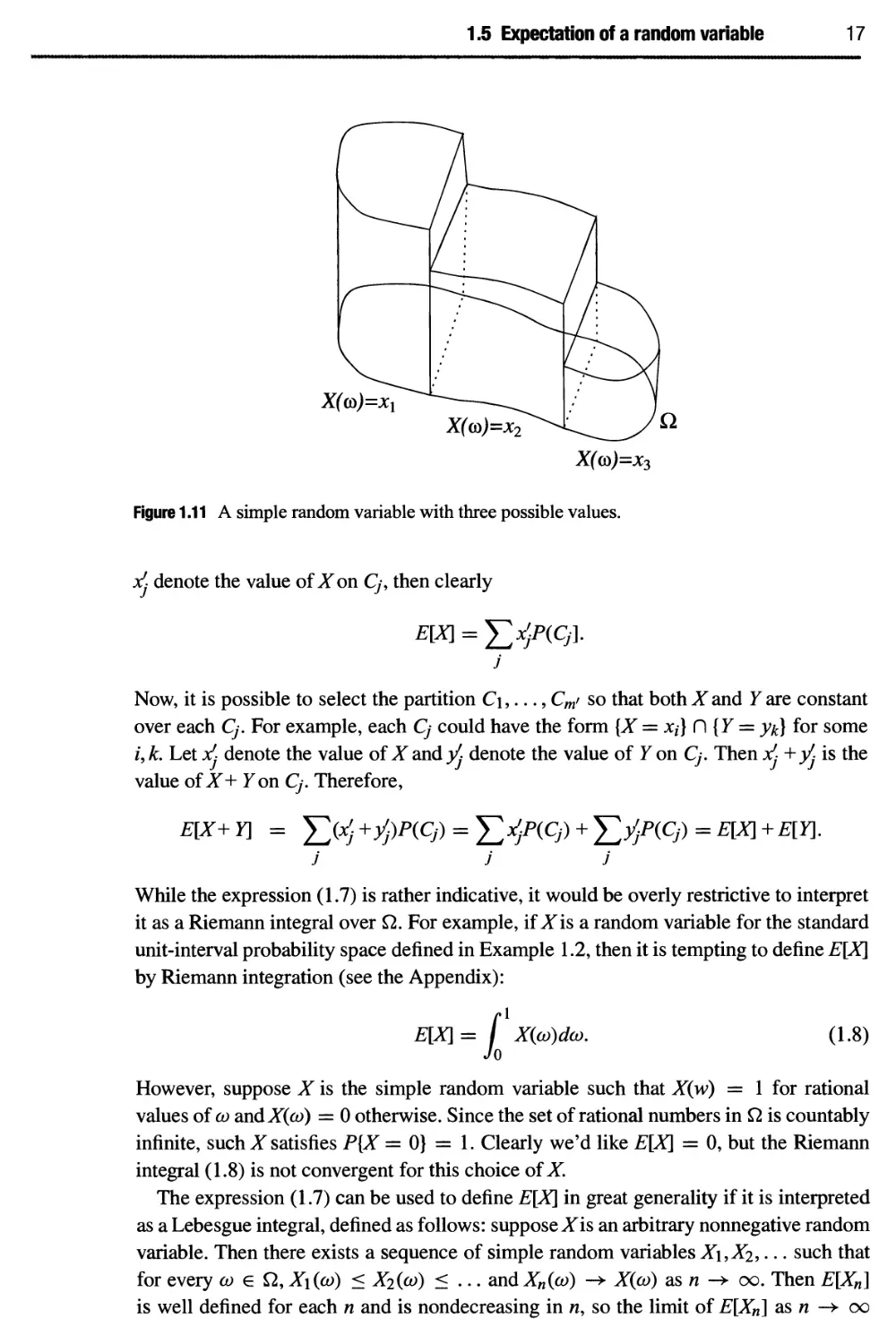

There is a simple graphical interpretation of (1.11). Namely, E[X\ is equal to the

area of the region between the horizontal line {y = 1} and the graph of Ρχ and

contained in {x > 0}, minus the area of the region bounded by the χ axis and the

graph of Fx and contained in {x < 0}, as long as at least one of these regions has

finite area, see Figure 1.12.

Properties E.l and E.2 are true for simple random variables and they carry over to

general random variables in the limit defining the Lebesgue integral (1.7). Properties

E.3 and E.4 follow from the equivalent definition (1.9) and properties of Lebesgue-

Stieltjes integrals. Property E.5 can be proved by approximating g by piecewise constant

functions. Property E.6 can be proved by integration by parts applied to (1.10).

Alternatively, since F^O^hasthe same distribution as X, if Uis uniformly distributed on the

interval [0,1], the law of the unconscious statistician yields that E[X\ = f0 F^l(u)du,

and this integral can also be interpreted as the difference of the areas of the same two

regions.

Figure 1.12 E[X] is the difference of two areas.

20 A selective review of basic probability

The variance of a random variable X with E[X] finite is defined by Var(X) =

E[(X — E[X\)2]. By the linearity of expectation, if E[X\ is finite, the variance of X

satisfies the useful relation: Мж{Х) = E[X2 - 2XE[X] + E[X\2] = E[X2] - E[X\2.

The following two inequalities are simple and fundamental. The Markov inequality

states that if Υ is a nonnegative random variable, then for с > 0,

E[Y]

P{Y>c}<

с

To prove Markov's inequality, note that I{y>c) < \, and take expectations on each side.

The Chebychev inequality states that if X is a random variable with finite mean μ and

variance σ2, then for any d > 0,

a2

P{\X-^>d}<-^.

The Chebychev inequality follows by applying the Markov inequality with Υ = \Χ—μ\2

and с = d2.

The characteristic function Φχ of a random variable ^f is defined by

Фх(и) = E[e>uX]

for real values of u, where у = \/^T. For example, if ^fhas pdf/i then

/oo

exp(jux)fx(x)dx,

-oo

which is 2π times the inverse Fourier transform of /χ.

Two random variables have the same probability distribution if and only if they have

the same characteristic function. If E[J^] exists and is finite for an integer к > 1, then

the derivatives of Φχ up to order к exist and are continuous, and

Ф^(0) =jkE[Xk].

For a nonnegative integer-valued random variable ЛГ it is often more convenient to work

with the ζ transform of the pmf, defined by

oo

4>χ(ζ)=Ε[ζχ] = Σζ*Ρχ(®

k=0

for real or complex ζ with \ ζ \< 1. Two such random variables have the same probability

distribution if and only if their ζ transforms are equal. If E[J^] is finite it can be found

from the derivatives of Ψχ up to the Ath order at ζ = 1,

Ψ^}(1) = E[X(X- 1) · · · (Х- к+ 1)].

1.6 Frequently used distributions

The following is a list of the most basic and frequently used probability distributions.

For each distribution an abbreviation, if any, and valid parameter values are given,

followed by either the CDF, pdf, or pmf, then the mean, variance, a typical example,

and significance of the distribution.

1.6 Frequently used distributions 21

The constants/?, λ, μ,σ, a, b, and a are real-valued, and η and / are integer-valued,

except η can be noninteger-valued in the case of the gamma distribution.

Bernoulli:

Be(p),0<p< 1

pmf: p(i) =

ρ / = 1

Ι-ρ i = 0

0 else

z-transform: 1 — p+pz

mean:/? variance: p{\ — p)

Example: Number of heads appearing in one flip of a coin. The coin is called fair if

p=\ and biased otherwise.

Binomial:

Bi(n,p),n > 1,0 <p < 1

•wo-(^

pmf:/?(/) = I . \p\\ -p)n~l 0<i<n

z-transform: (1 —p+pz)n

mean: np variance: np(l — p)

Example: Number of heads appearing in η independent flips of a coin.

Poisson:

Х*е~к

pmf: p(i) = —-— / > 0

/!

z-transform: exp(X(z — 1))

mean: λ variance: λ

Example: Number of phone calls placed during a ten second interval in a large city.

Significance: The Poisson pmf is the limit of the binomial pmf as η -> +oo and/? -> 0

in such a way that np -> λ.

Geometric:

Geo(p),0 <p< 1

pmf:/?(/) = (l -ρΫ V ι

pz

z-transform:

1 —z+pz

1 \-p

mean: - variance: —5—

ρ ρ2

22 A selective review of basic probability

Example: Number of independent flips of a coin until heads first appears.

Significant property: If ^fhas the geometric distribution, P{X > /} = (1 — p)1 for integers

i > 1. So Xhas the memoryless property:

P(X> i+j\X> i)=P{X>j}foviJ>l.

Any positive integer-valued random variable with this property has a geometric

distribution.

Gaussian

(also called Normal): Ν(μ, σ2), μ e R, σ > 0

pdf(if^>0):/W = -^=eXp(-^)

Prt(ifol = 0y.P(X) = {l *=£

t?c2s

characteristic function: exp I jup

( ιι2σ2\

yuix - —)

mean: μ variance: σ

Example: Instantaneous voltage difference (due to thermal noise) measured across a

resistor held at a fixed temperature.

Q(c) = 1 - Ф(с) = Г JLe-T«fc.

J с ν2π

Notation: The character Φ is often used to denote the CDF of an N(0,1) random

variable,3 and Q is often used for the complementary CDF:

r-οο л _£

Jc \fbl

Significant property (Central limit theorem): If Χι,Χζ, · · · are independent and

identically distributed with mean μ and nonzero variance σ2, then for any constant c,

lim ΡI — ==? < с \ = Ф(с).

Exponential:

Εχρ(λ), λ > О

pdf: flx) = \е~^ χ > О

characteristic function:

λ-ju

1 1

mean: — variance:

—τα A

3 As noted earlier, Φ is also used to denote characteristic functions. The meaning should be clear from the

context.

1.6 Frequently used distributions 23

Example: Time elapsed between noon sharp and the first telephone call placed in a

large city, on a given day.

Significance: If Xhas the Εχρ(λ) distribution, P{X> t] = e~Xt for t > 0. So X has the

memoryless property:

P{X> s + t | X> s] = P{X> ή s,t> 0.

Any nonnegative random variable with this property is exponentially distributed.

Uniform:

U(a, b) — oo < a < b < oo

{τΛ- α < χ <

V "else"

characteristic function:

b

ejub _ ejua

ju(b - a)

a + b . (b-a)2

mean: variance: ———

2 12

Example: The phase difference between two independent oscillators operating at the

same frequency may be modeled as uniformly distributed over [0,2π].

Significance: Uniform is uniform.

Gamma(n,a):

n, a > 0 (n real valued)

anxn-xe~ax

/•OO

where Г (л) = / sn~xe~sds

Jo

characteristic function

\a -juj

n . n

mean: — variance: -^

a az

Significance: If η is a positive integer then Γ(η) = (n - 1)! and a Gamma(n, a) random

variable has the same distribution as the sum of n independent, Exp(a) distributed

random variables.

24 A selective review of basic probability

Rayleigh(a2):

r2

CDF:l-exp(-^)

mean: crJ— variance: σ (2 J

Example: Instantaneous value of the envelope of a mean zero, narrow band noise signal.

Significance: If Xand Tare independent, N(0, σ2) random variables, (X2 + У2)з has the

Rayleigh(a2) distribution. Also notable is the simple form of the CDF.

1.7 Failure rate functions

Eventually a system or a component of a particular system will fail. Let Г be a random

variable that denotes the lifetime of this item. Suppose Г is a positive random variable

with pdf/7\ ТЫ failure rate function, h = (h(t) : t > 0), of Г (and of the item itself) is

defined by the following limit:

δ P(t<T<t + e\T>t)

h(t) = lim .

e-»0 6

That is, given the item is still working after t time units, the probability the item fails

within the next e time units is h(f)e + o(e).

The failure rate function is determined by the distribution of Γ as follows:

P{t < T<t + e]

h(t) = lim

e-»0 P{T>t}€

FT(t + €)-FT(i)

= lim

(\-FT(t))e

(1.12)

e^O (1 - FT(t))€

hit)

1-FjitY

because the pdf fy is the derivative of the CDF Fj.

Conversely, a nonnegative function h = (h(t) : t > 0) with /0°° h(t)dt = oo

determines a probability distribution with failure rate function h as follows. The CDF is

given by

F(i) = \-e-&h{s)ds. (1.13)

It is easy to check that F given by (1.13) has failure rate function h. To derive (1.13),

and hence show it gives the unique distribution with failure rate function h, start with

F7(l -F) = h. Equivalent^, (ln(l -F))f = -h or ln(l -F) = ln(l -F(0)) -Ц h(s)ds,

which is equivalent to (1.13).

1.8 Jointly distributed random variables 25

0

t>0

else

Example 1.14 (a) Find the failure rate function for an exponentially distributed random

variable with parameter λ. (b) Find the distribution with the linear failure rate function

h{f) = jp for t > 0. (c) Find the failure rate function of Τ = тт{Гь T2], where T\

and T2 are independent random variables such that T\ has failure rate function h\ and

T2 has failure rate function h2.

Solution

(a) If Τ has the exponential distribution with parameter λ, then for t > 0, fy(f) =

Xe~kt and 1 - FT(t) = e~kt, so by (1.12), hit) = λ for all t > 0. That is, the

exponential distribution with parameter λ has constant failure rate λ. The constant

failure rate property is connected with the memoryless property of the exponential

distribution; the memoryless property implies that P(t < Τ< T+e\T> t) = P{T > e},

which in view of the definition of h shows that h is constant.

(b) If h(t) = ji for t > 0, then by (1.13), FT(t) = l-e 2^. The corresponding pdf is

given by

fnt) =

This is the pdf of the Rayleigh distribution with parameter σ2.

(с) By the independence and (1.12) applied to T\ and T2,

P{T>t}=P{Ti >iandT2 > ή = Ρ{Τλ > t}P{T2 > ή

= efi-hi(s)dseft>-h2Ws = e-tih(s)ds^

where h = h\ + h2. Therefore, the failure rate function for the minimum of two

independent random variables is the sum of their failure rate functions. This makes intuitive

sense; if there is a system that fails when either of one of two components fails, then the

rate of system failure is the sum of the rates of component failure.

1.8 Jointly distributed random variables

Let X\,X2, · · · ,Xm be random variables on a single probability space (Ω,F,P). The

joint cumulative distribution function (CDF) is the function on Rm defined by

FxiXl-Xmfa'· · · >χ™) = P{X\ < X\,Xl <X2,··· Лт < Xm).

The CDF determines the probabilities of all events concerning X\,... ,Xm. For example,

if R is the rectangular region (a, b] χ (α\ bf] in the plane, then

P{(Xi,X2) eR}= FXlX2(b,b') - FXxXl(a,bf) - FXxXl(b,d) + FXxXl(a,d).

26 A selective review of basic probability

Γ

fxi(V\) = I

J-c

We write +00 as an argument of Fx in place of Xf to denote the limit as Xi -> +00. By

the countable additivity axiom of probability,

FXxx2 (x\, +00) = lim FXlχ2 Cxi, x2) = FXl (χλ).

Jt2->00

The random variables are jointly continuous if there exists a function/*^·-J^, called

the joint probability density function (pdf), such that

/X\ rxm

"· I fxiX2-xm(M\> · · · > um)dum •••du\.

-00 J—00

Note that if X\ and X2 are jointly continuous, then

FXx (x\) = FXxx2 (χι, +00)

= / / fxlx2(ui,u2)du2\dui,

so that X\ has pdf given by

r»oo

fxxx2(u\,U2)du2.

-00

The pdfs^ andfx2 are called the marginal pdfs for the joint pdf/j^ д2.

If Χι,Χζ, · · · ,Xm are each discrete random variables, then they have a joint pmf

PXiXr-Xm defined by

PXlX2-Xm(ul9U2> •••9Um)= P{X\ = Щ,Х2 = U2, . · . ,Xm = Um].

The sum of the probability masses is one, and for any subset A of Rm

P{(X\,...,Xm) eA}= Σ Px(u\,U2,---,um).

{и\,...,ит)еА

The joint pmf of subsets of X\,.. .Xm can be obtained by summing out the other

coordinates of the joint pmf. For example,

РхЛт) = ^2рххх2(щ,и2).

u2

The joint characteristic function of X\,... ,Xm is the function on Rm defined by

Фххх2...хт(т, и2, ...,ит)= E[^Xw+x^+"+x-u^l

Random variables X\,... ,Xm are defined to be independent if for any Borel subsets

A\,... ,Am of R, the events {^1 e A\],..., {Xm e Am] are independent. The random

variables are independent if and only if the joint CDF factors.

Fxxx2..xm(x\,. ..,xm)= FXx(x\) · · ·FXm(xm).

If the random variables are jointly continuous, independence is equivalent to the

condition that the joint pdf factors. If the random variables are discrete, independence is

equivalent to the condition that the joint pmf factors. Similarly, the random variables

are independent if and only if the joint characteristic function factors.

1.10 Correlation and covariance 27

1.9 Conditional densities

Suppose that X and Υ have a joint pdf /χγ. Recall that the pdf fy, the second marginal

density of/χγ, is given by

/00

fxY(x,y)dx.

-00

The conditional pdf of Xgiven 7, denoted by/χ\γ(χ | У), is undefined if fy(y) = 0. It is

defined for у such that fy(y) > 0 by

fx\r(x\y)= r/. -oo<x<+oo.

If у is fixed and fy(y) > 0, then as a function of χ,/χ\γ (χ \ y) is itself a pdf.

The expectation of the conditional pdf is called the conditional expectation (or

conditional mean) of X given Υ = у, written as

/oo

xfx\r(x\y)dx.

-OO

If the deterministic function E[X \ Υ = у] is applied to the random variable Y, the result

is a random variable denoted by E[X \ Y].

Note that conditional pdf and conditional expectation were so far defined in case

X and Υ have a joint pdf. If instead, X and Υ are both discrete random variables, the

conditional pmf ρχ\γ and the conditional expectation E[X \ Υ = у] can be defined in a

similar way. More general notions of conditional expectation are considered later.

1.10 Correlation and covariance

Let X and Υ be random variables on the same probability space with finite second

moments. Three important related quantities are:

the correlation: E[XY],

the covariance: Cov(^, Y) = E[(X- E[X\)(Y- E[Y])l

Cov(X,Y)

the correlation coefficient: ρχγ =

VVarCY)Var(y)

A fundamental inequality is Schwarz's inequality:

| E[XY\ | < у/ЕЦРЩУ2]· (114)

Furthermore, if E[Y2] φ 0, equality holds if and only if P(X = cY) = 1 for some

constant c. Schwarz's inequality (1.14) is equivalent to the L2 triangle inequality for

random variables:

E[(X+Y)2]? <E[X2V- +£[72]5. (1.15)

28 A selective review of basic probability

Schwarz's inequality can be proved as follows. If P{Y = 0} = 1 the inequality is

trivial, so suppose E[Y2] > 0. By the inequality (a + b)2 < 2a2 + 2b2 it follows that

E[(X- XT)2] < oo for any constant λ. Take λ = Ε[ΧΥ]/Ε[Υ2] and note that

0 < E[(X- λΥ)2] = E[X2] - 2λΕ[ΧΥ] + λ2Ε[Υ2]

= E{X\ Ε[ΧΥ]2

E[P] '

which is clearly equivalent to the Schwarz inequality. lfP(X = cY) = 1 for some с then

equality holds in (1.14), and conversely, if equality holds in (1.14) then P(X = cY) = 1

for с = λ.

Application of Schwarz's inequality to^f — E[X] and Υ — E[Y] in place of X and Υ

yields that

| CovtY, Y) | < yVar(^)Var(y).

Furthermore, if Var( Υ) φ 0 then equality holds if and only if X = aY+ b for some

constants a and b. Consequently, if Var(X) and Var(Y) are not zero, so that the correlation

coefficient ρχγ is well defined, then | ρχγ \< 1 with equality if and only if X = aY+ b

for some constants a, b.

The following alternative expressions for Cov(X, Y) are often useful in calculations:

Co\(X,Y) = E[X(Y-E[Y])]=E[(X-E[X])Y]=E[XY]-E[X]E[Y].

In particular, if either X or Υ has mean zero then E[XY] = Cov(X, Y).

Random variables X and Υ are called orthogonal if E[XY] = 0 and are called

uncorrected if Cov(X, Y) = 0. If X and Υ are independent then they are uncorrected. The

converse is far from true. Independence requires a large number of equations to be true,

namely Fxy(x,y) = F^(x)Fy(y) for every real value of χ and>>. The condition of being

uncorrected involves only a single equation to hold.

Covariance generalizes variance, in that Var(^Q = Co\(X,X). Covariance is linear in

each of its two arguments:

CovtT+ Y,U+V) = CovtY, U) + CovtT, V) + Cov(7, U) + Cov(7, V)

Co\(aX+ b,cY+d)= acCo\(X, Y).

for constants β, b, c, d. For example, consider the sum Sm = X\ + ... + Xmi such that

X\,...,Xm зге (pairwise) uncorrected with E[X{\ = μ and Var(^Q) = σ2 for 1 < i < m.

Then E[Sm] = ιημ and

Var(5m) = Cov(5m

= J2Var(Xi)+J2Cov(Xi,Xf)

2

= та .

Therefore, Sm~m^ has mean zero and variance one.

1.11 Transformation of random vectors 29

1.11

Transformation of random vectors

A random vector Xoi dimension m has the form

X =

ί Ъ \

U )

where X\,... ,Xm are random variables. The joint distribution of X\,... ,Xm can be

considered to be the distribution of the vector X. For example, if X\,... ,Xm are jointly

continuous, the joint pdf fxxxv.xm(x\, · · · ,Xm) can as well be written asfx(x), and be

thought of as the pdf of the random vector X.

Let X be a continuous type random vector on Rm. Let g be a one-to-one mapping

from Rm to Rm. Think of g as mapping x-space (here χ is lower case, representing a

coordinate value) into^-space. As χ varies over Rm, у varies over the range of g. All the

while,у = g(x) or, equivalently, χ = g~l (y).

Suppose that the Jacobian matrix of derivatives ^(x) is continuous in χ and non-

singular for all x. By the inverse function theorem of vector calculus, it follows that

the Jacobian matrix of the inverse mapping (from у to x) exists and satisfies Щ (y) =

(1* W ) · ^se I ^ I for a square matrix К to denote | det(K) |.

Proposition 1.5 Under the above assumptions, Υ is a continuous type random vector

and for у in the range ofg:

ш =

fxix)

=fxix)

дх.

Ту

(У)

Example 1.15 Let U, V have the joint pdf:

fuvi

(u,v) = l

u + v

0

0 < u, ν < 1

else

and let^T= U2 and Y= (7(1 + V). Let us find the pdf^. The vector (U, V) in the и - ν

plane is transformed into the vector (X, Y) in the χ — у plane under a mapping g that

maps u, ν to χ = и2 ana у = u{\ + ν). The image in the χ —у plane of the square [0, l]2

in the и — ν plane is the set A given by

A = {(x,y) : 0 < χ < 1, and yfx < у < lyfx).

The mapping from the square is one to one, for if (x,y) e A then (w, v) can be recovered

by и = y/x and ν = -y= — 1, see Figure 1.13. The Jacobian determinant is

дх

£

ди

дх

ё

dv

=

2и 0

1 + ν и

= 2и2.

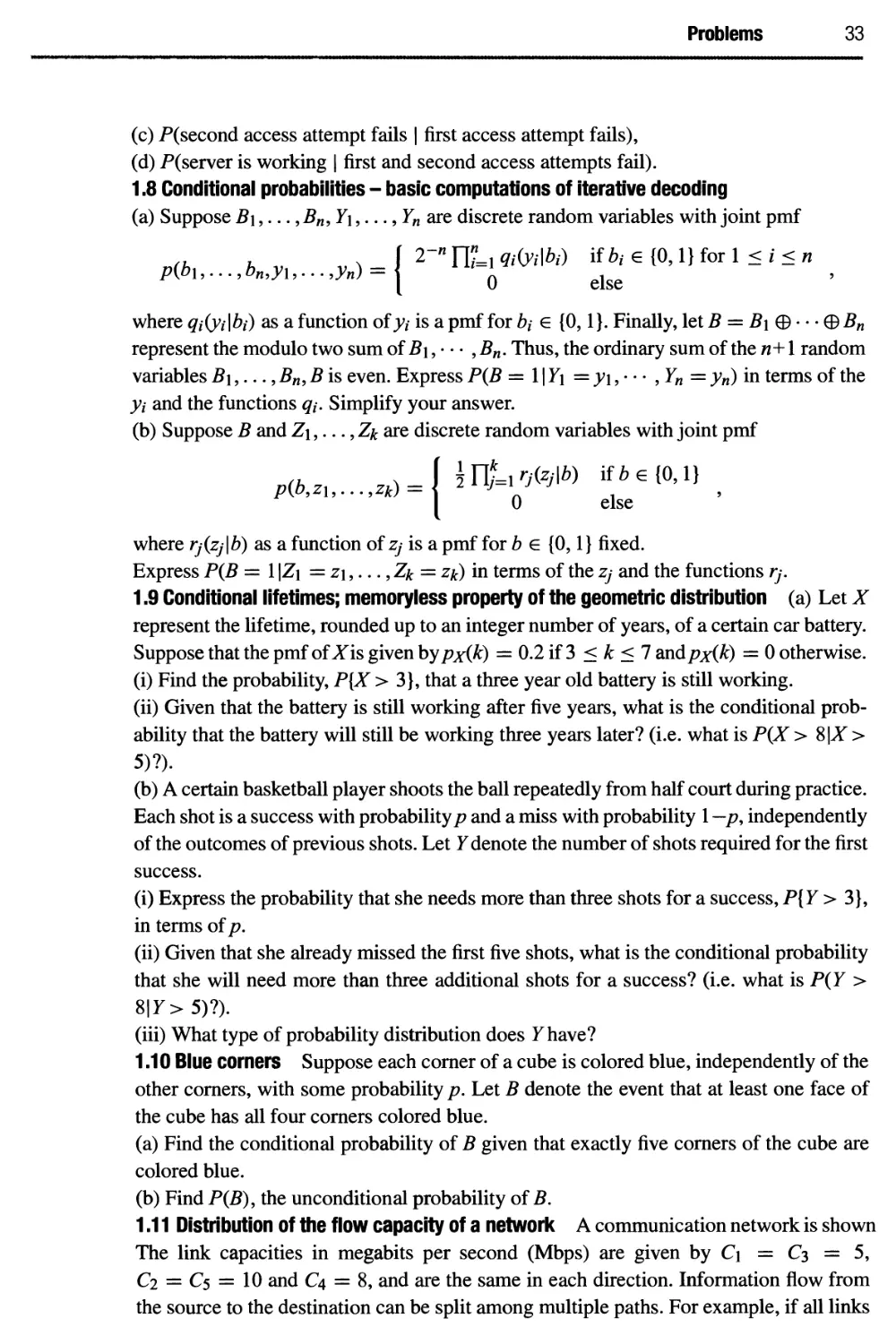

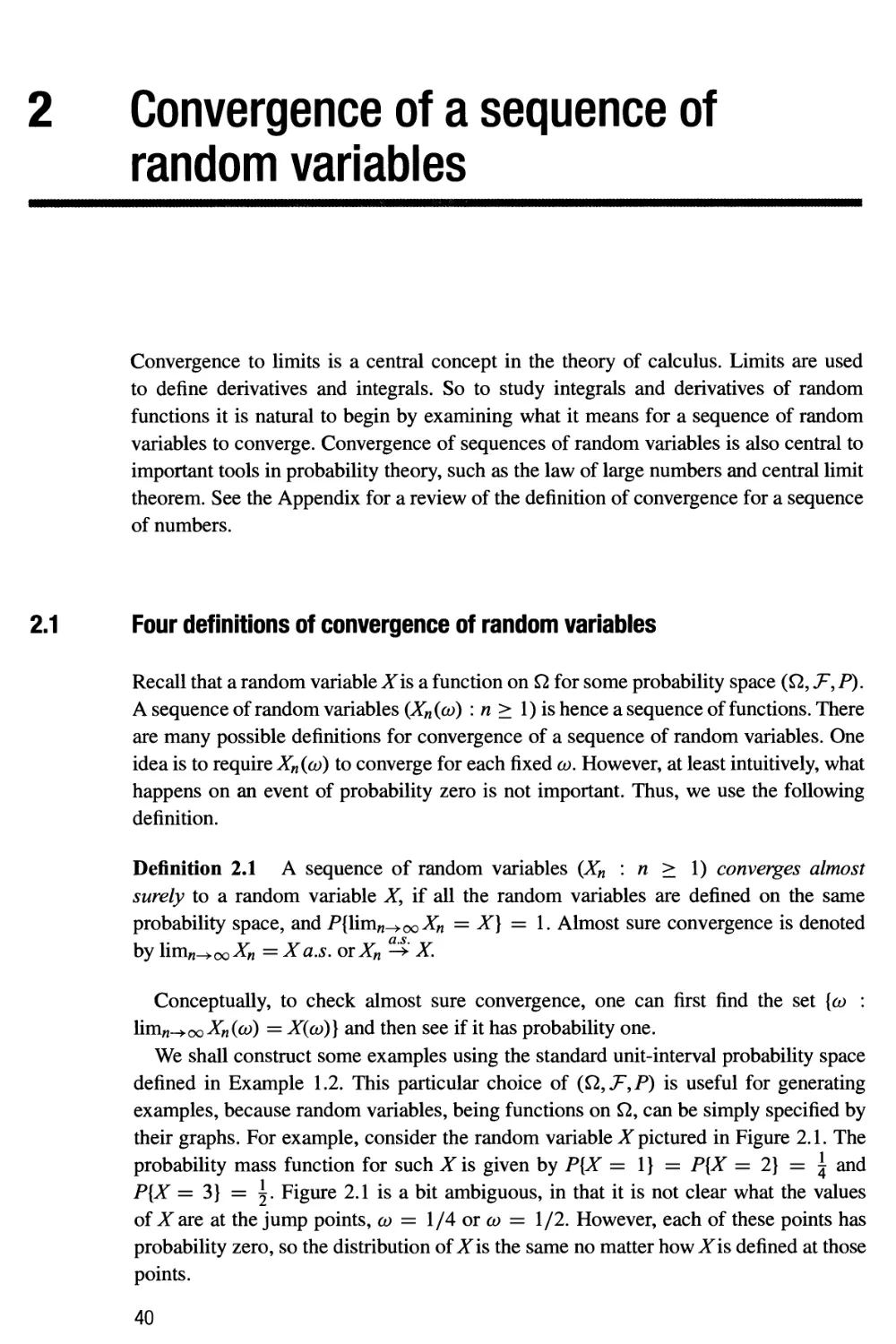

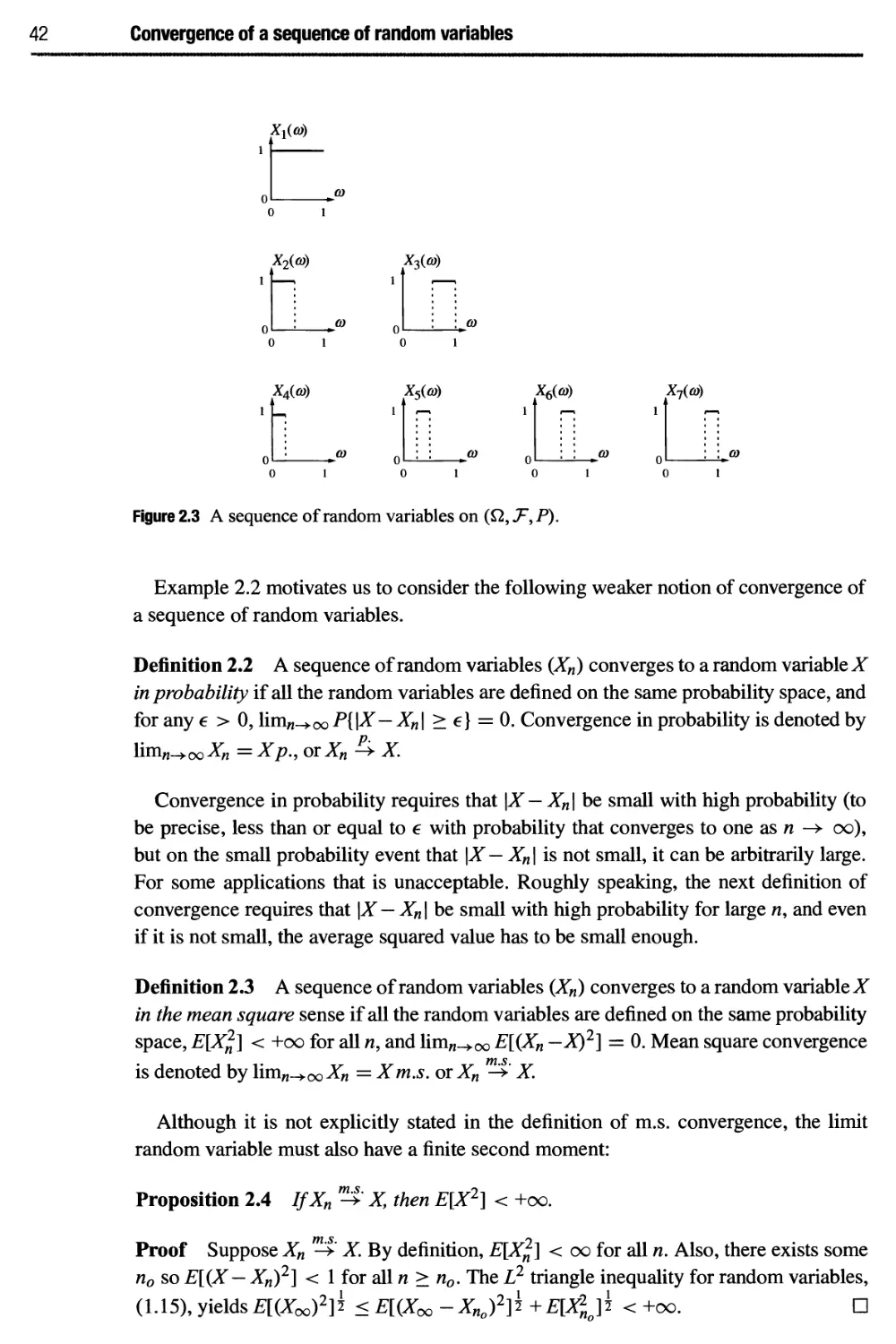

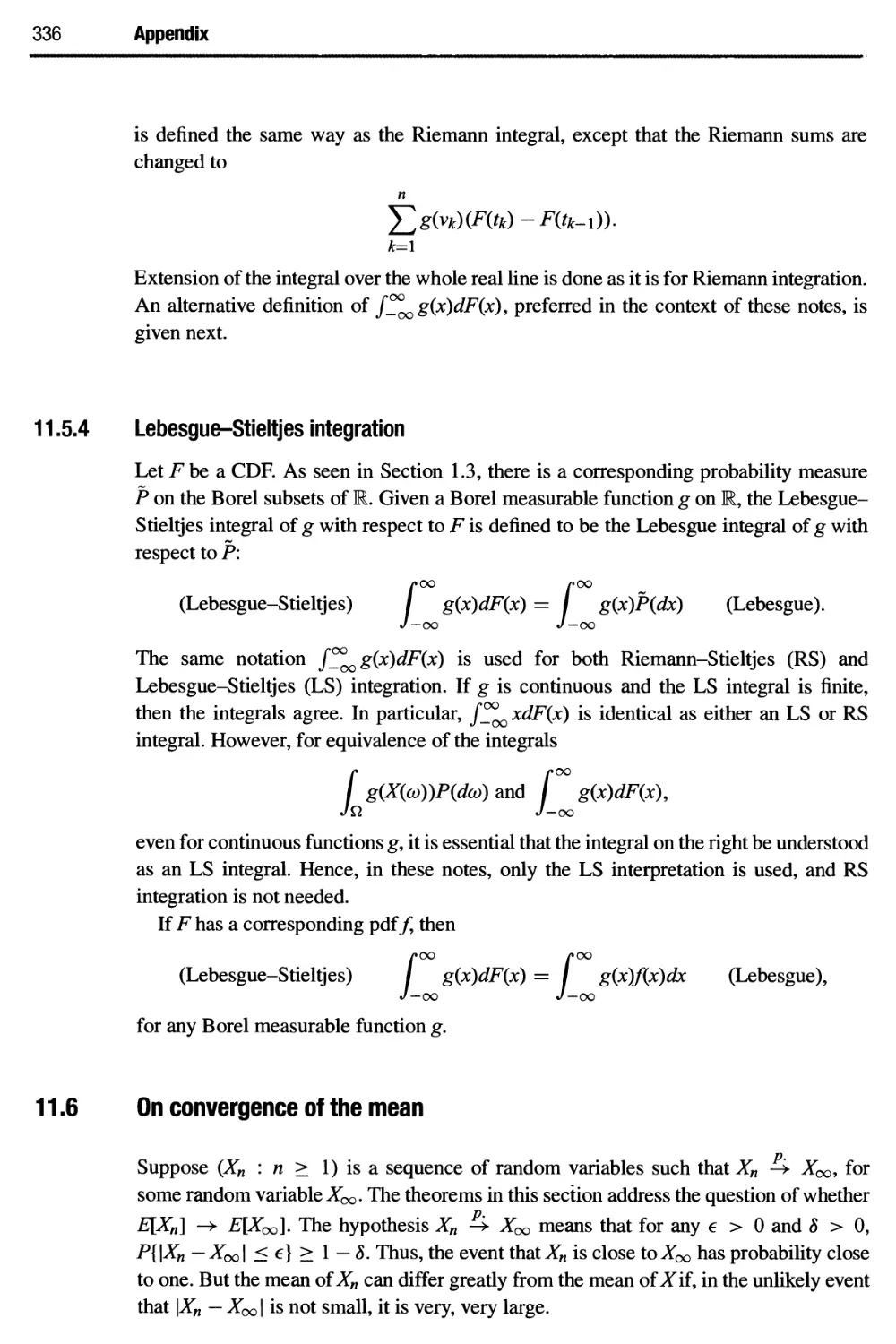

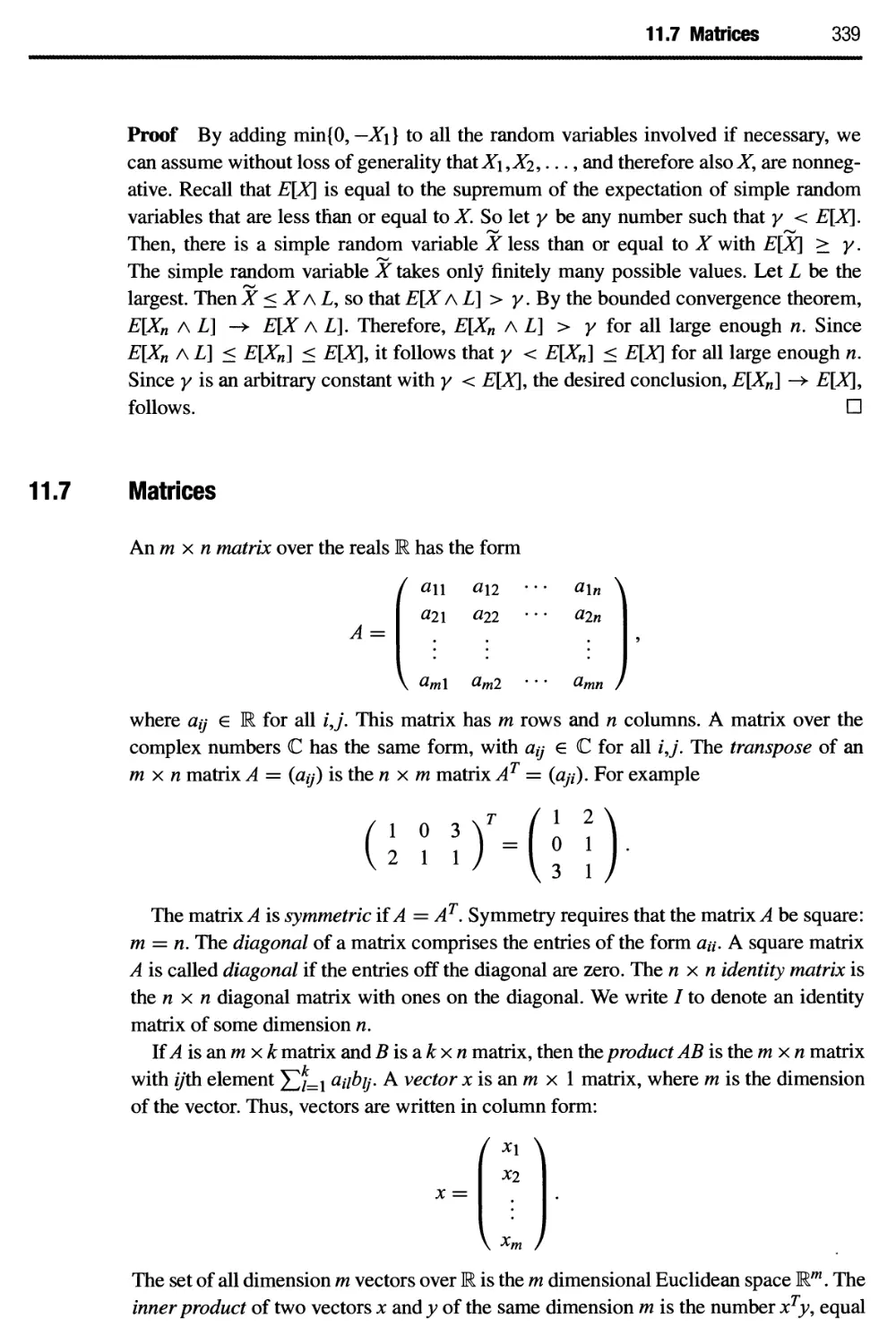

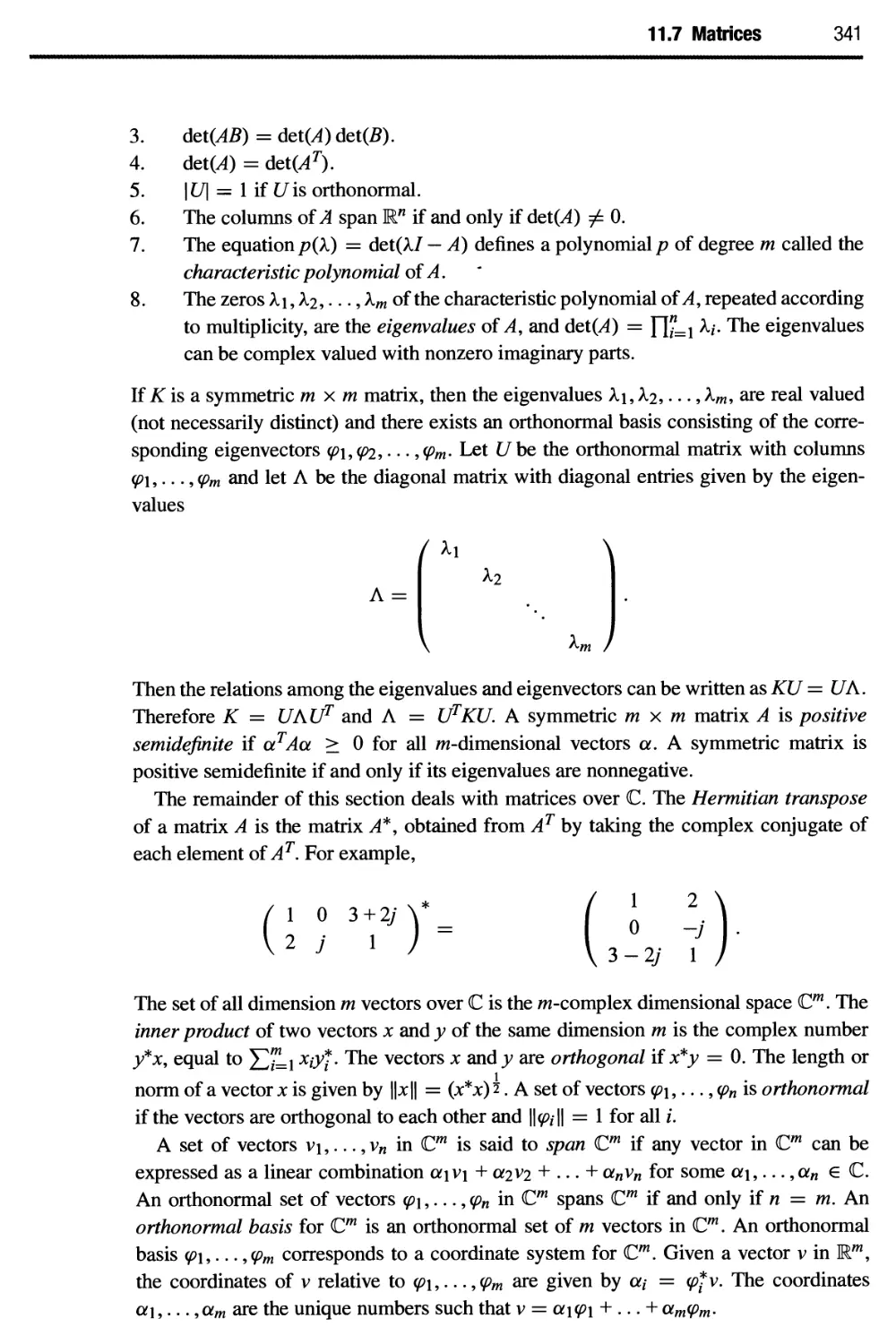

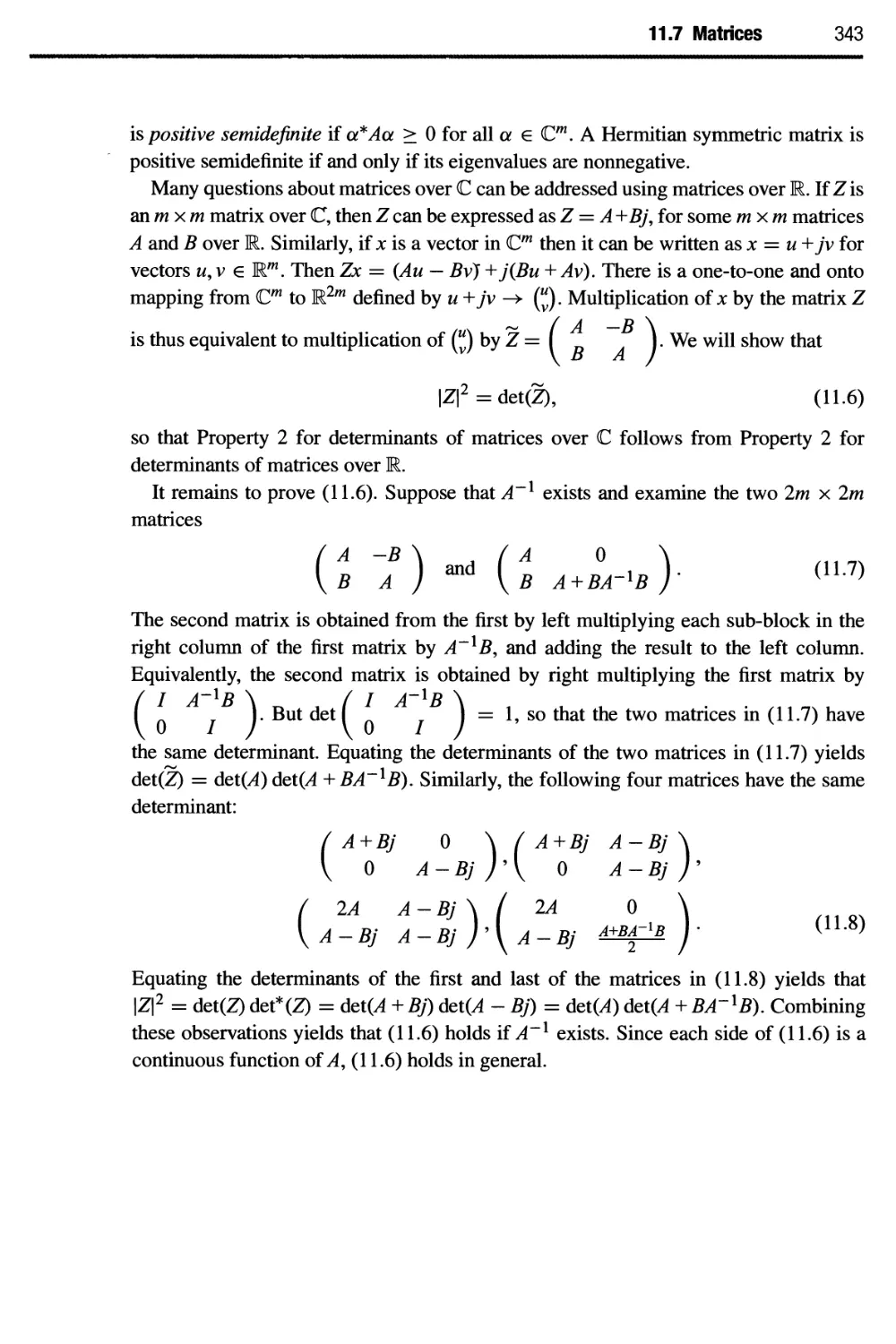

30 A selective review of basic probability