Текст

Behaviours for Concurrency

Torben Amtoft

Flemming Nielson

Hanne Riis Nielson

Imperial College Press

Behaviours for Concurrency

p ill [(feet Systems

Behaviours for Concurrency

Torben Amtoft

Flemming Nielson

Hanne Riis Nielson

University of Aarhus, Denmark

jQ

Imperial College Press

Published by

Imperial College Press

203 Electrical Engineering Building

Imperial College

London SW7 2BT

Distributed by

World Scientific Publishing Co. Pte. Ltd.

P O Box 128, Farrer Road, Singapore 912805

USA office: Suite IB, 1060 Main Street, River Edge, NJ 07661

UK office: 57 Shelton Street, Covent Garden, London WC2H 9HE

British Library Cataloguing-in-Publication Data

A catalogue record for this book is available from the British Library.

TYPE AND EFFECT SYSTEMS: BEHAVIOURS FOR CONCURRENCY

Copyright © 1999 by Imperial College Press

All rights reserved. This book, or parts thereof, may not be reproduced in any form or by any means,

electronic or mechanical, including photocopying, recording or any information storage and retrieval

system now known or to be invented, without written permission from the Publisher.

For photocopying of material in this volume, please pay a copying fee through the Copyright

Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, USA. In this case permission to

photocopy is not required from the publisher.

ISBN 1-86094-154-0

This book is printed on acid-free paper.

Printed in Singapore by Uto-Print

Contents

Preface xi

1 Introduction 1

1.1 Basic Concepts 1

1.2 Polymorphism 4

1.2.1 An Inference System ' 5

1.2.2 An Inference Algorithm 7

1.2.3 Implicit Treatments of Side Effects 10

1.3 An Inference System for Effects 13

1.3.1 Effects Controlling Polymorphism 15

1.3.2 Regions 15

1.3.3 Behaviours 18

1.3.4 Subeffecting 20

1.4 An Inference Algorithm for Effects 22

1.4.1 Effect Constraints 22

1.4.2 Solving Effect Constraints 24

1.4.3 Downwards Closure for Polymorphism 25

1.5 Shape Conformant Subtyping with Effects 28

1.5.1 Inference Systems for Atomic Subtyping 29

1.5.2 Algorithmic Techniques for Atomic Subtyping 32

1.5.3 Integrating Shape Conformant Subtyping 34

1.6 Overview of the Development 36

CONTENTS

1.6.1 The Main Ideas 36

1.6.2 A Prototype Implementation 38

1.6.3 Benchmarks 39

1.6.4 A Reader's Guide to the Book 40

2 The Type and Effect System 43

2.1 The Source Language 43

2.1.1 Concurrent Programming 45

2.2 Basic Notions 48

2.2.1 Regions 48

2.2.2 Behaviours 49

2.2.3 Types 49

2.2.4 Constraints 50

2.2.5 Miscellaneous 51

2.3 Shape Conformant Subtyping 52

2.3.1 The Ordering on Behaviours 53

2.3.2 The Ordering on Types 53

2.4 The Type Inference System 54

2.5 The Rule for Generalisation 59

2.5.1 Backwards Closure 60

2.5.2 The Arrow Relation 61

2.5.3 Well-formed Type Schemes 63

2.6 Pragmatics 64

2.7 Basic Results 67

2.7.1 Trace Inclusion 70

2.7.2 Normalisation of Inference Trees 71

2.8 Conservative Extension of Hindley-Milner 72

3 The Semantics 77

3.1 The Sequential Semantics 78

CONTENTS

3.2 The Concurrent Semantics 81

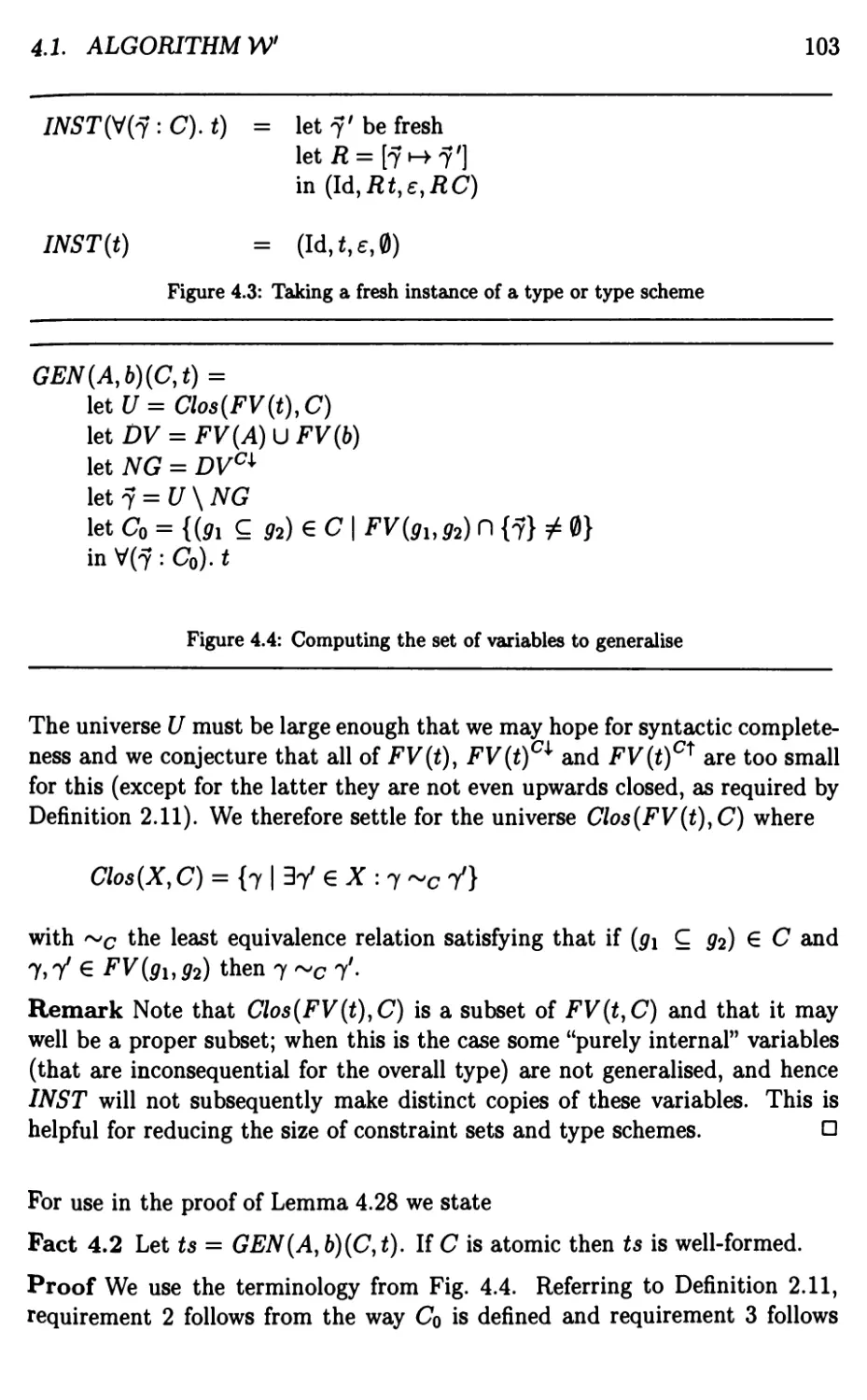

3.3 Reasoning about Inference Trees 82

3.4 Sequential Soundness 86

3.5 Erroneous Programs cannot be Typed 90

3.6 Concurrent Soundness 92

4 The Inference Algorithm 99

4.1 Algorithm W 101

4.2 Algorithm T 104

4.2.1 Termination and Soundness 109

4.3 Algorithm 11 110

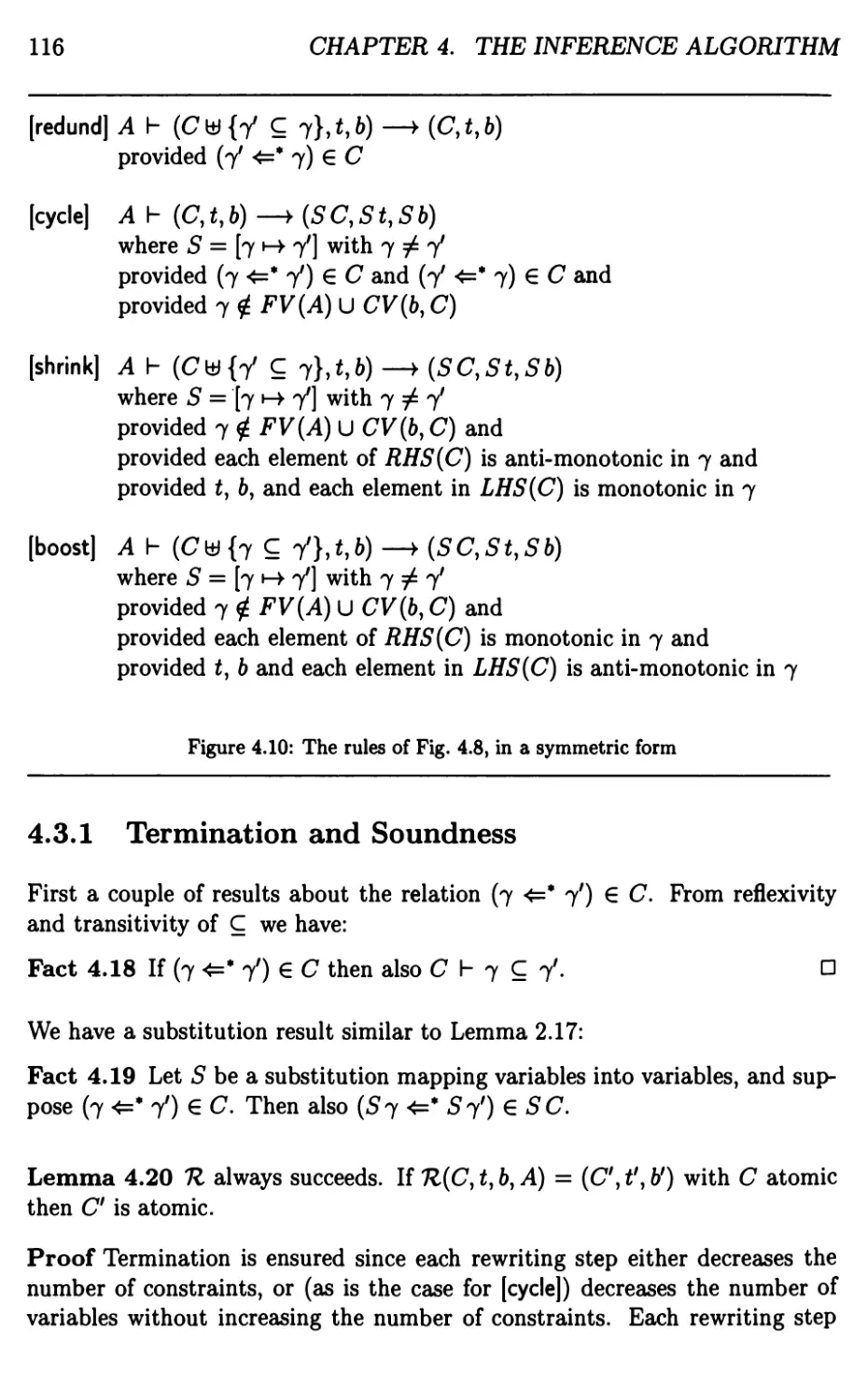

4.3.1 Termination and Soundness 116

4.3.2 Confluence Properties 117

4.4 Syntactic Soundness of Algorithm W 119

4.4.1 The Relation to Hindley-Milner Typing 120

5 The Inference Algorithm: Completeness 121

5.1 The Completeness Result for Programs 121

5.1.1 Applicability of the Completeness Result 123

5.1.2 The Relation to Hindley-Milner Typing 123

5.2 Completeness of Algorithm T 124

5.3 Completeness of Algorithm K 125

5.4 Lazy Instance for Type Schemes 126

5.5 The Completeness Result for Expressions 128

5.5.1 The Proof: Lifting from W to W 129

5.5.2 The Proof: The Case for Type Schemes 130

5.5.3 The Proof: The Case for Types 134

6 Post-processing the Analysis 141

6.1 Solving Region Constraints 142

CONTENTS

6.2 A Catalogue of Behaviour Transformations 143

6.2.1 Simplification 144

6.2.2 Hiding 145

6.2.3 Unfolding 145

6.2.4 Collapsing 146

6.3 The Notion of Bisimulation 147

6.4 Correctness of the Transformations 151

6.4.1 Simplification 152

6.4.2 Hiding 153

6.4.3 Unfolding 154

6.4.4 Collapsing 156

6.5 A Subject Reduction Property 157

6.6 Semantic Soundness of a Post-processing W 164

7 A Case Study 165

7.1 The Production Cell 166

7.1.1 Safety Conditions for the Table 167

7.1.2 An Implementation of the Table 167

7.1.3 The Behaviour of the Table 169

7.2 Validating the Safety Conditions 170

7.2.1 Vertical Bounds for the Table 171

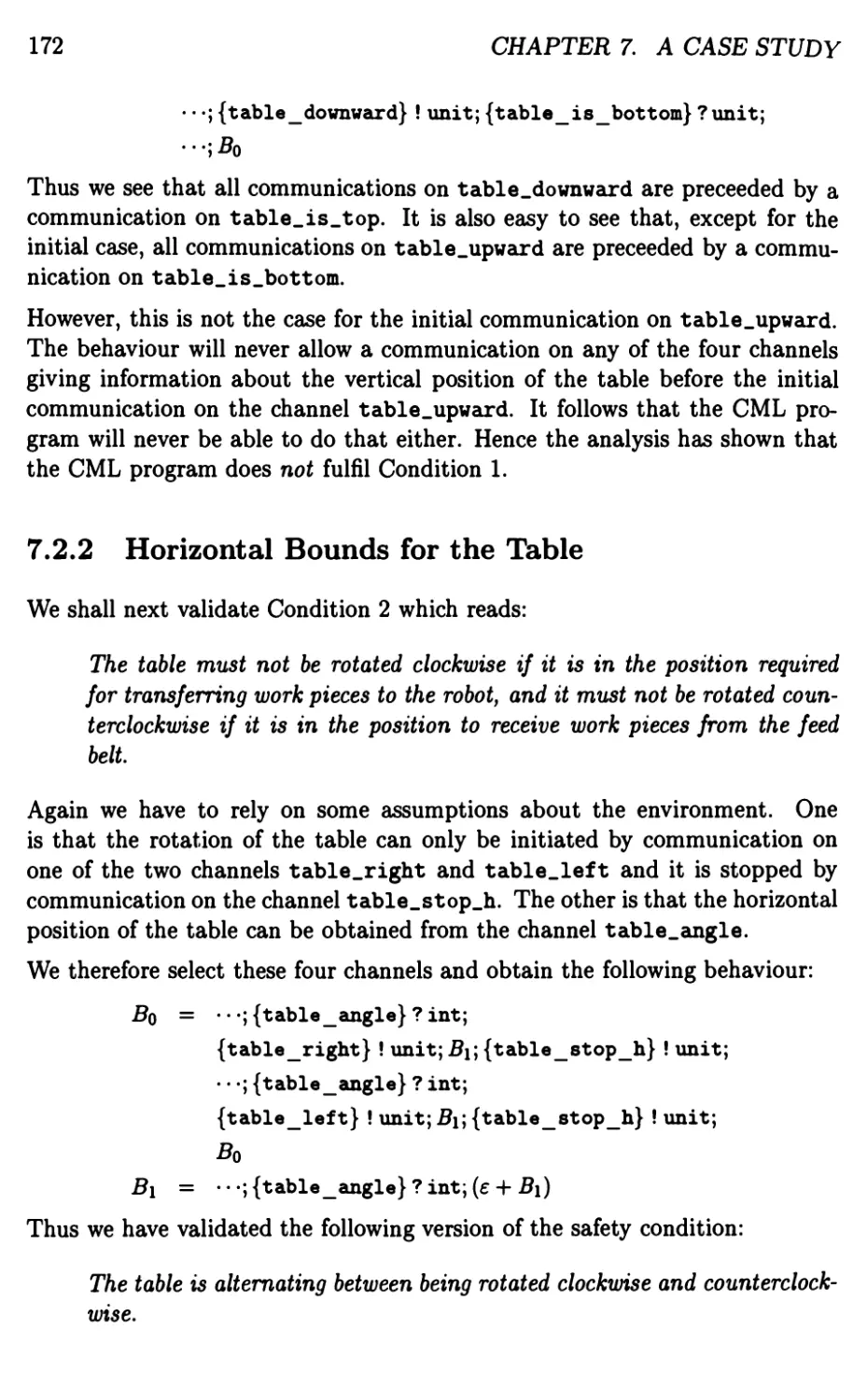

7.2.2 Horizontal Bounds for the Table 172

7.2.3 No Collisions on the Table 173

7.3 Discussion 174

A Proofs of Results in Chapter 2 177

A.l Proofs of Results in Section 2.5 177

A.2 Proofs of Results in Section 2.7 178

A.3 Proofs of Results in Section 2.8 183

CONTENTS

B Proofs of Results in Chapter 3 195

B.l Proofs of Results in Section 3.1 195

B.2 Proofs of Results in Section 3.3 197

B.3 Proofs of results in Section 3.6 202

C Proofs of Results in Chapter 4 213

C.l Proofs of Results in Section 4.3 213

C.2 Proofs of Results in Section 4.4 219

D Proofs of Results in Chapter 5 223

D.l Proofs of Results in Section 5.2 223

D.2 Proofs of Results in Section 5.4 226

D.3 The Proof of Theorem 5.12 227

E Proofs of Results in Chapter 6 233

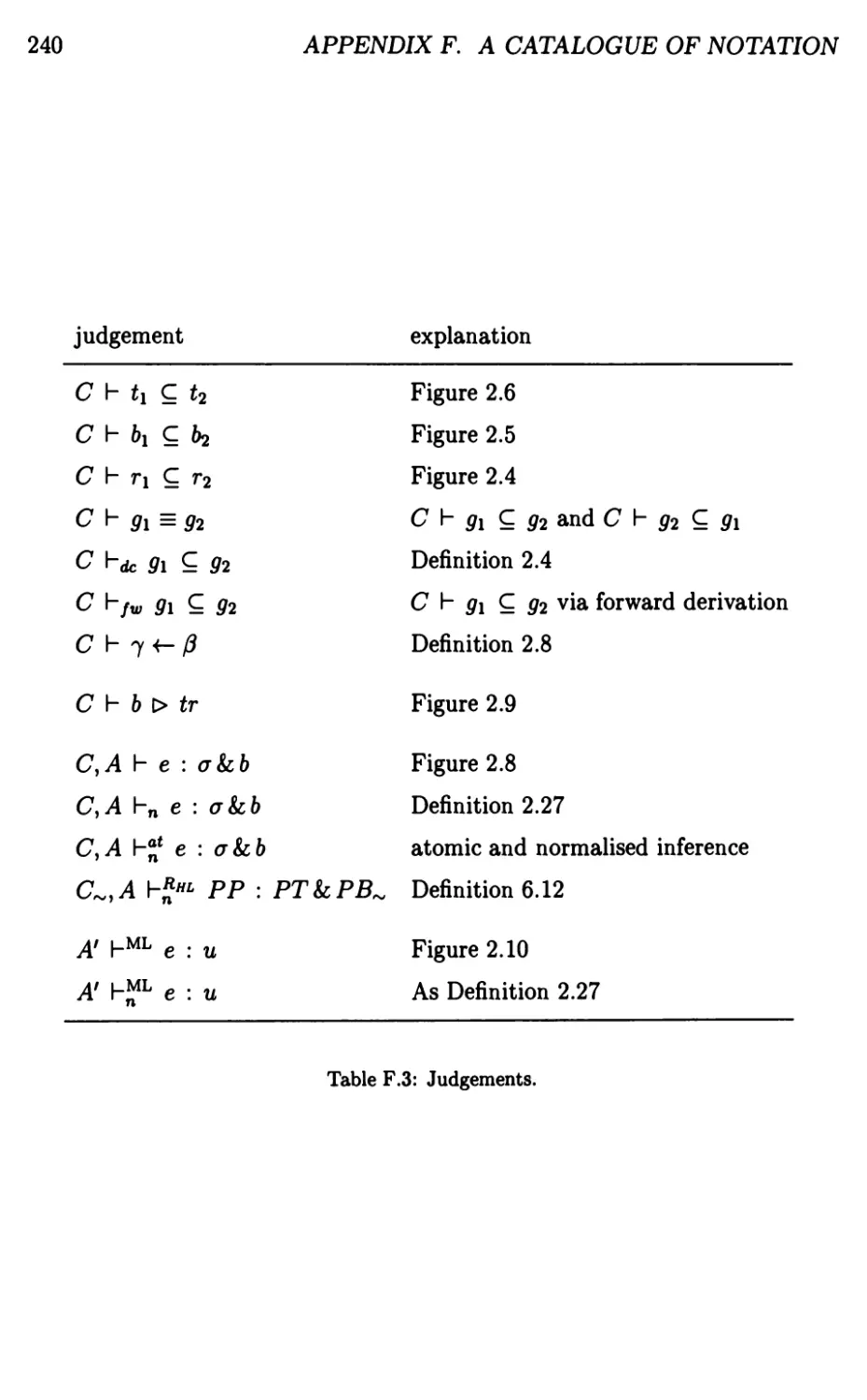

F A Catalogue of Notation 237

List of Figures

1.1 The core of the Hindley-Milner type system 6

1.2 The core of Algorithm W 9

1.3 The pre-order C with equivalence = 21

2.1 Expressions e £ Exp 44

2.2 Expressions e £ ExpQ 45

2.3 Translating from Exp to Exp® 45

2.4 The ordering on regions 52

2.5 The ordering on behaviours 54

2.6 The ordering on types 55

2.7 The types of constants 57

2.8 The type inference system 58

2.9 The possible traces of a behaviour 70

2.10 The core of the Hindley-Milner type system 74

3.1 The evaluation function S 79

3.2 The top-level sequential transition relation 79

3.3 The concurrent transition relation 82

4.1 Algorithm W: the overall structure 100

4.2 Syntax-directed constraint generation 102

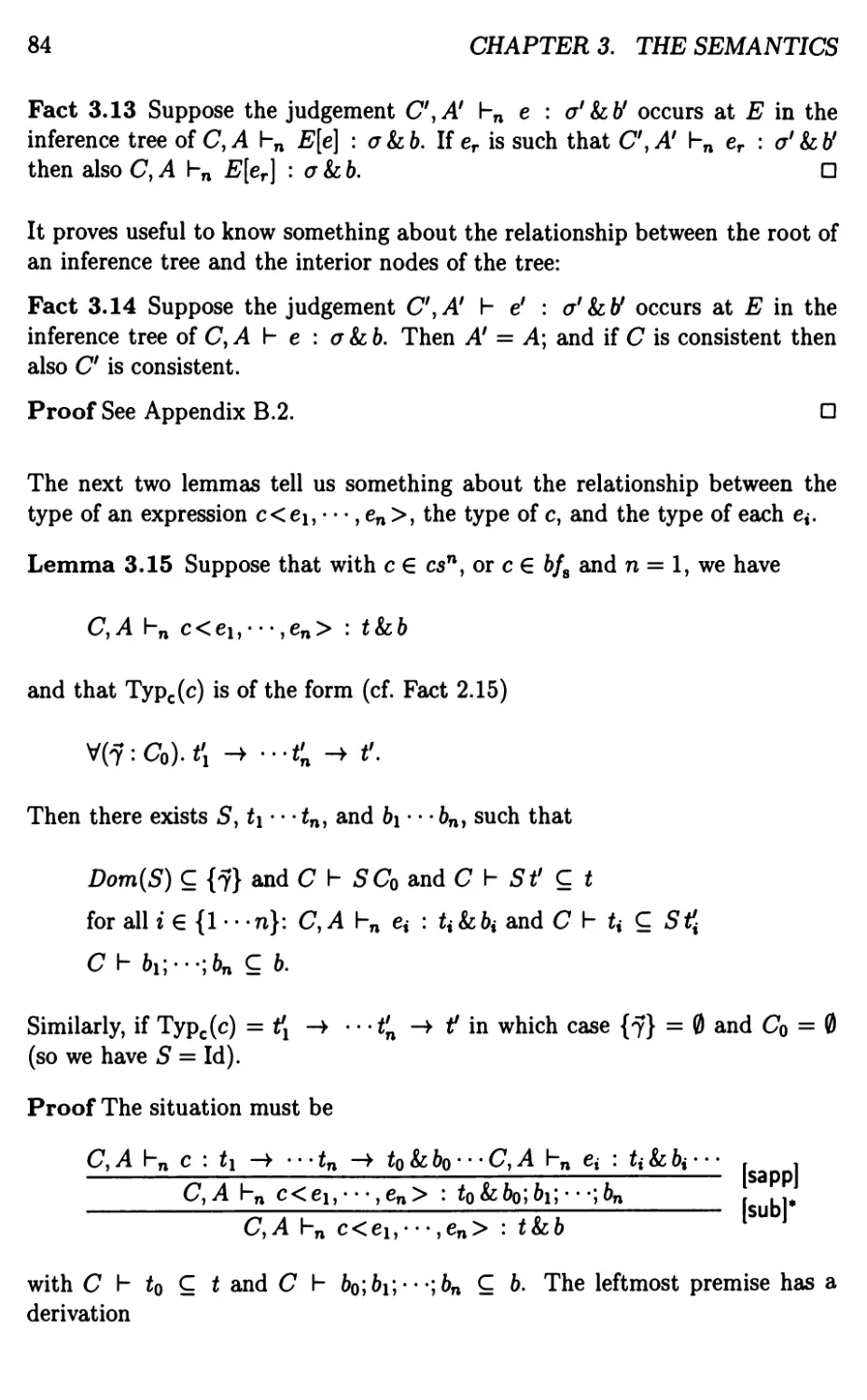

4.3 Taking a fresh instance of a type or type scheme 103

4.4 Computing the set of variables to generalise 103

4.5 Decomposition of constraints 105

LIST OF FIGURES

4.6 Rewriting rules for T 105

4.7 Forced matching 108

4.8 Eliminating constraints Ill

4.9 Monotonic and anti-monotonic variables 115

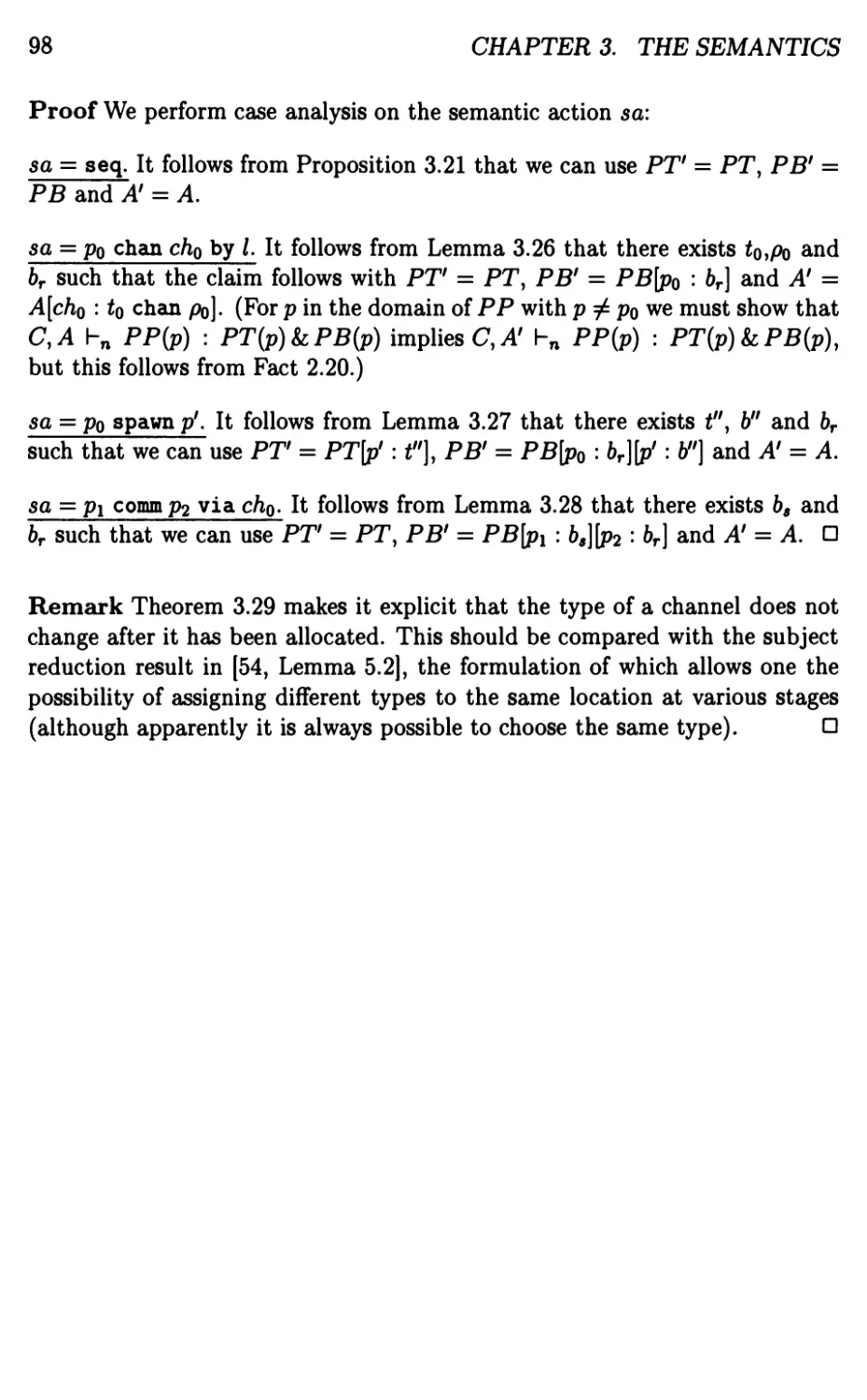

4.10 The rules of Fig. 4.8, in a symmetric form 116

6.1 The bisimulation relation ~ 147

6.2 The equivalence relation ~ on action configurations 148

6.3 The functionals Gp and Ga used for defining ~ and ~ 149

7.1 The Karlsruhe Production Cell 166

7.2 A CML program for the table 168

7.3 The behaviour for the table 170

7.4 Another CML program for the table 176

Preface

Program analysis and type systems have been recognised as important tools

in software development. By analysing a program before running it one may

catch a variety of errors in due time; this goes not only for obvious errors

such as adding an integer to a boolean, but also for "higher-level" errors such

as violating a given communication protocol. Even for programs that are

already correct, analysis is useful for reasons of efficiency: the result of the

analysis may guide the transformation into an equivalent program with better

performance; or it may guide the run-time system to improve the program's

execution profile, for example by giving directions for an optimal scheduling

of processors.

We firmly believe that for a given program analysis to be reliable, a solid

foundation must be established. This includes that the specification of the analysis

is faithful to a formal semantics for the source language, and that it is correctly

implemented. To demonstrate these issues involves stating and proving a bulk

of auxiliary results; and as analyses get more complex, it becomes increasingly

difficult to fit these bits together in a coherent way.

This book reports on a powerful type and effect analysis. Taking a type based

approach enables a succinct representation of program properties and

facilitates modular reasoning. The system meets the theoretical demands outlined

above and is useful for practical purposes, as witnessed by a prototype

implementation that is available on the world-wide web. Indeed, a main objective of

our research has been to advance the state of the art of effect system

technology, in particular by integrating polymorphism (essential for code reuse) with

a notion of subtyping (essential for the sake of precision).

The role of effects, or rather behaviours so as to emphasise that temporal

information is included, is to explicitly record the actions of interest that will

be performed at run-time. A variety of analyses on the language of behaviours

may be built on top of the system; one might even devise a tool (& la the

Mobility Workbench) for testing certain properties of behaviours.

PREFACE

Chapter 1 puts our development in perspective; prior knowledge of type

systems will be helpful but is not strictly assumed. Chapter 7 (which is based on

[39]) gives a case study illustrating the use of the system. Chapters 2-6 are

more technical and require some amount of "mathematical maturity" on part

of the reader.

The research documented in this book grew out of the ESPRIT project

LOMAPS (BRA 8130: Logical and Operational Methods in the Analysis of

Programs and Systems). For this reason our development is conducted for

a language (Concurrent ML) that integrates the functional and concurrent

paradigms, but most of the ideas can be immediately transferred to other

settings. Also, we have been partly supported by the DART project (funded by

the Danish Science Research Council).

We would like to thank our collaborators in the above projects for fruitful

discussions, in particular Pierre Jouvelot, Lone Leth, Jean-Pierre Talpin, Bent

Thomsen, and Mads Tofte; we also thank Hans Rischel for providing us with

the program used for the case study in Chapter 7.

Arhus, October 1998

Torben Amtoft Flemming Nielson Hanne Riis Nielson

Chapter 1

Introduction

1.1 Basic Concepts

Type inference. The notion of types dates long back into the history of

Computer Science. A main motivation is the desire to avoid certain kinds of

computations such as adding the boolean value true to the integer value 7;

this is permitted in untyped languages (perhaps yielding 8) but is regarded as

being meaningless by sensible programmers.

One approach (used e.g. in Scheme [58]) is to let the run-time system equip

all values with a "tyPe tag"; f°r eacn operation performed it must then be

checked that the type tags of the operands are appropriate. This "dynamic

typing" is potentially time consuming and often one prefers to do type checking

"statically", i.e. at compile-time, also so as to catch errors as early as possible.

The two approaches are not mutually exclusive and Java [13] is an interesting

mixture: it has static typing but the run-time system still has to compare tags

at some places, in particular when updating an array.

Another benefit of static typing is that it serves as a partial specification of the

program, for instance describing which kind of input a given function expects

and which kind of result it returns — if it expects an integer and returns a

boolean it will have type int -> bool. The programmer can thus check

whether this corresponds to what he had in mind.

Static typing is usually specified in terms of an inference system consisting of

a number of axiom schemas and inference rules; simple examples of such are

h ei : int, h e2 : int

h n : int and

h e\ + e2 : int

1

2

CHAPTER 1. INTRODUCTION

The axiom schema on the left states that all natural numbers n have integer

type; and the inference rule on the right states that if it is possible to assign

the expressions e\ and e2 an integer type then also their sum has integer type.

We shall often want to compare a type system T for a language L to an

"extended" type system T for a language which is equal to or encompasses

L: we say that V is a conservative extension of T if for all //-expressions e it

holds that if e is typeable in T with type t then e is also typeable in V with

type t\ and t can be "obtained from" t'.

Semantic soundness. Of course a static type system must be faithful to

the semantics of the program: if h e : int is derivable and the program

expression e at run-time evaluates to a value then we certainly expect this

value to be an integer. The precise formulation of this semantic soundness

property depends on the kind of semantics [35] used; below we sketch some

approaches in their simplest form. In all cases, the statement of semantic

soundness contains h e : tasa premise.

One approach assumes that a set of "semantic values" v are given. Then one

has to define a relation v : t, to be read "the value v has type f\ and the

soundness requirements are

• for a denotational semantics: if h e : t and [e] = v then v : t\

• for a big-step (natural) semantics: if he:t and e —> v then v : t.

As languages grow in complexity and features, it becomes harder to come

up with a suitable set of "semantic values"; and hence the approach of using

a small-step semantics [41] has become increasingly popular. Writing => for

the transition relation, semantic soundness is then formulated as the subject

reduction property

• if h e : t and e => e' then also h e' : t.

Another advantage of this approach, compared to using a big-step semantics,

is that it also caters for non-terminating programs. To ensure that "well-

typed programs do not go wrong" [24] one must also establish that "error

configurations" (those which are "stuck") cannot be typed.

It should be noted that we cannot hope for a decidable type system that is

"completely" faithful to the semantics, e.g. in the sense that h e : int is

derivable if and only if e never evaluates to something which is not an integer.

To see this, consider the program

1.1. BASIC CONCEPTS

3

let p(x) = ••• in if p(3) then 7 else true.

This expression will evaluate to a boolean if and only if p(3) returns false; and

as is well-known from computation theory [18] this is in general undecidable

for "Turing-complete" languages such as the one studied here.

Syntactic soundness and completeness. Having defined a type inference

system, a natural goal is to devise an algorithm that automatically infers types

for expressions. Clearly such a "reconstruction algorithm" must be

syntactically sound; this property in its most simple form states that if the algorithm

assigns the expression e the type t then h e : t must be derivable in the

inference system — hence syntactic and semantic soundness can be combined

into a common soundness result which ensures that the output from the

reconstruction algorithm yields trustworthy information about the input program's

run-time behaviour.

A reconstruction algorithm should preferably be not only sound but also

syntactically complete, as then the algorithm does not reject valid programs. This

property in its simplest form states that if h e : t' is derivable in the type

system then the algorithm when applied to e returns a type t which has t' as an

"instance"; in other words the algorithm computes the "most general typing".

A reader's guide to Chapter 1. Our starting point (Sect. 1.2) is the

"Hindley-Milner type system" [24, 6], used as the basis for the statically typed

functional languages ML [12], Haskell [55] etc. A crucial feature of this system

is the ability to generalise over types so as to make functions polymorphic and

hence applicable to arguments of different types. The archetypical polymorphic

function is the identity function which just returns its argument; it has type

int -> int as well as bool -> bool and these types are instances of the type

scheme Va. a -> a (which is unambiguously parsed as Va. (a -> a)). In

particular, we do not consider system F [11] where generalisation may occur

inside types (allowing (Va. a) -> a), or the intersection type system [16].

An inference system for Hindley-Milner typing is presented in Sect. 1.2.1 and

Sect. 1.2.2 discusses the design of a reconstruction algorithm W.

In accord with the trend in programming languages towards integrating a

variety of features, the Hindley-Milner system has to be extended. Our focus

is on adding "non-applicative" features like "imperative" constructs, often

essential for efficient programming, or concurrency constructs. Research from

the beginning of the 1990'es indicates how to write semantically sound type

systems for the resulting languages; in Sect. 1.2.3 we mention the approaches

4

CHAPTER 1. INTRODUCTION

of [51, 21] which are "implicit" in that the types do not explicitly record the

"side effects" performed by the non-applicative constructs.

In Sect. 1.3 we give an introduction to effect systems, a formalism that allows

types to carry detailed information about run-time behaviour, and that also

facilitates writing semantically sound type systems [53, 49] for languages with

non-applicative features. In Sect. 1.4 we address the issues involved in writing

reconstruction algorithms for effect systems.

In Sect. 1.5 we shall see that in order to obtain more precision than provided

by the techniques of Sections 1.3 and 1.4, it is fruitful to combine with

techniques previously used [8, 9, 47] for adding subtyping to purely applicative

programming languages.

Sections 1.2-1.5 thus provide the background setting for the rest of this book,

which presents a rather precise type system for a functional language with

polymorphism and concurrency as outlined in Sect. 1.6. Moreover, the type

system yields temporal information about run-time behaviour; this is very

useful for validation purposes as demonstrated in Chap. 7 (based on [39]).

1.2 Polymorphism

In this section we shall introduce the basic concepts of Hindley-Milner

typing [24, 6] and consider a small purely applicative language that forms a core

fragment of ML. Expressions e in this language are built from identifiers x,

function abstractions fn x=>e, function applications e\ e<i, conditionals

if e0 then e\ else e2, recursive function definitions rec / x=>e (which

when applied to a value v evaluates e with x bound to v and with / bound

to the function itself), and let-expressions let x = e\ in e2 where e\ is a

polymorphic definition of x. We also include a selection of constants c,

encompassing the natural numbers and the standard arithmetic operators (which are

written in infix notation).

Much of the power of ML stems from the possibility of defining functions

which are higher-order and polymorphic, a simple example of this is the twice

function given by

twice = fn f => fn x =>f (f x).

It is higher-order in that it takes a function / as input and returns the function

/ of as output (functional composition); it is polymorphic in that it can be

applied to arguments having different types:

1.2. POLYMORPHISM

5

let tw = twice in • • • tw (f n x => x + 1) • • • tw (f n y => not y) • • •

When applied to the integer successor function, fnx=>x+ 1, twice returns

a function which increments its argument by 2; when applied to the boolean

negation function twice returns the identity function on booleans.

That twice is higher-order and polymorphic can be seen from its type scheme

Va. (a -> a) -> (a -> a)

which can be instantiated to yield (int -> int) -> (int -> int) which is

the type of twice when applied to the successor function.

1.2.1 An Inference System

An inference system for our core ML fragment is depicted in Fig. 1.1. Types

t can be variables (we use a to range over these), base types like int or bool,

or composite types like the function type t\ -> t2. A type scheme ts is of the

form Va. t with a a sequence of bound variables; accordingly its free variables

FV(ts) are given as FV(t) \ {3} where FV(t) are the variables occurring in

t. To cater for free identifiers (within the body of function definitions etc.) we

need an environment A mapping identifiers into types or type schemes; hence

judgements are of the form A h e : a where a is either a type or a type

scheme. A closed type or type scheme is one without free variables, and the

notion of free variables naturally extends to environments.

We shall also need the notion of substitutions; these map type variables into

types. A substitution gives rise to a mapping on types and the type resulting

from applying substitution S to type t is denoted S t. This operation binds

stronger than function arrow, so Si t\ -> t2 = (Si *i) -> t2. The domain of a

substitution S is the set of variables affected: Dom(S) = {a | Sa / a}. The

identity substitution is denoted Id and we write S2 Si for the substitution that

first applies Si and next S2, so (S2 Si)(a) = S2 (Si a). We say that S' is an

instance of S if there exists S" such that S' = S" S.

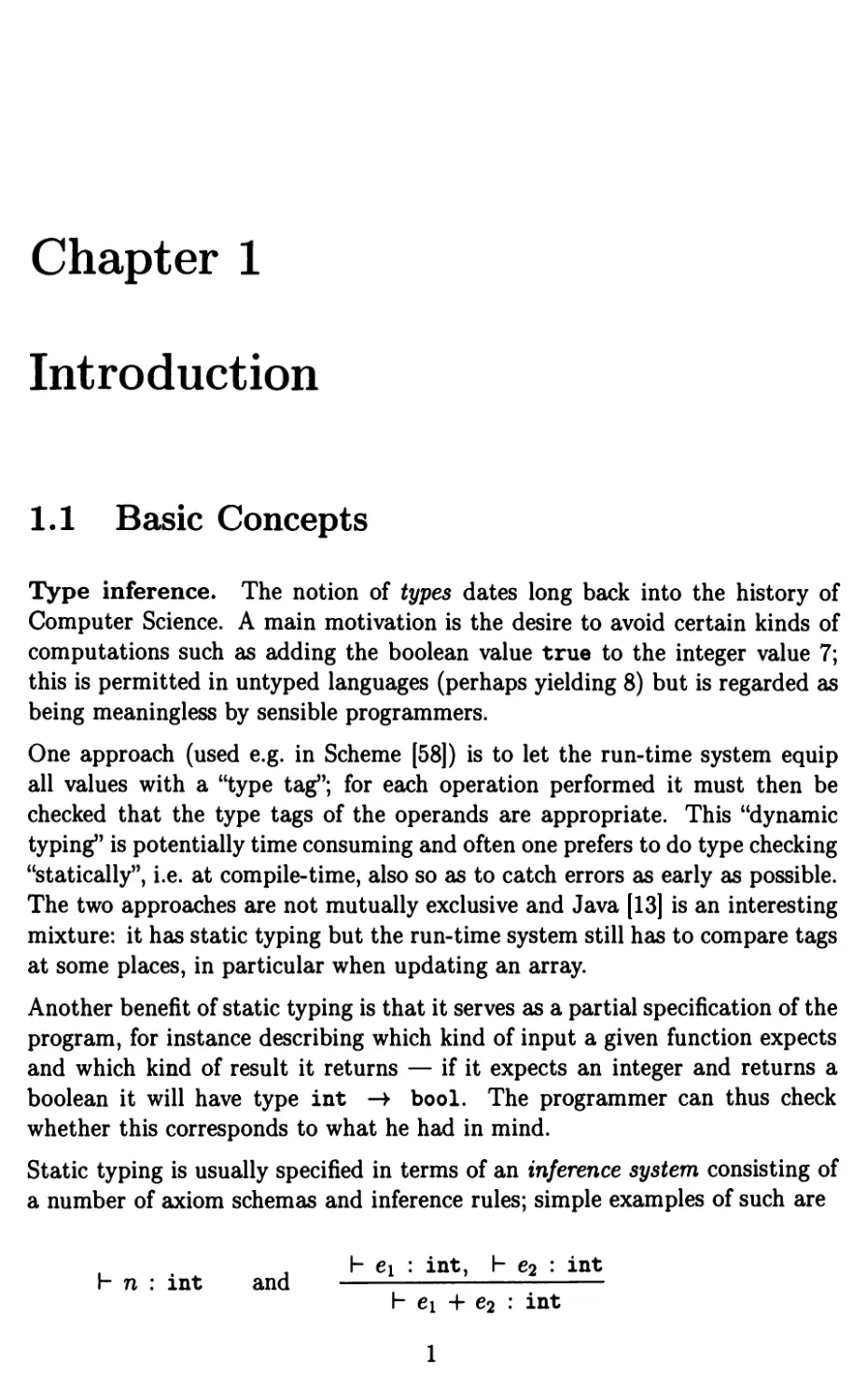

Note that in Fig. 1.1 there is one rule for each syntactic construct and two

specific rules for instantiation [ins] and generalisation [gen]; we use the term

structural for the former kind of rules. The rule [con] assumes the existence

of a function Typ that assigns a closed type or type scheme to each constant;

for example it holds that Typ(7) = int. The side condition for applying [ins]

says that an "instance substitution" must affect only the bound variables of a

type scheme. The side condition for applying [gen] is necessary for semantic

6

CHAPTER 1. INTRODUCTION

[con] A h c : Typ(c)

[id] A\- x : A(x)

A[x : ti] h e : t2

[abs]

[app]

[let]

[rec]

j4 h fnx=>e : ^ -> tf2

A h ei : t2 -+ h A h e2 : t2

A h ei e2 : ti

i4 h ei : tfsi i4[x : tfsi] h e2 : <2

j4 h let x = t\ in e2 : 22

A[/:t]hfnx=»e:t

i4 h rec f x=$>e : t

r.n ^4 h e0 : bool A h ei : t A h e2 : t

[if]

i4 h if eo then ei else e2 : 2

(.ns] A*-'■**■* if £,^(5) c {5}

l8enl /hCw^ if {3}nfF(>l)=0

i4 h e : Va. *

Figure 1.1: The core of the Hindley-Milner type system

soundness; indeed we shall show that otherwise the identity function fnx=>x

may have type int -> bool. If [gen] can be applied to [x : a] h x : a, in spite

of a being free in [x : a], we have [x : a] h x : Va. a; by [ins] we then get

[x : a] h x : bool and by [abs] also [] h fn x=^x : a -» bool; and by one

more application of [gen] we arrive at [] h fnx=>x : Va. a -> bool which

by [ins] yields the semantically incorrect [] h fnx=>x : int -> bool.

It is not difficult to prove the semantic soundness of the system in Fig. 1.1; for

a denotational semantics this can be done following the approach in [24].

Recursive functions and polymorphism. A key construct for defining

recursive functions in ML is let fun / x = e\ in e2 end which in our language

is expressible as let / = rec / x => e\ in e2. It is easy to see that the following

inference rule is derivable from Fig. 1.1:

1.2. POLYMORPHISM

7

A[f : *i -» t2][x : U] h ei : t2 A[f : Vg. fr -> *2] h e2 : t

A h let fun / x = ei in e2 end : 2

iffinFVr(i4)=0

which states that / can be used polymorphically in e2 but only monomorphi-

cally in t\.

To increase the power of the type system one can allow / to be used

polymorphically also in ei; this is termed polymorphic recursion [28]. Unfortunately,

as demonstrated in [15, 20] the full use of this feature makes type inference

equivalent to semiunification which is undecidable.

1.2.2 An Inference Algorithm

We shall now present a reconstruction algorithm for the type system in Fig. 1.1;

it is called W and is similar in spirit to the algorithm of the same name

introduced by Milner in [24].

The recursively defined algorithm W takes as input an environment A and

an expression e, and returns a type t and a substitution S which records how

the free variables in A need to be instantiated. For the "top-level" call, A is

usually the empty environment and hence S is of no interest; and thus W has

the expected "external" functionality of mapping expressions into types.

Strictly speaking, W is nondeterministic in that it generates a number of "fresh"

variables (i.e. variables not used before); as an example, when applied to the

identity function it may return the type ai -> ai or alternatively the type

a2 -> a2. One can convert W into a deterministic algorithm by letting it

carry around a "supply" of fresh variables; as is customary in the literature we

shall refrain from doing so.

Substitutions arise from Robinson unification [44]: if there exists a substitution

S that unifies t' and *", that is St' = St", then the call U(t',t") will produce

the "most general" such substitution in the sense that all other unifiers are

instances — otherwise it will fail. To see the need for unification, consider the

typing of e\ e2: if e\ has been found to have type t\ and e2 has been found to

have type t2, then we must find S such that Sti is of the form St2 -> t, and

this is tantamount to finding S' that unifies t\ and t2 -> a with a being fresh.

The function U works by decomposition: in order to unify *i -> t2 with t[ -> t'2

it recursively unifies *i with t[ and t2 with t2\ and to unify a variable a with

a type t / a it produces the substitution [a 4 <], provided that a does not

occur in t — this "occur-check" is needed to avoid generation of infinite types.

8

CHAPTER 1. INTRODUCTION

We refer to [44] for the details.

Remark There exists type reconstruction algorithms with different "internal"

functionality. The algorithm T [7, p. 37] takes as input an expression only, and

returns a type together with an "assumption list", i.e. an environment where

there may be multiple assignments to each identifier. When closing the scope

of an identifier x (when typing fni=^e) all types assigned to x must then be

unified. Another algorithm is J [24, Sect. 4.3]; it is an optimised version of

W, in that the substitution component has been "globalised". □

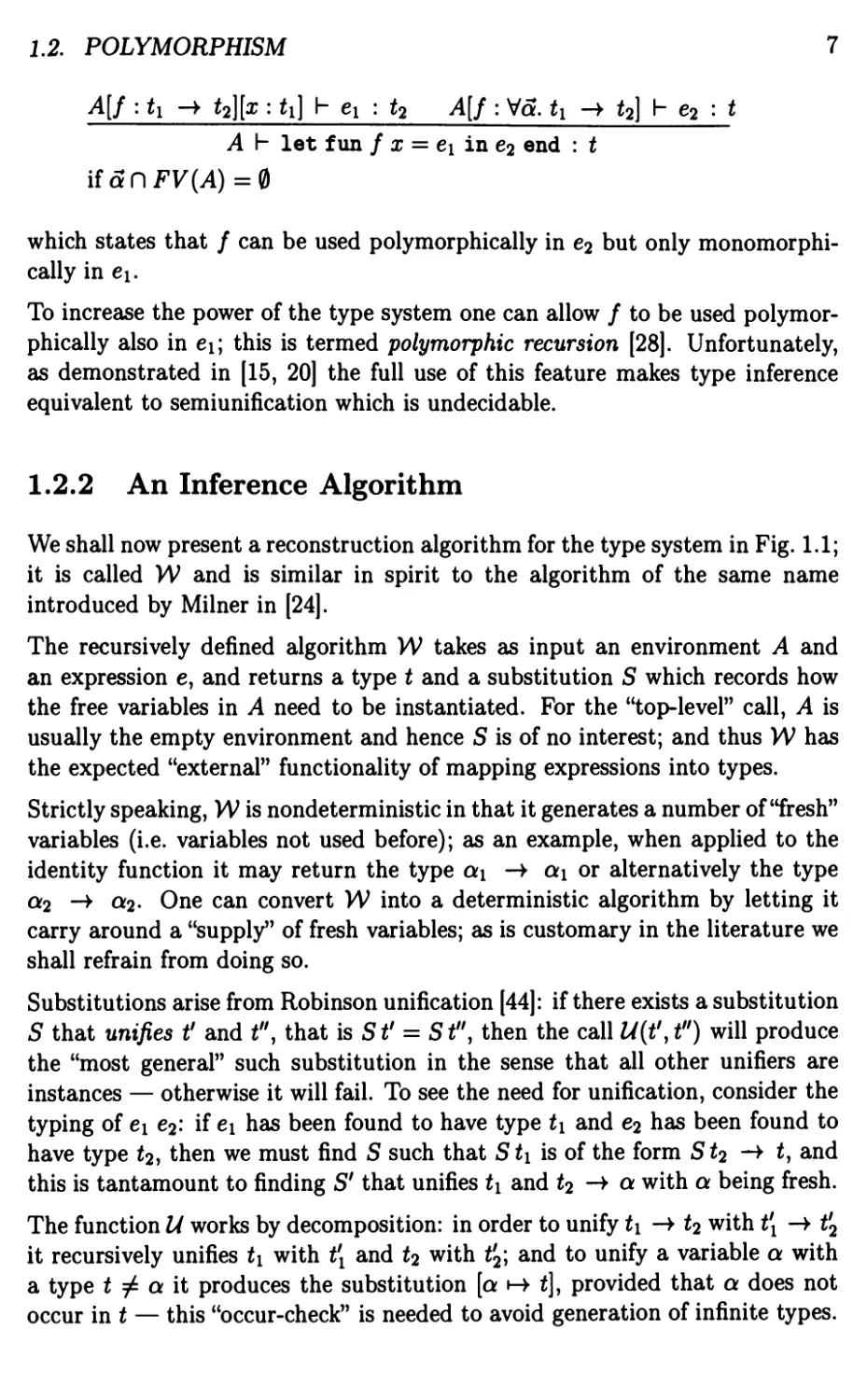

The algorithm W is defined by the clauses in Fig. 1.2, where we have omitted

the clauses for recursion and conditional as these follow a similar pattern.

The placement of instantiation and generalisation reflects a standard result

ensuring that one can assume that an inference is normalised, i.e. that all uses

of rule [gen] take place after typing a polymorphic definition, and that all uses

of rule [ins] take place immediately after an application of [con] or [id]. To cope

with generalisation, the algorithm closely follows the inference system in that

it generalises over all type variables that are not free in the environment. To

cope with instantiation, the algorithm has to generate a fresh copy (so as to

be as general as possible) of the type scheme associated with a constant or an

identifier; this is done by the function INST which maps a type t into itself

and which maps a type scheme Va. t into [a »-► dt\ ] t with dt\ fresh variables.

Soundness and completeness. The syntactic soundness of W was

demonstrated already in [24]: if W([],e) = (S,t) then [] h e : t. This is a

consequence of a more general soundness result, admitting an inductive proof, which

states that if W{A,e) = (S,i) then S A h e : t.

W is also syntactically complete, as conjectured in [24] but first proved in

[6, 7]. For closed expressions this amounts to

if []he:f

then (i) the call W([],e) succeeds

(ii)ifW([],e) = (S,t)

there exists S' such that S't = t'.

This result, together with the standard result that inferences are "closed under

substitution", establishes that the system in Fig. 1.1 has a principal typing

property: if the set {t' \ [] h e : t'} is not empty then there exists a type t

such that the entire set is expressible as {S't \ S' is a substitution}.

1.2. POLYMORPHISM

9

W(A,c) = (Id, INST(Typ(c)))

W(A,x) =if x 6 Dom(A) then (Id, INST(A(x))) else/ai7i<tent

W04,fnx=*eo) =

let a be fresh

let (So,t0) = W(A[x:a},e0)

in (S0, So a ->• t0)

W(^,eie2) =

let(S1)t1)=W(>l,e1)

let (S2,t2) = WiStAei)

let a be fresh

let S=U(S2tut2 -*• a)

in (SS2Si,Sa)

W(A, let i = tx in e2) =

let(S1,t1) = W(^,ei)

let a = JV(ti) \ FV(SX A)

let tsi = Va. ti

let(S2)t2) = W((S1>l)[a;:ts1],e2)

in(S2Si,t2)

Figure 1.2: The core of Algorithm W

To admit an inductive proof of syntactic completeness, the above definition

must be extended so as to cater also for open expressions and this is a slightly

tricky matter. One formulation is

if A' h e : f and A' = S" A

then (i) the call W(A, e) succeeds

(ii)ifW(,4,e) = (S,*)

there exists S' such that S' t = t' and S" A = S' S A.

Also the notion of principal typing can be generalised to take environments

into account.

10

CHAPTER 1. INTRODUCTION

1.2.3 Implicit Treatments of Side Effects

It is necessary to modify and extend the system developed so far in order to

type languages that are not purely applicative, as will be the case if expressions

are allowed to have side effects, i.e. change a global "run-time environment".

In the remaining chapters of this book we study the incorporation of

concurrency; here the environment is "external" and consists of a set of communication

channels. In Concurrent ML (CML) [42, 40] the expression channel e

allocates a channel; the expression accept ch receives a value from the channel

ch; and the expression send (c/i, e) transmits the value of e over the channel

ch.

A prime example in the literature is the incorporation of imperative features;

here the environment is "internal" and consists of a set of locations. In

Standard ML (SML) [26, 59] the expression ref e allocates a location and initialises

it to the value of e; the expression ! / gets the content of location /; and the

expression / : = e assigns the value of e to the location /. As is well-known from

many imperative languages, the expression e\ ; e2 evaluates first e\ and then

e2.

To manage imperative features one may introduce [51] an additional type

construct t ref, with the intention that this is the type of a location holding a value

of type t. A naive approach is then to stipulate Typ(ref) = Va. a -> a ref

and Typ(!) = Va. a ref -> a (note that ref binds stronger than function

arrow), and similarly for the infix operator :=, whereas the rule for e\ ; e2 is

straightforward.

But without further modifications, the inference system from Fig. 1.1 is now

semantically unsound! This can be demonstrated by the program

let r = ref (f n x => x) in (r : = f n x =$► x + 1 ; ! r true) (1)

which generates a run-time type error, in that the function f n x => x + 1 is

applied to true, even though (1) is typeable: from the judgement

[] h ref (fnx=*x) : (a -* a) ref (2)

one can apply [gen] and then bind r to the type scheme Va. (a -> a) ref. By

applying [ins] twice, with an instance substitution which is first [a »->• int] and

next [a »->• bool], it is thus possible to type the body of the let-expression.

That the issue is rather subtle is illustrated by the program

let r = fnx=>ref x in (r 7 ; r true)

(3)

1.2. POLYMORPHISM

11

which is typeable in much the same way, binding r to Va. a -> a ref, but

which does not generate a run-time error since two distinct locations are

allocated (one holding an integer and the other a boolean).

The problem of applying [gen] to (2) is that its environment component []

does not properly abstract the "run-time environment" created by evaluating

ref (f n x => x): this run-time environment is not empty, as the newly

allocated location is implicitly bound to a value with type (a -> a) ref. On the

other hand, evaluating fnx=>ref x as in (3) does not extend the run-time

environment.

The difficulty of typing imperative features was noted already in [24] and

subsequently the topic has received considerable attention; most of the resulting

systems enjoy the desirable property of being a conservative extension of the

Hindley-Milner type system. Below we sketch the approaches of [51] and of

[21]; in both cases the treatment of side effects is implicit and hence neither

of these developments are essential for the remainder of this book. Later work

has employed effect systems, as introduced in Sect. 1.3, where the treatment

of side effects is explicit.

The CML type system [42] is based on the approach of [51], modified to the

concurrent setting, and assigns the type t chan to channels transmitting values

of type t (cf. the use of t ref for locations).

An approach based on imperative type variables

The work of [51] distinguishes between two kinds of type variables: imperative,

which we shall write as a*, and applicative, written as a. The former is aimed

to incorporate all type variables that may occur in the run-time environment;

hence it should be ensured that if ref e is typeable then its type t does not

contain applicative variables. This can be achieved by stipulating Typ(ref) =

Va*. a* -> a* ref and by requiring all substitutions to map imperative type

variables into types containing imperative variables only.

It seems obvious that semantic soundness is obtained if we prohibit the

generalisation of imperative variables; but this is too strong as then the program

(3) cannot be typed. To repair on that a key insight [51, Sect. 5] is that also

imperative variables can be safely generalised if the polymorphic definition

in question does not extend the run-time environment. A sufficient syntactic

condition for this is that the definition is non-expansive; this is the case for

values such as constants, identifiers, and function abstractions, but is not the

case for e.g. function applications and conditionals. Similarly, neither ! nor :=

12

CHAPTER 1. INTRODUCTION

extends the run-time environment; and hence we can allow Typ to map these

operators into type schemes where the type variables are applicative.

The semantic soundness of the resulting system is demonstrated in [51] with

respect to a big-step semantics; the correctness relation which states when a

value has a given type is defined coinductively so as to cope with cycles in the

run-time environment.

The type reconstruction algorithm presented in [51] is a modification of

algorithm W. The main extension is that when unifying an imperative variable a*

with a type t, all applicative variables in t are replaced by imperative variables

(due to the abovementioned requirement on substitutions).

Being non-expansive is not a necessary condition for not extending the

runtime environment, as illustrated by the expression

(f n x =$► f n y =$► ref (f n z => z)) 7. (4)

A more precise approximation is outlined in [4] where all imperative variables

are assigned a "weakness degree" n, 0 < n < oo. We cannot generalise over

imperative variables having weakness degree 0, as these may occur in the

runtime environment, but variables with weakness degree n > 0 occurring in the

type of an expression e are guaranteed not to occur in the run-time environment

as long as e is applied to at most n — 1 arguments.

This idea is employed in [60] which assigns the expression (4) the type a ->

(a*1 -> a*1) ref where also the imperative variable a*1 can be generalised.

On the other hand, the 1997 version of SML [59] returns to a simple but

somewhat crude generalisation strategy, in that the notion of imperative type

variables is eliminated but instead the "value restriction" is imposed: in order

to generalise over variables occurring in the type of a polymorphic definition,

the defining expression must be non-expansive (i.e. a syntactic value).

An approach based on tracking dangerous type variables

The work of [21] aims at a more direct way of estimating which type variables

that may occur in the run-time environment and hence must not be generalised:

these are decreed to be the variables that occur "dangerously" in the type of a

polymorphic definition.

To explain the idea, first consider the polymorphic definition in (1). Looking at

its type, (a -> a) ref, it is apparent that a occurs in the type of the location

created by the definition and thus also in the run-time environment. This

1.3. AN INFERENCE SYSTEM FOR EFFECTS

13

motivates the defining equation DV(t ref) = FV(t), where DV computes the

set of dangerous variables.

Next consider the polymorphic definition in (3). Looking at its type, a ->

a ref, it is apparent that the definition creates a "closure". Essentially, a

closure is an expression together with an environment recording the values

of the free identifiers. These values may contain locations themselves, and

therefore we define DV(ti -* ^2) to be the union of all DV(t) where t is the

type of an identifier free in the closure — in the example there are no such

identifiers and hence a is not dangerous.

As can be seen from [21, Fig. 3] the resulting system is able to type several

programs that are (i) composed from simple building blocks and thus not

contrived, and that are (it) not typeable by [51] nor by the "weakness degree"

approach described above. Unfortunately, it fails to be a conservative extension

of the Hindley-Milner type system: there exists a purely applicative program

[21, Sect. 3.6] which is Hindley-Milner typeable but not typeable by the system

as presented in [21].

1.3 An Inference System for Effects

In recent years effect systems have become increasingly popular; a major reason

is that they allow an explicit treatment of side effects and hence they are closer

to our intuition than the approaches sketched in Sect. 1.2.3. Judgements take

the form

Ah e : tkb

where the effect b describes the "visible effect" of evaluating e. This extra

component is used to gain concise information about certain aspects of the

program's run-time behaviour, possibly enabling a more efficient implementation.

An extra benefit is that the effects may be used to "control polymorphism", as

will be described in Sect. 1.3.1, so as to yield a semantically sound system for

languages with non-applicative features.

We use e for the effect denoting that no visible action takes place; for a call-

by-value language we thus have the axiom schema

A\- x : A{x)ke

In order to write a sensible rule for function application, we shall annotate

function types with an effect component describing what happens when the

14

CHAPTER 1. INTRODUCTION

function is applied. Accordingly, the rule for function abstraction records the

effect of evaluating the body in the type of the abstraction:

A[x:ti] h e : t2kb

A h f n x => e : tx ->6 t2 & e

When applying a function e\ to an argument e2, a call-by-value language first

evaluates t\ to a closure, next evaluates e2 to a value, and finally evaluates the

closure. This is reflected in the rule for function application

A h ei : t2 ->fr t\ fe &i Ah e2 : t2kb2

Ah exe2 : txk {bx SEQ b2 SEQ b)

where SEQ is an operator (in the meta language) for sequential composition

of effects. We shall assume that SEQ is associative with e as neutral element;

it will often be the case that SEQ is commutative but more precise analyses

will be obtained if it is not, cf. Sect. 1.3.3.

The rule for conditional expresses that while the test must always be evaluated,

we cannot in general statically determine which branch will be taken:

A h eo : bool&&o A h e\ : tkb\ A h e2 : tkb2

A h if e0 then tx else e2 : tkb0 SEQ (h OR b2)

where OR is an associative and commutative operator (still in the meta

language) for "nondeterministic" composition of effects.

A seminal paper in the field is [23] which treats the LISP dialect FX.

Corresponding to the imperative features NEW, GET and SET there are effects

ALLOC, READ and WRITE; the role of SEQ (and of OR) is played by the

commutative operator MAXEFF (that can be thought of as set union). Source

programs must be pre-annotated with type information, so for example

function abstractions are written as (LAMBDA (x : t) e), and also polymorphism

is explicit.

The theory of effect systems is further developed in [48]. Here types are not

explicitly present in the source program but inferred by a reconstruction

algorithm, and a simple kind of polymorphism is employed: a let-expression

let x = ei in e2 is considered a polymorphic definition of e\ (so that the type

of t\ can be generalised) if t\ is non-expansive, cf. the discussion of [51] in

Sect. 1.2.3.

1.3. AN INFERENCE SYSTEM FOR EFFECTS

15

1.3.1 Effects Controlling Polymorphism

We now describe how effect information can be used to decide which type

variables are generalised in a polymorphic definition; it should be clear from

the discussion in Sect. 1.2.3 that for this purpose effects must estimate the

set of variables that may occur in the run-time environment. (A type now

contains not only type variables but also effect variables and we use /? to range

over the latter.)

A minimalistic approach is taken in [53]. Here effects are just sets of type

and effect variables (so e is the empty set and SEQ as well as OR is union).

As in [51] the focus is on detecting the creation of locations so we stipulate

Typ(ref) = Va. a -»<*> a ref and Typ(!) = Va. a ref -»0 a. The rule

[gen] from Fig. 1.1 avoids generalising over variables that are free in the static

environment; it must be modified to also avoid generalising over variables that

are free in the run-time environment and as FV(b) is designed to be a superset

of the latter set, the rule now reads

A h e : tkb if{ap}n(FV(A)UFV(b)) = (I>

A h e : Vap.tkb

The semantic soundness of the resulting system is established using a small-

step semantics.

By adding more structure to the effects of [53] one can obtain more refined

systems; we shall mention some of these in the sequel. The task of integrating

side effects and polymorphism can thus be solved in several ways and [53,

Sect. 5] contains an insightful comparison between some of these, including

those outlined in Sect. 1.2.3. Some interesting benchmarks, illustrating the

strengths and limitations of various approaches, are given in [49, Sect. 11].

1.3.2 Regions

By incorporating regions, effect systems may yield highly informative output,

useful for e.g. program optimisation. A region r (we use p to range over region

variables) can be considered as an abstraction of the "place" where a side effect

occurs: for imperative languages one can think of a region as a set of locations;

for concurrent languages one can think of a region as a set of communication

channels. Regions are introduced already in [23] and we shall now elaborate

on their use in [49].

Here an effect is a set of atomic effects and thus SEQ and also OR is union;

an atomic effect is either init(r,t), indicating that a location holding a value

16

CHAPTER 1. INTRODUCTION

of type t is allocated in region r, or write (r, t), indicating that a location in

region r is assigned a value of appropriate type t, or read(r,t), indicating that

a value of type t is fetched from a location in region r. (Similarly the type

construct t ref is equipped with a region component, denoting where the

location represented by this type is allocated.)

Again effects are used to control polymorphism, in particular the rule for [gen]

is as in [53], and semantic soundness is established with respect to a big-step

semantics. Moreover, as we shall see below the presence of regions enables a

significant amount of "garbage collection" to be done statically.

To illustrate the issue, first consider the expression t\ given by

let v = ref 5 in ! v + 2 (5)

which returns 7 — afterwards it is clearly safe to deallocate the location

denoted by v. Next consider the expression t<i given by

let v = ref 3 in f n x =$► ! v + x (6)

which returns a closure — as the location denoted by v is referenced each time

this closure is applied, it cannot be safely deallocated.

The effect system in [49] can distinguish between those two cases. For (6) the

judgement

[] h e2 : int -»™*(^) int& mi*(r, int)

is derivable, and as r is present in the type it is not safe to discard this region.

On the other hand, for (5) the judgement

[] h d : int kinit(r, int) U read(r, int)

is derivable and here r does not occur in the type nor in the environment.

Hence r is not "observable" and we infer that it is safe to deallocate v\

additionally we can dispense with the unobservable effect and just get [] h t\ :

int&£.

"Filtering" of effects is called effect masking and dates back to [23]. As shown

in [49, Sect. 11] (by the example id4 id4) this enlarges the set of typeable

programs.

1.3. AN INFERENCE SYSTEM FOR EFFECTS

17

An application to region-based memory management

As demonstrated most impressively in [52], effect systems can be used also for

purely applicative languages! Still the purpose is to determine the lifetime of

"locations"; these are not explicit as for imperative languages but yet they do

occur implicitly in the underlying implementation. In the presence of higher-

order functions, this task is notoriously difficult.

To illustrate the problem, we can use programs similar to (5) and (6). First

consider the "block"

let v = 5 in v + 2

where the "region" holding 5 can be pushed onto the stack at block entry and

safely popped from the stack at block exit. To make this explicit, in [52] one

may write

letregion p in let v = 5 at p in • • • end

where • • • encodes v + 2 and is given by letregion p?. in (v 4- (2 at p2)) at px

(placing the result in the persisting region pi). Next consider the block

let v = 3infnx=>v + x

where the region holding 3 cannot be safely popped at block exit as it will be

referenced each time the generated closure is applied; usually such regions are

placed in the heap rather than on the stack. Alternatively, as in [52], the region

is still pushed onto the stack but "sufficiently early" (prior to being assigned

the value 3) so that it is not popped until the closure becomes inaccessible.

The effect system of [52] records when regions in the "underlying store model"

are referenced or assigned; simultaneously it transforms the source expression

into a target language where all operations on regions are explicit (as

indicated above). Thus the target language can be implemented using a stack

discipline well-known from block-structured languages [29], avoiding

expensive run-time garbage collection. (In general, however, some of the regions

may have unbounded size so the stack must contain a pointer to those regions

rather than their actual content.) This provides a main theoretical basis for

the ML Kit [56], a compiler for Standard ML.

In the target language of [52] functions are parametrised with respect to region

information. Consider for instance a function fib for computing the fibonacci

function; then f ibfp!,^] denotes a call where the argument is taken from pi

18

CHAPTER 1. INTRODUCTION

and the result is placed in P2. If fib is called elsewhere in the program then

these calls must be allowed to use different regions as otherwise the analysis

will be far too coarse; hence the effect system has to employ polymorphism

not only for type and effect variables but also for region variables and thus

type schemes take the form Va/?p. t.

Similarly, in order to get a fine-grained analysis [52] allows the two recursive

calls of fib to use different regions. Hence also the body of a function definition

is typed in an environment where the function being defined is given a type

scheme where region variables are bound. We thus have a limited form of

polymorphic recursion, cf. Sect. 1.2.1, but as this does not involve type variables

it is nevertheless possible [52, Sect. 10] to write a reconstruction algorithm that

is sound and that issues a warning message in the rare cases where it computes

a type that might not be principal.

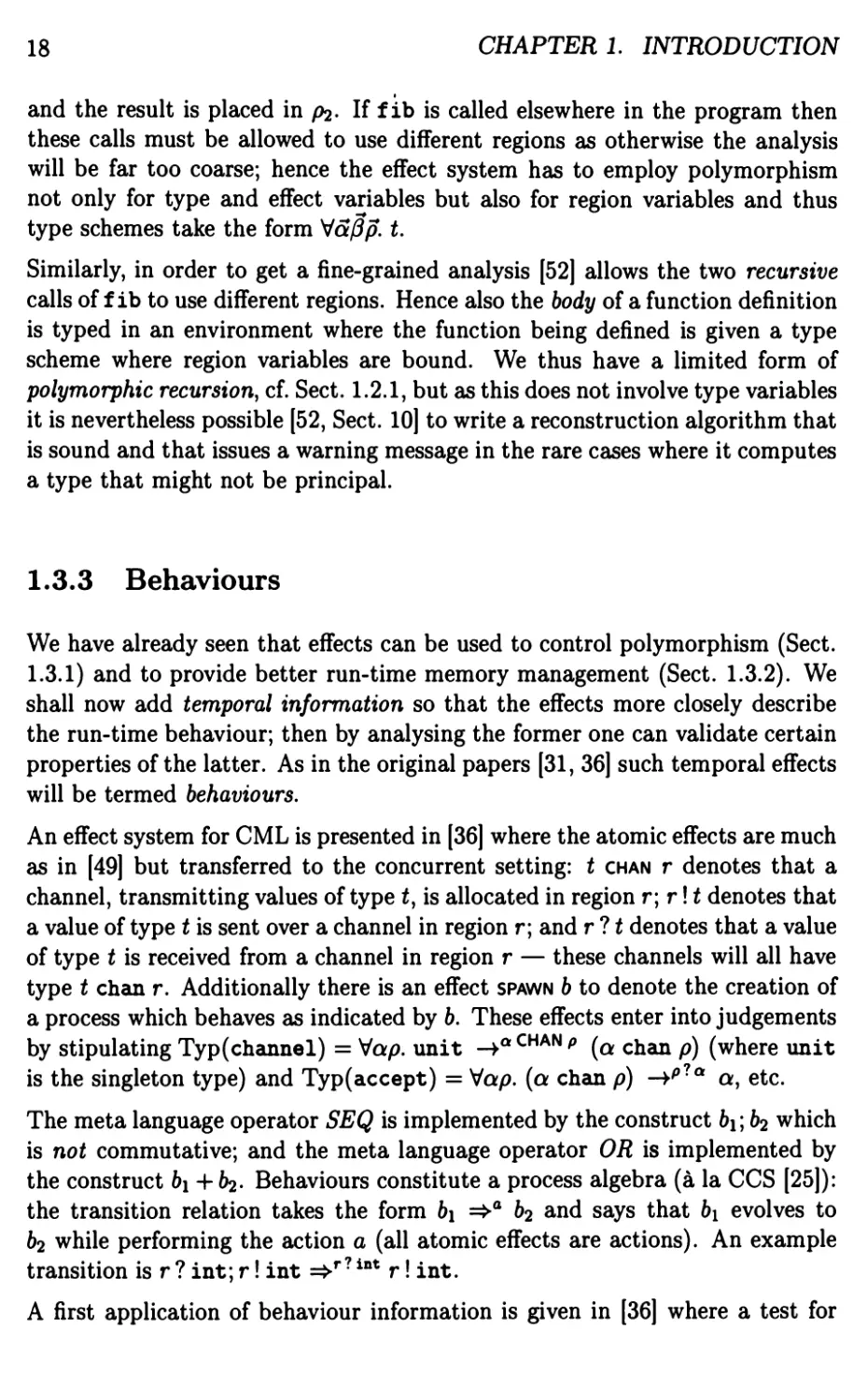

1.3.3 Behaviours

We have already seen that effects can be used to control polymorphism (Sect.

1.3.1) and to provide better run-time memory management (Sect. 1.3.2). We

shall now add temporal information so that the effects more closely describe

the run-time behaviour; then by analysing the former one can validate certain

properties of the latter. As in the original papers [31, 36] such temporal effects

will be termed behaviours.

An effect system for CML is presented in [36] where the atomic effects are much

as in [49] but transferred to the concurrent setting: t chan r denotes that a

channel, transmitting values of type t, is allocated in region r\r\t denotes that

a value of type t is sent over a channel in region r; and r ? t denotes that a value

of type t is received from a channel in region r — these channels will all have

type t chan r. Additionally there is an effect spawn b to denote the creation of

a process which behaves as indicated by b. These effects enter into judgements

by stipulating Typ(channel) = Vap. unit -4aCHANp (a chan p) (where unit

is the singleton type) and Typ(accept) = Vap. (a chan p) -V7** a, etc.

The meta language operator SEQ is implemented by the construct &i; 62 which

is not commutative; and the meta language operator OR is implemented by

the construct 61+62- Behaviours constitute a process algebra (a la CCS [25]):

the transition relation takes the form 61 =>a 62 and says that 61 evolves to

62 while performing the action a (all atomic effects are actions). An example

transition is r ? int; r! int =>r? int r! int.

A first application of behaviour information is given in [36] where a test for

1.3. AN INFERENCE SYSTEM FOR EFFECTS

19

finite communication topology is built on top of the effect system: given 6,

it computes upper bounds for the number of channels and processes that b

will create. As behaviours may be recursively defined, this is a non-trivial

task. The success of the analysis depends on the possibility of interpreting

behaviours as multisets of atomic effects; behaviours which are just sets (as in

e.g. [49]) would clearly not suffice.

To further illustrate how the temporal nature of behaviours may be used to

show (as done in [39]) the absence of certain bugs in concurrent systems,

consider the following safety criterion: a machine M must not be started until

the temperature has reached a certain level. This criterion may amount to

saying that a process P should never send a signal over a channel in region

start_M unless it has just received a signal over a channel in region temp.OK.

Now suppose we can show that P has some behaviour b: then it may be

immediately obvious that the above safety property holds, for instance if b is

defined recursively as b = • • •; temp_0K ? unit; start_M! unit; b.

In the system of [36] effects control the polymorphism: the rule for [gen] is as in

[53, 49]. Unlike [49] effects are not masked, as this might result in significant

loss of information and in particular invalidate the abovementioned test for

finite communication topology.

A new twist on semantic soundness

Below we shall briefly outline how to formulate semantic soundness in the

presence of temporal information (similar to what is done in [36]); we assume

a small-step semantics where the configurations are basically process pools PP

which map process identifiers into expressions.

To get a flavour of the subject reduction property, first consider the case where

PP evolves to PP' because process p allocates a fresh channel ch in region r

which is able to transmit values of type t'. If

A h PP(p) : tkb

holds then we must also have

A[ch : H chan r] h PP'(p) : tkV

where&=/CHANr&'.

Next consider the case where PP evolves to PP' because process p creates a

fresh process po, and suppose that

20

CHAPTER 1. INTRODUCTION

A h PP(p) : tkb

holds; then we must also have

A h PP'(p) : tkb' and A h PP'{p0) : unit&60

where&^SPAWN6o6'.

Thus the general picture is that Veil-typed programs communicate according

to their behaviour"; and that types are unchanged whereas the behaviours get

"smaller" and the environments are "extended".

1.3.4 Subeffecting

When designing an effect system for a language that already has a type system,

such as done in [36] for CML [42], one may reasonably expect the former to

be a conservative extension of the latter. To achieve this goal the inference

rules presented so far do not suffice as witnessed by the following example that

motivates the need for subeffecting.

Example 1.1 Suppose we want to type a conditional if eo then f\ else f2

occurring inside some function body e situated in the context

(fn/i^fn^^ejei e2

where t\ is of the form fn X\ =$>e\ and e2 is of the form fn x2 =>e2. Further

suppose that we have judgements

A[x\ : int] h e\ : int k &i and

A[x2 : int] h e2 : int & 62

with b\ / 62. We can thus assign t\ and hence f\ the type *i = int ->ftl int,

and we can assign e2 and hence /2 the type t2 = int ->62 int; but to use

the rule for conditional we must be able to assign f\ and /2 a common type

t = int -»612 int. D

One approach [53, 49, 36] is to find b\2 such that

A[x\ : int] h t\ : int & b\2 and

A[x2 : int] h e2 : int & b\2

1.3. AN INFERENCE SYSTEM FOR EFFECTS

21

(PI) b C 6 (P2)

6i C 62 62 C 63

61 C 63

. 61 C 62 63 C 64 61 C 62 63 C 64

( 6i;&3C&2;&4 61+63^62 + 64

61 C 62

(C3) -

SPAWN 61 C SPAWN 62

(SI) 61; (62; 63) = (61; 62); 63 (S2) (61; 63) + (62; 63) = (61 + 62); 63

(El) b = e\b (E2) &;<•=&

(Jl) 61 C 61+62, 62 C 61+62 (J2) 6 + 6 C 6

Figure 1.3: The pre-order C with equivalence =

for then e\ and e2, and hence also f\ and /2, can be assigned the type t =

int -+ftl2 int. The above judgements can be obtained provided that &i2

approximates b\ and 62, to be written 61 C 612 and 62 C 612, as we can then

apply the subeffecting rule

A\- e : tkb

Ah e : tkb'

if 6 C &'.

When effects are sets of atomic effects, as in [53, 49], the relation C is just

subset inclusion — hence OR (i.e. set union) is a least upper bound operator.

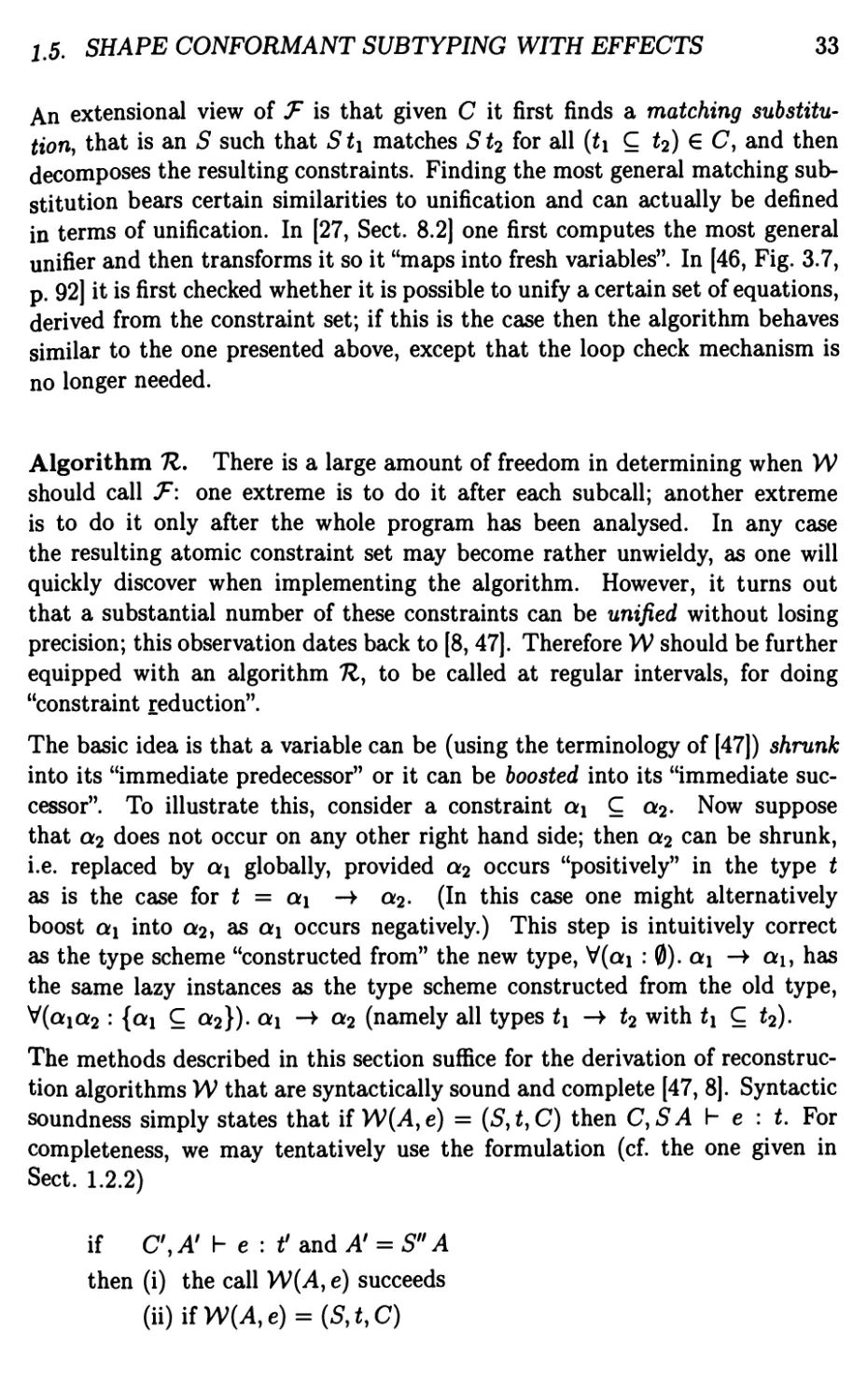

For behaviours as in [36] a more complex definition is needed. The aim is that

C should be a simulation in the sense that if 61 C 62 and 61 =>ai 6^ then also

62 =>°2 &2 for some a2 and 62, where 6^ C 62 and additionally a\ and a2 are

"suitably related". As shown in [37] the largest such simulation is undecidable,

but there exists a decidable relation C which is a simulation. This relation

can be syntactically defined as in Fig. 1.3; here 61 = 62 is a shorthand for

b\ C 62 and 62 C 61. We see that C is a pre-order (P1,P2) and a congruence

with respect to the various constructors (Cl,C2,C3); and that OR also in this

case is a least upper bound operator (J1,J2 using C2) — so in Example 1.1 we

can use &i2 = 61+ 62. As expected it holds (modulo =) that SEQ is associative

(Si) with e as neutral element (E1,E2).

22

CHAPTER 1. INTRODUCTION

1.4 An Inference Algorithm for Effects

In this section we discuss how to implement the type and effect systems

described in Sect. 1.3. Our starting point is algorithm W as defined in Fig. 1.2

and we shall see that W can be extended to infer not only types but also effects

(including those occurring within types).

Remark We shall use the name W for all algorithms that are "extensions"

of the algorithm W presented in [24], so as to emphasise their generic nature;

it should be clear from context which version we refer to and in Chap. 4 we

present the algorithm W of this book. Q

A first step is to modify the function U to operate on annotated types, and

this naturally raises the question of how to unify two effects. We can no longer

proceed by decomposition since this method is valid only for "free algebras".

To see this, consider the equation (on the effects of [49])

write(r, int) U write(r, int) = 0 U ft

Since 0 is the neutral element of U it is clearly possible to unify this equation,

even though the leftmost part of its "decomposition" is write(r, int) = 0 which

cannot be unified.

One may suggest to use results from unification theory, a survey of which is

given in [45], to solve equations such as

write(r, int) = ft U ft.

Unfortunately, for non-free algebras one cannot expect the existence of exactly

one most general unifier: in the example above, [ft »->• write(r, int), ft »->• 0]

and [ft !->• write (r, int), ft »->• 0] are both unifiers but there does not exist

a unifier having both as instances. Letting a call to U return a (potentially

infinite) tuple of unifiers does not seem attractive; instead we postpone the

unification of the effect equations. This motivates that W should accumulate

a set of effect constraints; in the following we therefore consider a version of

W returning a 4-tuple of the form (5, t, 6, C) where C is a constraint set.

1.4.1 Effect Constraints

The algorithm presented in [19], implementing what is basically the system of

[23], generates equational constraints of the form b\ = b2 where each k is a

union of effect constants and effect variables.

I A. AN INFERENCE ALGORITHM FOR EFFECTS 23

The system of [19] does not employ subeffecting and therefore many programs

are not typeable [48, p. 247]. The remedy [48] is to generate inclusion

constraints — in the future development we shall deal with this kind of constraints

only. The example in Sect. 1.3.4 suggests that to achieve the benefits of

subeffecting it is sufficient to use it immediately before applying the rule for function

abstraction; hence the key modification is to let the clause for function

abstraction generate a constraint expressing that the effect annotating the function

type approximates the effect of the body:

W(i4,f n x =* e0) =

let a be fresh

let (S0,t0,b0,Co) = W{A[x : a],e0)

let P be fresh

in (S0, So a -** *o,e,C0U{6o C /?})

An extra benefit of this modification is that the entities produced by the above

clause have a certain "simple" form in that they contain variables, rather than

more complex effects, at certain places: on the right hand side of the new

constraint, and in the top-level annotation of the type. In general we say that

a constraint set is simple if all its elements are of the form b C /?, and that

a type is simple if it does not contain effects that are not variables. When

writing the remaining clauses of W we shall maintain the invariant that all

types and constraints are simple — to do so we must additionally require that

also all substitutions are simple, that is they map type variables into simple

types and effect variables into effect variables, for then the application of a

simple substitution to a type or a constraint set will preserve the property of

being simple.

It is trivial to write a version of U that works on simple types and that returns

a simple substitution (or fails); a key clause is

W(*i V t2,t[ S t'2) =

let So = [/?' ^ p]

\etSl=U(S0tuS0t,l)

letS2 = W(SiSo*2,SiSo*'2)

in S2 Si So

With U properly defined, it is straightforward to modify most clauses of W to

deal with effects and constraints; for function application this results in

W(Aei e2) =

24

CHAPTER 1. INTRODUCTION

let(Si,til6ilCi) = W(illci)

let(52,^2,62,C2) = W(5M,e2)

let a and /? be fresh

let S = U(S2tut2 -V a)

let b = (SS2bx) SEQ (Sb2) SEQ (Sf3)

in (S S2SuSa,b,S S2CiU SC2)

The treatment of type schemes and polymorphism will be postponed until

Sect. 1.4.3 after we have addressed the issue of solving constraints.

1.4.2 Solving Effect Constraints

We say that a substitution 5 solves (or satisfies) C if Sb\ C Sb2 for all

(&i C b2) £ C. For simple constraints, finding a solution is particularly easy;

below we sketch the approach of [49] which is transferred to behaviours in [30].

The first step is to ensure that the same effect variable does not occur on more

than one right hand side. To obtain this goal we replace {(&i C /?), • • •, (bn C

/?)} (with n > 0) by (&i OR • • • ORbn C /?) and the validity of this

transformation follows from OR being a least upper bound operator (cf. Sect. 1.3.4).

The resulting constraint set C is then solved using the algorithm given below

(where the nondeterminism introduced in step 1 may be resolved e.g. by

imposing a linear order on effect variables). We use the symbol C to denote the

substitution that is returned.

1. if C is empty then return Id

else split C into C and (b C /?);

2. let recursively C be the result of solving C;, and let b' = C'ft;

3. if b' contains an effect with a type component where f3 is free

then abort

else let S0 = [/3t-+(pUb%

4. return S0 C7.

It turns out [49] that C is a principal solution to C in the sense that if S

also satisfies C then S = SC. Keep in mind, however, that in general C

is not a simple substitution and hence its only use within W is to control

polymorphism (as we shall see in Sect. 1.4.3) and effect masking.

1.4. AN INFERENCE ALGORITHM FOR EFFECTS

25

Remark To prove that C actually satisfies the constraints in C, first observe that

we can inductively assume that C" is satisfied by C and hence also by C = Sp C.

To show that C satisfies (b C 0) we perform the calculation (recall that b' = t?b)

Cb=[0^(0\Jb')]b' C 0Ub, = S00 = S0Ci0 = C0

where the inclusion follows since (due to 3) there exists b" with /3 £ FV(b") such

that either b' = b" or b' = /?U&", and where the second last equality is justified since

we can inductively assume that the domain of C is the set of right hand variables

of C and thus does not contain (3.

As can be seen from step 3, the system of [49] does not allow effects of "infinite

depth". The simplest known example of this phenomenon [49, p. 274] is where

the type tf of an input function / is forced (via a conditional) to match the

type of a function which (among other things) initialises a location to /. Hence

tf is given by int -*6/ int where bf contains the effect init(r, tf).

The system of [36] is able to cope with infinite depth as it has an explicit

construct REC/2.6 for recursion. This enables us to replace step 3 above by 3;

below, yielding an algorithm [30] that is able to solve an arbitrary set of simple

constraints. Unfortunately, due to the richer algebraic structure of behaviours,

we cannot expect the existence of a principal solution.

3' let S0 = [p i-> (RECp.b')]

Remark As above, the correctness of C boils down to the claim that Cb C C /?;

this now follows from the calculation (still with b' = C b)

Cb=[0^(REC0.b')}b' C REC0.b, = S0 0 = SpCi0 = C0

where the inclusion is a consequence of the axiomatisation of the construct REC/2.6.

1.4.3 Downwards Closure for Polymorphism

We shall next discuss how to reconstruct types and effects in the presence of

polymorphism. The first step is to allow constraints in type schemes which

thus take the form V(f : C). t where we use the letter 7 to range over a's and

/?'s and p's as appropriate. The clauses for constants and identifiers now read

W{A,c) =

\et(t1C) = INST(Ty?a(c))

26

CHAPTER 1. INTRODUCTION

in (Id,*,e,C)

W(A,x) =

let (t,C) = INST(A{x))

in (Id,*,e,C)

where the function Typa is as Typ except that it returns type schemes with

simple components: with effects as in [53] (cf. Sect. 1.3.1) we thus have e.g.

Typa(ref) = V(a/?: ({a} C /?)). a -V a ref.

Also the function INST is modified so that it returns not only a type but also

a constraint: it maps a type t into (t, 0); and it maps a type scheme V(7 : C). t

into (Rt,RC) where R = [7 *-¥ 71 ] with j[ fresh variables.

For a let-expression, we tentatively write the clause (with some holes to be

filled in later)

W(i4,let x = e\ in e2) =

let(Si,t1,61,C1) = W(>l,c1)

\etU = ---FV(tl)--

let DV = FV(Sl A) UFVih)

letNG=-DV-'

let 7 = U \ NG

let q = {(b C /?) € Ci I FV(6, /?) n {7} / 0}

let^! =V(^:C|). ii

let (52, t2,62, C2) = W((Si il)[x : t8X], e2)

in (S2 Sj, t2, (S261 5^(? 62), 52Ci U C2)

and make a sequence of remarks:

1. the set of bound variables 7 is taken from a universe U that must include

at least FV(ti) but typically also some subset of FV(C\)\

2. in the spirit of Sect. 1.3.1, we consider as dangerous (belonging to DV)

the variables free in the environment or in the effect;

3. the set of variables that cannot be generalised {NG) must clearly contain

DV but also some other variables may have to be included, as explained

below;

4. there is no point in letting ts\ contain constraints where no variables are

bound, since for such constraints INST will just make an identical copy;

14. AN INFERENCE ALGORITHM FOR EFFECTS

27

5. all constraints in C\ (rather than just C\ \C[) are returned by the clause,

in order to ensure that they are taken into account also if x does not occur

in e2-

To motivate a proper definition of NG, we again consider the program (1)

from Sect. 1.2.3 where the identifier r is defined as ref (fn x=>x) and thus

at run-time bound to a location holding values of type a -> a; recall that a

semantically sound inference system does not allow to generalise over a. It is

easy to verify that (for fresh type and effect variables) we have

W([],fnx=*x) = (Id,a ->*■ a,e,{e C &}) and

W([],ref) = (Id,a/ -V a'ref,£,{{a'} C /J1})

and hence the definition of r will be processed by W as follows:

W([],ref (fnx=*x)) =

([a; »-► (a -*A a), • • • ], (a -*"« a) ref, /?',

{eC/?ei{a,A} C /?'}).

We see that DV is the singleton set {/?'} and in particular does not contain a

which must be part of NG.

To understand what is going on, observe that for any solution S to the

constraint ({a, f3e) C /?') it will hold that S ff contains 5 a as a subpart. We can

therefore view a as a "sub-variable" of /?', so it seems right to demand that

if the latter is included in NG then also the former is included. One way of

formalising this intuition is to define NG as Z)VCl^, where in general the set

Xc^ — the downwards closure of X with respect to C — is the least set Y

that contains X and that has the property that if (b C /?) belongs to C and

0€Y then also FV{b) C Y.

Algorithms for effect reconstruction, able to deal with polymorphism, are

presented in [53, 49, 1]. Different as these approaches may be, in all cases the

way of computing (what corresponds to) NG essentially boils down to taking

the downwards closure of DV; an important insight is that with C as defined

in Sect. 1.4.2 we have that Xc^ = FV(C(X)). Moreover, in [53, 49] also the

universe is defined using downwards closure: U = FV(ti)ca.

The problem of lack of principality

When the effect constraints admit a principal solution (cf. Sect. 1.4.2), the

techniques outlined above are sufficient for the development of an algorithm

28

CHAPTER 1. INTRODUCTION

that is syntactically sound and complete [49]. When this is not the case, as for

behaviours, additional complications arise. To see this, consider an expression

e of the form

let / = co in (/ 0 ; /)

and assume that

W([],e0) = (---,int -V int,e,C)

where C "restrains" the value of /?. Since DV and hence also NG is empty,

we can wlog. assume that the body of e will be analysed in an environment

where / is bound to the type scheme V(7 : C). int -V int with f3 £ {7} =

FV(C); and for each occurrence of / a fresh copy of 7 will be created. We use

primed, respectively doubly primed, letters to denote entities created by the

first, respectively second, copy; it will then hold that

W([],e) = (•••,int -V" int,/?',CuC'UC"). (7)

Let S" and S" be arbitrary solutions to C. Then it is clearly possible to combine

them into a substitution S that solves (C U C U C") and where additionally

Sff = S'P and S/3" = S"/3 (stipulate S7' = S'7 and S7" = S"7 for each

7 £ {7}). If W is to be syntactically sound, we may therefore reasonably

expect from (7) that [] h e : int ->S0" int&S/?', that is

[]he: int -+s"^ intkS'P.

For this judgement to be derivable, S" (3 and S" f3 must both be instances of

a common behaviour (perhaps after applying a subeffecting step) and in the

absence of principality this will not always hold. The remedy of [1], which

improves on [30] in deriving a reconstruction algorithm for the type system

presented in [36], is to generate so-called "S-constraints"; in the example above

these constraints will record that the solution to C" as well as the solution

to C" must be an instance of the solution to C. The resulting algorithm is

syntactically sound and complete; unfortunately it is an open problem whether

there exists an algorithm for solving the generated S-constraints.

1.5 Shape Conformant Subtyping with Effects

It is actually possible to obtain a more precise analysis than the one provided

by the techniques from Sections 1.3 and 1.4. To justify this claim, we again

1,5. SHAPE CONFORMANT SUBTYPING WITH EFFECTS 29

consider Example 1.1 from Sect. 1.3.4. Without subeffecting this example

cannot be typed, but the price to pay for using only subeffecting is that then

all occurrences of f\ in e are indistinguishable from /2, and vice versa. This

might result in e being assigned a less precise type (or perhaps even inhibit

the typing of e), and is bad enough in itself if a "sticky" variant is used where

also the typings of subexpressions are of interest.

To increase the precision of the analysis we may use subtyping, cf. the

considerations in [50, Chap. 5]. This method allows f\ to be bound to t\ and f2 to be

bound to t2 when typing e; then *i and t2 are approximated to t immediately

before the rule for conditional is applied, using the subtyping rule

Ahe: t'kb

The subtype relation tx C t2 states that *i is a more precise type than t2 and

is naturally "induced" by the subeffecting relation via structural rules such as

t\ C h b C b' t2 C t'2

t\ -»6 t2 C t\ -»6' t'2

(note that C is contravariant in the argument position). For t\ C t2 to hold

it must thus be the case that these types are identical if their annotations are

removed; accordingly we shall use the term shape conformant subtyping for

this notion of subtyping.

In this section we shall extend the techniques from Sections 1.3 and 1.4 to

implement shape conformant subtyping rather than just subeffecting. It is

thus natural to see if we can reuse some of the ideas and techniques that were

originally developed for the purpose of adding subtyping to purely applicative

programming languages (not dealing with effects), as is done in [27, 9, 8] (only

briefly touching upon polymorphism) and in [47, 17] (treating polymorphism

in full generality). In Sections 1.5.1 and 1.5.2 we embark on outlining some of

the main insights of these papers.

1.5.1 Inference Systems for Atomic Subtyping

We shall restrict our attention to atomic subtyping, induced by a number of

inclusions between base types via structural rules (in particular saying that

*i -* t2 C t\ -> t'2 if and only if t\ C t\ and t2 C t'2). It is natural to specify

e.g. int C real; and a type system for object oriented languages must be able

to deal with inclusions such as K\ C K2 where K\ and K2 are class names.

30

CHAPTER 1. INTRODUCTION

A nice property of atomic subtyping is that if *i C t2 then *i "matches" t2 in

the sense that they have the same "tree structure", even though the leaves may

differ.

A main challenge is to formulate subtyping in a way that allows to state some

sort of principal typing property (cf. Sect. 1.2.2). A first naive attempt is to

conjecture that given typeable e there exists t such that [] h e : t' holds if

and only if S t C if for some S. To disprove this conjecture, assume that

int C real are the only base types and consider the twice function from

Sect. 1.2. It can be assigned the type tri = (real -> int) -> (real -> int)

which is in fact a minimal typing of twice: if *o is a type strictly less than t„ it is

not possible to derive [] h twice : t0l since *o will take the form (*i -> £2) -*

(real -> int) with t\ = int or t2 = real. Also ta = (int -> int) ->

(int -> int) and trr = (real -> real) -> (real -> real) are minimal

typings of twice. For a type t that is principal in the sense conjectured it

must thus be the case that tril ta, and trr are instances, but then clearly also

tir = (int -> real) -> (int -> real) will be an instance of t even though

fa/tce cannot be assigned the type tir.

The recipe for obtaining principality is to introduce type constraints of the form

t\ C t2\ in particular such constraints may occur in type schemes. Principal

typing of e now amounts to the existence of a type scheme ts such that t' is

a type of e if and only if t' is a "lazy instance" [8] of ts, where we say that a

type t' is a lazy instance of a type scheme V(a : C). t if there exists S with

Dom(S) C {a} such that S satisfies C and such that St C t'. We may expect

twice to have the principal type scheme

V(aa': {a' C a}), (a -» a') -» (a -» a'). (8)

Constraints in the inference system. To construct these extended type

schemes in the inference system, constraints must be present in judgements.

We shall write C, A h e : 2 to denote that the expression e (in environment

j4) has type t, provided that the type variables in t are related as indicated by

the constraints in C. Then the subtyping rule reads

C,A\- e : t

— if C h t C if

C,A\-e:t'

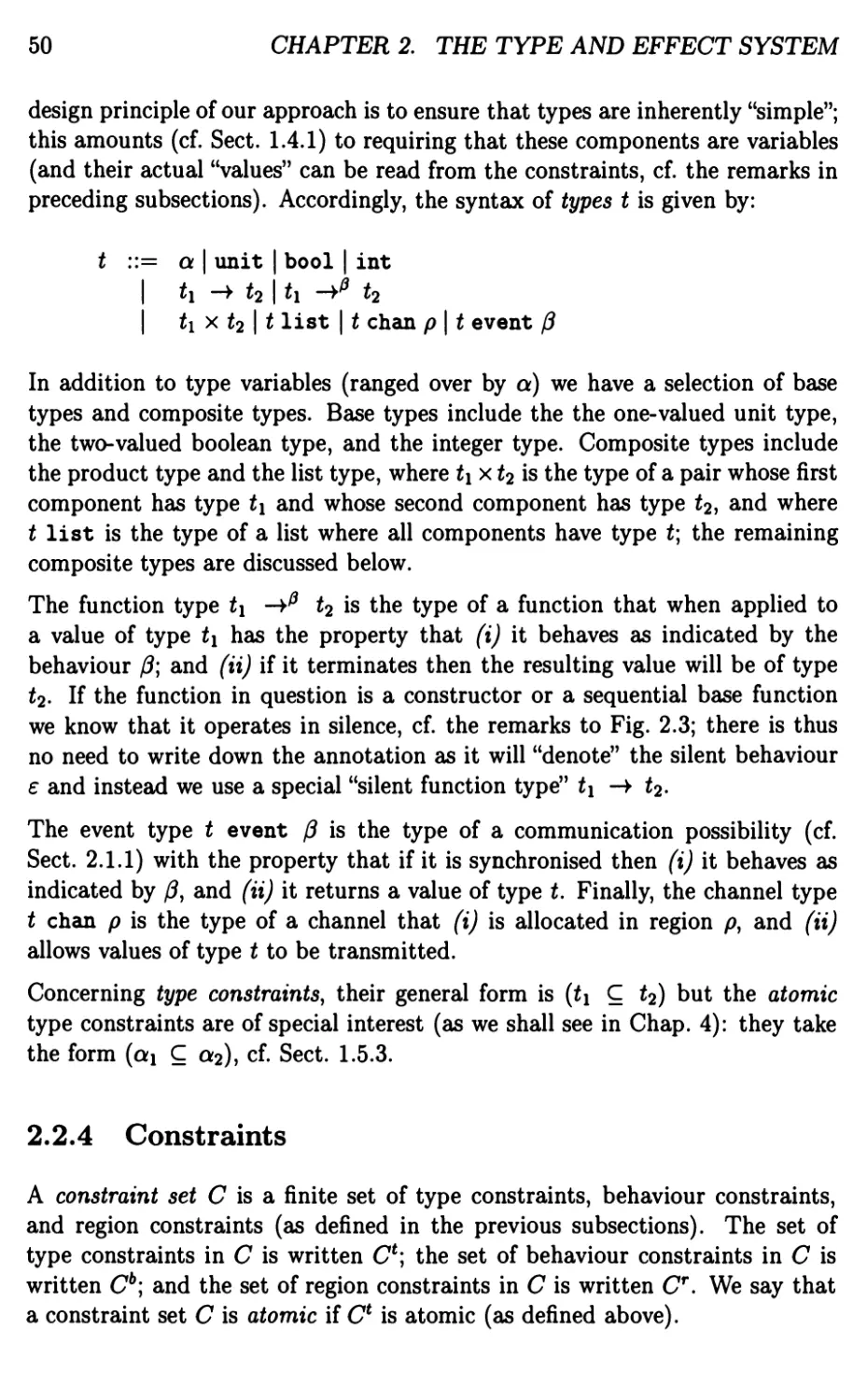

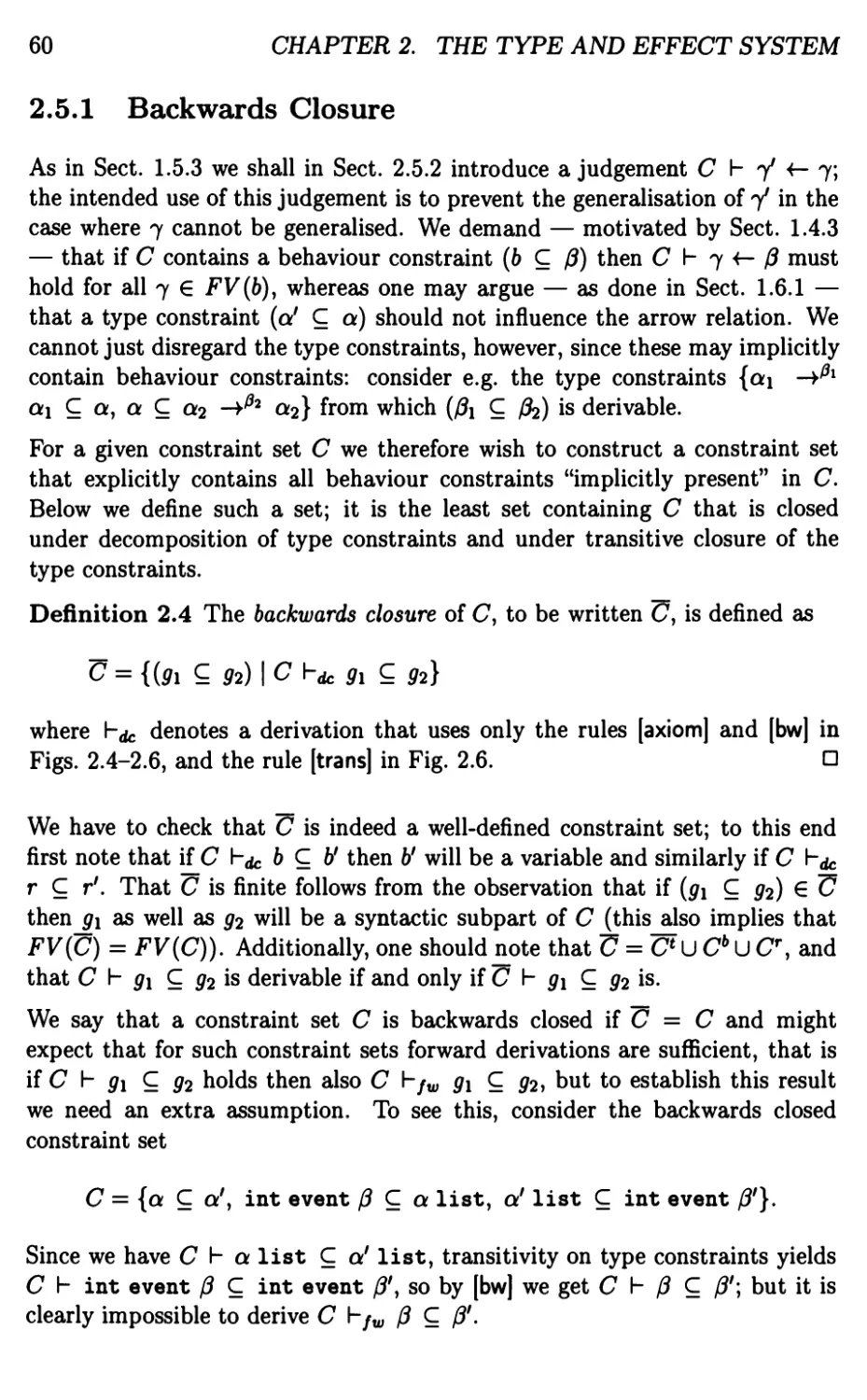

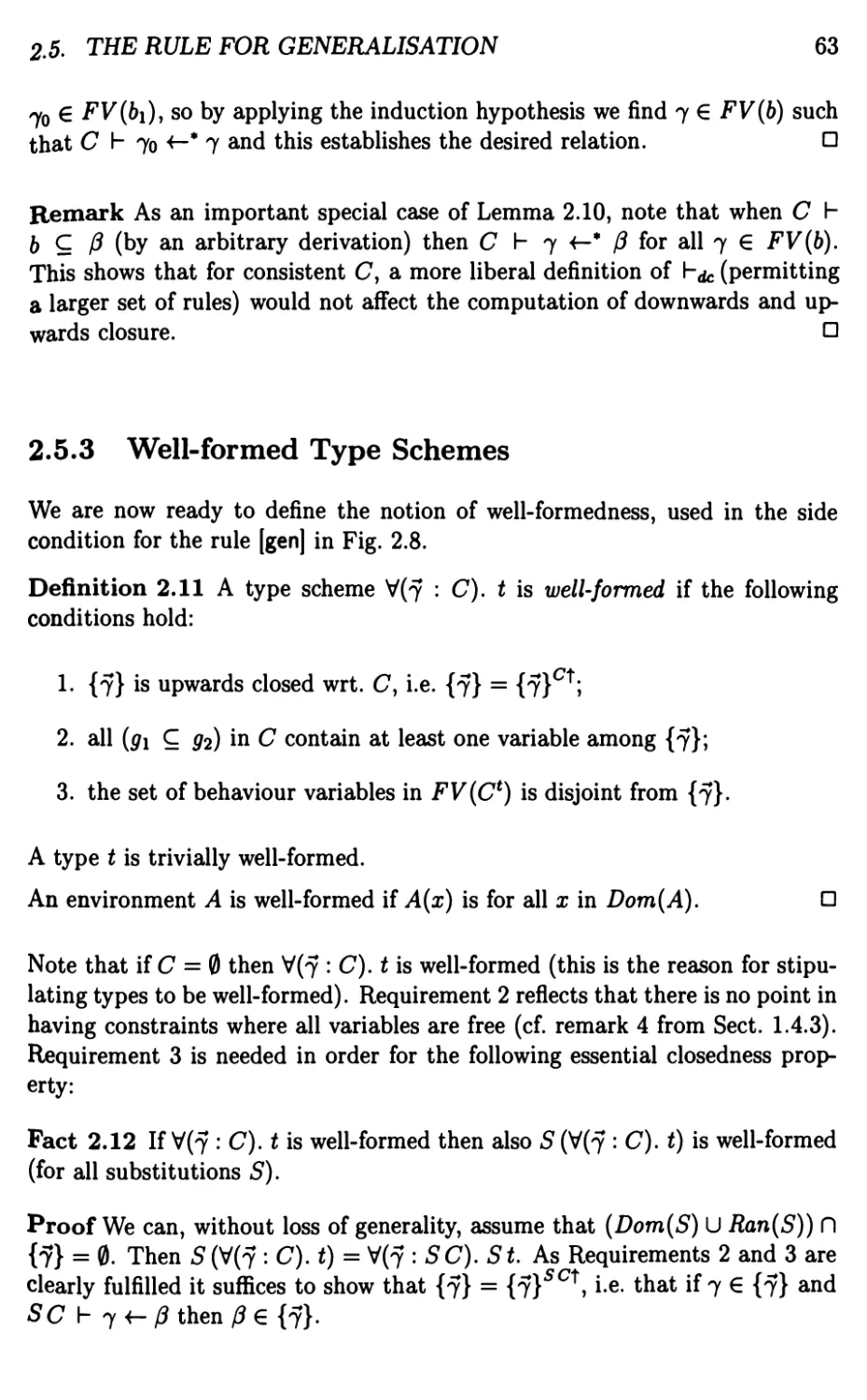

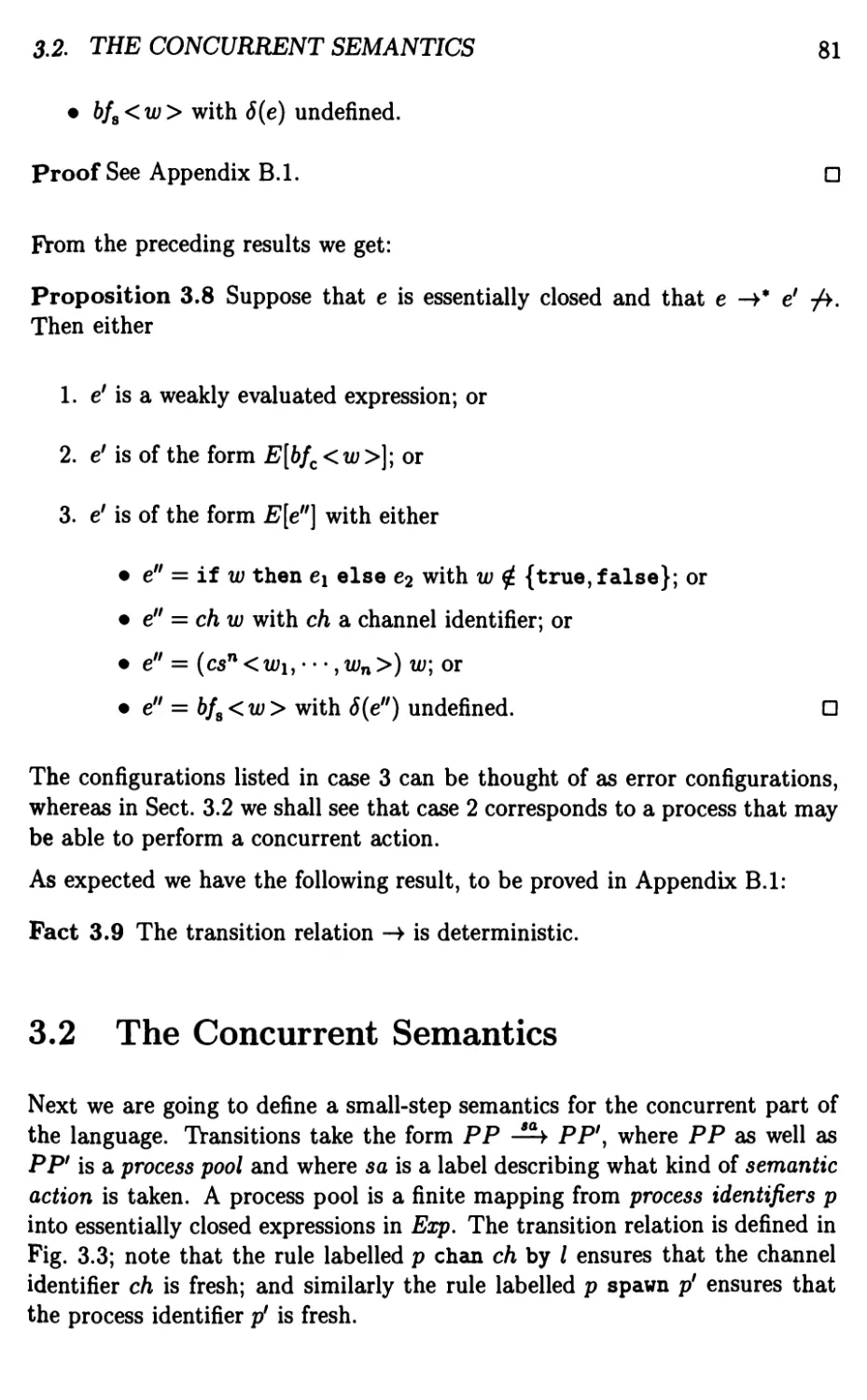

where the judgement C \- t\ C t2 formalises that *i C t2 is a consequence of