Many of the designations used by manufacturers and sellers to distinguish their prod-

ucts are claimed as trademarks. Where those designations appear in this book, and Ad-

dison-Wesley was aware of a trademark claim, the designations have been printed in

initial capital letters or all capital letters.

The author and publisher have taken care in preparation of this book, but make no

expressed or implied warranty of any kind and assume no responsibility for errors or

omissions. No liability is assumed for incidental or consequential damages in connec-

tion with or arising out of the use of the information or programs contained herein.

The source code in this book was developed by Mark J. Kilgard and is copyright ©

1996 by Mark J. Kilgard. The author's programs are not in the public domain, but they

may be freely distributed without licensing fees. (See page 459.) These programs are

provided without guarantee or warranty, expressed or implied.

Library of Congress Cataloging-in-Publication Data

Kilgard, Mark J.

OpenGL programming for the X Window System / Mark J. Kilgard.

p. cm.

Includes bibliographical references and index.

ISBN 0-201-48359-9

1. Computer graphics. 2. X Window System (Computer system)

3. OpenGL. I. Title.

T385.K347 1996

006.6'dc20 96-23162

CIP

Copyright © 1996 by Mark J. Kilgard

All rights reserved. No part of this publication may be reproduced, stored in a retrieval

system, or transmitted, in any form or by any means, electronic, mechanical, photo-

copying, recording, or otherwise, without the prior written permission of the publisher.

Printed in the United States of America. Published simultaneously in Canada.

Sponsoring Editor: Mary Treseler

Project Manager: John Fuller

Production Assistant: Melissa Lima

Cover design: Jean Seal

Text design: Kim Amey

Set in 10-point Sabon by Argosy

Text printed on recycled and acid-free paper.

ISBN 0-201-48359-9

5 6 7 8 9 10 MA 02010099

5th Printing June 1999

Addison-Wesley books are available for bulk purchases by corporations, institutions,

and other organizations. For more information please contact the Corporate, Govern-

ment, and Special Sales Department at (800) 238-9682.

For Sharon and Dana

vi CONTENTS

1.3 GLX: The Glue Between OpenGL and X 23

1.3.1 A Quick Survey of GLX 24

1.3.2 The GLX Protocol 28

1.4 The GLU Library 29

1.5 An Example Xlib-based OpenGL Program 30

1.5.1 Initialization 31

1.5.2 Example: glxsimple.c 31

1.5.3 Scene Update 36

1.5.4 Compiling the Example 38

1.6 Comparing OpenGL to PEX 39

1.6.1 Subsets and Baselines 39

1.6.2 Programming Interfaces 40

1.6.3 Rendering Functionality 40

1.6.4 Display Lists 41

1.6.5 Portability 41

1.6.6 Window System Dependency 42

2 Integrating X and OpenGL

2.1 A More Involved Xlib Example 44

2.1.1 Initialization 44

2.1.2 The Dinosaur Model 55

2.1.3 Lighting 65

2.1.4 View Selection 68

2.1.5 Event Dispatching 69

2.2 OpenGL and X Visuals 73

2.2.1 The Visuals Whose Existence Is Guaranteed by GLX 75

2.2.2 Example: glxvisuals.c 75

2.2.3 glXChooseVisual and glXGetConfig 81

2.3 More about Colormaps 83

2.3.1 Colormap Sharing 83

2.3.2 Managing Multiple Colormaps 83

2.3.3 Initializing Writable Colormaps 85

2.4 Using GLX Contexts 85

2.4.1 Sharing Display Lists 86

2.4.2 Binding to GLX Contexts 87

2.4.3 Copying Context State 89

2.5 Rendering X Fonts with OpenGL 89

2.6 Rendering OpenGL into Pixmaps 91

2.6.1 Generating Encapsulated PostScript 94

2.7 Mixing X and OpenGL Rendering 100

2.8 Debugging Tips 102

2.8.1 Finding OpenGL Errors 103

2.8.2 X11 Protocol Errors 104

2.8.3 Specialized OpenGL Debugging Tools 106

3 Using OpenGL with Widgets ...................... 109

3.1 About the X Toolkit and Motif 109

3.2 Using OpenGL Drawing Area Widgets 111

3.2.1 A Short OpenGL-specific Widget Example 112

3.3 Specifics of the OpenGL Drawing Area Widgets 121

3.3.1 The Motif and Non-Motif OpenGL Widget Differences 121

3.3.2 OpenGL Widgets and the Widget Class Hierarchy 122

3.3.3 OpenGL Widget Resources 123

3.3.4 OpenGL Widget Advice 127

3.4 A More Involved Widget Example 130

4 A Simple Toolkit for OpenGL ..................... 143

4.1 Introducing GLUT 143

4.1.1 A Short Example 144

4.1.2 User Input and Other Callbacks 148

4.1.3 Menus 153

4.2 More GLUT Functionality 155

4.2.1 Subwindows 155

4.2.2 Window Management 156

4.2.3 Controlling the Cursor Shape 158

4.2.4 Color Index Mode 160

4.2.5 Other Input Device Callbacks 161

4.2.6 State Retrieval 162

4.2.7 More Menu Management 162

viii CONTENTS

4.3

4.4

4.2.8 Font Rendering 164

4.2.9 Geometric Shape Rendering 166

4.2.10 Overlay Support 168

Advice and Hints on Use 171

4.3.1 Callback Advice 171

4.3.2 Window Management Advice 172

4.3.3 Current Window/Menu Management Advice 172

4.3.4 Miscellaneous Advice 173

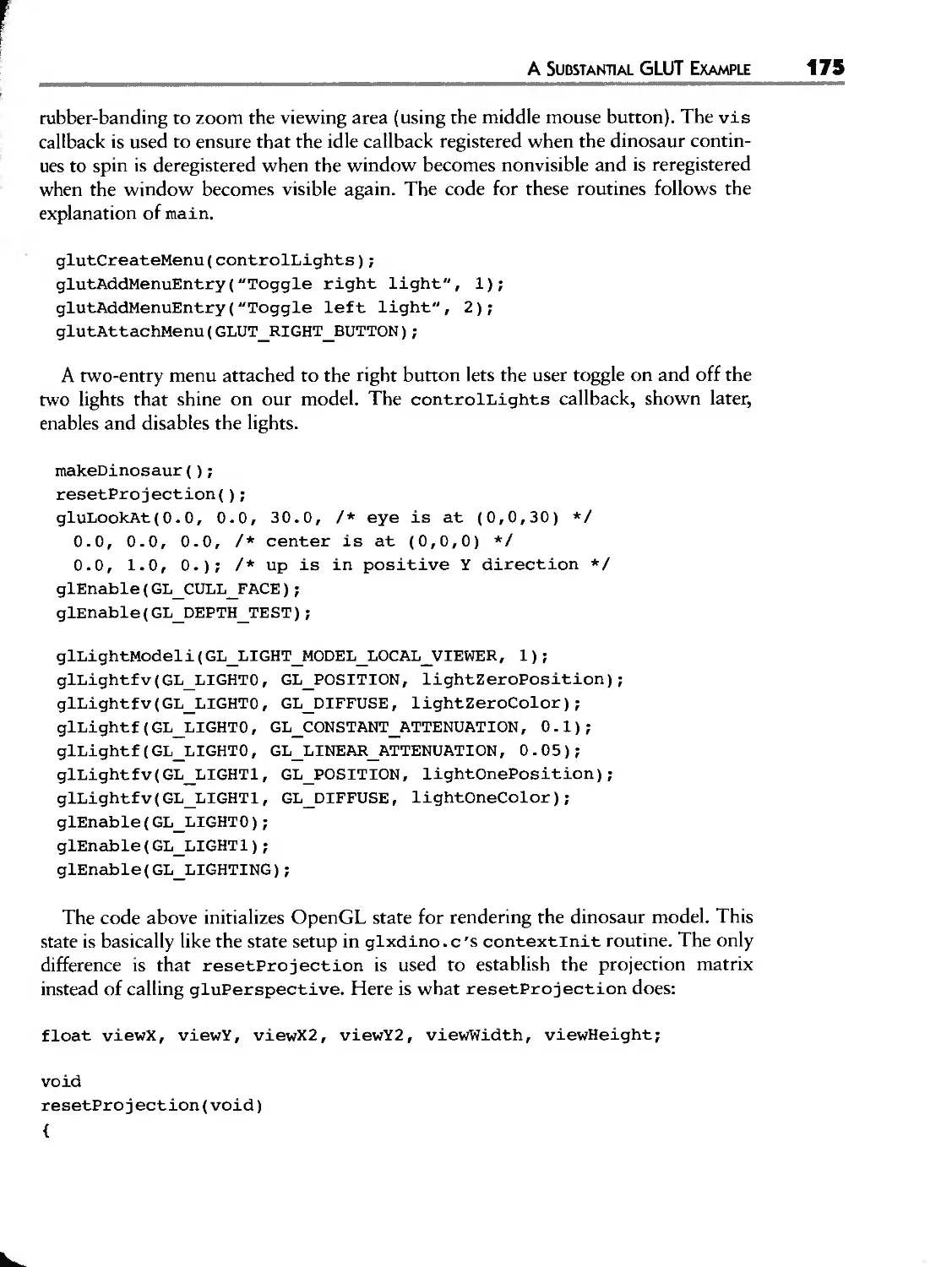

A Substantial GLUT Example 173

4.4.1 Establishing an Overlay for Rubber-Banding 176

4.4.2 Normal Plane and Overlay Rendering 177

4.4.3 Spinning and Rubber-Banding 180

4.4.4 Suspending Animation and Pop-up Menus 184

5 Exploring OpenGL with GLUT ..................... 187

5.1 Exploring Lighting with GLUT 188

5.1.1 The OpenGL Lighting Model 188

5.1.2 Using OpenGL's Lighting Model 189

5.1.3 Example: lightlab.c 193

5.2 Exploring OpenGL Texture Mapping with GLUT 201

5.2.1 Using Textures with OpenGL 202

5.2.2 Fun with Textures 207

5.2.3 More on Texture Mapping 209

5.2.4 Example: mjkwarp.c 209

5.3 Exploring Blending Operations with GLUT 216

5.3.1 Uses for Blending 218

5.3.2 Antialiasing through Blending 219

5.3.3 Fog and Atmospheric Effects 221

5.3.4 Hints 222

5.3.5 Example: blender.c 222

5.4 Exploring Images and Bitmaps with GLUT 226

5.4.1 The Pixel Path 226

5.4.2 Bitmaps 232

5.4.3 Reading and Copying Pixels 233

5.4.4 Texturing as the Merging of Geometry and Imagery 234

5.4.5 Example: splatlogo.c 235

5.5 Exploring Curves and Surfaces with GLUT 240

5.5.1 Why Curves and Surfaces? 241

5.5.2 Evaluators 242

5.5.3 The GLU NURBS Routines 244

5.5.4 More Information 247

5.5.5 Example: molehill.c 249

5.6 Exploring the OpenGL Extensions with GLUT 252

5.6.1 OpenGL Extension Naming 252

5.6.2 Available Extensions 253

5.6.3 Extension Interoperability 256

5.6.4 GLX Extensions 257

5.6.5 Extensions as OpenGL's Future 257

5.6.6 The Polygon Offset Extension 257

5.6.7 Example: origami.c 260

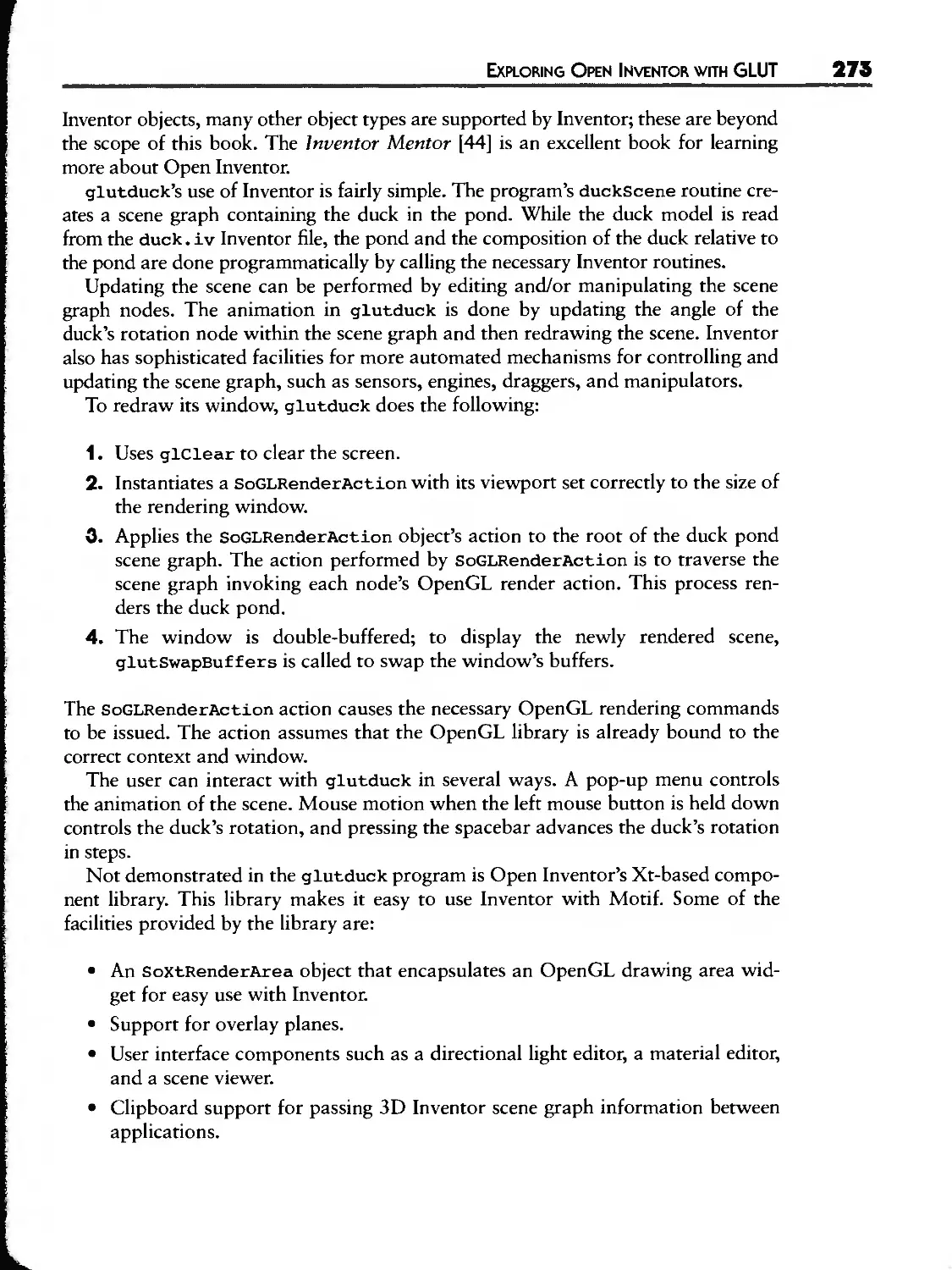

5.7 Exploring Open Inventor with GLUT 268

5.7.1 Procedural versus Descriptive 268

5.7.2 Open Inventor in Brief 269

5.7.3 Open Inventor with GLUT 271

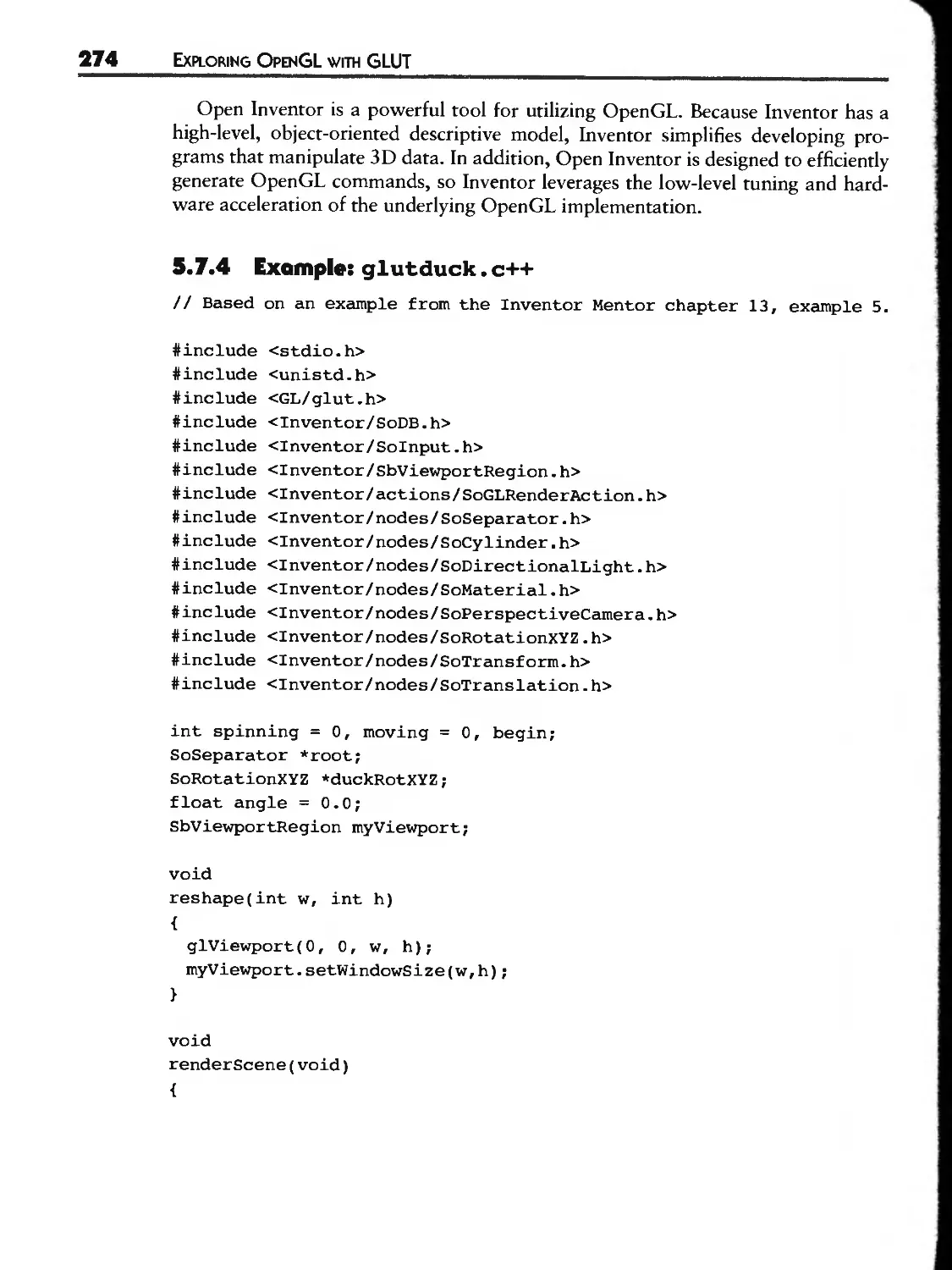

5.7.4 Example: glutduck.c++ 274

6

Advanced Topics

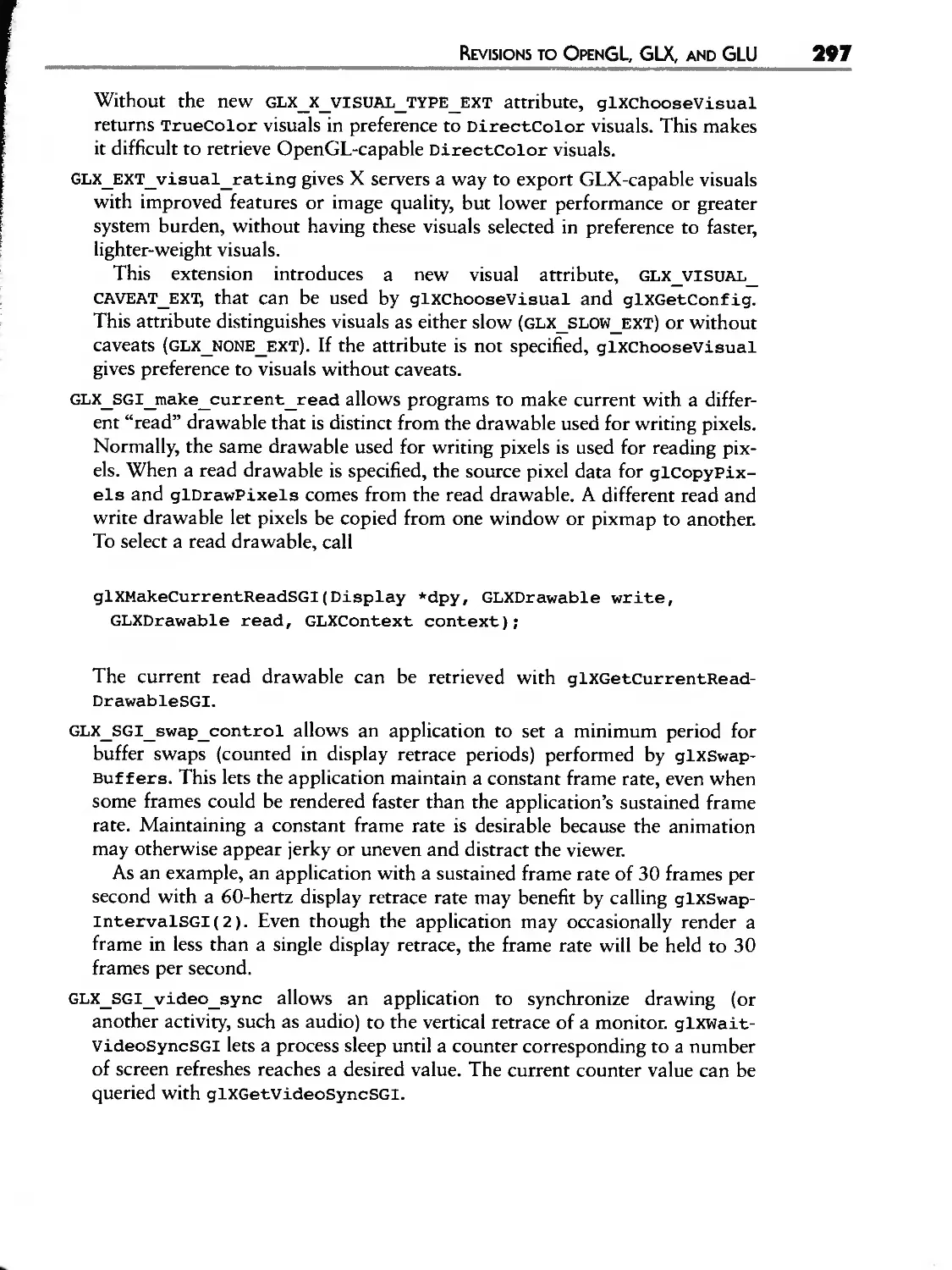

6.1 Revisions to OpenGL, GLX, and GLU

6.1.1 OpenGL 1.1

6.1.2 GLX 1.1 and GLX 1.2

6.1.3 GLU 1.1 and GLU 1.2

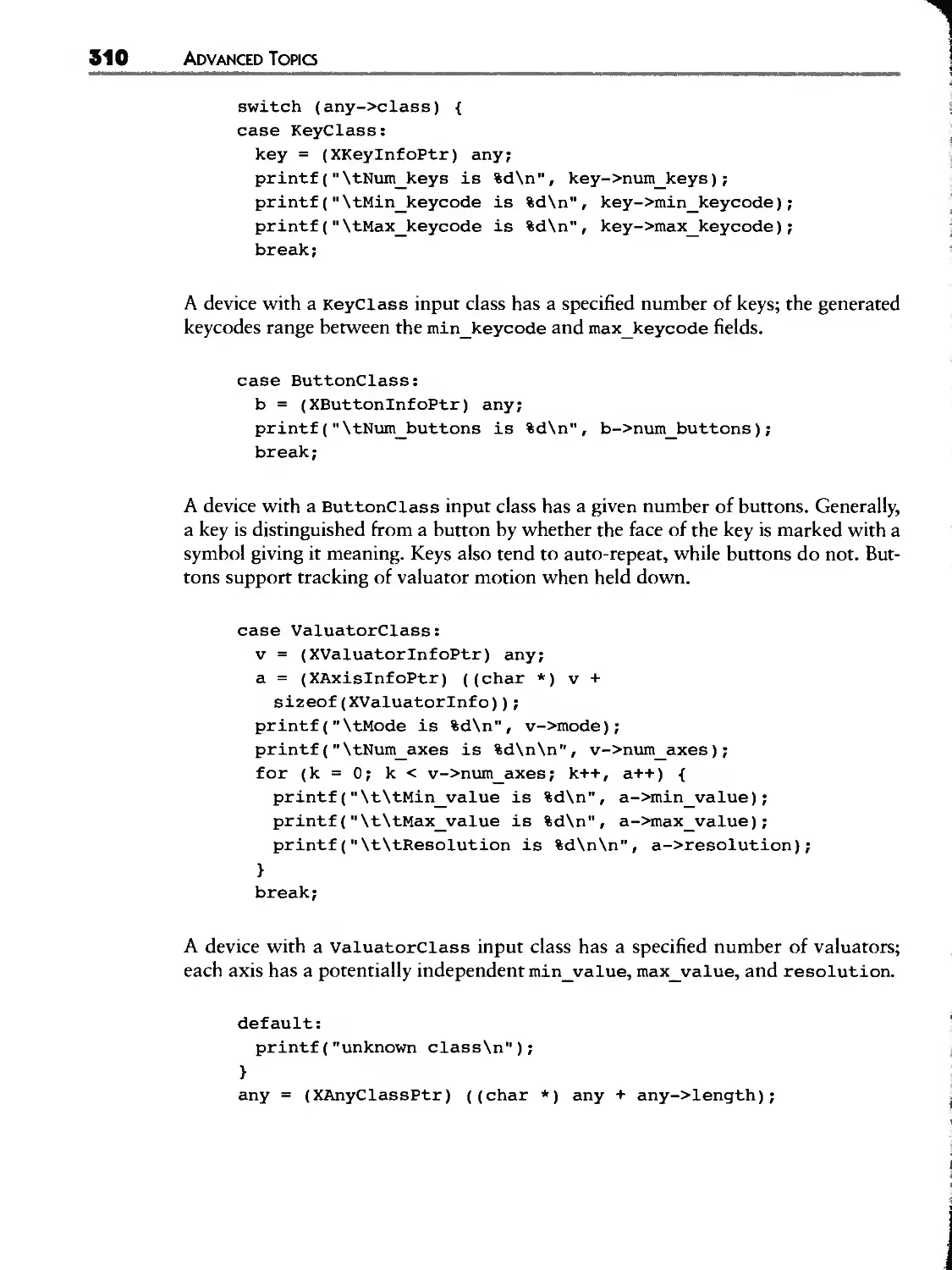

6.2 X Input Extension

6.3

6.2.1

6.2.2

6.2.3

6.2.4

6.2.5

6.2.6

6.2.7

6.2.8

6.2.9

Using

6.3.1

6.3.2

6.3.3

6.3.4

6.3.5

6.3.6

.............................. 281

Querying the Extension

Types of Extension Devices

Querying Supported Devices

Sample Devices

Opening and Selecting Events from a Device

Other X Input Extension Features

An Xlib-based OpenGL Example

X Toolkit Support for Extension Events

Motif-based OpenGL Examples

Overlays

Utility of Overlays

The Serrer Overlay Visuals Convention

An SOV Programming Interface

Listing Overlay Visuals: sovin«o, c

An Xlib-Only Overlay Example

Vendor Support for Overlays

282

282

294

300

303

304

305

306

311

315

318

318

328

329

345

345

347

349

354

357

362

6.3.7 Usage Considerations 363

6.3.8 Using Overlays with Motif Menus 365

6.4 Portability and Interoperability 367

6.4.1 Portability Issues 367

6.4.2 Interoperability Issues 369

6.5 Hardware for Accelerating OpenGL 373

6.5.1 The Graphics Pipeline 374

6.5.2 A Taxonomy for Graphics Hardware 375

6.5.3 Rendering Paths 376

6.5.4 Hardware for OpenGL Stages 378

6.5.5 Display Options 378

6.5.6 Rasterization 380

6.5.7 Transforming Geometry 383

6.5.8 Hardware for Window System Requirements 388

6.5.9 Graphics Subsystem Bottlenecks 391

6.6 Maximizing OpenGL Performance 392

6.6.1 Pipeline-based Tuning 393

6.6.2 Reducing OpenGL Command Overhead 395

6.6.3 Minimize OpenGL Mode Changes 397

6.6.4 Improving Transformation Performance 398

6.6.5 Improving Rasterization Performance 401

6.6.6 Improving Imaging Performance 403

6.6.7 Improving Texturing Performance 404

6.6.8 Constructing Application-specific Benchmarks 405

6.6.9 Beware of Standard Benchmarks 412

7 An Example Application ......................... 415

7.1 Running molview 416

7.2 The Molecule Data Structure: molview.h 418

7.3 Data File Reader: mol_file.c 420

7.4 Virtual Trackball: trackball.c 425

7.5 Molecule Renderer: render.c 432

7.6 Picking: pick.c 438

7.7 User Interface Initialization: gui_init.c 441

7.8 User Interface Operation: gui_run.c 449

A Obtaining GLUT, Mesa, and the Book's OpenGL Example Code ......................... 459

A.1 GLUT and the Book's Example Code 459

A.2 Obtaining Mesa 460

B Functional Description of the GLUT API ............... 463

B.1 Initialization 463

B.1.1 glutInit 463

B.1.2 glutInitWindowPosition, glutInitWindowSize 465

B.1.3 glutInitDisplayMode 465

B.2 Beginning Event Processing 466

B.2.1 glutMainLoop 467

B.3 Window Management 467

B.3.1 glutCreateWindow 467

B.3.2 glutCreateSubWindow 468

B.3.3 glutSetWindow, glutGetWindow 469

B.3.4 glutDestroyWindow 469

B.3.5 glutPostRedisplay 469

B.3.6 glutSwapBuffers 470

B.3.7 glutPositionWindow 470

B.3.8 glutReshapeWindow 471

B.3.9 glutFullScreen 471

B.3.10 glutPopWindow, glutPushWindow 472

B.3.11 glutShowWindow, glutHideWindow, glutIconifyWindow 472

B.3.12 glutSetWindowTitle, glutSetIconTitle 473

B.3.13 glutSetCursor 473

B.4 Overlay Management 475

B.4.1 glutEstablishOverlay 475

B.4.2 glutUseLayer 475

B.4.3 glutRemoveOverlay 476

B.4.4 glutPostOverlayRedisplay 476

B.4.5 glutShowOverlay, glutHideOverlay 477

B.5 Menu Management 477

B.5.1 glutCreateMenu 477

B.5.2 glutSetMenu, glutGetMenu 478

B.5.3 glutDestroyMenu 478

B.5.4 glutAddMenuEntry 479

xii CONTENTS

I).5.5

I).5.6

I).5.7

I).5 .(5

I).5.9

glutAddSubMenu

glutChangeToMenuEntry

glutChangeToSubMenu

glutRemoveMenuItem

glutAttachMenu, glutDetachMenu

D.6 Callback Registration

.6. glutDisplayFunc

.. glutOverlayDisplayFunc

.. glutReshapeFunc

..4 glutKeyboardFunc

.. glutMouseFunc

.. glutMotionFunc, glutPassiveMotionFunc

.. glutVisibilityFunc

.. glutEntryFunc

.6.9 glutSpecialFunc

.6.0 glutSpaceballMotionFunc

.. glutSpaceballRotateFunc

..| glutSpaceballButtonFunc

.6. glutButtonBoxFunc

.6.|4 glutDialsFunc

.. glutTabletMotionFunc

..| glutTabletButtonFunc

..| glutMenuStatusFunc

..| glutIdleFunc

..| glutTimerFunc

.7 Color Index Colormap Management

.7. glutSetColor

.. glutGetColor

.. glutCopyColormap

B. State Retrieval

.. glutGet

.. glutLayerGet

.. glutDeviceGet

..4 glutGetModifiers

.. glutExtensionSupported

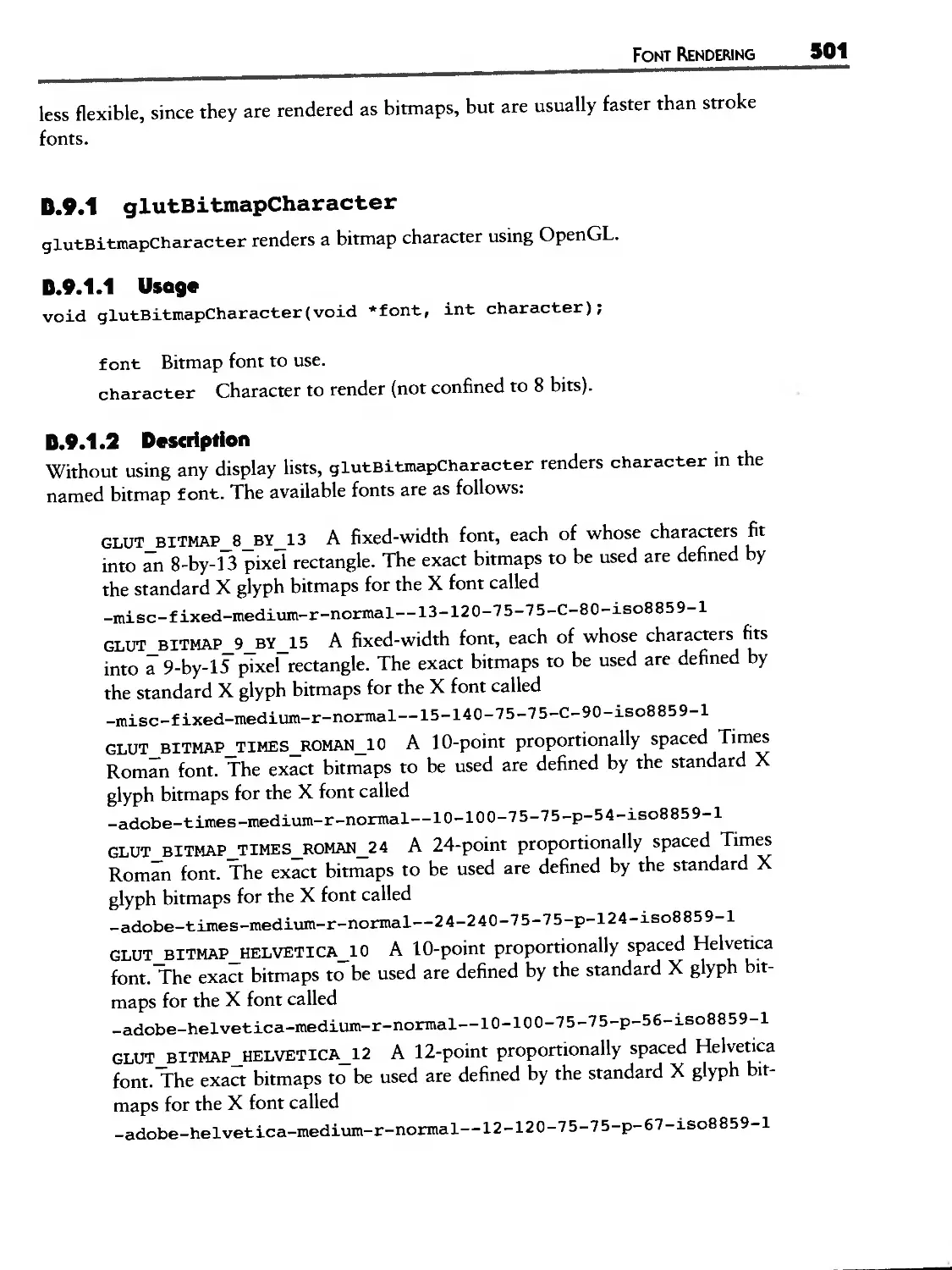

0.9 Font Rendering

.9. glutBitmapCharacter

.. glutBitmapWidth

.. glutStrokeCharacter

..4 glutStrokeWidth

479

479

480

480

481

481

482

483

483

484

484

485

486

486

487

488

488

489

490

490

491

491

492

493

493

494

494

495

495

496

496

498

499

499

500

5OO

501

502

502

503

CONTENTS xiii

B.IO

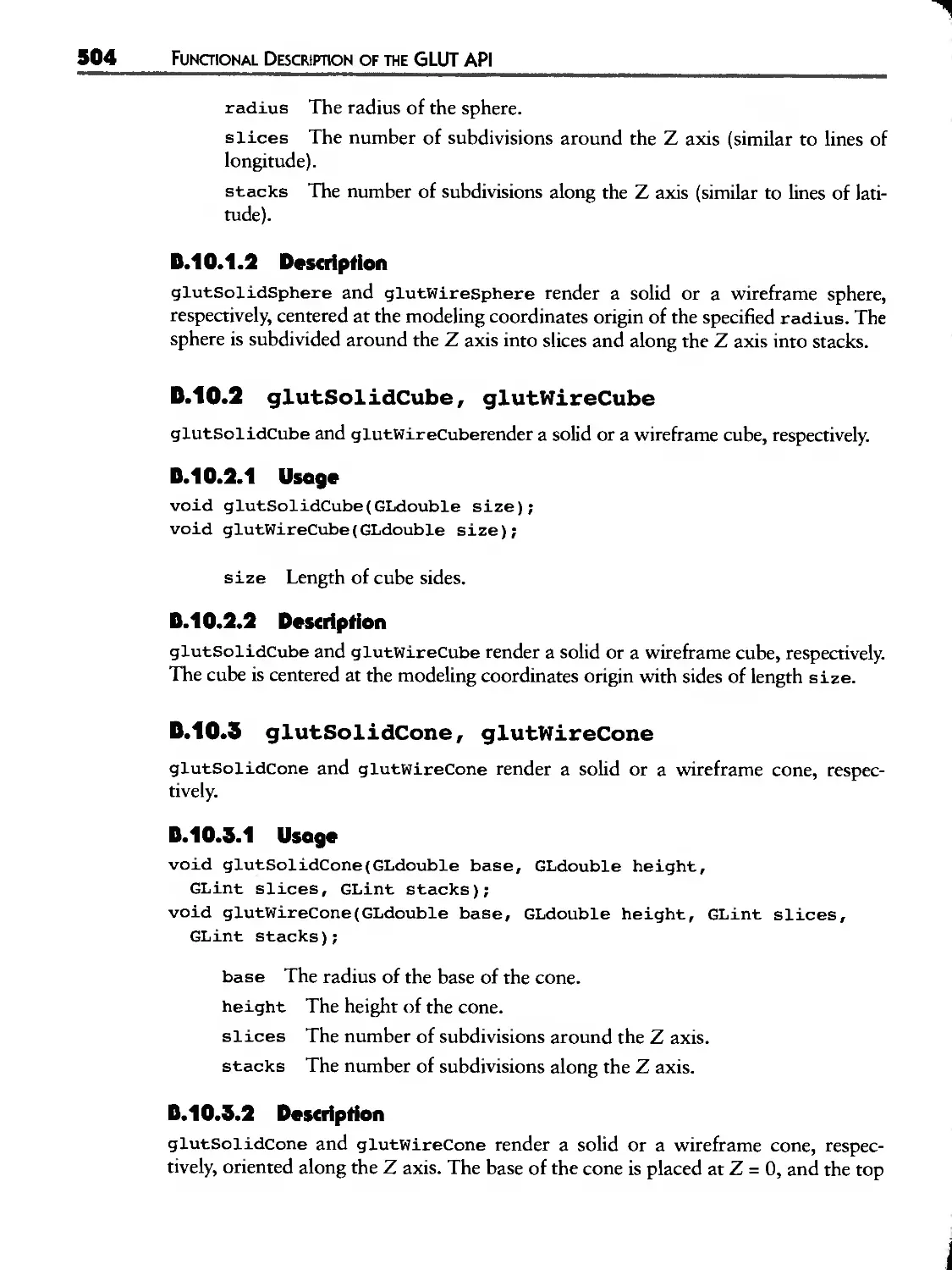

Geometric Object Rendering

B.10.1 glutSolidSphere, glutWireSphere

B.10.2

B.10.3

B.10.4

B.10.5

B.10.6

B.10.7

B.10.8

B.10.9

503

503

glutSolidCube, glutWireCube 504

glutSolidCone, glutWireCone 504

glutSolidTorus, glutWireTorus 505

glutSolidDodecahedron, glutWireDodecahedron 505

glutSolidOctahedron, glutWireOctahedron 505

glutSolidTetrahedron, glutWireTetrahedron 506

glutSolidIcosahedron, glutWireIcosahedron 506

glutSolidTeapot, glutWireTeapot 506

C GLUT State ................................... 509

C.1 Types of State 509

C.2 Global State 510

C.2.1 Program-Controlled State 510

C.2.2 Fixed System-Dependent State 511

C.3 Window State 511

C.3.1 Base State 511

C.3.2 Frame Buffer Capability State 514

C.3.3 Layer State 514

C.4 Menu State 515

Glossary .................................... 517

Bibliography ................................. 525

Index ....................................... 529

LIST OF FIGURES

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

2.9

2.10

2.11

Screen snapshot of glxdino

2D Complex polygons used to model the dinosaur's arm,

leg, eye, and body sides

Resulting GLU tessellation of the dinosaur polygons

Polygon orientation for back-face culling

Arrangement of lights, eye, and dinosaur in modeling space

Static view for gxd±no

Output of glxvisuals showing the OpenGL-capable visuais

supported on a 24-bit Silicon Graphics Indy

Sharing of OpenGL display lists between two GLX contexts

How OpenGL-capable GLXPixmaps are created from X Pixmaps

First 34 lines of dino. rgb. eps generated by pixmap2eps

Screen snapshot of ogldebug

3.1 Basic software library layering for Motif-based

programs (not including OpenGL)

3.2 Screen snapshot of glw OpenGL drawing area widget example

3.3 The nested widget hierarchy within glw

3.4 Software libraries used by OpenGL widget-based programs

3.5 Partial widget class hierarchy showing the standard

OpenGL widgets

3.6 GLwDr awingAr eaCal ibackSt ruct structure

3.7 Screen snapshot of paperplane

3.8 Diagram of the widget instance hierarchy for paperplane

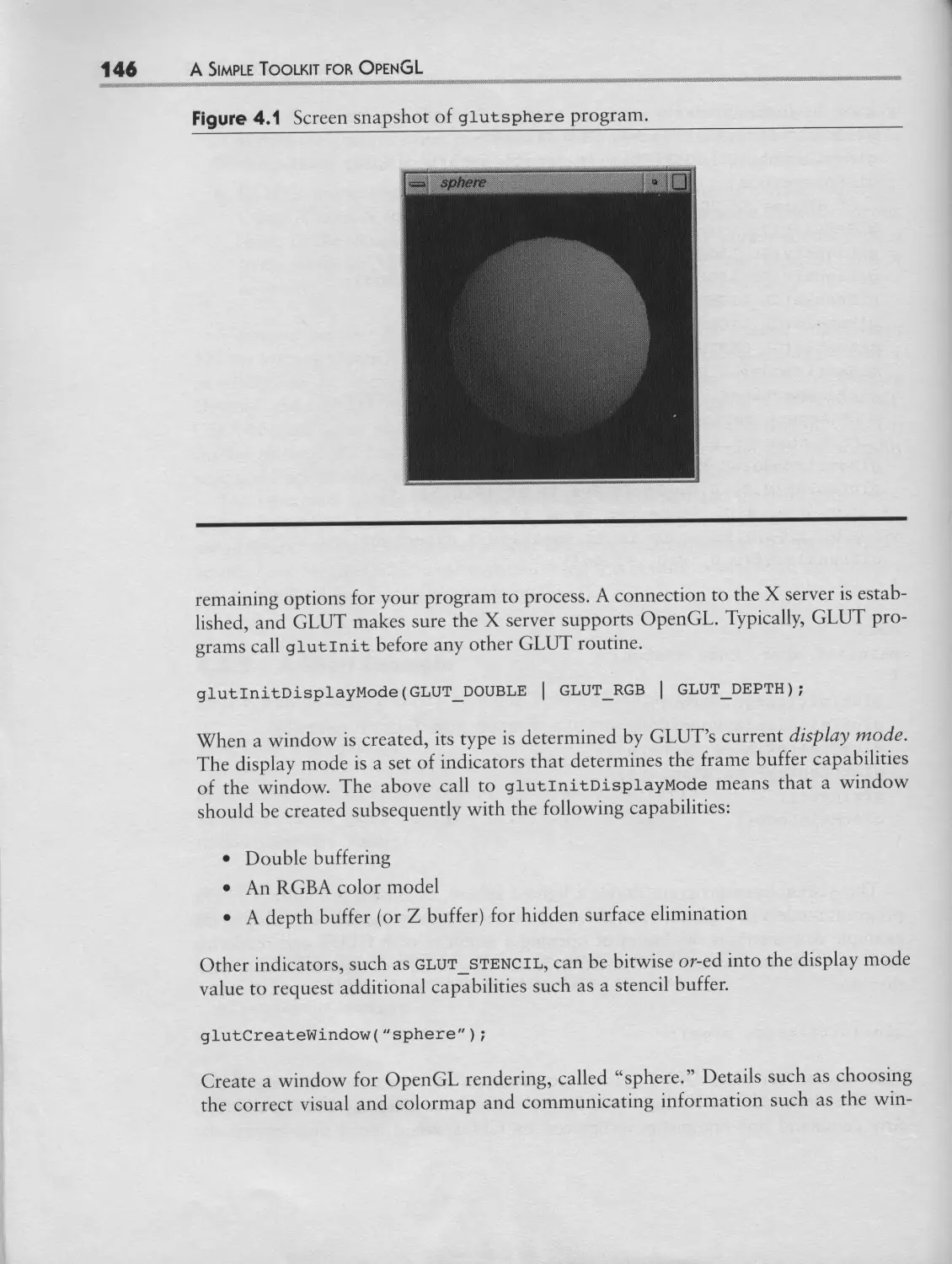

4.1 Screen snapshot of glutsphere program

4.2 The two windows generated by the modified

version of gutsphere

4.3 An example of GLUT cascaded pop-up menus

4.4 The stacking order of sibling windows can be changed

with glutPopWindow and glutPushWindow

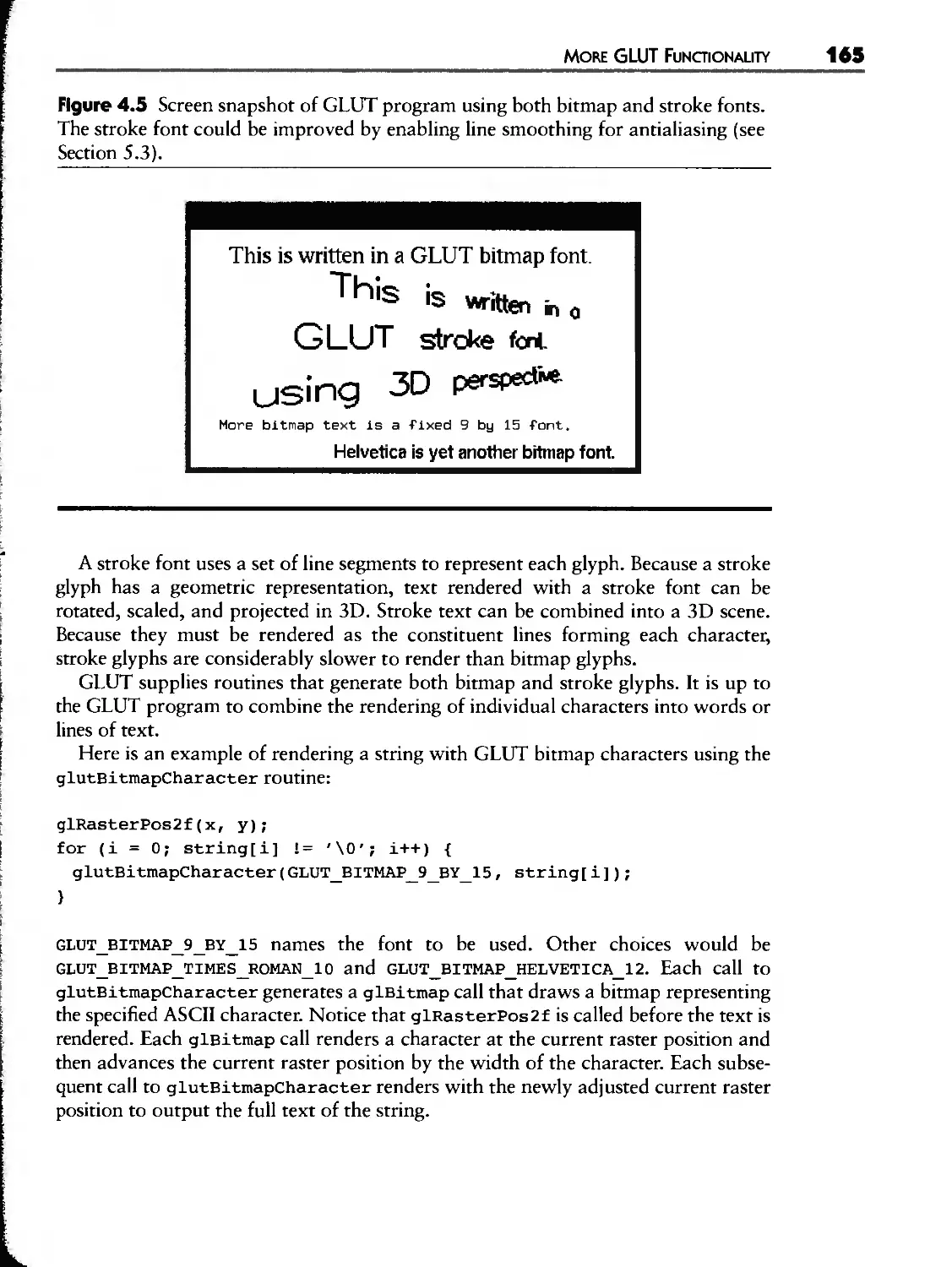

4.5 Screen snapshot of GLUT program using both

bitmap and stroke fonts

4,6 Example renderings using each of the nine GLUT

wireframe geometric shape rendering routines

55

58

63

64

76

87

93

101

106

110

112

116

121

123

125

131

139

146

149

154

157

165

166

4.7 Example renderings using each of the nine GLUT solid geometric shape rendering routines 167

4.8 Zooming in on rubber-banded subregions in zoomdino 174

5.1 lightlab's initial appearance 193

5.2 The right red light is off; the left light shines red 194

5.3 Both lights are shining white and the picture is bright 194

5.4 lightlab with slate teapot 195

5.5 Cow model texture mapped with a red brick texture 202

5.6 Multiple levels of detail for a texture using mipmaps 204

5.7 Texture coordinates determine how texels in the texture are mapped to the surface of a triangle in object coordinates 206

5.8 Various modes of mjkwarp. Texturing techniques can be used to rotate, contort, and shatter an image 208

5.9 Formula 1 racing simulation making extensive use of texture mapping, including texture-mapped trees 209

5.10 Screen snapshot showing blender example program 218

5.11 Magnified view of the blender example's text 220

5.12 Screen snapshot showing five teapots growing more distant from left to right 221

5.13 splatlogo example with menu displayed 227

5.14 How an image will be unpacked from host memory by glDrawPixels 229

5.15 splatlogo demonstrating pixel zooming, scaling, and biasing 232

5.16 OpenGL's pixel path functionality is used to draw pixels, read pixels, copy pixels, and load textures 235

5.17 Two views of the control points for a spiral B-spline curve 241

5.18 Screen snapshot of molehill 246

5.19 Varying sampling tolerances when tessellating a NURBS surface 247

5.20 Example of a trimmed NURBS surface 248

5.21 Wireframe rendering of the trimmed NURBS surface to show the tessellation into polygons 248

5.22 OpenGL's extended image processing pipeline 256

5.23 The paper airplane is divided into eight polygons, defined by the fold lines of the folded plane 258

5.24 The origami example program and three different outline modes 259

5.25 Graphical representation of a partial Inventor scene graph 270

5.26 First 25 lines of the duck.iv Open Inventor metafile containing a 3D duck model 271

5.27 Relationship of Open Inventor libraries and OpenGL and X libraries 272

5.28 Screen snapshot of glutduck using Open Inventor 272

6.1 OpenGL 1.1 additions 282

6.2 Vertex data interleaved for glInterleavedArrays's GL_C3F_V3F configuration 288

6.3 GLX 1.1 and 1.2 additions 295

6.4 GLU 1.1 and 1.2 additions 300

6.5 Sophisticated input devices often used by 3D applications 303

6.6 XDeviceInfo structure 307

6.7 Possible device type names 307

6.8 Data structures describe X Input device characteristics 309

6.9 Physical layout of the valuators and buttons for the dial-and-button box device supported by Silicon Graphics 312

6.10 Physical layout of the valuators and buttons for the Spaceball device supported by Silicon Graphics 313

6.11 Physical layout of the valuators and buttons for the tablet device supported by Silicon Graphics 314

6.12 The XDevice structure returned by XOpenDevice 315

6.13 Screen snapshot showing the dials example 319

6.14 Screen snapshot showing the new_xt_tablet example 330

6.15 Example output of sovinfo 356

6.16 Screen snapshot of layerdemo 357

6.17 How the OpenGL state machine maps to the general graphics pipeline 375

6.18 Overlay planes used to annotate a complex underlying image 380

6.19 Decomposition of a shared polygon to interpolated spans 381

6.20 OpenGL per-fragment operations 383

6.21 Example of a triangle being rasterized into a frame buffer 384

6.22 Pipelining versus replicated parallelism 386

6.23 Clip rectangles define the clips for the two windows with simple drawable regions 390

6.24 Two spheres rendered at different tessellations 400

7.1 Screen snapshot of molview 416

7.2 Screen snapshot of molview with its file chooser displayed 417

7.3 molview data structure representing a methane molecule 418

7.4 A .mol file description of a methane (CH4) molecule 419

7.5 Widget hierarchy for molview once it is initialized by main 442

7.6 Two screen snapshots of molview tessellated differently 450

7.7 molview-menu.eps: A screen snapshot of molview with its pop-up menu 450

xxii LIST OF TABLES

4.4 GLUT glutLayerGet Parameters 169

5.1 OpenGL's Various Pixel Transfer Maps 230

6.1 The Event Categories, Types, Classes, and Structures for the X Input Extension 317

6.2 SERVER_OVERLAY_VISUALS Property Entry Format 348

6.3 Minimum Implementation-Dependent Rendering Values for OpenGL Implementations 370

6.4 Various Graphics Subsystems Described Using Akeley's Taxonomy for Classifying Graphics Architectures 376

6.5 Floating-Point Operations Required to Transform a Single Vertex Using Single-source Lighting 384

xxiv PREFACE

extension. OpenGL can be used in conjunction with standard X libraries and tool-

kits such as Xlib and OSF/Motif.

What you will learn by reading this book is not just how to use OpenGL, but

how to integrate your OpenGL applications tightly with the X Window System.

Previous knowledge of OpenGL is not required, but the coverage of OpenGL's functionality

is hardly complete. This book is not an introduction to the X Window System.

It assumes that the reader programs in C and has experience with the Xlib and/

or Motif programming interfaces. A background in computer graphics is not

assumed, but is helpful. A basic knowledge of geometry and trigonometry is useful

for some sections.

A typical reader of this book is expected to fall into one or more of the following

categories:

• An Xlib or Motif programmer who wants to use OpenGL to implement 3D

graphics applications.

• A programmer proficient in IRIS GL, the predecessor to OpenGL, who would

like to migrate to OpenGL for more portability and access to the fastest cur-

rent and future hardware.

• A non-X programmer (using Windows NT or another window system) who

wants to understand X and OpenGL in order to port graphics applications

from X to Windows or to other non-X window systems.

• A student of computer graphics who wants to develop practical 3D program-

ming skills.

• A technical decision maker who wants to assess the value of using OpenGL

with X for 3D graphics applications.

Unlike previous books in Addison-Wesley's OpenGL series, which present OpenGL

outside the context of a particular window system, this book delves into the ways

real OpenGL applications for the X Window System should be designed and con-

structed. Each chapter contains source code (written in ANSI C and, in one

instance, C++) demonstrating the techniques described. The overriding goal is to

allow the reader to construct real and useful 3D applications using OpenGL and X.

The material is presented in seven chapters. These chapters are tutorial in nature

and each builds on the material in previous chapters. Chapter 1 is an introduction

to OpenGL and to using OpenGL with X. Chapter 2 explains the GLX model for

integrating OpenGL and X using Xlib. Chapter 3 explains how to use OpenGL

with Motif and other widget sets. Chapter 4 describes an Xlib-based toolkit, known

as the OpenGL Utility Toolkit (or GLUT), for constructing quick-and-dirty

OpenGL programs to explore 3D programming. Chapter 5 explores OpenGL's fea-

tures with examples using GLUT. Chapter 6 presents advanced topics for OpenGL

application writers: revisions of OpenGL, how to use alternative input devices, how

to use overlays, how to ensure OpenGL portability and interoperability, approaches

to OpenGL hardware acceleration, and performance tuning. Chapter 7 combines

the techniques explained in earlier chapters to develop a practical OpenGL applica-

tion using Motif. The appendices explain how to obtain the book's source code

examples and the GLUT library source code via the Internet and provide a full

description of the OpenGL Utility Toolkit programming interface.

ACKNOWLEDGMENTS

Craig Groeschel, Randi Rost, and many of the aforementioned Silicon Graphics

engineers and managers provided invaluable feedback by reviewing this work.

Mark Segal deserves special thanks for his thorough proofreading and suggestions.

Brian Paul and a host of OpenGL Utility Toolkit users on the Internet assisted in

debugging much of the source code developed for this book.

Much of the material in this book was developed in conjunction with my

"OpenGL and X" column, published in The X Journal. I thank the staff at SIGS

Publications for their publishing efforts, particularly Seth Bookey, Charles Bowman,

Kristina Jukodahr, and Steve Mikes.

Deirdre Tiffany's feedback, patience, and support meant the world to me. Words

cannot say enough.

There are others who have influenced this book indirectly, but fundamentally,

through their influence on my life. I count among these Nancy Denmark, John

Deuel, Michael Hicks, Thomas Kulick, Bernie Luksich, Peter Ostrin, my brothers

Mike and Matt, and certainly my parents Chris and Brenda. Thanks, and play safe!

--MJK

INTRODUCTION

Inc. (SGI), based on a decade of experience in computer graphics hardware and soft-

ware design. The GL in OpenGL stands for Graphics Library. To a graphics pro-

grammer, OpenGL is exactly that--a library of approximately 350 distinct graphics

routines. These routines allow a programmer to generate high-quality color images

efficiently. While OpenGL is well suited for interactive rendering of 3D graphics, it

can also be used for 2D graphics and imaging.

OpenGL is a standard originally developed by Silicon Graphics. Silicon Graphics

has turned over control of the OpenGL standard to the OpenGL Architectural

Review Board (ARB), which consists of leaders in the computer industry. Its founding

members included DEC, IBM, Intel, Microsoft, and Silicon Graphics. The board

has since expanded to include (at the time of this writing) two more prominent

computer graphics companies: Intergraph and Evans & Sutherland. The collective

vision underlying OpenGL is that through a common, fast software interface for

programming graphics, 3D graphics can rapidly become a mainstream computer

technology. Much to the benefit of those using interactive 3D computer graphics,

OpenGL has realized this goal. Implementations of OpenGL are widely available,

and applications based on OpenGL are commonplace. In particular, OpenGL

implementations are now available from every major X Window System vendor.

OpenGL provides a layer of abstraction between graphics hardware and an

application program. Together, OpenGL's routines form an application program-

ming interface (API). The routines allow graphics primitives (points, lines, poly-

gons, bitmaps, and images) to be rendered to a frame buffer. Using the available

primitives and the operations that control their rendering, high-quality color images

of 3D objects can be generated.

The most commonly used programming languages for OpenGL are C and C++.

The OpenGL API is the same for both languages. Nearly all the programs in this

book are written in ANSI C; in Section 5.7, which discusses Open Inventor, a C++

program also appears. OpenGL bindings exist for other languages such as Ada and

FORTRAN. These bindings merely provide a way to call OpenGL routines from

these languages; the functionality of OpenGL is the same in any language.

1.1.1 OpenGL's Design

The designers of OpenGL specify the graphics system as a state machine [36]* that

controls a well-defined set of drawing operations. The routines that OpenGL supplies

provide a means to manipulate OpenGL's state machine to generate the desired graph-

ics output. Figure 1.1 shows a simplified view of OpenGL's abstract state machine.

Don't be intimidated by the term "state machine"; it is not that complicated.

Think of OpenGL as a virtual device with lots of knobs and levers that controls the

way it operates. You feed geometric descriptions of what you want rendered (in the

form of vertices and images) into OpenGL. Based on the ways the levers and knobs

are set, OpenGL generates a pixelized 2D rendering of your data. For example, one

* References to the Bibliography appear in square brackets throughout this book.

WHAT IS OPENGL? 3

Figure 1.1 High-level, abstract OpenGL machine.

(Diagram not reproduced. Legible labels: commands, Per-Vertex Operations, Primitive Assembly, Per-Fragment Operations.)

lever controls whether lighting calculations are done on your data, and a knob controls

the way your data is blended with other data already rendered. The OpenGL

state machine is not a black box. You can read back the value of almost every piece

of OpenGL state you can set. OpenGL's state machine has lots of advantages for

implementation and conceptual reasons. Specifying OpenGL as a state machine

results in a consistent, precise specification and eliminates ambiguity about what a

given operation does and does not do.
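
The knobs-and-levers picture can be made concrete with a toy state machine in plain C. This is only an illustrative sketch: every toy_ name below is hypothetical, and none of it is OpenGL code. It is meant to show the two properties described above: the result of a drawing request depends on state set earlier, and that state can be read back.

```c
#include <stdbool.h>

/* Hypothetical toy state machine; these names are NOT part of OpenGL. */
enum toy_cap { TOY_LIGHTING, TOY_BLEND, TOY_CAP_COUNT };
enum toy_blend { TOY_BLEND_REPLACE, TOY_BLEND_AVERAGE };

struct toy_machine {
    bool enabled[TOY_CAP_COUNT]; /* the "levers": on/off switches */
    enum toy_blend blend_mode;   /* a "knob": a multi-valued setting */
};

/* Put the machine into a known initial state: everything off. */
void toy_init(struct toy_machine *m)
{
    int i;
    for (i = 0; i < TOY_CAP_COUNT; i++)
        m->enabled[i] = false;
    m->blend_mode = TOY_BLEND_REPLACE;
}

/* Setting state. */
void toy_enable(struct toy_machine *m, enum toy_cap cap)  { m->enabled[cap] = true; }
void toy_disable(struct toy_machine *m, enum toy_cap cap) { m->enabled[cap] = false; }
void toy_set_blend(struct toy_machine *m, enum toy_blend mode) { m->blend_mode = mode; }

/* Reading state back: the machine is not a black box. */
bool toy_is_enabled(const struct toy_machine *m, enum toy_cap cap) { return m->enabled[cap]; }
enum toy_blend toy_get_blend(const struct toy_machine *m) { return m->blend_mode; }

/* "Render" one value. The caller passes only data; how the data is
 * processed is decided entirely by the current state. */
float toy_draw(const struct toy_machine *m, float incoming, float existing)
{
    float value = incoming;
    if (m->enabled[TOY_LIGHTING])
        value *= 0.5f; /* stand-in for a lighting calculation */
    if (m->enabled[TOY_BLEND] && m->blend_mode == TOY_BLEND_AVERAGE)
        value = (value + existing) / 2.0f; /* mix with what is already there */
    return value;
}
```

In OpenGL itself, on/off state of this kind is controlled with routines such as glEnable and glDisable and queried with glIsEnabled, while valued state is read back through the glGet family of routines.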

The model used for interpretation of OpenGL commands is client-server. This is

an abstract model; it does not demand that OpenGL be implemented as distinct cli-

ent and server processes. A client-server approach means that the boundary

between a program and the OpenGL implementation is well defined, to specify

clearly how data is passed between the application using OpenGL and the OpenGL

implementation. This allows OpenGL to operate over a wire protocol, the way the

X protocol operates, but OpenGL does not require that OpenGL rendering take

place in a separate process. Using a wire protocol means that all OpenGL operations

can be encoded in a stream of bytes that can be sent across a network. Not

requiring a wire protocol means that OpenGL can bypass the use of the protocol

when direct access to the graphics hardware is available.
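
As a rough illustration of what "encoded in a stream of bytes" means, the sketch below packs one drawing command into a buffer and unpacks it again. The command layout (a 2-byte opcode, a 2-byte total length, then three floats) and all toy_ names are assumptions made up for this example; this is not the GLX wire format. The sketch also uses host byte order, whereas a real protocol has to define how byte order is handled.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command layout for illustration only; NOT the GLX protocol. */
enum { TOY_OP_VERTEX3F = 1 };
#define TOY_HEADER_SIZE 4u /* 2-byte opcode + 2-byte total length */

/* Append one "vertex" command to buf.
 * Returns the number of bytes written, or 0 if the buffer is too small. */
size_t toy_encode_vertex3f(uint8_t *buf, size_t cap, float x, float y, float z)
{
    const float args[3] = { x, y, z };
    const uint16_t opcode = TOY_OP_VERTEX3F;
    const uint16_t length = (uint16_t)(TOY_HEADER_SIZE + sizeof args);

    if (cap < length)
        return 0;
    memcpy(buf, &opcode, sizeof opcode);
    memcpy(buf + 2, &length, sizeof length);
    memcpy(buf + TOY_HEADER_SIZE, args, sizeof args);
    return length;
}

/* Decode one "vertex" command from the front of buf.
 * Returns the number of bytes consumed, or 0 if the bytes are not a
 * complete, well-formed vertex command. */
size_t toy_decode_vertex3f(const uint8_t *buf, size_t len, float out[3])
{
    uint16_t opcode, length;

    if (len < TOY_HEADER_SIZE)
        return 0;
    memcpy(&opcode, buf, sizeof opcode);
    memcpy(&length, buf + 2, sizeof length);
    if (opcode != TOY_OP_VERTEX3F ||
        length != TOY_HEADER_SIZE + 3 * sizeof(float) ||
        len < length)
        return 0;
    memcpy(out, buf + TOY_HEADER_SIZE, 3 * sizeof(float));
    return length;
}
```

Once a command is plain bytes, the side that encodes it and the side that decodes it can sit in one process or at opposite ends of a network connection. When the hardware can be reached directly, the encode and decode steps can be skipped altogether, which is the bypass described above.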

In the case of the X Window System, OpenGL is implemented as an extension to

X, meaning that there is an X extension protocol for OpenGL (the GLX protocol).

So just as basic X programs can run on a remote computer while the results are dis-

played to a local workstation, X programs using OpenGL can do the same.

The OpenGL specification is window system independent, meaning that it provides

rendering functionality but does not specify how to manipulate windows or receive

events from the window system. This allows the OpenGL interface to be implemented

for different window systems. For example, OpenGL has been implemented for the X

Window System, Windows 95 and NT, OS/2, and the Power Macintosh. While

OpenGL itself is window system independent, for each window system, a window

system dependent interface must be used to coordinate OpenGL's functionality with

that of the window system. Each distinct window system has its own interface, but

the basic functionality performed by this window system dependent interface is very

similar to what other window systems provide. The window system dependent inter-

face for the X Window System is called GLX. Note that GLX is both the name of

OpenGL's window system dependent programming interface for X and the name of

OpenGL's X extension protocol.

One thing to keep in mind about OpenGL, particularly for the X Window System,

is that OpenGL is generally an extension to the base window system. While OpenGL

is becoming a standard feature of most current workstations, some workstations (typ-

ically older workstations and many X terminals) will not support the GLX extension

that provides OpenGL support. Section 1.3 provides further discussion of OpenGL's

X support and how to determine whether the GLX extension, and therefore OpenGL,

is supported by your workstation.

Graphics systems are often classified as one of two types: procedural or descrip-

tive. With a procedural interface, the program specifies exactly what to draw by

issuing a specific sequence of commands. With a descriptive interface, the program-

mer sets up a model of the scene to be rendered and leaves the method of drawing

the scene up to the graphics system. OpenGL is a procedural interface. In a descriptive

system, the programmer gives up control of exactly how the scene is to be rendered.

Being procedural allows the programmer a high degree of control over the

way rendering is performed. Descriptive graphics systems are typically implemented

as a layer above a procedural interface. Two popular descriptive graphics systems

that render using OpenGL are Open Inventor [40] and IRIS Performer [35], both

developed by Silicon Graphics. Open Inventor is highly object-oriented and easy to

use. IRIS Performer is designed to optimize the rendering performance of visual sim-

ulation applications, such as flight simulators.

An overriding goal of OpenGL is to allow the construction of portable and

interoperable graphics programs. For this reason, OpenGL's rendering functionality

must be implemented in its entirety. This means all the complex rendering functionality

mandated by the OpenGL specification can be used with any OpenGL implementation.

Previous graphics standards often allowed subsetting, meaning that not

all rendering functionality was mandated; often the results were programs that

could not be expected to work on distinct implementations. To ensure compliant

implementations, every OpenGL vendor must validate its implementation using a

comprehensive set of conformance tests.

In practice, well-written OpenGL programs for the X Window System are quite

portable among X workstations from different vendors. All the programs presented

in this book have been tested to ensure that they are good examples of writing portable

OpenGL programs for X.

¹ For workstations without the GLX extension, the freely available Mesa library, written by Brian Paul at the

University of Wisconsin, provides an implementation of the OpenGL programming interface that does not require the GLX

extension. While Mesa is portable and free, it does not benefit from accelerated graphics hardware and

cannot emulate some details of GLX's operation. To find out how to obtain Mesa, see Appendix A.

While OpenGL is portable, it is also fast, because OpenGL leverages Silicon

Graphics's design experience, acquired from a decade of building high-performance

graphics workstations. Specialized hardware for 3D rendering greatly improves

graphics performance. OpenGL is designed with such hardware in mind. As com-

puter technology advances, you can expect higher OpenGL performance from

newer hardware for the same money or less. Many of the ways in which hardware

can accelerate OpenGL performance are discussed in Section 6.5.

OpenGL is extensible, meaning that additional functionality can be added to

OpenGL incrementally as new features are warranted. While any OpenGL imple-

mentor can add a new extension, OpenGL's ARB coordinates a set of common

extensions. Many of the available extensions to OpenGL are described later, in Section 5.6.

By their nature, extensions will not exist in all OpenGL implementations.

Please note that extensions to OpenGL are distinct from extensions to the X Window

System. While GLX is an X extension to support OpenGL, OpenGL extensions

do not require new X extensions.

1.1.2 History of OpenGL

OpenGL is the successor to a graphics library known as IRIS GL, developed by Sili-

con Graphics as a device-independent graphics interface for use across a full line of

graphics workstations. IRIS GL [27] is used by more than 1,500 3D graphics appli-

cations. IRIS GL has evolved over the last decade and has been implemented on

numerous graphics devices of varying sophistication.

OpenGL is not backward compatible with IRIS GL. OpenGL has removed dated

IRIS GL functionality and made functionality more general in places. The most vis-

ible difference to programmers is that the routines and symbols that make up the

OpenGL API all start with either gl or GL, to avoid name space conflicts. IRIS GL

provides routines for managing windows and obtaining user input. To gain window

system independence and focus OpenGL on rendering functionality, IRIS GL's windowing

functionality was not made part of OpenGL. All window system dependent

portions of IRIS GL have been eliminated from OpenGL. What has been preserved

is the spirit of the API. OpenGL retains IRIS GL's ability to render 3D objects

quickly and efficiently. IRIS GL is network-extensible, but only by means of a proprietary

protocol called Distributed GL (DGL). In contrast, OpenGL's GLX protocol

is both network-extensible and an open standard.

Before OpenGL, every vendor of 3D graphics workstations invented its own

functionally similar, but incompatible, programming interfaces for accessing that

vendor's 3D hardware (IRIS GL was just one such proprietary interface). The result

was that 3D graphics programs for various makes of computer workstations were

quite incompatible.

OpenGL was proposed as a standard to bring 3D graphics programming into the

mainstream of application programming by providing a standard, common, high-

performance interface to interactive 3D graphics hardware. This was the reason

that the OpenGL ARB was formed. Silicon Graphics turned over the OpenGL stan-

dard to the ARB, which now directs and approves OpenGL's further development.

Currently, over 30 companies have licensed OpenGL and many commercial imple-

mentations are available. Numerous universities have also licensed OpenGL for

educational and research purposes. Table 1.1 presents a short time line of important

events in the history of OpenGL.

OpenGL continues to evolve as a standard, and the ARB continues to standardize

extensions for OpenGL, enhance the core OpenGL standards, provide bindings

for additional computer languages (such as those for Ada and FORTRAN), and

promote the OpenGL standard.

Table 1.1 Important milestones in the development of the OpenGL standard

Year

1982

1987

1989

1990

1991

1992

1993

1994

1995

1996

Event

Initial development of the IRIS GL.

IRIS GL adds glVertex3f-style vertex commands.

Kurt Akeley attempts detailed IRIS GL specification.

Distributed GL (DGL) protocol released (precursor of GLX protocol).

IRIS GL "mixed model" interface released (precursor of GLX API).

Silicon Graphics commits itself to an OpenGL effort.

Kurt Akeley and Mark Segal begin work on OpenGL specification.

First OpenGL Architectural Review Board (ARB) meetings held.

First OpenGL sample implementation released to licensees.

OpenGL Reference Manual published.

First commercial OpenGL released by DEC and Silicon Graphics.

OpenGL Programming Guide published.

Open Inventor toolkit (based on OpenGL) released.

OpenGL specification and manual pages distributed via the Internet.

Microsoft releases Windows NT 3.5, including OpenGL.

Work on standard OpenGL extensions begins.

OpenGL Utility Toolkit distribution announced.

GLX 1.1 approved.

Brian Paul releases Mesa, a free implementation of the OpenGL API.

Intergraph and Evans & Sutherland join the ARB.

GLU 1.2 approved.

Sun and Hewlett-Packard announce plans to adopt OpenGL.

OpenGL add-on to Microsoft Windows 95 made available.

OpenGL 1.1 and GLX 1.2 publicly released.

The original OpenGL specification was version 1.0. Since that time, the ARB has

approved the upwardly compatible version 1.1. Most of the additions to 1.1 were

extensions to 1.0 that were deemed useful enough to be made part of the OpenGL

standard itself. This practice of integrating proven extensions into the core OpenGL

standard allows new features to be released and tested before their inclusion in the

core OpenGL standard. By regularly integrating successful extensions into the core

OpenGL, the ARB can ensure that the OpenGL standard will stay current and

coherent.

The GLX specification has also been revised. GLX 1.1 and GLX 1.2 further

enhance OpenGL's integration with the X Window System. Section 6.1 explains the

various revisions of OpenGL and GLX. The revisions of the OpenGL standards

mostly add advanced features. Unless otherwise noted, the material in this book

applies to the OpenGL 1.0 and GLX 1.0 standards and compatible revisions.

1.2 OpenGL's Rendering Functionality

OpenGL is not a high-level 3D graphics interface. When you build a graphics program

using OpenGL, you start with a few simple geometric and image primitives.

The sophistication comes from combining the primitives and using them in various

modes. This section describes OpenGL's rendering functionality. For now, assume

that OpenGL is initialized and things are set up so that OpenGL commands render

to an onscreen window. The details of initializing OpenGL for rendering are dis-

cussed later.

1.2.1 Geometric Primitives

A geometric primitive renders a simple shape, such as a point, a line, or a triangle.

Using these primitives, complex 3D scenes can be rendered. Often scenes are con-

structed using thousands of individual triangles. Figure 1.2 shows the available geo-

metric primitives in OpenGL. Notice the ordering of the vertices, in particular for

primitives such as the GL_TRIANGLE_STRIP and the GL_TRIANGLE_FAN.

A primitive is described using a set of vertices. Figure 1.3 shows one way a poly-

gon might be specified. To begin a geometric primitive, the glBegin routine passes

in the primitive type as an argument. Then a list of vertex coordinates is specified.

Each glVertex3f call sends a vertex represented as three floating-point values

(hence the 3f suffix).

Along with the coordinates of each vertex, per-vertex information such as color,

normals, edge drawing, and texturing can be specified between a glBegin and a

glEnd. Notice how glColor3f is used to change the current color. Each vertex is

drawn according to the current color. An OpenGL primitive is completed by calling

glEnd.

Looking at the way OpenGL routines are used, you can see OpenGL's naming

convention. All OpenGL routines begin with gl, and the remainder of each routine

name is in mixed case (for example, glCopyPixels). The OpenGL constants all

begin with GL_ and use only capital letters, and words are separated by underscores

(for example, GL_TRIANGLE_FAN). OpenGL defines its own set of basic data types,

all of which begin with GL but are otherwise lowercase (for example, GLfloat and

GLsizei). While these OpenGL basic data types are not guaranteed to be based on

the built-in C language types, they typically are for most OpenGL implementations.

All 14 data types used by OpenGL can be found in Table 1.2.

OpenGL tends to be function call intensive. Instead of using a single routine with

many complex parameters, OpenGL's API favors multiple function calls with simple

parameters. OpenGL primitives are constructed by calling multiple OpenGL routines.

Calling multiple routines gives the program more flexibility and more control

over the primitives generated.

Table 1.2 The OpenGL data types. Because ANSI C allows the sizes of basic data

types to be implementation-dependent, OpenGL uses these types to ensure that its

data types are properly sized. For Unix workstations, the OpenGL data types' mapping

to C data types is straightforward: for example, GLfloat to float and

GLushort to unsigned short. Types such as GLclampf are different from

GLfloat in the assumptions OpenGL makes about values considered valid, but not

in the underlying type used to represent the types. Typically, ANSI C prototypes and

default type conversions make explicit casting between OpenGL and C data types

unnecessary.

OpenGL Type    Minimum Number of Bits    Description

GLboolean      1     Boolean

GLbyte         8     Signed integer

GLubyte        8     Unsigned integer

GLshort        16    Signed integer

GLushort       16    Unsigned integer

GLint          32    Signed integer

GLuint         32    Unsigned integer

GLsizei        32    Nonnegative integer

GLenum         32    Enumerated integer value

GLbitfield     32    Bit field

GLfloat        32    Floating-point value

GLclampf       32    Floating-point value clamped between 0 and 1

GLdouble       64    Floating-point value

GLclampd       64    Floating-point value clamped between 0 and 1

In many cases, OpenGL is flexible about the format that can be used to pass data

to OpenGL. For many basic operations, there are multiple versions of the same

basic routine and each version accepts data in a different format. For example,

glVertex3i accepts integers, while glVertex3f and glVertex3d take single- and

double-precision floating-point, respectively. For routines in a family such as

glVertex, which generates vertices, or glColor, which sets the current color, the

suffix of routines in a given family determines the data format and the number of

parameters passed to the routine. So glVertex2f takes a two-dimensional vertex,

while glVertex3f takes a three-dimensional vertex. The f suffix indicates that a

GLfloat is expected. Table 1.3 shows the correspondences between suffix letters

and OpenGL parameter types.

The v suffix indicates that the parameters for a routine are passed via a pointer to

a vector. For example, you could call

static GLfloat vertex[3] = { 1.0, 5.5, -32.0 };

glVertex3fv(vertex);
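The naming scheme is regular enough to decode mechanically. The following sketch in plain C does not call OpenGL; decode_suffix and SuffixInfo are hypothetical names invented for illustration. It splits a suffix such as 3fv into its component count, the OpenGL type from Table 1.3, and the vector flag.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Hypothetical helper type, not part of OpenGL: describes a routine-name suffix. */
typedef struct {
    int count;            /* number of components, e.g. 3 in "3f" */
    const char *gl_type;  /* OpenGL type named by the suffix letters */
    int is_vector;        /* nonzero when a trailing 'v' is present */
} SuffixInfo;

/* Decode a suffix such as "3f", "2i", or "4ubv".
 * Returns 1 on success and 0 if the suffix is not recognized. */
int decode_suffix(const char *suffix, SuffixInfo *out)
{
    /* Two-letter suffixes are listed first so "ub" is not mistaken for "b". */
    static const struct { const char *letters; const char *gl_type; } table[] = {
        { "ub", "GLubyte" }, { "us", "GLushort" }, { "ui", "GLuint" },
        { "b", "GLbyte" }, { "s", "GLshort" }, { "i", "GLint" },
        { "f", "GLfloat" }, { "d", "GLdouble" },
    };
    size_t i;

    assert(suffix != NULL && out != NULL);
    if (!isdigit((unsigned char)suffix[0]))
        return 0;
    out->count = suffix[0] - '0';
    suffix++;

    for (i = 0; i < sizeof table / sizeof table[0]; i++) {
        size_t n = strlen(table[i].letters);
        if (strncmp(suffix, table[i].letters, n) == 0) {
            const char *rest = suffix + n;
            /* After the type letters, only an optional 'v' may follow. */
            if (rest[0] == '\0' || strcmp(rest, "v") == 0) {
                out->gl_type = table[i].gl_type;
                out->is_vector = (rest[0] == 'v');
                return 1;
            }
        }
    }
    return 0;
}
```

Given the suffix 3fv, the function reports three components of type GLfloat passed through a pointer, which matches the glVertex3fv call above.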

Having several functionally equivalent routines that accept different data types

allows the programmer flexibility in deciding how to store the data. A programmer

whose data is in integer format does not want to convert it to floating-point to pass

it to the graphics system. Another programmer does not want to convert floating-point

data into integers. Conversions between data types can be expensive. High-performance

graphics hardware can be designed to accept multiple data formats

and offload the task of format conversion from the host processor.

You can start to see why it makes sense to consider OpenGL as a state machine.

Commands such as glColor3f change the state of the current color. Subsequent

vertices use the updated current color. glBegin puts OpenGL into a state in which

it can start drawing the specified primitive. The multiple glVertex routines load

the vertices one at a time for a given primitive. Nearly all of OpenGL's state that can

be set by the programmer can also be queried by the programmer. For example, the

glGetFloatv(GL_CURRENT_COLOR, float_array) call will retrieve the setting of

the current color.

Table 1.3 Correspondences between OpenGL routine name suffix letters and

OpenGL parameter types

Suffix Letters    Corresponding OpenGL Type

b     GLbyte

ub    GLubyte

s     GLshort

us    GLushort

i     GLint

ui    GLuint

f     GLfloat

d     GLdouble

1.2.2 Pixel Path Operations

In addition to OpenGL's geometric primitives, the other way to get pixels rendered

(and the way to read them back) is to use OpenGL's pixel operations. Images (2D

arrays of pixel data) are displayed using glDrawPixels and are retrieved from the

frame buffer using glReadPixels. (The frame buffer is the place where rendered

pixels are stored.) OpenGL also provides a means to copy pixel data from one

region of the frame buffer to another with glCopyPixels. In addition to rectangular

images, OpenGL provides a bitmap primitive, generated by glBitmap. Bitmaps

are like images, but instead of an array of pixel values, a bitmap is an array of bits

that serves as a drawing mask. Typically, bitmaps are used for rendering characters.

The location at which an image or bitmap is rendered is determined by the current

raster position, which is set by the glRasterPos family of routines.
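The idea of a bitmap as a drawing mask can be modeled in a few lines of plain C. This is only a sketch of the concept, not a description of how glBitmap is implemented; draw_bitmap_row is a hypothetical helper, and it assumes that the bits of each byte are consumed most significant bit first.

```c
#include <assert.h>
#include <stddef.h>

/* Model only (not OpenGL code): draw one row of a bitmap into a row of pixels.
 * A set bit paints the pixel with `color`; a clear bit leaves the pixel alone.
 * Assumption: bits are read from the most significant bit of each byte. */
void draw_bitmap_row(const unsigned char *bits, size_t width,
                     unsigned int *pixels, unsigned int color)
{
    size_t x;

    assert(bits != NULL && pixels != NULL);
    for (x = 0; x < width; x++) {
        unsigned char byte = bits[x / 8];
        unsigned char mask = (unsigned char)(0x80u >> (x % 8));
        if (byte & mask)
            pixels[x] = color;   /* masked in: pixel takes the drawing color */
    }
}
```

Because pixels under clear bits are untouched, a character drawn this way leaves the background around its strokes intact.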

While transferring an image to and from the screen can be quite simple, OpenGL

has a sophisticated pixel path that allows pixel data to be zoomed, biased, scaled,

looked up, and further processed on the way to and from the frame buffer. This

makes OpenGL useful not just for 3D graphics, but for image processing tasks as

well. There are OpenGL extensions that give OpenGL still more image-processing

capabilities, allowing it to perform operations such as histograms and convolutions.

OpenGL's pixel path is discussed in more detail in Section 5.4.
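As a rough model of one stage of the pixel path, the sketch below applies a scale and a bias to an array of color components and clamps each result to the range 0 to 1. It is plain C written for illustration; the function names are hypothetical, and the real pixel path has further stages (such as zoom and lookup tables) that are not modeled here.

```c
#include <assert.h>
#include <stddef.h>

/* Clamp a value to [0, 1], the range Table 1.2 associates with GLclampf. */
static float clamp01(float v)
{
    if (v < 0.0f) return 0.0f;
    if (v > 1.0f) return 1.0f;
    return v;
}

/* Model only: scale, then bias, then clamp each color component in place. */
void scale_and_bias(float *components, size_t count, float scale, float bias)
{
    size_t i;

    assert(components != NULL);
    for (i = 0; i < count; i++)
        components[i] = clamp01(components[i] * scale + bias);
}
```

With a scale of 2 and a bias of 0.25, a component of 0.25 becomes 0.75, while a component of 0.75 would reach 1.75 and is clamped to 1.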

OpenGL's geometric primitives and pixel operations find a special synergy in

OpenGL's texture mapping facilities. Texture mapping is a feature that allows an

image to be projected onto a geometric primitive such as a 3D triangle. You can

think of texture mapping as the logical equivalent of stretching virtual wallpaper

over polygons you render. Texture mapping is discussed in more detail in Section

5.2. The important point here is that when texture images are downloaded into

OpenGL, they are passed through the same pixel path used by images, so all the

facilities that bias, scale, and perform lookup table operations can be applied to tex-

tures.

1.2.3 Two Color Models

OpenGL has two different color models: RGBA and color index. RGBA is generally

the preferred model.

The glColor3f call has already been demonstrated, but not explained. This call

assumes OpenGL's RGBA color mode. The routine takes three floating-point

not have any specific routines to allocate colors. For example, in X, an Xlib color

allocation routine such as XAllocColor would be used. The glIndex family of routines

is used to set the current color index. The advantage of color index is that the

color of a given pixel value can be dynamically changed. There is a level of indirection

(the colormap) between the pixel values in the frame buffer and the colors on

the screen. Typically, color index windows will not support more than 8 or 12 bits

of index resolution per pixel, simply because of the size of the required colormaps.

Because color index lacks a fixed relationship for the colors available for display,

facilities such as lighting, fog, and shading are more complicated than in RGBA

mode. In color index mode, these facilities require proper setup of the colormap for

them to operate correctly; other facilities, such as texture mapping and alpha blending,

are not available at all.

Color index mode is typically used on graphics hardware that does not have

enough color resolution to adequately represent an RGB color space. For example,

a machine with 8 bits of pixel resolution has to split up those 8 bits among

red, green, and blue components to form an RGB color space. This is typically

done by giving 3 bits to red, 3 bits to green, and 2 bits to blue. This means there

are 8 gradations of red and green and 4 gradations of blue. The resulting color

space makes 8-bit RGBA scenes exhibit quantization errors, so subtle color varia-

tions are lost.

A benefit of color index is that those same 8 bits of resolution permit a palette of

256 distinct colors that can be determined based on the scene being displayed. A

scene showing a rosy sunset could fill the palette with many red, yellow, and orange

shades and few green shades and accurately capture the colors of the scene. How-

ever, RGBA has a fixed colormap arrangement that does not have enough red, yel-

low, and orange shades to represent the scene adequately with only 8 bits of

resolution.

With plenty of bits of resolution per pixel (24 bits or more), color representation

is no longer an issue, and the complications and limitations of color index make

RGBA far superior. Also, the writability of color index colormaps becomes a serious

drawback when multiple applications simultaneously attempt to display windows

that set up differently loaded logical colormaps. Windows whose requested colors

are not loaded will display unexpected colors.

As technology continues to drive down video memory prices, graphics hardware

with higher resolution frame buffers will become common. For this reason and

because color index is harder to use and more limiting, nearly all the examples in

this book will use the RGBA color model.

1.2.4 OpenGL Modes and Other State

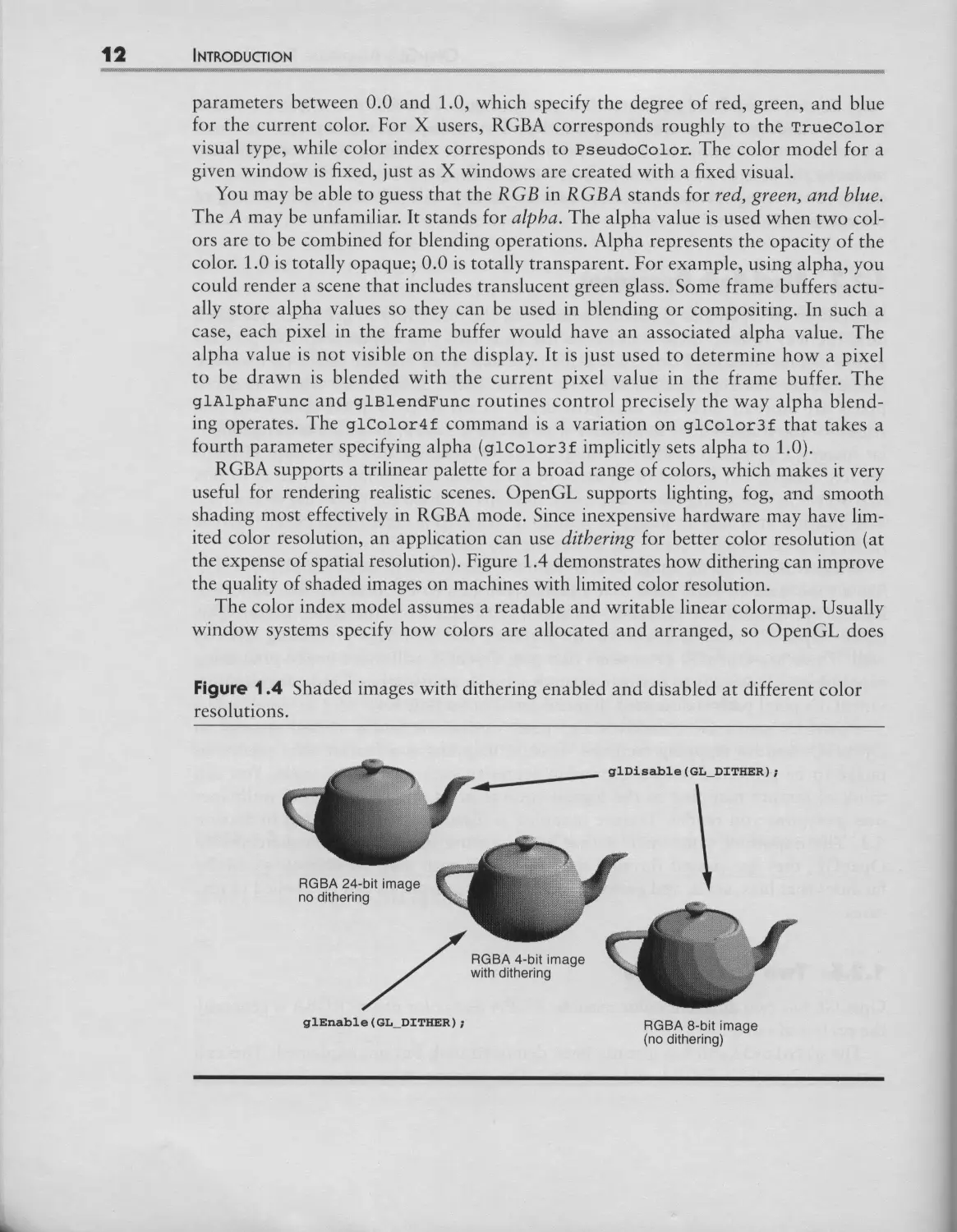

Many modes in OpenGL, such as dithering, are enabled and disabled using the

glEnable and glDisable commands, respectively. For example, dithering is enabled

by calling glEnable(GL_DITHER). Afterwards, drawing is done with dithering

enabled. You can think of glEnable and glDisable as ways to affect the operation

of the OpenGL state machine. The enable/disable state of OpenGL modes can be

retrieved using glIsEnabled.

Many modes have additional parameters that control the way the mode operates.

For example, lighting calculations are based on many different lighting parameters.

Parameters associated with the lighting model are controlled using glLightModelf;

the characteristics of individual light sources are controlled using glLightf.

OpenGL's lighting model is described in Section 5.1, but here is an example in

which lighting is enabled with various lighting parameters being set:

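GLfloat position[] = {0.0, 0.0, 1.5, 1.0};   /* homogeneous (x, y, z, w) position of the light */

glLightModelf(GL_LIGHT_MODEL_LOCAL_VIEWER, GL_TRUE);   /* use a local viewer for specular calculations */

glLightModelf(GL_LIGHT_MODEL_TWO_SIDE, GL_FALSE);   /* one-sided lighting */

glLightfv(GL_LIGHT0, GL_POSITION, position);   /* set the position of light zero */

glEnable(GL_LIGHTING);   /* turn on lighting calculations */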

The first argument of glLightModelf indicates which OpenGL lighting parameter

to update; the final argument supplies the new parameter value. Complex facilities

such as lighting, fog, and texture mapping have many different parameters to con-

trol their operation and are activated by calling glEnable. There are also much

simpler facilities for things such as controlling the width (in pixels) of rendered

lines. The glLineWidth routine sets the line width. Because line width is something

inherent in lines, there is no need for a glEnable option for line width; there is sim-

ply a reasonable default (1.0).

While there are many OpenGL routines for setting parameters for various

OpenGL capabilities, a single family of routines retrieves most OpenGL state:

glGetBooleanv, glGetDoublev, glGetFloatv, and glGetIntegerv. For example:

GLint state;

glGetIntegerv(GL_LIGHT_MODEL_TWO_SIDE, &state);

After calling glGetIntegerv, state is updated with the current two-sided lighting

state.

OpenGL state that requires more than one enumerant to name it (for example,

light zero's position, which takes GL_LIGHT0 and GL_POSITION to specify the state)

requires a special retrieval routine (such as glGetLightfv).

OpenGL is designed to have sensible default state. When you first instantiate a

context for OpenGL rendering (an instance of an OpenGL state machine that programs

use for rendering), all OpenGL state is initialized to predefined default values.

Typically, most modes are disabled by default.

State changes immediately affect subsequent rendering. For the most part, the

commands to change OpenGL state can be executed in whatever order makes sense

to the program. There are exceptions, however. Most state changes are not allowed when

a geometric primitive is being specified (meaning within a glBegin-and-glEnd

pair); per-vertex state such as the current color is the exception.

Often, programs change various OpenGL state and later need to revert to the

state prior to the changes. Sometimes (often in the case of software libraries layered

on OpenGL), the program does not know OpenGL's current state. While the program

can retrieve the current values of any state it intends to change, retrieving state

can be slow relative to the rate at which commands can be sent to fast graphics

hardware. The glPushAttrib and glPopAttrib calls manage an attribute

stack that allows groupings of OpenGL state (such as all the lighting-related state)

to be saved (pushed onto the stack) and later restored (popped from the stack).

The argument of glPushAttrib is a bit mask indicating what state to push.

glPopAttrib needs no argument, since it just restores the state before the last

glPushAttrib. For example:

glPushAttrib(GL_LIGHTING_BIT);

modifyOpenGLlightingState();

doSomeRenderingWithChangedState();

glPopAttrib();

After execution of this code fragment, OpenGL's lighting state is what it was before

glPushAttrib was called. This is a good deal more convenient than attempting to

retrieve and restore each piece of lighting state individually.

1.2.5 Ancillary Buffers

Typically, the results of OpenGL rendering are directed to a window displayed on a

user's computer. It is also possible to render OpenGL into an in-memory data structure

(such as an X pixmap) or into dedicated memory within a graphics hardware

device that cannot be displayed.

OpenGL treats the image it renders as an array (or grid) of pixels. The term

frame buffer refers to the memory within a graphics hardware device that maintains

the image displayed on a user's computer screen. OpenGL also uses the term to refer

to the array of pixel state maintained by a drawing surface regardless of its hardware

representation. For example, memory for an X pixmap is considered a frame

buffer by OpenGL.

For most 2D rendering interfaces (such as Xlib), the frame buffer simply contains

pixel values that represent a displayable image. OpenGL permits considerably more

state to be maintained within the frame buffer. OpenGL considers the frame buffer

to be a set of buffers. Each pixel within the frame buffer has an associated value in

each buffer. The most important type of buffer is the color (or image) buffer, which

contains the color information that represents a displayable image. OpenGL can

actually support more than one image buffer within a single frame buffer. As will be

explained, multiple image buffers make possible double-buffered animation and stereoscopic

display.

OpenGL also supports nonimage buffers, which are often referred to as ancillary,

or helper, buffers. Several types of ancillary buffers are supported by OpenGL. The

next subsections will explain how OpenGL's various ancillary and image buffers are

used.

The set of image and ancillary buffers supported by a given frame buffer type is

referred to as a frame buffer configuration. A window system may support multiple

frame buffer configurations, each supporting a different set of buffers. Typically,

multiple windows of different configurations can be displayed at one time. Every X

window or pixmap has a single frame buffer configuration, though.

1.2.5.1 The Depth Buffer

In the rendering of a 3D scene, there is a general need to ensure that objects that

should be obscured by closer objects in the scene are not displayed. This is the problem

of hidden line and hidden surface removal (HLHSR).

A general method for solving this problem is the use of a depth buffer (also com-

monly referred to as a Z buffer). Though the screen has only two dimensions (width

and height), 3D graphics attempts to simulate a third dimension. The depth buffer

maintains a depth value for every pixel. When 3D primitives are rendered by

OpenGL, they are rasterized into a collection of fragments. Each fragment corre-

sponds to a single pixel to be updated and includes color and depth information.

The X and Y locations corresponding to a fragment determine which pixel in the

frame buffer the fragment is intended to update. A fragment's depth (or Z value) is

used to determine how "near" the fragment is to the viewer. When OpenGL's depth

testing mode is properly enabled, the fragment is drawn only if its Z value is

"nearer" than the current Z value for the corresponding pixel in the depth buffer.

When the fragment is drawn into the frame buffer, its Z value replaces the previous

value in the depth buffer. Normally, when the scene starts to be rendered, the entire

depth buffer is cleared to the "farthest" value. As a 3D scene is rendered, the depth

buffer automatically sorts the fragments being drawn so only the nearest fragment

at each pixel location remains. Objects visually behind other objects are automati-

cally eliminated from the scene. The resolution of the depth buffer improves the

accuracy of depth testing; most depth buffers have from 24 to 32 bits of precision

per pixel.
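The depth test itself is simple. The sketch below models it in plain C under the assumptions that smaller Z values are nearer to the viewer and that the buffer is cleared to the farthest value before drawing; the FrameBuffer and Fragment structures are hypothetical stand-ins, not OpenGL data structures.

```c
#include <assert.h>
#include <stddef.h>

/* A fragment: a candidate update for one pixel. */
typedef struct {
    int x, y;             /* which pixel the fragment would update */
    float z;              /* depth; smaller means nearer (assumption) */
    unsigned int color;
} Fragment;

/* A toy frame buffer with one color buffer and one depth buffer. */
typedef struct {
    int width, height;
    float *depth;          /* width * height depth values */
    unsigned int *color;   /* width * height color values */
} FrameBuffer;

/* Reset every depth value to the farthest value before a scene is drawn. */
void clear_depth(FrameBuffer *fb, float farthest)
{
    size_t i, n;

    assert(fb != NULL && fb->depth != NULL);
    n = (size_t)fb->width * (size_t)fb->height;
    for (i = 0; i < n; i++)
        fb->depth[i] = farthest;
}

/* Draw the fragment only if it is nearer than what the pixel already holds.
 * Returns 1 if the pixel was updated and 0 if the fragment was discarded. */
int draw_fragment(FrameBuffer *fb, const Fragment *f)
{
    size_t i;

    assert(fb != NULL && f != NULL);
    if (f->x < 0 || f->x >= fb->width || f->y < 0 || f->y >= fb->height)
        return 0;
    i = (size_t)f->y * (size_t)fb->width + (size_t)f->x;
    if (f->z < fb->depth[i]) {
        fb->depth[i] = f->z;       /* the new Z replaces the previous value */
        fb->color[i] = f->color;
        return 1;
    }
    return 0;
}
```

Fragments can arrive in any order: a far fragment drawn after a near one at the same pixel is simply discarded, which is how the buffer "sorts" the scene without the program sorting its geometry.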

You can see the benefit of using depth buffering in Figure 1.5. It shows two ver-

sions of a scene that displays intersecting 3D gears. In one window, OpenGL's depth

testing is enabled with glEnable(GL_DEPTH_TEST), while in the other window

depth testing is disabled with glDisable(GL_DEPTH_TEST). The window using

depth testing looks as it should, but the one with depth testing disabled shows portions

of a gear that should be behind the second gear. There are also places where

the "back" of a gear is displayed where the "front" should be showing (this is the

reason the lighting looks incorrect).

1.2.5.4 Double Buffering

Double buffering means having two image buffers, one visible front buffer and

another, nonvisible, back buffer. Unlike simple 2D rendering, generating 3D images

may take substantial time. Also, use of the depth, stencil, and accumulation buffers

all means that the image being drawn at any moment may be quite different from

the final image. It would be quite distracting for the viewer to see each scene while it

was "under construction" and would destroy the illusion of a smoothly animated

scene. Double buffering allows one image to be rendered while another is being dis-

played.

OpenGL supports this use of front and back image buffers. The glDrawBuffer

routine can be used to specify which buffer is the target of rendering operations. To

render to the back buffer, a program calls glDrawBuffer(GL_BACK). A window sys-

tem specific routine makes the back buffer visible. For X, that routine is glXSwap-

Buffers.

Double buffering is often achieved by rendering into a nonvisible image buffer in

main memory and then quickly copying the buffer's contents to screen memory. A

better alternative is to build hardware that actually supports two sets of image buff-

ers. Then the cost of a buffer swap can be extremely low, since no data has to be

copied. Instead, the video controller just changes to scanning image pixels out of the

other buffer. This technique is shown in Figure 1.13 and is further discussed in Sec-

tion 6.5.5.

1.2.5.5 Stereo

Stereo is similar to double buffering in that more than one image buffer is supported.

In addition to front and back buffers, left and right buffers are provided. (Typically

stereo and double buffering are combined, requiring four image buffers; this is some-

times called quad-buffering.) Special stereo video hardware can alternate between

scanning out the left and right buffers for every screen refresh. Viewing goggles that

synchronize with the vertical refresh of the screen alternately open and close LCD

shutters so that the left eye sees the left frame and the right eye sees the right frame.

If a program carefully draws the scene twice with slightly different perspectives in the

left and right buffers, the viewer experiences an optical illusion of 3D.

While double buffering is common on graphics workstations, stereo requires spe-

cial hardware and tends to be rather expensive, so most OpenGL implementations

do not support stereo.

1.2.6 Modeling and Viewing

One of the most difficult initial hurdles in learning 3D graphics programming is

how to properly set up a scene and the parameters to define a reasonable view of

that scene. It is very easy to get a blank window because the viewing parameters for

the scene are not properly initialized.

Figure 1.7 Stages of vertex transformation: object coordinates -> modelview matrix -> eye coordinates -> projection matrix -> clip coordinates -> perspective division -> normalized device coordinates.

3D computer graphics uses matrix transformations to orient, view, clip, and map

the model to the screen. OpenGL's various stages in mapping vertices in object

coordinates into pixels in window coordinates are shown in Figure 1.7.

An OpenGL programmer is responsible for loading the modelview and projec-

tion matrices. The modelview matrix determines how the vertices of OpenGL prim-

itives are transformed to eye coordinates. The projection matrix transforms vertices

in eye coordinates to clip coordinates.
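The arithmetic behind these stages can be sketched in a few lines of C. This is a minimal illustration under stated assumptions, not OpenGL's actual implementation: the function names mat4_mul_vec4 and transform_vertex are hypothetical, and the matrices are assumed to be stored in column-major order, the layout OpenGL uses for matrices passed by the application. The sketch stops at normalized device coordinates and omits clipping.

```c
/* Multiply a column-major 4x4 matrix by a 4-component column vector.
 * The out array must not alias v. */
static void mat4_mul_vec4(const double m[16], const double v[4], double out[4])
{
    for (int row = 0; row < 4; row++) {
        out[row] = m[row]     * v[0] + m[4 + row]  * v[1]
                 + m[8 + row] * v[2] + m[12 + row] * v[3];
    }
}

/* Carry one vertex from object coordinates to normalized device
 * coordinates. Returns 1 on success, or 0 if the clip-space w is zero
 * and the perspective division cannot be performed. */
static int transform_vertex(const double modelview[16],
                            const double projection[16],
                            const double object[4],
                            double ndc[3])
{
    double eye[4];
    double clip[4];

    mat4_mul_vec4(modelview, object, eye);   /* object -> eye coordinates */
    mat4_mul_vec4(projection, eye, clip);    /* eye -> clip coordinates */

    if (clip[3] == 0.0)
        return 0;

    /* Perspective division: clip -> normalized device coordinates. */
    ndc[0] = clip[0] / clip[3];
    ndc[1] = clip[1] / clip[3];
    ndc[2] = clip[2] / clip[3];
    return 1;
}
```

For example, with a modelview matrix that translates by -4 along z and a projection matrix whose bottom row makes w equal to the negated eye-space z, the object-space point (1, 2, 0, 1) becomes (1, 2, -4, 1) in eye coordinates, (1, 2, -4, 4) in clip coordinates, and (0.25, 0.5, -1) after perspective division.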

A number of OpenGL routines deal with manipulating these matrices. The

glMatrixMode routine is called with an argument of GL_MODELVIEW or

GL_PROJECTION to specify the matrix to be modified. Then glLoadIdentity may

be called to set the currently modifiable matrix to the identity matrix. Routines such

as glRotatef, glTranslatef, and glScalef may be called to manipulate the

currently modifiable matrix. glLoadMatrixf loads a specific matrix; glMultMatrixf

multiplies the current matrix by some specified matrix and stores the result as the

current matrix. This book will not explain the theory behind modelview and

projection matrices and will not provide much tutorial material on their use. Any

introductory 3D graphics text will cover the topic in detail.

OpenGL provides matrix stacks that, like the attribute stack, allow you to save

and restore the modelview and projection matrices. Use glPushMatrix and

glPopMatrix to save and restore matrices.
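The behavior of a matrix stack can be modeled in plain C. The sketch below is an assumption-laden illustration rather than OpenGL's implementation: the MatrixStack type and all function names are hypothetical, and the depth of 32 is chosen only to match the minimum modelview stack depth OpenGL requires. Pushing duplicates the current matrix, multiplying post-multiplies the current matrix (as glMultMatrixf and glTranslatef do), and popping discards any changes made since the matching push.

```c
#include <string.h>

enum { STACK_DEPTH = 32 }; /* illustrative depth for this model */

/* Hypothetical matrix stack; matrices are column-major 4x4. */
typedef struct {
    double m[STACK_DEPTH][16];
    int top; /* index of the current (topmost) matrix */
} MatrixStack;

/* Replace the current matrix with the identity matrix. */
static void load_identity(MatrixStack *s)
{
    double *c = s->m[s->top];
    memset(c, 0, sizeof s->m[s->top]);
    c[0] = c[5] = c[10] = c[15] = 1.0;
}

/* Start with a single identity matrix on the stack. */
static void stack_init(MatrixStack *s)
{
    s->top = 0;
    load_identity(s);
}

/* current = current * other (post-multiplication). */
static void mult_matrix(MatrixStack *s, const double other[16])
{
    const double *c = s->m[s->top];
    double r[16];

    for (int col = 0; col < 4; col++) {
        for (int row = 0; row < 4; row++) {
            double sum = 0.0;
            for (int k = 0; k < 4; k++)
                sum += c[k * 4 + row] * other[col * 4 + k];
            r[col * 4 + row] = sum;
        }
    }
    memcpy(s->m[s->top], r, sizeof r);
}

/* Post-multiply the current matrix by a translation. */
static void translate(MatrixStack *s, double x, double y, double z)
{
    double t[16] = {1,0,0,0, 0,1,0,0, 0,0,1,0, 0,0,0,1};
    t[12] = x;
    t[13] = y;
    t[14] = z;
    mult_matrix(s, t);
}

/* Save the current matrix by duplicating it. Returns 0 on overflow. */
static int push_matrix(MatrixStack *s)
{
    if (s->top + 1 >= STACK_DEPTH)
        return 0;
    memcpy(s->m[s->top + 1], s->m[s->top], sizeof s->m[0]);
    s->top++;
    return 1;
}

/* Restore the most recently saved matrix. Returns 0 on underflow. */
static int pop_matrix(MatrixStack *s)
{
    if (s->top == 0)
        return 0;
    s->top--;
    return 1;
}
```

A typical pattern is to push before positioning one part of a model, apply that part's transformations, draw it, and then pop so that the next part starts from the same saved matrix.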

OpenGL and its utility library, called GLU (described in Section 1.4), include

several routines for initializing the modelview and projection matrices: glFrustum,

glOrtho, gluOrtho2D, gluPerspective, and so on. To help initialize the model-

view matrix, the gluLookAt routine defines a base viewing transformation. Figure

1.8 shows how changing the projection matrix and the base modelview matrix

affects the way a scene is rendered.
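To make the effect of one of these routines concrete, the following C sketch builds the perspective matrix that the OpenGL reference documentation gives for glFrustum. It is a standalone illustration: the function name frustum_matrix and its parameter names are hypothetical, the result is written in column-major order, and the real glFrustum multiplies the current matrix by this matrix rather than returning it.

```c
/* Fill m (column-major 4x4) with the perspective projection matrix
 * documented for glFrustum. Returns 1 on success, or 0 if the
 * arguments describe a degenerate or invalid viewing volume. */
static int frustum_matrix(double left, double right,
                          double bottom, double top,
                          double near_z, double far_z,
                          double m[16])
{
    if (left == right || bottom == top || near_z == far_z ||
        near_z <= 0.0 || far_z <= 0.0)
        return 0;

    for (int i = 0; i < 16; i++)
        m[i] = 0.0;

    m[0]  = 2.0 * near_z / (right - left);
    m[5]  = 2.0 * near_z / (top - bottom);
    m[8]  = (right + left) / (right - left);
    m[9]  = (top + bottom) / (top - bottom);
    m[10] = -(far_z + near_z) / (far_z - near_z);
    m[11] = -1.0;                       /* clip w becomes -z in eye space */
    m[14] = -2.0 * far_z * near_z / (far_z - near_z);
    return 1;
}
```

With this matrix, a point on the near plane ends up with a normalized device z of -1 and a point on the far plane with a z of 1 after perspective division, which is why choosing sensible near and far distances matters for depth buffer precision.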

The final step in establishing a view of your scene is the viewport transforma-

tion. It determines how the scene will be mapped onto the computer screen. The

Figure 1.10 Example of hierarchical display list usage. The single glCallList(3)

call will generate 17 OpenGL commands.

[Figure: code listing annotated "Create display list 5 to render a square", "Create display list 3", and "Execute display list 3 to render two squares"]

play lists can even call other display lists, allowing hierarchies of display lists as

shown in Figure 1.10. For networked 3D applications, display lists can greatly

minimize the network protocol bandwidth needed and increase performance. The

routines glNewList and glEndList are used to create a display list. A created display

list can be executed using the glCallList routine.

One thing to keep in mind about OpenGL is that the features described above

are not isolated functionalities. Each feature can be combined with others for

advanced effects. For example, lighting, fog, display lists, texture mapping, and

double buffering can all be used simultaneously.

OpenGL has still more capabilities, but their full introduction is beyond the scope

of this chapter. Later chapters, particularly Chapter 5, explain many of OpenGL's

features in more detail and demonstrate their use with sample code.

1.3 GLX: THE GLUE BETWEEN OPENGL AND X

As explained earlier, OpenGL is window system independent. This means that the

basic OpenGL routines do not describe how an OpenGL context is created or how

to create a window for use with OpenGL rendering. Instead, OpenGL leaves the

details of window creation and other window management tasks to the native win-

dow system. This means OpenGL can be implemented for the X Window System,

Windows NT, or any future window system.

Since the OpenGL specification does not describe the way OpenGL integrates

with the window system, a supplemental specification must describe the way

OpenGL integrates with a particular window system. GLX is an extension to the

core X protocol for communicating OpenGL commands to the X server. It also

supports window system specific operations such as creating rendering contexts for

OpenGL rendering and binding those contexts to windows.

You can tell whether a particular X server supports OpenGL by running the

xdpyinfo (X display info) command and looking for GLX in the list of supported

extensions. For example, on Unix systems, you can use the command

xdpyinfo | grep GLX

If GLX is printed, OpenGL is supported.

GLX defines an API that provides the routines (all prefixed by glX) for

interfacing OpenGL with X. GLX also defines a byte-stream protocol for supporting

OpenGL as an X server extension. The GLX protocol allows 3D applications

running on workstations from different vendors to interoperate in the same way as the

X protocol provides network-extensible 2D graphics interoperability. Be aware that

the term GLX is sometimes used to describe the GLX protocol and sometimes the

API. Because the API hides the details of the GLX protocol from the programmer,

most of the time GLX is used to refer to the API.

1.3.1 A Quick Survey of GLX

can support different visuals to describe the different types of windows supported by

the server. When a window is created, a visual is specified that determines the visual

type and frame buffer capabilities of the newly created window. For the core X pro-

tocol, a visual determines how pixel values are mapped to colors on the screen. X

treats a drawable as basically a 2D array of pixels, but OpenGL has a much more

sophisticated view of a drawable's frame buffer capabilities. GLX overloads the core

X notion of a visual by associating additional information about OpenGL's frame

buffer capabilities. In addition to an image buffer, OpenGL can support the types of

ancillary buffers discussed in Section 1.2.5. For example, an OpenGL-capable win-

dow might also have a stencil buffer and a depth buffer. Multiple different frame

buffer configurations can be supported by a single X server by exporting multiple

visuals. The specific OpenGL-capable visuals an X server provides depend on the

OpenGL implementation and the available graphics hardware resources.

All OpenGL implementations for the X Window System must support at least

one RGBA visual. A color index visual is also required if the X server supports

PseudoColor or StaticColor visuals. These required visuals must support a sten-

cil buffer of at least 1 bit and a depth buffer of at least 12 bits. The required RGBA

visual must have an accumulation buffer. The alpha component of the image buffer

is not required for the RGBA visual (but alpha is still used in all rendering

calculations). Many implementations will supply many more than two visuals.

The GLX API supplies two routines, glXGetConfig and glXChooseVisual, to

help programmers select an appropriate visual. Once the appropriate visual is

selected, XCreateWindow creates the window with the selected visual.

GLX supports offscreen rendering to pixmaps. First create a standard X pixmap

of the desired depth, using XCreatePixmap. Then call glXCreateGLXPixmap with

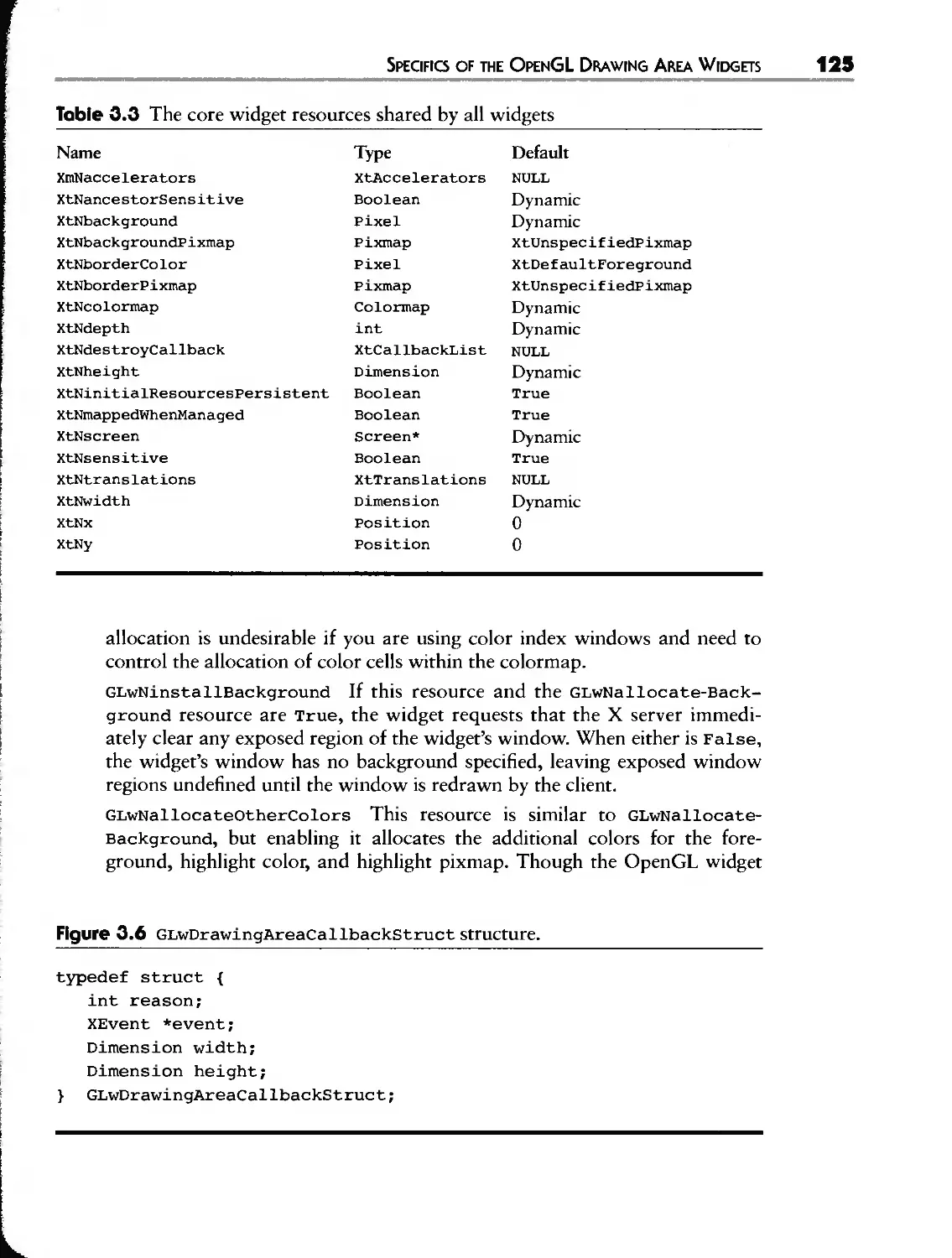

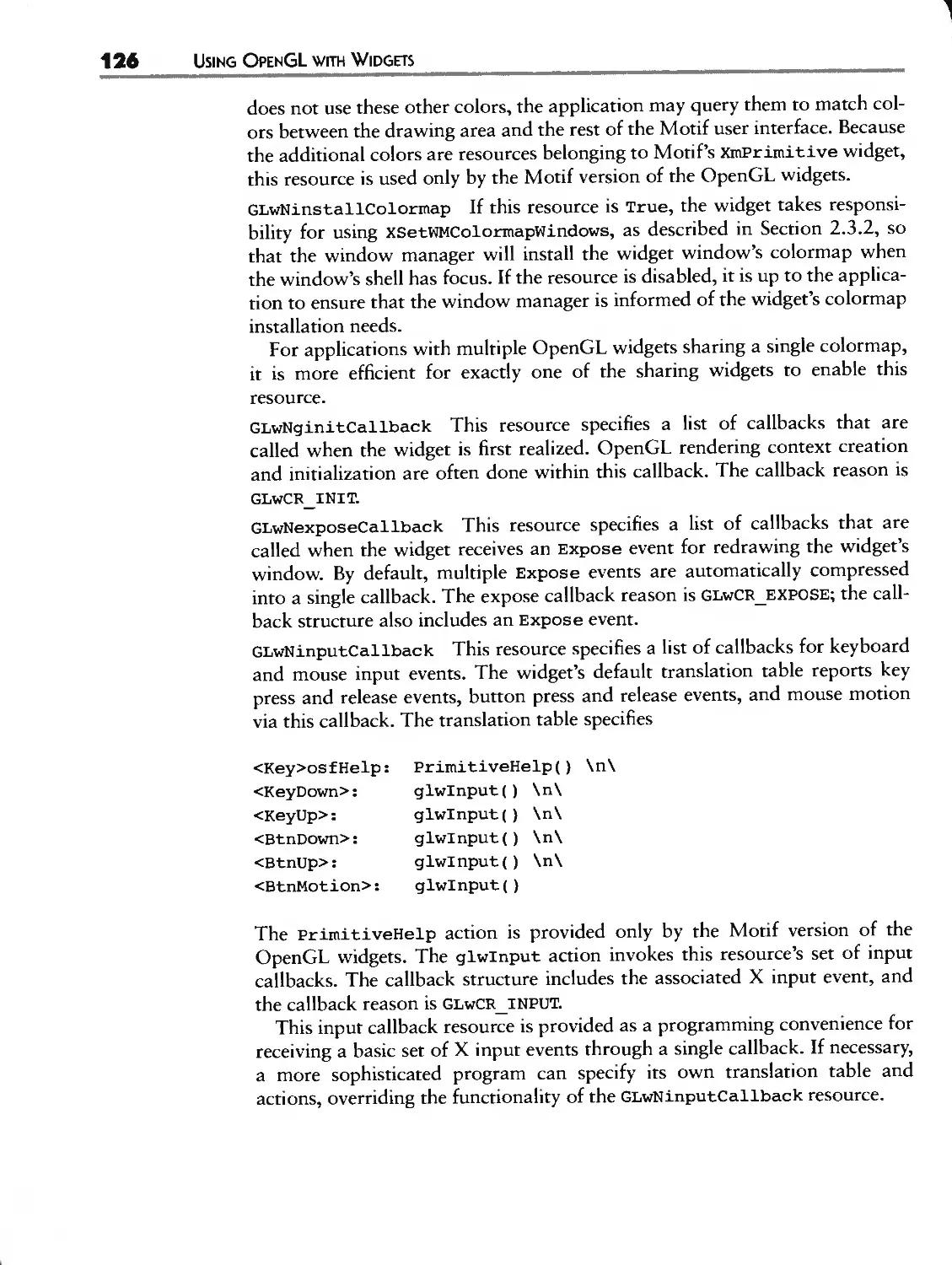

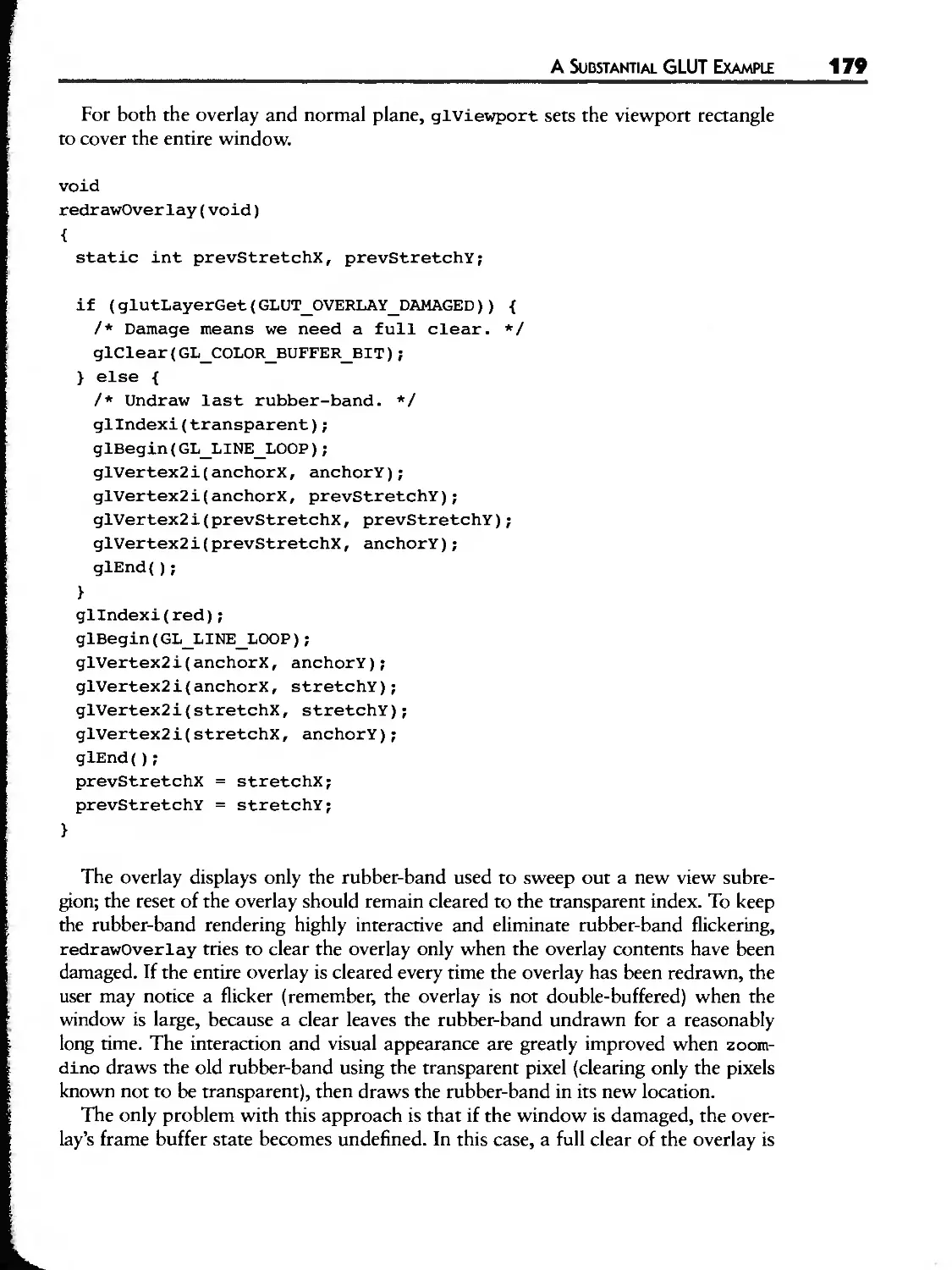

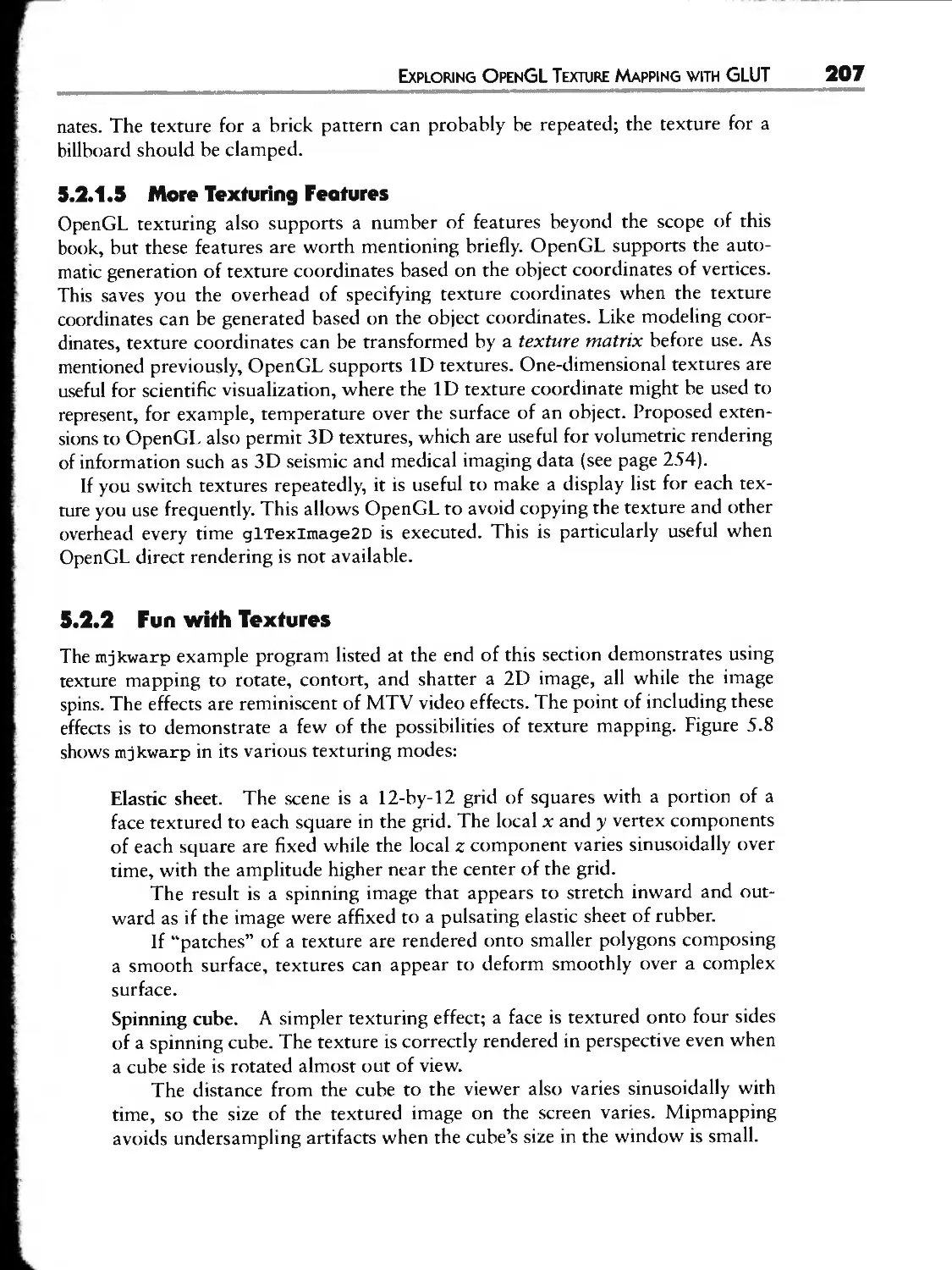

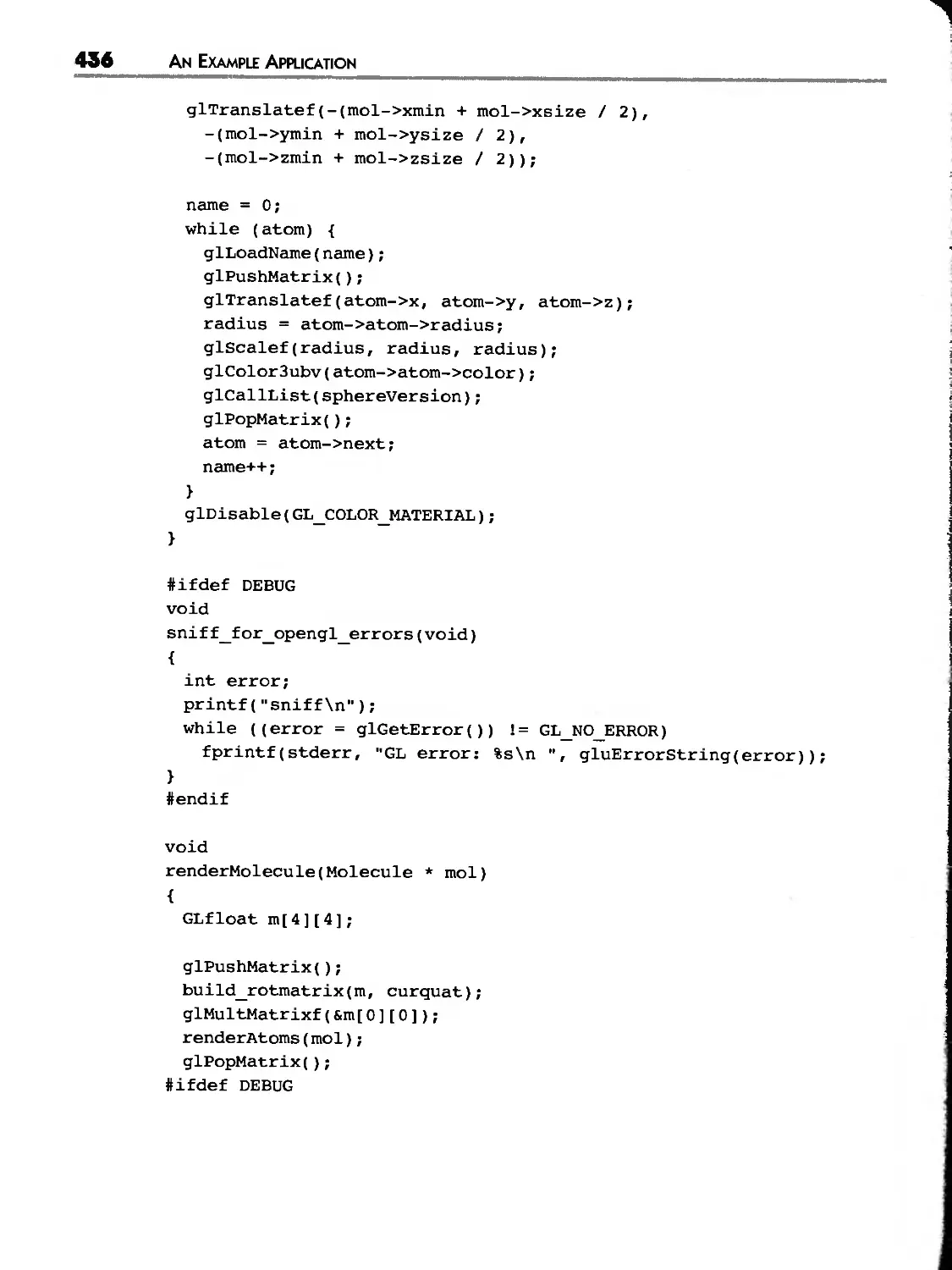

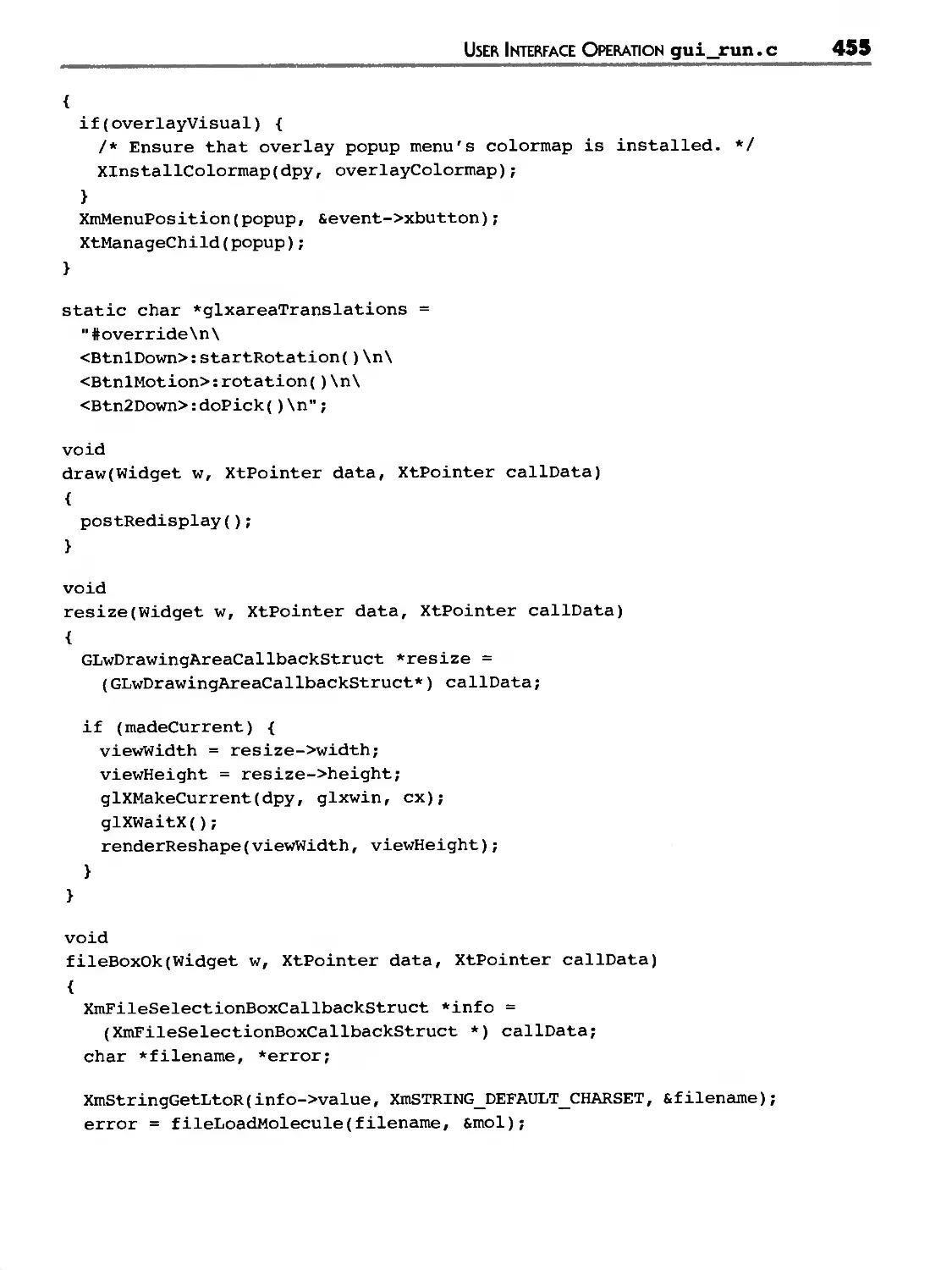

the desired OpenGL visual. A new drawable of type GLXPixmap is returned; it can