Author: Rachev S.T.

Tags: mathematics physics mathematical physics john wiley and sons stochastic models

Year: 1991

Text

Probability Metrics

and the Stability of

Stochastic Models

SVETLOZAR T. RACHEV

Department of Statistics and Applied Probability

University of California

Santa Barbara

USA

JOHN WILEY & SONS

Chichester . New York . Brisbane . Toronto . Singapore

Copyright © 1991 by John Wiley & Sons Ltd.

Baffins Lane, Chichester

West Sussex PO19 1UD, England

All rights reserved.

No part of this book may be reproduced by any means,

or transmitted, or translated into a machine language

without the written permission of the publisher.

Other Wiley Editorial Offices

John Wiley & Sons, Inc., 605 Third Avenue,

New York, NY 10158-0012, USA

Jacaranda Wiley Ltd, G.P.O. Box 859, Brisbane,

Queensland 4001, Australia

John Wiley & Sons (Canada) Ltd, 22 Worcester Road,

Rexdale, Ontario M9W 1L1, Canada

John Wiley & Sons (SEA) Pte Ltd, 37 Jalan Pemimpin 05-04,

Block B, Union Industrial Building, Singapore 2057

Library of Congress Cataloging-in-Publication Data:

Rachev, S. T. (Svetlozar Todorov)

Probability metrics / Svetlozar T. Rachev.

p. cm. — (Wiley series in probability and mathematical

statistics. Applied probability and statistics section)

Includes bibliographical references and index.

ISBN 0 471 92877 1

1. Limit theorems (Probability theory) 2. Metric spaces.

I. Title. II. Series.

QA273.67.R33 1991

519.2—dc20 90-43733

CIP

British Library Cataloguing in Publication Data:

Rachev, Svetlozar T.

Probability metrics.

1. Probabilities & statistical mathematics

I. Title

519.2

ISBN 0 471 92877 1

Typeset by Techset Composition Ltd, Salisbury, UK.

Printed in Great Britain by Courier International, Tiptree, Essex

To my children

Borjana and Vladimir

Contents

Preface xi

PART I GENERAL TOPICS IN THE THEORY OF

PROBABILITY METRICS

Chapter 1 Main Directions in the Theory of Probability Metrics 3

Chapter 2 Probability Distances and Probability Metrics: Definitions 5

2.1 Some examples of metrics in probability theory 5

2.2 Metric and semimetric spaces; distance and semidistance spaces 7

2.3 Definitions of probability distance and probability metric 10

2.4 Universally measurable separable metric spaces 12

2.5 The equivalence of the notions of p. (semi-)distance on $\mathcal{P}_2$ and on $\mathfrak{X}$ 17

Chapter 3 Primary, Simple and Compound p. Distances. Minimal

and Maximal Distances and Norms 21

3.1 Primary distances and primary metrics 21

3.2 Simple distances and metrics; co-minimal functionals and minimal

norms 25

3.3 Compound distances and moment functions 39

Chapter 4 A Structural Classification of the Probability Distances 51

4.1 Hausdorff structure of p. semidistances 51

4.2 $\Lambda$-structure of p. semidistances 68

4.3 $\zeta$-structure of p. semidistances 71

PART II RELATIONS BETWEEN COMPOUND,

SIMPLE AND PRIMARY DISTANCES

Chapter 5 Monge-Kantorovich Mass Transference Problem.

Minimal Distances and Minimal Norms 89

5.1 Statement of Monge-Kantorovich problem 89

5.2 Multi-dimensional Kantorovich theorem 89

5.3 Dual representation of the minimal norms $\mathring{\mu}_c$; a generalization of the

Kantorovich-Rubinstein theorem 107

5.4 Application: explicit representations for a class of minimal norms 115

Chapter 6 Quantitative Relationships between Minimal Distances and

Minimal Norms 119

6.1 Kantorovich metric $\hat{\mu}_c$ is equal to Kantorovich-Rubinstein norm $\mathring{\mu}_c$ if

and only if the cost function c is a metric 119

6.2 Inequalities between $\hat{\mu}_c$, $\mathring{\mu}_c$ 121

6.3 Convergence, compactness and completeness in $(\mathcal{P}(U), \hat{\mu}_c)$ and

$(\mathcal{P}(U), \mathring{\mu}_c)$ 130

6.4 $\hat{\mu}_c$- and $\mathring{\mu}_c$-uniformity 134

6.5 Generalized Kantorovich and Kantorovich-Rubinstein functionals 136

Chapter 7 K-minimal Metrics 141

7.1 Definition; general properties 141

7.2 Two examples of K-minimal metrics 146

7.3 K-minimal metrics of given probability metrics; the case $U = \mathbb{R}$ 147

7.4 The case: U is a separable metric space 155

7.5 Relations between multi-dimensional Kantorovich and Strassen

theorems; convergence of minimal metrics and minimal distances 162

Chapter 8 Relations between Minimal and Maximal Distances 167

8.1 Duality theorems and explicit representations for jlc and % 167

8.2 Convergence of measures with respect to minimal distances and

minimal norms 174

Chapter 9 Moment Problems Related to the Theory of Probability

Metrics. Relations between Compound and Primary

Distances 185

9.1 Primary minimal distances; moment problems with one fixed

pair of marginal moments 185

9.2 Moment problems with two fixed pairs of marginal moments;

moment problems with fixed linear combinations of moments 191

PART III APPLICATIONS OF MINIMAL p. DISTANCES

Chapter 10 Uniformity in Weak and Vague Convergence 201

10.1 $\zeta$-metrics and uniformity classes 201

10.2 Metrization of the vague convergence 208

Chapter 11 Glivenko-Cantelli Theorem and Bernstein-Kantorovich

Invariance Principle 211

11.1 Fortet-Mourier, Varadarajan and Wellner Theorems 211

11.2 Functional central limit theorem and Bernstein-Kantorovich

invariance principle 218

Chapter 12 Stability of Queuing Systems 223

12.1 Stability of $G|G|1|\infty$-system 223

12.2 Stability of $GI|GI|1|\infty$-system 226

12.3 Approximation of a random queue by means of deterministic

queueing models 229

Chapter 13 Optimal Quality Usage 241

13.1 Optimality of quality usage and the Monge-Kantorovich problem 241

13.2 Estimates of the minimal total losses t^((/)*) 248

PART IV IDEAL METRICS

Chapter 14 Ideal Metrics with Respect to Summation Scheme for i.i.d.

Random Variables 257

14.1 Robustness of $\chi^2$-test of exponentiality 257

14.2 Ideal metrics for sums of independent random variables 264

14.3 Rates of convergence in the CLT in terms of metrics with uniform

structure 274

Chapter 15 Ideal Metrics and Rate of Convergence in the CLT for

Random Motions 283

15.1 Ideal metrics in the space of random motions 283

15.2 Rates of convergence in the integral and local CLTs for random

motions 288

Chapter 16 Applications of Ideal Metrics for Sums of i.i.d. Random

Variables to the Problems of Stability and Approximation

in Risk Theory 299

16.1 The problem of stability in risk theory 299

16.2 The problem of continuity 301

16.3 Stability of the input characteristics 307

Chapter 17 How Close are the Individual and Collective Models in

the Risk Theory? 313

17.1 Stop-loss distances as measures of closeness between individual and

collective models 313

17.2 Approximation by compound Poisson distributions 326

Chapter 18 Ideal Metric with Respect to Maxima Scheme of i.i.d.

Random Elements 337

18.1 Rate of convergence of maxima of random vectors via ideal metrics 337

18.2 Ideal metrics for the problem of rate of convergence to max-stable

processes 351

18.3 Doubly ideal metrics 373

Chapter 19 Ideal Metrics and Stability of Characterizations of

Probability Distributions 387

19.1 Characterization of an exponential class of distributions

{Fp, 0 < p < oo} and its stability 389

19.2 Stability in de Finetti's theorem 400

19.3 Characterization and stability of environmental processes 406

Bibliographical Notes 423

References 435

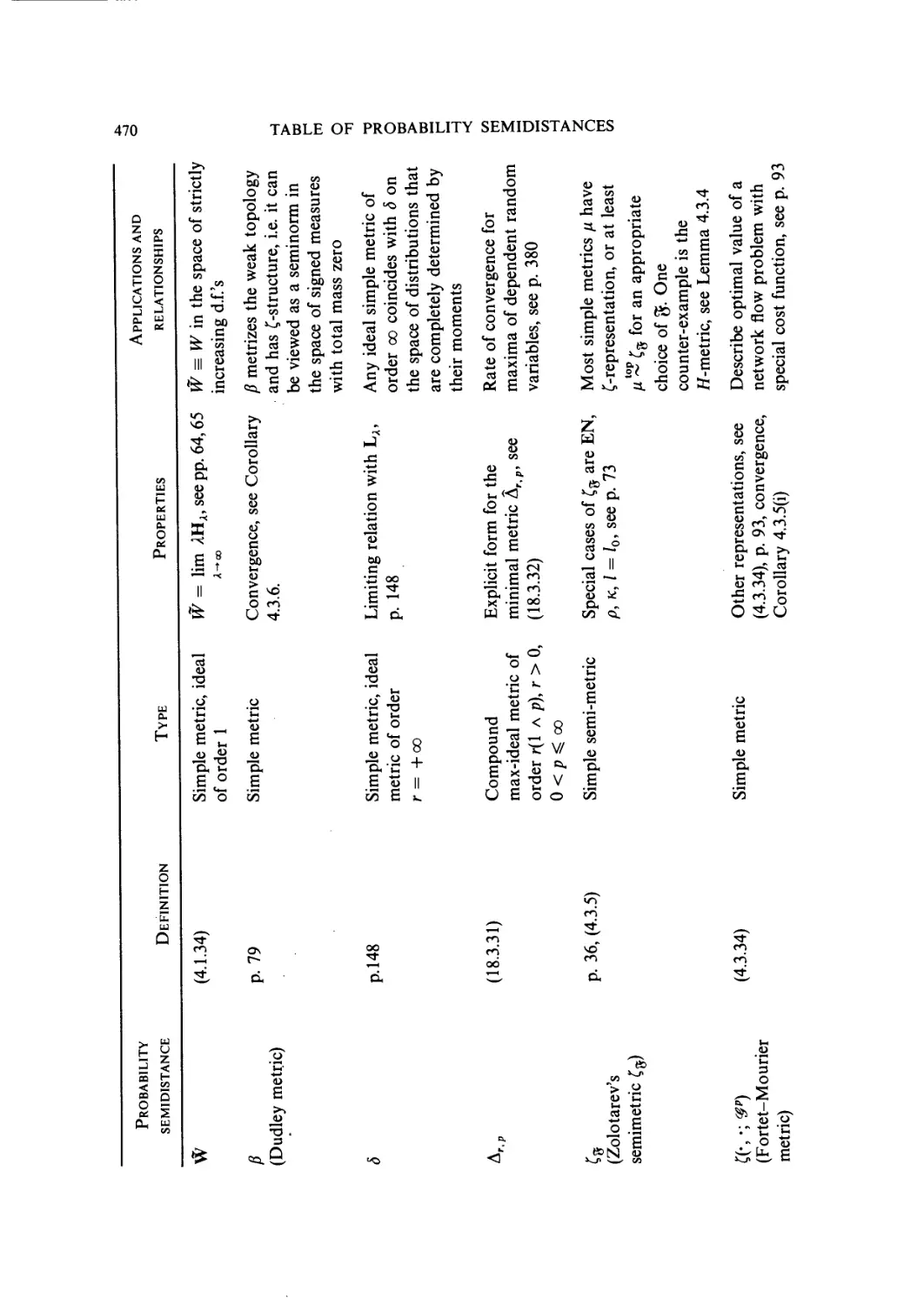

Table of Probability Semidistances 463

Index of Symbols and Abbreviations 479

Index 487

Preface

The study of limit theorems and a number of other questions in probability

theory makes it necessary to introduce functionals, defined either on classes of

probability distributions or on classes of random elements, and evaluating their

nearness in one or another probabilistic sense. Thus various metrics have

appeared, among which are the well known Kolmogorov (uniform) metric, $L_p$

metrics, the Prokhorov metric, the metric of convergence in probability (Ky

Fan metric) and others. The use of metrics in many problems in probability

theory is connected with the following fundamental question:

'Is the proposed stochastic model a satisfactory approximation to the real

model, and if so, within what limits?' To answer this question, an investigation

of the qualitative and quantitative stability of the stochastic model is required.

Analysis of quantitative stability assumes the use of metrics as measures of

comparability. The main idea of the method of metric distances (MMD),

developed by V. M. Zolotarev and his students to solve stability problems, is

reduced to the following two aspects.

Problem 1 (Choice of ideal metrics). Find the most appropriate (ideal) metrics

for the stability problem under consideration. Then, solve the problem in terms

of these ideal metrics.

Problem 2 (Comparison of metrics). If it is required to write the solution of

the stability problem in terms of other metrics, one must solve the problem of

comparison of these metrics with the chosen (ideal) metrics.

Unlike Problem 1, Problem 2 does not depend on the specific stochastic

model under consideration. Thus, the independent solution of the second

problem allows its re-use in any particular situation. In addition, it enables us

to use a variety of metric relationships without making any effort in different

kinds of stability problems. Moreover, following the stated two-stage approach,

we get a clear comprehension of the specific regularities which form the stability

effect.

In probability theory, metrics have been used for a long time, although one

usually exploits a very limited class of metrics. Also, some ideas of the MMD

have been used for a long time in approximation theory and functional analysis.

In view of the variety of stability problems, there are no regular selection rules

determining the 'ideal' metric for the given problem. Therefore, the development

of the MMD demands the creation of a theory of probability metrics (TPM).

The term 'probability metric' means simply a semimetric in a space of

random variables (taking values in some separable metric space). In probability

theory, sample spaces are usually not fixed and one is interested in those metrics

whose values depend on the joint distributions of the pairs of random variables

being considered. Each such metric can be considered just as given by a function

defined on the set of probability measures on the Cartesian square of the sample

space. Complications connected with the question of existence of pairs of

random variables on a given space with given probability laws can be easily

avoided. Although such a function is not a metric on a space of probability

distributions (it is not a function of pairs of measures), small values of it say

that the measure is concentrated near the diagonal. Therefore its marginal

distributions are close to each other. Fixing these marginal distributions, one

can find the infimum of the values of our function on the class of all measures

with the given marginals. Such an infimum is a metric on the class of probability

distributions and in some concrete cases (for example, for the $L_1$ distance in

the space of random variables: Kantorovich's theorem; for the Ky Fan

metric: the Strassen-Dudley theorem; for the indicator metric: Dobrushin's

theorem) such infima were found earlier (giving, respectively, the Kantorovich (or

Wasserstein) metric, the Prokhorov metric and the total variation distance).
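The passage from a compound functional to its infimum over all joint laws with fixed marginals can be illustrated on a toy case. The sketch below is only an illustration under stated assumptions: both marginals are empirical laws of equal-size samples, and only couplings given by permutations are searched (for such uniform empirical laws this restriction does not change the infimum). The function names are hypothetical and not taken from the book.

```python
from itertools import permutations


def l1_cost(xs, ys):
    """E|X - Y| for the coupling that pairs xs[i] with ys[i], each pair having mass 1/n."""
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)


def minimal_l1_brute_force(xs, ys):
    """Smallest E|X - Y| over all permutation couplings of two equal-size samples."""
    return min(l1_cost(xs, perm) for perm in permutations(ys))


def minimal_l1_sorted(xs, ys):
    """E|X - Y| for the coupling that pairs order statistics (the quantile coupling)."""
    return l1_cost(sorted(xs), sorted(ys))


xs = [0.0, 1.0, 4.0, 6.0]
ys = [5.0, 0.5, 2.0, 3.0]

print(l1_cost(xs, ys))                 # one particular coupling of the two marginals
print(minimal_l1_brute_force(xs, ys))  # infimum over permutation couplings
print(minimal_l1_sorted(xs, ys))       # same value, obtained by sorting both samples
```

The first value depends on how the two samples happen to be paired; the last two depend only on the two marginal laws, which is what makes the infimum a functional of pairs of distributions.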

The necessary classification of the set of probability metrics (p. metrics) is

naturally carried out from the point of view of metric structure and generating

topologies. That is why the following two research directions arise.

Direction 1. Description of the basic structures of p. metrics.

Direction 2. Analysis of the topologies in the space of probability measures,

generated by different types of p. metrics. This analysis can be carried out with

the help of convergence criteria for different metrics.

At the same time, more specialized research directions arise. Namely,

Direction 3. Characterization of the ideal metrics for the given problem.

Direction 4. Investigations of the main relationships between different types

of p. metrics.

In this book, all four directions are considered as well as applications to

different problems of probability theory. Much attention is paid to the

possibility of giving equivalent definitions of p. metrics (for example, in direct and

dual terms, in terms of the Hausdorff metric for sets, etc). Indeed, in concrete

applications of p. metrics, the use of different equivalent variants of the

definitions in different steps of the proof is often a decisive factor.

One of the main classes of metrics considered is the class of minimal metrics,

the idea of which goes back to the work of Kantorovich in the 1940s on the

transportation problems in linear programming. Such metrics have been found

independently by many authors in several parts of probability theory (Markov

processes, statistical physics, etc.). They are connected with the widely known

method of 'coupling'. Then it is natural to evaluate the distance between

variables with metrics of the indicated type. Distances for such metrics are hard

to compute, but it is easy to give upper bounds, attained by at least one joint

probability distribution. Another useful class of metrics studied in this book

is the class of 'ideal' metrics satisfying the following properties: (1)

$\mu(P_c, Q_c) \le |c|^r \mu(P, Q)$ for all $c \in [-C, C]$, $c \ne 0$, where $P_c(A) := P((1/c)A)$ for

any Borel set A on a Banach space U, and (2) $\mu(P_1 * Q, P_2 * Q) \le \mu(P_1, P_2)$,

where * denotes the convolution. This class is convenient for the study of

functionals of sums of independent random variables, giving nearest bounds of

the distance to limit distributions.

The presentation here is given in a general form, although specific cases are

considered as they arise in the process of finding supplementary bounds, or in

applications to important special cases.

The MMD given herein is illustrated in some concrete problems. First, there

are problems of the type of the Glivenko-Cantelli theorem on the convergence

of the empirical measures. Originally, this kind of problem was different in view

of the various possible natural modes of convergence and was considered as a

problem needing an ad hoc approach. Here it is shown that from a general

point of view such results turn out to be obvious consequences of the SLLN

and general properties of metrics. Analogously, we considered a generalization

of the Prokhorov theorem on convergence of random polygons to the Wiener

process in the case where one considers the question of convergence of

distributions of unbounded functionals. Also considered are applications to the

rate of convergence for sums and maxima of random variables and convolution

of random motions. Special sections are devoted to application of TPM to the

solution of stability problems in queueing theory, risk theory, quality usage,

and others.

I would like to thank a number of people who have directly contributed to

this project. V. M. Zolotarev introduced me to the subject of theory of

probability metrics and stability of stochastic models. He has been a constant

source of intellectual guidance and inspiration. R. M. Shortt, L. Ruschendorf,

L. de Haan, J. Yukich, E. Omey, L. Baxter, P. Todorovich, M. Taksar and J.

Beirlant worked with me on papers which formed the foundation of this book.

R. M. Dudley's lecture notes from Aarhus University have been a rich source

of ideas. In addition, I want to thank H. Robbins, S. Cambanis, G. Simons, H.

Kellerer, S. Resnick and G. Samorodnitski for many helpful discussions during

the last three years. My warm thanks and appreciation go to Ruth Bahr,

Michelle Bebb and Lee Trimble for their expert typing of this manuscript.

Finally, I must thank the editorial and production department of Wiley for their

support, patience and superb final product.

While trying to correct all the mistakes in my manuscript, I realize that all

of them have not been found. I will be very happy to learn of any mistakes

found by the reader together with any comments one might have.†

Svetlozar T. Rachev

† Research supported by NATO Scientific Affairs Division Grant CRG 900798 and Grant from

UC Regents, University of California, Santa Barbara.

PART I

General Topics

in the Theory of

Probability Metrics

CHAPTER 1

Main Directions in the Theory of

Probability Metrics

In the period of the formation of probability theory as a mathematical science,

the first limit theorems (the Law of Large Numbers, De Moivre and Laplace

Central Limit Theorems) were obtained (cf. Feller 1970, Chap. VI, 4, and Chap.

VII, 3). In applications of these limit theorems, a given probability distribution

is often approximated by some limiting distribution. In such cases, the

convergence rate problem arises. As mentioned in the Preface, the convergence rate

problem requires the concept of a metric as a measure of comparability.

Even in the case of probability distributions on the real line, several different

types of metrics (Levy metric, Kolmogorov metric, Lp-metric) are often used

to estimate the closeness between distributions. Since the early thirties, the

demands of various applications resulted in the creation of new, more

complicated probability models. Both the theory of random processes and the theory

of distributions on functional spaces were extensively developed. In connection

with limit theorems in general spaces, Fortet and Mourier (1953), Prokhorov

(1956), Kantorovich and Rubinstein (1958), and Dudley (1966a) suggested a

series of new metrics on spaces of distributions. Certainly, the study of metric

properties is not confined to probability theory; such investigations have

occurred in other mathematical areas. In fact, some metrics on spaces of

measures (e.g. the Kantorovich-Rubinstein metric, total variation norm, and

Lp-metrics) play an important role in functional analysis (see Dunford and

Schwartz 1988, Kantorovich and Akilov 1984). In probability theory, an even

greater variety of such metrics arises in completely natural ways. This variety

and suitability can be partly explained by the fact that the stochastic problem

under consideration often dictates the choice of an appropriate metric.

Frequently, this choice will determine the investigation's success.

Questions concerning the bounds within which stochastic models can be

applied (as in all probabilistic limit theorems) can only be answered by

investigation of qualitative and quantitative stability. Such stability is

very often convenient to express in terms of a metric. This was the case with

Zolotarev's Method of Metric Distances (MMD) and the Theory of

Probability Metrics (TPM) (see Zolotarev 1976a-d, 1977a,b, 1983a,b, Zolotarev

and Rachev 1985).

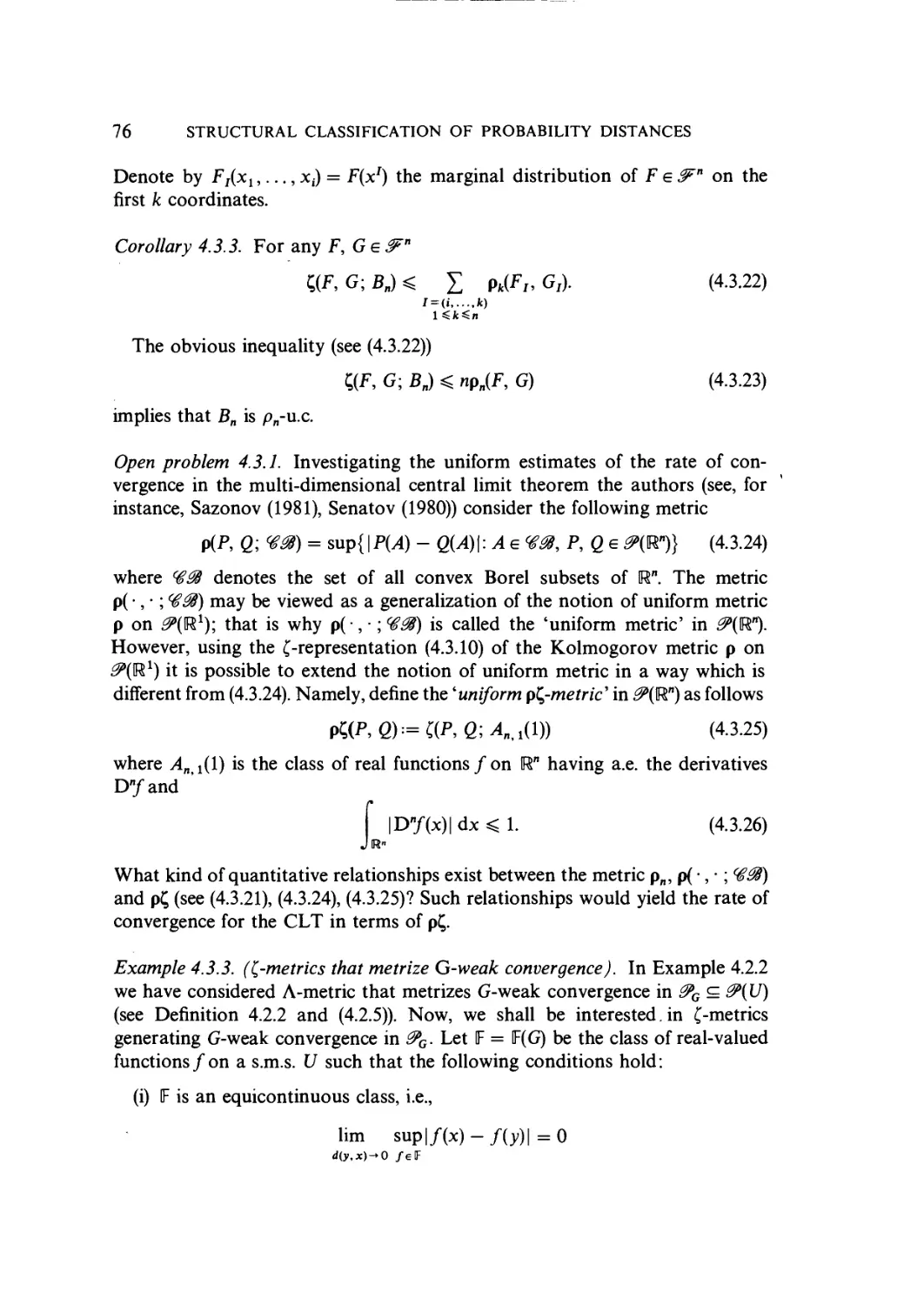

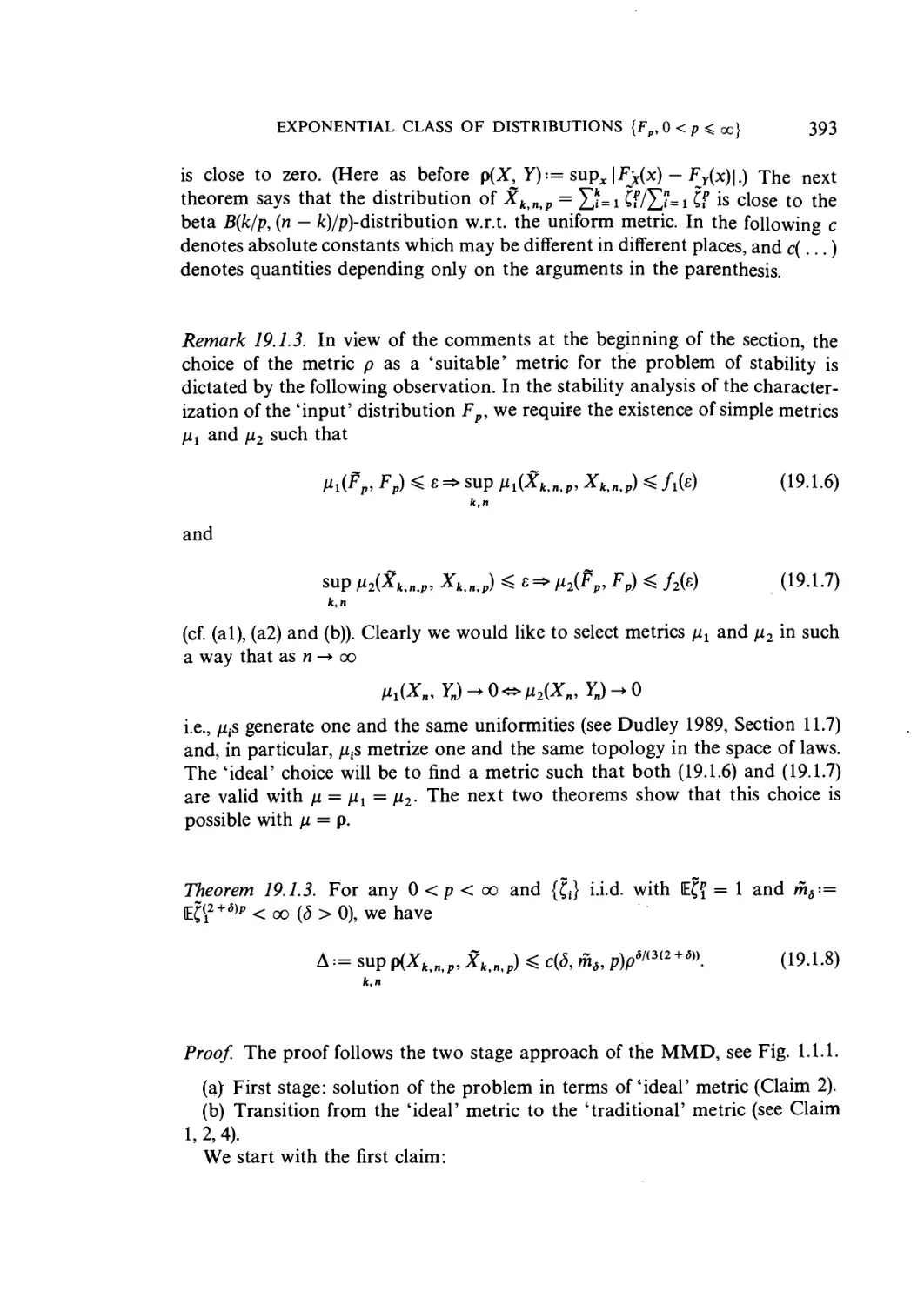

Figure 1.1.1 summarizes the problems concerning MMD and TPM.

THEORY OF PROBABILITY METRICS (TPM)

Classification of p. metrics: description of the basic metric and topological structures of p. metrics.

Ideal metrics problem: characterizations of the 'ideal' (suitable, natural) metrics w.r.t. the specific character of the given approximating problem.

Comparison of metrics: analysis of the metric and topological relationships between different classes of p. metrics.

METHOD OF METRIC DISTANCES (MMD)

First stage of MMD: solutions of the stability problem in terms of appropriate metrics.

Second stage of MMD: transition from the initial appropriate metrics to the metric required (w.r.t. the final solution).

Figure 1.1.1 Theory of the probability metrics as a necessary tool to investigate the method of

metric distances. (From Rachev and Shortt, 1990. Reproduced by permission of the American

Mathematical Society.)

CHAPTER 2

Probability Distances and Probability

Metrics: Definitions

2.1 SOME EXAMPLES OF METRICS IN PROBABILITY THEORY

Below is a list of various metrics commonly found in probability and statistics.

1. The engineer's metric

$$EN(X, Y) := |E(X) - E(Y)|, \quad X, Y \in \mathfrak{X}^1, \tag{2.1.1}$$

where $\mathfrak{X}^p$ is the space of all real-valued random variables (r.v.s) with $E|X|^p < \infty$.

2. The uniform (or Kolmogorov) metric

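$$\boldsymbol{\rho}(X, Y) := \sup\{|F_X(x) - F_Y(x)| : x \in \mathbb{R}\}, \quad X, Y \in \mathfrak{X} = \mathfrak{X}(\mathbb{R}), \tag{2.1.2}$$

where $F_X$ is the distribution function (d.f.) of X, $\mathbb{R} = (-\infty, +\infty)$, and $\mathfrak{X}$ is the

space of all real-valued r.v.s.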

3. The Levy metric

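$$\mathbf{L}(X, Y) := \inf\{\varepsilon > 0 : F_X(x - \varepsilon) - \varepsilon \le F_Y(x) \le F_X(x + \varepsilon) + \varepsilon \ \ \forall x \in \mathbb{R}\}. \tag{2.1.3}$$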

Remark 2.1.1. We see that $\boldsymbol{\rho}$ and $\mathbf{L}$ may actually be considered as metrics on the space of all distribution functions. However, this cannot be done for EN simply because $EN(X, Y) = 0$ does not imply the coincidence of $F_X$ and $F_Y$, while $\boldsymbol{\rho}(X, Y) = 0 \Leftrightarrow \mathbf{L}(X, Y) = 0 \Leftrightarrow F_X = F_Y$. The Lévy metric metrizes weak convergence (convergence in distribution) in the space $\mathcal{F}$ of distribution functions, whereas $\boldsymbol{\rho}$ is often applied in the CLT, cf. Hennequin and Tortrat (1965).
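The point of Remark 2.1.1, that the engineer's metric can vanish for two different laws while the uniform metric cannot, can be seen on a two-point example. The following is a minimal sketch, assuming finitely supported laws stored as dictionaries `{atom: probability}`; the helper names are hypothetical.

```python
def mean(dist):
    """Expectation of a finitely supported law {atom: probability}."""
    return sum(x * p for x, p in dist.items())


def cdf(dist, x):
    """Distribution function F(x) = Pr(X <= x)."""
    return sum(p for atom, p in dist.items() if atom <= x)


def engineer(dist_x, dist_y):
    """Engineer's metric: |E X - E Y|."""
    return abs(mean(dist_x) - mean(dist_y))


def kolmogorov(dist_x, dist_y):
    """Uniform metric sup_x |F_X(x) - F_Y(x)|.

    For finitely supported laws both d.f.s are right-continuous step
    functions, so it is enough to compare them at the atoms.
    """
    atoms = set(dist_x) | set(dist_y)
    return max(abs(cdf(dist_x, a) - cdf(dist_y, a)) for a in atoms)


X = {-1.0: 0.5, 1.0: 0.5}  # symmetric two-point law
Y = {0.0: 1.0}             # point mass at zero

print(engineer(X, Y))    # the means coincide
print(kolmogorov(X, Y))  # the distribution functions do not
```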

4. The Kantorovich metric

$$\kappa(X, Y) = \int_{\mathbb{R}} |F_X(x) - F_Y(x)|\,dx, \quad X, Y \in \mathfrak{X}^1.$$

5. The Lp-metrics between distribution functions

$$\theta_p(X, Y) := \left\{ \int_{-\infty}^{\infty} |F_X(t) - F_Y(t)|^p\,dt \right\}^{1/p}, \quad p \ge 1. \tag{2.1.4}$$

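Remark 2.1.2. Clearly, $\kappa = \theta_1$. Moreover, we can extend the definition of $\theta_p$ when $p = \infty$ by setting $\theta_\infty = \boldsymbol{\rho}$. One reason for this extension is the following dual representation for $1 \le p \le \infty$:

$$\theta_p(X, Y) = \sup_{f \in \mathcal{F}_p} |Ef(X) - Ef(Y)|, \quad X, Y \in \mathfrak{X}^1,$$

where $\mathcal{F}_p$ is the class of all measurable functions f with $\|f\|_q \le 1$. Here, $\|f\|_q$ ($1/p + 1/q = 1$) is defined, as usual, by

$$\|f\|_q := \begin{cases} \left\{ \int_{\mathbb{R}} |f|^q \right\}^{1/q}, & 1 \le q < \infty, \\ \operatorname{ess\,sup} |f|, & q = \infty. \end{cases}$$

(The proof of the above representation is given by Dudley (1989), p. 333, for the case p = 1.)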
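For finitely supported laws the difference of the two distribution functions is a step function, so the integral defining the Lp-metric between d.f.s is a finite sum. The sketch below, with hypothetical helper names and laws stored as `{atom: probability}`, evaluates that sum and shows numerically that the value for p = 1 is the Kantorovich metric and that large p approaches the uniform metric in this example.

```python
def cdf(dist, x):
    """Distribution function F(x) = Pr(X <= x) of a law {atom: probability}."""
    return sum(p for atom, p in dist.items() if atom <= x)


def theta_p(dist_x, dist_y, p):
    """Lp-distance between the d.f.s of two finitely supported laws.

    Between consecutive atoms |F_X - F_Y| is constant, so the integral
    is the sum of (constant ** p) * (interval length).
    """
    atoms = sorted(set(dist_x) | set(dist_y))
    total = 0.0
    for left, right in zip(atoms, atoms[1:]):
        gap = abs(cdf(dist_x, left) - cdf(dist_y, left))
        total += gap ** p * (right - left)
    return total ** (1.0 / p)


X = {0.0: 0.5, 2.0: 0.5}
Y = {1.0: 0.5, 3.0: 0.5}

print(theta_p(X, Y, 1))    # Kantorovich metric
print(theta_p(X, Y, 2))
print(theta_p(X, Y, 200))  # close to the uniform metric, which is 0.5 here
```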

6. The Ky Fan metrics

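$$\mathbf{K}(X, Y) := \inf\{\varepsilon > 0 : \Pr(|X - Y| > \varepsilon) < \varepsilon\} \tag{2.1.5}$$

and

$$\mathbf{K}^*(X, Y) := E\,\frac{|X - Y|}{1 + |X - Y|}. \tag{2.1.6}$$

Both metrics metrize convergence in probability on $\mathfrak{X} = \mathfrak{X}(\mathbb{R})$, the space of real random variables (Lukacs 1968, Chapter 3, Dudley 1976, Theorem 3.5).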
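Both Ky Fan metrics depend on the pair (X, Y) only through the law of |X − Y|, so they can be evaluated from a list of equally likely values of |X − Y|. The sketch below is an illustration under that assumption; the bisection relies on the fact that the condition Pr(|X − Y| > ε) < ε, once true, stays true for larger ε. Function names are hypothetical.

```python
def ky_fan(diffs, iterations=60):
    """inf{eps > 0 : Pr(|X - Y| > eps) < eps} for equally likely values of |X - Y|."""
    n = len(diffs)

    def tail(eps):
        return sum(1 for d in diffs if d > eps) / n

    lo, hi = 0.0, 1.0  # the condition holds for every eps > 1, so the infimum is at most 1
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if tail(mid) < mid:
            hi = mid   # condition holds at mid: the infimum is not larger
        else:
            lo = mid   # condition fails at mid: the infimum is not smaller
    return hi


def ky_fan_star(diffs):
    """E[ |X - Y| / (1 + |X - Y|) ] for equally likely values of |X - Y|."""
    return sum(d / (1.0 + d) for d in diffs) / len(diffs)


print(ky_fan([0.3] * 10), ky_fan_star([0.3] * 10))  # |X - Y| = 0.3 almost surely
print(ky_fan([2.0] * 10), ky_fan_star([2.0] * 10))  # large differences: K saturates at 1
print(ky_fan([0.0] * 10), ky_fan_star([0.0] * 10))  # X = Y almost surely
```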

7. The Lp-metric

$$\mathcal{L}_p(X, Y) := \{E|X - Y|^p\}^{1/p}, \quad p \ge 1, \quad X, Y \in \mathfrak{X}^p. \tag{2.1.7}$$

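Remark 2.1.3. Define

$$m^p(X) := \{E|X|^p\}^{1/p}, \quad p \ge 1, \quad X \in \mathfrak{X}^p \tag{2.1.8}$$

and

$$\mathrm{MOM}_p(X, Y) := |m^p(X) - m^p(Y)|, \quad p \ge 1, \quad X, Y \in \mathfrak{X}^p. \tag{2.1.9}$$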

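Then we have, for $X_0, X_1, \ldots$ in $\mathfrak{X}^p$,

$$\mathcal{L}_p(X_n, X_0) \to 0 \iff \mathbf{K}(X_n, X_0) \to 0 \ \text{ and } \ \mathrm{MOM}_p(X_n, X_0) \to 0;$$

see, for example, Lukacs (1968), Chapter 3.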

All of the (semi-)metrics on subsets of $\mathfrak{X}$ mentioned above may be divided into three main groups: primary, simple, and compound (semi-)metrics. A metric $\mu$ is primary if $\mu(X, Y) = 0$ implies that certain moment characteristics of X and Y agree. As examples, we have EN (2.1.1) and $\mathrm{MOM}_p$ (2.1.9). For these metrics

$$\mathrm{MOM}_p(X, Y) = 0 \iff m^p(X) = m^p(Y).$$

A metric $\mu$ is simple if

$$\mu(X, Y) = 0 \iff F_X = F_Y. \tag{2.1.12}$$

Examples are $\boldsymbol{\rho}$ (2.1.2), $\mathbf{L}$ (2.1.3), and $\theta_p$ (2.1.4). The third group, the compound (semi-)metrics, have the property

$$\mu(X, Y) = 0 \iff \Pr(X = Y) = 1. \tag{2.1.13}$$

Some examples are $\mathbf{K}$ (2.1.5), $\mathbf{K}^*$ (2.1.6), and $\mathcal{L}_p$ (2.1.7).
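The difference between the three groups shows up as soon as two random variables have the same distribution without being equal. The sketch below takes X uniform on {−1, 1} and Y = −X, stored as a joint law `{(x, y): probability}`; a primary metric (engineer's) and a simple metric (uniform) vanish, while a compound one (the mean absolute difference) does not. The helper names are hypothetical.

```python
from collections import defaultdict

# Joint law of (X, Y) with X uniform on {-1, 1} and Y = -X.
joint = {(-1.0, 1.0): 0.5, (1.0, -1.0): 0.5}


def marginal(joint, axis):
    """Law of the coordinate with index `axis` (0 for X, 1 for Y)."""
    out = defaultdict(float)
    for point, mass in joint.items():
        out[point[axis]] += mass
    return dict(out)


def cdf(dist, x):
    return sum(p for atom, p in dist.items() if atom <= x)


def engineer(dist_x, dist_y):
    """Primary: depends only on the means."""
    mean_x = sum(x * p for x, p in dist_x.items())
    mean_y = sum(y * p for y, p in dist_y.items())
    return abs(mean_x - mean_y)


def kolmogorov(dist_x, dist_y):
    """Simple: depends only on the two marginal d.f.s."""
    atoms = set(dist_x) | set(dist_y)
    return max(abs(cdf(dist_x, a) - cdf(dist_y, a)) for a in atoms)


def mean_abs_difference(joint):
    """Compound: E|X - Y| depends on the joint law."""
    return sum(abs(x - y) * mass for (x, y), mass in joint.items())


def prob_equal(joint):
    return sum(mass for (x, y), mass in joint.items() if x == y)


law_x, law_y = marginal(joint, 0), marginal(joint, 1)
print(engineer(law_x, law_y), kolmogorov(law_x, law_y))  # both vanish
print(mean_abs_difference(joint), prob_equal(joint))     # X and Y are never equal
```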

Later on, precise definitions of these classes will be given, and a study made

of the relationships between them. Now we shall begin with a common

definition of probability metric which will include the types mentioned above.

2.2 METRIC AND SEMIMETRIC SPACES; DISTANCE AND

SEMIDISTANCE SPACES

First of all, let us recall the notions of metric and semimetric space.

Generalizations of these notions will be needed in TPM.

Definition 2.2.1. A set $S := (S, \rho)$ is said to be a metric space with the metric $\rho$ if $\rho$ is a mapping from the product $S \times S$ to $[0, \infty)$ having the following properties for each $x, y, z \in S$:

(1) Identity property: $\rho(x, y) = 0 \iff x = y$;

(2) Symmetry: $\rho(x, y) = \rho(y, x)$;

(3) Triangle inequality: $\rho(x, y) \le \rho(x, z) + \rho(z, y)$.

Some well known examples of metric space are the following.

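(a) The n-dimensional vector space $\mathbb{R}^n$ endowed with the metric $\rho(x, y) = \|x - y\|_p$, where

$$\|x\|_p := \left( \sum_{i=1}^n |x_i|^p \right)^{\min(1, 1/p)}, \quad x = (x_1, \ldots, x_n) \in \mathbb{R}^n, \quad 0 < p < \infty,$$

$$\|x\|_\infty := \sup_{1 \le i \le n} |x_i|.$$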

(b) The Hausdorff metric between closed sets

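$$r(C_1, C_2) = \max\left\{ \sup_{x_1 \in C_1} \inf_{x_2 \in C_2} \rho(x_1, x_2), \ \sup_{x_2 \in C_2} \inf_{x_1 \in C_1} \rho(x_1, x_2) \right\},$$

where the $C_i$ are closed sets in a bounded metric space $(S, \rho)$ (Hausdorff 1949).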
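For finite sets the suprema and infima in the Hausdorff metric are maxima and minima, so the definition can be evaluated directly. This is a minimal sketch with a hypothetical function name; the underlying metric is passed in as an argument.

```python
def hausdorff(c1, c2, rho):
    """Hausdorff distance between two finite non-empty sets under the metric rho."""
    # Largest distance from a point of c1 to its nearest point of c2 ...
    d12 = max(min(rho(x1, x2) for x2 in c2) for x1 in c1)
    # ... and from a point of c2 to its nearest point of c1.
    d21 = max(min(rho(x1, x2) for x1 in c1) for x2 in c2)
    return max(d12, d21)


def abs_diff(a, b):
    return abs(a - b)


C1 = [0.0, 1.0]
C2 = [0.0, 3.0]

print(hausdorff(C1, C2, abs_diff))  # the point 3.0 is at distance 2.0 from C1
```

The two one-sided terms differ here (1.0 and 2.0), which is why the maximum of both is needed to obtain a symmetric function.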

(c) The H-metric. Let $D(\mathbb{R})$ be the space of all bounded functions $f: \mathbb{R} \to \mathbb{R}$, continuous from the right and having limits from the left, $f(x-) = \lim_{t \uparrow x} f(t)$. For any $f \in D(\mathbb{R})$ define the graph $\Gamma_f$ as the union of the sets $\{(x, y): x \in \mathbb{R}, y = f(x)\}$ and $\{(x, y): x \in \mathbb{R}, y = f(x-)\}$. The H-metric $H(f, g)$ in $D(\mathbb{R})$ is defined by the Hausdorff distance between the corresponding graphs, $H(f, g) := r(\Gamma_f, \Gamma_g)$. Note that in the space $\mathcal{F}(\mathbb{R})$ of distribution functions, H metrizes the same convergence as the Skorokhod metric:

$$s(F, G) = \inf\Big\{ \varepsilon > 0 : \text{there exists a strictly increasing continuous function } \lambda: \mathbb{R} \to \mathbb{R} \text{ such that } \lambda(\mathbb{R}) = \mathbb{R}, \ \sup_{t \in \mathbb{R}} |\lambda(t) - t| < \varepsilon \ \text{ and } \ \sup_{t \in \mathbb{R}} |F(\lambda(t)) - G(t)| < \varepsilon \Big\}.$$

Moreover, H-convergence in $\mathcal{F}$ implies convergence in distribution (the weak convergence). Clearly, $\boldsymbol{\rho}$-convergence (see (2.1.2)) implies H-convergence. (A more detailed analysis of the metric H will be given in Section 4.1.)

If the identity property in Definition 2.2.1 is weakened by changing (1) to

(1*) $x = y \Rightarrow \rho(x, y) = 0$,

then S is said to be a semimetric space (or pseudometric space) and $\rho$ a semimetric (or pseudometric) in S. For example, the Hausdorff metric r is only a semimetric in the space of all Borel subsets of a bounded metric space $(S, \rho)$.

Obviously, in the space of real numbers EN (see (2.1.1)) is the usual uniform metric on the real line $\mathbb{R}$, i.e. $EN(a, b) := |a - b|$, $a, b \in \mathbb{R}$. For $p \ge 0$, define $\mathcal{F}^p$ as the space of all distribution functions F with $\int_{-\infty}^0 F(x)^p\,dx + \int_0^\infty (1 - F(x))^p\,dx < \infty$. The distribution function space $\mathcal{F} = \mathcal{F}^0$ can be considered as a metric space with metrics $\boldsymbol{\rho}$ and $\mathbf{L}$, while $\theta_p$ ($1 \le p < \infty$) is a metric in $\mathcal{F}^p$. The Ky Fan metrics (see (2.1.5), (2.1.6)) [resp. $\mathcal{L}_p$-metric (see (2.1.7))] may be viewed as semimetrics in $\mathfrak{X}$ (resp. $\mathfrak{X}^p$) as well as metrics in the space of all Pr-equivalence classes

$$\widetilde{X} := \{Y \in \mathfrak{X} : \Pr(Y = X) = 1\} \quad \forall X \in \mathfrak{X} \ [\text{resp. } \mathfrak{X}^p]. \tag{2.2.1}$$

EN, $\mathrm{MOM}_p$, $\theta_p$, $\mathcal{L}_p$ can take infinite values in $\mathfrak{X}$, so we shall assume, in the next generalization of the notion of metric, that $\rho$ may take infinite values; at the same time we shall extend also the notion of triangle inequality.

Definition 2.2.2. The set S is called a distance space with distance $\rho$ and parameter $\mathbb{K} = \mathbb{K}_\rho$ if $\rho$ is a function from $S \times S$ to $[0, \infty]$, $\mathbb{K} \ge 1$ and for each $x, y, z \in S$ the identity property (1) and the symmetry property (2) hold as well as the following version of the triangle inequality: (3*) (Triangle inequality with parameter $\mathbb{K}$)

$$\rho(x, y) \le \mathbb{K}[\rho(x, z) + \rho(z, y)]. \tag{2.2.2}$$

If, in addition, the identity property (1) is changed to (1*) then S is called a semidistance space and $\rho$ is called a semidistance (with parameter $\mathbb{K}_\rho$).

Here and in the following we shall distinguish the notions 'metric' and

'distance', using 'metric' only in the case of 'distance with parameter IK = 1,

taking finite or infinite values'.

Remark 2.2.1. It is not difficult to check that each distance p generates a

topology in S with a basis of open sets $B(a, r) := \{x \in S : \rho(x, a) < r\}$, $a \in S$, $r > 0$.

We know, of course, that every metric space is normal and that every

separable metric space has a countable basis. In much the same way, it is easily

shown that the same is true for distance space. Hence, by Urysohn's Metrization

Theorem (Dunford and Schwartz 1988, 1.6.19), every separable distance space

is metrizable.

Actually, distance spaces have been used in functional analysis for a long

time as is seen by the following examples.

Example 2.2.1. Let $\mathcal{H}$ be the class of all nondecreasing continuous functions H from $[0, \infty)$ onto $[0, \infty)$ which vanish at the origin and satisfy Orlicz's condition

$$K_H := \sup_{t > 0} \frac{H(2t)}{H(t)} < \infty. \tag{2.2.3}$$

Then $\tilde{\rho} := H(\rho)$ is a distance in S for each metric $\rho$ in S and $\mathbb{K}_{\tilde{\rho}} = K_H$.
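A quick numerical check of Example 2.2.1 can be made with the illustrative choice H(t) = t², for which H(2t)/H(t) = 4 for every t > 0. The sketch below, with hypothetical names, verifies on random points of the real line that H(|x − y|) satisfies the triangle inequality with parameter 4, and that it fails with parameter 1, so it is a distance but not a metric.

```python
import itertools
import random


def H(t):
    """Illustrative choice H(t) = t**2: nondecreasing, continuous, H(0) = 0."""
    return t * t


K_H = 4.0  # H(2t) / H(t) = 4 for every t > 0


def rho_tilde(x, y):
    """H applied to the usual metric |x - y| on the real line."""
    return H(abs(x - y))


def triangle_holds(points, K, tol=1e-9):
    """Check rho_tilde(x, y) <= K * (rho_tilde(x, z) + rho_tilde(z, y)) on all triples."""
    return all(
        rho_tilde(x, y) <= K * (rho_tilde(x, z) + rho_tilde(z, y)) + tol
        for x, y, z in itertools.product(points, repeat=3)
    )


rng = random.Random(0)
points = [rng.uniform(-10.0, 10.0) for _ in range(30)]

print(triangle_holds(points, K_H))           # holds with parameter K_H
print(triangle_holds([0.0, 1.0, 2.0], 1.0))  # fails with parameter 1
```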

Example 2.2.2. (Birnbaum-Orlicz distance space, Birnbaum and Orlicz (1931), and Dunford and Schwartz (1988), p. 400.)

The Birnbaum-Orlicz space $L^H$ ($H \in \mathcal{H}$) consists of all integrable functions on [0, 1] endowed with Birnbaum-Orlicz distance

$$\rho_H(f_1, f_2) := \int_0^1 H(|f_1(x) - f_2(x)|)\,dx. \tag{2.2.4}$$

Obviously, $\mathbb{K}_{\rho_H} = K_H$.

Example 2.2.3. Similarly to (2.2.4), Kruglov (1973) introduced the following distance in the space of distribution functions

$$\mathrm{Kr}(F, G) = \int \phi(F(x) - G(x))\,dx, \tag{2.2.5}$$

where the function $\phi$ satisfies the following conditions:

(a) $\phi$ is even and strictly increasing on $[0, \infty)$, $\phi(0) = 0$,

(b) for any x and y and some fixed $A \ge 1$

$$\phi(x + y) \le A(\phi(x) + \phi(y)). \tag{2.2.6}$$

Obviously, $\mathbb{K}_{\mathrm{Kr}} = A$.

2.3 DEFINITIONS OF PROBABILITY DISTANCE AND

PROBABILITY METRIC

Let U be a separable metric space (s.m.s.) with metric d, $U^k = U \times \cdots \times U$ (k times) the k-fold Cartesian product of U and $\mathcal{P}_k = \mathcal{P}_k(U)$ the space of all probability measures defined on the $\sigma$-algebra $\mathcal{B}_k = \mathcal{B}_k(U)$ of Borel subsets of $U^k$. We shall use the terms 'probability measure' and 'law' interchangeably. For any set $\{\alpha, \beta, \ldots, \gamma\} \subseteq \{1, 2, \ldots, k\}$ and for any $P \in \mathcal{P}_k$ let us define the marginal of P on the coordinates $\alpha, \beta, \ldots, \gamma$ by $T_{\alpha, \beta, \ldots, \gamma}P$. For example, for any Borel subsets A and B of U, $T_1 P(A) = P(A \times U \times \cdots \times U)$, $T_{1,3} P(A \times B) = P(A \times U \times B \times \cdots \times U)$. Let B be the operator in $U^2$ defined by $B(x, y) := (y, x)$ ($x, y \in U$). All metrics $\mu(X, Y)$ cited in Section 2.1 (see (2.1.1)-(2.1.9)) are completely determined by the joint distributions $\Pr_{X,Y}$ ($\Pr_{X,Y} \in \mathcal{P}_2(\mathbb{R})$) of the random variables $X, Y \in \mathfrak{X}(\mathbb{R})$. In the next definition we shall introduce the notion of probability distance and thus we shall describe the primary, simple, and compound metrics in a uniform way. Moreover, the space where the r.v.s X and Y take values will be extended to U, an arbitrary s.m.s.

Definition 2.3.1. A mapping $\mu$ defined on $\mathcal{P}_2$ and taking values in the extended interval $[0, \infty]$ is said to be a probability semidistance with parameter $\mathbb{K} := \mathbb{K}_\mu \ge 1$ (or briefly, p. semidistance) in $\mathcal{P}_2$, if it possesses the three properties listed below:

(1) ID (Identity Property). If $P \in \mathcal{P}_2$ and $P(\bigcup_{x \in U} \{(x, x)\}) = 1$ then $\mu(P) = 0$.

(2) SYM (Symmetry). If $P \in \mathcal{P}_2$ then $\mu(P \circ B^{-1}) = \mu(P)$.

(3) TI (Triangle Inequality). If $P_{13}, P_{12}, P_{23} \in \mathcal{P}_2$ and there exists a law $Q \in \mathcal{P}_3$ such that the following 'consistency' condition holds:

$$T_{13}Q = P_{13}, \quad T_{12}Q = P_{12}, \quad T_{23}Q = P_{23}, \tag{2.3.1}$$

then

$$\mu(P_{13}) \le \mathbb{K}[\mu(P_{12}) + \mu(P_{23})].$$

If $\mathbb{K} = 1$ then $\mu$ is said to be a probability semimetric. If we strengthen the condition ID to

$\widetilde{\mathrm{ID}}$: If $P \in \mathcal{P}_2$, then

$$P\Big(\bigcup \{(x, x) : x \in U\}\Big) = 1 \iff \mu(P) = 0,$$

then we say that $\mu$ is a probability distance with parameter $\mathbb{K} = \mathbb{K}_\mu \ge 1$ (or briefly, p. distance).
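The three properties of Definition 2.3.1 can be checked by hand on a finite space. The sketch below is an illustration only: laws are finitely supported and stored as `{point: mass}`, and the functional is taken to be the mass a law on U × U puts off the diagonal, a choice made here purely for the example. The three two-dimensional laws are obtained as marginals of a single law Q on U³, so the consistency condition (2.3.1) holds by construction.

```python
from collections import defaultdict


def marginal(law, coords):
    """Marginal of a finitely supported law on the listed (1-based) coordinates."""
    out = defaultdict(float)
    for point, mass in law.items():
        out[tuple(point[i - 1] for i in coords)] += mass
    return dict(out)


def swap(law):
    """Image of a law on U x U under the map (x, y) -> (y, x)."""
    return {(y, x): mass for (x, y), mass in law.items()}


def mu(law):
    """Illustrative functional: mass off the diagonal, P{(x, y): x != y}."""
    return sum(mass for (x, y), mass in law.items() if x != y)


# A law Q on U^3 with U = {"a", "b"}; its two-dimensional marginals are consistent.
Q = {("a", "b", "a"): 0.25, ("a", "a", "b"): 0.25, ("b", "b", "b"): 0.5}
P12, P23, P13 = marginal(Q, (1, 2)), marginal(Q, (2, 3)), marginal(Q, (1, 3))
diagonal = {("a", "a"): 0.5, ("b", "b"): 0.5}

print(mu(diagonal))                # ID: a law carried by the diagonal gets value 0
print(mu(P23), mu(swap(P23)))      # SYM: swapping coordinates does not change the value
print(mu(P13), mu(P12) + mu(P23))  # TI with parameter 1
```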

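Definition 2.3.1 acquires a visual form in terms of random variables, namely, let $\mathfrak{X} := \mathfrak{X}(U)$ be the set of all r.v.s on a given probability space $(\Omega, \mathcal{A}, \Pr)$ taking values in $(U, \mathcal{B}_1)$. By $\mathcal{L}\mathfrak{X}_2 := \mathcal{L}\mathfrak{X}_2(U) := \mathcal{L}\mathfrak{X}_2(U; \Omega, \mathcal{A}, \Pr)$ we denote the space of all joint distributions $\Pr_{X,Y}$ generated by the pairs $X, Y \in \mathfrak{X}$. Since $\mathcal{L}\mathfrak{X}_2 \subseteq \mathcal{P}_2$, the notion of p. (semi-)distance is naturally defined on $\mathcal{L}\mathfrak{X}_2$. Considering $\mu$ on the subset $\mathcal{L}\mathfrak{X}_2$, we shall put

$$\mu(X, Y) := \mu(\Pr\nolimits_{X,Y})$$

and call $\mu$ a p. semidistance on $\mathfrak{X}$. If $\mu$ is a p. distance, then we use the phrase p. distance on $\mathfrak{X}$. Each p. semidistance [resp. distance] $\mu$ on $\mathfrak{X}$ is a semidistance [resp. distance] on $\mathfrak{X}$ in the sense of Definition 2.2.2. Then the relationships ID, $\widetilde{\mathrm{ID}}$, SYM, and TI have simple 'metrical' interpretations:

$\mathrm{ID}^{(*)}$: $\Pr(X = Y) = 1 \Rightarrow \mu(X, Y) = 0$

$\widetilde{\mathrm{ID}}^{(*)}$: $\Pr(X = Y) = 1 \iff \mu(X, Y) = 0$

$\mathrm{SYM}^{(*)}$: $\mu(X, Y) = \mu(Y, X)$

$\mathrm{TI}^{(*)}$: $\mu(X, Z) \le \mathbb{K}[\mu(X, Y) + \mu(Y, Z)]$.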

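Definition 2.3.2. A mapping $\mu: \mathcal{L}\mathfrak{X}_2 \to [0, \infty]$ is said to be a probability semidistance in $\mathfrak{X}$ [resp. distance] with parameter $\mathbb{K} := \mathbb{K}_\mu \ge 1$, if $\mu(X, Y) = \mu(\Pr_{X,Y})$ satisfies the properties $\mathrm{ID}^{(*)}$ [resp. $\widetilde{\mathrm{ID}}^{(*)}$], $\mathrm{SYM}^{(*)}$ and $\mathrm{TI}^{(*)}$ for all r.v.s $X, Y, Z \in \mathfrak{X}(U)$.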

Example 2.3.1. Let $H \in \mathcal{H}$ (see Example 2.2.1) and $(U, d)$ be a s.m.s. Then $\mathcal{L}_H(X, Y) = \mathrm{E}H(d(X, Y))$ is a p. distance in $\mathfrak{X}(U)$. Clearly, $\mathcal{L}_H$ is finite in the subspace of all $X$ with finite moment $\mathrm{E}H(d(X, a))$ for some $a \in U$. Kruglov's distance $\mathrm{Kr}(X, Y) := \mathrm{Kr}(F_X, F_Y)$ is a p. semidistance in $\mathfrak{X}(U)$.

Examples of p. metrics in $\mathfrak{X}(U)$ are the Ky Fan metric

$K(X, Y) := \inf\{\varepsilon > 0: \Pr(d(X, Y) > \varepsilon) < \varepsilon\}, \quad X, Y \in \mathfrak{X}(U)$ (2.3.2)

and the $\mathcal{L}_p$-metrics ($0 \le p \le \infty$)

$\mathcal{L}_p(X, Y) := \{\mathrm{E}d^p(X, Y)\}^{\min(1, 1/p)}, \quad 0 < p < \infty$, (2.3.3)

$\mathcal{L}_\infty(X, Y) := \operatorname{ess\,sup} d(X, Y) := \inf\{\varepsilon > 0: \Pr(d(X, Y) > \varepsilon) = 0\}$ (2.3.4)

$\mathcal{L}_0(X, Y) := \mathrm{E}I\{X \ne Y\} := \Pr(X \ne Y)$. (2.3.5)

The engineer's metric EN, Kolmogorov metric $\rho$, Kantorovich metric $\kappa$, and the Lévy metric $L$ (see Section 2.1) are p. semimetrics in $\mathfrak{X}(U)$.
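The compound metrics (2.3.2)-(2.3.5) can be evaluated directly when the joint law of (X, Y) is the empirical law of finitely many observed pairs. The following is a minimal sketch under that assumption, with U the real line and d(x, y) = |x - y|; the function names `ky_fan` and `l_p` are illustrative and do not come from the text.

```python
def pairwise_distances(xs, ys):
    """Distances d(X, Y) = |X - Y| for paired real-valued observations."""
    return [abs(x - y) for x, y in zip(xs, ys)]


def ky_fan(xs, ys):
    """Ky Fan metric (2.3.2) of the empirical joint law of the pairs."""
    d = sorted(pairwise_distances(xs, ys))
    n = len(d)
    padded = [0.0] + d  # padded[j] = j-th smallest distance, padded[0] = 0
    # At most (n - j) distances exceed any eps >= padded[j], so every
    # eps > max(padded[j], (n - j) / n) satisfies Pr(d > eps) < eps.
    return min(max(padded[j], (n - j) / n) for j in range(n + 1))


def l_p(xs, ys, p):
    """L_p metrics (2.3.3)-(2.3.5); p may be 0, a positive number, or inf."""
    d = pairwise_distances(xs, ys)
    if p == 0:
        return sum(1 for v in d if v != 0) / len(d)  # Pr(X != Y)
    if p == float("inf"):
        return max(d)  # ess sup of d(X, Y) under the empirical law
    moment = sum(v ** p for v in d) / len(d)  # E d^p(X, Y)
    return moment ** min(1.0, 1.0 / p)


xs = [0.0, 0.0, 0.0, 0.0]
ys = [0.0, 0.0, 0.0, 1.0]
print(ky_fan(xs, ys), l_p(xs, ys, 0), l_p(xs, ys, 2), l_p(xs, ys, float("inf")))
```

All four quantities depend on the pairing of the observations, not only on the two marginal samples, which is what makes them compound rather than simple.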

Remark 2.3.1. Unlike Definition 2.3.2, Definition 2.3.1 is free of the choice of the initial probability space, and depends only on the structure of the metric space $U$. The main reason for considering not arbitrary but separable metric spaces $(U, d)$ is that we need the measurability of the metric $d$ in order to connect the metric structure of $U$ with that of $\mathfrak{X}(U)$. In particular, the measurability of $d$ enables us to handle, in a well-defined way, p. metrics such as the Ky Fan metric $K$ and the $\mathcal{L}_p$-metrics. Note that $\mathcal{L}_0$ does not depend on the metric $d$, so one can define $\mathcal{L}_0$ on $\mathfrak{X}(U)$, where $U$ is an arbitrary measurable space, while in (2.3.2)-(2.3.4) we need $d(X, Y)$ to be a random variable. Thus the natural class of spaces appropriate to our investigation is the class of s.m.s.

2.4 UNIVERSALLY MEASURABLE SEPARABLE METRIC SPACES

What follows is an exposition of some basic results regarding universally

measurable separable metric spaces (u.m.s.m.s.). As we shall see, the notion of

u.m.s.m.s. plays an important role in TPM.

Definition 2.4.1. Let $P$ be a Borel probability measure on a metric space $(U, d)$. We say that $P$ is tight if for each $\varepsilon > 0$, there is a compact $K \subseteq U$ with $P(K) \ge 1 - \varepsilon$. See Dudley (1989), Section 11.5.

Definition 2.4.2. A s.m.s. (U, d) is universally measurable (u.m.) if every Borel

probability measure on U is tight.

Definition 2.4.3. A s.m.s. $(U, d)$ is Polish if it is topologically complete (i.e. there is a topologically equivalent metric $e$ such that $(U, e)$ is complete). Here the topological equivalence of $d$ and $e$ simply means that for any $x, x_1, x_2, \ldots$ in $U$

$d(x_n, x) \to 0 \iff e(x_n, x) \to 0$.

Theorem 2.4.1. Every Borel subset of a Polish space is u.m.

Proof. See Billingsley (1968), Theorem 1.4; Cohn (1980), Proposition 8.1.10; and Dudley (1989), p. 391.

Remark 2.4.1. Theorem 2.4.1 provides us with many examples of u.m. spaces,

but does not exhaust this class. The topological characterization of u.m. s.m.s.

is a well known open problem (see Billingsley 1968, Appendix III, p. 234).

In his famous paper on measure theory, Lebesgue (1905) claimed that the

projection of any Borel subset of $\mathbb{R}^2$ onto $\mathbb{R}$ is a Borel set. As noted by Souslin

and his teacher Lusin (1930), this is in fact not true. As a result of the

investigations surrounding this discovery, a theory of such projections (the

so-called 'analytic' or 'Souslin' sets) was developed. Although not a Borel set,

such a projection was shown to be Lebesgue-measurable, in fact u.m. This train

of thought leads to the following definition.

Definition 2.4.4. Let $S$ be a Polish space and suppose that $f$ is a measurable function mapping $S$ onto a separable metric space $U$. In this case, we say that $U$ is analytic.

Theorem 2.4.2. Every analytic s.m.s. is u.m.

Proof. See Cohn (1980), Theorem 8.6.13, p. 294 and Dudley (1989), Theorem 13.2.6.

Example 2.4.1. Let Q be the set of rational numbers with the usual topology.

Since Q is a Borel subset of the Polish space R, then Q is u.m., however, Q is

not itself a Polish space.

Example 2.4.2. In any uncountable Polish space, there are analytic (hence u.m.) non-Borel sets. See Cohn (1980), Corollary 8.2.17 and Dudley (1989), Proposition 13.2.5.

Example 2.4.3. Let $C[0, 1]$ be the space of continuous functions $f: [0, 1] \to \mathbb{R}$ under the uniform norm. Let $E \subseteq C[0, 1]$ be the set of $f$ which fail to be differentiable at some $t \in [0, 1]$. Then a theorem of Mazurkiewicz (1936) says that $E$ is an analytic, non-Borel subset of $C[0, 1]$. In particular, $E$ is u.m.

Recall again the notion of Hausdorff metric $r := r_\rho$ in the space of all subsets of a given metric space $(S, \rho)$:

$r(A, B) = \max\{\sup_{x \in A} \inf_{y \in B} \rho(x, y), \; \sup_{y \in B} \inf_{x \in A} \rho(x, y)\}$

$= \inf\{\varepsilon > 0: A^\varepsilon \supseteq B, \; B^\varepsilon \supseteq A\}$ (2.4.1)

where $A^\varepsilon$ is the open $\varepsilon$-neighborhood of $A$, $A^\varepsilon = \{x: \rho(x, A) < \varepsilon\}$.

As we noticed, in the space $2^S$ of all subsets $A \ne \emptyset$ of $S$, the Hausdorff distance $r$ is actually only a semidistance. However, in the space $\mathcal{C} = \mathcal{C}(S)$ of all closed non-empty subsets, $r$ is a metric (see Definition 2.2.1) that takes on both finite and infinite values, and if $S$ is a bounded set then $r$ is a finite metric on $\mathcal{C}$.
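For finite sets the suprema and infima in (2.4.1) are maxima and minima, so the Hausdorff metric can be computed by direct enumeration. The sketch below assumes finite non-empty subsets and a user-supplied metric `rho`; the function name `hausdorff` is illustrative.

```python
def hausdorff(a, b, rho):
    """Hausdorff distance (2.4.1) between finite non-empty sets a and b."""
    # sup over x in a of the distance from x to the set b
    forward = max(min(rho(x, y) for y in b) for x in a)
    # sup over y in b of the distance from y to the set a
    backward = max(min(rho(x, y) for x in a) for y in b)
    return max(forward, backward)


def usual_metric(x, y):
    """The usual metric on the real line."""
    return abs(x - y)


print(hausdorff([0, 1], [0, 3], usual_metric))
```

Taking the maximum of the two one-sided deviations is what makes r symmetric; either deviation alone can vanish for distinct sets, for example when one set contains the other.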

Theorem 2.4.3. Let $(S, \rho)$ be a metric space, and let $(\mathcal{C}(S), r)$ be the space described above. If $(S, \rho)$ is separable [resp. complete; resp. totally bounded], then $(\mathcal{C}(S), r)$ is separable [resp. complete; resp. totally bounded].

Proof. See Hausdorff (1944), Section 29; Kuratowski (1969), Sections 21 and 23.

Example 2.4.4. Let $S = [0, 1]$ and let $\rho$ be the usual metric on $S$. Let $\mathfrak{M}$ be the set of all finite complex-valued Borel measures $m$ on $S$ such that the Fourier transform

$\hat{m}(t) = \int_0^1 \exp(iut)\, m(du)$

vanishes at $t = \pm\infty$. Let $\mathcal{M}$ be the class of sets $E \in \mathcal{C}(S)$ such that there is some $m \in \mathfrak{M}$ concentrated on $E$. Then $\mathcal{M}$ is an analytic, non-Borel subset of $(\mathcal{C}(S), r_\rho)$, see Kaufman (1984).

We seek a characterization of u.m. s.m.s. in terms of their Borel structure.

Definition 2.4.5. A measurable space $M$ with $\sigma$-algebra $\mathcal{M}$ is standard if there is a topology $\mathcal{T}$ on $M$ such that $(M, \mathcal{T})$ is a compact metric space and the Borel $\sigma$-algebra generated by $\mathcal{T}$ coincides with $\mathcal{M}$.

A s.m.s. is standard if it is a Borel subset of its completion (see Dudley 1989, p. 347). Obviously, every Borel subset of a Polish space is standard.

Definition 2.4.6. Two s.m.s. $U$ and $V$ are called Borel-isomorphic if there is a one-one correspondence $f$ of $U$ onto $V$ such that $B \in \mathcal{B}(U)$ if and only if $f(B) \in \mathcal{B}(V)$.

Theorem 2.4.4. Two standard s.m.s. are Borel-isomorphic if and only if they have the same cardinality.

Proof. See Cohn (1980), Theorem 8.3.6 and Dudley (1989), Theorem 13.1.1.


Theorem 2.4.5. Let $U$ be a separable metric space. The following are equivalent:

(1) $U$ is u.m.

(2) For each Borel probability $m$ on $U$, there is a standard set $S \in \mathcal{B}(U)$ such that $m(S) = 1$.

Proof. 1 $\Rightarrow$ 2: Let $m$ be a law on $U$. Choose compact $K_n \subseteq U$ with $m(K_n) \ge 1 - 1/n$. Put $S = \bigcup_{n \ge 1} K_n$. Then $S$ is $\sigma$-compact, and hence standard. So $m(S) = 1$, as desired.

2 $\Rightarrow$ 1: Let $m$ be a law on $U$. Choose a standard set $S \in \mathcal{B}(U)$ with $m(S) = 1$. Let $\bar{U}$ be the completion of $U$. Then $S$ is Borel in its completion $\bar{S}$, which is closed in $\bar{U}$. Thus, $S$ is Borel in $\bar{U}$. It follows from Theorem 2.4.1 that

$1 = m(S) = \sup\{m(K): K \text{ compact}\}$.

Thus, every law $m$ on $U$ is tight, so that $U$ is u.m. QED

Corollary 2.4.1. Let $(U, d)$ and $(V, e)$ be Borel-isomorphic separable metric spaces. If $(U, d)$ is u.m., then so is $(V, e)$.

Proof. Suppose that $m$ is a law on $V$. Define a law $n$ on $U$ by $n(A) = m(f(A))$ where $f: U \to V$ is a Borel-isomorphism. Since $U$ is u.m. there is a standard set $S \subseteq U$ with $n(S) = 1$. Then $f(S)$ is a standard subset of $V$ with $m(f(S)) = 1$. Thus, by Theorem 2.4.5, $V$ is u.m. QED

The following result, which is in essence due to Blackwell (1956), will be used in an important way later on (cf. the basic theorem of Section 3.2, Theorem 3.2.1).

Theorem 2.4.6. Let $U$ be a u.m. separable metric space and suppose that $\Pr$ is a probability measure on $U$. If $\mathcal{A}$ is a countably generated sub-$\sigma$-algebra of $\mathcal{B}(U)$, then there is a real-valued function $P(B|x)$, $B \in \mathcal{B}(U)$, $x \in U$ such that

(1) for each fixed $B \in \mathcal{B}(U)$, the mapping $x \to P(B|x)$ is an $\mathcal{A}$-measurable function on $U$;

(2) for each fixed $x \in U$, the set function $B \to P(B|x)$ is a law on $U$;

(3) for each $A \in \mathcal{A}$ and $B \in \mathcal{B}(U)$, we have $\int_A P(B|x) \Pr(dx) = \Pr(A \cap B)$;

(4) there is a set $N \in \mathcal{A}$ with $\Pr(N) = 0$ such that $P(B|x) = 1$ whenever $x \in B \in \mathcal{A}$ and $x \in U - N$.

Proof. Choose a sequence $F_1, F_2, \ldots$ of sets in $\mathcal{B}(U)$ which generates $\mathcal{B}(U)$ and is such that a subsequence generates $\mathcal{A}$. We shall prove that there exists a metric $e$ on $U$ such that $(U, d)$ and $(U, e)$ are Borel-isomorphic and for which the sets $F_1, F_2, \ldots$ are clopen, i.e., open and closed.


Claim 1. If $(U, d)$ is a s.m.s. and $A_1, A_2, \ldots$ is a sequence of Borel subsets of $U$, then there is some metric $e$ on $U$ such that

(i) $(U, e)$ is a separable metric space isometric with a closed subset of $\mathbb{R}$;

(ii) $A_1, A_2, \ldots$ are clopen subsets of $(U, e)$;

(iii) $(U, d)$ and $(U, e)$ are Borel-isomorphic (see Definition 2.4.6).

Proof of claim. Let $B_1, B_2, \ldots$ be a countable base for the topology of $(U, d)$. Define sets $C_1, C_2, \ldots$ by $C_{2n-1} = A_n$ and $C_{2n} = B_n$ ($n = 1, 2, \ldots$) and $f: U \to \mathbb{R}$ by $f(x) = \sum_{n=1}^{\infty} 2I_{C_n}(x)/3^n$. Then $f$ is a Borel-isomorphism of $(U, d)$ onto $f(U) \subseteq K$, where $K$ is the Cantor set,

$K := \{\sum_{n=1}^{\infty} a_n/3^n: \; a_n \text{ takes value 0 or 2}\}$.

Define the metric $e$ by $e(x, y) = |f(x) - f(y)|$, so that $(U, e)$ is isometric with $f(U) \subseteq K$. Then $A_n = f^{-1}\{x \in K: x(2n-1) = 2\}$, where $x(k)$ is the $k$th digit in the ternary expansion of $x \in K$. Thus, $A_n$ is clopen in $(U, e)$, as required.
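The coding map in the proof of Claim 1 can be made concrete on a toy example. The sketch below truncates the series for f to finitely many sets and uses exact rational arithmetic; the finite space, the particular sets, and the names `cantor_code` and `ternary_digit` are assumptions made only for illustration.

```python
from fractions import Fraction


def cantor_code(x, sets):
    """Truncated f(x) = sum_n 2 * I_{C_n}(x) / 3**n over the listed sets."""
    return sum(Fraction(2 * (x in c), 3 ** n) for n, c in enumerate(sets, start=1))


def ternary_digit(value, n):
    """n-th ternary digit of a value in [0, 1) with a finite expansion."""
    return int(value * 3 ** n) % 3


# Toy space U = {0, 1, 2, 3} with two coding sets C_1 and C_2.
sets = [{0, 1}, {1, 2}]
codes = {x: cantor_code(x, sets) for x in range(4)}
print(codes)
```

Membership of x in C_n is read off from the n-th ternary digit of f(x), which is why each C_n is the preimage of a clopen piece of the Cantor set under f.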

Now $(U, e)$ is (Corollary 2.4.1) u.m., so there are compact sets $K_1 \subseteq K_2 \subseteq \ldots$ with $\Pr(K_n) \to 1$. Let $\mathcal{G}_1$ and $\mathcal{G}_2$ be the (countable) algebras generated by the sequences $F_1, F_2, \ldots$ and $F_1, F_2, \ldots, K_1, K_2, \ldots$, respectively. Then define $P_1(B|x)$ so that (1) and (3) are satisfied for $B \in \mathcal{G}_2$. Since $\mathcal{G}_2$ is countable, there is some set $N \in \mathcal{A}$ with $\Pr(N) = 0$ and such that for $x \notin N$,

(a) $P_1(\cdot|x)$ is a finitely additive probability on $\mathcal{G}_2$;

(b) $P_1(A|x) = 1$ for $A \in \mathcal{A} \cap \mathcal{G}_2$ and $x \in A$;

(c) $P_1(K_n|x) \to 1$ as $n \to \infty$.

Claim 2. For $x \notin N$, the set function $B \to P_1(B|x)$ is countably additive on $\mathcal{G}_1$.

Proof of claim. Suppose that $H_1, H_2, \ldots$ are disjoint sets in $\mathcal{G}_1$ whose union is $U$. Since the $H_n$ are clopen and the $K_n$ are compact in $(U, e)$, there is, for each $n$, some $M = M(n)$ such that $K_n \subseteq H_1 \cup H_2 \cup \cdots \cup H_M$. Finite additivity of $P_1(\cdot|x)$ on $\mathcal{G}_2$ yields, for $x \notin N$, $P_1(K_n|x) \le \sum_{i=1}^{M} P_1(H_i|x) \le \sum_{i=1}^{\infty} P_1(H_i|x)$. Let $n \to \infty$ and apply (c) to obtain $\sum_{i=1}^{\infty} P_1(H_i|x) = 1$, as required.

In view of the claim, for each $x \notin N$, we define $B \to P(B|x)$ as the unique countably additive extension of $P_1$ from $\mathcal{G}_1$ to $\mathcal{B}(U)$. For $x \in N$, put $P(B|x) = \Pr(B)$. Clearly, (2) holds. Now the class of sets in $\mathcal{B}(U)$ for which (1) and (3) hold is a monotone class containing $\mathcal{G}_1$, and so coincides with $\mathcal{B}(U)$.

Claim 3. Condition (4) holds.

Proof of claim. Suppose that $A \in \mathcal{A}$ and $x \in A - N$. Let $A_0$ be the $\mathcal{A}$-atom containing $x$. Then $A_0 \subseteq A$ and there is a sequence $A_1, A_2, \ldots$ in $\mathcal{G}_1$ such that $A_0 = A_1 \cap A_2 \cap \cdots$. From (b), $P(A_n|x) = 1$ for $n \ge 1$, so that $P(A_0|x) = 1$, as desired. QED

Corollary 2.4.2. Let $U$ and $V$ be u.m. s.m.s. and let $\Pr$ be a law on $U \times V$. Then there is a function $P: \mathcal{B}(V) \times U \to \mathbb{R}$ such that

(1) for each fixed $B \in \mathcal{B}(V)$, the mapping $x \to P(B|x)$ is measurable on $U$;

(2) for each fixed $x \in U$, the set function $B \to P(B|x)$ is a law on $V$;

(3) for each $A \in \mathcal{B}(U)$ and $B \in \mathcal{B}(V)$, we have

$\int_A P(B|x) P_1(dx) = \Pr(A \times B)$

where $P_1$ is the marginal of $\Pr$ on $U$.

Proof. Apply the preceding theorem with $\mathcal{A}$ the $\sigma$-algebra of rectangles $A \times V$ for $A \in \mathcal{B}(U)$. QED

2.5 THE EQUIVALENCE OF THE NOTIONS OF p. (SEMI-)DISTANCE ON $\mathcal{P}_2$ AND ON $\mathfrak{X}$

As we have seen in Section 2.3, every p. (semi-)distance on $\mathcal{P}_2$ induces (by restriction) a p. (semi-)distance on $\mathfrak{X}$. It remains to be seen whether every p. (semi-)distance on $\mathfrak{X}$ arises in this way. This will certainly be the case whenever

$\mathcal{L}\mathfrak{X}_2(U, (\Omega, \mathcal{A}, \Pr)) = \mathcal{P}_2(U)$. (2.5.1)

Note that the left member depends not only on the structure of $(U, d)$ but also on the underlying probability space.

In this section we will prove the following facts.

(i) There is some probability space $(\Omega, \mathcal{A}, \Pr)$ such that (2.5.1) holds for every separable metric space $U$.

(ii) If $U$ is a separable metric space, then (2.5.1) holds for every non-atomic probability space $(\Omega, \mathcal{A}, \Pr)$ if and only if $U$ is universally measurable.

We need a few preliminaries.

Definition 2.5.1 (see Loève 1963, p. 99; Dudley 1989, p. 82). If $(\Omega, \mathcal{A}, \Pr)$ is a probability space, we say that $A \in \mathcal{A}$ is an atom if $\Pr(A) > 0$ and $\Pr(B) = 0$ or $\Pr(A)$ for each measurable $B \subseteq A$. A probability space is non-atomic if it has no atoms.

Lemma 2.5.1 (Berkes and Philipp 1979). Let $\nu$ be a law on a complete s.m.s. $(U, d)$ and suppose that $(\Omega, \mathcal{A}, \Pr)$ is a non-atomic probability space. Then there is a $U$-valued random variable $X$ with distribution $\mathcal{L}(X) = \nu$.


Proof. Denote by $d^*$ the following metric on $U^2$: $d^*(x, y) := d(x_1, x_2) + d(y_1, y_2)$ for $x = (x_1, y_1)$ and $y = (x_2, y_2)$. For each $k$, there is a partition of $U^2$ comprising non-empty Borel sets $\{A_{ik}: i = 1, 2, \ldots\}$ with $\operatorname{diam}(A_{ik}) < 1/k$ and such that $A_{ik}$ is a subset of some $A_{j,k-1}$.

Since $(\Omega, \mathcal{A}, \Pr)$ is non-atomic, we see that for each $\mathcal{C} \in \mathcal{A}$ and for each sequence $p_i$ of non-negative numbers such that $p_1 + p_2 + \cdots = \Pr(\mathcal{C})$, there exists a partitioning $\mathcal{C}_1, \mathcal{C}_2, \ldots$ of $\mathcal{C}$ such that $\Pr(\mathcal{C}_i) = p_i$, $i = 1, 2, \ldots$ (see e.g. Loève 1963, p. 99).

Therefore, there exist partitions $\{B_{ik}: i = 1, 2, \ldots\} \subseteq \mathcal{A}$, $k = 1, 2, \ldots$ such that $B_{ik} \subseteq B_{j,k-1}$ for some $j = j(i)$ and $\Pr(B_{ik}) = \nu(A_{ik})$ for all $i, k$. For each pair $(i, k)$, let us pick a point $x_{ik} \in A_{ik}$ and define the $U^2$-valued $X_k(\omega) = x_{ik}$ for $\omega \in B_{ik}$. Then $d^*(X_{k+m}(\omega), X_k(\omega)) < 1/k$, $m = 1, 2, \ldots$, and since $(U^2, d^*)$ is a complete space, there exists the limit $X(\omega) = \lim_{k \to \infty} X_k(\omega)$. Thus

$d^*(X(\omega), X_k(\omega)) \le \lim_{m \to \infty} [d^*(X_{k+m}(\omega), X(\omega)) + d^*(X_{k+m}(\omega), X_k(\omega))] \le 1/k$.

Let $P_k := \Pr_{X_k}$ and $P^* := \Pr_X$. Further, our aim is to show that $P^* = \nu$. For each closed subset $A \subseteq U^2$

$P_k(A) = \Pr(X_k \in A) \le \Pr(X \in A^{1/k}) = P^*(A^{1/k}) \le P_k(A^{2/k})$ (2.5.2)

where $A^{1/k}$ is the open $1/k$-neighborhood of $A$. On the other hand,

$P_k(A) = \sum\{P_k(x_{ik}): x_{ik} \in A\} = \sum\{\Pr(B_{ik}): x_{ik} \in A\}$

$= \sum\{\nu(A_{ik}): x_{ik} \in A\} \le \sum\{\nu(A_{ik} \cap A^{1/k}): x_{ik} \in A\}$

$\le \nu(A^{1/k}) \le \sum\{\nu(A_{ik}): x_{ik} \in A^{2/k}\} \le P_k(A^{2/k})$. (2.5.3)

Further, we can estimate the value $P_k(A^{2/k})$ in the same way as in (2.5.2) and (2.5.3) and thus, we get the inequalities

$P^*(A^{1/k}) \le P_k(A^{2/k}) \le P^*(A^{3/k})$ (2.5.4)

$\nu(A^{1/k}) \le P_k(A^{2/k}) \le \nu(A^{3/k})$. (2.5.5)

Since $\nu(A^{1/k})$ tends to $\nu(A)$ as $k \to \infty$ for each closed set $A$, and analogously $P^*(A^{1/k}) \to P^*(A)$ as $k \to \infty$, then by (2.5.4) and (2.5.5) we obtain the equalities

$P^*(A) = \lim_{k \to \infty} P_k(A^{2/k}) = \nu(A)$

for each closed $A$ and hence, $P^* = \nu$. QED

Theorem 2.5.1. There is a probability space $(\Omega, \mathcal{A}, \Pr)$ such that for every separable metric space $U$ and every Borel probability $\mu$ on $U$, there is a random variable $X: \Omega \to U$ with $\mathcal{L}(X) = \mu$.

Proof. Define $(\Omega, \mathcal{A}, \Pr)$ as the measure-theoretic (von Neumann) product (see Hewitt and Stromberg (1965), Theorems 22.7 and 22.8, pp. 432-433) of the probability spaces $(C, \mathcal{B}(C), \nu)$, where $C$ is some non-empty subset of $\mathbb{R}$ with Borel $\sigma$-algebra $\mathcal{B}(C)$, and $\nu$ is some Borel probability on $(C, \mathcal{B}(C))$.

Now, given a separable metric space $U$, there is some set $C \subseteq \mathbb{R}$ Borel-isomorphic with $U$ (cf. Claim 1 in Theorem 2.4.6). Let $f: C \to U$ supply the isomorphism. If $\mu$ is a Borel probability on $U$, let $\nu$ be a probability on $C$ such that $f(\nu) := \nu f^{-1} = \mu$. Define $X: \Omega \to U$ as $X = f \circ \pi$, where $\pi: \Omega \to C$ is a projection onto the factor $(C, \mathcal{B}(C), \nu)$. Then $\mathcal{L}(X) = \mu$, as desired.

Remark 2.5.1. The result above establishes the claim (i) made at the beginning of the section. It provides one way of ensuring (2.5.1): simply insist that all r.v.s be defined on a 'super-probability space' as in Theorem 2.5.1. We make this assumption throughout the sequel.

The next theorem extends the Berkes and Philipp lemma (Lemma 2.5.1) to the case of u.m. s.m.s. $U$.

Theorem 2.5.2. Let $U$ be a separable metric space. The following are equivalent.

(1) $U$ is u.m.

(2) If $(\Omega, \mathcal{A}, \Pr)$ is a non-atomic probability space, then for every Borel probability $P$ on $U$, there is a random variable $X: \Omega \to U$ with law $\mathcal{L}(X) = P$.

Proof. 1 $\Rightarrow$ 2: Since $U$ is u.m. there is some standard set $S \in \mathcal{B}(U)$ with $P(S) = 1$ (Theorem 2.4.5). Now there is a Borel-isomorphism $f$ mapping $S$ onto a Borel subset $B$ of $\mathbb{R}$ (Theorem 2.4.4). Then $f(P) := P \circ f^{-1}$ is a Borel probability on $\mathbb{R}$. Thus, there is a random variable $g: \Omega \to \mathbb{R}$ with $\mathcal{L}(g) = f(P)$ (Lemma 2.5.1 with $(U, d) = (\mathbb{R}, |\cdot|)$). We may assume that $g(\Omega) \subseteq B$ since $\Pr(g^{-1}(B)) = 1$. Define $X: \Omega \to U$ by $X(\omega) = f^{-1}(g(\omega))$. Then $\mathcal{L}(X) = P$, as claimed.

2 $\Rightarrow$ 1: Now suppose that $\nu$ is a Borel probability on $U$. Consider a random variable $X$ with values in $U$, defined on the (non-atomic) probability space $((0, 1), \mathcal{B}(0, 1), \lambda)$, with $\mathcal{L}(X) = \nu$. Then $\operatorname{range}(X)$ is an analytic subset of $U$ with $\nu^*(\operatorname{range}(X)) = 1$. Since $\operatorname{range}(X)$ is u.m. (Theorem 2.4.2), there is some standard set $S \subseteq \operatorname{range}(X)$ with $\nu(S) = 1$. This follows from Theorem 2.4.5. The same theorem shows that $U$ is u.m. QED

Remark 2.5.2. If $U$ is a u.m. s.m.s., we operate under the assumption that all $U$-valued r.v.s are defined on a non-atomic probability space. Then (2.5.1) will be valid.

CHAPTER 3

Primary, Simple and Compound

p. Distances. Minimal and Maximal

Distances and Norms

In this chapter we shall give a more detailed analysis of the notions of p. distance and p. metric in order to provide the first (crude) classification of p. semidistances.

3.1 PRIMARY DISTANCES AND PRIMARY METRICS

Let $h: \mathcal{P}_1 \to \mathbb{R}^J$ be a mapping, where $\mathcal{P}_1 = \mathcal{P}(U)$ is the set of Borel probability measures (laws) for some s.m.s. $(U, d)$ and $J$ is some index set. This function $h$ induces a partition of $\mathcal{P}_2 = \mathcal{P}_2(U)$ (the set of laws on $U^2$) into equivalence classes for the relation

$P \sim Q \iff h(P_1) = h(Q_1) \text{ and } h(P_2) = h(Q_2), \quad P_i := T_iP, \; Q_i := T_iQ$ (3.1.1)

where $P_i$ and $Q_i$ ($i = 1, 2$) are the $i$th marginals of $P$ and $Q$, respectively. Let $\mu$ be a p. semidistance on $\mathcal{P}_2$ with parameter $\mathbb{K}_\mu$ (Definition 2.3.1), such that $\mu$ is constant on the equivalence classes of $\sim$, i.e.

$P \sim Q \iff \mu(P) = \mu(Q)$. (3.1.2)

Definition 3.1.1. If the p. semidistance $\mu = \mu_h$ satisfies Relation (3.1.2), then we call $\mu$ a primary distance (with parameter $\mathbb{K}_\mu$). If $\mathbb{K}_\mu = 1$ and $\mu$ assumes only finite values, we say that $\mu$ is a primary metric.

Obviously, by Relation (3.1.2), any primary distance is completely determined by the pair of marginal characteristics $(hP_1, hP_2)$. In the case of a primary distance $\mu$ we shall write $\mu(hP_1, hP_2) := \mu(P)$, and hence $\mu$ may be viewed as a distance in the image space $h(\mathcal{P}_1) \subseteq \mathbb{R}^J$, i.e., the following metric properties hold:

ID$^{(1)}$: $hP_1 = hP_2 \iff \mu(hP_1, hP_2) = 0$

SYM$^{(1)}$: $\mu(hP_1, hP_2) = \mu(hP_2, hP_1)$

TI$^{(1)}$: If the following marginal conditions are fulfilled

$a = h(T_1P^{(1)}) = h(T_1P^{(2)}), \quad b = h(T_2P^{(2)}) = h(T_1P^{(3)}), \quad c = h(T_2P^{(1)}) = h(T_2P^{(3)})$

for some laws $P^{(1)}, P^{(2)}, P^{(3)} \in \mathcal{P}_2$, then $\mu(a, c) \le \mathbb{K}_\mu[\mu(a, b) + \mu(b, c)]$.

The notion of primary semidistance $\mu_h$ becomes easier to interpret assuming that a probability space $(\Omega, \mathcal{A}, \Pr)$ with property (2.5.1) is fixed (see Remark 2.5.1). In this case $\mu_h$ is a usual distance (see Definition 2.2.1) in the space

$h(\mathfrak{X}) := \{hX := h\Pr_X, \text{ where } X \in \mathfrak{X}(U)\}$ (3.1.3)

and thus, the metric properties of $\mu := \mu_h$ take the simplest form (cf. Definition 2.2.2):

ID$^{(1*)}$: $hX = hY \iff \mu(hX, hY) = 0$,

SYM$^{(2*)}$: $\mu(hX, hY) = \mu(hY, hX)$,

TI$^{(3*)}$: $\mu(hX, hZ) \le \mathbb{K}_\mu[\mu(hX, hY) + \mu(hY, hZ)]$.

Further, we shall consider several examples of primary distances and metrics.

Example 3.1.1. Primary minimal distances. Each p. semidistance $\mu$ and each mapping $h: \mathcal{P}_1 \to \mathbb{R}^J$ determine a functional $\tilde\mu_h: h(\mathcal{P}_1) \times h(\mathcal{P}_1) \to [0, \infty]$ defined by the following equality

$\tilde\mu_h(a_1, a_2) := \inf\{\mu(P): hP_i = a_i, \; i = 1, 2\}$ (3.1.4)

(where $P_i$ are the marginals of $P$) for any pair $(a_1, a_2) \in h(\mathcal{P}_1) \times h(\mathcal{P}_1)$.

Further, we shall prove (see Chapter 5) that $\tilde\mu_h$ is a primary distance for different special functions $h$ and spaces $U$.

Definition 3.1.2. The functional $\tilde\mu_h$ is called a primary $h$-minimal distance with respect to the p. semidistance $\mu$.

Open problem 3.1.1. In general it is not true that the metric properties of a p. distance $\mu$ imply that $\tilde\mu_h$ is a distance. The following two examples illustrate this fact (see further Chapter 9):

(a) Let $U = \mathbb{R}$, $d(x, y) = |x - y|$. Consider the p. metric

$\mu(X, Y) = \mathcal{L}_0(X, Y) = \Pr(X \ne Y), \quad X, Y \in \mathfrak{X}(\mathbb{R})$

and the mapping $h: \mathfrak{X}(\mathbb{R}) \to [0, \infty]$ given by $hX = \mathrm{E}|X|$. Then (see further Section 9.1)

$\tilde\mu_h(a, b) = \inf\{\Pr(X \ne Y): \mathrm{E}|X| = a, \; \mathrm{E}|Y| = b\} = 0$

for all $a \ge 0$ and $b \ge 0$. Hence in this case the metric properties of $\mu$ imply only semimetric properties for $\tilde\mu_h$.

(b) Now let $\mu$ be defined as in (a) but let $h: \mathfrak{X}(\mathbb{R}) \to [0, \infty] \times [0, \infty]$ be defined by $hX = (\mathrm{E}|X|, \mathrm{E}X^2)$. Then

$\tilde\mu_h((a_1, a_2), (b_1, b_2)) = \inf\{\Pr(X \ne Y): \mathrm{E}|X| = a_1, \; \mathrm{E}X^2 = a_2, \; \mathrm{E}|Y| = b_1, \; \mathrm{E}Y^2 = b_2\}$ (3.1.5)

where $\tilde\mu_h$ is not even a p. semidistance since the triangle inequality TI$^{(3*)}$ is not valid.
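The vanishing infimum in example (a) can be checked with an explicit two-atom coupling: both variables equal 0 except on an event of small probability, where they take large values that restore the prescribed first absolute moments. The sketch below is one such construction, offered as an illustration rather than as the argument of Section 9.1; the names `coupling_with_moments` and `summarize` are hypothetical.

```python
from fractions import Fraction


def coupling_with_moments(a, b, eps):
    """Joint law of (X, Y) as (probability, x, y) atoms, for 0 < eps <= 1.

    With probability 1 - eps both variables are 0; on the remaining event of
    probability eps they take the values a/eps and b/eps.
    """
    eps = Fraction(eps)
    return [(1 - eps, Fraction(0), Fraction(0)),
            (eps, Fraction(a) / eps, Fraction(b) / eps)]


def summarize(atoms):
    """Return (E|X|, E|Y|, Pr(X != Y)) for a finite list of atoms."""
    mean_abs_x = sum(p * abs(x) for p, x, _ in atoms)
    mean_abs_y = sum(p * abs(y) for p, _, y in atoms)
    prob_differ = sum(p for p, x, y in atoms if x != y)
    return mean_abs_x, mean_abs_y, prob_differ


print(summarize(coupling_with_moments(1, 5, Fraction(1, 100))))
```

Since eps can be taken arbitrarily small while the two moments stay fixed at a and b, the infimum of Pr(X != Y) over such couplings is 0 even when a differs from b.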

With respect to this, the following open problem arises: under which conditions on the space $U$, the p. distance $\mu$ on $\mathfrak{X}(U)$ and the transformation $h: \mathfrak{X}(U) \to \mathbb{R}^J$ is the primary $h$-minimal distance $\tilde\mu_h$ a primary p. distance in $h(\mathfrak{X})$?

As we shall see later on (Section 9.1), all further Examples 3.1.2 to 3.1.5 of primary distances are special cases of primary $h$-minimal distances.

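Example 3.1.2. Let $H \in \mathcal{H}$ (see Example 2.2.1) and let 0 be a fixed point of a s.m.s. $(U, d)$. For each $P \in \mathcal{P}_2$ with marginals $P_i = T_iP$, let $m_1P, m_2P$ denote the 'marginal moments of order $p > 0$',

$m_iP := m_i^{(p)}P := \left(\int_U d^p(x, 0) P_i(dx)\right)^{p'}, \quad p > 0, \; p' := \min(1, 1/p)$.

Then

$\mathcal{M}_{H,p}(P) := \mathcal{M}_{H,p}(m_1P, m_2P) := H(|m_1P - m_2P|)$ (3.1.6)

is a primary distance. One can also consider $\mathcal{M}_{H,p}$ as a distance in the space

$m^{(p)}(\mathcal{P}) := \left\{m^{(p)}P := \left(\int_U d^p(x, 0) P(dx)\right)^{p'} < \infty, \; P \in \mathcal{P}(U)\right\}$ (3.1.7)

of moments $m^{(p)}P$ of order $p > 0$. If $H(t) = t$ then

$\mathcal{M}(P) := \mathcal{M}_{H,p}(P) = \left|\left(\int_U d^p(x, 0) P_1(dx)\right)^{p'} - \left(\int_U d^p(x, 0) P_2(dx)\right)^{p'}\right|$

is a primary metric in $m^{(p)}(\mathcal{P})$.

Example 3.1.3. Let $g: [0, \infty] \to \mathbb{R}$ and $H \in \mathcal{H}$. Then

$\mathcal{M}_{H,p}(g)(m_1P, m_2P) := H(|g(m_1P) - g(m_2P)|)$ (3.1.8)

is a primary distance in $g \circ m(\mathcal{P})$ and

$\mathcal{M}(g)(m_1P, m_2P) := |g(m_1P) - g(m_2P)|$ (3.1.9)

is a primary metric.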


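If $U$ is a Banach space with norm $\|\cdot\|$ then we define the primary distance $\mathcal{M}_{H,p}(g)$ as follows

$\mathcal{M}_{H,p}(g)(m^{(p)}X, m^{(p)}Y) := H(|g(m^{(p)}X) - g(m^{(p)}Y)|)$ (3.1.10)

where (cf. (2.1.8)) $m^{(p)}X$ is the '$p$-th moment (norm) of $X$'

$m^{(p)}X := \{\mathrm{E}\|X\|^p\}^{p'}$.

By Equation (3.1.9), $\mathcal{M}_{H,p}(g)$ may be viewed as a distance (see Definition 2.2.2) in the space

$g \circ m(\mathfrak{X}) := \{g \circ m(X) := g(\{\mathrm{E}\|X\|^p\}^{p'}), \; X \in \mathfrak{X}\}, \quad p' = \min(1, p^{-1}), \; \mathfrak{X} = \mathfrak{X}(U)$ (3.1.11)

of moments $g \circ m(X)$. If $U$ is the real line $\mathbb{R}$ and $g(t) = H(t) = t$ ($t \ge 0$) then $\mathcal{M}_{H,p}(g)(m^{(p)}X, m^{(p)}Y)$ is the usual deviation between the moments $m^{(p)}X$ and $m^{(p)}Y$ (see (2.1.9)).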

Example 3.1.4. Let $J$ be an index set (with arbitrary cardinality), let $g_i$ ($i \in J$) be real functions on $[0, \infty]$ and for each $P \in \mathcal{P}(U)$ define the set

$hP := \{g_i(mP), \; i \in J\}$. (3.1.12)

Further, for each $P \in \mathcal{P}_2(U)$ let us consider $hP_1$ and $hP_2$, where the $P_i$ are the marginals of $P$. Then

$\Phi(hP_1, hP_2) = \begin{cases} 0 & \text{if } hP_1 = hP_2 \\ 1 & \text{otherwise} \end{cases}$ (3.1.13)

is a primary metric.

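Example 3.1.5. Let $U$ be the $n$-dimensional Euclidean space $\mathbb{R}^n$, $H \in \mathcal{H}$. Define the 'engineer distance'

$\mathrm{EN}(X, Y; H) := H\left(\sum_{i=1}^{n} |\mathrm{E}X_i - \mathrm{E}Y_i|\right)$ (3.1.14)

where $X = (X_1, \ldots, X_n)$, $Y = (Y_1, \ldots, Y_n)$ belong to the subset $\tilde{\mathfrak{X}}(\mathbb{R}^n) \subseteq \mathfrak{X}(\mathbb{R}^n)$ of all $n$-dimensional random vectors that have integrable components. Then $\mathrm{EN}(\cdot, \cdot; H)$ is a p. semidistance in $\tilde{\mathfrak{X}}(\mathbb{R}^n)$. Analogously, the '$L_p$-engineer metric'

$\mathrm{EN}(X, Y; p) := \left[\sum_{i=1}^{n} |\mathrm{E}X_i - \mathrm{E}Y_i|^p\right]^{\min(1, 1/p)}, \quad p > 0$ (3.1.15)

is a primary metric in $\tilde{\mathfrak{X}}(\mathbb{R}^n)$. In the case $p = 1$ and $n = 1$, the metric $\mathrm{EN}(\cdot, \cdot; p)$ coincides with the engineer metric in $\tilde{\mathfrak{X}}(\mathbb{R})$ (see (2.1.1)).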


3.2 SIMPLE DISTANCES AND METRICS; CO-MINIMAL

FUNCTIONALS AND MINIMAL NORMS

Clearly, any primary distance $\mu(P)$ ($P \in \mathcal{P}_2$) is completely determined by the pair of marginal distributions $P_i = T_iP$ ($i = 1, 2$), since the equality $P_1 = P_2$ implies $hP_1 = hP_2$ (see Relations (3.1.1), (3.1.2) and Definition 3.1.1). On the other hand, if the mapping $h$ is 'rich enough' then the opposite implication

$hP_1 = hP_2 \Rightarrow P_1 = P_2$

takes place. The simplest example of such a 'rich' $h: \mathcal{P}(U) \to \mathbb{R}^J$ is given by the equalities

$h(P) := \{P(C), \; C \in \mathcal{C}\}, \quad P \in \mathcal{P}(U)$ (3.2.1)

where $J \equiv \mathcal{C}$ is the family of all closed non-empty subsets $C \subseteq U$. Another example is

$h(P) = \left\{Pf := \int_U f \, dP: \; f \in C_b(U)\right\}, \quad P \in \mathcal{P}(U)$

where $C_b(U)$ is the set of all bounded continuous functions on $U$. Keeping in mind these two examples we shall define the notion of 'simple' distance as a particular case of primary distance with $h$ given by Equality (3.2.1).

Definition 3.2.1. The p. semidistance $\mu$ is said to be a simple semidistance in $\mathcal{P} = \mathcal{P}(U)$, if for each $P \in \mathcal{P}_2$

$\mu(P) = 0 \Leftarrow T_1P = T_2P$.

If, in addition, $\mu$ is a p. semimetric, then $\mu$ will be called a simple semimetric. If the converse implication ($\Rightarrow$) also holds, we say that $\mu$ is a simple distance. If, in addition, $\mu$ is a p. semimetric, then $\mu$ will be called a simple metric.

Since the values of the simple distance $\mu(P)$ depend only on the pair of marginals $P_1, P_2$, we shall consider $\mu$ as a functional on $\mathcal{P}_1 \times \mathcal{P}_1$ and we shall use the notation

$\mu(P_1, P_2) := \mu(P_1 \times P_2)$

where $P_1 \times P_2$ means the measure product of the laws $P_1$ and $P_2$. In this case the metric properties of $\mu$ take the form (cf. Definition 2.3.1), for each $P_1, P_2, P_3 \in \mathcal{P}$:

ID$^{(2)}$: $P_1 = P_2 \iff \mu(P_1, P_2) = 0$

SYM$^{(2)}$: $\mu(P_1, P_2) = \mu(P_2, P_1)$

TI$^{(2)}$: $\mu(P_1, P_3) \le \mathbb{K}_\mu[\mu(P_1, P_2) + \mu(P_2, P_3)]$.

Hence, the space $\mathcal{P}$ of laws $P$ with a simple distance $\mu$ is a distance space (see Definition 2.2.2). Clearly each primary distance is a simple semidistance in $\mathcal{P}$.


The Kolmogorov metric $\rho$ (2.1.2), the Lévy metric $L$ (2.1.3) and the $\theta_p$-metrics (2.1.4) are simple metrics in $\mathcal{P}(\mathbb{R})$.

Let us consider a few more examples of simple metrics which we shall use

later on.

Example 3.2.1. Minimal distances.

Definition 3.2.2. For a given p. semidistance $\mu$ on $\mathcal{P}_2$ the functional $\hat\mu$ on $\mathcal{P}_1 \times \mathcal{P}_1$ defined by the equality

$\hat\mu(P_1, P_2) := \inf\{\mu(P): T_iP = P_i, \; i = 1, 2\}, \quad P_1, P_2 \in \mathcal{P}_1$ (3.2.2)

is said to be the (simple) minimal (w.r.t. $\mu$) distance.

As we showed in Section 2.5, for a 'rich enough' probability space, the space $\mathcal{P}_2$ of all laws on $U^2$ coincides with the set of joint distributions $\Pr_{X,Y}$ of $U$-valued r.v.s. Thus always $\mu(P) = \mu(\Pr_{X,Y})$ for some $X, Y \in \mathfrak{X}(U)$ and therefore Equation (3.2.2) can be rewritten as follows

$\hat\mu(P_1, P_2) = \inf\{\mu(X, Y): \Pr_X = P_1, \; \Pr_Y = P_2\}$.

The last is the Zolotarev definition of a minimal metric (Zolotarev, 1976b).

In the next theorem we shall consider the conditions on $U$ that guarantee $\hat\mu$ to be a simple metric.

We use the notation $\xrightarrow{w}$ to mean 'weak convergence of laws' (cf. Billingsley, 1968).

Theorem 3.2.1. Let $U$ be a u.m. s.m.s. (see Definition 2.4.2) and let $\mu$ be a p. semidistance with parameter $\mathbb{K}_\mu$. Then $\hat\mu$ is a simple semidistance with parameter $\mathbb{K}_{\hat\mu} = \mathbb{K}_\mu$. Moreover, if $\mu$ is a p. distance satisfying the following 'continuity' condition

$P^{(n)} \in \mathcal{P}_2, \quad P^{(n)} \xrightarrow{w} P \in \mathcal{P}_2, \quad \mu(P^{(n)}) \to 0 \;\Rightarrow\; \mu(P) = 0$,

then $\hat\mu$ is a simple distance with parameter $\mathbb{K}_{\hat\mu} = \mathbb{K}_\mu$.

Remark 3.2.1. The continuity condition is not restrictive; in fact, all p. distances we are going to use satisfy this condition.

Remark 3.2.2. Clearly, if $\mu$ is a p. semimetric then, by the above theorem, $\hat\mu$ is a simple semimetric.

Proof. ID$^{(2)}$: If $P_1 \in \mathcal{P}_1$ then we let $X \in \mathfrak{X}(U)$ have the distribution $P_1$. Then, by ID$^{(*)}$ (Definition 2.3.2),

$\hat\mu(P_1, P_1) \le \mu(\Pr_{X,X}) = 0$.

Suppose now that $\mu$ is a p. distance and the continuity condition holds. If $\hat\mu(P_1, P_2) = 0$ then there exists a sequence of laws $P^{(n)} \in \mathcal{P}_2$ with fixed marginals $T_iP^{(n)} = P_i$ ($i = 1, 2$) such that $\mu(P^{(n)}) \to 0$ as $n \to \infty$. Since each $P_i$ is a tight measure, the sequence $\{P^{(n)}, n \ge 1\}$ is uniformly tight, i.e., for any $\varepsilon > 0$ there exists a compact $K_\varepsilon \subseteq U^2$ such that $P^{(n)}(K_\varepsilon) \ge 1 - \varepsilon$ for all $n \ge 1$ (cf. Dudley, 1989, Section 11.5). Using the Prokhorov compactness criterion (see, for instance, Billingsley 1968, Theorem 6.1) we choose a subsequence $P^{(n')}$ that weakly tends to a law $P \in \mathcal{P}_2$; hence $T_iP = P_i$ and $\mu(P) = 0$. Since $\mu$ is a p. distance, $P$ is concentrated on the diagonal $x = y$ and thus $P_1 = P_2$, as desired.

SYM$^{(2)}$: Obvious.

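TI$^{(2)}$: Let $P_1, P_2, P_3 \in \mathcal{P}_1$. For any $\varepsilon > 0$ define a law $P_{12} \in \mathcal{P}_2$ with marginals $T_iP_{12} = P_i$ ($i = 1, 2$) and a law $P_{23} \in \mathcal{P}_2$ with $T_iP_{23} = P_{i+1}$ ($i = 1, 2$) such that $\hat\mu(P_1, P_2) \ge \mu(P_{12}) - \varepsilon$ and $\hat\mu(P_2, P_3) \ge \mu(P_{23}) - \varepsilon$. Since $U$ is a u.m. s.m.s., there exist Markov kernels $P'(A|z)$ and $P''(A|z)$ defined by the equalities

$P_{12}(A_1 \times A_2) := \int_{A_2} P'(A_1|z) P_2(dz)$ (3.2.3)

$P_{23}(A_2 \times A_3) := \int_{A_2} P''(A_3|z) P_2(dz)$ (3.2.4)

for all $A_1, A_2, A_3 \in \mathcal{B}_1$ (see Corollary 2.4.2). Then define a set function $Q$ on the algebra $\mathcal{A}$ of finite unions of Borel rectangles $A_1 \times A_2 \times A_3$ by the equation

$Q(A_1 \times A_2 \times A_3) := \int_{A_2} P'(A_1|z) P''(A_3|z) P_2(dz)$. (3.2.5)

It is easily checked that $Q$ is countably additive on $\mathcal{A}$ and therefore extends to a law on $U^3$. We use '$Q$' to represent this extension also. The law $Q$ has the projections $T_{12}Q = P_{12}$, $T_{23}Q = P_{23}$. Since $\mu$ is a p. semidistance with parameter $\mathbb{K} = \mathbb{K}_\mu$ we have

$\hat\mu(P_1, P_3) \le \mu(T_{13}Q) \le \mathbb{K}[\mu(P_{12}) + \mu(P_{23})] \le \mathbb{K}[\hat\mu(P_1, P_2) + \hat\mu(P_2, P_3)] + 2\mathbb{K}\varepsilon$.

Letting $\varepsilon \to 0$ we complete the proof of TI$^{(2)}$. QED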
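For laws with finitely many atoms the gluing step of the proof reduces to elementary arithmetic: the two kernels are ordinary conditional probabilities given the middle coordinate, and Q multiplies them against the common marginal. The sketch below illustrates this discrete special case only; the dictionary representation and the names `glue` and `project` are assumptions made for the example.

```python
from collections import defaultdict
from fractions import Fraction


def glue(p12, p23):
    """Law Q on triples with T_12 Q = p12 and T_23 Q = p23 (discrete case).

    p12 maps (x, y) to mass, p23 maps (y, z) to mass, and both are assumed
    to share the same marginal on the middle coordinate y.
    """
    p2 = defaultdict(Fraction)
    for (_, y), mass in p12.items():
        p2[y] += mass  # middle marginal P_2
    q = {}
    for (x, y), m12 in p12.items():
        for (y2, z), m23 in p23.items():
            if y2 == y and p2[y] > 0:
                # P'(x | y) * P''(z | y) * P_2(y)
                q[(x, y, z)] = m12 * m23 / p2[y]
    return q


def project(q, i, j):
    """Two-dimensional marginal T_ij Q of a law on triples (i, j in 0..2)."""
    out = defaultdict(Fraction)
    for point, mass in q.items():
        out[(point[i], point[j])] += mass
    return dict(out)


half, quarter = Fraction(1, 2), Fraction(1, 4)
p12 = {(0, 0): half, (1, 1): half}
p23 = {(0, 0): quarter, (0, 1): quarter, (1, 1): half}
q = glue(p12, p23)
print(project(q, 0, 2))
```

Under Q the first and third coordinates are conditionally independent given the second; the projection onto coordinates 1 and 3 is the law to which the triangle inequality TI is then applied.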

As will be shown later (Part 2), all simple distances in the next examples are actually simple minimal distances $\hat\mu$ with respect to p. distances $\mu$ that will be introduced in Section 3.3 (see further Examples 3.3.1 to 3.3.3).

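Example 3.2.2 (Kantorovich metric and Kantorovich distance). In Section 2.1, we introduced the Kantorovich metric $\kappa$ and its 'dual' representation

$\kappa(P_1, P_2) = \int_{-\infty}^{\infty} |F_1(x) - F_2(x)| \, dx = \sup\left\{\left|\int_{\mathbb{R}} f \, d(P_1 - P_2)\right|: \; f: \mathbb{R} \to \mathbb{R}, \; f' \text{ exists a.e. and } |f'| \le 1 \text{ a.e.}\right\}$

where the $P_i$ are laws on $\mathbb{R}$ with d.f.s $F_i$ and finite first absolute moment. From the above representation it also follows that

$\kappa(P_1, P_2) = \sup\left\{\left|\int_{\mathbb{R}} f \, d(P_1 - P_2)\right|: \; f: \mathbb{R} \to \mathbb{R}, \; f \text{ is } (1, 1)\text{-Lipschitz, i.e., } |f(x) - f(y)| \le |x - y| \; \forall x, y \in \mathbb{R}\right\}$.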

In this example we shall extend the definition of the above simple p. metric to the set $\mathcal{P}(U)$ of all laws on a s.m.s. $(U, d)$. For any $\alpha \in (0, \infty)$ and $\beta \in [0, 1]$ define the Lipschitz functions class

$\mathrm{Lip}_{\alpha\beta}(U) := \{f: U \to \mathbb{R}: \; |f(x) - f(y)| \le \alpha d^\beta(x, y) \; \forall x, y \in U\}$ (3.2.6)

with the convention

$d^0(x, y) := \begin{cases} 1 & \text{if } x \ne y \\ 0 & \text{if } x = y. \end{cases}$ (3.2.7)

Denote the set of all bounded functions $f \in \mathrm{Lip}_{\alpha\beta}(U)$ by $\mathrm{Lip}^b_{\alpha\beta}(U)$. Let $\mathcal{G}_H(U)$ be the class of all pairs $(f, g)$ of functions that belong to the set

$\mathrm{Lip}^b(U) := \bigcup_{\alpha > 0} \mathrm{Lip}^b_{\alpha 1}(U)$ (3.2.8)

and satisfy the inequality

$f(x) + g(y) \le H(d(x, y)) \quad \forall x, y \in U$ (3.2.9)

where $H$ is a convex function from $\mathcal{H}$. Recall that $H \in \mathcal{H}$ if $H$ is a nondecreasing continuous function from $[0, \infty)$ onto $[0, \infty)$, vanishes at the origin and $K_H := \sup_{t > 0} H(2t)/H(t) < \infty$. For any two laws $P_1$ and $P_2$ on a s.m.s. $(U, d)$ define

$\ell_H(P_1, P_2) := \sup\left\{\int_U f \, dP_1 + \int_U g \, dP_2: \; (f, g) \in \mathcal{G}_H(U)\right\}$. (3.2.10)

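We shall prove further that $\ell_H$ is a simple distance with $\mathbb{K}_{\ell_H} = K_H$ in the space of all laws $P$ with finite '$H$-moment', $\int H(d(x, a)) P(dx) < \infty$. The proof is based on the representation of $\ell_H$ as a minimal distance $\ell_H = \hat{\mathcal{L}}_H$ (Corollary 5.2.2) with respect to the p. distance (with $\mathbb{K}_{\mathcal{L}_H} = K_H$) $\mathcal{L}_H(P) = \int_{U^2} H(d(x, y)) P(dx, dy)$; an appeal to Theorem 3.2.1 then proves that $\ell_H$ is a simple p. distance if $(U, d)$ is a universally measurable s.m.s. In the case $H(t) = t^p$ ($1 < p < \infty$) define

$\ell_p(P_1, P_2) := \ell_H(P_1, P_2)^{1/p}, \quad 1 < p < \infty$. (3.2.11)

In addition, for $p \in [0, 1]$ and $p = \infty$, denote

$\ell_p(P_1, P_2) := \sup\left\{\left|\int_U f \, d(P_1 - P_2)\right|: \; f \in \mathrm{Lip}^b_{1p}(U)\right\}, \quad p \in (0, 1]$ (3.2.12)

$\ell_0(P_1, P_2) := \sup\left\{\left|\int_U f \, d(P_1 - P_2)\right|: \; f \in \mathrm{Lip}_{10}(U)\right\} = \sigma(P_1, P_2) := \sup_{A \in \mathcal{B}_1} |P_1(A) - P_2(A)|$ (3.2.13)

$\ell_\infty(P_1, P_2) := \inf\{\varepsilon > 0: \; P_1(A) \le P_2(A^\varepsilon) \; \forall A \in \mathcal{B}_1\}$ (3.2.14)

where, as above, $\mathcal{B}_1 = \mathcal{B}(U)$ is the Borel $\sigma$-algebra on a s.m.s. $(U, d)$, and $A^\varepsilon := \{x: d(x, A) < \varepsilon\}$.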

For any $0 \le p \le 1$ and for $p = \infty$, $\ell_p$ is a simple metric in $\mathcal{P}(U)$, which follows immediately from the definition. To prove that $\ell_\infty$ is a p. metric (taking possibly infinite values) one can use the equality

$\sup_{A \in \mathcal{B}_1} [P_1(A) - P_2(A^\varepsilon)] = \sup_{A \in \mathcal{B}_1} [P_2(A) - P_1(A^\varepsilon)]$.

The equality $\ell_0 = \sigma$ in Equation (3.2.13) follows from the fact that both metrics are minimal with respect to one and the same p. distance $\mathcal{L}_0(P) = P((x, y): x \ne y)$, see further Corollary 6.1.1 and Corollary 7.4.2. We shall prove also (see Corollary 7.3.2) that $\ell_H = \hat{\mathcal{L}}_H$, as a minimal distance w.r.t. $\mathcal{L}_H$ defined above, admits the Birnbaum-Orlicz representation (see Example 2.2.2)

$\ell_H(P_1, P_2) = \ell_H(F_1, F_2) := \int_0^1 H(|F_1^{-1}(t) - F_2^{-1}(t)|) \, dt$ (3.2.15)

in the case of $U = \mathbb{R}$ and $d(x, y) = |x - y|$. In Equation (3.2.15),

$F_i^{-1}(t) := \sup\{x: F_i(x) \le t\}$ (3.2.16)

is the (generalized) inverse of the d.f. $F_i$ determined by $P_i$ ($i = 1, 2$). Letting $H(t) = t$ we claim that

$\ell_1(P_1, P_2) = \kappa(P_1, P_2) := \int_{-\infty}^{\infty} |F_1(x) - F_2(x)| \, dx, \quad P_i \in \mathcal{P}(\mathbb{R}), \; i = 1, 2$. (3.2.17)

Remark 3.2.3. Here and in the sequel, for any simple semidistance jx on

we shall use the following notations interchangeably

u X2)-.= MPr*,, Pr*2) VX1; X2eX(W)

= piFu F2):= fi(Pi, Pi) VFi, F2 G jrflT)

where Prx is the distribution of Xh F; is the d.f. of P, and &(M") stands

for the class of d.f.s on W.

The $\ell_1$-metric (3.2.17) is known as the average metric in $\mathcal{F}(\mathbb{R})$ as well as the first difference pseudomoment, and it is also denoted by $\kappa$ (see Zolotarev, 1976b). A great contribution to the investigation of the properties of the $\ell_1$-metric was made by Kantorovich (1942, 1948) and Kantorovich and Akilov (1984, §4, Chap. VIII). That is the reason the metric $\ell_1$ is called the Kantorovich metric. Considering $\ell_H$ as a generalization of $\ell_1$, we shall call $\ell_H$ the Kantorovich distance.
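To make the two representations of the Kantorovich metric concrete, the following Python sketch evaluates it for two empirical distributions on the real line, once through the d.f.s as in (3.2.17) and once through the generalized inverses as in (3.2.15) with H(t) = t. It is an illustration under stated assumptions only: the samples are finite and of equal size, and the helper names (`empirical_cdf`, `kantorovich_cdf`, `kantorovich_quantile`) are hypothetical and do not come from the text.

```python
from bisect import bisect_right


def empirical_cdf(sample):
    """Return F(x) = (number of sample points <= x) / n as a callable."""
    pts = sorted(sample)
    n = len(pts)
    return lambda x: bisect_right(pts, x) / n


def kantorovich_cdf(sample1, sample2):
    """Integral of |F1(x) - F2(x)| dx, exact for empirical step functions."""
    F1, F2 = empirical_cdf(sample1), empirical_cdf(sample2)
    grid = sorted(set(sample1) | set(sample2))
    total = 0.0
    for left, right in zip(grid, grid[1:]):
        # Both d.f.s are constant on [left, right).
        total += abs(F1(left) - F2(left)) * (right - left)
    return total


def kantorovich_quantile(sample1, sample2):
    """Integral over (0, 1) of |F1^{-1}(t) - F2^{-1}(t)| dt.

    Assumption of this sketch: equal sample sizes, so both quantile
    functions are constant on each interval ((k - 1)/n, k/n).
    """
    if len(sample1) != len(sample2):
        raise ValueError("this sketch assumes equal sample sizes")
    n = len(sample1)
    pairs = zip(sorted(sample1), sorted(sample2))
    return sum(abs(a - b) for a, b in pairs) / n


# Illustrative data (hypothetical).
x = [0.0, 1.0, 3.0]
y = [0.5, 2.0, 2.5]
```

For the illustrative samples `x` and `y` both functions return 2/3 up to rounding, in line with the claimed equality of the two expressions.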

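Example 3.2.3 (Prokhorov metric and Prokhorov distance). Prokhorov (1956) introduced his famous metric

$$\pi(P_1, P_2) := \inf\{\varepsilon > 0 : P_1(C) \le P_2(C^\varepsilon) + \varepsilon,\ P_2(C) \le P_1(C^\varepsilon) + \varepsilon\ \ \forall C \in \mathcal{C}\} \tag{3.2.18}$$

where $\mathcal{C} := \mathcal{C}(U)$ is the set of all nonempty closed subsets of a Polish space $U$ and

$$C^\varepsilon := \{x : d(x, C) < \varepsilon\}. \tag{3.2.19}$$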

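The metric $\pi$ admits the following representations: for any laws $P_1$ and $P_2$ on a s.m.s. $(U, d)$

$$\begin{aligned}
\pi(P_1, P_2) &= \inf\{\varepsilon > 0 : P_1(C) \le P_2(C^\varepsilon) + \varepsilon \text{ for any } C \in \mathcal{C}\} \\
&= \inf\{\varepsilon > 0 : P_1(C) \le P_2(C^{\varepsilon]}) + \varepsilon \text{ for any } C \in \mathcal{C}\} \\
&= \inf\{\varepsilon > 0 : P_1(A) \le P_2(A^\varepsilon) + \varepsilon \text{ for any } A \in \mathcal{B}_1\}
\end{aligned} \tag{3.2.20}$$

where

$$C^{\varepsilon]} := \{x : d(x, C) \le \varepsilon\} \tag{3.2.21}$$

is the $\varepsilon$-closed neighborhood of $C$ (see, for example, Theorem 8.1, Dudley, 1976).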
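The one-sided representation of the Prokhorov metric lends itself to a brute-force check when both laws have finite support. The Python sketch below is a hedged illustration, not a method from the text: it assumes laws on the real line given as dictionaries `{point: probability}`, tests the inequality only on nonempty subsets of the support of the first law (which suffices, because shrinking a set to its intersection with that support keeps its first-law mass and can only shrink its neighbourhood), and locates the infimum by bisection. The function names are hypothetical.

```python
from itertools import combinations


def one_sided_ok(p1, p2, eps):
    """Check P1(A) <= P2(A^eps) + eps for every nonempty A in supp(P1).

    p1, p2: dicts mapping points of the real line to probabilities.
    A^eps is the open eps-neighbourhood {x : d(x, A) < eps}.
    """
    support = list(p1)
    for r in range(1, len(support) + 1):
        for subset in combinations(support, r):
            mass_a = sum(p1[x] for x in subset)
            mass_nbhd = sum(w for y, w in p2.items()
                            if any(abs(y - x) < eps for x in subset))
            # Small slack guards against floating-point rounding.
            if mass_a > mass_nbhd + eps + 1e-12:
                return False
    return True


def prokhorov(p1, p2, tol=1e-9):
    """Approximate the Prokhorov metric by bisection on eps in [0, 1]."""
    lo, hi = 0.0, 1.0  # eps = 1 always satisfies the inequality
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if one_sided_ok(p1, p2, mid):
            hi = mid  # the admissible eps form an up-set
        else:
            lo = mid
    return hi
```

The number of subsets grows exponentially with the support size, so this is meant for small examples only. For two unit masses at distance a the sketch returns approximately min(a, 1).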

Let us introduce a parametric version of the Prokhorov metric

$$\pi_\lambda(P_1, P_2) := \inf\{\varepsilon > 0 : P_1(C) \le P_2(C^{\lambda\varepsilon}) + \varepsilon \text{ for any } C \in \mathcal{C}\}. \tag{3.2.22}$$

The next lemma gives the main relationship between the Prokhorov-type metrics and the metrics $\ell_0$ and $\ell_\infty$, defined by Equalities (3.2.13) and (3.2.14).


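Lemma 3.2.1. For any $P_1, P_2 \in \mathcal{P}(U)$

$$\lim_{\lambda \to 0} \pi_\lambda(P_1, P_2) = \sigma(P_1, P_2) = \ell_0(P_1, P_2) \tag{3.2.23}$$

$$\lim_{\lambda \to \infty} \lambda\pi_\lambda(P_1, P_2) = \ell_\infty(P_1, P_2).$$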

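Proof. For any fixed $\lambda > 0$ the function $A_\varepsilon(\lambda) := \sup\{P_1(C) - P_2(C^{\lambda\varepsilon}) : C \in \mathcal{C}\}$, $\varepsilon \ge 0$, is non-increasing in $\varepsilon > 0$, hence

$$\pi_\lambda(P_1, P_2) = \inf\{\varepsilon > 0 : A_\varepsilon(\lambda) \le \varepsilon\} = \max_{\varepsilon > 0} \min(\varepsilon, A_\varepsilon(\lambda)).$$

For any fixed $\varepsilon > 0$, $A_\varepsilon(\cdot)$ is non-increasing and

$$\lim_{\lambda \to 0} A_\varepsilon(\lambda) = A_\varepsilon(0) = \sup_{C \in \mathcal{C}} (P_1(C) - P_2(C)) = \sup_{A \in \mathcal{B}_1} (P_1(A) - P_2(A)) = \sup_{A \in \mathcal{B}_1} |P_1(A) - P_2(A)| =: \sigma(P_1, P_2).$$

Thus

$$\lim_{\lambda \to 0} \pi_\lambda(P_1, P_2) = \max_{\varepsilon > 0} \min\Bigl(\varepsilon, \lim_{\lambda \to 0} A_\varepsilon(\lambda)\Bigr) = \max_{\varepsilon > 0} \min(\varepsilon, \sigma(P_1, P_2)) = \sigma(P_1, P_2).$$

Analogously, as $\lambda \to \infty$,

$$\begin{aligned}
\lambda\pi_\lambda(P_1, P_2) &= \inf\{\lambda\varepsilon > 0 : A_\varepsilon(\lambda) \le \varepsilon\} \\
&= \inf\{\varepsilon > 0 : A_\varepsilon(1) \le \varepsilon/\lambda\} \to \inf\{\varepsilon > 0 : A_\varepsilon(1) \le 0\} \\
&= \ell_\infty(P_1, P_2).
\end{aligned}$$

QED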
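As a quick sanity check of Lemma 3.2.1, the limits can be worked out by hand for two unit masses. The computation below is only an illustrative sketch; the choice $P_1 = \delta_0$, $P_2 = \delta_a$ on the real line with $a > 0$ is an assumption made here and does not appear in the text.

```latex
% Hypothetical example: P_1 = \delta_0, P_2 = \delta_a on U = \mathbb{R}, a > 0.
\[
\begin{aligned}
A_\varepsilon(\lambda)
  &= \sup_{C \in \mathcal{C}} \bigl[\delta_0(C) - \delta_a(C^{\lambda\varepsilon})\bigr]
   = \begin{cases} 1, & \lambda\varepsilon \le a, \\ 0, & \lambda\varepsilon > a, \end{cases} \\
\pi_\lambda(\delta_0, \delta_a)
  &= \inf\{\varepsilon > 0 : A_\varepsilon(\lambda) \le \varepsilon\}
   = \min(1, a/\lambda), \\
\lim_{\lambda \to 0} \pi_\lambda(\delta_0, \delta_a)
  &= 1 = \sigma(\delta_0, \delta_a), \\
\lim_{\lambda \to \infty} \lambda\pi_\lambda(\delta_0, \delta_a)
  &= \lim_{\lambda \to \infty} \min(\lambda, a) = a = \ell_\infty(\delta_0, \delta_a).
\end{aligned}
\]
```

The supremum defining $A_\varepsilon(\lambda)$ is attained at $C = \{0\}$, whose open $\lambda\varepsilon$-neighbourhood contains the point $a$ exactly when $\lambda\varepsilon > a$.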

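As a generalization of $\pi_\lambda$ we define the Prokhorov distance

$$\pi_H(P_1, P_2) := \inf\{H(\varepsilon) > 0 : P_1(A) \le P_2(A^\varepsilon) + H(\varepsilon)\ \ \forall A \in \mathcal{B}_1\} \tag{3.2.24}$$

for any strictly increasing function $H \in \mathcal{H}$. From Equation (3.2.24),

$$\pi_H(P_1, P_2) = \inf\{\delta > 0 : P_1(A) \le P_2(A^{H^{-1}(\delta)}) + \delta \text{ for any } A \in \mathcal{B}_1\} \tag{3.2.25}$$

and it is easy to check that $\pi_H$ is a simple distance with $K_{\pi_H} = K_H$ (see Condition (2.2.3)). The metric $\pi_\lambda$ is a special case of $\pi_H$ with $H(t) = t/\lambda$.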
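The last claim can be verified in one line from the second form of the Prokhorov distance (the one written with the inverse of H). The following is a short sketch of that substitution; it relies on the observation, made here as an assumption of the sketch, that closed sets may be replaced by Borel sets because a set and its closure have the same open neighbourhoods.

```latex
% With H(t) = t/\lambda we have H^{-1}(\delta) = \lambda\delta, so (3.2.25) reads
\[
\pi_H(P_1, P_2)
  = \inf\{\delta > 0 : P_1(A) \le P_2(A^{\lambda\delta}) + \delta
      \ \text{for any } A \in \mathcal{B}_1\}
  = \pi_\lambda(P_1, P_2).
\]
```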

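Example 3.2.4 (Birnbaum-Orlicz distance ($\boldsymbol{\theta}_H$) and $\boldsymbol{\theta}_p$-metric in $\mathcal{F}(\mathbb{R})$). Let $U = \mathbb{R}$, $d(x, y) = |x - y|$. Following Example 2.2.2 we define the Birnbaum-Orlicz average distance

$$\boldsymbol{\theta}_H(F_1, F_2) := \int_{-\infty}^{\infty} H(|F_1(t) - F_2(t)|)\,dt, \qquad H \in \mathcal{H},\ F_i \in \mathcal{F}(\mathbb{R}),\ i = 1, 2 \tag{3.2.26}$$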


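and the Birnbaum-Orlicz uniform distance

$$\boldsymbol{\rho}_H(F_1, F_2) := H(\boldsymbol{\rho}(F_1, F_2)) = \sup_{x \in \mathbb{R}} H(|F_1(x) - F_2(x)|). \tag{3.2.27}$$

The $\boldsymbol{\theta}_p$-metric ($p > 0$)

$$\boldsymbol{\theta}_p(F_1, F_2) := \left\{ \int_{-\infty}^{\infty} |F_1(t) - F_2(t)|^p\,dt \right\}^{p'}, \qquad p' := \min(1, 1/p) \tag{3.2.28}$$

is a special case of $\boldsymbol{\theta}_H$ with appropriate normalization that makes $\boldsymbol{\theta}_p$ a p. metric taking finite and infinite values in the space $\mathcal{F}(\mathbb{R})$ of distribution functions. In the case $p = \infty$ we take $\boldsymbol{\theta}_\infty$ to be the Kolmogorov metric

$$\boldsymbol{\theta}_\infty(F_1, F_2) := \boldsymbol{\rho}(F_1, F_2) := \sup_{x \in \mathbb{R}} |F_1(x) - F_2(x)|. \tag{3.2.29}$$

In the case $p = 0$ we put

$$\boldsymbol{\theta}_0(F_1, F_2) := \int_{-\infty}^{\infty} I\{t : F_1(t) \ne F_2(t)\}\,dt = \mathrm{Leb}(F_1 \ne F_2).$$

Here and in what follows, $I(A)$ is the indicator of the set $A$.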
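For empirical d.f.s all of these quantities reduce to finite sums, since the difference of two step functions is constant between consecutive points of the pooled sample. The Python sketch below illustrates this; it assumes finite samples on the real line, and the names `cdf_gaps` and `theta_p` are hypothetical helpers introduced here, not notation from the text.

```python
from bisect import bisect_right
import math


def cdf_gaps(sample1, sample2):
    """Yield (|F1 - F2|, interval length) on each interval between
    consecutive points of the pooled sample, for empirical d.f.s."""
    s1, s2 = sorted(sample1), sorted(sample2)
    grid = sorted(set(s1) | set(s2))
    for left, right in zip(grid, grid[1:]):
        gap = abs(bisect_right(s1, left) / len(s1)
                  - bisect_right(s2, left) / len(s2))
        yield gap, right - left


def theta_p(sample1, sample2, p):
    """theta_p for p in [0, inf], evaluated on empirical d.f.s."""
    gaps = list(cdf_gaps(sample1, sample2))
    if p == math.inf:
        # Kolmogorov metric: largest gap between the two d.f.s.
        return max((g for g, _ in gaps), default=0.0)
    if p == 0:
        # Lebesgue measure of the set where the d.f.s differ.
        return sum(w for g, w in gaps if g > 0)
    integral = sum(g ** p * w for g, w in gaps)
    return integral ** min(1.0, 1.0 / p)


# Illustrative data (hypothetical).
x = [0.0, 1.0, 3.0]
y = [0.5, 2.0, 2.5]
```

With `p = 1` the value agrees with the integral of the absolute difference of the d.f.s, that is, with the Kantorovich metric on the real line; `p = math.inf` gives the Kolmogorov metric.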

Example 3.2.5 (Co-minimal metrics). As we have seen in Section 3.1, each primary distance $\mu(P) = \mu(h_1P, h_2P)$ ($P \in \mathcal{P}_2$) determines a semidistance (see Definition 2.2.2) in the space of equivalence classes

$$\{P \in \mathcal{P}_2 : h_1P = a,\ h_2P = b\}, \qquad a, b \in \mathbb{R}^J. \tag{3.2.30}$$

Analogously, the minimal distance

$$\begin{aligned}
\hat{\mu}(P) &:= \hat{\mu}(T_1P, T_2P) \\
&:= \inf\{\mu(\tilde{P}) : \tilde{P} \in \mathcal{P}_2(U),\ P \text{ and } \tilde{P} \text{ have one and the same marginals, } T_i\tilde{P} = T_iP,\ i = 1, 2\}, \qquad P \in \mathcal{P}_2(U),
\end{aligned}$$

may be viewed as a semidistance in the space of classes of equivalence

$$\{P \in \mathcal{P}_2 : T_1P = P_1,\ T_2P = P_2\}, \qquad P_1, P_2 \in \mathcal{P}_1. \tag{3.2.31}$$

Obviously, the partitioning (3.2.31) is more refined than (3.2.30) and hence each primary semidistance is a simple semidistance. Thus

{the class of primary distances (Definition 3.1.1)}
⊂ {the class of simple semidistances (Definition 3.2.1)}
⊂ {the class of all p. semidistances (Definition 2.3.1)}.

Open problem 3.2.1. A basic open problem in TPM is to find a good classification of the set of all p. semidistances. Does there exist a 'Mendeleyev periodic table' of p. semidistances?

One can get a classification of probability semidistances by considering more and more refined partitions of $\mathcal{P}_2$. For instance, one can use a partition finer than (3.2.31), generated by

$$\{P \in \mathcal{P}_2 : T_1P = P_1,\ T_2P = P_2,\ P \in \mathcal{PC}_t\}, \qquad t \in T, \tag{3.2.32}$$

where $P_1$ and $P_2$ are laws in $\mathcal{P}_1$ and $\mathcal{PC}_t$ ($t \in T$) are subsets of $\mathcal{P}_2$ whose union covers $\mathcal{P}_2$. As an example of the set $\mathcal{PC}_t$ one could consider

$$\mathcal{PC}_t = \left\{ P \in \mathcal{P}_2 : \int_{U^2} f_i\,dP \le b_i,\ i \in J \right\}, \qquad t = (J, b, f), \tag{3.2.33}$$

where $J$ is an index set, $b := (b_i, i \in J)$ is a set of reals and $f = \{f_i, i \in J\}$ is a family of bounded continuous functions on $U^2$ (Kemperman 1983, Levin and Rachev 1990).

Another useful example of a set $\mathcal{PC}_t$ is constructed using a given probability metric $\nu(P)$ ($P \in \mathcal{P}_2$) and has the form

$$\mathcal{PC}_t = \{P \in \mathcal{P}_2 : \nu(P) \le t\} \tag{3.2.34}$$

where $t \in [0, \infty]$ is a fixed number.

Open problem 3.2.2. Under which conditions is the functional

$$\mu(P_1, P_2; \mathcal{PC}_t) := \inf\{\mu(P) : P \in \mathcal{P}_2,\ T_iP = P_i\ (i = 1, 2),\ P \in \mathcal{PC}_t\} \qquad (P_1, P_2 \in \mathcal{P}_1)$$

a simple semidistance (resp. semimetric) w.r.t. the given p. distance (resp. metric) $\mu$?

Further, we shall examine this problem in the special case of (3.2.34) (see Theorem 3.2.2). Analogously, one can investigate the case of $\mathcal{PC}_t = \{P \in \mathcal{P}_2 : \nu_i(P) \le \alpha_i,\ i = 1, 2, \ldots\}$ ($t = (\alpha_1, \alpha_2, \ldots)$) for fixed p. metrics $\nu_i$ and $\alpha_i \in [0, \infty]$.

Following the main idea of obtaining primary and simple distances by means of minimization procedures of certain types (see Definitions 3.1.2 and 3.2.2) we shall give the notion of 'co-minimal distance'.

For given compound semidistances $\mu$ and $\nu$ with parameters $K_\mu$ and $K_\nu$, respectively, and for each $\alpha > 0$ denote

$$\mu\nu(P_1, P_2, \alpha) = \inf\{\mu(P) : P \in \mathcal{P}_2,\ T_1P = P_1,\ T_2P = P_2,\ \nu(P) \le \alpha\}, \qquad P_1, P_2 \in \mathcal{P}_1 \tag{3.2.35}$$

(cf. Equations (3.2.32) and (3.2.34)).

Definition 3.2.4. The functional /xv(P1? P2, a) (P1? P2 e ^, a > 0) will be called

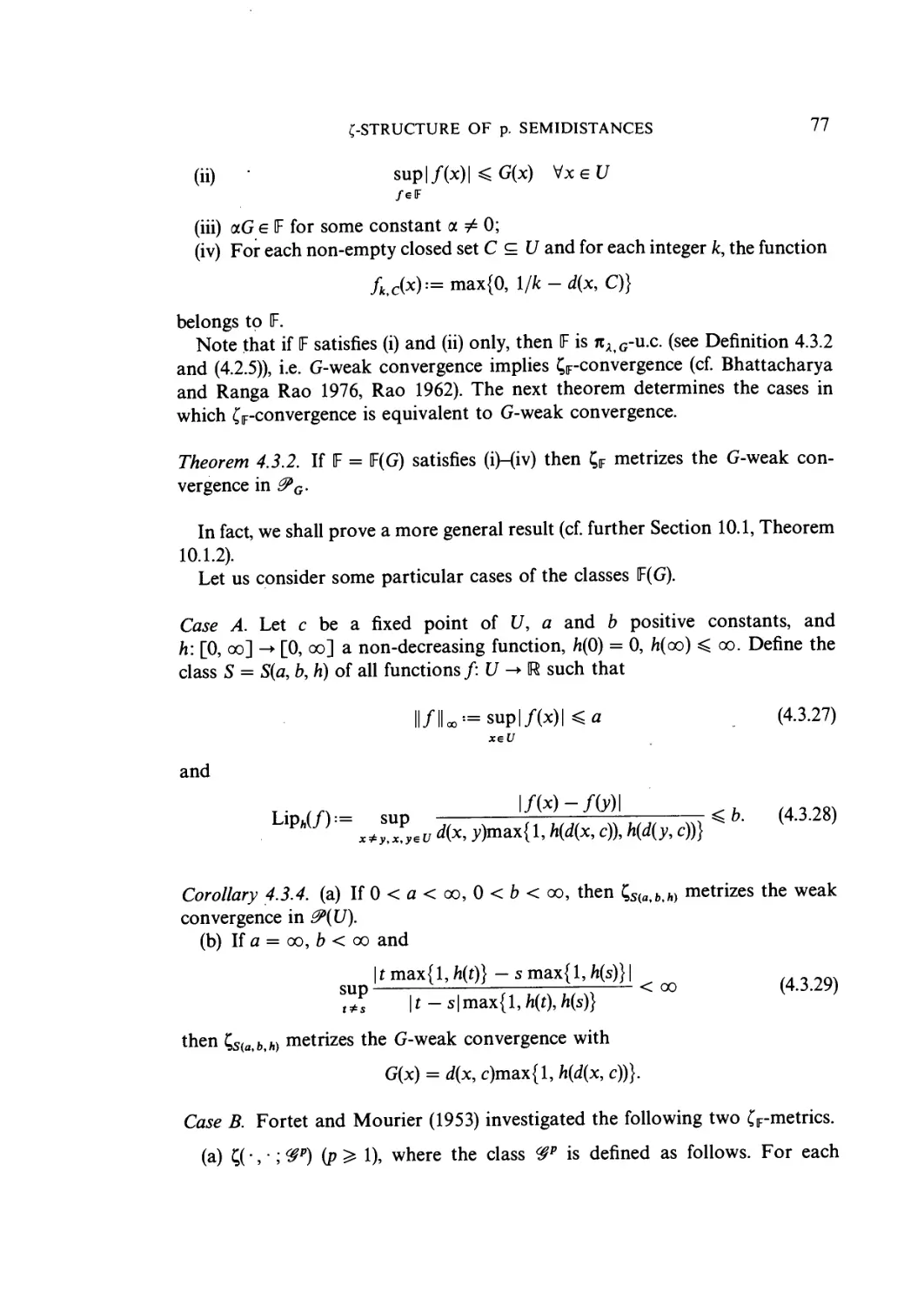

the co-minimal (metric) functional w. r. t. the p. distances \i and v (see Fig. 3.2.1)

34

PRIMARY, SIMPLE AND COMPOUND p. DISTANCES

As we will see in the next theorem, the functional $\mu\nu(\cdot, \cdot, \alpha)$ has some metric properties, but it is nevertheless not a p. distance. However, $\mu\nu(\cdot, \cdot, \alpha)$ induces p. semidistances as follows.

Let $\widehat{\mu\nu}$ be the so-called co-minimal distance

$$\widehat{\mu\nu}(P_1, P_2) = \inf\{\alpha > 0 : \mu\nu(P_1, P_2, \alpha) < \alpha\} \tag{3.2.36}$$

(see Fig. 3.2.1) and let

$$\overline{\mu\nu}(P_1, P_2) = \limsup_{\alpha \to 0} \alpha\,\mu\nu(P_1, P_2, \alpha).$$

Then the following theorem is true.

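Theorem 3.2.2. Let $U$ be a u.m. s.m.s. and $\mu$ be a p. distance satisfying the 'continuity' condition in Theorem 3.2.1. Then, for any p. distance $\nu$,

(a) $\mu\nu(\cdot, \cdot, \alpha)$ satisfies the following metric properties:

$$\mathrm{ID}^{(3)}:\quad \mu\nu(P_1, P_2, \alpha) = 0 \iff P_1 = P_2,$$

$$\mathrm{SYM}^{(3)}:\quad \mu\nu(P_1, P_2, \alpha) = \mu\nu(P_2, P_1, \alpha),$$

$$\mathrm{TI}^{(3)}:\quad \mu\nu(P_1, P_3, K_\nu(\alpha + \beta)) \le K_\mu[\mu\nu(P_1, P_2, \alpha) + \mu\nu(P_2, P_3, \beta)]$$

for any $P_1, P_2, P_3 \in \mathcal{P}_1$, $\alpha \ge 0$, $\beta \ge 0$.

(b) $\widehat{\mu\nu}$ is a simple distance with parameter $K_{\widehat{\mu\nu}} = \max[K_\mu, K_\nu]$. In particular, if $\mu$ and $\nu$ are p. metrics then $\widehat{\mu\nu}$ is a simple metric.

(c) $\overline{\mu\nu}$ is a simple semidistance with parameter $K_{\overline{\mu\nu}} = 2K_\mu K_\nu$.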

Figure 3.2.1 Co-minimal distance $\widehat{\mu\nu}(P_1, P_2)$. (From Rachev and Shortt, 1990. Reproduced by permission of the American Mathematical Society.)


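Proof.

(a) By Theorem 3.2.1 and Fig. 3.2.1, $\mu\nu(P_1, P_2, \alpha) = 0 \Rightarrow \hat{\mu}(P_1, P_2) = 0 \Rightarrow P_1 = P_2$. Conversely, if $P_1 \in \mathcal{P}_1$ and $X$ is a r.v. with distribution $P_1$, then $\mu\nu(P_1, P_1, \alpha) \le \mu(\mathrm{Pr}_{X,X}) = 0$. So $\mathrm{ID}^{(3)}$ is valid. Let us prove $\mathrm{TI}^{(3)}$. For each $P_1, P_2, P_3 \in \mathcal{P}_1$, $\alpha \ge 0$, $\beta \ge 0$ and $\varepsilon > 0$ define laws $P_{12} \in \mathcal{P}_2$ and $P_{23} \in \mathcal{P}_2$ such that $T_iP_{12} = P_i$, $T_iP_{23} = P_{i+1}$ ($i = 1, 2$), $\nu(P_{12}) \le \alpha$, $\nu(P_{23}) \le \beta$ and $\mu\nu(P_1, P_2, \alpha) \ge \mu(P_{12}) - \varepsilon$, $\mu\nu(P_2, P_3, \beta) \ge \mu(P_{23}) - \varepsilon$. Define a law $Q \in \mathcal{P}_3$ by Equation (3.2.5). Then $Q$ has bivariate marginals $T_{12}Q = P_{12}$ and $T_{23}Q = P_{23}$, hence $\nu(T_{13}Q) \le K_\nu[\nu(P_{12}) + \nu(P_{23})] \le K_\nu(\alpha + \beta)$ and

$$\mu\nu(P_1, P_3, K_\nu(\alpha + \beta)) \le \mu(T_{13}Q) \le K_\mu[\mu(P_{12}) + \mu(P_{23})] \le K_\mu[\mu\nu(P_1, P_2, \alpha) + \mu\nu(P_2, P_3, \beta) + 2\varepsilon].$$

Letting $\varepsilon \to 0$, we get $\mathrm{TI}^{(3)}$.

(b) If $\widehat{\mu\nu}(P_1, P_2) < \alpha$ and $\widehat{\mu\nu}(P_2, P_3) < \beta$, then there exists $P_{12}$ (resp. $P_{23}$) with marginals $P_1$ and $P_2$ (resp. $P_2$ and $P_3$) such that $\mu(P_{12}) < \alpha$, $\nu(P_{12}) < \alpha$, $\mu(P_{23}) < \beta$, $\nu(P_{23}) < \beta$. In a similar way as in (a) we conclude that $\mu\nu(P_1, P_3, K_\nu(\alpha + \beta)) \le K_\mu(\alpha + \beta)$, thus $\widehat{\mu\nu}(P_1, P_3) < \max(K_\mu, K_\nu)(\alpha + \beta)$.

(c) Follows from (a) with $\alpha = \beta$. QED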

Example 3.2.6 (Minimal norms). Each co-minimal distance $\widehat{\mu\nu}$ is greater than or equal to the minimal distance $\hat{\mu}$ (see Fig. 3.2.1). We now consider examples of simple metrics $\mathring{\mu}$ corresponding to given p. distances $\mu$ that have (like $\widehat{\mu\nu}$) a 'minimal' structure but satisfy $\mathring{\mu} \le \hat{\mu}$.

Let $\mathcal{M}_k$ be the set of all finite non-negative measures on the Borel $\sigma$-algebra $\mathcal{B}_k = \mathcal{B}(U^k)$ ($U$ is a s.m.s.). Let $\mathcal{M}_0$ denote the space of all finite signed measures $\nu$ on $\mathcal{B}_1$ with total mass $\nu(U) = 0$. Denote by $\mathcal{CS}(U^2)$ the set of all continuous, symmetric and non-negative functions on $U^2$. Define the functionals

$$\mu_c(m) := \int_{U^2} c(x, y)\,m(dx, dy), \qquad m \in \mathcal{M}_2,\ c \in \mathcal{CS}(U^2) \tag{3.2.37}$$

and

$$\mathring{\mu}_c(\nu) := \inf\{\mu_c(m) : T_1m - T_2m = \nu\}, \qquad \nu \in \mathcal{M}_0 \tag{3.2.38}$$

where $T_im$ means the $i$th marginal measure of $m$.

Lemma 3.2.2. For any $c \in \mathcal{CS}(U^2)$ the functional $\mathring{\mu}_c$ is a seminorm in the space $\mathcal{M}_0$.