STEWART N. ETHIER

THOMAS G. KURTZ

MARKOV PROCESSES

CHARACTERIZATION AND CONVERGENCE

WILEY SERIES IN PROBABILITY

AND MATHEMATICAL STATISTICS

WILEY-INTERSCIENCE

A JOHN WILEY & SONS, INC., PUBLICATION

Copyright © 1986,2005 by John Wiley & Sons, Inc. All rights reserved.

Published by John Wiley & Sons, Inc., Hoboken, New Jersey.

Published simultaneously in Canada.

No part of this publication may be reproduced, stored in a retrieval system or transmitted

in any form or by any means, electronic, mechanical, photocopying, recording, scanning

or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States

Copyright Act, without either the prior written permission of the Publisher, or

authorization through payment of the appropriate per-copy fee to the Copyright

Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax

(978) 750-4470. Requests to the Publisher for permission should be addressed to the

Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030,

(201) 748-6011, fax (201) 748-6008.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best

efforts in preparing this book, they make no representations or warranties with respect to the

accuracy or completeness of the contents of this book and specifically disclaim any implied

warranties of merchantability or fitness for a particular purpose. No warranty may be created or

extended by sales representatives or written sales materials. The advice and strategies contained

herein may not be suitable for your situation. You should consult with a professional where

appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other

commercial damages, including but not limited to special, incidental, consequential, or other

damages.

For general information on our other products and services or for technical support, please contact

our Customer Care Department within the U.S. at (800) 762-2974, outside the U.S. at (317) 572-3993 or fax (317) 572-4002.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print

may not be available in electronic format. For information about Wiley products, visit our web site at

www.wiley.com.

Library of Congress Cataloging-in-Publication is available.

ISBN-13 978-0-471-76986-6

ISBN-10 0-471-76986-X

Printed in the United States of America.

10 9 8 7 6 5 4 3 2 1

PREFACE

The original aim of this book was a discussion of weak approximation results

for Markov processes. The scope has widened with the recognition that each

technique for verifying weak convergence is closely tied to a method of charac-

terizing the limiting process. The result is a book with perhaps more pages

devoted to characterization than to convergence.

The Introduction illustrates the three main techniques for proving con-

vergence theorems applied to a single problem. The first technique is based on

operator semigroup convergence theorems. Convergence of generators (in an

appropriate sense) implies convergence of the corresponding semigroups,

which in turn implies convergence of the Markov processes. Trotter's original

work in this area was motivated in part by diffusion approximations. The

second technique, which is more probabilistic in nature, is based on the mar-

tingale characterization of Markov processes as developed by Stroock and

Varadhan. Here again one must verify convergence of generators, but weak

compactness arguments and the martingale characterization of the limit are

used to complete the proof. The third technique depends on the representation

of the processes as solutions of stochastic equations, and is more in the spirit

of classical analysis. If the equations “converge,” then (one hopes) the solu-

tions converge.

Although the book is intended primarily as a reference, problems are

included in the hope that it will also be useful as a text in a graduate course on

stochastic processes. Such a course might include basic material on stochastic

processes and martingales (Chapter 2, Sections 1-6), an introduction to weak

convergence (Chapter 3, Sections 1-9, omitting some of the more technical

results and proofs), a development of Markov processes and martingale prob-

lems (Chapter 4, Sections 1-4 and 8), and the martingale central limit theorem

(Chapter 7, Section 1). A selection of applications to particular processes could

complete the course.

vi PREFACE

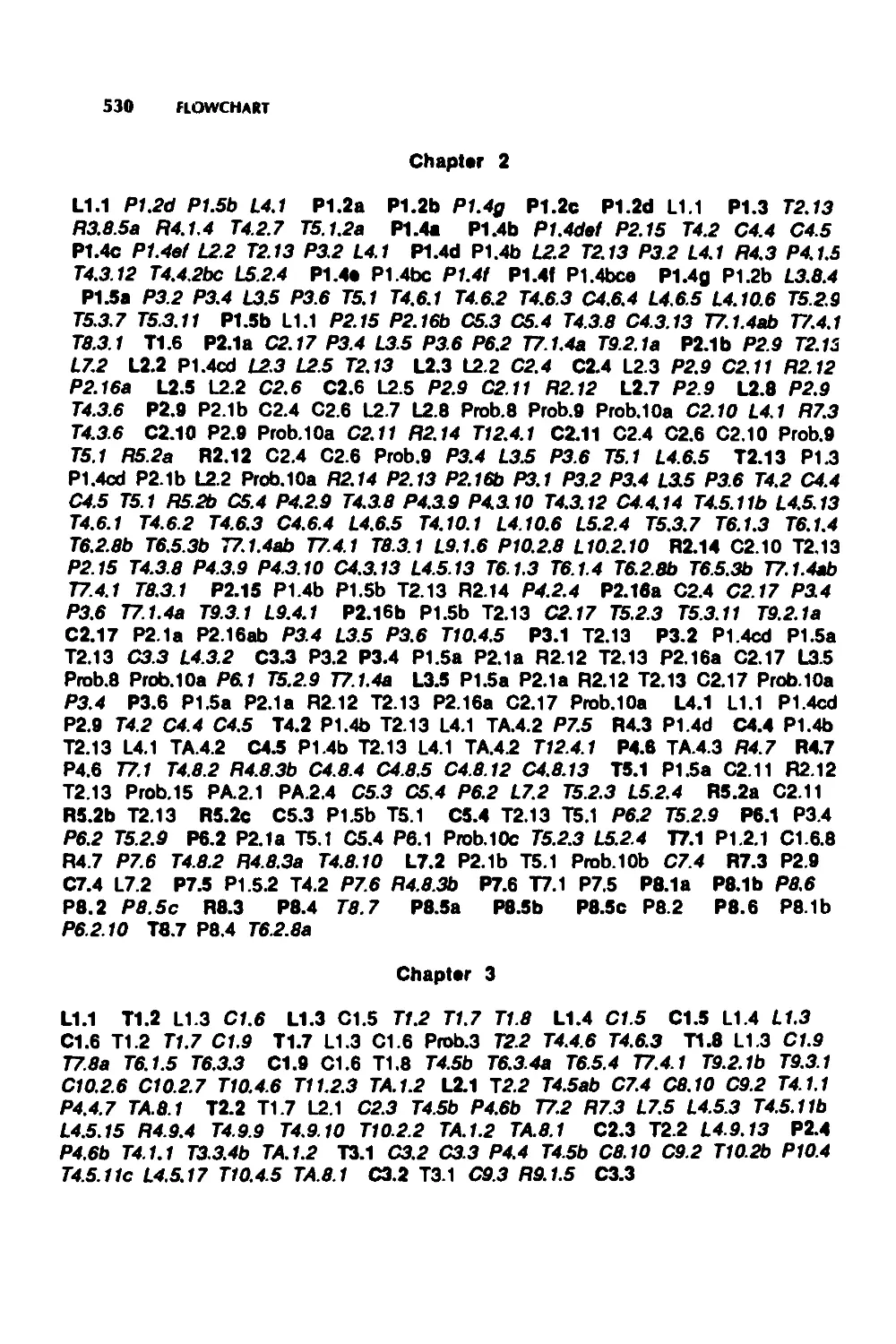

As an aid to the instructor of such a course, we include a flowchart for all

proofs in the book. Thus, if one’s goal is to cover a particular section, the chart

indicates which of the earlier results can be skipped with impunity. (It also

reveals that the course outline suggested above is not entirely self-contained.)

Results contained in standard probability texts such as Billingsley (1979) or

Breiman (1968) are assumed and used without reference, as are results from

measure theory and elementary functional analysis. Our standard reference

here is Rudin (1974). Beyond this, our intent has been to make the book

self-contained (an exception being Chapter 8). At points where this has not

seemed feasible, we have included complete references, frequently discussing

the needed material in appendixes.

Many people contributed toward the completion of this project. Cristina

Costantini, Eimear Goggin, S. J. Sheu, and Richard Stockbridge read large

portions of the manuscript and helped to eliminate a number of errors.

Carolyn Birr, Dee Frana, Diane Reppert, and Marci Kurtz typed the manu-

script. The National Science Foundation and the University of Wisconsin,

through a Romnes Fellowship, provided support for much of the research in

the book.

We are particularly grateful to our editor, Beatrice Shube, for her patience

and constant encouragement. Finally, we must acknowledge our teachers,

colleagues, and friends at Wisconsin and Michigan State, who have provided

the stimulating environment in which ideas germinate and flourish. They con-

tributed to this work in many uncredited ways. We hope they approve of the

result.

Stewart N. Ethier

Thomas G. Kurtz

Salt Lake City, Utah

Madison, Wisconsin

August 1985

CONTENTS

Introduction 1

1 Operator Semigroups 6

1 Definitions and Basic Properties, 6

2 The Hille-Yosida Theorem, 10

3 Cores, 16

4 Multivalued Operators, 20

5 Semigroups on Function Spaces, 22

6 Approximation Theorems, 28

7 Perturbation Theorems, 37

8 Problems, 42

9 Notes, 47

2 Stochastic Processes and Martingales 49

1 Stochastic Processes, 49

2 Martingales, 55

3 Local Martingales, 64

4 The Projection Theorem, 71

5 The Doob-Meyer Decomposition, 74

6 Square Integrable Martingales, 78

7 Semigroups of Conditioned Shifts, 80

8 Martingales Indexed by Directed Sets, 84

9 Problems, 89

10 Notes, 93

3 Convergence of Probability Measures 95

1 The Prohorov Metric, 96

2 Prohorov’s Theorem, 103

3 Weak Convergence, 107

4 Separating and Convergence Determining Sets, 111

5 The Space $D_E[0, \infty)$, 116

6 The Compact Sets of $D_E[0, \infty)$, 122

7 Convergence in Distribution in $D_E[0, \infty)$, 127

8 Criteria for Relative Compactness in $D_E[0, \infty)$, 132

9 Further Criteria for Relative Compactness in $D_E[0, \infty)$, 141

10 Convergence to a Process in $C_E[0, \infty)$, 147

11 Problems, 150

12 Notes, 154

4 Generators and Markov Processes 155

1 Markov Processes and Transition Functions, 156

2 Markov Jump Processes and Feller Processes, 162

3 The Martingale Problem: Generalities and Sample

Path Properties, 173

4 The Martingale Problem: Uniqueness, the Markov

Property, and Duality, 182

5 The Martingale Problem: Existence, 196

6 The Martingale Problem: Localization, 216

7 The Martingale Problem: Generalizations, 221

8 Convergence Theorems, 225

9 Stationary Distributions, 238

10 Perturbation Results, 253

11 Problems, 261

12 Notes, 273

5 Stochastic Integral Equations 275

1 Brownian Motion, 275

2 Stochastic Integrals, 279

3 Stochastic Integral Equations, 290

4 Problems, 302

5 Notes, 305

6 Random Time Changes 306

1 One-Parameter Random Time Changes, 306

2 Multiparameter Random Time Changes, 311

3 Convergence, 321

4 Markov Processes in $\mathbb{Z}^d$, 329

5 Diffusion Processes, 328

6 Problems, 332

7 Notes, 335

7 Invariance Principles and Diffusion Approximations 337

1 The Martingale Central Limit Theorem, 338

2 Measures of Mixing, 345

3 Central Limit Theorems for Stationary Sequences, 350

4 Diffusion Approximations, 354

5 Strong Approximation Theorems, 356

6 Problems, 360

7 Notes, 364

8 Examples of Generators 365

1 Nondegenerate Diffusions, 366

2 Degenerate Diffusions, 371

3 Other Processes, 376

4 Problems, 382

5 Notes, 385

9 Branching Processes 386

1 Galton-Watson Processes, 386

2 Two-Type Markov Branching Processes, 392

3 Branching Processes in Random Environments, 396

4 Branching Markov Processes, 400

5 Problems, 407

6 Notes, 409

10 Genetic Models 410

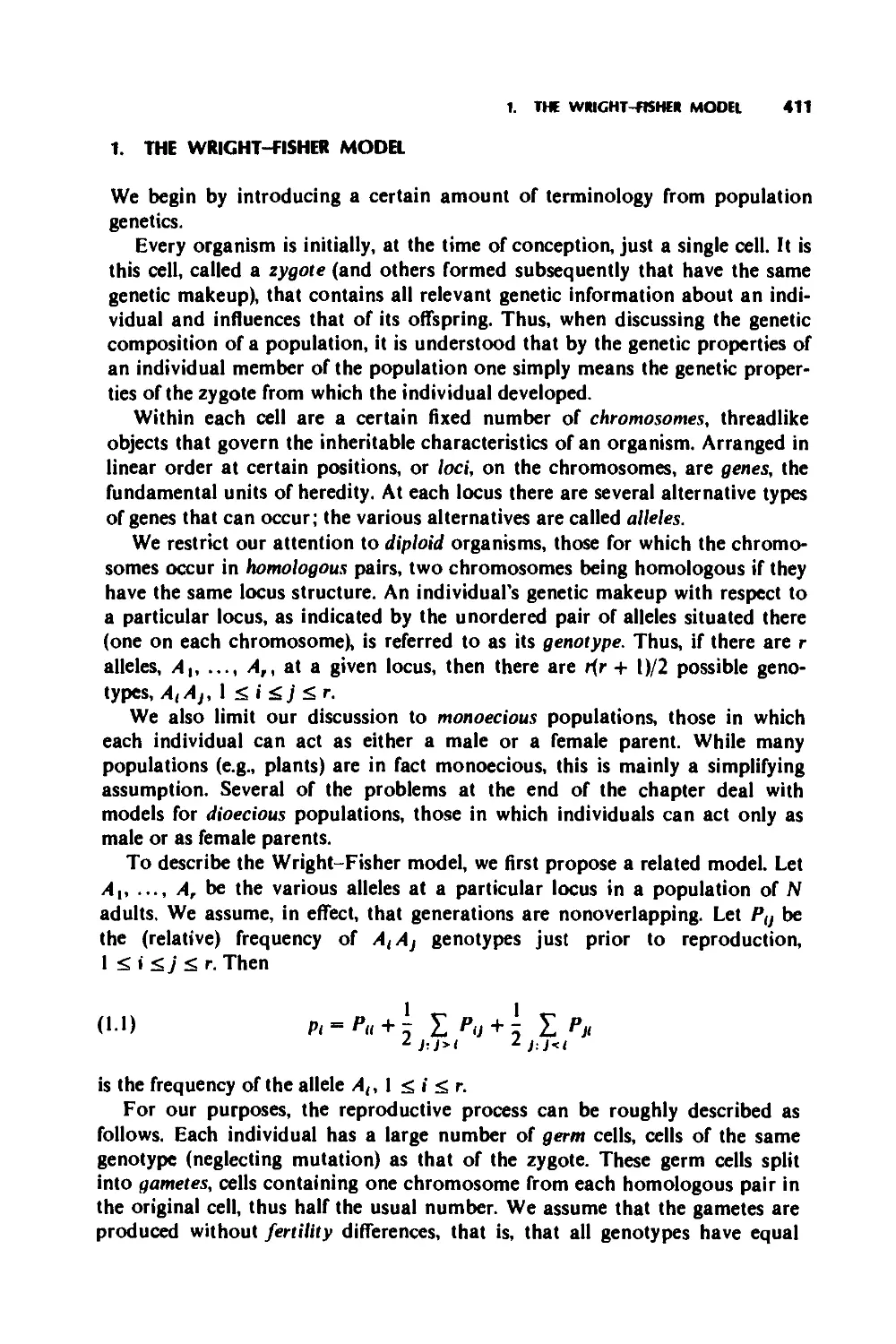

1 The Wright-Fisher Model, 411

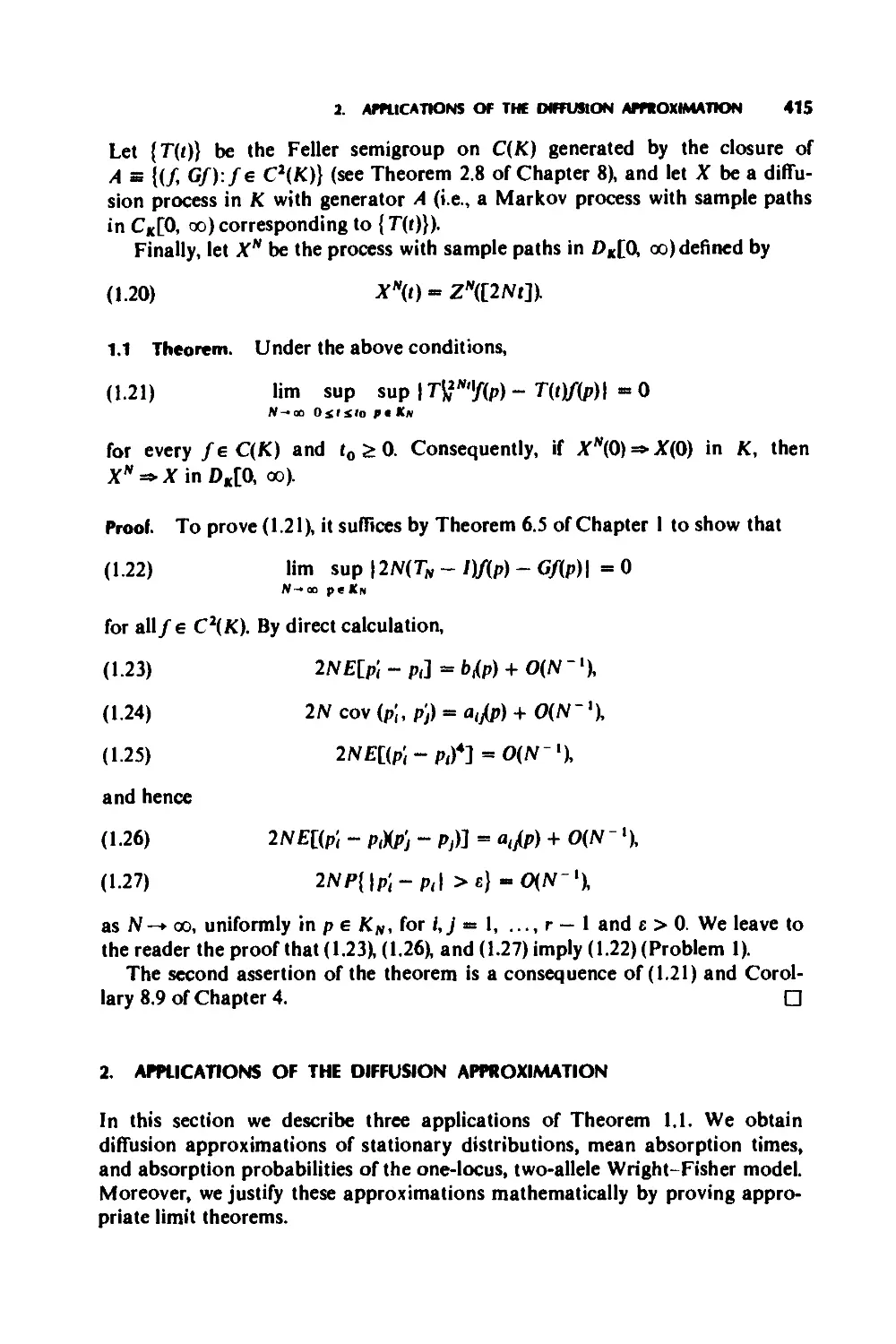

2 Applications of the Diffusion Approximation, 415

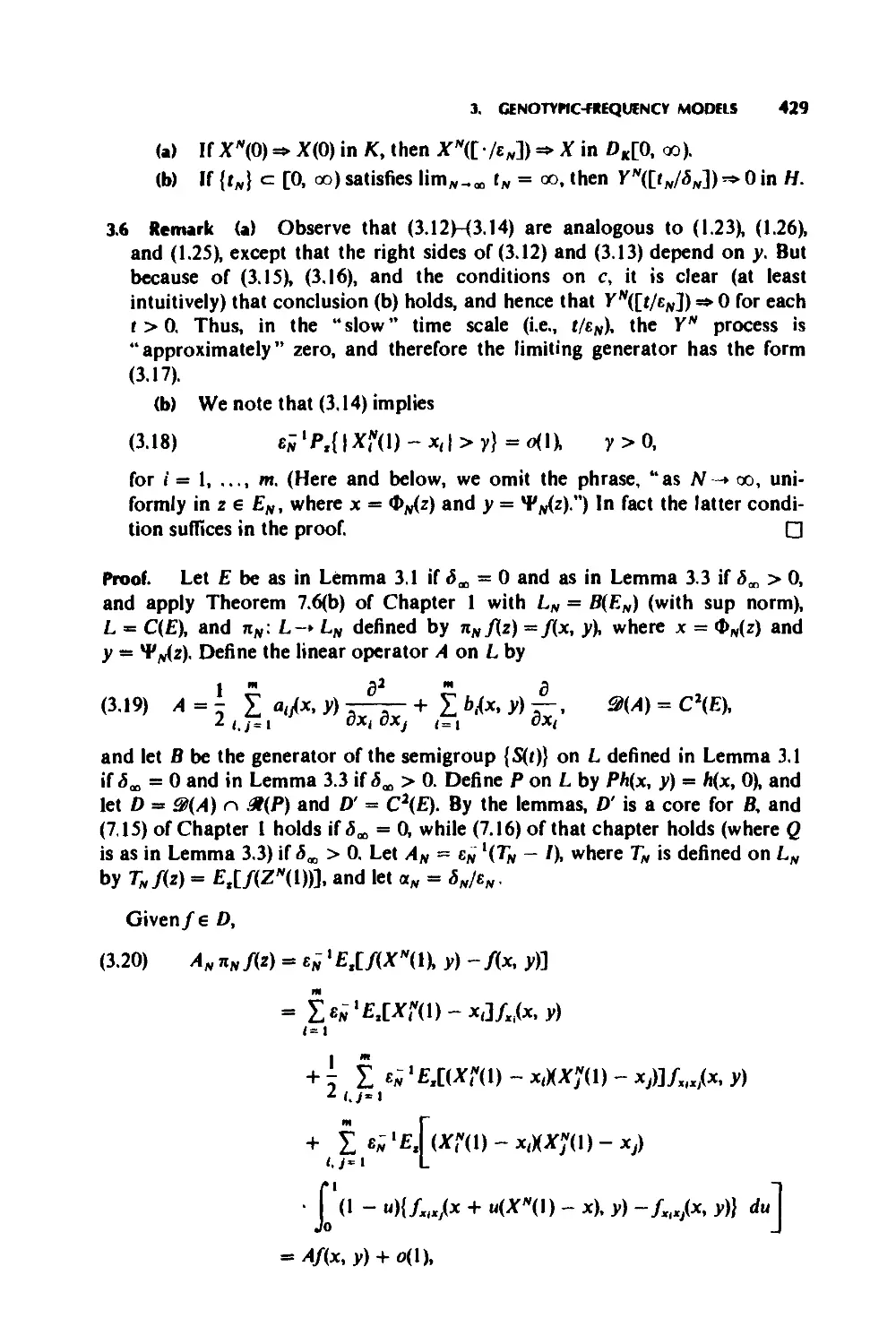

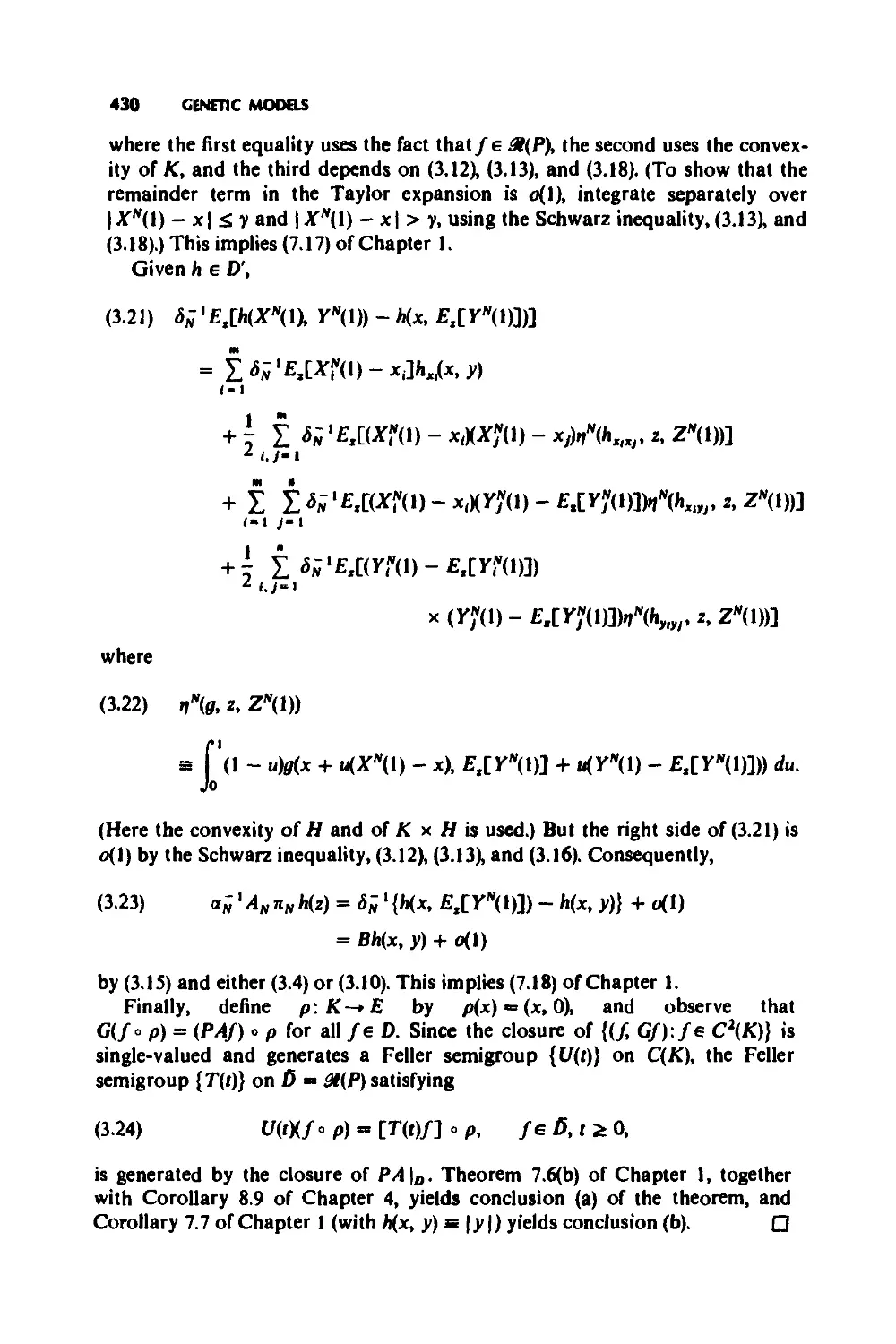

3 Genotypic-Frequency Models, 426

4 Infinitely-Many-Allele Models, 435

5 Problems, 448

6 Notes, 451

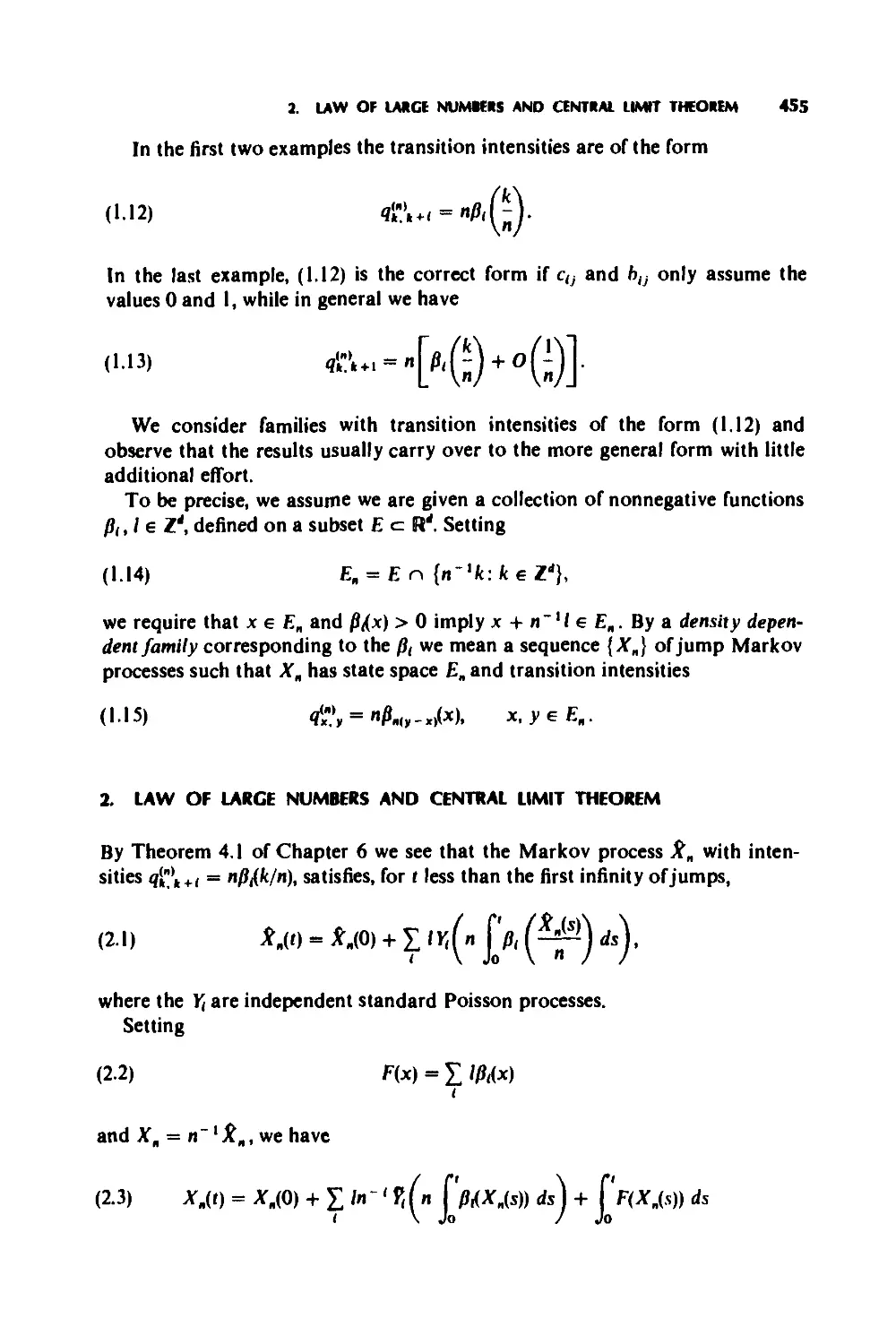

11 Density Dependent Population Processes

1 Examples, 452

2 Law of Large Numbers and Central Limit Theorem, 455

452

X

CONTENTS

3

4

5

6

Diffusion Approximations, 459

Hitting Distributions, 464

Problems, 466

Notes, 467

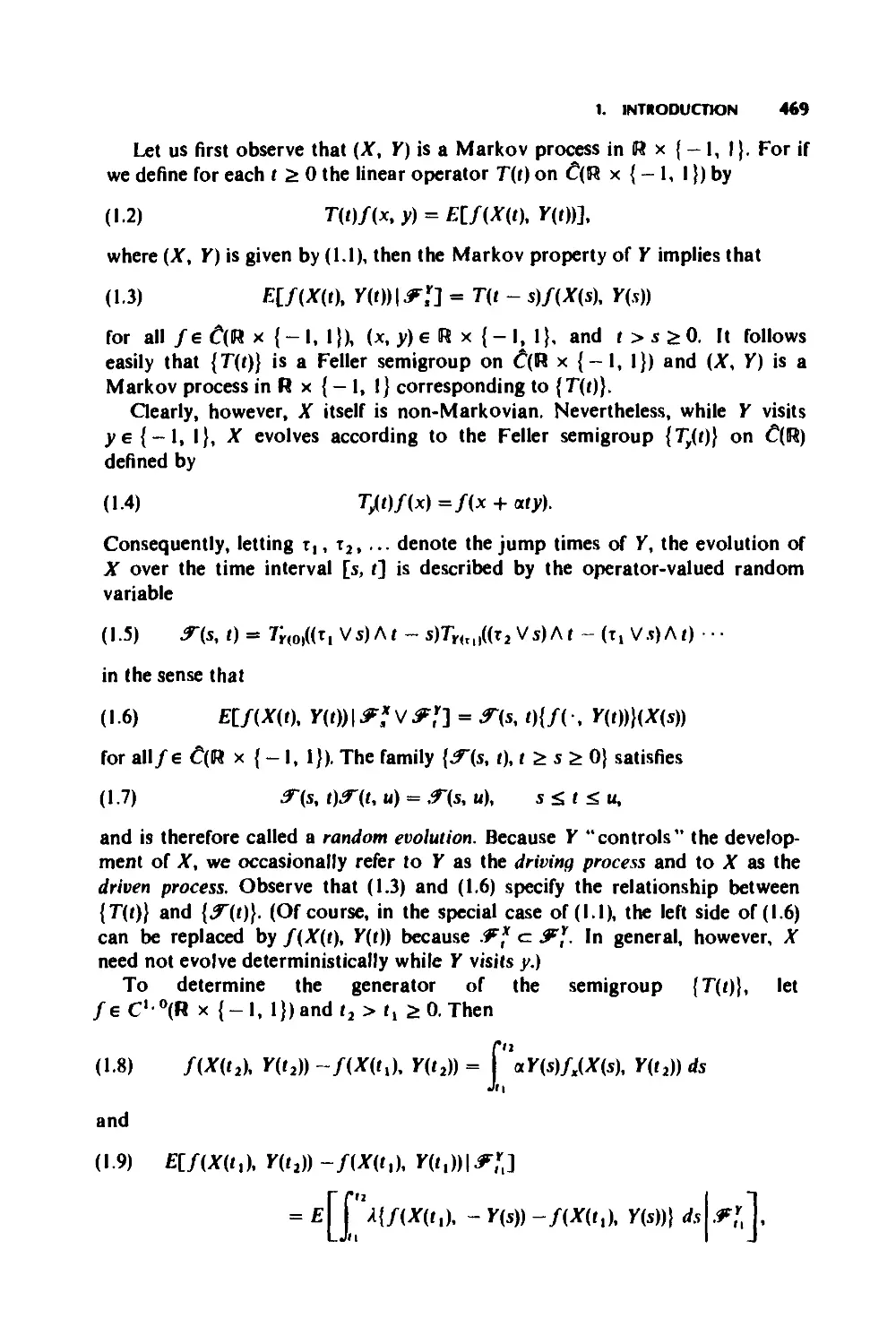

12 Random Evolutions

468

1 Introduction, 468

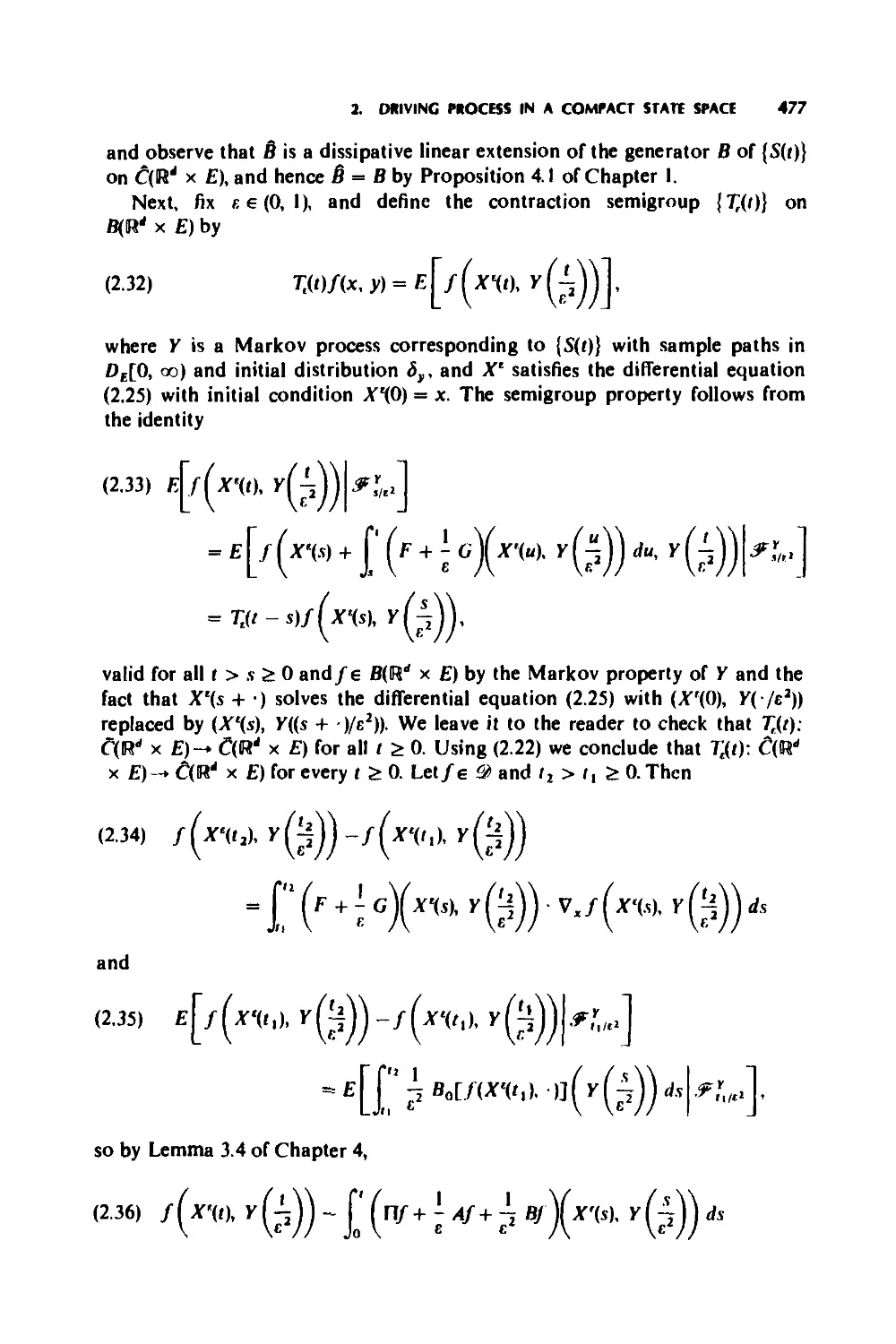

2 Driving Process in a Compact State Space, 472

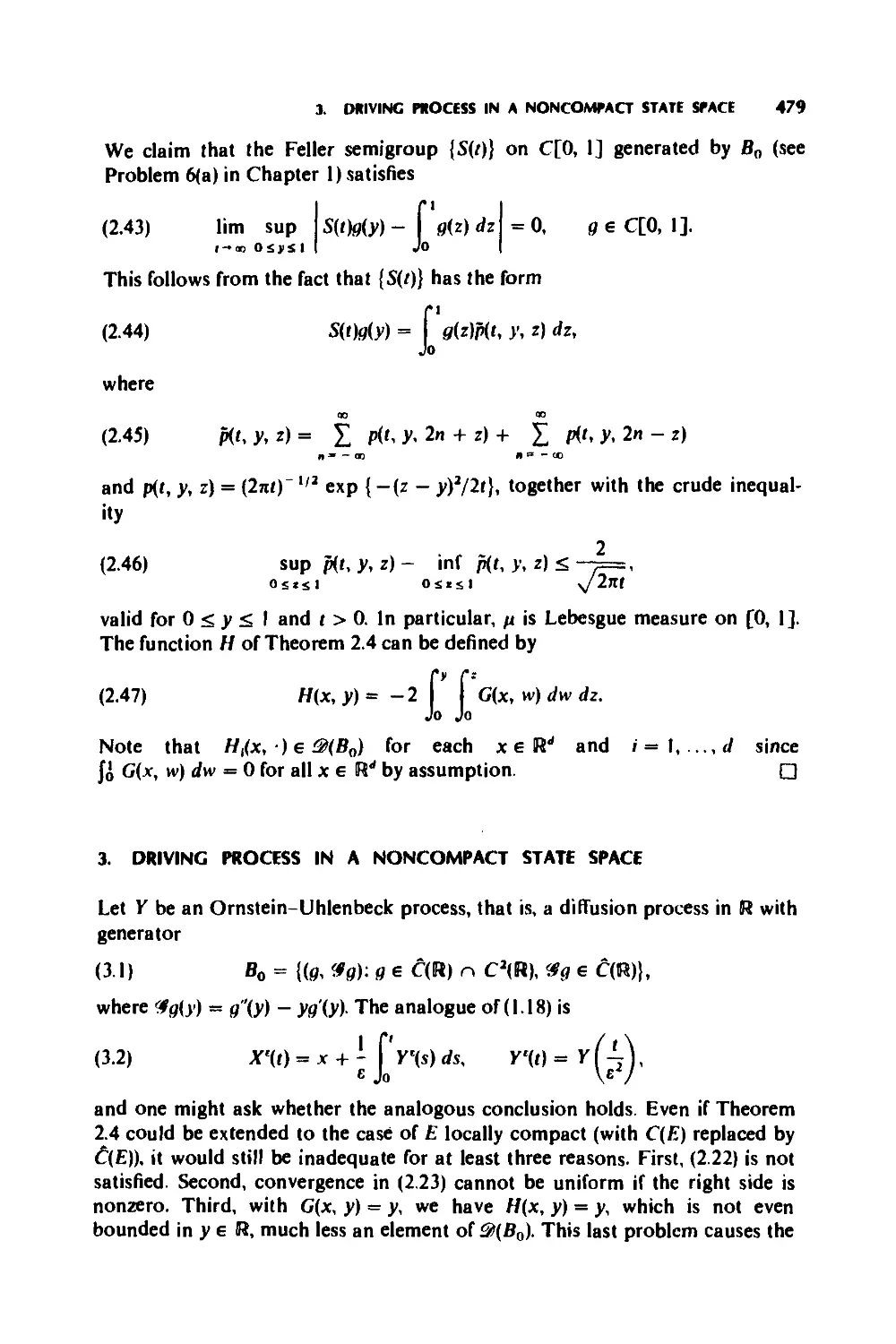

3 Driving Process in a Noncompact State Space, 479

4 Non-Markovian Driving Process, 483

5 Problems, 491

6 Notes, 491

Appendixes 492

1 Convergence of Expectations, 492

2 Uniform Integrability, 493

3 Bounded Pointwise Convergence, 495

4 Monotone Class Theorems, 496

5 Gronwall’s Inequality, 498

6 The Whitney Extension Theorem, 499

7 Approximation by Polynomials, 500

8 Bimeasures and Transition Functions, 502

9 Tulcea’s Theorem, 504

10 Measurable Selections and Measurability of Inverses, 506

11 Analytic Sets, 506

References 508

Index 521

Flowchart 529

Markov Processes Characterization and Convergence

Edited by STEWART N. ETHIER and THOMAS G. KURTZ

Copyright © 1986,2005 by John Wiley & Sons, Inc

INTRODUCTION

The development of any stochastic model involves the identification of proper-

ties and parameters that, one hopes, uniquely characterize a stochastic process.

Questions concerning continuous dependence on parameters and robustness

under perturbation arise naturally out of any such characterization. In fact the

model may well be derived by some sort of limiting or approximation argu-

ment. The interplay between characterization and approximation or con-

vergence problems for Markov processes is the central theme of this book.

Operator semigroups, martingale problems, and stochastic equations provide

approaches to the characterization of Markov processes, and to each of these

approaches correspond methods for proving convergence results.

The processes of interest to us here always have values in a complete, separable metric space $E$, and almost always have sample paths in $D_E[0, \infty)$, the space of right continuous $E$-valued functions on $[0, \infty)$ having left limits. We give $D_E[0, \infty)$ the Skorohod topology (Chapter 3), under which it also becomes a complete, separable metric space. The type of convergence we are usually concerned with is convergence in distribution; that is, for a sequence of processes $\{X_n\}$ we are interested in conditions under which $\lim_{n \to \infty} E[f(X_n)] = E[f(X)]$ for every $f \in \bar{C}(D_E[0, \infty))$. (For a metric space $S$, $\bar{C}(S)$ denotes the space of bounded continuous functions on $S$. Convergence in distribution is denoted by $X_n \Rightarrow X$.) As an introduction to the methods presented in this book we consider a simple but (we hope) illuminating example.

For each $n \ge 1$, define

(1)  $\lambda_n(x) = 1 + 3x\left(x - \frac{1}{n}\right), \qquad \mu_n(x) = 3x + x\left(x - \frac{1}{n}\right)\left(x - \frac{2}{n}\right),$

and let $Y_n$ be a birth-and-death process in $\mathbb{Z}_+$ with transition probabilities satisfying

(2)  $P\{Y_n(t + h) = j + 1 \mid Y_n(t) = j\} = n\lambda_n\left(\frac{j}{n}\right)h + o(h)$

and

(3)  $P\{Y_n(t + h) = j - 1 \mid Y_n(t) = j\} = n\mu_n\left(\frac{j}{n}\right)h + o(h)$

as $h \to 0+$. In this process, known as the Schlögl model, $Y_n(t)$ represents the number of molecules at time $t$ of a substance $R$ in a volume $n$ undergoing the chemical reactions

(4)  $R_0 \underset{3}{\overset{1}{\rightleftharpoons}} R, \qquad R_2 + 2R \underset{1}{\overset{3}{\rightleftharpoons}} 3R,$

with the indicated rates. (See Chapter 11, Section 1.)
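The transition rates (2) and (3) translate directly into a simulation of $Y_n$. The following is a minimal sketch using the standard jump-chain (Gillespie-type) construction; the function names, the volume `n = 100`, the initial state, and the time horizon are illustrative assumptions, not taken from the text.

```python
import random

def lam_n(x, n):
    # Birth rate density from (1): 1 + 3x(x - 1/n)
    return 1.0 + 3.0 * x * (x - 1.0 / n)

def mu_n(x, n):
    # Death rate density from (1): 3x + x(x - 1/n)(x - 2/n)
    return 3.0 * x + x * (x - 1.0 / n) * (x - 2.0 / n)

def simulate_schlogl(n, y0, t_end, rng):
    """Simulate Y_n on [0, t_end]; returns a list of (jump time, state) pairs."""
    t, y = 0.0, y0
    path = [(t, y)]
    while True:
        birth = n * lam_n(y / n, n)   # rate of j -> j + 1, as in (2)
        death = n * mu_n(y / n, n)    # rate of j -> j - 1, as in (3)
        total = birth + death
        t += rng.expovariate(total)   # exponential holding time in state y
        if t > t_end:
            break
        y += 1 if rng.random() * total < birth else -1
        path.append((t, y))
    return path

rng = random.Random(0)                # fixed seed, for reproducibility only
path = simulate_schlogl(n=100, y0=100, t_end=1.0, rng=rng)
```

Because $\mu_n(0) = 0$, the death rate vanishes in state 0 and the simulated path stays in $\mathbb{Z}_+$.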

We rescale and renormalize letting

(5)  $X_n(t) = n^{1/4}\left(n^{-1} Y_n(n^{1/2} t) - 1\right), \qquad t \ge 0.$

The problem is to show that $X_n$ converges in distribution to a Markov process $X$ to be characterized below.

The first method we consider is based on a semigroup characterization of $X$. Let $E_n = \{n^{1/4}(n^{-1}y - 1): y \in \mathbb{Z}_+\}$, and note that

(6)  $T_n(t)f(x) \equiv E[f(X_n(t)) \mid X_n(0) = x]$

defines a semigroup $\{T_n(t)\}$ on $B(E_n)$ with generator of the form

(7)  $G_n f(x) = n^{3/2}\lambda_n(1 + n^{-1/4}x)\{f(x + n^{-3/4}) - f(x)\} + n^{3/2}\mu_n(1 + n^{-1/4}x)\{f(x - n^{-3/4}) - f(x)\}.$

(See Chapter 1.) Letting $\lambda(x) \equiv 1 + 3x^2$, $\mu(x) \equiv 3x + x^3$, and

(8)  $Gf(x) = 4f''(x) - x^3 f'(x),$

a Taylor expansion shows that

(9)  $G_n f(x) = Gf(x) + n^{3/2}\{\lambda_n(1 + n^{-1/4}x) - \lambda(1 + n^{-1/4}x)\}\{f(x + n^{-3/4}) - f(x)\}$

$\qquad + n^{3/2}\{\mu_n(1 + n^{-1/4}x) - \mu(1 + n^{-1/4}x)\}\{f(x - n^{-3/4}) - f(x)\}$

$\qquad + \lambda(1 + n^{-1/4}x)\int_0^1 (1 - u)\{f''(x + un^{-3/4}) - f''(x)\}\,du$

$\qquad + \mu(1 + n^{-1/4}x)\int_0^1 (1 - u)\{f''(x - un^{-3/4}) - f''(x)\}\,du$

$\qquad + \{(\lambda + \mu)(1 + n^{-1/4}x) - (\lambda + \mu)(1)\}\tfrac{1}{2}f''(x),$

for all $f \in C^2(\mathbb{R})$ with $f' \in C_c(\mathbb{R})$ and all $x \in E_n$. Consequently, for such $f$,

(10)  $\lim_{n \to \infty} \sup_{x \in E_n} |G_n f(x) - Gf(x)| = 0.$
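The convergence of $G_n f$ to $Gf$ can be observed numerically. The sketch below evaluates (7) and (8) at a few points of $E_n$ near a fixed set of targets in $[-2, 2]$. The Gaussian test function $f(x) = e^{-x^2}$ is an illustrative assumption; its derivative is not compactly supported, so this is only a pointwise check on a bounded window, not a verification of the supremum over $E_n$ in (10).

```python
import math

def lam_n(x, n):
    return 1.0 + 3.0 * x * (x - 1.0 / n)

def mu_n(x, n):
    return 3.0 * x + x * (x - 1.0 / n) * (x - 2.0 / n)

# Illustrative test function and its first two derivatives (an assumption).
def f(x):
    return math.exp(-x * x)

def f1(x):
    return -2.0 * x * math.exp(-x * x)

def f2(x):
    return (4.0 * x * x - 2.0) * math.exp(-x * x)

def G(x):
    # Limiting generator (8): Gf = 4 f'' - x^3 f'
    return 4.0 * f2(x) - x ** 3 * f1(x)

def G_n(y, n):
    """Evaluate (7) at the point x of E_n corresponding to the integer y."""
    x = n ** 0.25 * (y / n - 1.0)          # so that 1 + n^{-1/4} x = y / n
    h = n ** -0.75
    up = n ** 1.5 * lam_n(y / n, n) * (f(x + h) - f(x))
    down = n ** 1.5 * mu_n(y / n, n) * (f(x - h) - f(x))
    return x, up + down

def max_error(n, targets):
    worst = 0.0
    for target in targets:
        y = round(n * (1.0 + n ** -0.25 * target))   # nearest lattice point
        x, value = G_n(y, n)
        worst = max(worst, abs(value - G(x)))
    return worst

targets = [-2.0 + 0.5 * k for k in range(9)]
errors = [max_error(n, targets) for n in (10**2, 10**4, 10**6)]
```

The last term of (9) is of order $n^{-1/4}$, so the errors should shrink slowly, by roughly a factor of $\sqrt{10}$ for each hundredfold increase in $n$.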

Now by Theorem 1.1 of Chapter 8,

(11)  $A \equiv \{(f, Gf): f \in C[-\infty, \infty] \cap C^2(\mathbb{R}),\ Gf \in C[-\infty, \infty]\}$

is the generator of a Feller semigroup $\{T(t)\}$ on $C[-\infty, \infty]$. By Theorem 2.7 of Chapter 4 and Theorem 1.1 of Chapter 8, there exists a diffusion process $X$ corresponding to $\{T(t)\}$, that is, a strong Markov process $X$ with continuous sample paths such that

(12)  $E[f(X(t)) \mid \mathcal{F}_s^X] = T(t - s)f(X(s))$

for all $f \in C[-\infty, \infty]$ and $t \ge s \ge 0$. ($\mathcal{F}_s^X = \sigma(X(u): u \le s)$.)

To prove that $X_n \Rightarrow X$ (assuming convergence of initial distributions), it suffices by Corollary 8.7 of Chapter 4 to show that (10) holds for all $f$ in a core $D$ for the generator $A$, that is, for all $f$ in a subspace $D$ of $\mathcal{D}(A)$ such that $A$ is the closure of the restriction of $A$ to $D$. We claim that

(13)  $D \equiv \{f + g: f, g \in C^2(\mathbb{R}),\ f' \in C_c(\mathbb{R}),\ (x^2 g)' \in C_c(\mathbb{R})\}$

is a core, and that (10) holds for all $f \in D$. To see that $D$ is a core, first check that

(14)  $\mathcal{D}(A) = \{f \in C[-\infty, \infty] \cap C^2(\mathbb{R}): f'' \in \hat{C}(\mathbb{R}),\ x^3 f' \in C[-\infty, \infty]\}.$

Then let $h \in C^2(\mathbb{R})$ satisfy $\chi_{[-1, 1]} \le h \le \chi_{[-2, 2]}$ and put $h_m(x) = h(x/m)$. Given $f \in \mathcal{D}(A)$, choose $g \in D$ with $(x^2 g)' \in C_c(\mathbb{R})$ and $x^3(f - g)' \in \hat{C}(\mathbb{R})$ and define

(15)  $f_m(x) = f(0) - g(0) + \int_0^x (f - g)'(y)h_m(y)\,dy.$

Then $f_m + g \in D$ for each $m$, $f_m + g \to f$, and $G(f_m + g) \to Gf$.

The second method is based on the characterization of $X$ as the solution of a martingale problem. Observe that

(16)  $f(X_n(t)) - \int_0^t G_n f(X_n(s))\,ds$

is an $\{\mathcal{F}_t^{X_n}\}$-martingale for each $f \in B(E_n)$ with compact support. Consequently, if some subsequence $\{X_{n_k}\}$ converges in distribution to $X$, then, by the continuous mapping theorem (Corollary 1.9 of Chapter 3) and Problem 7 of Chapter 7,

(17)  $f(X(t)) - \int_0^t Gf(X(s))\,ds$

is an $\{\mathcal{F}_t^X\}$-martingale for each $f \in C_c^\infty(\mathbb{R})$, or in other words, $X$ is a solution of the martingale problem for $\{(f, Gf): f \in C_c^\infty(\mathbb{R})\}$. But by Theorem 2.3 of Chapter 8, this property characterizes the distribution on $D_{\mathbb{R}}[0, \infty)$ of $X$. Therefore, Corollary 8.16 of Chapter 4 gives $X_n \Rightarrow X$ (assuming convergence of initial distributions), provided we can show that

(18)  $\lim_{a \to \infty} \varlimsup_{n \to \infty} P\left\{\sup_{0 \le t \le T} |X_n(t)| \ge a\right\} = 0, \qquad T > 0.$

Let $\varphi(x) \equiv e^x + e^{-x}$, and check that there exist constants $C_{n,a} > 0$ such that $G_n \varphi \le C_{n,a}\varphi$ on $[-a, a]$ for each $n \ge 1$ and $a > 0$, and $\lim_{n \to \infty} C_{n,a} < \infty$. Letting $\tau_{n,a} = \inf\{t \ge 0: |X_n(t)| \ge a\}$, we have

(19)  $e^{-C_{n,a}T} \inf_{|y| \ge a} \varphi(y)\, P\left\{\sup_{0 \le t \le T} |X_n(t)| \ge a\right\} \le E[\exp\{-C_{n,a}(\tau_{n,a} \wedge T)\}\varphi(X_n(\tau_{n,a} \wedge T))] \le E[\varphi(X_n(0))]$

by Lemma 3.2 of Chapter 4 and the optional sampling theorem. An additional (mild) assumption on the initial distributions therefore guarantees (18).

Actually we can avoid having to verify (18) by observing that the uniform convergence of $G_n f$ to $Gf$ for $f \in C_c^2(\mathbb{R})$ and the uniqueness for the limiting martingale problem imply (again by Corollary 8.16 of Chapter 4) that $X_n \Rightarrow X$ in $D_{\mathbb{R}^\Delta}[0, \infty)$, where $\mathbb{R}^\Delta$ denotes the one-point compactification of $\mathbb{R}$. Convergence in $D_{\mathbb{R}}[0, \infty)$ then follows from the fact that $X_n$ and $X$ have sample paths in $D_{\mathbb{R}}[0, \infty)$.

Both of the approaches considered so far have involved characterizations in

terms of generators. We now consider methods based on stochastic equations.

First, by Theorems 3.7 and 3.10 of Chapter 5, we can characterize X as the

unique solution of the stochastic integral equation

(20)  $X(t) = X(0) + 2\sqrt{2}\,W(t) - \int_0^t X(s)^3\,ds,$

where $W$ is a standard, one-dimensional, Brownian motion. (In the present example, the term $2\sqrt{2}\,W(t)$ corresponds to the stochastic integral term.) A

convergence theory can be developed using this characterization of X, but we

do not do so here. The interested reader is referred to Kushner (1974).
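For orientation, sample paths of the limiting diffusion in (20) can be approximated on a time grid. The sketch below uses the Euler-Maruyama scheme, which is a standard discretization and not a method developed in this book; the step size, time horizon, initial value, and seed are illustrative assumptions.

```python
import math
import random

def euler_maruyama(x0, t_end, steps, rng):
    """Approximate X(t) = X(0) + 2*sqrt(2)*W(t) - int_0^t X(s)^3 ds on a uniform grid."""
    dt = t_end / steps
    x = x0
    path = [x]
    for _ in range(steps):
        dw = rng.gauss(0.0, math.sqrt(dt))              # Brownian increment over dt
        x = x + 2.0 * math.sqrt(2.0) * dw - x ** 3 * dt
        path.append(x)
    return path

rng = random.Random(1)                                   # fixed seed, for reproducibility only
path = euler_maruyama(x0=0.0, t_end=5.0, steps=5000, rng=rng)
```

The cubic drift pulls the path strongly toward the origin, so the explicit scheme is adequate here for small steps; for large steps or large initial values an explicit scheme with superlinear drift can become unstable.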

The final approach we discuss is based on a characterization of $X$ involving random time changes. We observe first that $Y_n$ satisfies

(21)  $Y_n(t) = Y_n(0) + N_+\left(n\int_0^t \lambda_n(n^{-1}Y_n(s))\,ds\right) - N_-\left(n\int_0^t \mu_n(n^{-1}Y_n(s))\,ds\right),$

where $N_+$ and $N_-$ are independent, standard (parameter 1), Poisson processes. Consequently, $X_n$ satisfies

(22)  $X_n(t) = X_n(0) + n^{-3/4}\tilde{N}_+\left(n^{3/2}\int_0^t \lambda_n(1 + n^{-1/4}X_n(s))\,ds\right)$

$\qquad - n^{-3/4}\tilde{N}_-\left(n^{3/2}\int_0^t \mu_n(1 + n^{-1/4}X_n(s))\,ds\right)$

$\qquad + n^{3/4}\int_0^t (\lambda_n - \mu_n)(1 + n^{-1/4}X_n(s))\,ds,$

where $\tilde{N}_+(u) = N_+(u) - u$ and $\tilde{N}_-(u) = N_-(u) - u$ are independent, centered, standard, Poisson processes. Now it is easy to see that

(23)  $\left(n^{-3/4}\tilde{N}_+(n^{3/2}\,\cdot\,),\ n^{-3/4}\tilde{N}_-(n^{3/2}\,\cdot\,)\right) \Rightarrow (W_+, W_-),$

where $W_+$ and $W_-$ are independent, standard, one-dimensional Brownian motions. Consequently, if some subsequence $\{X_{n_k}\}$ converges in distribution to $X$, one might expect that

(24)  $X(t) = X(0) + W_+(4t) + W_-(4t) - \int_0^t X(s)^3\,ds.$

(In this simple example, (20) and (24) are equivalent, but they will not be so in general.) Clearly, (24) characterizes $X$, and using the estimate (18) we conclude $X_n \Rightarrow X$ (assuming convergence of initial distributions) from Theorem 5.4 of Chapter 6.

For a further discussion of the Schlögl model and related models see Schlögl (1972) and Malek-Mansour et al. (1981). The martingale proof of

convergence is from Costantini and Nappo (1982), and the time change proof

is from Kurtz (1981c).

Chapters 4-7 contain the main characterization and convergence results

(with the emphasis in Chapters 5 and 7 on diffusion processes). Chapters 1-3

contain preliminary material on operator semigroups, martingales, and weak

convergence, and Chapters 8-12 are concerned with applications.

OPERATOR SEMIGROUPS

Operator semigroups provide a primary tool in the study of Markov pro-

cesses. In this chapter we develop the basic background for their study and the

existence and approximation results that are used later as the basis for exis-

tence and approximation theorems for Markov processes. Section 1 gives the

basic definitions, and Section 2 the Hille-Yosida theorem, which characterizes

the operators that are generators of semigroups. Section 3 concerns the

problem of verifying the hypotheses of this theorem, and Sections 4 and 5 are

devoted to generalizations of the concept of the generator. Sections 6 and 7

present the approximation and perturbation results.

Throughout the chapter, L denotes a real Banach space with norm || • ||.

1. DEFINITIONS AND BASIC PROPERTIES

A one-parameter family $\{T(t): t \ge 0\}$ of bounded linear operators on a Banach space $L$ is called a semigroup if $T(0) = I$ and $T(s + t) = T(s)T(t)$ for all $s, t \ge 0$. A semigroup $\{T(t)\}$ on $L$ is said to be strongly continuous if $\lim_{t \to 0} T(t)f = f$ for every $f \in L$; it is said to be a contraction semigroup if $\|T(t)\| \le 1$ for all $t \ge 0$.

Given a bounded linear operator $B$ on $L$, define

(1.1)  $e^{tB} = \sum_{k=0}^{\infty} \frac{1}{k!}\,t^k B^k, \qquad t \ge 0.$

A simple calculation gives $e^{(s+t)B} = e^{sB}e^{tB}$ for all $s, t \ge 0$, and hence $\{e^{tB}\}$ is a semigroup, which can easily be seen to be strongly continuous. Furthermore we have

(1.2)  $\|e^{tB}\| \le \sum_{k=0}^{\infty} \frac{1}{k!}\,t^k \|B^k\| \le \sum_{k=0}^{\infty} \frac{1}{k!}\,t^k \|B\|^k = e^{t\|B\|}, \qquad t \ge 0.$

An inequality of this type holds in general for strongly continuous semi-

groups.
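In finite dimensions the series (1.1) can be summed directly, which gives a quick numerical check of the semigroup property and of the bound (1.2). The sketch below takes $L = \mathbb{R}^2$ with the sup norm, so that the operator norm of a matrix is its maximum absolute row sum; the matrix `B`, the times `s` and `t`, and the truncation at 60 terms are illustrative assumptions.

```python
import math

def mat_mul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def exp_tB(b, t, terms=60):
    """Partial sum of the series (1.1): sum over k of t^k B^k / k!."""
    n = len(b)
    term = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # k = 0
    total = [row[:] for row in term]
    for k in range(1, terms):
        # Multiply the previous term by tB/k to get t^k B^k / k!
        term = [[t / k * x for x in row] for row in mat_mul(term, b)]
        total = [[total[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return total

def op_norm(a):
    """Operator norm induced by the sup norm on R^n (maximum absolute row sum)."""
    return max(sum(abs(x) for x in row) for row in a)

B = [[0.0, 1.0], [-2.0, -0.5]]
s, t = 0.3, 0.7
lhs = exp_tB(B, s + t)
rhs = mat_mul(exp_tB(B, s), exp_tB(B, t))
semigroup_gap = max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2))
bound_holds = op_norm(exp_tB(B, t)) <= math.exp(t * op_norm(B))
```

Here `semigroup_gap` measures the failure of $e^{(s+t)B} = e^{sB}e^{tB}$ up to rounding and truncation, and `bound_holds` records whether (1.2) is satisfied for this particular `B` and `t`.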

1.1 Proposition Let $\{T(t)\}$ be a strongly continuous semigroup on $L$. Then there exist constants $M \ge 1$ and $\omega \ge 0$ such that

(1.3)  $\|T(t)\| \le Me^{\omega t}, \qquad t \ge 0.$

Proof. Note first that there exist constants $M \ge 1$ and $t_0 > 0$ such that $\|T(t)\| \le M$ for $0 \le t \le t_0$. For if not, we could find a sequence $\{t_n\}$ of positive numbers tending to zero such that $\|T(t_n)\| \to \infty$, but then the uniform boundedness principle would imply that $\sup_n \|T(t_n)f\| = \infty$ for some $f \in L$, contradicting the assumption of strong continuity. Now let $\omega = t_0^{-1} \log M$. Given $t \ge 0$, write $t = kt_0 + s$, where $k$ is a nonnegative integer and $0 \le s < t_0$; then

(1.4)  $\|T(t)\| = \|T(s)T(t_0)^k\| \le MM^k \le MM^{t/t_0} = Me^{\omega t}.$ □

1.2 Corollary Let $\{T(t)\}$ be a strongly continuous semigroup on $L$. Then, for each $f \in L$, $t \mapsto T(t)f$ is a continuous function from $[0, \infty)$ into $L$.

Proof. Let $f \in L$. By Proposition 1.1, if $t \ge 0$ and $h \ge 0$, then

(1.5)  $\|T(t + h)f - T(t)f\| = \|T(t)[T(h)f - f]\| \le Me^{\omega t}\|T(h)f - f\|,$

and if $0 \le h \le t$, then

(1.6)  $\|T(t - h)f - T(t)f\| = \|T(t - h)[T(h)f - f]\| \le Me^{\omega t}\|T(h)f - f\|.$ □

1.3 Remark Let $\{T(t)\}$ be a strongly continuous semigroup on $L$ such that (1.3) holds, and put $S(t) = e^{-\omega t}T(t)$ for each $t \ge 0$. Then $\{S(t)\}$ is a strongly continuous semigroup on $L$ such that

(1.7)  $\|S(t)\| \le M, \qquad t \ge 0.$

In particular, if $M = 1$, then $\{S(t)\}$ is a strongly continuous contraction semigroup on $L$.

Let $\{S(t)\}$ be a strongly continuous semigroup on $L$ such that (1.7) holds, and define the norm $|||\cdot|||$ on $L$ by

(1.8)  $|||f||| = \sup_{t \ge 0} \|S(t)f\|.$

Then $\|f\| \le |||f||| \le M\|f\|$ for each $f \in L$, so the new norm is equivalent to the original norm; also, with respect to $|||\cdot|||$, $\{S(t)\}$ is a strongly continuous contraction semigroup on $L$.

Most of the results in the subsequent sections of this chapter are stated in terms of strongly continuous contraction semigroups. Using these reductions, however, many of them can be reformulated in terms of noncontraction semigroups. □

A (possibly unbounded) linear operator $A$ on $L$ is a linear mapping whose domain $\mathcal{D}(A)$ is a subspace of $L$ and whose range $\mathcal{R}(A)$ lies in $L$. The graph of $A$ is given by

(1.9)  $\mathcal{G}(A) = \{(f, Af): f \in \mathcal{D}(A)\} \subset L \times L.$

Note that $L \times L$ is itself a Banach space with componentwise addition and scalar multiplication and norm $\|(f, g)\| = \|f\| + \|g\|$. $A$ is said to be closed if $\mathcal{G}(A)$ is a closed subspace of $L \times L$.

The (infinitesimal) generator of a semigroup $\{T(t)\}$ on $L$ is the linear operator $A$ defined by

(1.10)  $Af = \lim_{t \to 0} \frac{1}{t}\{T(t)f - f\}.$

The domain $\mathcal{D}(A)$ of $A$ is the subspace of all $f \in L$ for which this limit exists.

Before indicating some of the properties of generators, we briefly discuss the

calculus of Banach space-valued functions.

Let $\Delta$ be a closed interval in $(-\infty, \infty)$, and denote by $C_L(\Delta)$ the space of continuous functions $u: \Delta \to L$. Let $C_L^1(\Delta)$ be the space of continuously differentiable functions $u: \Delta \to L$.

If $\Delta$ is the finite interval $[a, b]$, $u: \Delta \to L$ is said to be (Riemann) integrable over $\Delta$ if $\lim_{\delta \to 0} \sum_{k=1}^n u(s_k)(t_k - t_{k-1})$ exists, where $a = t_0 \le s_1 \le t_1 \le \cdots \le t_{n-1} \le s_n \le t_n = b$ and $\delta = \max (t_k - t_{k-1})$; the limit is denoted by $\int_\Delta u(t)\,dt$ or $\int_a^b u(t)\,dt$. If $\Delta = [a, \infty)$, $u: \Delta \to L$ is said to be integrable over $\Delta$ if $u|_{[a, b]}$ is integrable over $[a, b]$ for each $b \ge a$ and $\lim_{b \to \infty} \int_a^b u(t)\,dt$ exists; again, the limit is denoted by $\int_\Delta u(t)\,dt$ or $\int_a^\infty u(t)\,dt$.

We leave the proof of the following lemma to the reader (Problem 3).

1.4 Lemma (a) If $u \in C_L(\Delta)$ and $\int_\Delta \|u(t)\|\,dt < \infty$, then $u$ is integrable over $\Delta$ and

(1.11)  $\left\|\int_\Delta u(t)\,dt\right\| \le \int_\Delta \|u(t)\|\,dt.$

In particular, if $\Delta$ is the finite interval $[a, b]$, then every function in $C_L(\Delta)$ is integrable over $\Delta$.

(b) Let $B$ be a closed linear operator on $L$. Suppose that $u \in C_L(\Delta)$, $u(t) \in \mathcal{D}(B)$ for all $t \in \Delta$, $Bu \in C_L(\Delta)$, and both $u$ and $Bu$ are integrable over $\Delta$. Then $\int_\Delta u(t)\,dt \in \mathcal{D}(B)$ and

(1.12)  $B\int_\Delta u(t)\,dt = \int_\Delta Bu(t)\,dt.$

(c) If $u \in C_L^1[a, b]$, then

(1.13)  $\int_a^b \frac{d}{dt}u(t)\,dt = u(b) - u(a).$

1.5 Proposition Let $\{T(t)\}$ be a strongly continuous semigroup on $L$ with generator $A$.

(a) If $f \in L$ and $t \ge 0$, then $\int_0^t T(s)f\,ds \in \mathcal{D}(A)$ and

(1.14)  $T(t)f - f = A\int_0^t T(s)f\,ds.$

(b) If $f \in \mathcal{D}(A)$ and $t \ge 0$, then $T(t)f \in \mathcal{D}(A)$ and

(1.15)  $\frac{d}{dt}T(t)f = AT(t)f = T(t)Af.$

(c) If $f \in \mathcal{D}(A)$ and $t \ge 0$, then

(1.16)  $T(t)f - f = \int_0^t AT(s)f\,ds = \int_0^t T(s)Af\,ds.$

Proof. (a) Observe that

(1.17)  $\frac{1}{h}[T(h) - I]\int_0^t T(s)f\,ds = \frac{1}{h}\int_0^t [T(s + h)f - T(s)f]\,ds$

$\qquad = \frac{1}{h}\int_h^{t+h} T(s)f\,ds - \frac{1}{h}\int_0^t T(s)f\,ds$

$\qquad = \frac{1}{h}\int_t^{t+h} T(s)f\,ds - \frac{1}{h}\int_0^h T(s)f\,ds$

for all $h > 0$, and as $h \to 0$ the right side of (1.17) converges to $T(t)f - f$.

(b) Since

(1.18)  $\frac{1}{h}[T(t + h)f - T(t)f] = A_h T(t)f = T(t)A_h f$

for all $h > 0$, where $A_h = h^{-1}[T(h) - I]$, it follows that $T(t)f \in \mathcal{D}(A)$ and $(d/dt)^+ T(t)f = AT(t)f = T(t)Af$. Thus, it suffices to check that $(d/dt)^- T(t)f = T(t)Af$ (assuming $t > 0$). But this follows from the identity

(1.19)  $\frac{1}{-h}[T(t - h)f - T(t)f] - T(t)Af = T(t - h)[A_h - A]f + [T(t - h) - T(t)]Af,$

valid for $0 < h \le t$.

(c) This is a consequence of (b) and Lemma 1.4(c). □

1.6 Corollary If $A$ is the generator of a strongly continuous semigroup $\{T(t)\}$ on $L$, then $\mathcal{D}(A)$ is dense in $L$ and $A$ is closed.

Proof. Since $\lim_{t \to 0+} t^{-1}\int_0^t T(s)f\,ds = f$ for every $f \in L$, Proposition 1.5(a) implies that $\mathcal{D}(A)$ is dense in $L$. To show that $A$ is closed, let $\{f_n\} \subset \mathcal{D}(A)$ satisfy $f_n \to f$ and $Af_n \to g$. Then $T(t)f_n - f_n = \int_0^t T(s)Af_n\,ds$ for each $t > 0$, so, letting $n \to \infty$, we find that $T(t)f - f = \int_0^t T(s)g\,ds$. Dividing by $t$ and letting $t \to 0$, we conclude that $f \in \mathcal{D}(A)$ and $Af = g$. □

2. THE HILLE-YOSIDA THEOREM

Let $A$ be a closed linear operator on $L$. If, for some real $\lambda$, $\lambda - A$ ($\equiv \lambda I - A$) is one-to-one, $\mathcal{R}(\lambda - A) = L$, and $(\lambda - A)^{-1}$ is a bounded linear operator on $L$, then $\lambda$ is said to belong to the resolvent set $\rho(A)$ of $A$, and $R_\lambda = (\lambda - A)^{-1}$ is called the resolvent (at $\lambda$) of $A$.

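2.1 Proposition Let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$. Then $(0, \infty) \subset \rho(A)$ and

(2.1)  $(\lambda - A)^{-1}g = \int_0^\infty e^{-\lambda t}T(t)g\,dt$

for all $g \in L$ and $\lambda > 0$.

Proof. Let $\lambda > 0$ be arbitrary. Define $U_\lambda$ on $L$ by $U_\lambda g = \int_0^\infty e^{-\lambda t}T(t)g\,dt$. Since

(2.2)  $\|U_\lambda g\| \le \int_0^\infty e^{-\lambda t}\|T(t)g\|\,dt \le \lambda^{-1}\|g\|$

for each $g \in L$, $U_\lambda$ is a bounded linear operator on $L$. Now given $g \in L$,

(2.3)  $\frac{1}{h}[T(h) - I]U_\lambda g = \frac{1}{h}\int_0^\infty e^{-\lambda t}[T(t + h)g - T(t)g]\,dt$

$\qquad = \frac{e^{\lambda h} - 1}{h}\int_0^\infty e^{-\lambda t}T(t)g\,dt - \frac{e^{\lambda h}}{h}\int_0^h e^{-\lambda t}T(t)g\,dt$

for every $h > 0$, so, letting $h \to 0$, we find that $U_\lambda g \in \mathcal{D}(A)$ and $AU_\lambda g = \lambda U_\lambda g - g$, that is,

(2.4)  $(\lambda - A)U_\lambda g = g, \qquad g \in L.$

In addition, if $g \in \mathcal{D}(A)$, then (using Lemma 1.4(b))

(2.5)  $U_\lambda Ag = \int_0^\infty e^{-\lambda t}T(t)Ag\,dt = \int_0^\infty A(e^{-\lambda t}T(t)g)\,dt = A\int_0^\infty e^{-\lambda t}T(t)g\,dt = AU_\lambda g,$

so

(2.6)  $U_\lambda(\lambda - A)g = g, \qquad g \in \mathcal{D}(A).$

By (2.6), $\lambda - A$ is one-to-one, and by (2.4), $\mathcal{R}(\lambda - A) = L$. Also, $(\lambda - A)^{-1} = U_\lambda$ by (2.4) and (2.6), so $\lambda \in \rho(A)$. Since $\lambda > 0$ was arbitrary, the proof is complete. □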
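Formula (2.1) can be checked numerically in a finite-dimensional case. The sketch below takes $L = \mathbb{R}^2$ with the sup norm and uses the generator `Q` of a two-state Markov chain, for which $e^{tQ}$ is a stochastic matrix (hence a contraction) and is available in closed form. The rates `a` and `b`, the value of `lam`, and the quadrature parameters are illustrative assumptions.

```python
import math

a, b = 2.0, 1.0                     # jump rates of a two-state chain (illustrative)
Q = [[-a, a], [b, -b]]              # generator on L = R^2

def transition(t):
    """e^{tQ} for the two-state chain, in closed form."""
    c = a + b
    decay = math.exp(-c * t)
    pi = [b / c, a / c]             # stationary distribution
    return [[pi[j] + decay * ((1.0 if i == j else 0.0) - pi[j]) for j in range(2)]
            for i in range(2)]

def resolvent(lam):
    """(lam - Q)^{-1} via the explicit 2x2 inverse."""
    m = [[lam - Q[0][0], -Q[0][1]], [-Q[1][0], lam - Q[1][1]]]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]

def laplace_transform(lam, t_max=40.0, steps=40000):
    """Midpoint-rule approximation of the integral in (2.1), truncated at t_max."""
    dt = t_max / steps
    acc = [[0.0, 0.0], [0.0, 0.0]]
    for k in range(steps):
        t = (k + 0.5) * dt
        p = transition(t)
        w = math.exp(-lam * t) * dt
        for i in range(2):
            for j in range(2):
                acc[i][j] += w * p[i][j]
    return acc

lam = 1.0
R = resolvent(lam)
U = laplace_transform(lam)
max_gap = max(abs(R[i][j] - U[i][j]) for i in range(2) for j in range(2))
```

With these rates, $(\lambda - Q)^{-1}$ at $\lambda = 1$ has rows $(1/2, 1/2)$ and $(1/4, 3/4)$, and `max_gap` reflects only the quadrature and truncation error.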

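Let $A$ be a closed linear operator on $L$. Since $(\lambda - A)(\mu - A) = (\mu - A)(\lambda - A)$ for all $\lambda, \mu \in \rho(A)$, we have $(\mu - A)^{-1}(\lambda - A)^{-1} = (\lambda - A)^{-1}(\mu - A)^{-1}$, and a simple calculation gives the resolvent identity

(2.7)  $R_\lambda R_\mu = R_\mu R_\lambda = (\lambda - \mu)^{-1}(R_\mu - R_\lambda), \qquad \lambda, \mu \in \rho(A).$

If $\lambda \in \rho(A)$ and $|\lambda - \mu| < \|R_\lambda\|^{-1}$, then

(2.8)  $\sum_{n=0}^{\infty} (\lambda - \mu)^n R_\lambda^{n+1}$

defines a bounded linear operator that is in fact $(\mu - A)^{-1}$. In particular, this implies that $\rho(A)$ is open in $\mathbb{R}$.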
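The calculation behind the resolvent identity can be written out as follows. It uses only that $(\mu - A)R_\mu = I$ on $L$, and that $R_\lambda(\lambda - A) = I$ on $\mathcal{D}(A)$, which contains the range of $R_\mu$:

```latex
\begin{aligned}
R_\lambda - R_\mu
  &= R_\lambda(\mu - A)R_\mu - R_\lambda(\lambda - A)R_\mu \\
  &= R_\lambda\bigl[(\mu - A) - (\lambda - A)\bigr]R_\mu
   = (\mu - \lambda)\,R_\lambda R_\mu .
\end{aligned}
```

Dividing by $\mu - \lambda$ (for $\lambda \ne \mu$) gives $R_\lambda R_\mu = (\lambda - \mu)^{-1}(R_\mu - R_\lambda)$, and the symmetry of the right side in $\lambda$ and $\mu$ shows again that the two resolvents commute.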

A linear operator $A$ on $L$ is said to be dissipative if $\|\lambda f - Af\| \ge \lambda\|f\|$ for every $f \in \mathcal{D}(A)$ and $\lambda > 0$.

2.2 Lemma Let $A$ be a dissipative linear operator on $L$ and let $\lambda > 0$. Then $A$ is closed if and only if $\mathcal{R}(\lambda - A)$ is closed.

Proof. Suppose $A$ is closed. If $\{f_n\} \subset \mathcal{D}(A)$ and $(\lambda - A)f_n \to h$, then the dissipativity of $A$ implies that $\{f_n\}$ is Cauchy. Thus, there exists $f \in L$ such that $f_n \to f$, and hence $Af_n \to \lambda f - h$. Since $A$ is closed, $f \in \mathcal{D}(A)$ and $h = (\lambda - A)f$. It follows that $\mathcal{R}(\lambda - A)$ is closed.

Suppose $\mathcal{R}(\lambda - A)$ is closed. If $\{f_n\} \subset \mathcal{D}(A)$, $f_n \to f$, and $Af_n \to g$, then $(\lambda - A)f_n \to \lambda f - g$, which equals $(\lambda - A)f_0$ for some $f_0 \in \mathcal{D}(A)$. By the dissipativity of $A$, $f_n \to f_0$, and hence $f = f_0 \in \mathcal{D}(A)$ and $Af = g$. Thus, $A$ is closed. □

2.3 Lemma Let $A$ be a dissipative closed linear operator on $L$, and put $\rho^+(A) = \rho(A) \cap (0, \infty)$. If $\rho^+(A)$ is nonempty, then $\rho^+(A) = (0, \infty)$.

Proof. It suffices to show that $\rho^+(A)$ is both open and closed in $(0, \infty)$. Since $\rho(A)$ is necessarily open in $\mathbb{R}$, $\rho^+(A)$ is open in $(0, \infty)$. Suppose that $\{\lambda_n\} \subset \rho^+(A)$ and $\lambda_n \to \lambda > 0$. Given $g \in L$, let $g_n = (\lambda - A)(\lambda_n - A)^{-1}g$ for each $n$, and note that, because $A$ is dissipative,

(2.9)  $\lim_{n \to \infty} \|g_n - g\| = \lim_{n \to \infty} \|(\lambda - \lambda_n)(\lambda_n - A)^{-1}g\| \le \lim_{n \to \infty} \frac{|\lambda - \lambda_n|}{\lambda_n}\|g\| = 0.$

Hence $\mathcal{R}(\lambda - A)$ is dense in $L$, but because $A$ is closed and dissipative, $\mathcal{R}(\lambda - A)$ is closed by Lemma 2.2, and therefore $\mathcal{R}(\lambda - A) = L$. Using the dissipativity of $A$ once again, we conclude that $\lambda - A$ is one-to-one and $\|(\lambda - A)^{-1}\| \le \lambda^{-1}$. It follows that $\lambda \in \rho^+(A)$, so $\rho^+(A)$ is closed in $(0, \infty)$, as required. □

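2.4 Lemma Let $A$ be a dissipative closed linear operator on $L$, and suppose that $\mathcal{D}(A)$ is dense in $L$ and $(0, \infty) \subset \rho(A)$. Then the Yosida approximation $A_\lambda$ of $A$, defined for each $\lambda > 0$ by $A_\lambda = \lambda A(\lambda - A)^{-1}$, has the following properties:

(a) For each $\lambda > 0$, $A_\lambda$ is a bounded linear operator on $L$ and $\{e^{tA_\lambda}\}$ is a strongly continuous contraction semigroup on $L$.

(b) $A_\lambda A_\mu = A_\mu A_\lambda$ for all $\lambda, \mu > 0$.

(c) $\lim_{\lambda \to \infty} A_\lambda f = Af$ for every $f \in \mathcal{D}(A)$.

Proof. For each $\lambda > 0$, let $R_\lambda = (\lambda - A)^{-1}$ and note that $\|R_\lambda\| \le \lambda^{-1}$. Since $(\lambda - A)R_\lambda = I$ on $L$ and $R_\lambda(\lambda - A) = I$ on $\mathcal{D}(A)$, it follows that

(2.10)  $A_\lambda = \lambda^2 R_\lambda - \lambda I$ on $L$, $\qquad \lambda > 0,$

and

(2.11)  $A_\lambda = \lambda R_\lambda A$ on $\mathcal{D}(A)$, $\qquad \lambda > 0.$

By (2.10), we find that, for each $\lambda > 0$, $A_\lambda$ is bounded and

(2.12)  $\|e^{tA_\lambda}\| = e^{-t\lambda}\|e^{t\lambda^2 R_\lambda}\| \le e^{-t\lambda}e^{t\lambda^2\|R_\lambda\|} \le 1$

for all $t \ge 0$, proving (a). Conclusion (b) is a consequence of (2.10) and (2.7). As for (c), we claim first that

(2.13)  $\lim_{\lambda \to \infty} \lambda R_\lambda f = f, \qquad f \in L.$

Noting that $\|\lambda R_\lambda f - f\| = \|R_\lambda Af\| \le \lambda^{-1}\|Af\| \to 0$ as $\lambda \to \infty$ for each $f \in \mathcal{D}(A)$, (2.13) follows from the facts that $\mathcal{D}(A)$ is dense in $L$ and $\|\lambda R_\lambda - I\| \le 2$ for all $\lambda > 0$. Finally, (c) is a consequence of (2.11) and (2.13). □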
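The Yosida approximation is easy to compute when $A$ is a matrix. The sketch below forms $A_\lambda$ through (2.10) for the generator `Q` of a two-state Markov chain on $L = \mathbb{R}^2$ (sup norm) and measures how far $A_\lambda$ is from `Q` as $\lambda$ grows. In this bounded case the convergence holds in operator norm, which is stronger than the pointwise statement (c). The rates and the values of $\lambda$ are illustrative assumptions.

```python
a, b = 2.0, 1.0                     # jump rates of a two-state chain (illustrative)
Q = [[-a, a], [b, -b]]              # plays the role of the generator A

def resolvent(lam):
    """R_lam = (lam - Q)^{-1} via the explicit 2x2 inverse."""
    m = [[lam - Q[0][0], -Q[0][1]], [-Q[1][0], lam - Q[1][1]]]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]

def yosida(lam):
    """A_lam = lam^2 R_lam - lam I, as in (2.10)."""
    r = resolvent(lam)
    return [[lam * lam * r[i][j] - (lam if i == j else 0.0) for j in range(2)]
            for i in range(2)]

def op_norm(m):
    """Operator norm induced by the sup norm on R^2 (maximum absolute row sum)."""
    return max(sum(abs(x) for x in row) for row in m)

def distance_to_Q(lam):
    a_lam = yosida(lam)
    return op_norm([[a_lam[i][j] - Q[i][j] for j in range(2)] for i in range(2)])

errors = [distance_to_Q(lam) for lam in (10.0, 100.0, 1000.0)]
```

For this particular `Q` one can check by hand that $A_\lambda = \frac{\lambda}{\lambda + a + b}\,Q$, so the errors decay like $1/\lambda$.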

2.5 Lemma If $B$ and $C$ are bounded linear operators on $L$ such that $BC = CB$ and $\|e^{tB}\| \le 1$ and $\|e^{tC}\| \le 1$ for all $t \ge 0$, then

(2.14)  $\|e^{tB}f - e^{tC}f\| \le t\|Bf - Cf\|$

for every $f \in L$ and $t \ge 0$.

Proof. The result follows from the identity

(2.15)  $e^{tB}f - e^{tC}f = \int_0^t \frac{d}{ds}\left[e^{sB}e^{(t-s)C}\right]f\,ds = \int_0^t e^{sB}(B - C)e^{(t-s)C}f\,ds = \int_0^t e^{sB}e^{(t-s)C}(B - C)f\,ds.$

(Note that the last equality uses the commutativity of $B$ and $C$.) □

We are now ready to prove the Hille-Yosida theorem.

2.6 Theorem A linear operator $A$ on $L$ is the generator of a strongly continuous contraction semigroup on $L$ if and only if:

(a) $\mathcal{D}(A)$ is dense in $L$.

(b) $A$ is dissipative.

(c) $\mathcal{R}(\lambda - A) = L$ for some $\lambda > 0$.

Proof. The necessity of the conditions (a)-(c) follows from Corollary 1.6 and Proposition 2.1. We therefore turn to the proof of sufficiency.

By (b), (c), and Lemma 2.2, $A$ is closed and $\rho(A) \cap (0, \infty)$ is nonempty, so by Lemma 2.3, $(0, \infty) \subset \rho(A)$. Using the notation of Lemma 2.4, we define for each $\lambda > 0$ the strongly continuous contraction semigroup $\{T_\lambda(t)\}$ on $L$ by $T_\lambda(t) = e^{tA_\lambda}$. By Lemmas 2.4(b) and 2.5,

(2.16)  $\|T_\lambda(t)f - T_\mu(t)f\| \le t\|A_\lambda f - A_\mu f\|$

for all $f \in L$, $t \ge 0$, and $\lambda, \mu > 0$. Thus, by Lemma 2.4(c), $\lim_{\lambda \to \infty} T_\lambda(t)f$ exists for all $t \ge 0$, uniformly on bounded intervals, for all $f \in \mathcal{D}(A)$, hence for every $f \in \overline{\mathcal{D}(A)} = L$. Denoting the limit by $T(t)f$ and using the identity

(2.17)  $T(s + t)f - T(s)T(t)f = [T(s + t) - T_\lambda(s + t)]f + T_\lambda(s)[T_\lambda(t) - T(t)]f + [T_\lambda(s) - T(s)]T(t)f,$

we conclude that $\{T(t)\}$ is a strongly continuous contraction semigroup on $L$.

It remains only to show that $A$ is the generator of $\{T(t)\}$. By Proposition 1.5(c),

(2.18)  $T_\lambda(t)f - f = \int_0^t T_\lambda(s)A_\lambda f\,ds$

for all $f \in L$, $t \ge 0$, and $\lambda > 0$. For each $f \in \mathcal{D}(A)$ and $t \ge 0$, the identity

(2.19)  $T_\lambda(s)A_\lambda f - T(s)Af = T_\lambda(s)(A_\lambda f - Af) + [T_\lambda(s) - T(s)]Af,$

together with Lemma 2.4(c), implies that $T_\lambda(s)A_\lambda f \to T(s)Af$ as $\lambda \to \infty$, uniformly in $0 \le s \le t$. Consequently, (2.18) yields

(2.20)  $T(t)f - f = \int_0^t T(s)Af\,ds$

for all $f \in \mathcal{D}(A)$ and $t \ge 0$. From this we find that the generator $B$ of $\{T(t)\}$ is an extension of $A$. But, for each $\lambda > 0$, $\lambda - B$ is one-to-one by the necessity of (b), and $\mathcal{R}(\lambda - A) = L$ since $\lambda \in \rho(A)$. We conclude that $B = A$, completing the proof. □

The above proof and Proposition 2.9 below yield the following result as a

by-product.

2.7 Proposition  Let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$, and let $A_\lambda$ be the Yosida approximation of $A$ (defined in Lemma 2.4). Then

(2.21)  $\|e^{tA_\lambda}f - T(t)f\| \le t\|A_\lambda f - Af\|$, $f \in \mathcal{D}(A)$, $t \ge 0$, $\lambda > 0$,

so, for each $f \in L$, $\lim_{\lambda \to \infty} e^{tA_\lambda}f = T(t)f$ for all $t \ge 0$, uniformly on bounded intervals.
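The estimate (2.21) can be checked numerically in a finite-dimensional setting. The sketch below assumes $L = \mathbb{R}^3$ with the sup norm and a hypothetical Q-matrix $A$, so that $T(t) = e^{tA}$; the matrix, the vector $f$, and all helper names (including the simple scaling-and-squaring `expm`) are illustrative assumptions, not part of the text.

```python
def eye(d):
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

def scale(c, X):
    return [[c * x for x in row] for row in X]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inv(X):
    """Gauss-Jordan inverse with partial pivoting."""
    d = len(X)
    M = [row[:] + unit for row, unit in zip(X, eye(d))]
    for c in range(d):
        p = max(range(c, d), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [x / pivot for x in M[c]]
        for r in range(d):
            if r != c:
                factor = M[r][c]
                M[r] = [x - factor * y for x, y in zip(M[r], M[c])]
    return [row[d:] for row in M]

def expm(X, squarings=12, terms=20):
    """exp(X) by scaling and squaring with a truncated Taylor series."""
    Y = scale(1.0 / 2 ** squarings, X)
    term, total = eye(len(X)), eye(len(X))
    for k in range(1, terms):
        term = scale(1.0 / k, mul(term, Y))
        total = add(total, term)
    for _ in range(squarings):
        total = mul(total, total)
    return total

def apply(X, f):
    return [sum(x * v for x, v in zip(row, f)) for row in X]

def dist(f, g):
    """Sup-norm distance between two vectors."""
    return max(abs(x - y) for x, y in zip(f, g))

# Assumed example data: a 3-state Q-matrix and an arbitrary vector f.
A = [[-2.0, 1.0, 1.0],
     [1.0, -1.0, 0.0],
     [0.5, 0.5, -1.0]]
f = [1.0, -0.5, 2.0]
I3 = eye(3)

def yosida(lam):
    """A_lam = lam^2 (lam - A)^{-1} - lam I, as in (2.10)."""
    R = inv(add(scale(lam, I3), scale(-1.0, A)))
    return add(scale(lam * lam, R), scale(-lam, I3))

def check(lam, t):
    """Return (left side, right side) of (2.21)."""
    A_lam = yosida(lam)
    lhs = dist(apply(expm(scale(t, A_lam)), f), apply(expm(scale(t, A)), f))
    rhs = t * dist(apply(A_lam, f), apply(A, f))
    return lhs, rhs

results = {(lam, t): check(lam, t)
           for lam in (1.0, 10.0, 100.0) for t in (0.5, 1.0, 3.0)}
```

For this choice of $A$ and $f$ one has $\|A^2 f\| = 2.25$, so the right side of (2.21) at $\lambda = 100$, $t = 1$ is at most $0.0225$.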

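2.8 Corollary  Let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$. For $M \subset L$, let

(2.22)  $\Lambda_M = \{\lambda > 0: \lambda(\lambda - A)^{-1}: M \to M\}$.

If either (a) $M$ is a closed convex subset of $L$ and $\Lambda_M$ is unbounded, or (b) $M$ is a closed subspace of $L$ and $\Lambda_M$ is nonempty, then

(2.23)  $T(t): M \to M$, $t \ge 0$.

Proof. If $\lambda, \mu > 0$ and $|1 - \mu/\lambda| < 1$, then (cf. (2.8))

(2.24)  $\mu(\mu - A)^{-1} = \sum_{n=0}^{\infty} \frac{\mu}{\lambda}\left(1 - \frac{\mu}{\lambda}\right)^n \left[\lambda(\lambda - A)^{-1}\right]^{n+1}$.

Consequently, if $M$ is a closed convex subset of $L$, then $\lambda \in \Lambda_M$ implies $(0, \lambda] \subset \Lambda_M$, and if $M$ is a closed subspace of $L$, then $\lambda \in \Lambda_M$ implies $(0, 2\lambda) \subset \Lambda_M$. Therefore, under either (a) or (b), we have $\Lambda_M = (0, \infty)$. Finally, by (2.10),

(2.25)
$$
\begin{aligned}
\exp\{tA_\lambda\} &= \exp\{-t\lambda\}\exp\{t\lambda[\lambda(\lambda - A)^{-1}]\} \\
&= e^{-t\lambda}\sum_{n=0}^{\infty} \frac{(t\lambda)^n}{n!}\left[\lambda(\lambda - A)^{-1}\right]^n
\end{aligned}
$$

for all $t \ge 0$ and $\lambda > 0$, so the conclusion follows from Proposition 2.7. □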
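Corollary 2.8(a) can be illustrated with a closed convex cone. In the sketch below, which is only an assumed finite-dimensional example, $L = \mathbb{R}^3$, $A$ is a hypothetical Q-matrix, and $M$ is the cone of vectors with nonnegative coordinates. A matrix maps $M$ into $M$ exactly when all its entries are nonnegative, so the code inspects the smallest entry of $\lambda(\lambda - A)^{-1}$ and of $e^{tA}$. The helper names are illustrative.

```python
def eye(d):
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

def scale(c, X):
    return [[c * x for x in row] for row in X]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inv(X):
    """Gauss-Jordan inverse with partial pivoting."""
    d = len(X)
    M = [row[:] + unit for row, unit in zip(X, eye(d))]
    for c in range(d):
        p = max(range(c, d), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [x / pivot for x in M[c]]
        for r in range(d):
            if r != c:
                factor = M[r][c]
                M[r] = [x - factor * y for x, y in zip(M[r], M[c])]
    return [row[d:] for row in M]

def expm(X, squarings=12, terms=20):
    """exp(X) by scaling and squaring with a truncated Taylor series."""
    Y = scale(1.0 / 2 ** squarings, X)
    term, total = eye(len(X)), eye(len(X))
    for k in range(1, terms):
        term = scale(1.0 / k, mul(term, Y))
        total = add(total, term)
    for _ in range(squarings):
        total = mul(total, total)
    return total

def min_entry(X):
    return min(min(row) for row in X)

# Assumed example: a 3-state Q-matrix; M is the cone of nonnegative vectors.
A = [[-2.0, 1.0, 1.0],
     [1.0, -1.0, 0.0],
     [0.5, 0.5, -1.0]]
I3 = eye(3)

# Smallest entry of lam (lam - A)^{-1} over several lam: nonnegative entries
# mean that each of these lam belongs to Lambda_M in (2.22).
resolvent_min = min(
    min_entry(scale(lam, inv(add(scale(lam, I3), scale(-1.0, A)))))
    for lam in (0.1, 1.0, 10.0, 100.0)
)

# Smallest entry of T(t) = exp(tA): nonnegative entries mean T(t) maps M to M.
semigroup_min = min(min_entry(expm(scale(t, A))) for t in (0.1, 1.0, 5.0))
```

The check covers only finitely many values of $\lambda$ and $t$; it is a sanity check of the statement, not a substitute for the argument via (2.24) and (2.25).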

2.9 Proposition  Let $\{T(t)\}$ and $\{S(t)\}$ be strongly continuous contraction semigroups on $L$ with generators $A$ and $B$, respectively. If $A = B$, then $T(t) = S(t)$ for all $t \ge 0$.

Proof. This result is a consequence of the next proposition. □

2.10 Proposition  Let $A$ be a dissipative linear operator on $L$. Suppose that $u: [0, \infty) \to L$ is continuous, $u(t) \in \mathcal{D}(A)$ for all $t > 0$, $Au: (0, \infty) \to L$ is continuous, and

(2.26)  $u(t) = u(\varepsilon) + \int_\varepsilon^t Au(s)\,ds$

for all $t > \varepsilon > 0$. Then $\|u(t)\| \le \|u(0)\|$ for all $t \ge 0$.

Proof. Let $0 < \varepsilon = t_0 < t_1 < \cdots < t_n = t$. Then

(2.27)
$$
\begin{aligned}
\|u(t)\| &= \|u(\varepsilon)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \|u(t_{i-1})\|\right] \\
&= \|u(\varepsilon)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \|u(t_i) - (t_i - t_{i-1})Au(t_i)\|\right] \\
&\quad + \sum_{i=1}^n \left[\|u(t_i) - (t_i - t_{i-1})Au(t_i)\| - \|u(t_i) - (u(t_i) - u(t_{i-1}))\|\right] \\
&\le \|u(\varepsilon)\| + \sum_{i=1}^n \left[\|u(t_i) - (t_i - t_{i-1})Au(t_i)\| - \left\|u(t_i) - \int_{t_{i-1}}^{t_i} Au(s)\,ds\right\|\right] \\
&\le \|u(\varepsilon)\| + \sum_{i=1}^n \int_{t_{i-1}}^{t_i} \|Au(t_i) - Au(s)\|\,ds,
\end{aligned}
$$

where the first inequality is due to the dissipativity of $A$. The result follows from the continuity of $Au$ and $u$ by first letting $\max_i (t_i - t_{i-1}) \to 0$ and then letting $\varepsilon \to 0$. □

In many applications, an alternative form of the Hille-Yosida theorem is

more useful. To state it, we need two definitions and a lemma.

A linear operator $A$ on $L$ is said to be closable if it has a closed linear extension. If $A$ is closable, then the closure $\bar{A}$ of $A$ is the minimal closed linear extension of $A$; more specifically, it is the closed linear operator $B$ whose graph is the closure (in $L \times L$) of the graph of $A$.

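2.11 Lemma  Let $A$ be a dissipative linear operator on $L$ with $\mathcal{D}(A)$ dense in $L$. Then $A$ is closable and $\mathcal{R}(\lambda - \bar{A}) = \overline{\mathcal{R}(\lambda - A)}$ for every $\lambda > 0$.

Proof. For the first assertion, it suffices to show that if $\{f_n\} \subset \mathcal{D}(A)$, $f_n \to 0$, and $Af_n \to g \in L$, then $g = 0$. Choose $\{g_m\} \subset \mathcal{D}(A)$ such that $g_m \to g$. By the dissipativity of $A$,

(2.28)
$$
\begin{aligned}
\|(\lambda - A)g_m - \lambda g\| &= \lim_{n \to \infty} \|(\lambda - A)(g_m + \lambda f_n)\| \\
&\ge \lim_{n \to \infty} \lambda\|g_m + \lambda f_n\| = \lambda\|g_m\|
\end{aligned}
$$

for every $\lambda > 0$ and each $m$. Dividing by $\lambda$ and letting $\lambda \to \infty$, we find that $\|g_m - g\| \ge \|g_m\|$ for each $m$. Letting $m \to \infty$, we conclude that $g = 0$.

Let $\lambda > 0$. The inclusions $\mathcal{R}(\lambda - A) \subset \mathcal{R}(\lambda - \bar{A}) \subset \overline{\mathcal{R}(\lambda - A)}$ are obvious, so to prove equality, we need only show that $\mathcal{R}(\lambda - \bar{A})$ is closed. But this is an immediate consequence of Lemma 2.2. □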

2.12 Theorem  A linear operator $A$ on $L$ is closable and its closure $\bar{A}$ is the generator of a strongly continuous contraction semigroup on $L$ if and only if:

(a) $\mathcal{D}(A)$ is dense in $L$.

(b) $A$ is dissipative.

(c) $\mathcal{R}(\lambda - A)$ is dense in $L$ for some $\lambda > 0$.

Proof. By Lemma 2.11, $A$ satisfies (a)-(c) above if and only if $A$ is closable and $\bar{A}$ satisfies (a)-(c) of Theorem 2.6. □

3. CORES

In this section we introduce a concept that is of considerable importance in

Sections 6 and 7.


Let $A$ be a closed linear operator on $L$. A subspace $D$ of $\mathcal{D}(A)$ is said to be a core for $A$ if the closure of the restriction of $A$ to $D$ is equal to $A$ (i.e., if $\overline{A|_D} = A$).

3.1 Proposition  Let $A$ be the generator of a strongly continuous contraction semigroup on $L$. Then a subspace $D$ of $\mathcal{D}(A)$ is a core for $A$ if and only if $D$ is dense in $L$ and $\mathcal{R}(\lambda - A|_D)$ is dense in $L$ for some $\lambda > 0$.

3.2 Remark  A subspace of $L$ is dense in $L$ if and only if it is weakly dense (Rudin (1973), Theorem 3.12). □

Proof. The sufficiency follows from Theorem 2.12 and from the observation that, if $A$ and $B$ generate strongly continuous contraction semigroups on $L$ and if $A$ is an extension of $B$, then $A = B$. The necessity depends on Lemma 2.11. □

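3.3 Proposition  Let $A$ be the generator of a strongly continuous contraction semigroup $\{T(t)\}$ on $L$. Let $D_0$ and $D$ be dense subspaces of $L$ with $D_0 \subset D \subset \mathcal{D}(A)$. (Usually, $D_0 = D$.) If $T(t): D_0 \to D$ for all $t \ge 0$, then $D$ is a core for $A$.

Proof. Given $f \in D_0$ and $\lambda > 0$,

(3.1)  $f_n \equiv \frac{1}{n}\sum_{k=0}^{n^2} e^{-\lambda k/n}\,T\!\left(\frac{k}{n}\right)f \in D$

for $n = 1, 2, \ldots$. By the strong continuity of $\{T(t)\}$ and Proposition 2.1,

(3.2)
$$
\begin{aligned}
\lim_{n \to \infty} (\lambda - A)f_n &= \lim_{n \to \infty} \frac{1}{n}\sum_{k=0}^{n^2} e^{-\lambda k/n}\,T\!\left(\frac{k}{n}\right)(\lambda - A)f \\
&= \int_0^\infty e^{-\lambda t}T(t)(\lambda - A)f\,dt \\
&= (\lambda - A)^{-1}(\lambda - A)f = f,
\end{aligned}
$$

so $\overline{\mathcal{R}(\lambda - A|_D)} \supset D_0$. This suffices by Proposition 3.1 since $D_0$ is dense in $L$. □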

Given a dissipative linear operator $A$ with $\mathcal{D}(A)$ dense in $L$, one often wants to show that $\bar{A}$ generates a strongly continuous contraction semigroup on $L$. By Theorem 2.12, a necessary and sufficient condition is that $\mathcal{R}(\lambda - A)$ be dense in $L$ for some $\lambda > 0$. We can view this problem as one of characterizing a core (namely, $\mathcal{D}(A)$) for the generator of a strongly continuous contraction semigroup, except that, unlike the situation in Propositions 3.1 and 3.3, the generator is not provided in advance. Thus, the remainder of this section is primarily concerned with verifying the range condition (condition (c)) of Theorem 2.12.

Observe that the following result generalizes Proposition 3.3.


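3.4 Proposition  Let $A$ be a dissipative linear operator on $L$, and $D_0$ a subspace of $\mathcal{D}(A)$ that is dense in $L$. Suppose that, for each $f \in D_0$, there exists a continuous function $u_f: [0, \infty) \to L$ such that $u_f(0) = f$, $u_f(t) \in \mathcal{D}(A)$ for all $t > 0$, $Au_f: (0, \infty) \to L$ is continuous, and

(3.3)  $u_f(t) - u_f(\varepsilon) = \int_\varepsilon^t Au_f(s)\,ds$

for all $t > \varepsilon > 0$. Then $A$ is closable, the closure of $A$ generates a strongly continuous contraction semigroup $\{T(t)\}$ on $L$, and $T(t)f = u_f(t)$ for all $f \in D_0$ and $t \ge 0$.

Proof. By Lemma 2.11, $A$ is closable. Fix $f \in D_0$ and denote $u_f$ by $u$. Let $t_0 > \varepsilon > 0$, and note that $\int_\varepsilon^{t_0} e^{-t}u(t)\,dt \in \mathcal{D}(\bar{A})$ and

(3.4)  $\bar{A}\int_\varepsilon^{t_0} e^{-t}u(t)\,dt = \int_\varepsilon^{t_0} e^{-t}Au(t)\,dt$.

Consequently,

(3.5)
$$
\begin{aligned}
\int_\varepsilon^{t_0} e^{-t}u(t)\,dt &= (e^{-\varepsilon} - e^{-t_0})u(\varepsilon) + \int_\varepsilon^{t_0} e^{-t}\int_\varepsilon^t Au(s)\,ds\,dt \\
&= (e^{-\varepsilon} - e^{-t_0})u(\varepsilon) + \int_\varepsilon^{t_0} (e^{-s} - e^{-t_0})Au(s)\,ds \\
&= \bar{A}\int_\varepsilon^{t_0} e^{-t}u(t)\,dt + e^{-\varepsilon}u(\varepsilon) - e^{-t_0}u(t_0).
\end{aligned}
$$

Since $\|u(t)\| \le \|f\|$ for all $t \ge 0$ by Proposition 2.10, we can let $\varepsilon \to 0$ and $t_0 \to \infty$ in (3.5) to obtain $\int_0^\infty e^{-t}u(t)\,dt \in \mathcal{D}(\bar{A})$ and

(3.6)  $(1 - \bar{A})\int_0^\infty e^{-t}u(t)\,dt = f$.

We conclude that $\mathcal{R}(1 - \bar{A}) \supset D_0$, which by Theorem 2.6 proves that $\bar{A}$ generates a strongly continuous contraction semigroup $\{T(t)\}$ on $L$. Now for each $f \in D_0$,

(3.7)  $T(t)f - T(\varepsilon)f = \int_\varepsilon^t \bar{A}T(s)f\,ds$

for all $t > \varepsilon > 0$. Subtracting (3.3) from this and applying Proposition 2.10 once again, we obtain the second conclusion of the proposition. □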

The next result shows that a sufficient condition for $\bar{A}$ to generate is that $A$ be triangulizable. Of course, this is a very restrictive assumption, but it is occasionally satisfied.


3.5 Proposition  Let $A$ be a dissipative linear operator on $L$, and suppose that $L_1, L_2, L_3, \ldots$ is a sequence of finite-dimensional subspaces of $\mathcal{D}(A)$ such that $\bigcup_{n=1}^\infty L_n$ is dense in $L$. If $A: L_n \to L_n$ for $n = 1, 2, \ldots$, then $A$ is closable and the closure of $A$ generates a strongly continuous contraction semigroup on $L$.

Proof. For $n = 1, 2, \ldots$, $(\lambda - A)(L_n) = L_n$ for all $\lambda$ not belonging to the set of eigenvalues of $A|_{L_n}$, hence for all but at most finitely many $\lambda > 0$. Consequently, $(\lambda - A)\left(\bigcup_{n=1}^\infty L_n\right) = \bigcup_{n=1}^\infty L_n$ for all but at most countably many $\lambda > 0$ and in particular for some $\lambda > 0$. Thus, the conditions of Theorem 2.12 are satisfied. □

We turn next to a generalization of Proposition 3.3 in a different direction.

The idea is to try to approximate A sufficiently well by a sequence of gener-

ators for which the conditions of Proposition 3.3 are satisfied. Before stating

the result we record the following simple but frequently useful lemma.

3.6 Lemma  Let $A_1, A_2, \ldots$ and $A$ be linear operators on $L$, $D_0$ a subspace of $L$, and $\lambda > 0$. Suppose that, for each $g \in D_0$, there exists $f_n \in \mathcal{D}(A_n) \cap \mathcal{D}(A)$ for $n = 1, 2, \ldots$ such that $g_n \equiv (\lambda - A_n)f_n \to g$ as $n \to \infty$ and

(3.8)  $\lim_{n \to \infty} \|(A_n - A)f_n\| = 0$.

Then $\overline{\mathcal{R}(\lambda - A)} \supset D_0$.

Proof. Given $g \in D_0$, choose $\{f_n\}$ and $\{g_n\}$ as in the statement of the lemma, and observe that $\lim_{n \to \infty} \|(\lambda - A)f_n - g_n\| = 0$ by (3.8). It follows that $\lim_{n \to \infty} \|(\lambda - A)f_n - g\| = 0$, giving the desired result. □

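3.7 Proposition  Let $A$ be a linear operator on $L$ and $D_0$ and $D_1$ dense subspaces of $L$ satisfying $D_0 \subset \mathcal{D}(A) \subset D_1 \subset L$. Let $|||\cdot|||$ be a norm on $D_1$. For $n = 1, 2, \ldots$, suppose that $A_n$ generates a strongly continuous contraction semigroup $\{T_n(t)\}$ on $L$ and $\mathcal{D}(A) \subset \mathcal{D}(A_n)$. Suppose further that there exist $\omega \ge 0$ and a sequence $\{\varepsilon_n\} \subset (0, \infty)$ tending to zero such that, for $n = 1, 2, \ldots$,

(3.9)  $\|(A_n - A)f\| \le \varepsilon_n |||f|||$, $f \in \mathcal{D}(A)$,

(3.10)  $T_n(t): D_1 \to D_1$, $|||T_n(t)|_{D_1}||| \le e^{\omega t}$, $t \ge 0$,

and

(3.11)  $T_n(t): D_0 \to \mathcal{D}(A)$, $t \ge 0$.

Then $A$ is closable and the closure of $A$ generates a strongly continuous contraction semigroup on $L$.

Proof. Observe first that $\mathcal{D}(A)$ is dense in $L$ and, by (3.9) and the dissipativity of each $A_n$, $A$ is dissipative. It therefore suffices to verify condition (c) of Theorem 2.12.

Fix $\lambda > \omega$. Given $g \in D_0$, let

(3.12)  $f_{m,n} = \frac{1}{m}\sum_{k=0}^{m^2} e^{-\lambda k/m}\,T_n\!\left(\frac{k}{m}\right)g \in \mathcal{D}(A)$

for each $m, n \ge 1$ (cf. (3.1)). Then, for $n = 1, 2, \ldots$, $(\lambda - A_n)f_{m,n} \to \int_0^\infty e^{-\lambda t}T_n(t)(\lambda - A_n)g\,dt = g$ as $m \to \infty$, so there exists a sequence $\{m_n\}$ of positive integers such that $(\lambda - A_n)f_{m_n,n} \to g$ as $n \to \infty$. Moreover,

(3.13)
$$
\begin{aligned}
\|(A_n - A)f_{m_n,n}\| &\le \varepsilon_n |||f_{m_n,n}||| \\
&\le \varepsilon_n m_n^{-1}\sum_{k=0}^{m_n^2} e^{-\lambda k/m_n}e^{\omega k/m_n}|||g||| \\
&\to 0 \quad \text{as } n \to \infty
\end{aligned}
$$

by (3.9) and (3.10), so Lemma 3.6 gives the desired conclusion. □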

3.8 Corollary  Let $A$ be a linear operator on $L$ with $\mathcal{D}(A)$ dense in $L$, and let $|||\cdot|||$ be a norm on $\mathcal{D}(A)$ with respect to which $\mathcal{D}(A)$ is a Banach space. For $n = 1, 2, \ldots$, let $T_n$ be a linear $\|\cdot\|$-contraction on $L$ such that $T_n: \mathcal{D}(A) \to \mathcal{D}(A)$, and define $A_n = n(T_n - I)$. Suppose there exist $\omega \ge 0$ and a sequence $\{\varepsilon_n\} \subset (0, \infty)$ tending to zero such that, for $n = 1, 2, \ldots$, (3.9) holds and

(3.14)  $|||T_n|_{\mathcal{D}(A)}||| \le 1 + \frac{\omega}{n}$.

Then $A$ is closable and the closure of $A$ generates a strongly continuous contraction semigroup on $L$.

Proof. We apply Proposition 3.7 with $D_0 = D_1 = \mathcal{D}(A)$. For $n = 1, 2, \ldots$, $\exp\{tA_n\}: \mathcal{D}(A) \to \mathcal{D}(A)$ and

(3.15)  $|||\exp\{tA_n\}|_{\mathcal{D}(A)}||| \le \exp\{-nt\}\exp\{nt\,|||T_n|_{\mathcal{D}(A)}|||\} \le \exp\{\omega t\}$

for all $t \ge 0$, so the hypotheses of the proposition are satisfied. □

4. MULTIVALUED OPERATORS

Recall that if $A$ is a linear operator on $L$, then the graph $\mathcal{G}(A)$ of $A$ is a subspace of $L \times L$ such that $(0, g) \in \mathcal{G}(A)$ implies $g = 0$. More generally, we regard an arbitrary subset $A$ of $L \times L$ as a multivalued operator on $L$ with domain $\mathcal{D}(A) = \{f: (f, g) \in A \text{ for some } g\}$ and range $\mathcal{R}(A) = \{g: (f, g) \in A \text{ for some } f\}$. $A \subset L \times L$ is said to be linear if $A$ is a subspace of $L \times L$. If $A$ is linear, then $A$ is said to be single-valued if $(0, g) \in A$ implies $g = 0$; in this case, $A$ is the graph of a linear operator on $L$, also denoted by $A$, so we write $Af = g$ if $(f, g) \in A$. If $A \subset L \times L$ is linear, then $A$ is said to be dissipative if $\|\lambda f - g\| \ge \lambda\|f\|$ for all $(f, g) \in A$ and $\lambda > 0$; the closure $\bar{A}$ of $A$ is of course just the closure in $L \times L$ of the subspace $A$. Finally, we define $\lambda - A = \{(f, \lambda f - g): (f, g) \in A\}$ for each $\lambda > 0$.

Observe that a (single-valued) linear operator $A$ is closable if and only if the closure of $A$ (in the above sense) is single-valued. Consequently, the term "closable" is no longer needed.

We begin by noting that the generator of a strongly continuous contraction

semigroup is a maximal dissipative (multivalued) linear operator.

4.1 Proposition  Let $A$ be the generator of a strongly continuous contraction semigroup on $L$. Let $B \subset L \times L$ be linear and dissipative, and suppose that $A \subset B$. Then $A = B$.

Proof. Let $(f, g) \in B$ and $\lambda > 0$. Then $(f, \lambda f - g) \in \lambda - B$. Since $\lambda \in \rho(A)$, there exists $h \in \mathcal{D}(A)$ such that $\lambda h - Ah = \lambda f - g$. Hence $(h, \lambda f - g) \in \lambda - A \subset \lambda - B$. By linearity, $(f - h, 0) \in \lambda - B$, so by dissipativity, $f = h$. Hence $g = Ah$, so $(f, g) \in A$. □

We turn next to an extension of Lemma 2.11.

4.2 Lemma  Let $A \subset L \times L$ be linear and dissipative. Then

(4.1)  $A_0 = \{(f, g) \in \bar{A}: g \in \overline{\mathcal{D}(A)}\}$

is single-valued and $\mathcal{R}(\lambda - \bar{A}) = \overline{\mathcal{R}(\lambda - A)}$ for every $\lambda > 0$.

Proof. Given $(0, g) \in A_0$, we must show that $g = 0$. By the definition of $A_0$, there exists a sequence $\{(g_n, h_n)\} \subset A$ such that $g_n \to g$. For each $n$, $(g_n, h_n + \lambda g) \in \bar{A}$ by the linearity of $\bar{A}$, so $\|\lambda g_n - h_n - \lambda g\| \ge \lambda\|g_n\|$ for every $\lambda > 0$ by the dissipativity of $\bar{A}$. Dividing by $\lambda$ and letting $\lambda \to \infty$, we find that $\|g_n - g\| \ge \|g_n\|$ for each $n$. Letting $n \to \infty$, we conclude that $g = 0$.

The proof of the second assertion is similar to that of the second assertion of Lemma 2.11. □

The main result of this section is the following version of the Hille-Yosida

theorem.

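4.3 Theorem  Let $A \subset L \times L$ be linear and dissipative, and define $A_0$ by (4.1). Then $A_0$ is the generator of a strongly continuous contraction semigroup on $\overline{\mathcal{D}(A)}$ if and only if $\overline{\mathcal{R}(\lambda - A)} \supset \mathcal{D}(A)$ for some $\lambda > 0$.

Proof. $A_0$ is single-valued by Lemma 4.2 and is clearly dissipative, so by the Hille-Yosida theorem (Theorem 2.6), $A_0$ generates a strongly continuous contraction semigroup on $\overline{\mathcal{D}(A)}$ if and only if $\mathcal{D}(A_0)$ is dense in $\overline{\mathcal{D}(A)}$ and $\mathcal{R}(\lambda - A_0) = \overline{\mathcal{D}(A)}$ for some $\lambda > 0$. The latter condition is clearly equivalent to $\mathcal{R}(\lambda - \bar{A}) \supset \overline{\mathcal{D}(A)}$ for some $\lambda > 0$, which by Lemma 4.2 is equivalent to $\overline{\mathcal{R}(\lambda - A)} \supset \mathcal{D}(A)$ for some $\lambda > 0$. Thus, to complete the proof, it suffices to show that $\mathcal{D}(A_0)$ is dense in $\overline{\mathcal{D}(A)}$ assuming that $\mathcal{R}(\lambda - A_0) = \overline{\mathcal{D}(A)}$ for some $\lambda > 0$.

By Lemma 2.3, $\mathcal{R}(\lambda - A_0) = \overline{\mathcal{D}(A)}$ for every $\lambda > 0$, so $\mathcal{R}(\lambda - \bar{A}) = \overline{\mathcal{R}(\lambda - A)} \supset \overline{\mathcal{D}(A)}$ for every $\lambda > 0$. By the dissipativity of $\bar{A}$, we may regard $(\lambda - \bar{A})^{-1}$ as a (single-valued) bounded linear operator on $\mathcal{R}(\lambda - \bar{A})$ of norm at most $\lambda^{-1}$ for each $\lambda > 0$. Given $(f, g) \in \bar{A}$ and $\lambda > 0$, $\lambda f - g \in \mathcal{R}(\lambda - \bar{A})$ and $f \in \mathcal{D}(\bar{A}) \subset \overline{\mathcal{D}(A)} \subset \mathcal{R}(\lambda - \bar{A})$, so $g \in \mathcal{R}(\lambda - \bar{A})$, and therefore $\|\lambda(\lambda - \bar{A})^{-1}f - f\| = \|(\lambda - \bar{A})^{-1}g\| \le \lambda^{-1}\|g\|$. Since $\mathcal{D}(\bar{A})$ is dense in $\overline{\mathcal{D}(A)}$, it follows that

(4.2)  $\lim_{\lambda \to \infty} \lambda(\lambda - \bar{A})^{-1}f = f$, $f \in \overline{\mathcal{D}(A)}$.

(Note that this does not follow from (2.13).) But clearly, $(\lambda - \bar{A})^{-1}: \mathcal{R}(\lambda - A_0) \to \mathcal{D}(A_0)$, that is, $(\lambda - \bar{A})^{-1}: \overline{\mathcal{D}(A)} \to \mathcal{D}(A_0)$, for all $\lambda > 0$. In view of (4.2), this completes the proof. □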

Multivalued operators arise naturally in several ways. For example, the

following concept is crucial in Sections 6 and 7.

For $n = 1, 2, \ldots$, let $L_n$, in addition to $L$, be a Banach space with norm also denoted by $\|\cdot\|$, and let $\pi_n: L \to L_n$ be a bounded linear transformation. Assume that $\sup_n \|\pi_n\| < \infty$. If $A_n \subset L_n \times L_n$ is linear for each $n \ge 1$, the extended limit of the sequence $\{A_n\}$ is defined by

(4.3)  $\operatorname*{ex-lim}_{n \to \infty} A_n = \{(f, g) \in L \times L: \text{there exists } (f_n, g_n) \in A_n \text{ for each } n \ge 1 \text{ such that } \|f_n - \pi_n f\| \to 0 \text{ and } \|g_n - \pi_n g\| \to 0\}$.

We leave it to the reader to show that $\operatorname*{ex-lim}_{n \to \infty} A_n$ is necessarily closed in $L \times L$ (Problem 11).

To see that $\operatorname*{ex-lim}_{n \to \infty} A_n$ need not be single-valued even if each $A_n$ is, let $L_n = L$, $\pi_n = I$, and $A_n = B + nC$ for each $n \ge 1$, where $B$ and $C$ are bounded linear operators on $L$. If $f$ belongs to $\mathcal{N}(C)$, the null space of $C$, and $h \in L$, then $A_n(f + (1/n)h) \to Bf + Ch$, so

(4.4)  $\{(f, Bf + Ch): f \in \mathcal{N}(C),\ h \in L\} \subset \operatorname*{ex-lim}_{n \to \infty} A_n$.
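The example behind (4.4) is easy to reproduce numerically. The sketch below assumes $L = \mathbb{R}^2$ with the sup norm and two hypothetical $2 \times 2$ matrices $B$ and $C$, with $\mathcal{N}(C)$ spanned by $(1, 0)$; all names and values are illustrative. For a fixed $f \in \mathcal{N}(C)$, two different choices of $h$ produce two different limits $g$, which is exactly the failure of single-valuedness.

```python
def apply(X, f):
    return [sum(x * v for x, v in zip(row, f)) for row in X]

# Assumed example data (not from the text).
B = [[1.0, 2.0],
     [0.0, 1.0]]
C = [[0.0, 0.0],
     [0.0, 1.0]]      # null space of C is spanned by (1, 0)
f = [1.0, 0.0]        # f lies in N(C)

def A_n(n, x):
    """Apply A_n = B + n C to the vector x."""
    return [b + n * c for b, c in zip(apply(B, x), apply(C, x))]

def target(h):
    """The claimed limit B f + C h."""
    return [b + c for b, c in zip(apply(B, f), apply(C, h))]

def limit_gap(h, n):
    """Sup-norm distance between A_n(f + h/n) and B f + C h."""
    f_n = [fi + hi / n for fi, hi in zip(f, h)]
    return max(abs(x - y) for x, y in zip(A_n(n, f_n), target(h)))

h1, h2 = [0.0, 0.0], [0.0, 3.0]
gaps = {n: (limit_gap(h1, n), limit_gap(h2, n)) for n in (10, 1000, 100000)}
g1, g2 = target(h1), target(h2)   # two distinct values paired with the same f
```

Since $A_n(f + h/n) - (Bf + Ch) = Bh/n$, the gap for `h2` is $\|Bh\|/n = 6/n$ in this example, while both $(f, g_1)$ and $(f, g_2)$ belong to the extended limit.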

Another situation in which multivalued operators arise is described in the

next section.

5. SEMIGROUPS ON FUNCTION SPACES

In this section we want to extend the notion of the generator of a semigroup,

but to do so we need to be able to integrate functions $u: [0, \infty) \to L$ that are

not continuous and to which the Riemann integral of Section 1 does not

apply. For our purposes, the most efficient way to get around this difficulty is

to restrict the class of Banach spaces L under consideration. We therefore

assume in this section that L is a “function space” that arises in the following

way.

Let $(M, \mathcal{M})$ be a measurable space, let $\Gamma$ be a collection of positive measures on $\mathcal{M}$, and let $\mathcal{L}$ be the vector space of $\mathcal{M}$-measurable functions $f$ such that

(5.1)  $\|f\| \equiv \sup_{\mu \in \Gamma} \int |f|\,d\mu < \infty$.

Note that $\|\cdot\|$ is a seminorm on $\mathcal{L}$ but need not be a norm. Let $\mathcal{N} = \{f \in \mathcal{L}: \|f\| = 0\}$ and let $L$ be the quotient space $\mathcal{L}/\mathcal{N}$, that is, $L$ is the space of equivalence classes of functions in $\mathcal{L}$, where $f \sim g$ if $\|f - g\| = 0$. As is typically the case in discussions of $L^p$-spaces, we do not distinguish between a function in $\mathcal{L}$ and its equivalence class in $L$ unless necessary.

$L$ is a Banach space, the completeness following as for $L^p$-spaces. In fact, if $\nu$ is a $\sigma$-finite measure on $\mathcal{M}$, $1 \le q < \infty$, $p^{-1} + q^{-1} = 1$, and

(5.2)  $\Gamma = \{\mu \ge 0: \mu \ll \nu,\ \|d\mu/d\nu\|_q \le 1\}$,

where $\|\cdot\|_q$ is the norm on $L^q(\nu)$, then $L = L^p(\nu)$. Of course, if $\Gamma$ is the set of probability measures on $\mathcal{M}$, then $L = B(M, \mathcal{M})$, the space of bounded $\mathcal{M}$-measurable functions on $M$ with the sup norm.

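Let $(S, \mathcal{S}, \nu)$ be a $\sigma$-finite measure space, let $f: S \times M \to \mathbb{R}$ be $\mathcal{S} \times \mathcal{M}$-measurable, and let $g: S \to [0, \infty)$ be $\mathcal{S}$-measurable. If $\|f(s, \cdot)\| \le g(s)$ for all $s \in S$ and $\int g(s)\,\nu(ds) < \infty$, then

(5.3)
$$
\begin{aligned}
\sup_{\mu \in \Gamma} \int \left|\int f(s, x)\,\nu(ds)\right| \mu(dx) &\le \sup_{\mu \in \Gamma} \int\!\!\int |f(s, x)|\,\mu(dx)\,\nu(ds) \\
&\le \int g(s)\,\nu(ds) < \infty,
\end{aligned}
$$

and we can define $\int f(s, \cdot)\,\nu(ds) \in L$ to be the equivalence class of functions in $\mathcal{L}$ equivalent to $h$, where

(5.4)
$$
h(x) = \begin{cases} \int f(s, x)\,\nu(ds), & \int |f(s, x)|\,\nu(ds) < \infty, \\ 0, & \text{otherwise.} \end{cases}
$$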

With the above in mind, we say that $u: S \to L$ is measurable if there exists an $\mathcal{S} \times \mathcal{M}$-measurable function $v$ such that $v(s, \cdot) \in u(s)$ for each $s \in S$. We define a semigroup $\{T(t)\}$ on $L$ to be measurable if $T(\cdot)f$ is measurable as a function on $([0, \infty), \mathcal{B}[0, \infty))$ for each $f \in L$. We define the full generator $\hat{A}$ of a measurable contraction semigroup $\{T(t)\}$ on $L$ by

(5.5)  $\hat{A} = \left\{(f, g) \in L \times L: T(t)f - f = \int_0^t T(s)g\,ds,\ t \ge 0\right\}$.

We note that $\hat{A}$ is not, in general, single-valued. For example, if $L = B(\mathbb{R})$ with the sup norm and $T(t)f(x) = f(x + t)$, then $(0, g) \in \hat{A}$ for each $g \in B(\mathbb{R})$ that is zero almost everywhere with respect to Lebesgue measure.

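5.1 Proposition  Let $L$ be as above, and let $\{T(t)\}$ be a measurable contraction semigroup on $L$. Then the full generator $\hat{A}$ of $\{T(t)\}$ is linear and dissipative and satisfies

(5.6)  $(\lambda - \hat{A})^{-1}h = \int_0^\infty e^{-\lambda t}T(t)h\,dt$

for all $h \in \mathcal{R}(\lambda - \hat{A})$ and $\lambda > 0$. If

(5.7)  $T(s)\int_0^\infty e^{-\lambda t}T(t)h\,dt = \int_0^\infty e^{-\lambda t}T(s + t)h\,dt$

for all $h \in L$, $\lambda > 0$, and $s \ge 0$, then $\mathcal{R}(\lambda - \hat{A}) = L$ for every $\lambda > 0$.

Proof. Let $(f, g) \in \hat{A}$, $\lambda > 0$, and $h = \lambda f - g$. Then

(5.8)
$$
\begin{aligned}
\int_0^\infty e^{-\lambda t}T(t)h\,dt &= \lambda\int_0^\infty e^{-\lambda t}T(t)f\,dt - \int_0^\infty e^{-\lambda t}T(t)g\,dt \\
&= \lambda\int_0^\infty e^{-\lambda t}T(t)f\,dt - \lambda\int_0^\infty e^{-\lambda t}\int_0^t T(s)g\,ds\,dt \\
&= f.
\end{aligned}
$$

Consequently, $\|f\| \le \lambda^{-1}\|h\|$, proving dissipativity, and (5.6) holds.

Assuming (5.7), let $h \in L$ and $\lambda > 0$, and define $f = \int_0^\infty e^{-\lambda t}T(t)h\,dt$ and $g = \lambda f - h$. Then

(5.9)
$$
\begin{aligned}
\int_0^t T(s)g\,ds &= \lambda\int_0^t\!\int_0^\infty e^{-\lambda u}T(s + u)h\,du\,ds - \int_0^t T(s)h\,ds \\
&= \lambda\int_0^t e^{\lambda s}\int_s^\infty e^{-\lambda u}T(u)h\,du\,ds - \int_0^t T(s)h\,ds \\
&= e^{\lambda t}\int_t^\infty e^{-\lambda u}T(u)h\,du - \int_0^\infty e^{-\lambda u}T(u)h\,du \\
&\quad + \int_0^t T(s)h\,ds - \int_0^t T(s)h\,ds \\
&= T(t)f - f
\end{aligned}
$$

for all $t \ge 0$, so $(f, g) \in \hat{A}$ and $h = \lambda f - g \in \mathcal{R}(\lambda - \hat{A})$. □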


The following proposition, which is analogous to Proposition 1.5(a), gives a useful description of some elements of $\hat{A}$.

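5.2 Proposition  Let $L$ and $\{T(t)\}$ be as in Proposition 5.1, let $h \in L$ and $u \ge 0$, and suppose that

(5.10)  $T(t)\int_0^u T(s)h\,ds = \int_0^u T(t + s)h\,ds$

for all $t \ge 0$. Then

(5.11)  $\left(\int_0^u T(s)h\,ds,\ T(u)h - h\right) \in \hat{A}$.

Proof. Put $f = \int_0^u T(s)h\,ds$. Then

(5.12)
$$
\begin{aligned}
T(t)f - f &= \int_0^u T(t + s)h\,ds - \int_0^u T(s)h\,ds \\
&= \int_u^{t+u} T(s)h\,ds - \int_0^t T(s)h\,ds \\
&= \int_0^t T(s)(T(u)h - h)\,ds
\end{aligned}
$$

for all $t \ge 0$. □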

In the present context, given a dissipative closed linear operator $A \subset L \times L$, it may be possible to find measurable functions $u: [0, \infty) \to L$ and $v: [0, \infty) \to L$ such that $(u(t), v(t)) \in A$ for every $t > 0$ and

(5.13)  $u(t) = u(0) + \int_0^t v(s)\,ds$, $t \ge 0$.

One would expect $u$ to be continuous, and since $A$ is closed and linear, it is reasonable to expect that

(5.14)  $\left(\int_0^t u(s)\,ds,\ \int_0^t v(s)\,ds\right) = \left(\int_0^t u(s)\,ds,\ u(t) - u(0)\right) \in A$

for all $t > 0$. With these considerations in mind, we have the following multivalued extension of Proposition 2.10. Note that this result is in fact valid for arbitrary $L$.


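5.3 Proposition  Let $A \subset L \times L$ be a dissipative closed linear operator. Suppose $u: [0, \infty) \to L$ is continuous and $\left(\int_0^t u(s)\,ds,\ u(t) - u(0)\right) \in A$ for each $t > 0$. Then

(5.15)  $\|u(t)\| \le \|u(0)\|$

for all $t \ge 0$. Given $\lambda > 0$, define

(5.16)  $f = \int_0^\infty e^{-\lambda t}u(t)\,dt$, $\quad g = \lambda\int_0^\infty e^{-\lambda t}(u(t) - u(0))\,dt$.

Then $(f, g) \in A$ and $\lambda f - g = u(0)$.

Proof. Fix $t \ge 0$, and for each $\varepsilon > 0$, put $u_\varepsilon(t) = \varepsilon^{-1}\int_t^{t+\varepsilon} u(s)\,ds$. Then

(5.17)  $u_\varepsilon(t) = u_\varepsilon(0) + \int_0^t \varepsilon^{-1}(u(s + \varepsilon) - u(s))\,ds$.

Since $(u_\varepsilon(t),\ \varepsilon^{-1}(u(t + \varepsilon) - u(t))) \in A$, it follows as in Proposition 2.10 that $\|u_\varepsilon(t)\| \le \|u_\varepsilon(0)\|$. Letting $\varepsilon \to 0$, we obtain (5.15).

Integrating by parts,

(5.18)  $f = \int_0^\infty e^{-\lambda t}u(t)\,dt = \lambda\int_0^\infty e^{-\lambda t}\int_0^t u(s)\,ds\,dt$,

so $(f, g) \in A$ by the continuity of $u$ and the fact that $A$ is closed and linear. The equation $\lambda f - g = u(0)$ follows immediately from the definition of $f$ and $g$. □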

Heuristically, if $\{S(t)\}$ has generator $B$ and $\{T(t)\}$ has generator $A + B$, then (cf. Lemma 6.2)

(5.19)  $T(t)f = S(t)f + \int_0^t S(t - s)AT(s)f\,ds$

for all $t \ge 0$. Consequently, a weak form of the equation $u_t = (A + B)u$ is

(5.20)  $u(t) = S(t)u(0) + \int_0^t S(t - s)Au(s)\,ds$.

We extend Proposition 5.3 to this setting.

5.4 Proposition  Let $L$ be as in Proposition 5.1, let $A \subset L \times L$ be a dissipative closed linear operator, and let $\{S(t)\}$ be a strongly continuous, measurable, contraction semigroup on $L$. Suppose $u: [0, \infty) \to L$ is continuous, $v: [0, \infty) \to L$ is bounded and measurable, and

(5.21)  $u(t) = S(t)u(0) + \int_0^t S(t - s)v(s)\,ds$

for all $t \ge 0$. If

(5.22)  $\left(\int_0^t u(s)\,ds,\ \int_0^t v(s)\,ds\right) \in A$

for every $t > 0$, and

(5.23)  $\int_0^t S(q + r)v(s)\,ds = S(q)\int_0^t S(r)v(s)\,ds$

for all $q, r, t \ge 0$, then (5.15) holds for all $t \ge 0$.

5.5 Remark  The above result holds in an arbitrary Banach space under the assumption that $v$ is strongly measurable, that is, $v$ can be uniformly approximated by measurable simple functions.

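Proof. Assume first that $u: [0, \infty) \to L$ is continuously differentiable, $v: [0, \infty) \to L$ is continuous, and $(u(t), v(t)) \in A$ for all $t \ge 0$. Let $0 = t_0 < t_1 < \cdots < t_n = t$. Then, as in the proof of Proposition 2.10,

(5.24)
$$
\begin{aligned}
\|u(t)\| &= \|u(0)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \|u(t_{i-1})\|\right] \\
&= \|u(0)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \left\|u(t_i) - (S(t_i - t_{i-1}) - I)u(t_{i-1}) - \int_{t_{i-1}}^{t_i} S(t_i - s)v(s)\,ds\right\|\right] \\
&\le \|u(0)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \|u(t_i) - (S(t_i - t_{i-1}) - I)u(t_i) - (t_i - t_{i-1})v(t_i)\|\right] \\
&\quad + \sum_{i=1}^n \left\|(S(t_i - t_{i-1}) - I)(u(t_i) - u(t_{i-1})) - \int_{t_{i-1}}^{t_i} (S(t_i - s)v(s) - v(t_i))\,ds\right\| \\
&\le \|u(0)\| + \sum_{i=1}^n \left[\|u(t_i)\| - \|2u(t_i) - (t_i - t_{i-1})v(t_i)\| + \|S(t_i - t_{i-1})u(t_i)\|\right] \\
&\quad + \sum_{i=1}^n \left\|\int_{t_{i-1}}^{t_i} \left[(S(t_i - t_{i-1}) - I)u'(s) - S(t_i - s)v(s) + v(t_i)\right]ds\right\| \\
&\le \|u(0)\| + \int_0^t \|(S(s'' - s') - I)u'(s) - S(s'' - s)v(s) + v(s'')\|\,ds,
\end{aligned}
$$

where $s' = t_{i-1}$ and $s'' = t_i$ for $t_{i-1} \le s < t_i$. Since the integrand on the right is bounded and tends to zero as $\max_i (t_i - t_{i-1}) \to 0$, we obtain (5.15) in this case.

In the general case, fix $t \ge 0$, and for each $\varepsilon > 0$, put

(5.25)  $u_\varepsilon(t) = \varepsilon^{-1}\int_t^{t+\varepsilon} u(s)\,ds$, $\quad v_\varepsilon(t) = \varepsilon^{-1}\int_t^{t+\varepsilon} v(s)\,ds$.

Then

(5.26)
$$
\begin{aligned}
u_\varepsilon(t) &= \varepsilon^{-1}\int_0^\varepsilon u(t + s)\,ds \\
&= \varepsilon^{-1}\int_0^\varepsilon S(t + s)u(0)\,ds + \varepsilon^{-1}\int_0^\varepsilon\!\int_0^{t+s} S(t + s - r)v(r)\,dr\,ds \\
&= \varepsilon^{-1}S(t)\int_0^\varepsilon S(s)u(0)\,ds + \varepsilon^{-1}\int_0^\varepsilon\!\int_0^s S(t + s - r)v(r)\,dr\,ds \\
&\quad + \varepsilon^{-1}\int_0^\varepsilon\!\int_0^t S(t - r)v(r + s)\,dr\,ds \\
&= S(t)\left[\varepsilon^{-1}\int_0^\varepsilon S(s)u(0)\,ds + \varepsilon^{-1}\int_0^\varepsilon\!\int_0^s S(s - r)v(r)\,dr\,ds\right] \\
&\quad + \int_0^t S(t - r)v_\varepsilon(r)\,dr.
\end{aligned}
$$

By the special case already treated,

(5.27)  $\|u_\varepsilon(t)\| \le \left\|\varepsilon^{-1}\int_0^\varepsilon S(s)u(0)\,ds + \varepsilon^{-1}\int_0^\varepsilon\!\int_0^s S(s - r)v(r)\,dr\,ds\right\|$,

and letting $\varepsilon \to 0$, we obtain (5.15) in general. □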

6. APPROXIMATION THEOREMS

In this section, we adopt the following conventions. For $n = 1, 2, \ldots$, $L_n$, in addition to $L$, is a Banach space (with norm also denoted by $\|\cdot\|$) and $\pi_n: L \to L_n$ is a bounded linear transformation. We assume that $\sup_n \|\pi_n\| < \infty$. We write $f_n \to f$ if $f_n \in L_n$ for each $n \ge 1$, $f \in L$, and $\lim_{n \to \infty} \|f_n - \pi_n f\| = 0$.

6.1 Theorem  For $n = 1, 2, \ldots$, let $\{T_n(t)\}$ and $\{T(t)\}$ be strongly continuous contraction semigroups on $L_n$ and $L$ with generators $A_n$ and $A$. Let $D$ be a core for $A$. Then the following are equivalent:

(a) For each $f \in L$, $T_n(t)\pi_n f \to T(t)f$ for all $t \ge 0$, uniformly on bounded intervals.

(b) For each $f \in L$, $T_n(t)\pi_n f \to T(t)f$ for all $t \ge 0$.

(c) For each $f \in D$, there exists $f_n \in \mathcal{D}(A_n)$ for each $n \ge 1$ such that $f_n \to f$ and $A_n f_n \to Af$ (i.e., $\{(f, Af): f \in D\} \subset \operatorname*{ex-lim}_{n \to \infty} A_n$).

The proof of this result depends on the following two lemmas, the first of

which generalizes Lemma 2.5.

6.2 Lemma  Fix a positive integer $n$. Let $\{S_n(t)\}$ and $\{S(t)\}$ be strongly continuous contraction semigroups on $L_n$ and $L$ with generators $B_n$ and $B$. Let $f \in \mathcal{D}(B)$ and assume that $\pi_n S(s)f \in \mathcal{D}(B_n)$ for all $s \ge 0$ and that $B_n\pi_n S(\cdot)f: [0, \infty) \to L_n$ is continuous. Then, for each $t \ge 0$,

(6.1)  $S_n(t)\pi_n f - \pi_n S(t)f = \int_0^t S_n(t - s)(B_n\pi_n - \pi_n B)S(s)f\,ds$,

and therefore

(6.2)  $\|S_n(t)\pi_n f - \pi_n S(t)f\| \le \int_0^t \|(B_n\pi_n - \pi_n B)S(s)f\|\,ds$.

Proof. It suffices to note that the integrand in (6.1) is $-(d/ds)S_n(t - s)\pi_n S(s)f$ for $0 \le s \le t$. □

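6.3 Lemma  Suppose that the hypotheses of Theorem 6.1 are satisfied together with condition (c) of that theorem. For $n = 1, 2, \ldots$ and $\lambda > 0$, let $A_n^\lambda$ and $A^\lambda$ be the Yosida approximations of $A_n$ and $A$ (cf. Lemma 2.4). Then $A_n^\lambda\pi_n f \to A^\lambda f$ for every $f \in L$ and $\lambda > 0$.

Proof. Fix $\lambda > 0$. Let $f \in D$ and $g = (\lambda - A)f$. By assumption, there exists $f_n \in \mathcal{D}(A_n)$ for each $n \ge 1$ such that $f_n \to f$ and $A_n f_n \to Af$, and therefore $(\lambda - A_n)f_n \to g$. Now observe that

(6.3)
$$
\begin{aligned}
\|A_n^\lambda\pi_n g - \pi_n A^\lambda g\| &= \|[\lambda^2(\lambda - A_n)^{-1} - \lambda I]\pi_n g - \pi_n[\lambda^2(\lambda - A)^{-1} - \lambda I]g\| \\
&= \lambda^2\|(\lambda - A_n)^{-1}\pi_n g - \pi_n(\lambda - A)^{-1}g\| \\
&\le \lambda^2\|(\lambda - A_n)^{-1}\pi_n g - f_n\| + \lambda^2\|f_n - \pi_n(\lambda - A)^{-1}g\| \\
&\le \lambda\|\pi_n g - (\lambda - A_n)f_n\| + \lambda^2\|f_n - \pi_n f\|
\end{aligned}
$$

for every $n \ge 1$. Consequently, $\|A_n^\lambda\pi_n g - \pi_n A^\lambda g\| \to 0$ for all $g \in \mathcal{R}(\lambda - A|_D)$. But $\mathcal{R}(\lambda - A|_D)$ is dense in $L$ and the linear transformations $A_n^\lambda\pi_n - \pi_n A^\lambda$, $n = 1, 2, \ldots$, are uniformly bounded, so the conclusion of the lemma follows. □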

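Proof of Theorem 6.1. (a ⇒ b) Immediate.

(b ⇒ c) Let $\lambda > 0$, $f \in \mathcal{D}(A)$, and $g = (\lambda - A)f$, so that $f = \int_0^\infty e^{-\lambda t}T(t)g\,dt$. For each $n \ge 1$, put $f_n = \int_0^\infty e^{-\lambda t}T_n(t)\pi_n g\,dt \in \mathcal{D}(A_n)$. By (b) and the dominated convergence theorem, $f_n \to f$, so since $(\lambda - A_n)f_n = \pi_n g \to g = (\lambda - A)f$, we also have $A_n f_n \to Af$.

(c ⇒ a) For $n = 1, 2, \ldots$ and $\lambda > 0$, let $\{T_n^\lambda(t)\}$ and $\{T^\lambda(t)\}$ be the strongly continuous contraction semigroups on $L_n$ and $L$ generated by the Yosida approximations $A_n^\lambda$ and $A^\lambda$. Given $f \in D$, choose $\{f_n\}$ as in (c). Then

(6.4)
$$
\begin{aligned}
T_n(t)\pi_n f - \pi_n T(t)f &= T_n(t)(\pi_n f - f_n) + [T_n(t)f_n - T_n^\lambda(t)f_n] \\
&\quad + T_n^\lambda(t)(f_n - \pi_n f) + [T_n^\lambda(t)\pi_n f - \pi_n T^\lambda(t)f] \\
&\quad + \pi_n[T^\lambda(t)f - T(t)f]
\end{aligned}
$$

for every $n \ge 1$ and $t \ge 0$. Fix $t_0 \ge 0$. By Proposition 2.7 and Lemma 6.3,

(6.5)
$$
\begin{aligned}
\limsup_{n \to \infty} \sup_{0 \le t \le t_0} \|T_n(t)f_n - T_n^\lambda(t)f_n\| &\le \limsup_{n \to \infty} t_0\|A_n f_n - A_n^\lambda f_n\| \\
&\le \limsup_{n \to \infty} t_0\{\|A_n f_n - \pi_n Af\| + \|\pi_n(Af - A^\lambda f)\| \\
&\qquad + \|\pi_n A^\lambda f - A_n^\lambda\pi_n f\| + \|A_n^\lambda(\pi_n f - f_n)\|\} \\
&\le Kt_0\|Af - A^\lambda f\|,
\end{aligned}
$$

where $K = \sup_n \|\pi_n\|$. Using Lemmas 6.2, 6.3, and the dominated convergence theorem, we obtain

(6.6)
$$
\begin{aligned}
\limsup_{n \to \infty} \sup_{0 \le t \le t_0} \|T_n^\lambda(t)\pi_n f - \pi_n T^\lambda(t)f\| &\le \limsup_{n \to \infty} \int_0^{t_0} \|(A_n^\lambda\pi_n - \pi_n A^\lambda)T^\lambda(s)f\|\,ds = 0.
\end{aligned}
$$

Applying (6.5), (6.6), and Proposition 2.7 to (6.4), we find that

(6.7)  $\limsup_{n \to \infty} \sup_{0 \le t \le t_0} \|T_n(t)\pi_n f - \pi_n T(t)f\| \le 2Kt_0\|A^\lambda f - Af\|$.

Since $\lambda$ was arbitrary, Lemma 2.4(c) shows that the left side of (6.7) is zero. But this is valid for all $f \in D$, and since $D$ is dense in $L$, it holds for all $f \in L$. □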

There is a discrete-parameter analogue of Theorem 6.1, the proof of which

depends on the following lemma.

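6.4 Lemma  Let $B$ be a linear contraction on $L$. Then

(6.8)  $\|B^n f - e^{n(B - I)}f\| \le \sqrt{n}\,\|Bf - f\|$

for all $f \in L$ and $n = 0, 1, \ldots$.

Proof. Fix $f \in L$ and $n \ge 0$. For $k = 0, 1, \ldots$,

(6.9)
$$
\begin{aligned}
\|B^n f - B^k f\| &\le \|B^{|k - n|}f - f\| \\
&= \left\|\sum_{j=0}^{|k - n| - 1} B^j(Bf - f)\right\| \\
&\le |k - n|\,\|Bf - f\|.
\end{aligned}
$$

Therefore

(6.10)
$$
\begin{aligned}
\|B^n f - e^{n(B - I)}f\| &= \left\|B^n f - e^{-n}\sum_{k=0}^\infty \frac{n^k}{k!}B^k f\right\| \\
&= \left\|e^{-n}\sum_{k=0}^\infty \frac{n^k}{k!}(B^n f - B^k f)\right\| \\
&\le e^{-n}\sum_{k=0}^\infty \frac{n^k}{k!}|k - n|\,\|Bf - f\| \\
&\le \left[e^{-n}\sum_{k=0}^\infty (k - n)^2\frac{n^k}{k!}\right]^{1/2}\|Bf - f\| \\
&= \sqrt{n}\,\|Bf - f\|.
\end{aligned}
$$

(Note that the last equality follows from the fact that a Poisson random variable with parameter $n$ has mean $n$ and variance $n$.) □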
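The bound (6.8) can be checked directly by evaluating $e^{n(B - I)}f$ through the Poisson series used in the proof. The sketch below assumes $L = \mathbb{R}^3$ with the sup norm and a hypothetical stochastic matrix $B$ (which is a contraction in that norm); the matrix, the vector $f$, the truncation rule, and the function names are illustrative assumptions.

```python
import math

def apply(X, f):
    return [sum(x * v for x, v in zip(row, f)) for row in X]

def dist(f, g):
    """Sup-norm distance between two vectors."""
    return max(abs(x - y) for x, y in zip(f, g))

# Assumed example: a stochastic matrix (rows sum to 1) and an arbitrary f.
B = [[0.5, 0.5, 0.0],
     [0.1, 0.6, 0.3],
     [0.0, 0.2, 0.8]]
f = [1.0, -2.0, 0.5]

def power(n):
    """Return B^n f."""
    g = f[:]
    for _ in range(n):
        g = apply(B, g)
    return g

def poissonized(n, extra=200):
    """Return e^{n(B-I)} f = e^{-n} sum_k (n^k / k!) B^k f.

    The series is truncated after 4n + extra terms, which leaves a
    negligible Poisson tail for the values of n used below.
    """
    weight = math.exp(-n)                 # Poisson(n) probability of k = 0
    g = f[:]
    total = [weight * v for v in g]
    for k in range(1, 4 * n + extra):
        weight *= n / k                   # Poisson(n) probability of k
        g = apply(B, g)                   # B^k f
        total = [s + weight * v for s, v in zip(total, g)]
    return total

step = dist(apply(B, f), f)               # ||Bf - f||
rows = []
for n in (0, 1, 5, 20, 50):
    lhs = dist(power(n), poissonized(n))  # left side of (6.8)
    rows.append((n, lhs, math.sqrt(n) * step))
```

Each entry of `rows` holds $n$, the left side of (6.8), and the right side $\sqrt{n}\,\|Bf - f\|$.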

6.5 Theorem  For $n = 1, 2, \ldots$, let $T_n$ be a linear contraction on $L_n$, let $\varepsilon_n$ be a positive number, and put $A_n = \varepsilon_n^{-1}(T_n - I)$. Assume that $\lim_{n \to \infty} \varepsilon_n = 0$. Let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$, and let $D$ be a core for $A$. Then the following are equivalent:

(a) For each $f \in L$, $T_n^{[t/\varepsilon_n]}\pi_n f \to T(t)f$ for all $t \ge 0$, uniformly on bounded intervals.

(b) For each $f \in L$, $T_n^{[t/\varepsilon_n]}\pi_n f \to T(t)f$ for all $t \ge 0$.

(c) For each $f \in D$, there exists $f_n \in L_n$ for each $n \ge 1$ such that $f_n \to f$ and $A_n f_n \to Af$ (i.e., $\{(f, Af): f \in D\} \subset \operatorname*{ex-lim}_{n \to \infty} A_n$).

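Proof. (a ⇒ b) Immediate.

(b ⇒ c) Let $\lambda > 0$, $f \in \mathcal{D}(A)$, and $g = (\lambda - A)f$, so that $f = \int_0^\infty e^{-\lambda t}T(t)g\,dt$. For each $n \ge 1$, put

(6.11)  $f_n = \varepsilon_n\sum_{k=0}^\infty e^{-\lambda k\varepsilon_n}T_n^k\pi_n g$.

By (b) and the dominated convergence theorem, $f_n \to f$, and a simple calculation shows that

(6.12)
$$
\begin{aligned}
(\lambda - A_n)f_n &= \pi_n g + \lambda\varepsilon_n\pi_n g \\
&\quad + (\lambda\varepsilon_n e^{-\lambda\varepsilon_n} - 1 + e^{-\lambda\varepsilon_n})\sum_{k=0}^\infty e^{-\lambda k\varepsilon_n}T_n^{k+1}\pi_n g
\end{aligned}
$$

for every $n \ge 1$, so $(\lambda - A_n)f_n \to g = (\lambda - A)f$. It follows that $A_n f_n \to Af$.

(c ⇒ a) Given $f \in D$, choose $\{f_n\}$ as in (c). Then

(6.13)
$$
\begin{aligned}
T_n^{[t/\varepsilon_n]}\pi_n f - \pi_n T(t)f &= T_n^{[t/\varepsilon_n]}(\pi_n f - f_n) + \left[T_n^{[t/\varepsilon_n]}f_n - \exp\{\varepsilon_n[t/\varepsilon_n]A_n\}f_n\right] \\
&\quad + \exp\{\varepsilon_n[t/\varepsilon_n]A_n\}(f_n - \pi_n f) + \left[\exp\{\varepsilon_n[t/\varepsilon_n]A_n\}\pi_n f - \exp\{tA_n\}\pi_n f\right] \\
&\quad + \exp\{tA_n\}\pi_n f - \pi_n T(t)f
\end{aligned}
$$

for every $n \ge 1$ and $t \ge 0$. Fix $t_0 \ge 0$. By Lemma 6.4,

(6.14)  $\lim_{n \to \infty} \sup_{0 \le t \le t_0} \left\|T_n^{[t/\varepsilon_n]}f_n - \exp\{\varepsilon_n[t/\varepsilon_n]A_n\}f_n\right\| \le \lim_{n \to \infty} \sqrt{[t_0/\varepsilon_n]}\,\varepsilon_n\|A_n f_n\| = 0$,

and by Theorem 6.1,

(6.15)  $\lim_{n \to \infty} \sup_{0 \le t \le t_0} \|\exp\{tA_n\}\pi_n f - \pi_n T(t)f\| = 0$.

Consequently,

(6.16)  $\lim_{n \to \infty} \sup_{0 \le t \le t_0} \|T_n^{[t/\varepsilon_n]}\pi_n f - \pi_n T(t)f\| = 0$.

But this is valid for all $f \in D$, and since $D$ is dense in $L$, it holds for all $f \in L$. □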

6.6 Corollary  Let $\{V(t): t \ge 0\}$ be a family of linear contractions on $L$ with $V(0) = I$, and let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$. Let $D$ be a core for $A$. If $\lim_{\varepsilon \to 0} \varepsilon^{-1}[V(\varepsilon)f - f] = Af$ for every $f \in D$, then, for each $f \in L$, $V(t/n)^n f \to T(t)f$ for all $t \ge 0$, uniformly on bounded intervals.

Proof. It suffices to show that if $\{t_n\}$ is a sequence of positive numbers such that $t_n \to t \ge 0$, then $V(t_n/n)^n f \to T(t)f$ for every $f \in L$. But this is an immediate consequence of Theorem 6.5 with $T_n = V(t_n/n)$ and $\varepsilon_n = t_n/n$ for each $n \ge 1$. □


6.7 Corollary  Let $\{T(t)\}$, $\{S(t)\}$, and $\{U(t)\}$ be strongly continuous contraction semigroups on $L$ with generators $A$, $B$, and $C$, respectively. Let $D$ be a core for $A$, and assume that $D \subset \mathcal{D}(B) \cap \mathcal{D}(C)$ and that $A = B + C$ on $D$. Then, for each $f \in L$,

(6.17)  $\lim_{n \to \infty} \left[S\!\left(\frac{t}{n}\right)U\!\left(\frac{t}{n}\right)\right]^n f = T(t)f$

for all $t \ge 0$, uniformly on bounded intervals. Alternatively, if $\{\varepsilon_n\}$ is a sequence of positive numbers tending to zero, then, for each $f \in L$,

(6.18)  $\lim_{n \to \infty} \left[S(\varepsilon_n)U(\varepsilon_n)\right]^{[t/\varepsilon_n]} f = T(t)f$

for all $t \ge 0$, uniformly on bounded intervals.

Proof. The first result follows easily from Corollary 6.6 with $V(t) = S(t)U(t)$ for all $t \ge 0$. The second follows directly from Theorem 6.5. □
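The product formula (6.17) can be observed numerically. The sketch below assumes $L = \mathbb{R}^2$ with the sup norm and two hypothetical, non-commuting Q-matrices $B$ and $C$, so that $S(t) = e^{tB}$, $U(t) = e^{tC}$, and $T(t) = e^{t(B + C)}$. The matrices, the vector $f$, and the helper names (including the simple scaling-and-squaring `expm`) are illustrative assumptions, not part of the text.

```python
def eye(d):
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

def scale(c, X):
    return [[c * x for x in row] for row in X]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def expm(X, squarings=12, terms=20):
    """exp(X) by scaling and squaring with a truncated Taylor series."""
    Y = scale(1.0 / 2 ** squarings, X)
    term, total = eye(len(X)), eye(len(X))
    for k in range(1, terms):
        term = scale(1.0 / k, mul(term, Y))
        total = add(total, term)
    for _ in range(squarings):
        total = mul(total, total)
    return total

def apply(X, f):
    return [sum(x * v for x, v in zip(row, f)) for row in X]

def dist(f, g):
    """Sup-norm distance between two vectors."""
    return max(abs(x - y) for x, y in zip(f, g))

# Assumed example: two Q-matrices that do not commute.
B = [[-1.0, 1.0],
     [0.0, 0.0]]
C = [[0.0, 0.0],
     [2.0, -2.0]]
f = [1.0, 0.0]
t = 1.0

exact = apply(expm(scale(t, add(B, C))), f)      # T(t)f with A = B + C

def product_formula(n):
    """Return [S(t/n) U(t/n)]^n f."""
    step = mul(expm(scale(t / n, B)), expm(scale(t / n, C)))
    g = f[:]
    for _ in range(n):
        g = apply(step, g)
    return g

ns = (1, 4, 16, 64)
errors = [dist(product_formula(n), exact) for n in ns]
```

In this example the error shrinks roughly in proportion to $1/n$; the corollary itself asserts only convergence, not a rate.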

6.8 Corollary  Let $\{T(t)\}$ be a strongly continuous contraction semigroup on $L$ with generator $A$. Then, for each $f \in L$, $(I - (t/n)A)^{-n}f \to T(t)f$ for all $t \ge 0$, uniformly on bounded intervals. Alternatively, if $\{\varepsilon_n\}$ is a sequence of positive numbers tending to zero, then, for each $f \in L$, $(I - \varepsilon_n A)^{-[t/\varepsilon_n]}f \to T(t)f$ for all $t \ge 0$, uniformly on bounded intervals.

Proof. The first result is a consequence of Corollary 6.6. Simply take $V(t) = (I - tA)^{-1}$ for each $t \ge 0$, and note that if $\varepsilon > 0$ and $\lambda = \varepsilon^{-1}$, then

(6.19)  $\varepsilon^{-1}[V(\varepsilon)f - f] = \lambda^2(\lambda - A)^{-1}f - \lambda f = A_\lambda f$,

where $A_\lambda$ is the Yosida approximation of $A$ (cf. Lemma 2.4). The second result follows from (6.19) and Theorem 6.5. □
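The first statement of Corollary 6.8 is the convergence of the backward (implicit) Euler scheme. The sketch below assumes $L = \mathbb{R}^2$ with the sup norm and a hypothetical two-state Q-matrix $A$, so that $T(t) = e^{tA}$; the matrix, the vector $f$, and the helper names are illustrative assumptions.

```python
def eye(d):
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

def scale(c, X):
    return [[c * x for x in row] for row in X]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def mul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inv(X):
    """Gauss-Jordan inverse with partial pivoting."""
    d = len(X)
    M = [row[:] + unit for row, unit in zip(X, eye(d))]
    for c in range(d):
        p = max(range(c, d), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [x / pivot for x in M[c]]
        for r in range(d):
            if r != c:
                factor = M[r][c]
                M[r] = [x - factor * y for x, y in zip(M[r], M[c])]
    return [row[d:] for row in M]

def expm(X, squarings=12, terms=20):
    """exp(X) by scaling and squaring with a truncated Taylor series."""
    Y = scale(1.0 / 2 ** squarings, X)
    term, total = eye(len(X)), eye(len(X))
    for k in range(1, terms):
        term = scale(1.0 / k, mul(term, Y))
        total = add(total, term)
    for _ in range(squarings):
        total = mul(total, total)
    return total

def apply(X, f):
    return [sum(x * v for x, v in zip(row, f)) for row in X]

def dist(f, g):
    """Sup-norm distance between two vectors."""
    return max(abs(x - y) for x, y in zip(f, g))

# Assumed example: a two-state Q-matrix and an initial vector f.
A = [[-1.0, 1.0],
     [2.0, -2.0]]
f = [1.0, 0.0]
t = 1.0

exact = apply(expm(scale(t, A)), f)               # T(t)f

def backward_euler(n):
    """Return (I - (t/n) A)^{-n} f."""
    step = inv(add(eye(2), scale(-t / n, A)))     # (I - (t/n)A)^{-1}
    g = f[:]
    for _ in range(n):
        g = apply(step, g)
    return g

ns = (1, 10, 100, 1000)
errors = [dist(backward_euler(n), exact) for n in ns]
```

Each step operator $(I - (t/n)A)^{-1} = \lambda(\lambda - A)^{-1}$ with $\lambda = n/t$ is a contraction, which is what allows Corollary 6.6 to be applied.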

We would now like to generalize Theorem 6.1 in two ways. First, we would like to be able to use some extension $\hat{A}_n$ of the generator $A_n$ in verifying the conditions for convergence. That is, given $(f, g) \in A$, it may be possible to find $(f_n, g_n) \in \hat{A}_n$ for each $n \ge 1$ such that $f_n \to f$ and $g_n \to g$ when it is not possible (or at least more difficult) to find $(f_n, g_n) \in A_n$ for each $n \ge 1$. Second, we would like to consider notions of convergence other than norm convergence. For example, convergence of bounded sequences of functions pointwise or uniformly on compact sets may be more appropriate than uniform convergence for some applications. An analogous generalization of Theorem 6.5 is also given.


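Let LIM denote a notion of convergence of certain sequences $f_n \in L_n$, $n = 1, 2, \ldots$, to elements $f \in L$ satisfying the following conditions:

(6.20)  $\mathrm{LIM}\, f_n = f$ and $\mathrm{LIM}\, g_n = g$ imply $\mathrm{LIM}\,(\alpha f_n + \beta g_n) = \alpha f + \beta g$ for all $\alpha, \beta \in \mathbb{R}$.

(6.21)  $\mathrm{LIM}\, f_n^{(k)} = f^{(k)}$ for each $k \ge 1$ and $\lim_{k \to \infty} \sup_{n \ge 1} \|f_n^{(k)} - f_n\| \vee \|f^{(k)} - f\| = 0$ imply $\mathrm{LIM}\, f_n = f$.

(6.22)  There exists $K > 0$ such that for each $f \in L$, there is a sequence $f_n \in L_n$ with $\|f_n\| \le K\|f\|$, $n = 1, 2, \ldots$, satisfying $\mathrm{LIM}\, f_n = f$.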

If $A_n \subset L_n \times L_n$ is linear for each $n \ge 1$, then, by analogy with (4.3), we define

(6.23)  $\text{ex-LIM}\, A_n = \{(f, g) \in L \times L$: there exists $(f_n, g_n) \in A_n$ for each $n \ge 1$ such that $\mathrm{LIM}\, f_n = f$ and $\mathrm{LIM}\, g_n = g\}$.
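To make the abstract conditions concrete, here is a small sketch of one notion of convergence that satisfies (6.20)-(6.22) with $K = 1$. It is an assumption chosen for illustration, not the only case the text has in mind: $L = C[0, 1]$, $L_n$ is the space of functions on the grid $\{0, 1/n, \ldots, 1\}$ with the sup norm, and $\mathrm{LIM}\, f_n = f$ means $\max_i |f_n(i/n) - f(i/n)| \to 0$. The names `restrict`, `defect`, and `approximant` are hypothetical.

```python
import math

def restrict(f, n):
    """Values of f on the grid {0, 1/n, ..., 1}; an element of L_n."""
    return [f(i / n) for i in range(n + 1)]

def sup_norm(v):
    """Sup norm of a grid function."""
    return max(abs(x) for x in v)

def defect(f_n, f, n):
    """Sup-norm distance between f_n and the restriction of f to the grid.
    In this sketch, LIM f_n = f means that this quantity tends to zero."""
    return sup_norm([a - r for a, r in zip(f_n, restrict(f, n))])

def f(x):
    """A sample element of C[0, 1]."""
    return math.sin(2.0 * math.pi * x)

def approximant(n):
    """A hypothetical sequence with LIM f_n = f: the restriction plus 1/n."""
    return [v + 1.0 / n for v in restrict(f, n)]

# Defects along n = 10, 100, 1000; each is 1/n up to rounding.
defects = [defect(approximant(n), f, n) for n in (10, 100, 1000)]
```

The restriction itself gives the sequence required by (6.22) with $K = 1$, because `sup_norm(restrict(f, n))` never exceeds the sup norm of `f` on $[0, 1]$.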

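6.9 Theorem  For $n = 1, 2, \ldots$, let $A_n \subset L_n \times L_n$ and $A \subset L \times L$ be linear and dissipative with $\mathcal{R}(\lambda - A_n) = L_n$ and $\mathcal{R}(\lambda - A) = L$ for some (hence all) $\lambda > 0$, and let $\{T_n(t)\}$ and $\{T(t)\}$ be the corresponding strongly continuous contraction semigroups on $\overline{\mathcal{D}(A_n)}$ and $\overline{\mathcal{D}(A)}$. Let LIM satisfy (6.20)-(6.22) together with

(6.24)  $\mathrm{LIM}\, f_n = 0$ implies $\mathrm{LIM}\,(\lambda - A_n)^{-1} f_n = 0$ for all $\lambda > 0$.

(a)  If $A \subset \text{ex-LIM}\, A_n$, then, for each $(f, g) \in A$, there exists $(f_n, g_n) \in A_n$ for each $n \ge 1$ such that $\sup_n \|f_n\| < \infty$, $\sup_n \|g_n\| < \infty$, $\mathrm{LIM}\, f_n = f$, $\mathrm{LIM}\, g_n = g$, and $\mathrm{LIM}\, T_n(t)f_n = T(t)f$ for all $t \ge 0$.

(b)  If in addition $\{T_n(t)\}$ extends to a contraction semigroup (also denoted by $\{T_n(t)\}$) on $L_n$ for each $n \ge 1$, and if

(6.25)  $\mathrm{LIM}\, f_n = 0$ implies $\mathrm{LIM}\, T_n(t)f_n = 0$ for all $t \ge 0$,

then, for each $f \in \overline{\mathcal{D}(A)}$, $\mathrm{LIM}\, f_n = f$ implies $\mathrm{LIM}\, T_n(t)f_n = T(t)f$ for all $t \ge 0$.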

6.10 Remark  Under the hypotheses of the theorem, $\text{ex-LIM}\, A_n$ is closed in $L \times L$ (Problem 16). Consequently, the conclusion of (a) is valid for all $(f, g) \in \bar{A}$. □

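Proof.  By renorming $L_n$, $n = 1, 2, \ldots$, if necessary, we can assume $K = 1$ in (6.22).

Let $\mathcal{L}$ denote the Banach space $(\prod_{n \ge 1} L_n) \times L$ with norm given by $\|(\{f_n\}, f)\| = \sup_{n \ge 1} \|f_n\| \vee \|f\|$, and let

(6.26)  $\mathcal{L}_0 = \{(\{f_n\}, f) \in \mathcal{L}: \mathrm{LIM}\, f_n = f\}$.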


Conditions (6.20) and (6.21) imply that $\mathcal{L}_0$ is a closed subspace of $\mathcal{L}$, and Condition (6.22) (with $K = 1$) implies that, for each $f \in L$, there is an element $(\{f_n\}, f) \in \mathcal{L}_0$ with $\|(\{f_n\}, f)\| = \|f\|$.

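Let

(6.27)  $\mathcal{A} = \{[(\{f_n\}, f), (\{g_n\}, g)] \in \mathcal{L} \times \mathcal{L}: (f_n, g_n) \in A_n$ for each $n \ge 1$ and $(f, g) \in A\}$.

Then $\mathcal{A}$ is linear and dissipative, and $\mathcal{R}(\lambda - \mathcal{A}) = \mathcal{L}$ for all $\lambda > 0$. The corresponding strongly continuous semigroup $\{\mathcal{T}(t)\}$ on $\overline{\mathcal{D}(\mathcal{A})}$ is given by

(6.28)  $\mathcal{T}(t)(\{f_n\}, f) = (\{T_n(t)f_n\}, T(t)f)$.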

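We would like to show that

(6.29)  $\mathcal{T}(t): \mathcal{L}_0 \cap \overline{\mathcal{D}(\mathcal{A})} \to \mathcal{L}_0 \cap \overline{\mathcal{D}(\mathcal{A})}, \quad t \ge 0$.

To do so, we need the following observation. If $(f, g) \in A$, $\lambda > 0$, $h = \lambda f - g$, $(\{h_n\}, h) \in \mathcal{L}_0$, and

(6.30)  $(f_n, g_n) = ((\lambda - A_n)^{-1}h_n,\ \lambda f_n - h_n)$

for each $n \ge 1$, then

(6.31)  $[(\{f_n\}, f), (\{g_n\}, g)] \in (\mathcal{L}_0 \times \mathcal{L}_0) \cap \mathcal{A}$.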

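To prove this, since $A \subset \text{ex-LIM}\, A_n$, choose $(\hat{f}_n, \hat{g}_n) \in A_n$ for each $n \ge 1$ such that $\mathrm{LIM}\, \hat{f}_n = f$ and $\mathrm{LIM}\, \hat{g}_n = g$. Then $\mathrm{LIM}\,(h_n - (\lambda\hat{f}_n - \hat{g}_n)) = 0$, so by (6.24), $\mathrm{LIM}\,((\lambda - A_n)^{-1}h_n - \hat{f}_n) = 0$. It follows that $\mathrm{LIM}\, f_n = \mathrm{LIM}\,(\lambda - A_n)^{-1}h_n = \mathrm{LIM}\, \hat{f}_n = f$ and $\mathrm{LIM}\, g_n = \mathrm{LIM}\,(\lambda f_n - h_n) = \lambda f - h = g$. Also, $\sup_n \|f_n\| \le \lambda^{-1}\sup_n \|h_n\| < \infty$ and $\sup_n \|g_n\| \le 2\sup_n \|h_n\| < \infty$. Consequently, $[(\{f_n\}, f), (\{g_n\}, g)]$ belongs to $\mathcal{L}_0 \times \mathcal{L}_0$, and it clearly also belongs to $\mathcal{A}$.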

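Given $(\{h_n\}, h) \in \mathcal{L}_0$ and $\lambda > 0$, there exists $(f, g) \in A$ such that $\lambda f - g = h$. Define $(f_n, g_n) \in A_n$ for each $n \ge 1$ by (6.30). Then $(\lambda - \mathcal{A})^{-1}(\{h_n\}, h) = (\{f_n\}, f) \in \mathcal{L}_0$ by (6.31), so

(6.32)  $(\lambda - \mathcal{A})^{-1}: \mathcal{L}_0 \to \mathcal{L}_0, \quad \lambda > 0$.

By Corollary 2.8, this proves (6.29).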

To prove (a), let $(f, g) \in A$, $\lambda > 0$, and $h = \lambda f - g$. By (6.22), there exists $(\{h_n\}, h) \in \mathcal{L}_0$ with $\|(\{h_n\}, h)\| = \|h\|$. Define $(f_n, g_n) \in A_n$ for each $n \ge 1$ by (6.30). By (6.31), (6.29), and (6.28), $(\{T_n(t)f_n\}, T(t)f) \in \mathcal{L}_0$ for all $t \ge 0$, so the conclusion of (a) is satisfied.

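As for (b), observe that, by (a) together with (6.25), $\mathrm{LIM}\, f_n = f \in \mathcal{D}(A)$ implies $\mathrm{LIM}\, T_n(t)f_n = T(t)f$ for all $t \ge 0$. Let $f \in \overline{\mathcal{D}(A)}$ and choose $\{f^{(k)}\} \subset \mathcal{D}(A)$ such that $\|f^{(k)} - f\| \le 2^{-k}$ for each $k \ge 1$. Put $f^{(0)} = 0$, and by (6.22), choose $(\{u_n^{(k)}\}, f^{(k)} - f^{(k-1)}) \in \mathcal{L}_0$ such that $\|(\{u_n^{(k)}\}, f^{(k)} - f^{(k-1)})\| = \|f^{(k)} - f^{(k-1)}\|$ for each $k \ge 1$. Fix $t \ge 0$. Then

(6.33)  $\mathrm{LIM} \sum_{i=1}^{k} u_n^{(i)} = f^{(k)}, \quad \mathrm{LIM}\, T_n(t) \sum_{i=1}^{k} u_n^{(i)} = T(t)f^{(k)}$

for each $k \ge 1$. Since

(6.34)  $\left\| \sum_{i=k+1}^{\infty} u_n^{(i)} \right\| \le 3 \cdot 2^{-k}, \quad \|f^{(k)} - f\| \le 2^{-k}$,

and

(6.35)  $\left\| T_n(t) \sum_{i=k+1}^{\infty} u_n^{(i)} \right\| \le 3 \cdot 2^{-k}, \quad \|T(t)f^{(k)} - T(t)f\| \le 2^{-k}$,

for each $n \ge 1$ and $k \ge 1$, (6.21) implies that

(6.36)  $\mathrm{LIM} \sum_{i=1}^{\infty} u_n^{(i)} = f, \quad \mathrm{LIM}\, T_n(t) \sum_{i=1}^{\infty} u_n^{(i)} = T(t)f$,

so the conclusion of (b) follows from (6.25).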

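6.11 Theorem  For $n = 1, 2, \ldots$, let $T_n$ be a linear contraction on $L_n$, let $\varepsilon_n > 0$, and put $A_n = \varepsilon_n^{-1}(T_n - I)$. Assume that $\lim_{n \to \infty} \varepsilon_n = 0$. Let $A \subset L \times L$ be linear and dissipative with $\mathcal{R}(\lambda - A) = L$ for some (hence all) $\lambda > 0$, and let $\{T(t)\}$ be the corresponding strongly continuous contraction semigroup on $\overline{\mathcal{D}(A)}$. Let LIM satisfy (6.20)-(6.22), (6.24), and

(6.37)  $\lim_{n \to \infty} \|f_n\| = 0$ implies $\mathrm{LIM}\, f_n = 0$.

(a)  If $A \subset \text{ex-LIM}\, A_n$, then, for each $(f, g) \in A$, there exists $f_n \in L_n$ for each $n \ge 1$ such that $\sup_n \|f_n\| < \infty$, $\sup_n \|A_n f_n\| < \infty$, $\mathrm{LIM}\, f_n = f$, $\mathrm{LIM}\, A_n f_n = g$, and $\mathrm{LIM}\, T_n^{[t/\varepsilon_n]} f_n = T(t)f$ for all $t \ge 0$.

(b)  If in addition

(6.38)  $\mathrm{LIM}\, f_n = 0$ implies $\mathrm{LIM}\, T_n^{[t/\varepsilon_n]} f_n = 0$ for all $t \ge 0$,

then for each $f \in \overline{\mathcal{D}(A)}$, $\mathrm{LIM}\, f_n = f$ implies $\mathrm{LIM}\, T_n^{[t/\varepsilon_n]} f_n = T(t)f$ for all $t \ge 0$.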

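Proof.  Let $(f, g) \in A$. By Theorem 6.9, there exists $f_n \in L_n$ for each $n \ge 1$ such that $\sup_n \|f_n\| < \infty$, $\sup_n \|A_n f_n\| < \infty$, $\mathrm{LIM}\, f_n = f$, $\mathrm{LIM}\, A_n f_n = g$, and $\mathrm{LIM}\, \exp\{tA_n\} f_n = T(t)f$ for all $t \ge 0$. Since

(6.39)  $\lim_{n \to \infty} \left\| \exp\{\varepsilon_n [t/\varepsilon_n] A_n\} f_n - \exp\{tA_n\} f_n \right\| = 0$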


for all $t \ge 0$, we deduce from (6.37) that

(6.40)  $\mathrm{LIM}\, \exp\{\varepsilon_n [t/\varepsilon_n] A_n\} f_n = T(t)f, \quad t \ge 0$.

The conclusion of (a) therefore follows from (6.14) and (6.37).

The proof of (b) is completely analogous to that of Theorem 6.9(b). □
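The simplest instance of Theorem 6.11 we can sketch takes $L_n = L = \mathbb{R}^2$ with the sup norm, LIM equal to norm convergence, and $T_n = I + \varepsilon_n A$ for the generator $A$ of a two-state chain, so that $A_n = A$ for every $n$. These choices, the rates `b` and `c`, and the function names are all assumptions made for illustration. The matrix $T_n$ is a contraction here only when $\varepsilon_n \max(b, c) \le 1$, which the code enforces.

```python
import math

b, c = 1.0, 2.0  # illustrative jump rates (assumed values)

def matmul(X, Y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def matpow(X, n):
    """n-th power of a 2x2 matrix by repeated squaring."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    while n > 0:
        if n & 1:
            result = matmul(result, X)
        X = matmul(X, X)
        n >>= 1
    return result

def T(t):
    """exp(tA) for A = [[-b, b], [c, -c]], using A^2 = -(b+c)A."""
    s = b + c
    k = (1.0 - math.exp(-s * t)) / s
    return [[1.0 - k * b, k * b], [k * c, 1.0 - k * c]]

def chain_error(t, m):
    """Largest entrywise gap between T_n^[t/eps_n] and T(t), where
    eps_n = 1/m and T_n = I + eps_n * A is a one-step transition matrix."""
    eps = 1.0 / m
    if eps * max(b, c) > 1.0:
        raise ValueError("eps_n too large: I + eps_n A is not a stochastic matrix")
    T_n = [[1.0 - eps * b, eps * b], [eps * c, 1.0 - eps * c]]
    steps = math.floor(t * m)  # the integer part [t / eps_n] for eps_n = 1/m
    P = matpow(T_n, steps)
    exact = T(t)
    return max(abs(P[i][j] - exact[i][j]) for i in range(2) for j in range(2))
```

Evaluating `chain_error(1.0, m)` for growing `m` should show the discrete-time chain, run for $[t/\varepsilon_n]$ steps, approaching the continuous-time semigroup.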

7. PERTURBATION THEOREMS

One of the main results of this section concerns the approximation of semigroups with generators of the form $A + B$, where $A$ and $B$ themselves generate semigroups. (By definition, $\mathcal{D}(A + B) = \mathcal{D}(A) \cap \mathcal{D}(B)$.) First, however, we give some sufficient conditions for $A + B$ to generate a semigroup.

7.1 Theorem  Let $A$ be a linear operator on $L$ such that $\bar{A}$ is single-valued and generates a strongly continuous contraction semigroup on $L$. Let $B$ be a dissipative linear operator on $L$ such that $\mathcal{D}(B) \supset \mathcal{D}(A)$. (In particular, $\bar{B}$ is single-valued by Lemma 4.2.) If

(7.1)  $\|Bf\| \le \alpha\|Af\| + \beta\|f\|, \quad f \in \mathcal{D}(A)$,

where $0 \le \alpha < 1$ and $\beta \ge 0$, then $\overline{A + B}$ is single-valued and generates a strongly continuous contraction semigroup on $L$. Moreover, $\overline{A + B} = \bar{A} + \bar{B}$.

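Proof.  Let $\gamma \ge 0$ be arbitrary. Clearly, $\mathcal{D}(A + \gamma B) = \mathcal{D}(A)$ is dense in $L$. In addition, $A + \gamma B$ is dissipative. To see this, let $\bar{A}_\mu$ be the Yosida approximation of $\bar{A}$ for each $\mu > 0$, so that $\bar{A}_\mu = \mu[\mu(\mu - \bar{A})^{-1} - I]$. If $f \in \mathcal{D}(A)$ and $\lambda > 0$, then

(7.2)  $\|\lambda f - (A + \gamma B)f\| = \lim_{\mu \to \infty} \|\lambda f - (\bar{A}_\mu + \gamma B)f\|$
         $= \lim_{\mu \to \infty} \|(\lambda + \mu)f - \gamma Bf - \mu^2(\mu - \bar{A})^{-1}f\|$
         $\ge \lim_{\mu \to \infty} \{\|(\lambda + \mu)f - \gamma Bf\| - \|\mu^2(\mu - \bar{A})^{-1}f\|\}$
         $\ge \lim_{\mu \to \infty} \{(\lambda + \mu)\|f\| - \mu\|f\|\}$
         $= \lambda\|f\|$

by Lemma 2.4(c) and the dissipativity of $\gamma B$.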
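The inequality established in (7.2) can be spot-checked in a finite-dimensional toy case. The sketch below assumes $L = \mathbb{R}^2$ with the sup norm and takes for $A$ and $B$ two jump-chain generator matrices; every operator on this space is bounded, so (7.1) holds trivially with $\alpha = 0$ and the example illustrates only the dissipativity of $A + \gamma B$, not the full strength of the theorem. The matrices, the sample grid, and the function names are illustrative assumptions.

```python
def sup_norm(v):
    """Sup norm of a vector."""
    return max(abs(x) for x in v)

def apply(Q, f):
    """Matrix-vector product Q f."""
    return [sum(Q[i][j] * f[j] for j in range(len(f))) for i in range(len(Q))]

def is_dissipative_on_samples(Q, lambdas=(0.1, 1.0, 10.0),
                              values=(-2, -1, 0, 1, 2), tol=1e-12):
    """Check ||lam f - Q f|| >= lam ||f|| for every f on a small integer grid.
    Passing is only evidence of dissipativity; failing is a genuine counterexample."""
    for lam in lambdas:
        for x in values:
            for y in values:
                f = [float(x), float(y)]
                Qf = apply(Q, f)
                lhs = sup_norm([lam * f[i] - Qf[i] for i in range(2)])
                if lhs < lam * sup_norm(f) - tol:
                    return False
    return True

A = [[-1.0, 1.0], [0.0, 0.0]]   # jump 0 -> 1 at rate 1 (assumed example)
B = [[0.0, 0.0], [2.0, -2.0]]   # jump 1 -> 0 at rate 2 (assumed example)

def perturbed(gamma):
    """The matrix A + gamma * B."""
    return [[A[i][j] + gamma * B[i][j] for j in range(2)] for i in range(2)]
```

For instance, `is_dissipative_on_samples(perturbed(g))` should return `True` for any `g >= 0`, whereas a matrix such as `[[1.0, 0.0], [0.0, 0.0]]` fails the check at `lam = 1.0` with `f = [1.0, 0.0]`.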


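If $f \in \mathcal{D}(\bar{A})$, then there exists $\{f_n\} \subset \mathcal{D}(A)$ such that $f_n \to f$ and $Af_n \to \bar{A}f$. By (7.1), $\{Bf_n\}$ is Cauchy, so $f \in \mathcal{D}(\bar{B})$ and $Bf_n \to \bar{B}f$. Hence $\mathcal{D}(\bar{A}) \subset \mathcal{D}(\bar{B})$ and (7.1) extends to

(7.3)  $\|\bar{B}f\| \le \alpha\|\bar{A}f\| + \beta\|f\|, \quad f \in \mathcal{D}(\bar{A})$.

In addition, if $f \in \mathcal{D}(\bar{A})$ and if $\{f_n\}$ is as above, then

(7.4)  $(\bar{A} + \gamma\bar{B})f = \lim_{n \to \infty} Af_n + \gamma \lim_{n \to \infty} Bf_n = \lim_{n \to \infty} (A + \gamma B)f_n = \overline{(A + \gamma B)}f$,

implying that $\overline{A + \gamma B}$ is a dissipative extension of $\bar{A} + \gamma\bar{B}$.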

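Let

(7.5)  $\Gamma = \{\gamma \ge 0: \mathcal{R}(\lambda - \bar{A} - \gamma\bar{B}) = L$ for some (hence all) $\lambda > 0\}$.

To complete the proof, it suffices by Theorem 2.6 and Proposition 4.1 to show that $1 \in \Gamma$. Noting that $0 \in \Gamma$ by assumption, it is enough to show that

(7.6)  $\gamma \in \Gamma \cap [0, 1)$ implies $\left[\gamma,\ \gamma + \dfrac{1 - \alpha\gamma}{2\alpha}\right) \subset \Gamma$.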
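The reason (7.6) suffices is that, for $\gamma \in [0, 1)$, the interval in (7.6) has length at least $(1 - \alpha)/(2\alpha) > 0$, so finitely many applications starting from $0 \in \Gamma$ reach $1$. The sketch below traces one such chain of steps, assuming $0 < \alpha < 1$ and moving halfway into each interval; the function name and the halfway rule are our own illustrative choices.

```python
def continuation_path(alpha):
    """Points 0 = g_0 < g_1 < ... = 1 such that each g_{k+1} lies in the
    half-open interval [g_k, g_k + (1 - alpha*g_k)/(2*alpha)) of (7.6)."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("this sketch assumes 0 < alpha < 1")
    gammas = [0.0]
    while gammas[-1] < 1.0:
        g = gammas[-1]
        width = (1.0 - alpha * g) / (2.0 * alpha)  # length of the interval in (7.6)
        # Step halfway into the interval, stopping at 1 once it is within reach.
        gammas.append(min(1.0, g + 0.5 * width))
    return gammas
```

For `alpha = 0.5` the path is `[0.0, 0.5, 0.875, 1.0]`. For `alpha` closer to 1 the steps shrink, but each is at least `(1 - alpha) / (4 * alpha)`, so the loop always terminates.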