Текст

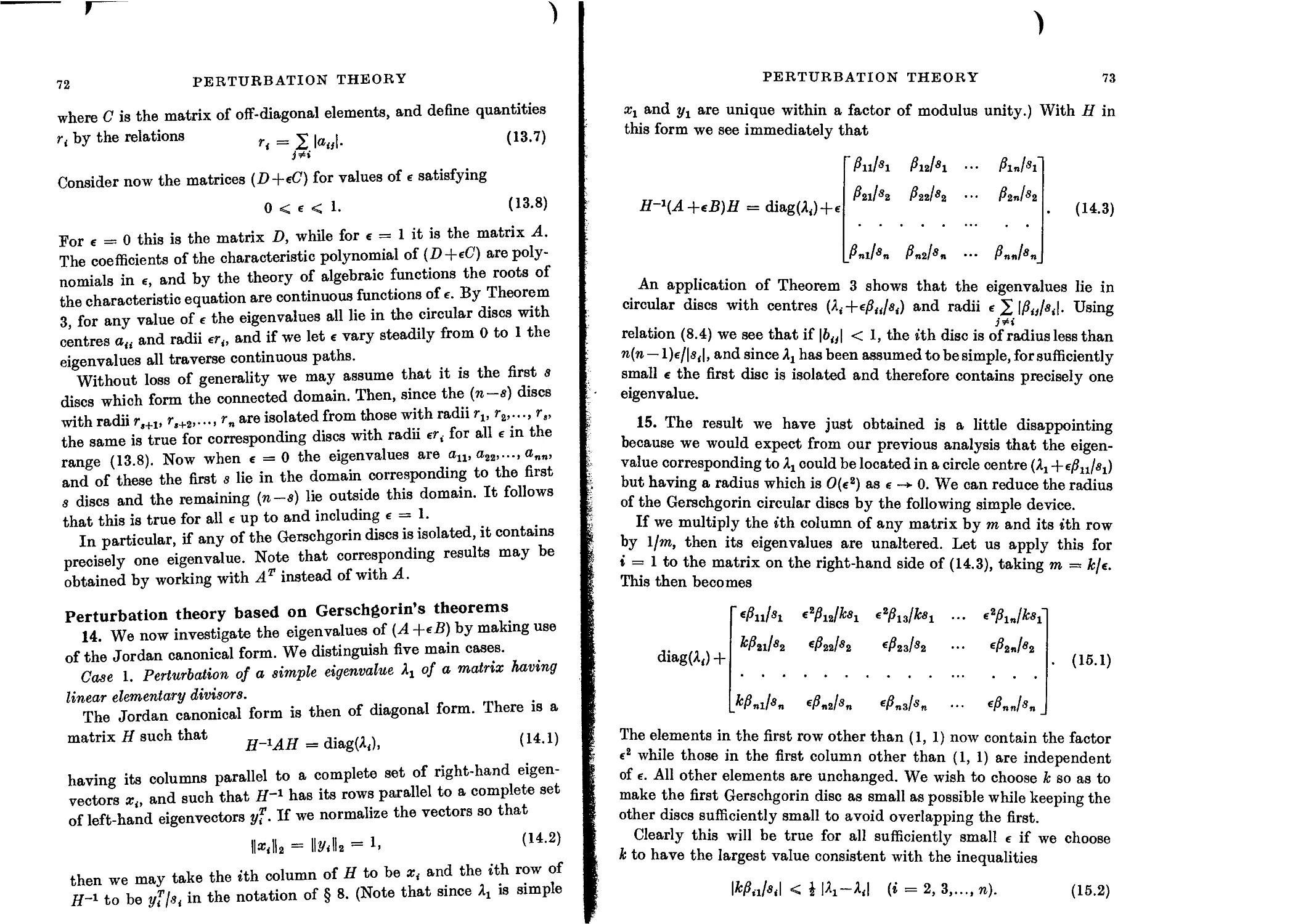

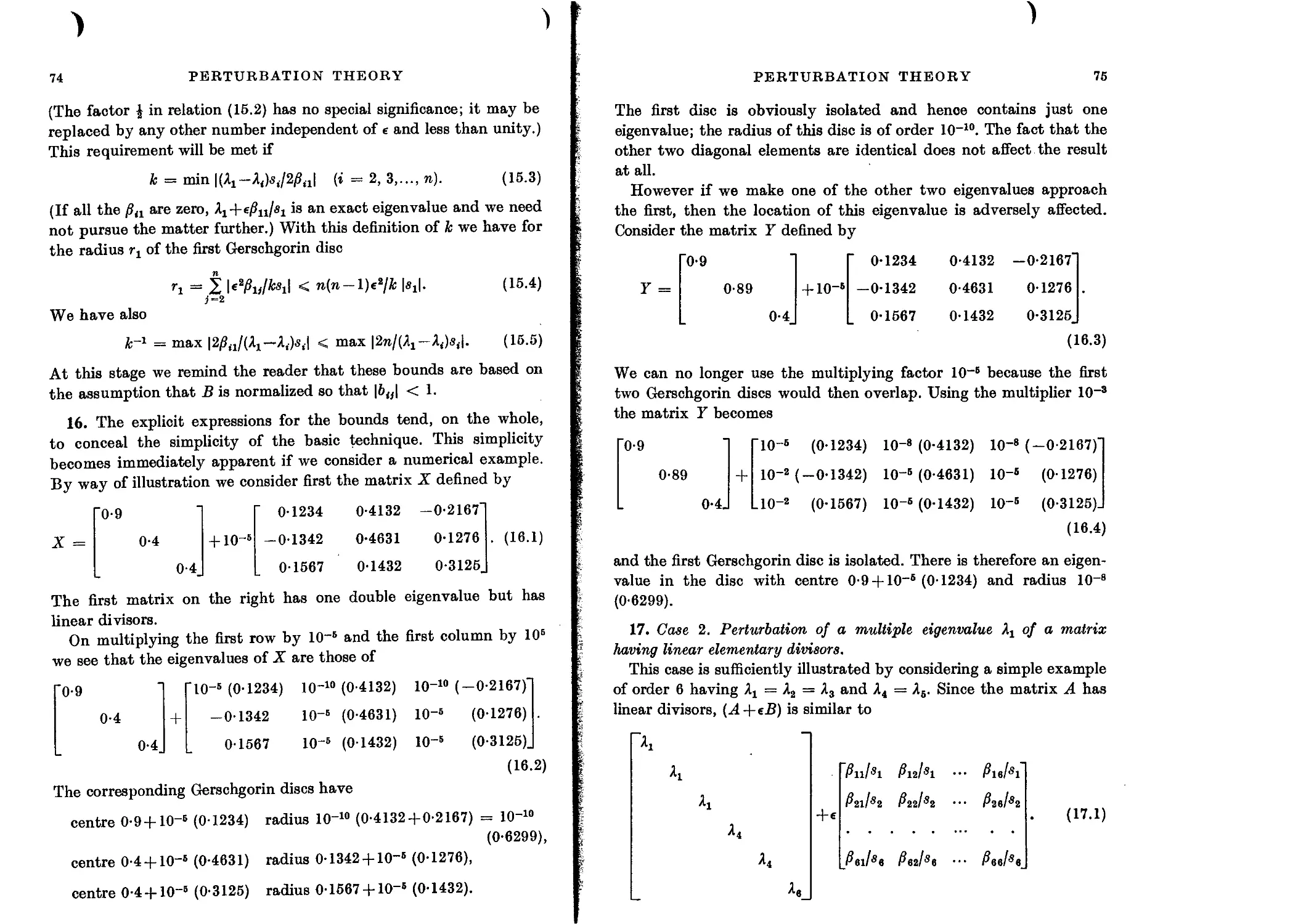

THE ALGEBRAIC EIGENVALUE

PROBLEM

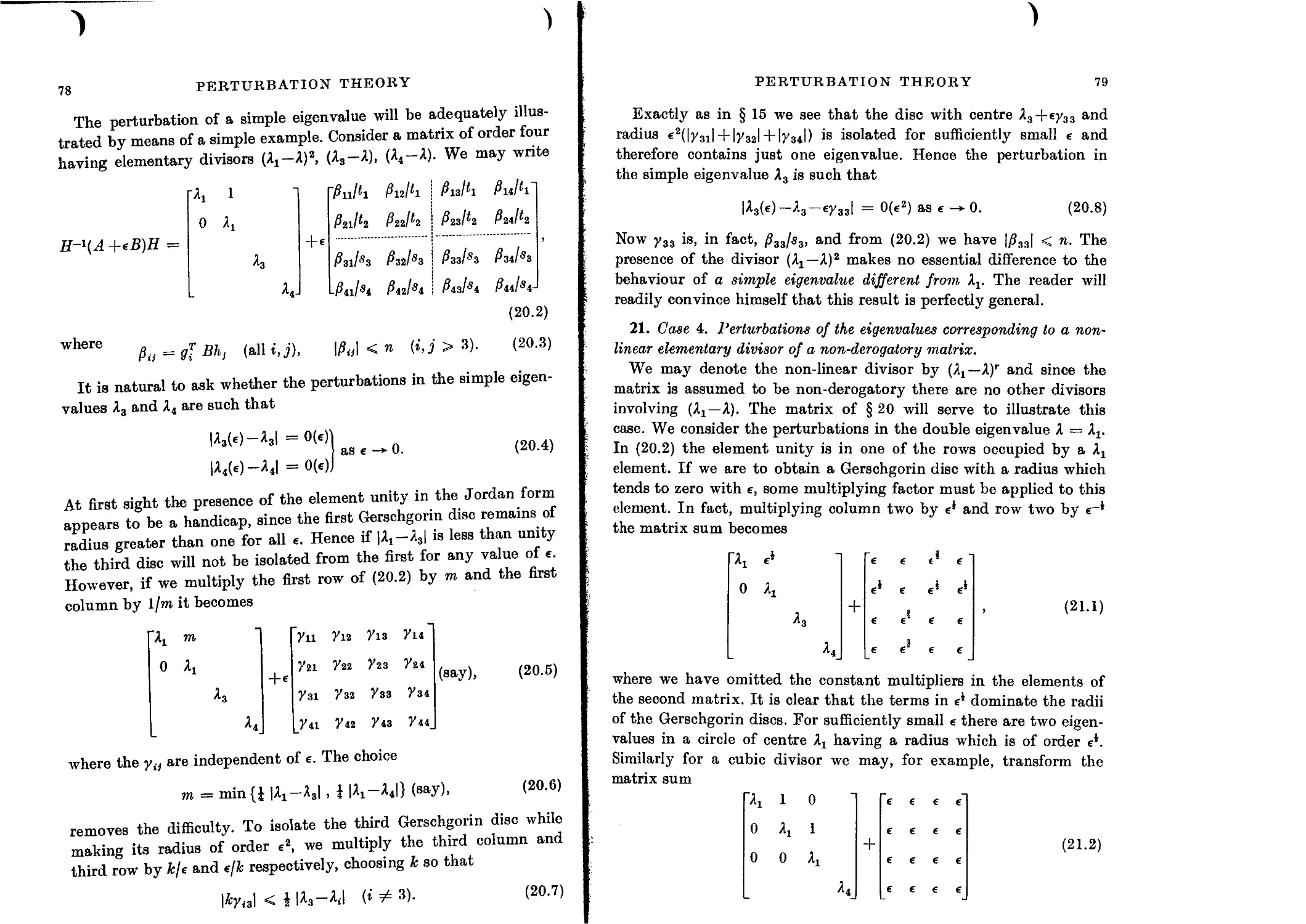

BY

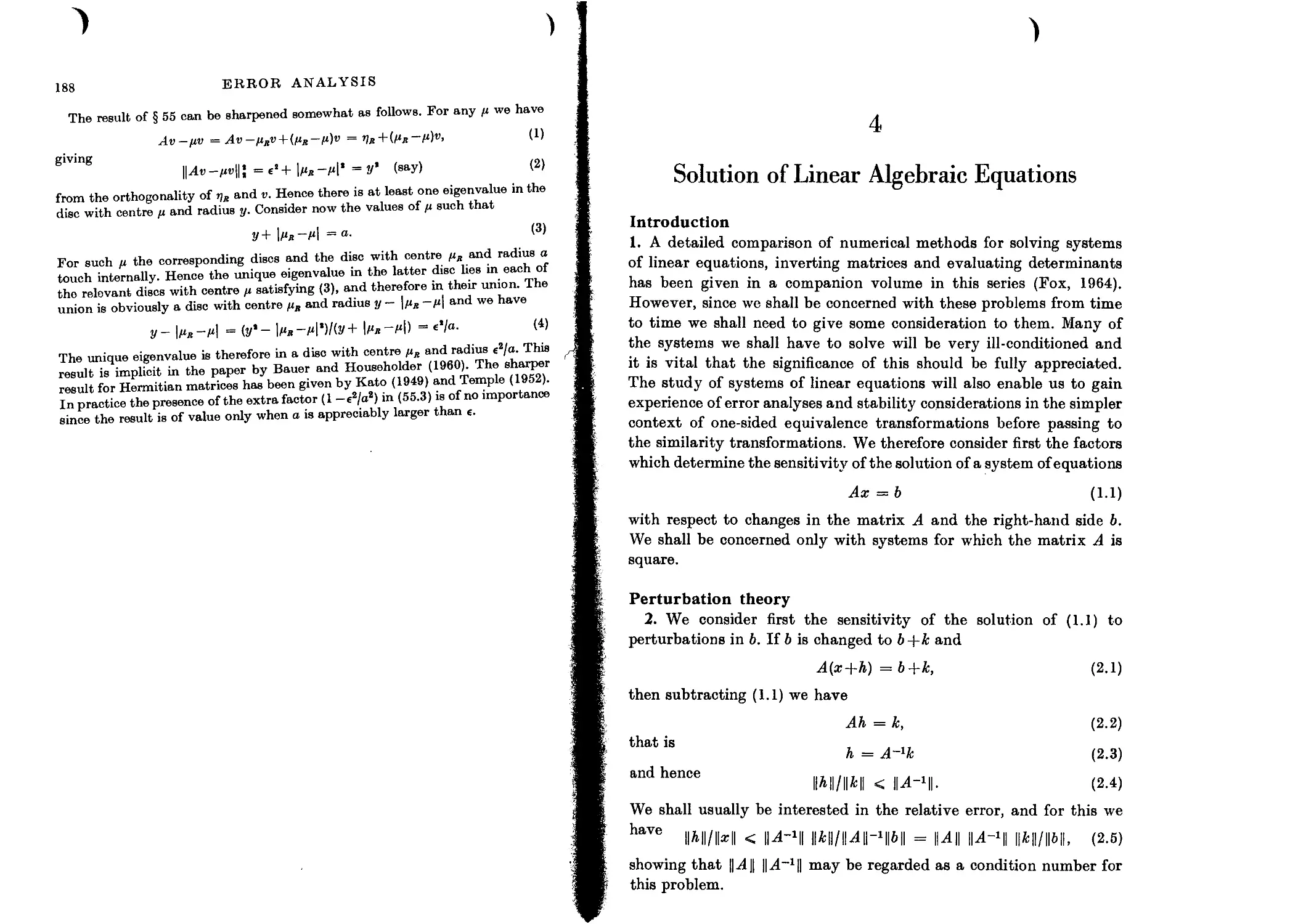

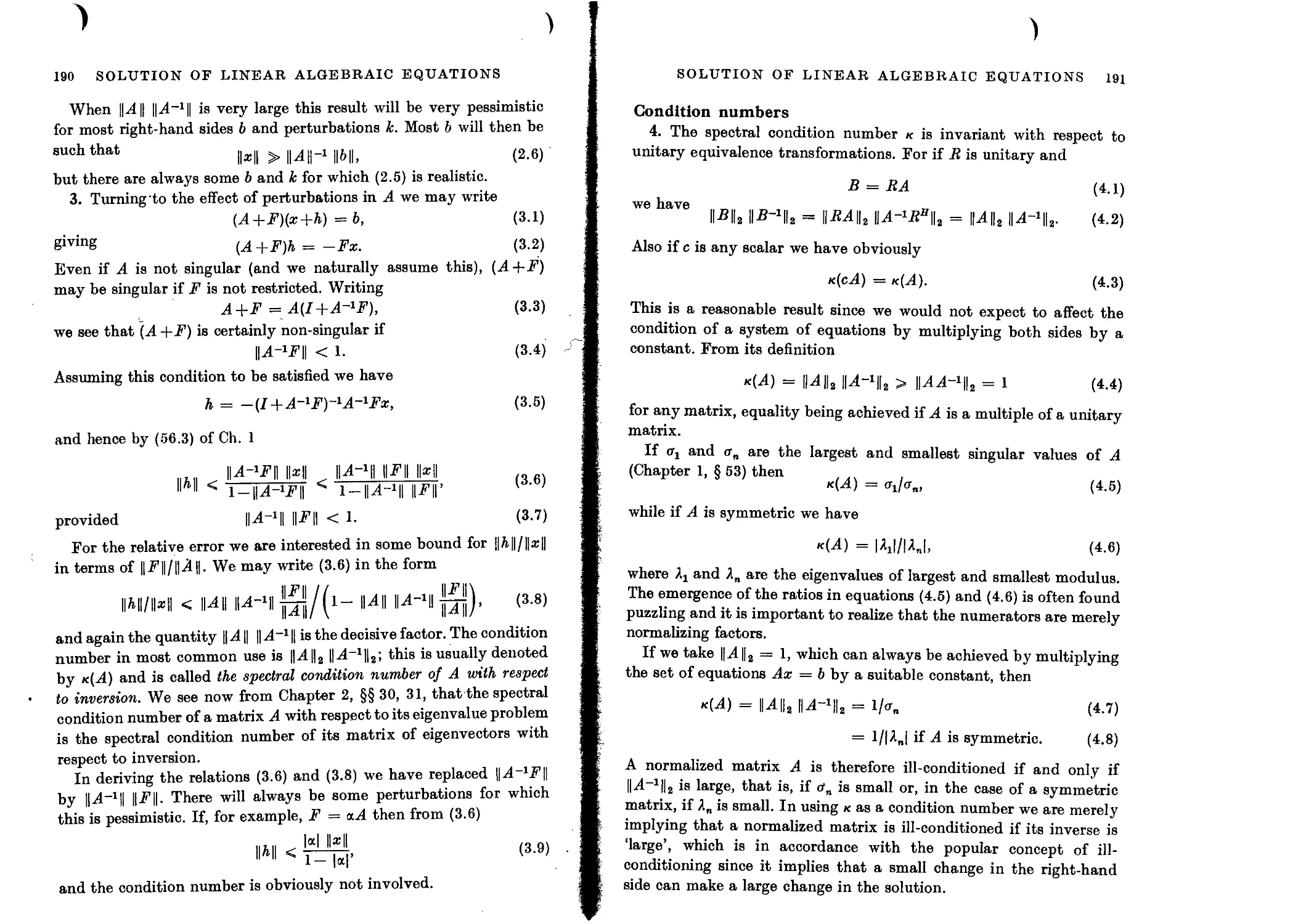

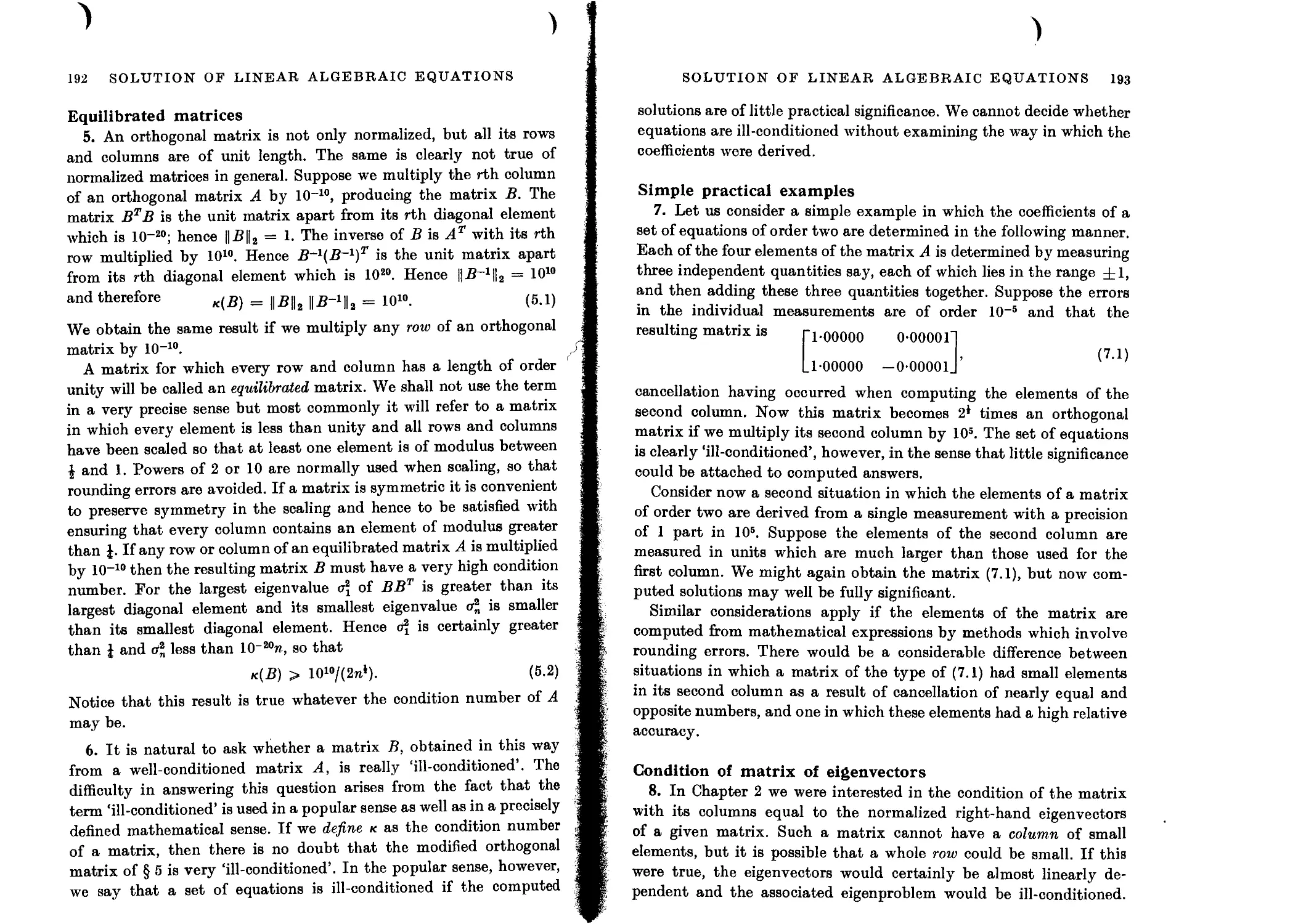

J. H. WILKINSON

M.A. (Cantab.), Sc.D., D.Tech., F.R.S.

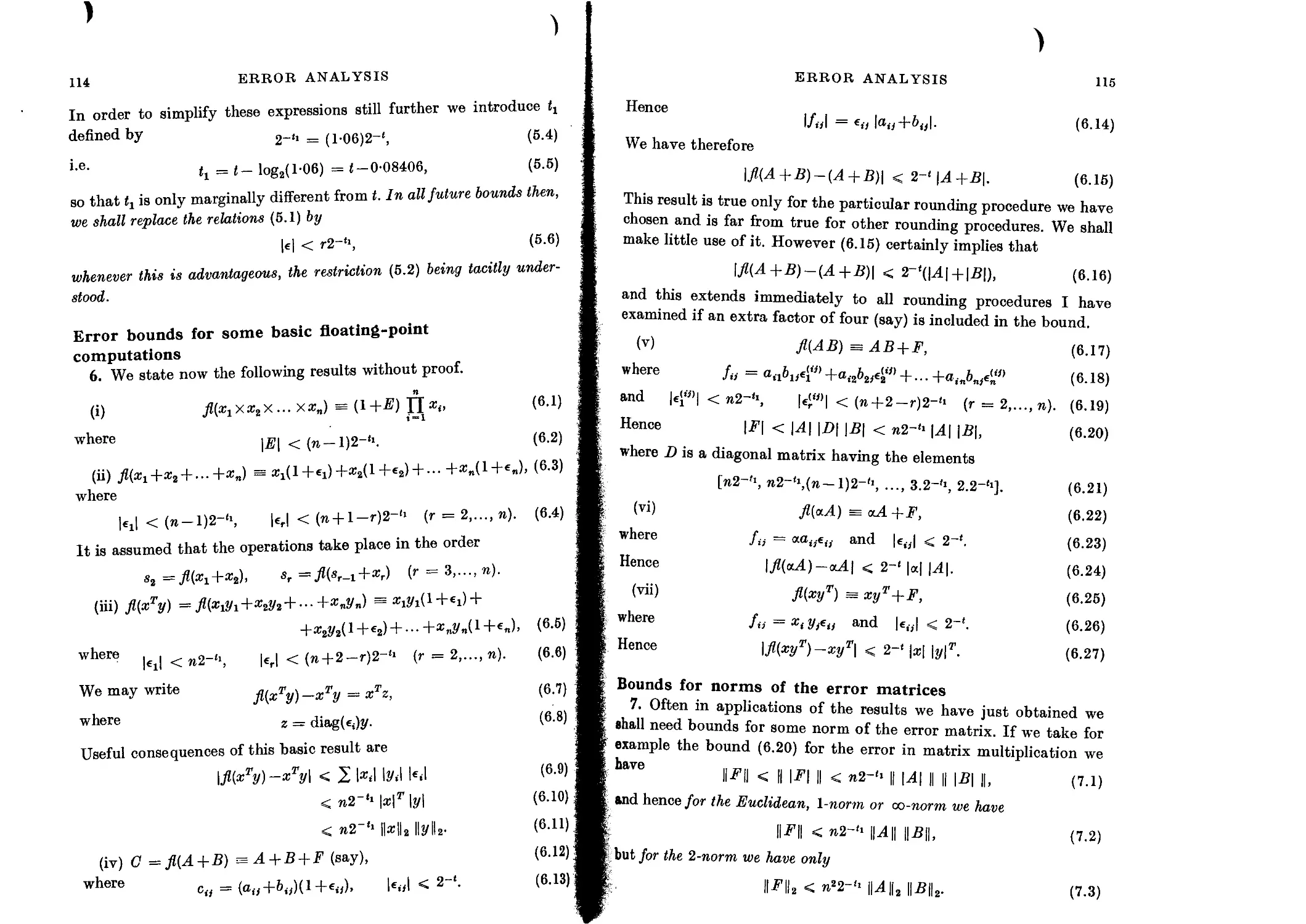

CLARENDON PRESS • OXFORD

THE ALGEBRAIC EIGENVALUE

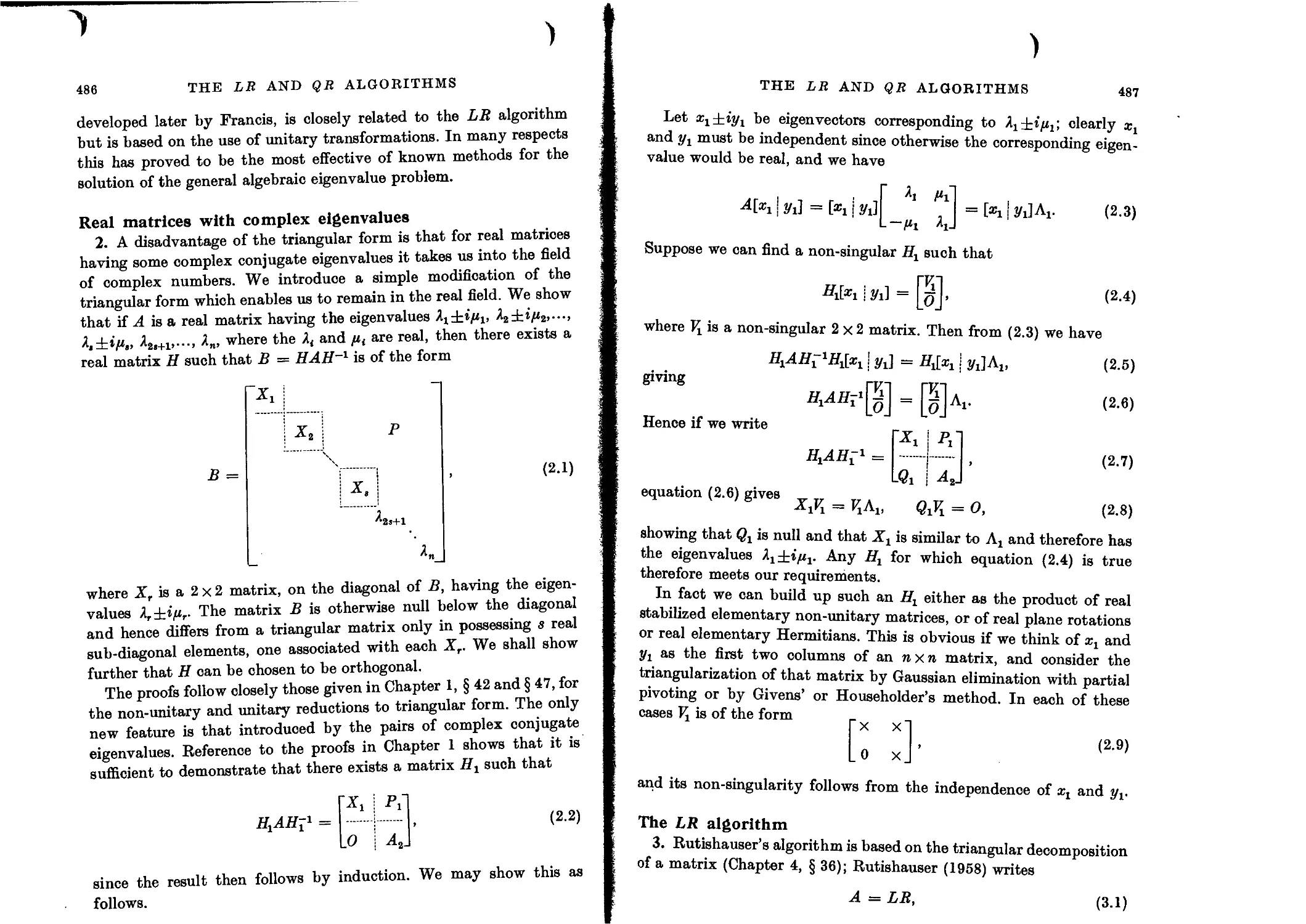

PROBLEM

BY

J. H. WILKINSON

M.A. (Cantab.), Sc.D., D.Tech., F.R.S.

CLARENDON PRESS • OXFORD

Oxford University Press, Walton Street, Oxford 0X2 6DP

Oxford New York Toronto

Delhi Bombay Calcutta Madras Karachi

Petaling Jaya Singapore Hong Kong Tokyo

Nairobi Dar es Salaam Cape Town

Melbourne Auckland

and associated companies in

Beirut Berlin Ibadan Nicosia

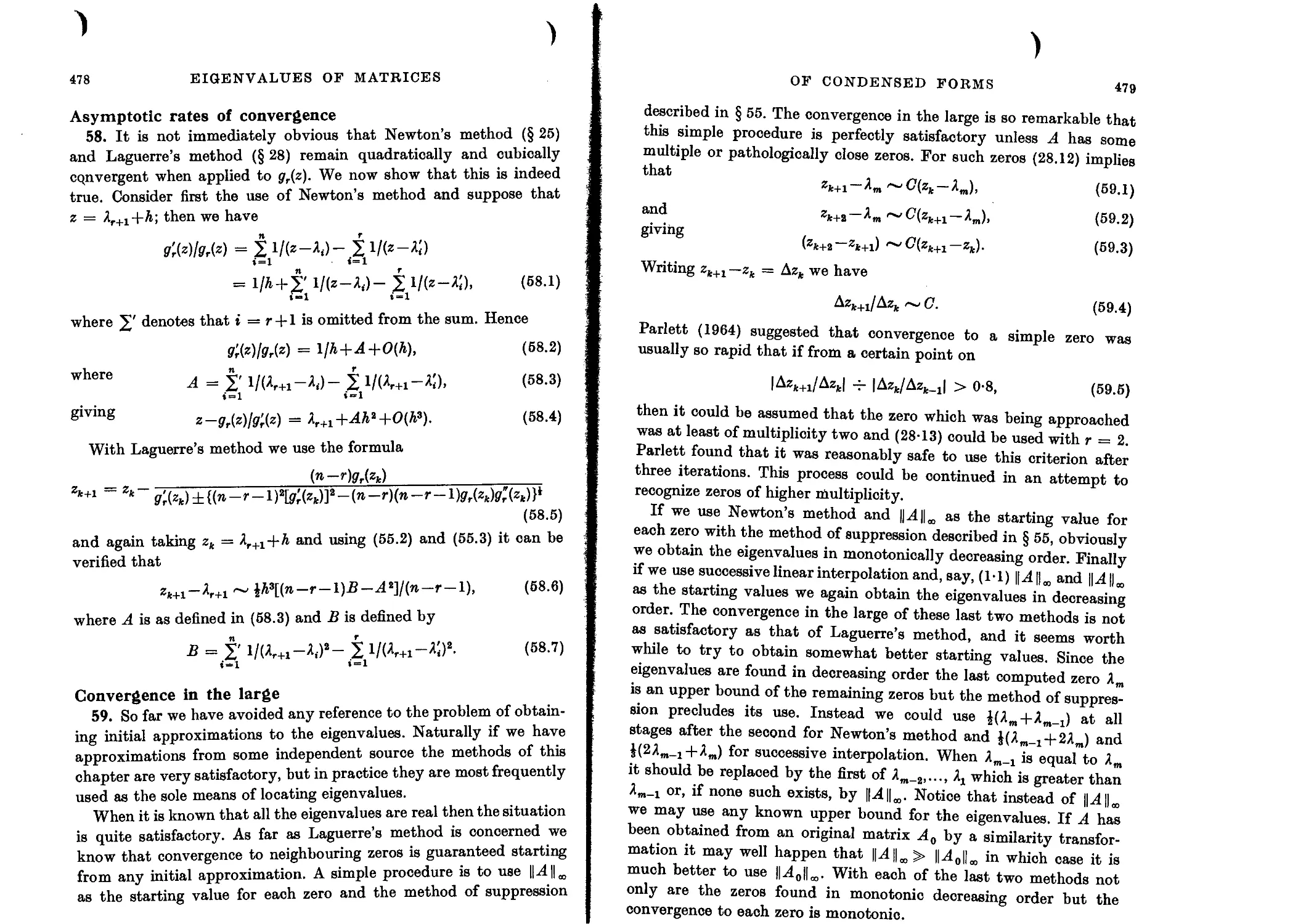

Oxford is a trade mark of Oxford University Press

Published in the United States

by Oxford University Press, New York

© Oxford University Press, 1965

First published 1965

Reprinted (with corrections) 1967, 1969, 1972, 1977, 1978

First published in paperback 1988

All rights reserved. No part of this publication may be reproduced,

stored in a retrieval system, or transmitted, in any form or by any

means, electronic, mechanical, photocopying, recording, or otherwise,

without the prior permission of Oxford University Press

This book is sold subject to the condition that it shall not, by way of

trade or otherwise, be lent, re-sold, hired out, or otherwise circulated

without the publisher’s prior consent in any form of binding or cover

other than that in which it is published and without a similar condition

including this condition being imposed on the subsequent purchaser

British Library Cataloguing in Publication Data

Wilkinson, J. H.

The algebraic eigenvalue problem.—

(Monographs on numerical analysis)

I. Matrices 2. Eigenvalues

I. Title II. Series

512.9'434 QAI93

ISBN 0-19-853418-3

Library of Congress Cataloging in Publication Data

Wilkinson, J. H. (James Hardy)

The algebraic eigenvalue problem.

(Monographs on numerical analysis)

Bibliography: p.

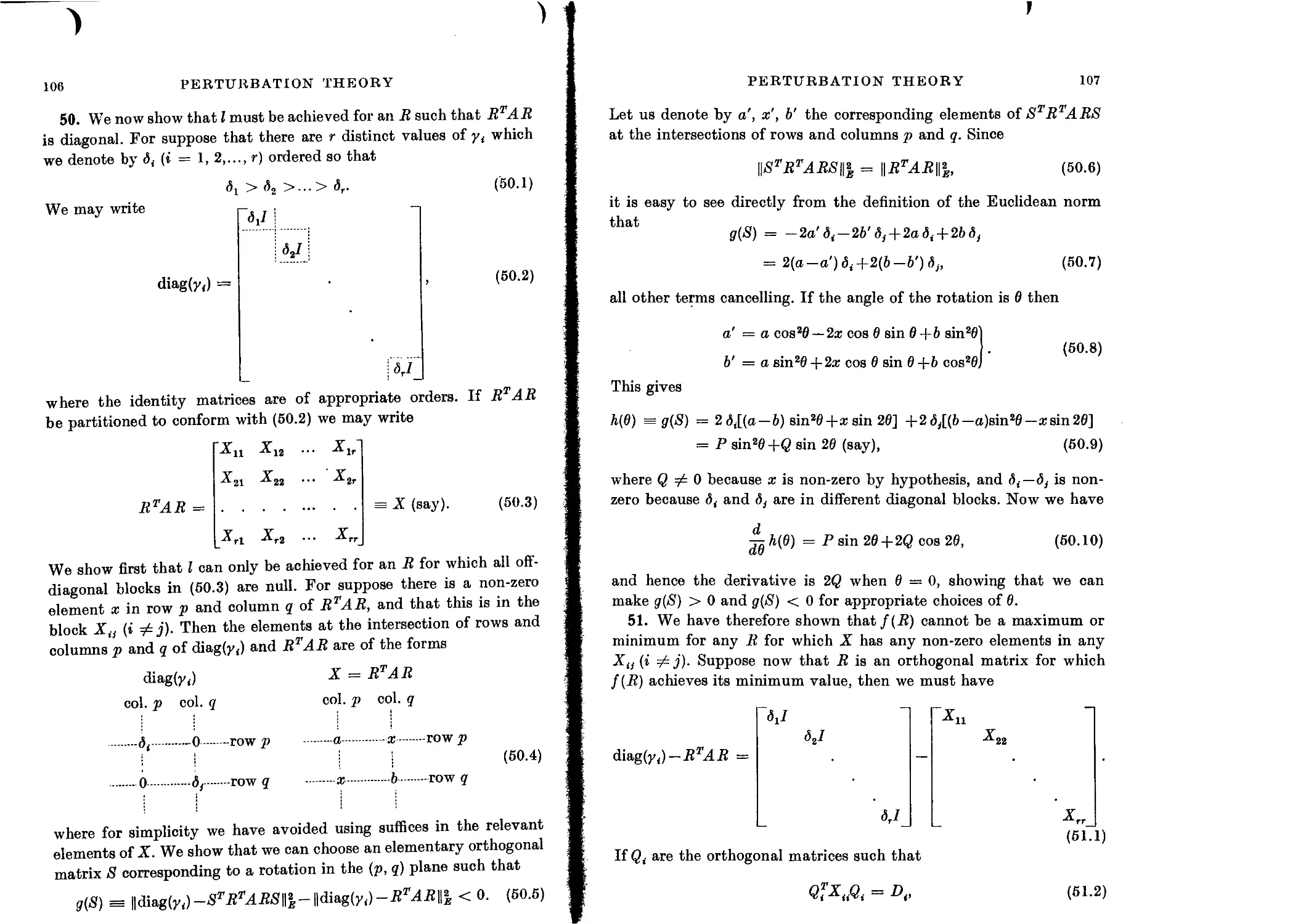

Includes index.

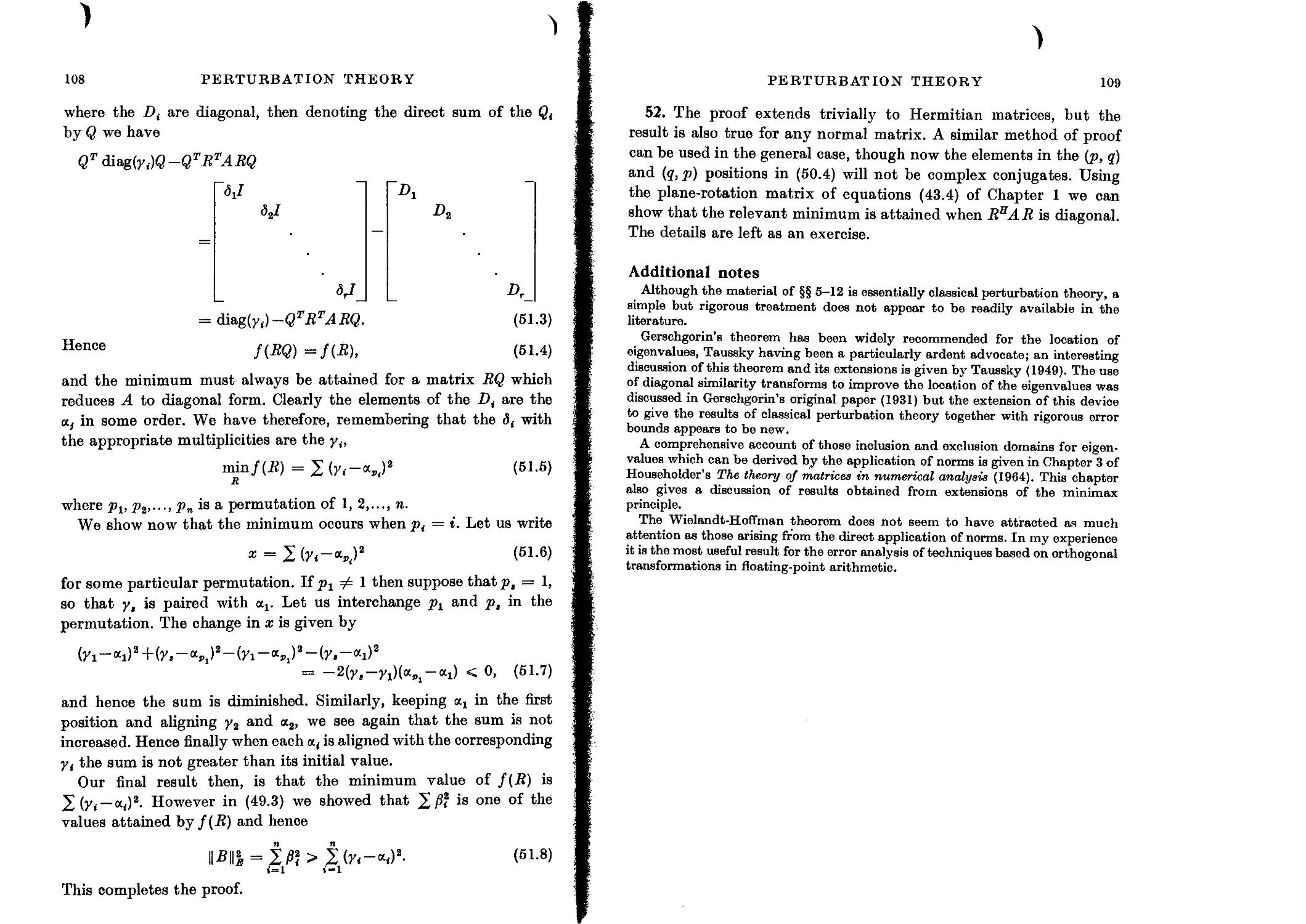

I. Equations—Numerical solutions. 2. Matrices.

3. Algebras, Linear. I. Title. II. Series.

QA218.W51988 512.9'4 87-2874

ISBN 0-19-853418-3

Printed in Great Britain

at the University Printing House, Oxford

by David Stanford

Printer to the University

Preface

The solution of the algebraic eigenvalue problem has for long had a

particular fascination for me because it illustrates so well the difference

between what might be termed classical mathematics and practical

numerical analysis. The eigenvalue problem has a deceptively simple

formulation and the background theory has been known for many

years; yet the determination of accurate solutions presents a wide

variety of challenging problems.

The suggestion that I might write a book on this topic in the series

of Monographs on Numerical Analysis was made by Professor L. Fox

and Dr. E. T. Goodwin after my early experience with automatic

digital computers. It is possible that its preparation would have

remained a pious aspiration had it not been for an invitation from

Professor J. W. Givens to attend a Matrix Symposium at Detroit in

1957 which led to successive invitations to lecture on ‘Practical

techniques for solving linear equations and computing eigenvalues

and eigenvectors’ at Summer Schools held in the University of

Michigan. The discipline of providing a set of notes each year for these

lectures has proved particularly valuable and much of the material

in this book has been presented during its formative stage in this way.

It was originally my intention to present a description of most of

the known techniques for solving the problem, together with a

critical assessment of their merits, supported wherever possible by the

relevant error analysis, and by 1961 a manuscript based on these lines

was almost complete. However, substantial progress had been made

with the eigenvalue problem and error analysis during the period in

which this manuscript was prepared and I became increasingly

dissatisfied with the earlier chapters. In 1962 I took the decision to

redraft the whole book with a modified objective. I felt that it was no

longer practical to cover almost all known methods and to give error

analyses of them and decided to include mainly those methods of

which I had extensive practical experience. I also inserted an

additional chapter giving fairly general error analyses which apply

to almost all the methods given subsequently. Experience over the

years had convinced me that it is by no means easy to give a

reliable assessment of a method without using it, and that often

comparatively minor changes in the details of a practical process have

a disproportionate influence on its effectiveness.

vi

PREFACE

The writer of a book on numerical analysis faces one particularly

difficult problem, that of deciding to whom it should be addressed.

A practical treatment of the eigenvalue problem is potentially of

interest to a wide variety of people, including among others, design

engineers, theoretical physicists, classical applied mathematicians

and numerical analysts who wish to do research in the matrix field.

A book which was primarily addressed to the last class of readers

might prove rather inaccessible to those in the first. I have not

omitted anything purely on the grounds that some readers might find

it too difficult, but have tried to present everything in as elementary

terms as the subject matter warrants. The dilemma was at its most

acute in the first chapter. I hope the elementary presentation used

there will not offend the serious mathematician and that he will treat

this merely as a rough indication of the classical material with which

he must be familiar if he is to benefit fully from the rest of the book.

I have assumed from the start that the reader is familiar with the

basic concepts of vector spaces, linear dependence and rank. An

admirable introduction to the material in this book is provided by

L. Fox’s ‘An introduction to numerical linear algebra’ (Oxford, this

series). Research workers in the field will find A. S. Householder’s

‘The theory of matrices in numerical analysis’ (Blaisdell, 1964) an

invaluable source of information.

My decision mentioned earlier to treat only those methods of which

I have extensive experience has inevitably resulted in the omission of

a number of important algorithms. Their omission is perhaps less

serious than it might have been, since the treatises by Durand,

‘Solutions num£riques des equations algebriques’ (Masson, 1960,1961)

and by D. K. Faddeev and V. N. Faddeeva, ‘Vycislitel’nye metody

lineinoi algebry’ (Moscow, 1963) give fairly extensive coverage.

However, two omissions call for special mention. The first is the

Jacobi-type method developed by P. J. Eberlein and various modi-

fications of this method developed independently by H. Rutishauser.

These seem to me to show exceptional promise and may well provide

the most satisfactory solution to the general eigenvalue problem.

I was reluctant to include them without first giving them the detailed

study which they deserve. My decision was reinforced by the reali-

zation that they are not directly covered by the general error analyses

I have given. The second omission is a general treatment of Rutis-

hauser’s QD algorithm. This is of very wide application and I did not

feel I could do full justice to this work within the restricted field of the

PREFACE

vii

eigenvalue problem. The reader is referred to papers by Rutishauser

and Henrici. The literature in the eigenvalue field is very extensive

and I have restricted the bibliography mainly to those papers to

which explicit reference is made in the text. Very detailed bibliog-

raphies are available in the books by Faddeev and Faddeeva and

Householder mentioned earlier.

When redrafting the book I was tempted to present the algorithms

in ALGOL, but decided that the difficulties of providing procedures

which were correct in every detail were prohibitive at this stage.

Accordingly I have used the language of classical mathematics but

have adopted a form of words which facilitates translation into

ALGOL and related computer languages.

The material for this book is drawn from many sources but I have

been particularly influenced by the work of colleagues at the Univer-

sity of Michigan Summer Schools, especially that of F. L. Bauer,

G. E. Forsythe, J. W. Givens, P. Henrici, A. S. Householder, 0.

Taussky, J. Todd and R. S. Varga. Apart from this it is the algo-

rithmic genius of H. Rutishauser which has been my main source of

inspiration.

It is a pleasure to acknowledge the assistance I have received from

numerous friends in this enterprise. I would like to thank Professors

J. W. Carr and R. C. F. Bartels for successive invitations to lecture at

the Summer Schools in Ann Arbor, thereby providing me with an

annual stimulus for completing the manuscript. I am particularly

indebted to Professors G. E. Forsythe and J. W. Givens who gen-

erously provided duplicating facilities for the two main drafts of

the manuscript. This has enabled me to benefit from the opinions of

a much wider circle of readers than would otherwise have been possible.

Many valuable comments were received from Professor G. E. For-

sythe, Dr. J. M. Varah and Mr. E. A. Albasiny. The whole of the

final manuscript was read by Dr. D. W. Martin and Professor L. Fox

and the first proofs by Dr. E. T. Goodwin. Their comments and sugges-

tions rescued me from numerous blunders and infelicities of style.

I am a notoriously poor proof reader and I was fortunate indeed to

have the expert guidance of Mrs. I. Goode in seeing the book through

the press. Finally sincere thanks are due to my wife who typed successive

versions of the manuscript. Without her patience and pertinacity the

project would almost certainly have foundered.

J. H. Wilkinson

Teddington, March, 1965

)

Contents

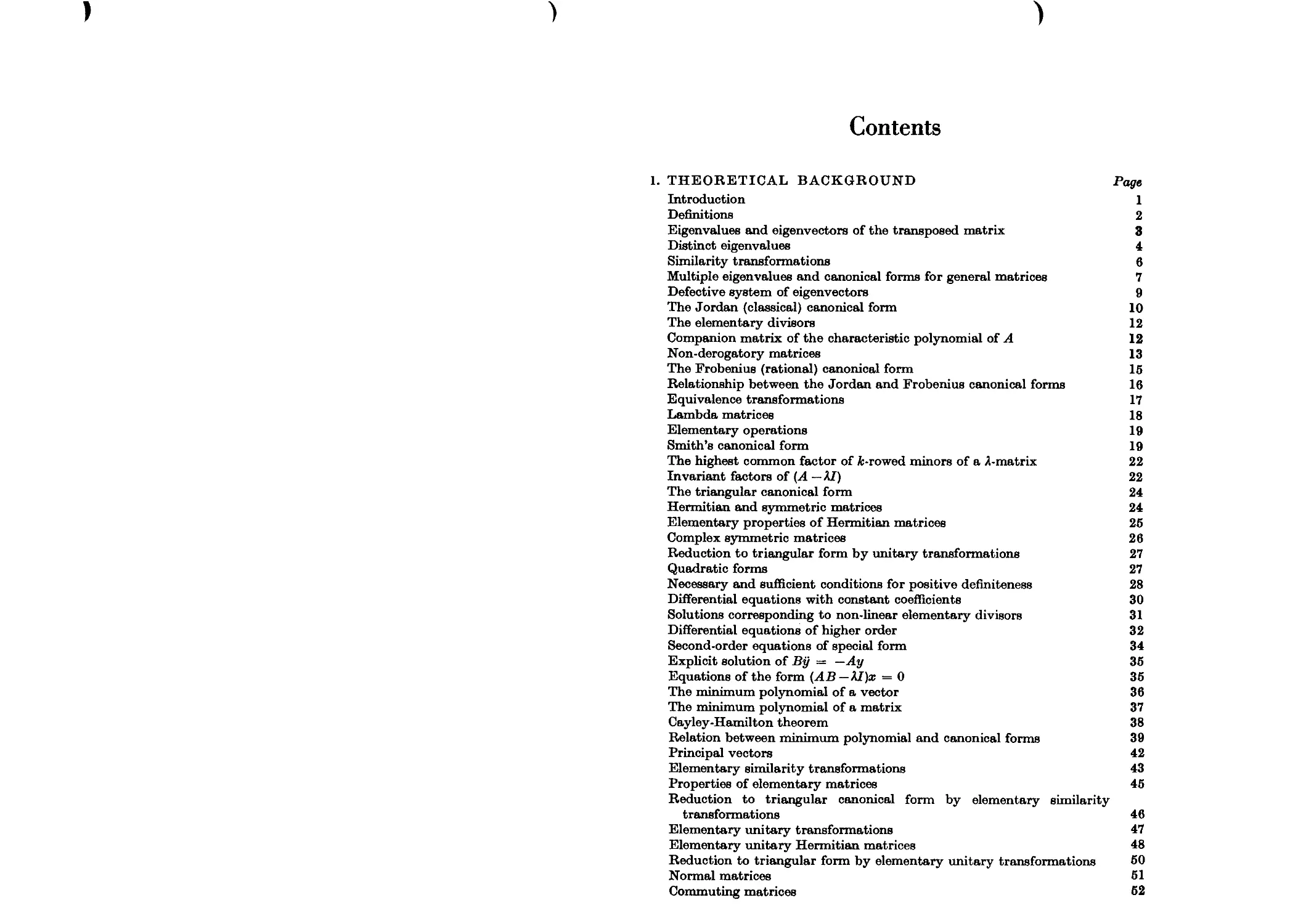

1. THEORETICAL BACKGROUND Page

Introduction 1

Definitions 2

Eigenvalues and eigenvectors of the transposed matrix 3

Distinct eigenvalues 4

Similarity transformations 6

Multiple eigenvalues and canonical forms for general matrices 7

Defective system of eigenvectors 9

The Jordan (classical) canonical form 10

The elementary divisors 12

Companion matrix of the characteristic polynomial of A 12

Non-derogatory matrices 13

The Frobenius (rational) canonical form 15

Relationship between the Jordan and Frobenius canonical forms 16

Equivalence transformations 17

Lambda matrices 18

Elementary operations 19

Smith’s canonical form 19

The highest common factor of fc-rowed minors of а Л-matrix 22

Invariant factors of (A —XI) 22

The triangular canonical form 24

Hermitian and symmetric matrices 24

Elementary properties of Hermitian matrices 25

Complex symmetric matrices 26

Reduction to triangular form by unitary transformations 27

Quadratic forms 27

Necessary and sufficient conditions for positive definiteness 28

Differential equations with constant coefficients 30

Solutions corresponding to non-linear elementary divisors 31

Differential equations of higher order 32

Second-order equations of special form 34

Explicit solution of By — —Ay 35

Equations of the form (AB — XI )x = 0 35

The minimum polynomial of a vector 36

The minimum polynomial of a matrix 37

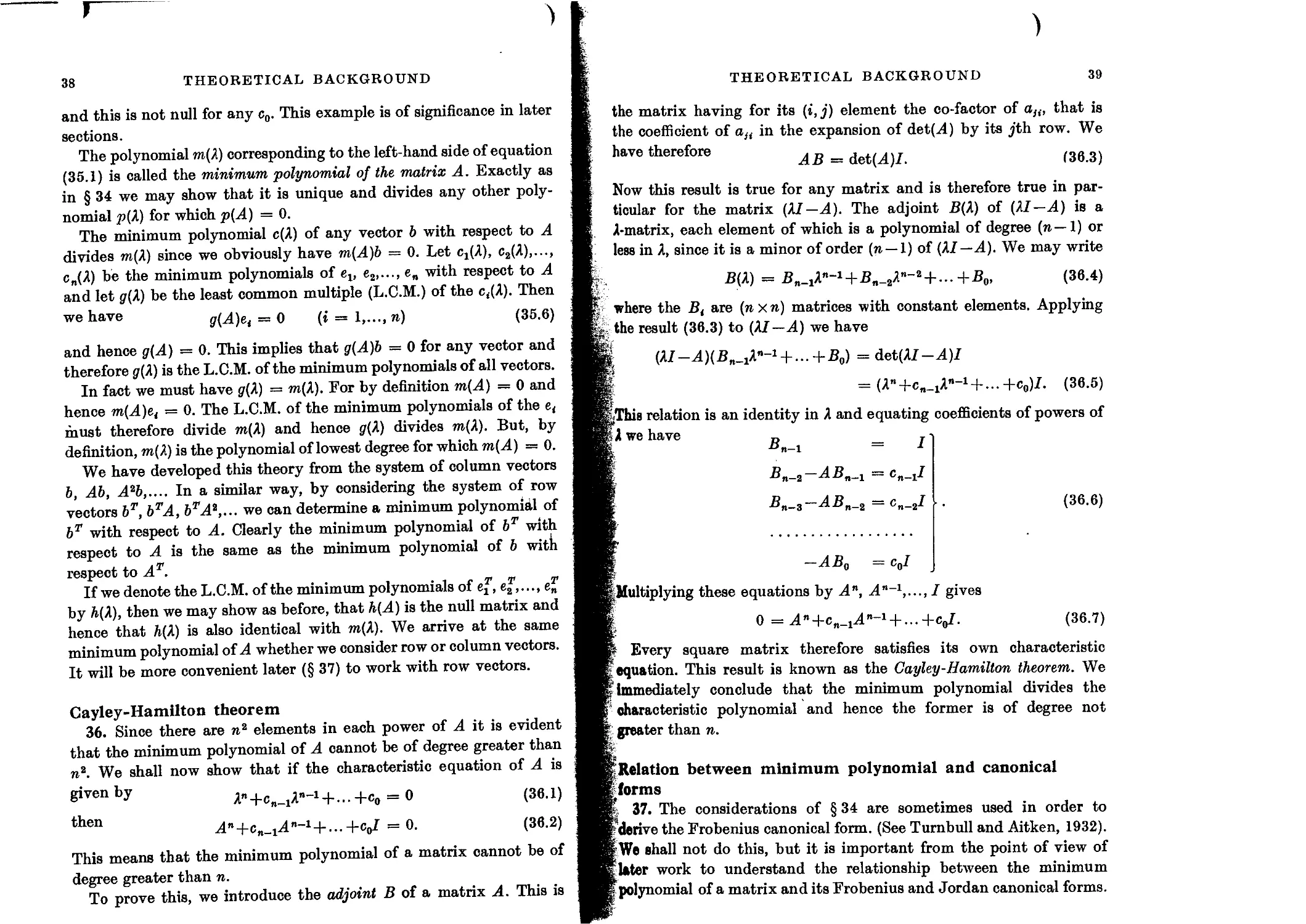

Cayley-Hamilton theorem 38

Relation between minimum polynomial and canonical forms 39

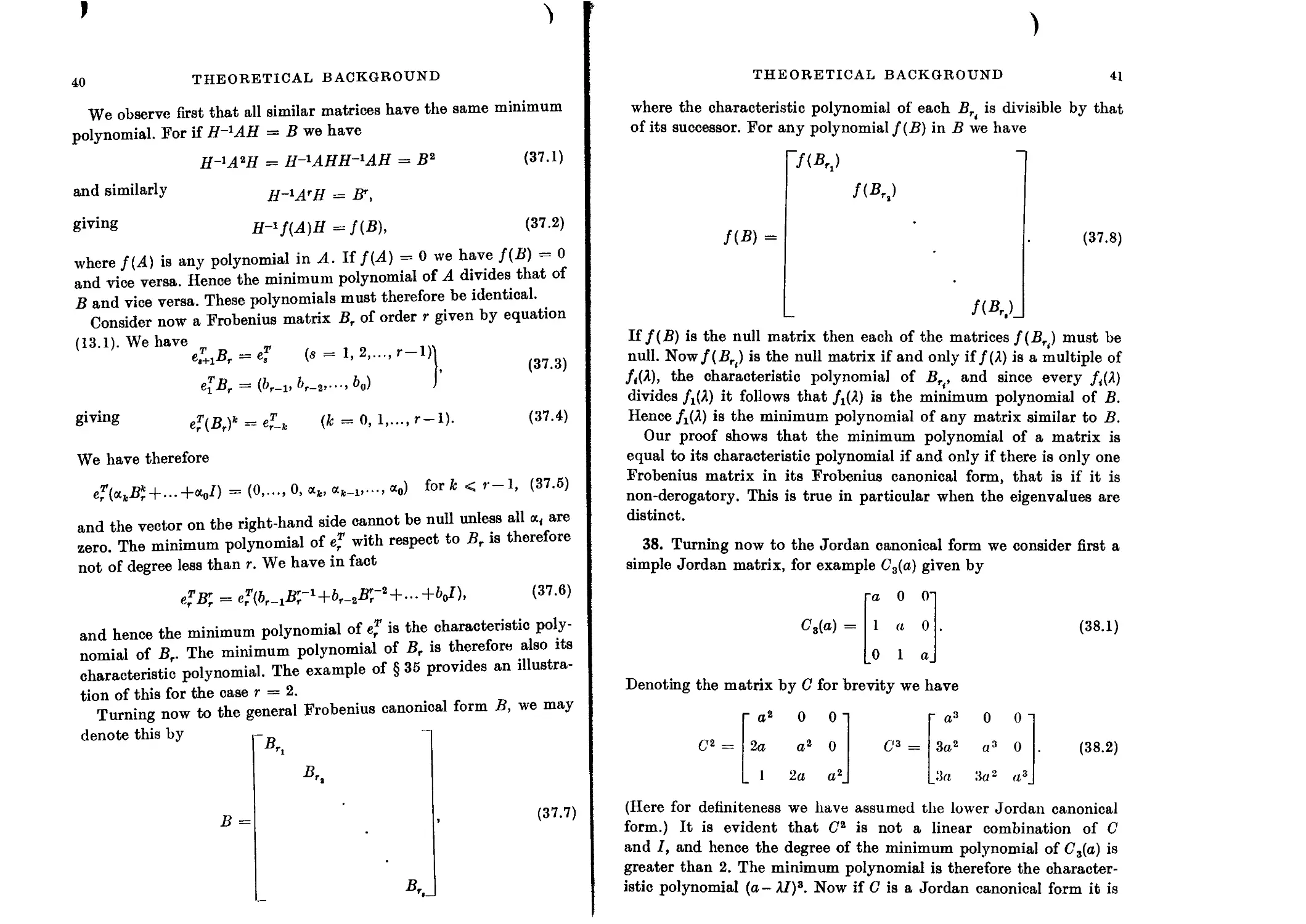

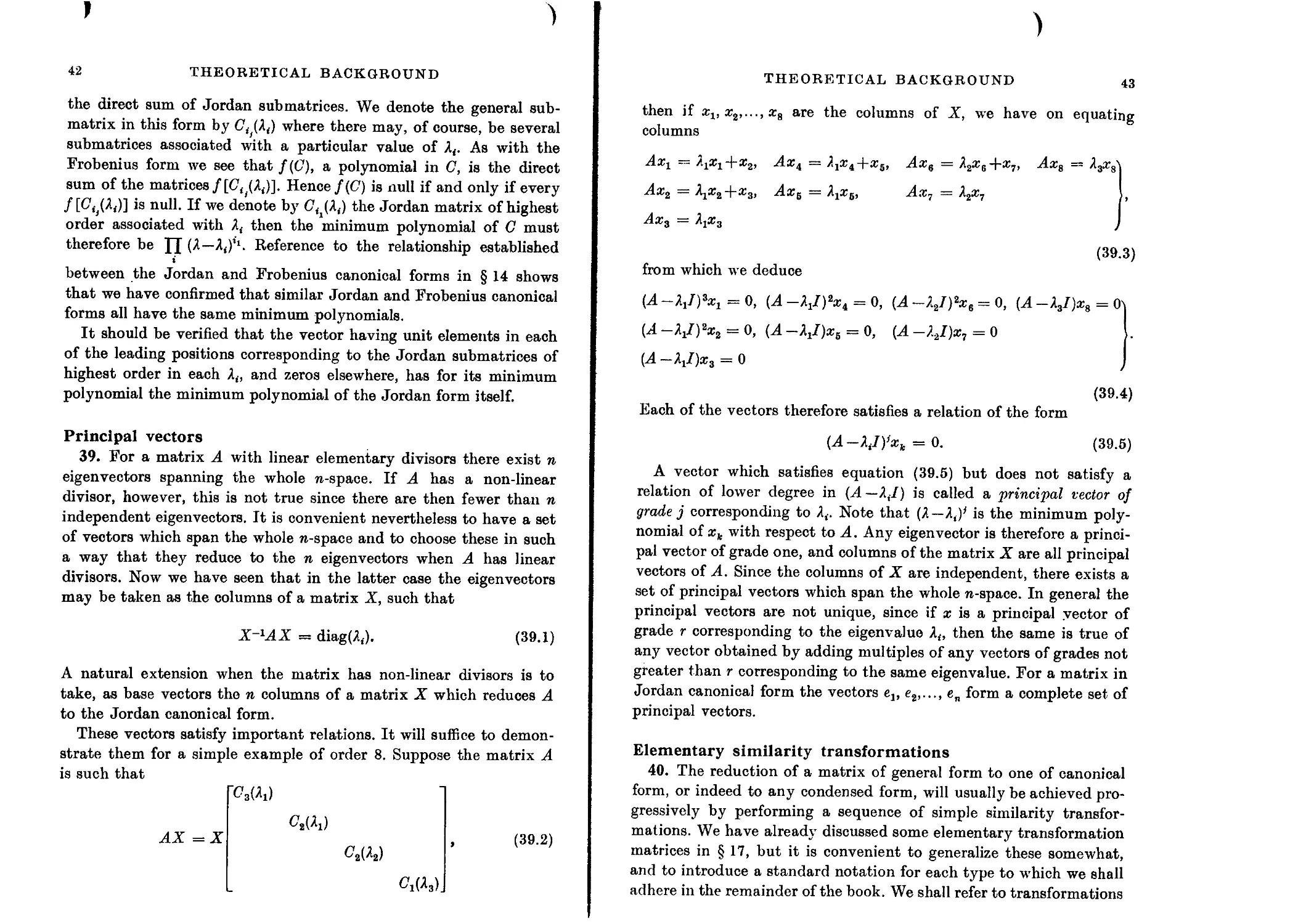

Principal vectors 42

Elementary similarity transformations 43

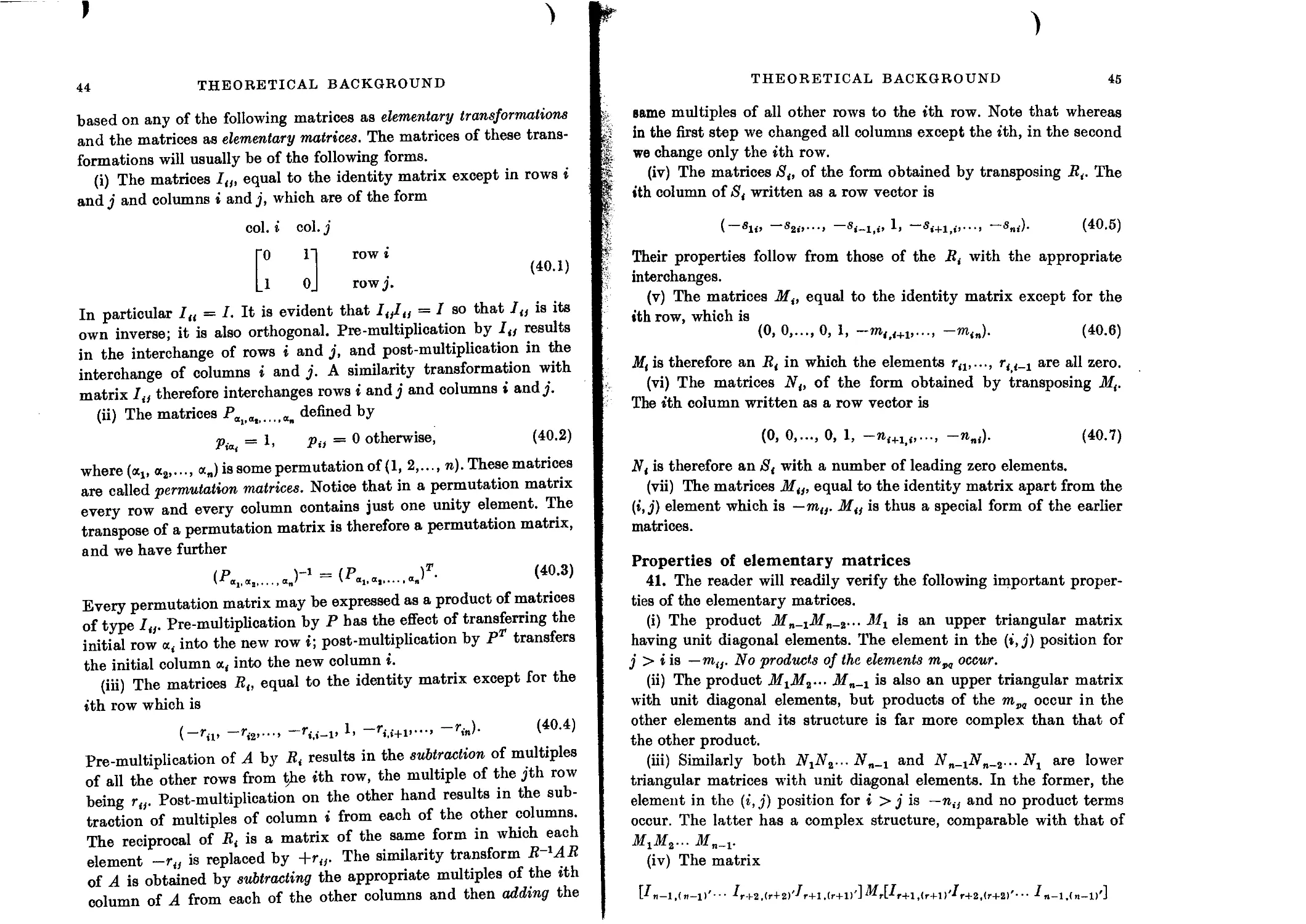

Properties of elementary matrices 45

Reduction to triangular canonical form by elementary similarity

transformations 46

Elementary unitary transformations 47

Elementary unitary Hermitian matrices 48

Reduction to triangular form by elementary unitary transformations 50

Normal matrices 51

Commuting matrices 52

X

CONTENTS

Eigenvalues of AB 54

Vector and matrix norms 55

Subordinate matrix norms 56

The Euclidean and spectral norms 57

Norms and limits 58

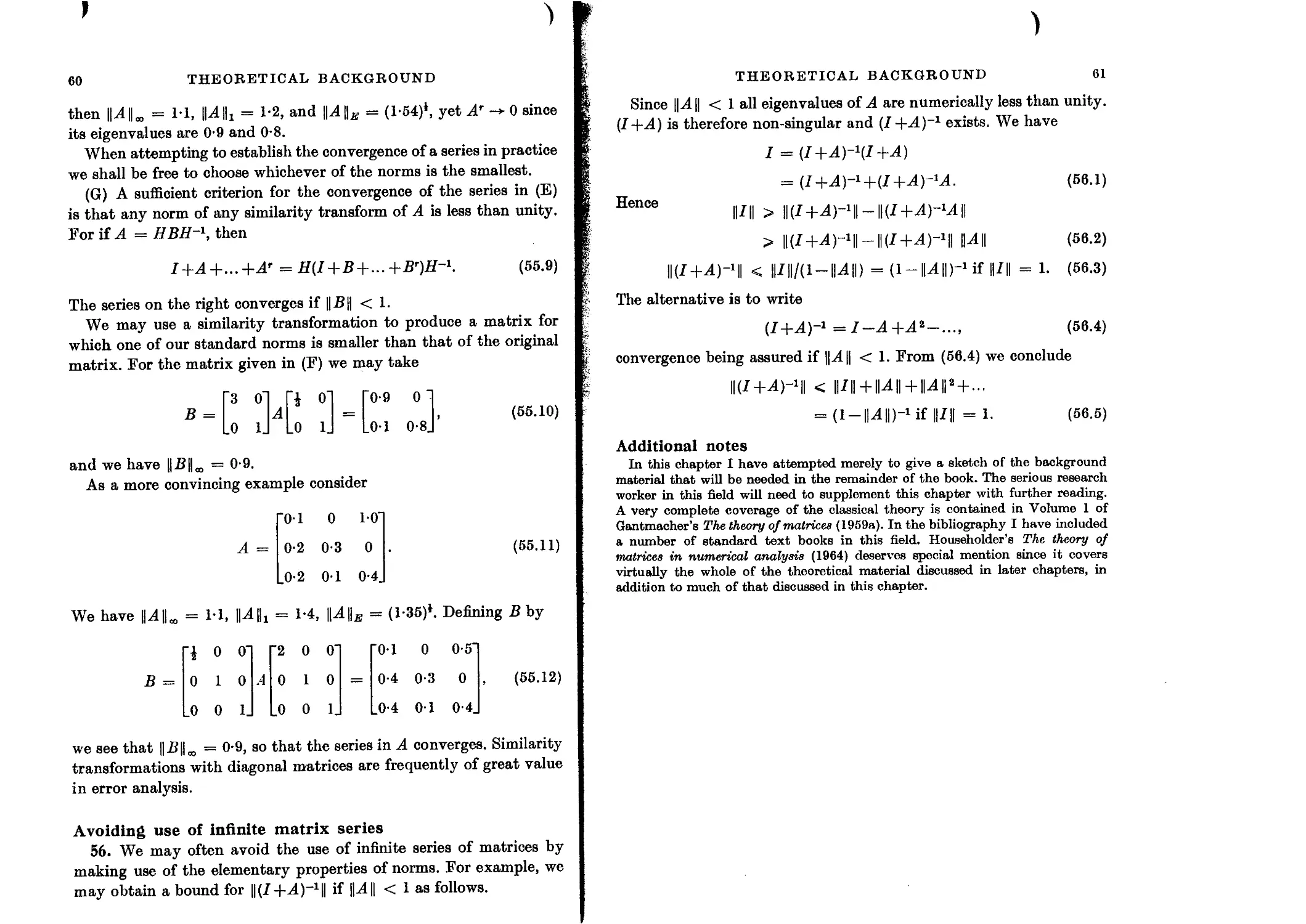

Avoiding use of infinite matrix series 60

2. PERTURBATION THEORY

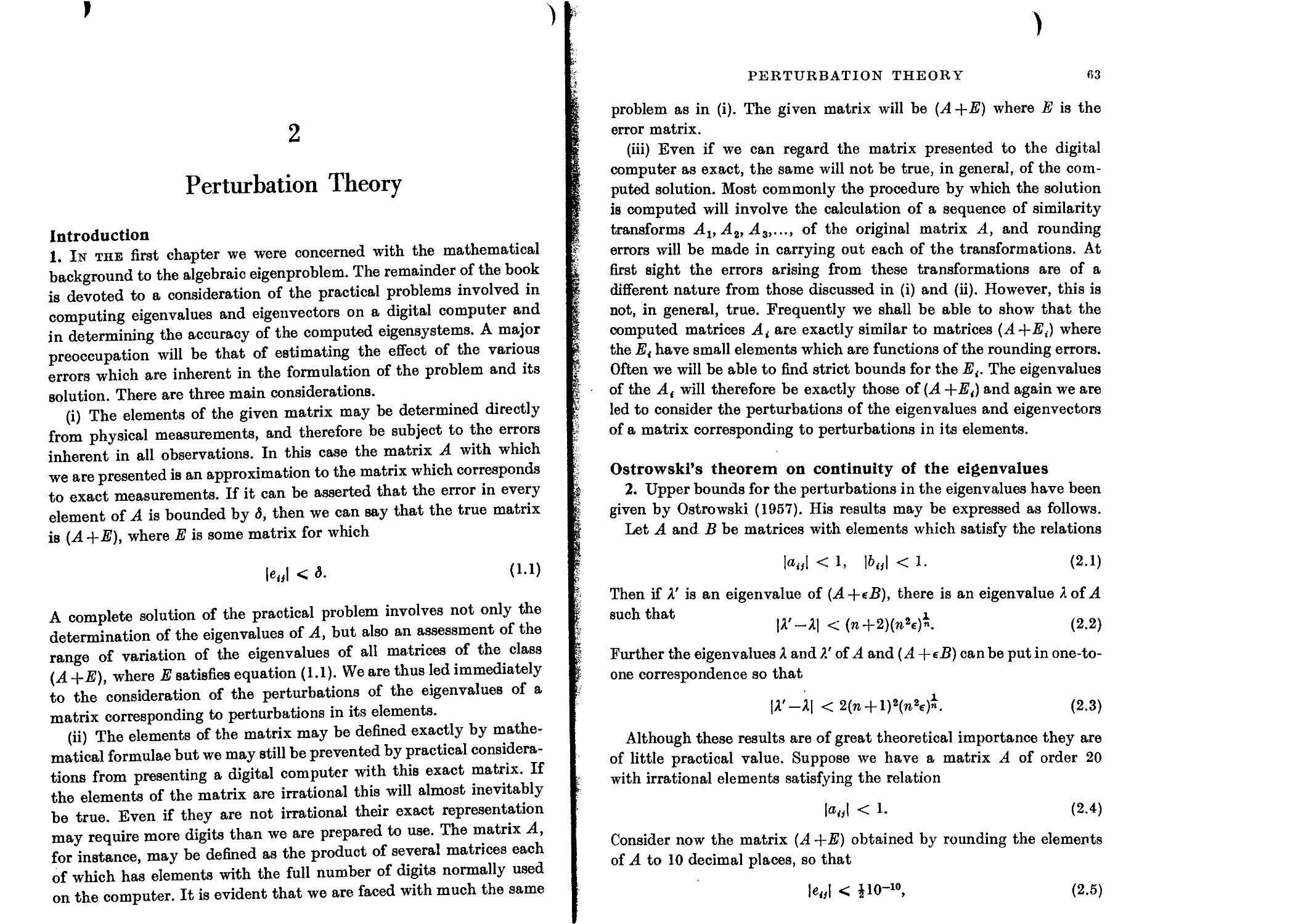

Introduction 62

Ostrowski’s theorem on continuity of the eigenvalues 63

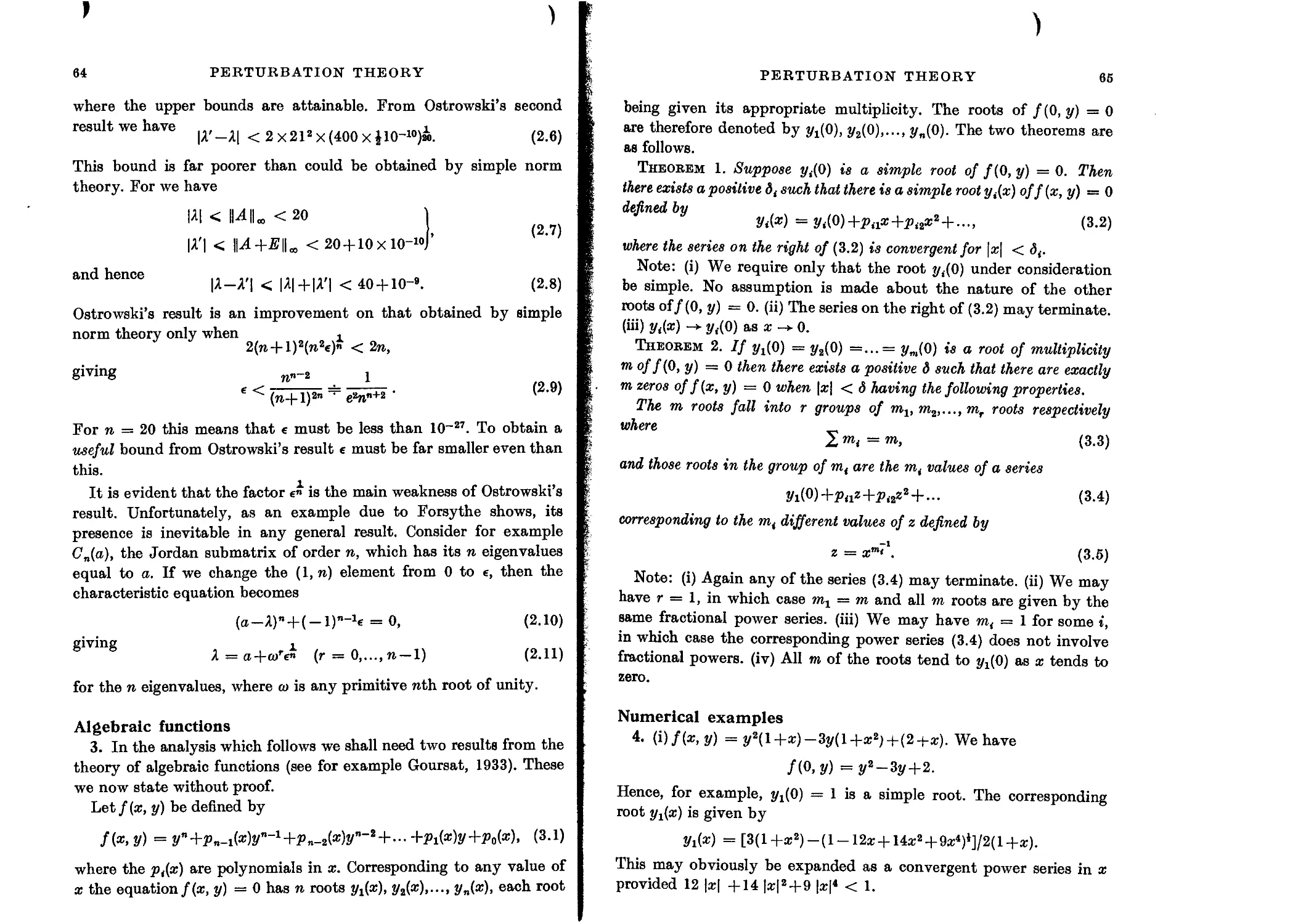

Algebraic functions 64

Numerical examples 65

Perturbation theory for simple eigenvalues 66

Perturbation of corresponding eigenvectors 67

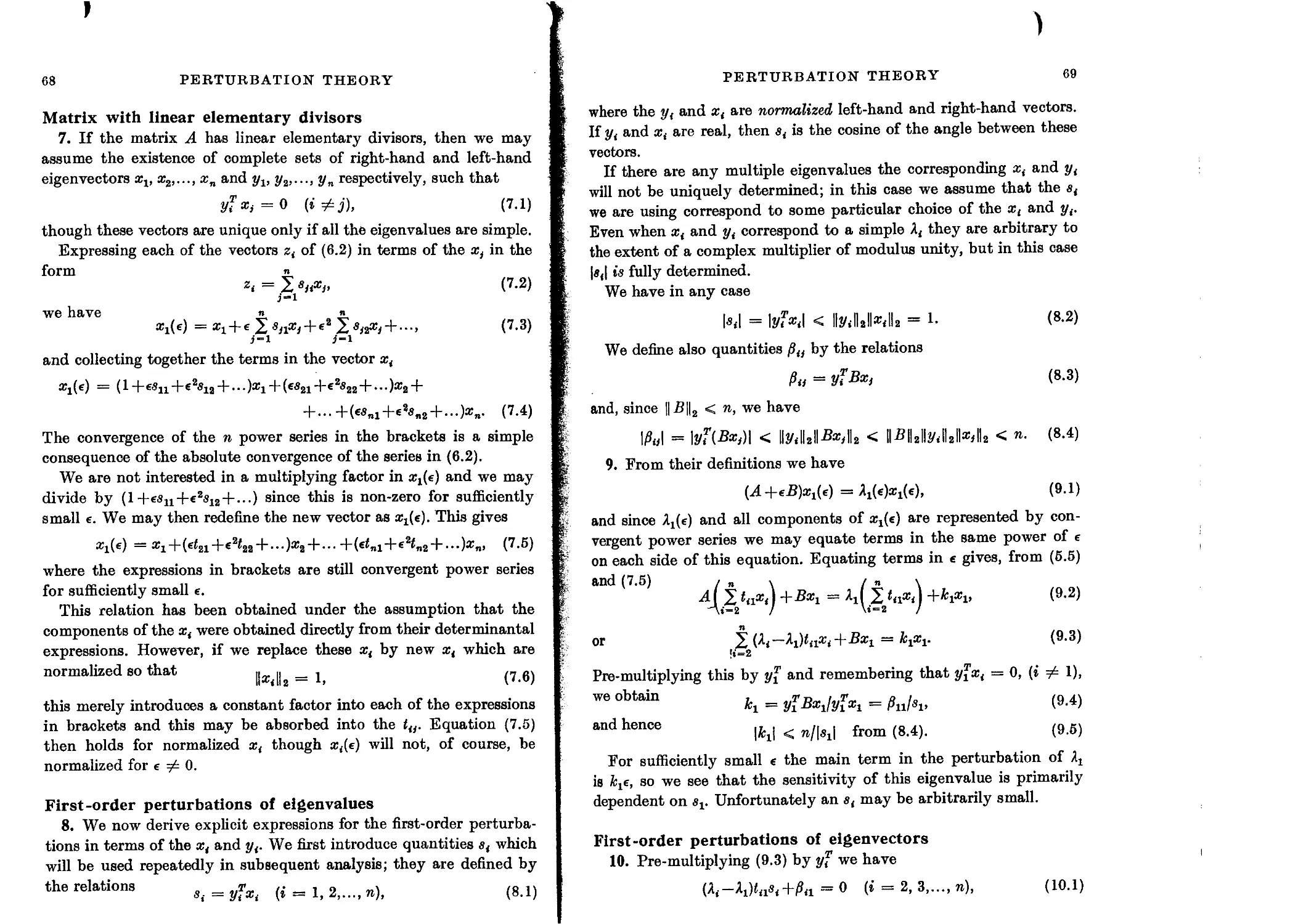

Matrix with linear elementary divisors 68

First-order perturbations of eigenvalues 68

First-order perturbations of eigenvectors 69

Higher-order perturbations 70

Multiple eigenvalues 70

Gerschgorin’s theorems 71

Perturbation theory based on Gerschgorin’s theorems 72

Case 1. Perturbation of a simple eigenvalue of a matrix having linear

elementary divisors. 72

Case 2. Perturbation of a multiple eigenvalue of a matrix having

linear elementary divisors. 75

Case 3. Perturbation of a simple eigenvalue of a matrix having one or

more non-linear elementary divisors. 77

Case 4. Perturbations of the eigenvalues corresponding to a non-linear

elementary divisor of a non-derogatory matrix. 79

Case 5. Perturbations of eigenvalues Л,- when there is more than one

divisor involving (Л,- — Л) and at least one of them is non-linear. 80

Perturbations corresponding to the general distribution of non-linear

divisors 81

Perturbation theory for the eigenvectors from Jordan canonical form 81

Perturbations of eigenvectors corresponding to a multiple eigenvalue

(linear elementary divisors) 83

Limitations of perturbation theory 84

Relationships between the e( 85

The condition of a computing problem 86

Condition numbers 86

Spectral condition number of A with respect to its eigenproblem 87

Properties of spectral condition number 88

Invariant properties of condition numbers 89

Very ill-conditioned matrices 90

Perturbation theory for real symmetric matrices 93

Unsymmetric perturbations 93

Symmetric perturbations 94

Classical techniques 94

Symmetric matrix of rank unity 97

Extremal properties of eigenvalues 98

Minimax characterization of eigenvalues 99

Eigenvalues of the sum of two symmetric matrices 101

CONTENTS xi

Practical applications 102

Further applications of minimax principle 103

Separation theorem 103

The Wielandt-Hoffman theorem 104

3. ERROR ANALYSIS

Introduction 110

Fixed-point operations 110

Accumulation of inner-products 111

Floating-point operations 112

Simplified expressions for error bounds 113

Error bounds for some basic floating-point computations 114

Bounds for norms of the error matrices 115

Accumulation of inner-products in floating-point arithmetic 116

Error bounds for some basic/Z2( ) computations 117

Computation of square roots 118

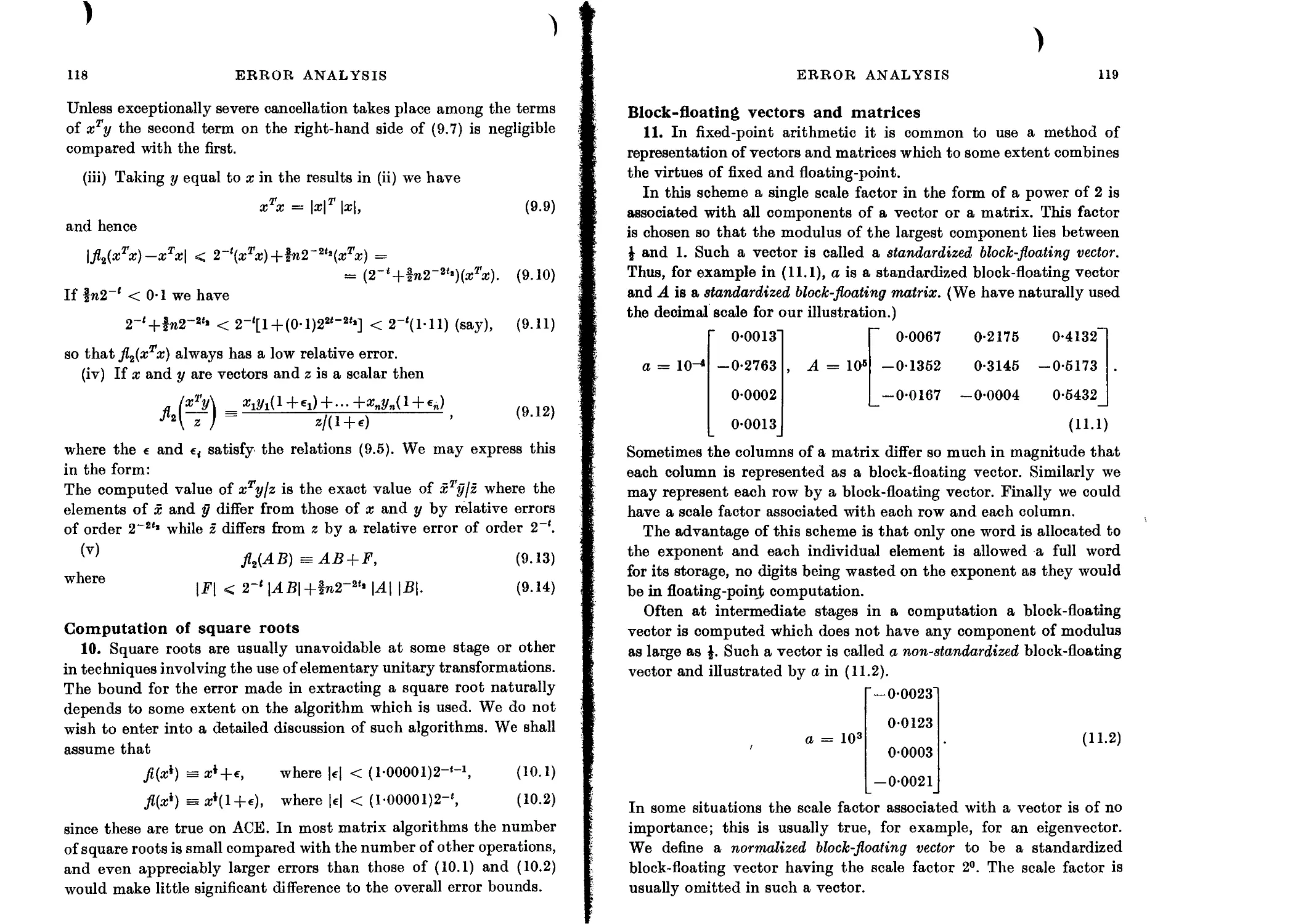

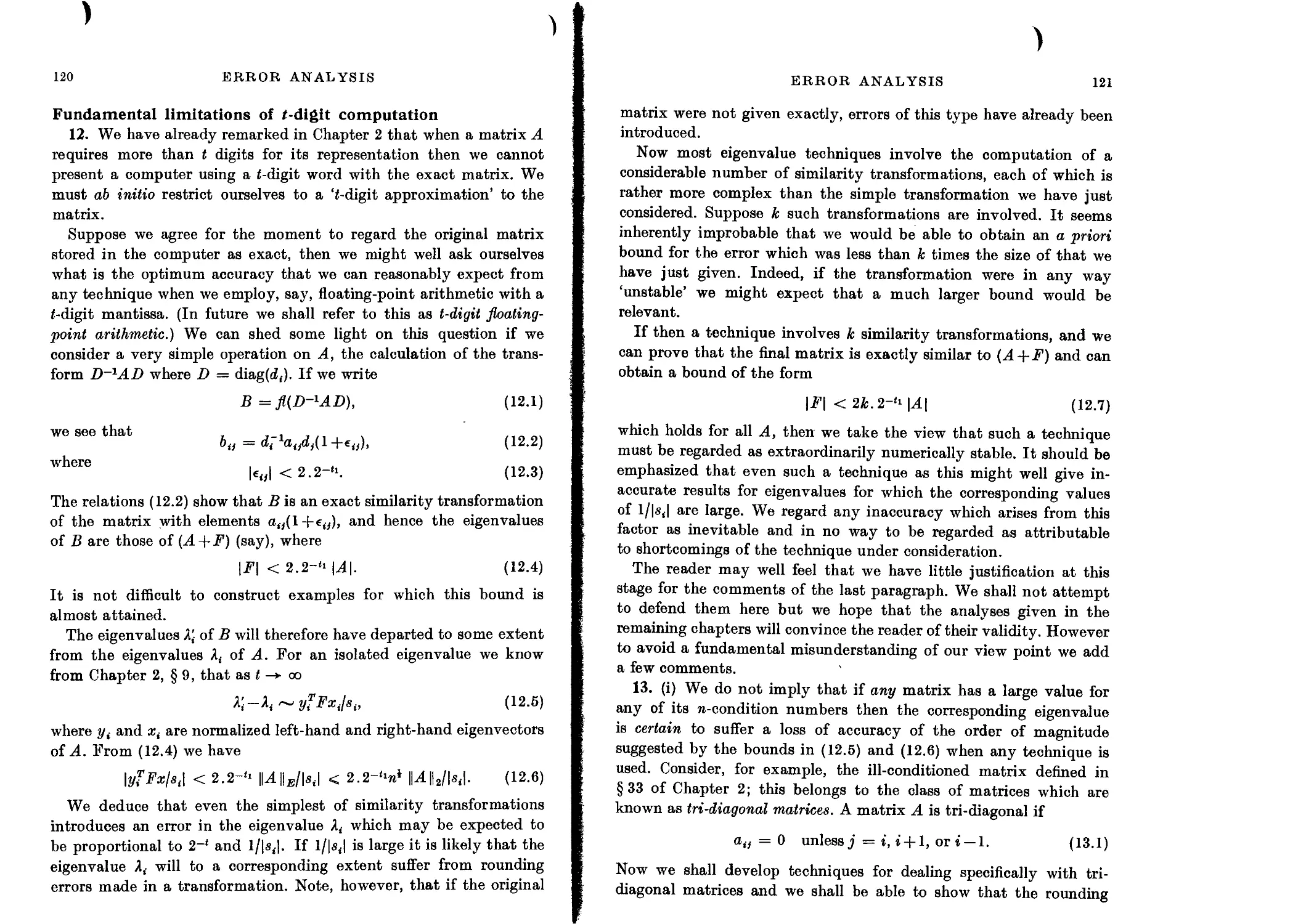

Block-floating vectors and matrices 119

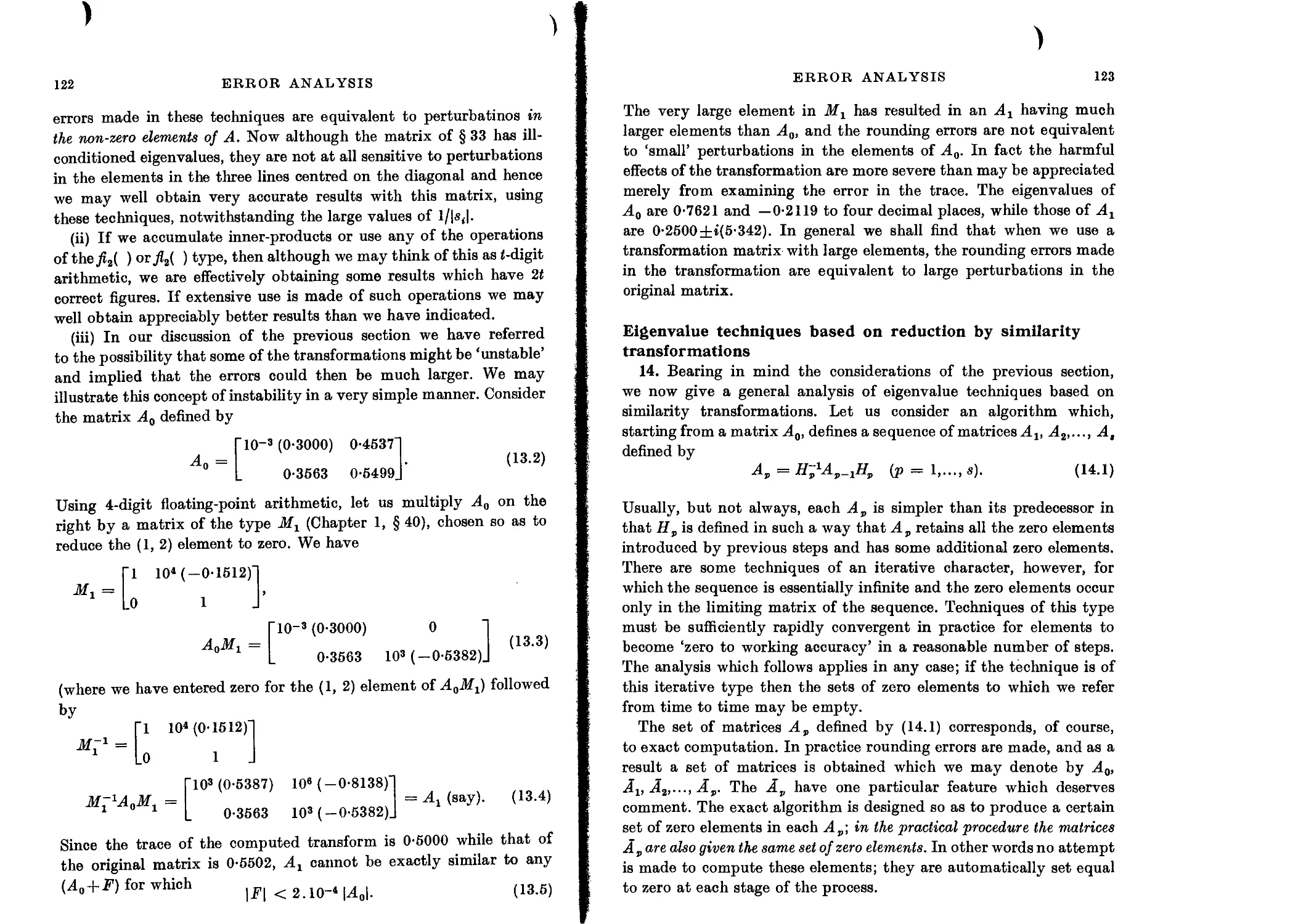

Fundamental limitations of 2-digit computation 120

Eigenvalue techniques based on reduction by similarity transformations 123

Error analysis of methode based on elementary non-unitary trans-

formations 124

Error analysis of methods based on elementary unitary transformations 126

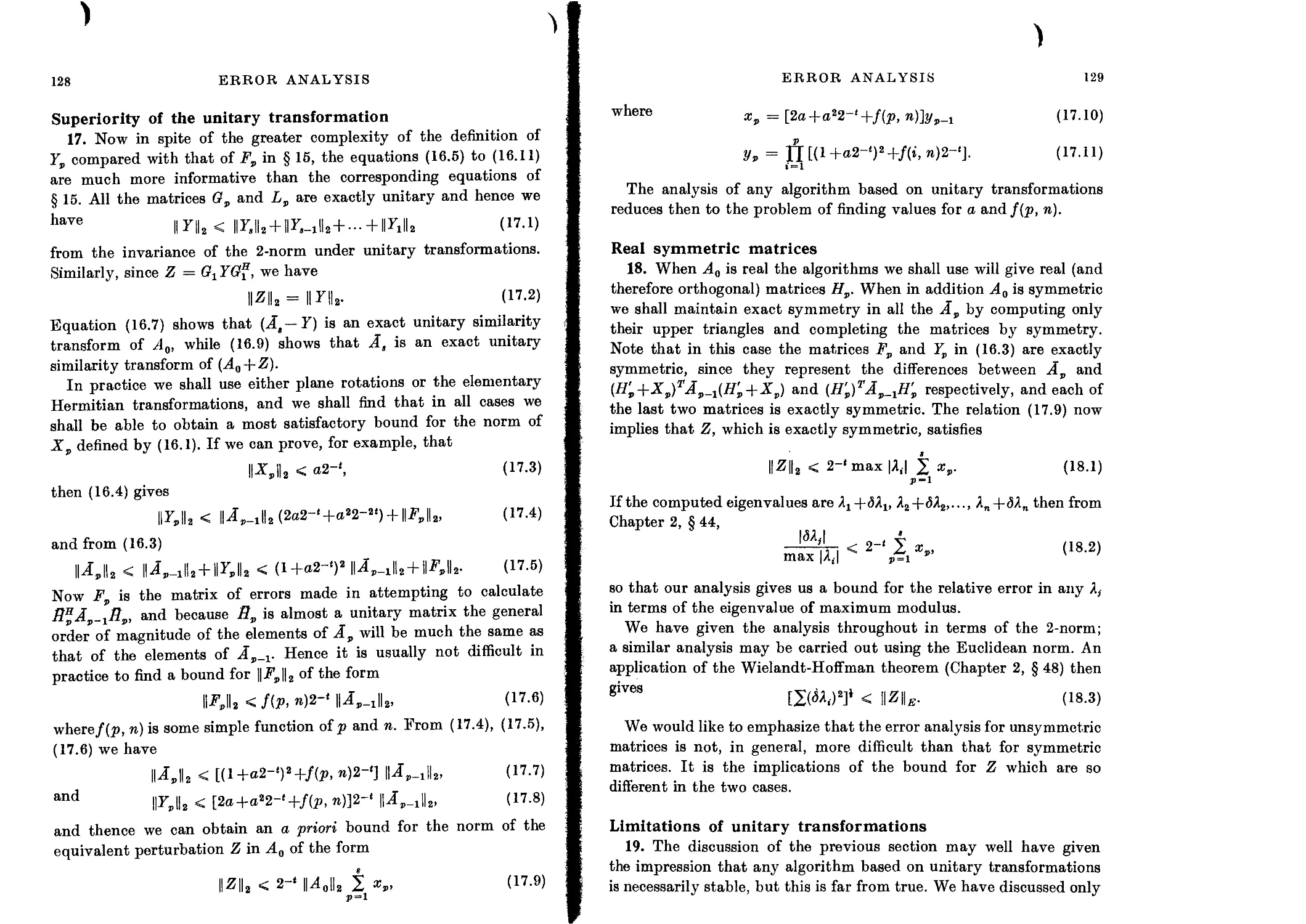

Superiority of the unitary transformation 128

Real symmetric matrices 129

Limitations of unitary transformations 129

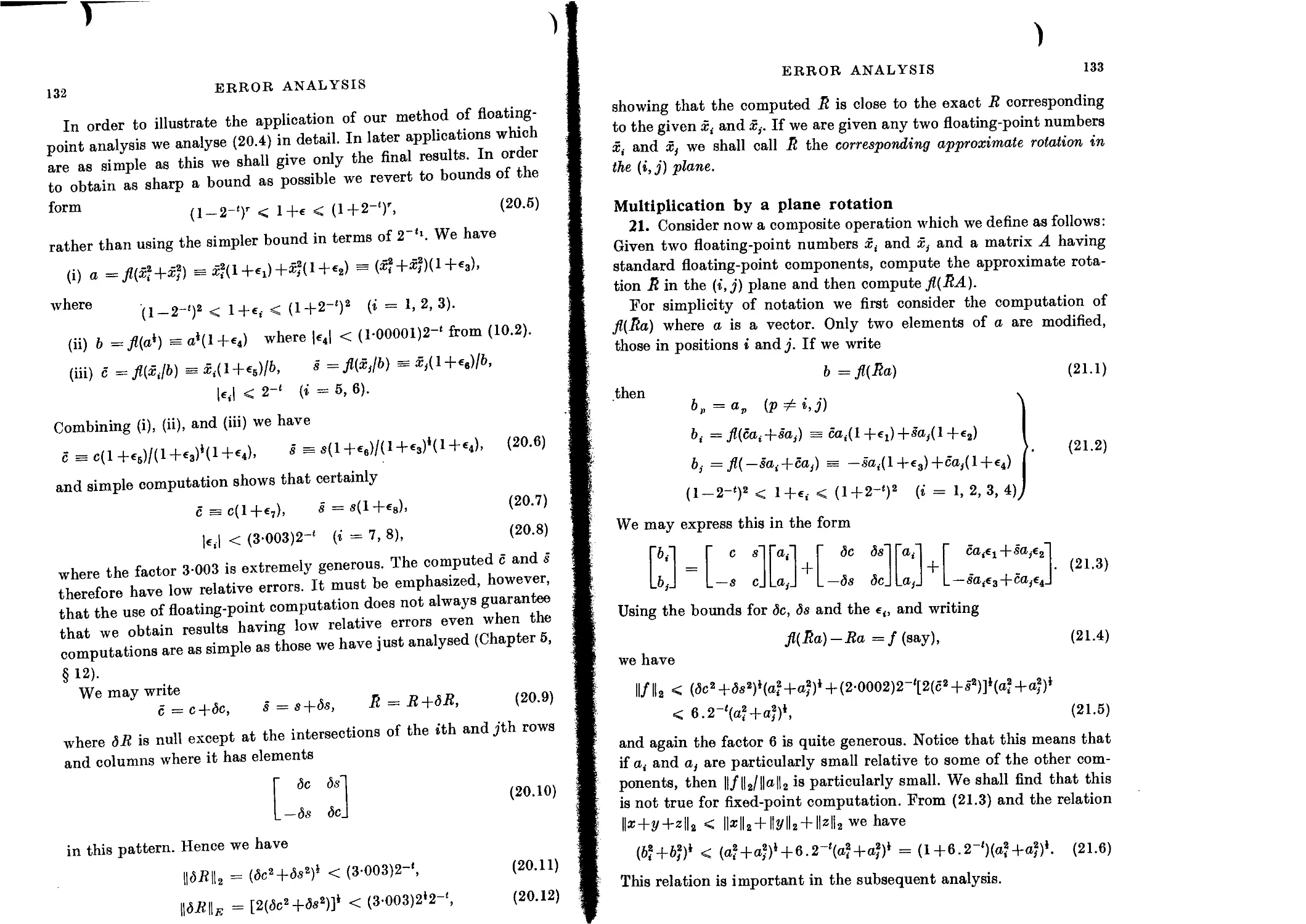

Error analysis of floating-point computation of plane rotations 131

Multiplication by a plane rotation 133

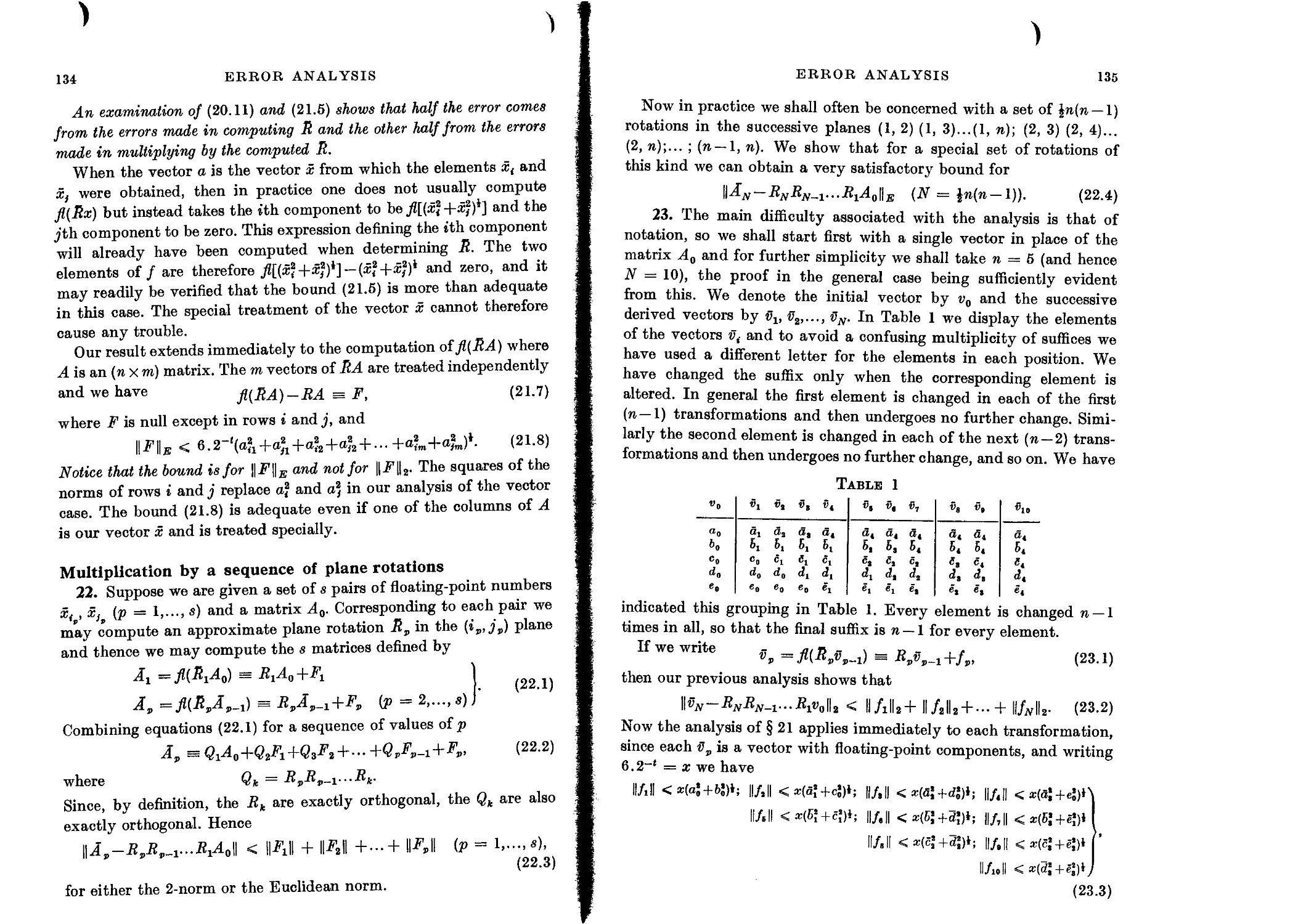

Multiplication by a sequence of plane rotations 134

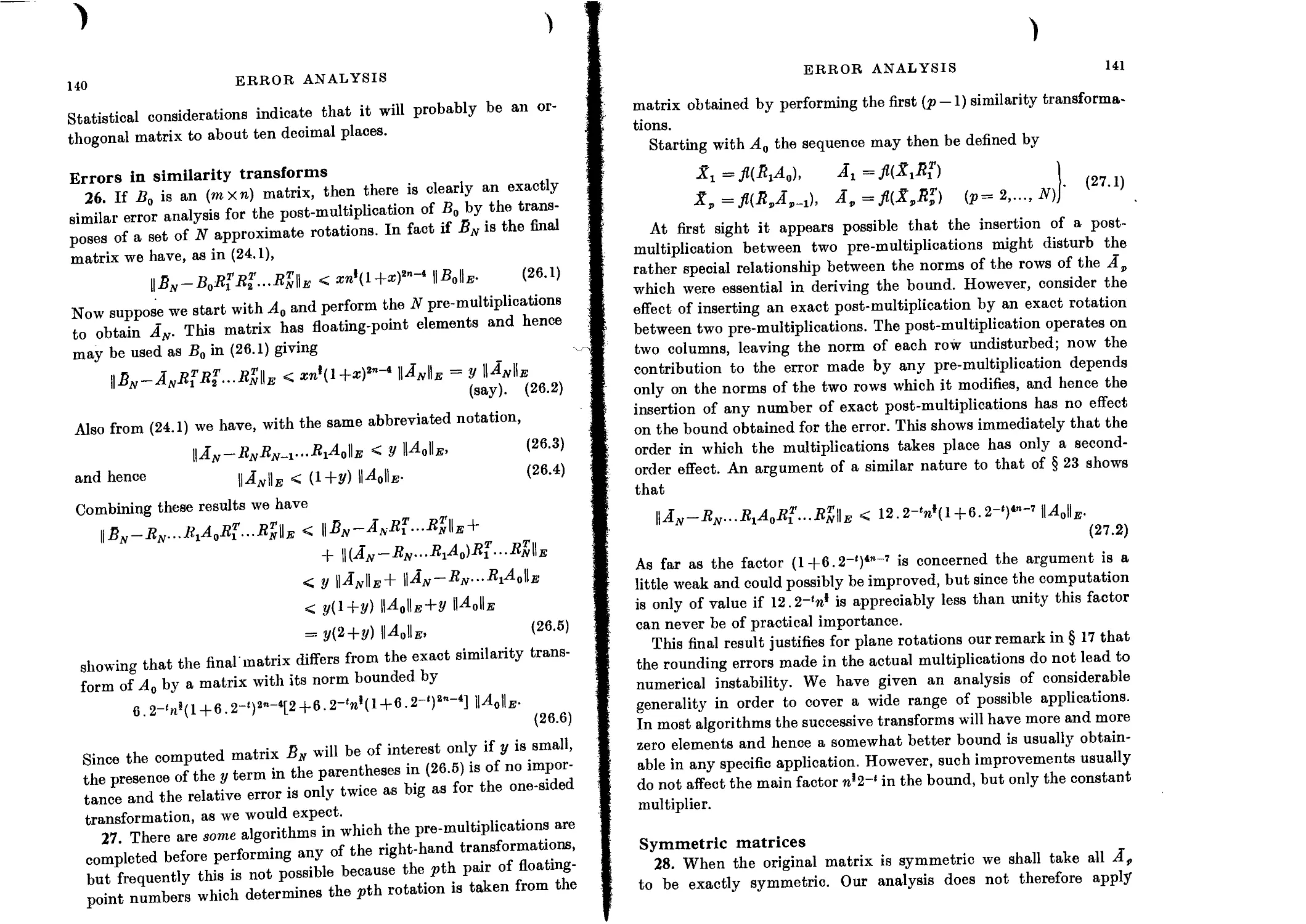

Error in product of approximate plane rotations 139

Errors in similarity transforms 140

Symmetric matrices 141

Plane rotations in fixed-point arithmetic 143

Alternative computation of sin в and cos 0 145

Pre-multiplication by an approximate fixed-point rotation 145

Multiplication by a sequence of plane rotations (fixed-point) 147

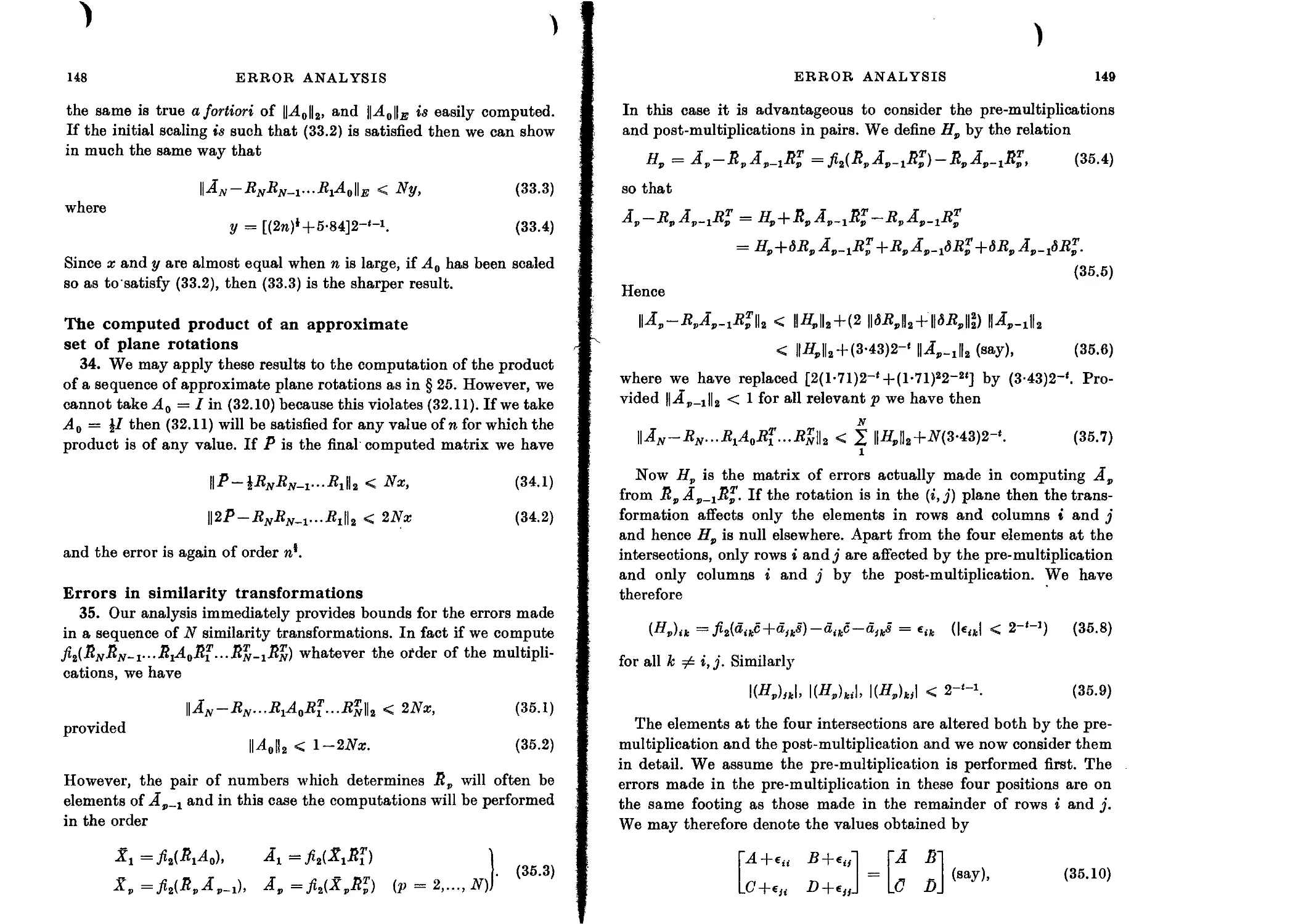

The computed product of an approximate set of plane rotations 148

Errors in similarity transformations 148

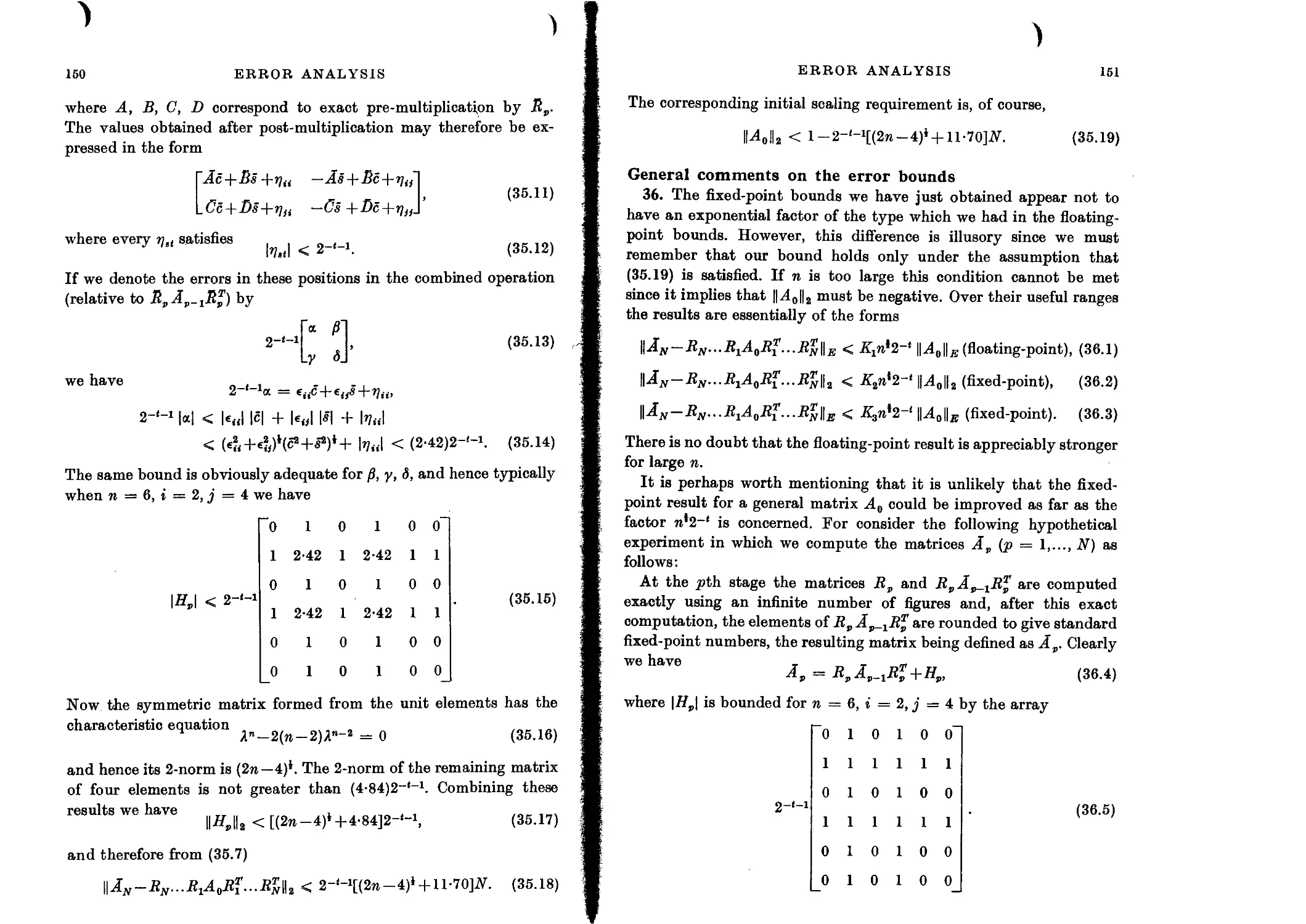

General comments on the error bounds 151

Elementary Hermitian matrices in floating-point 152

Error analysis of the computation of an elementary Hermitian matrix 153

Numerical example 156

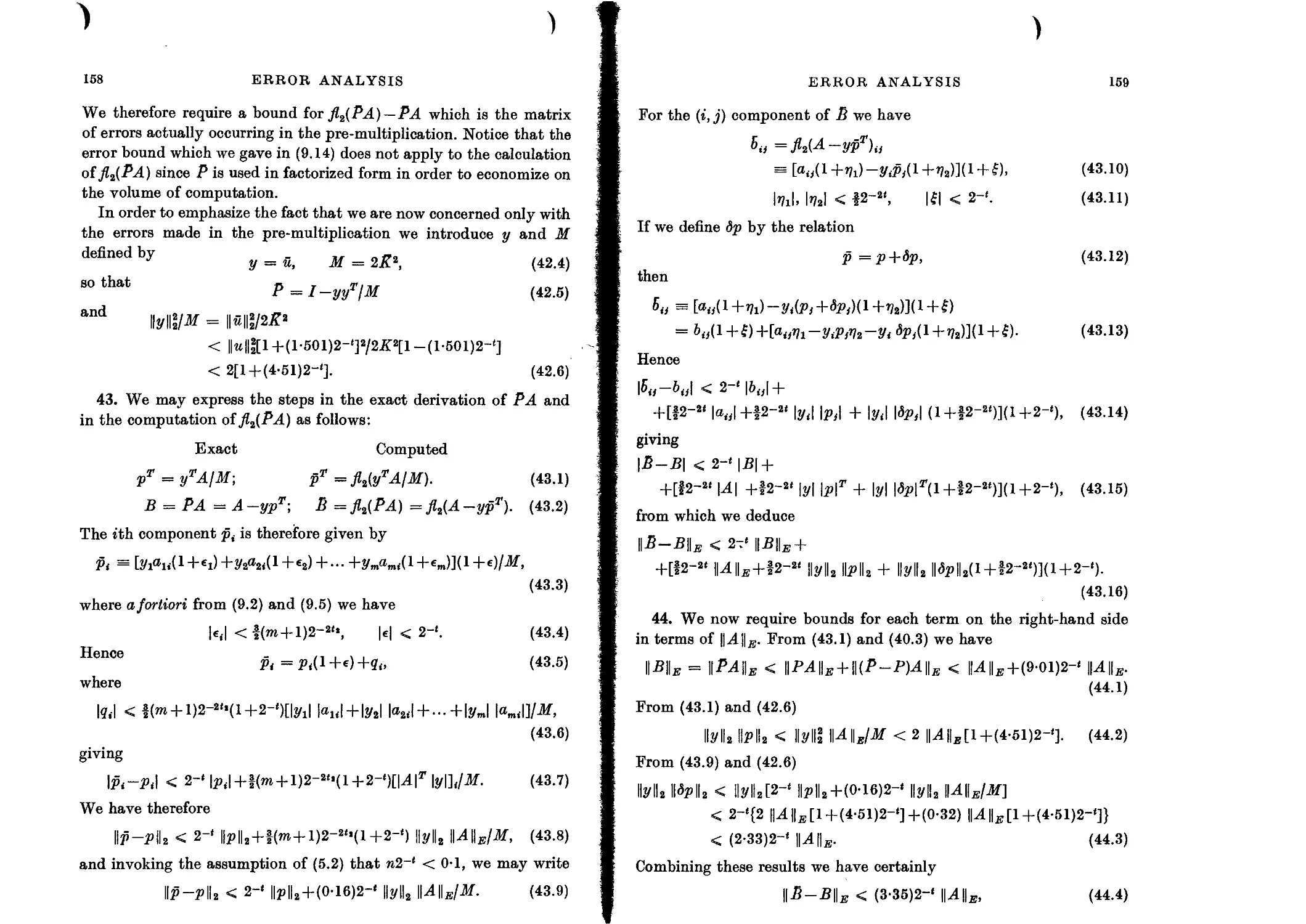

Pre-multiplication by an approximate elementary Hermitian matrix 157

Multiplication by a sequence of approximate elementary Hermitians 160

Non-unitary elementary matrices analogous to plane rotations 162

Non-unitary elementary matrices analogous to elementary Hermitian

matrices 163

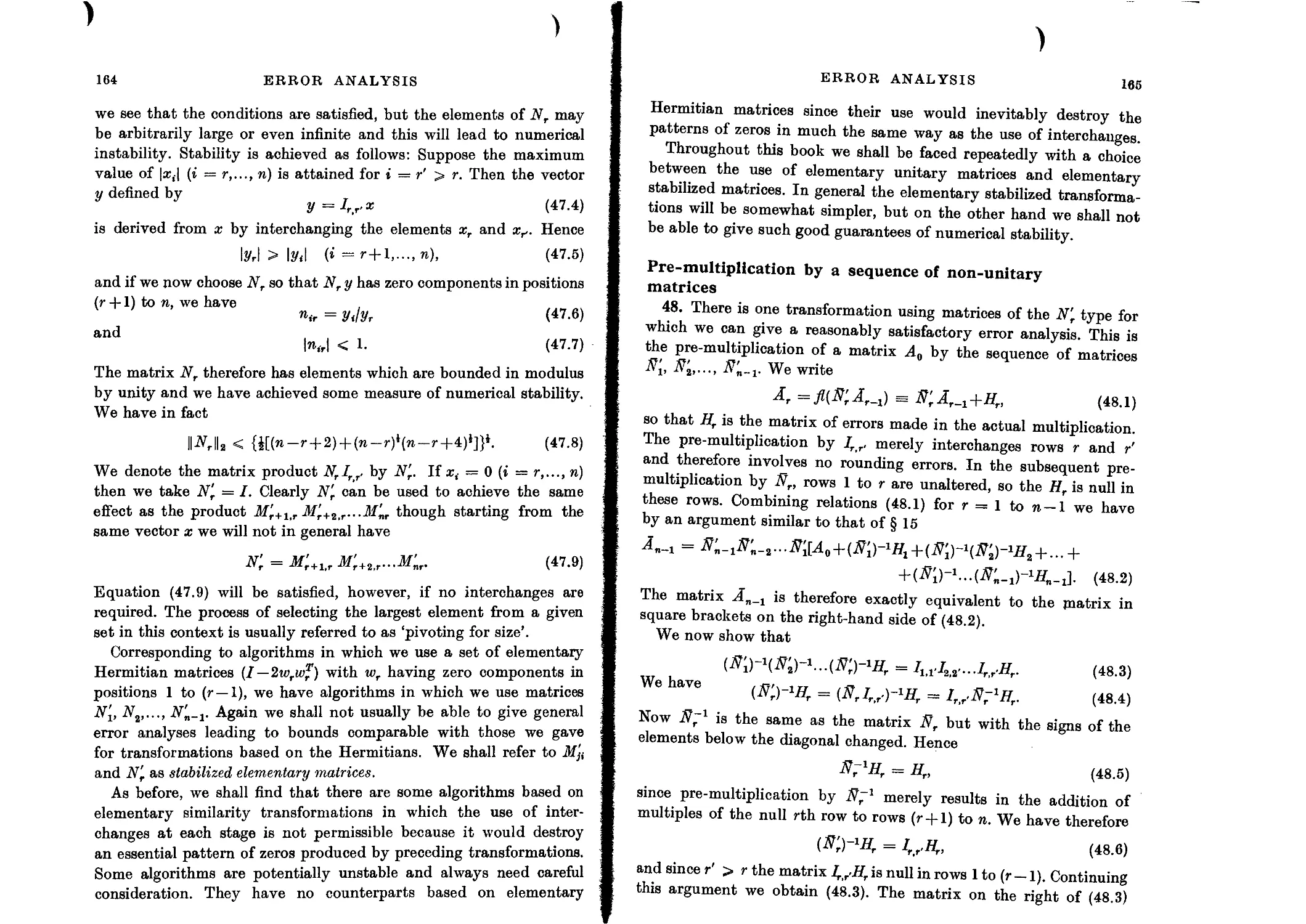

Pre-multiplication by a sequence of non-unitary matrices 165

A priori error bounds 166

Departure from normality 167

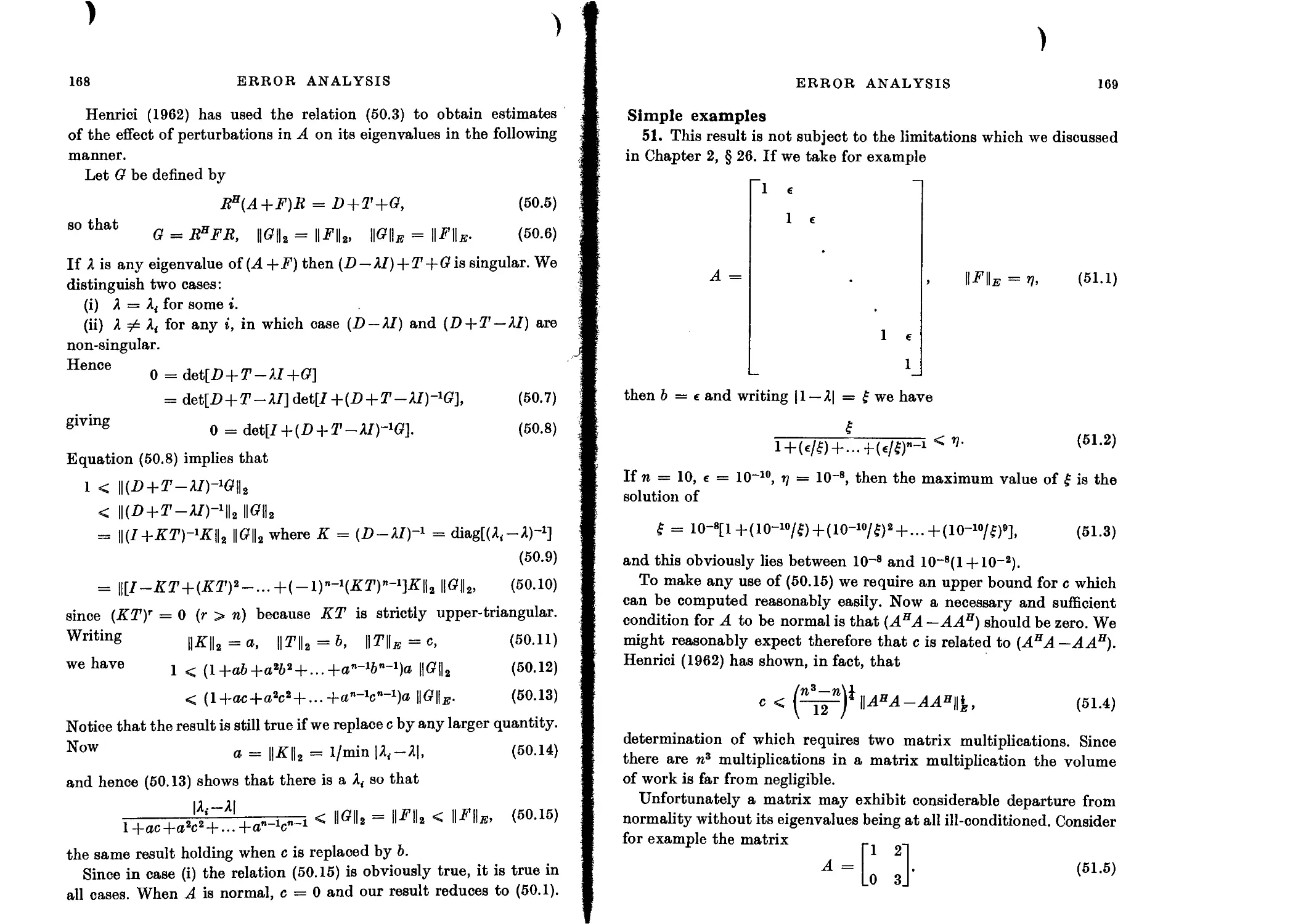

Simple examples 169

A posteriori bounds 170

A posteriori bounds for normal matrices 170

XU CONTENTS

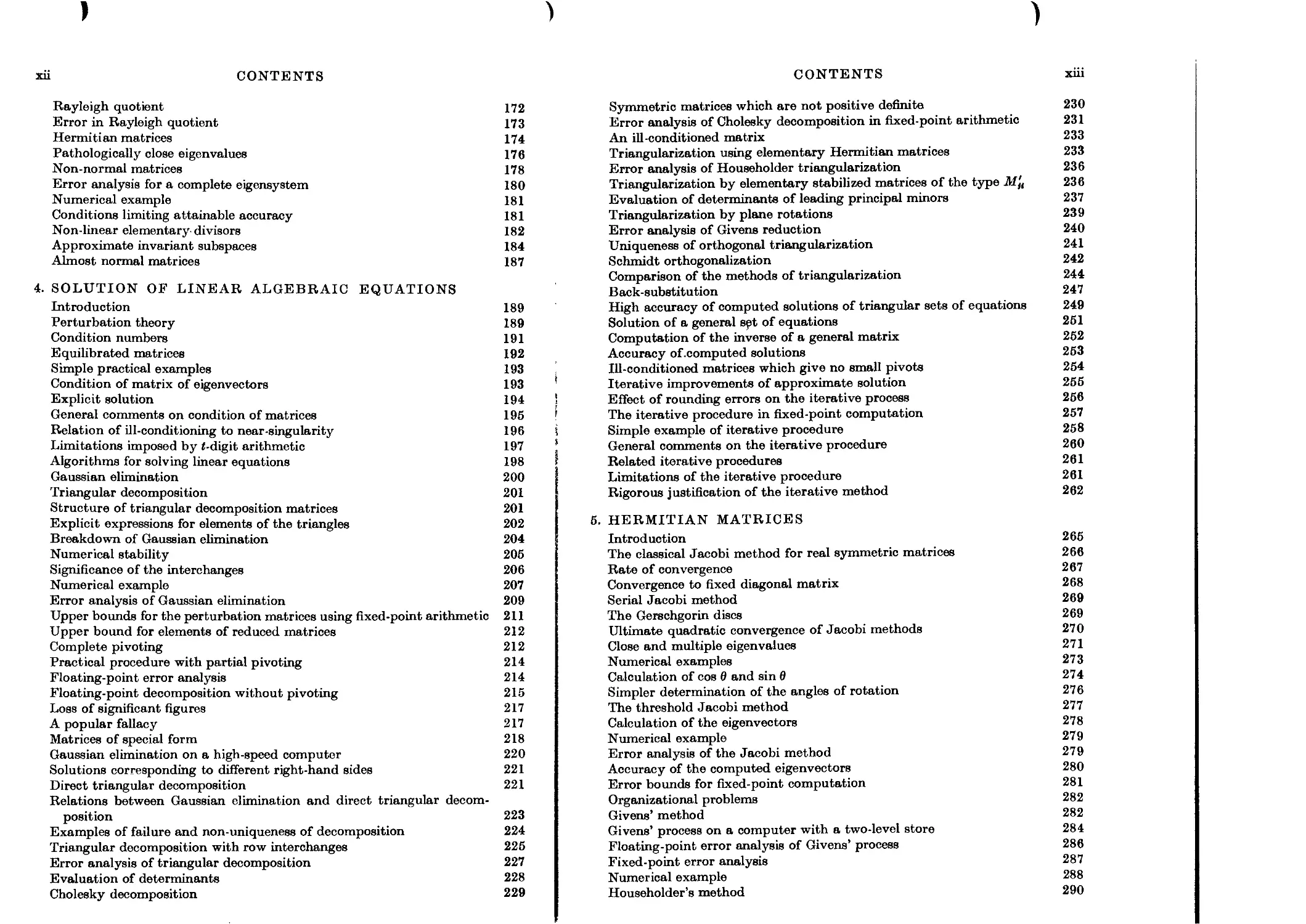

Rayleigh quotient 172

Error in Rayleigh quotient 173

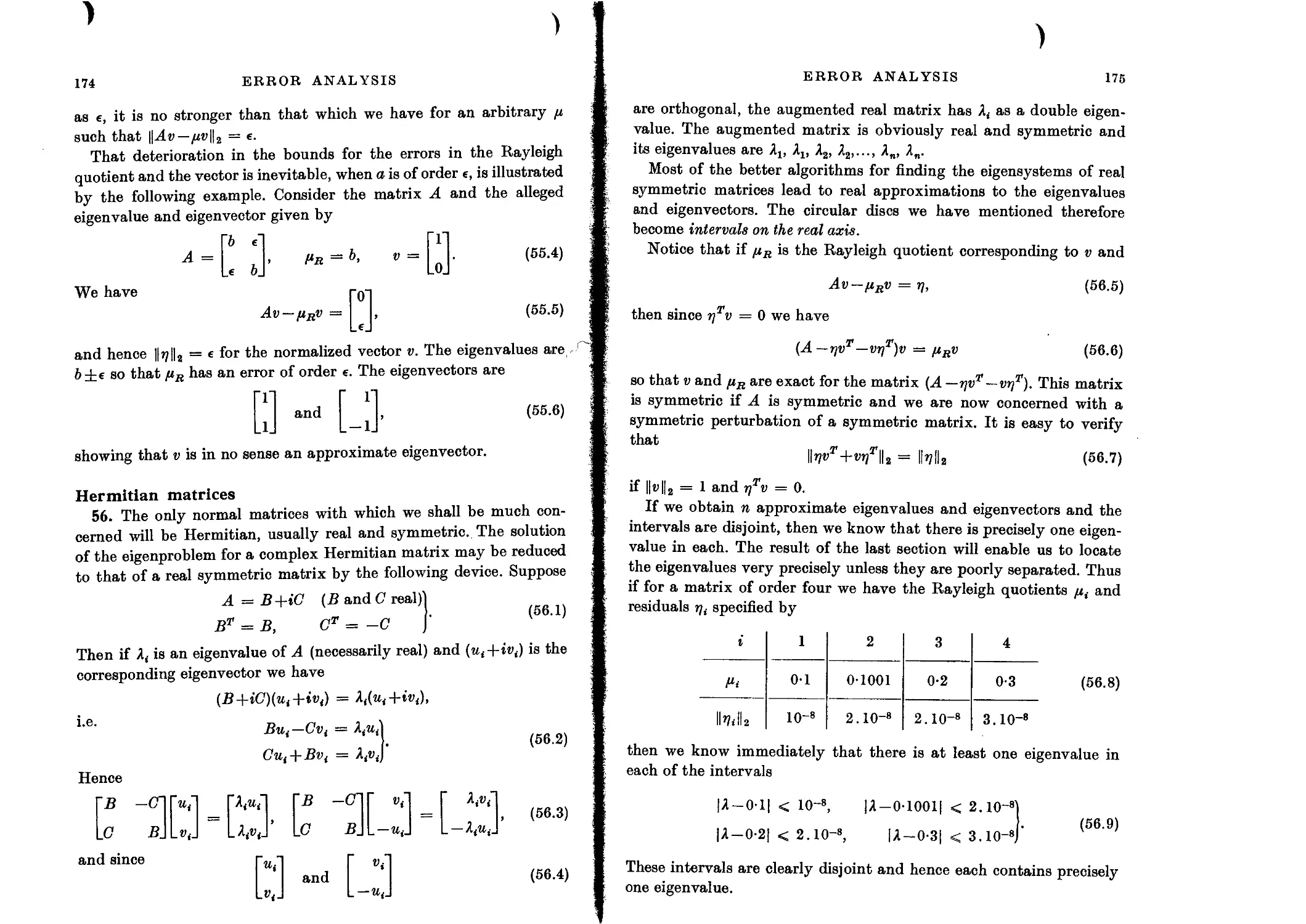

Hermitian matrices 174

Pathologically close eigenvalues 176

Non-normal matrices 178

Error analysis for a complete eigensystem 180

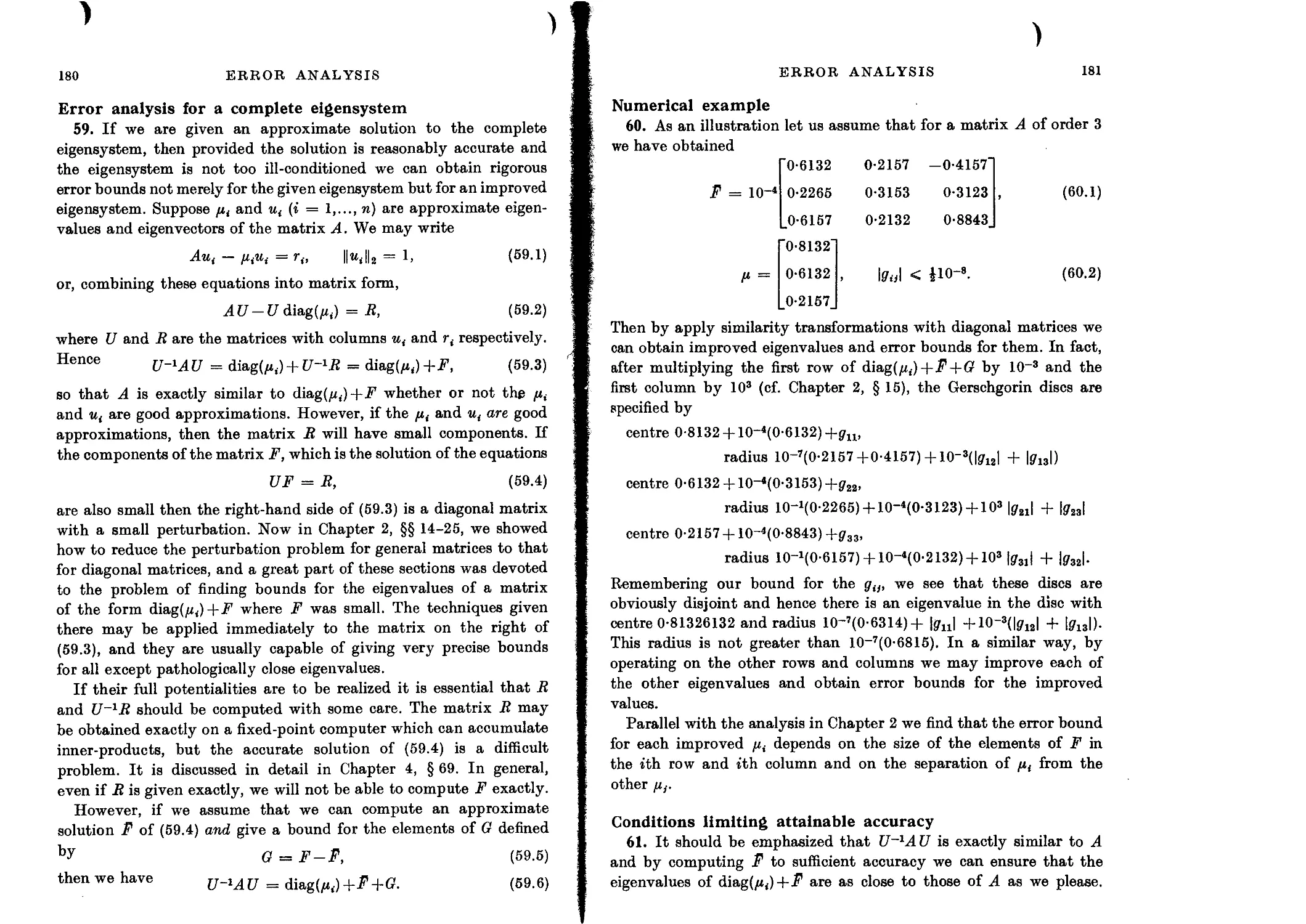

Numerical example 181

Conditions limiting attainable accuracy 181

Non-linear elementary divisors 182

Approximate invariant subspaces 184

Almost normal matrices 187

4. SOLUTION OF LINEAR ALGEBRAIC EQUATIONS

Introduction 189

Perturbation theory 189

Condition numbers 191

Equilibrated matrices 192

Simple practical examples 193

Condition of matrix of eigenvectors 193

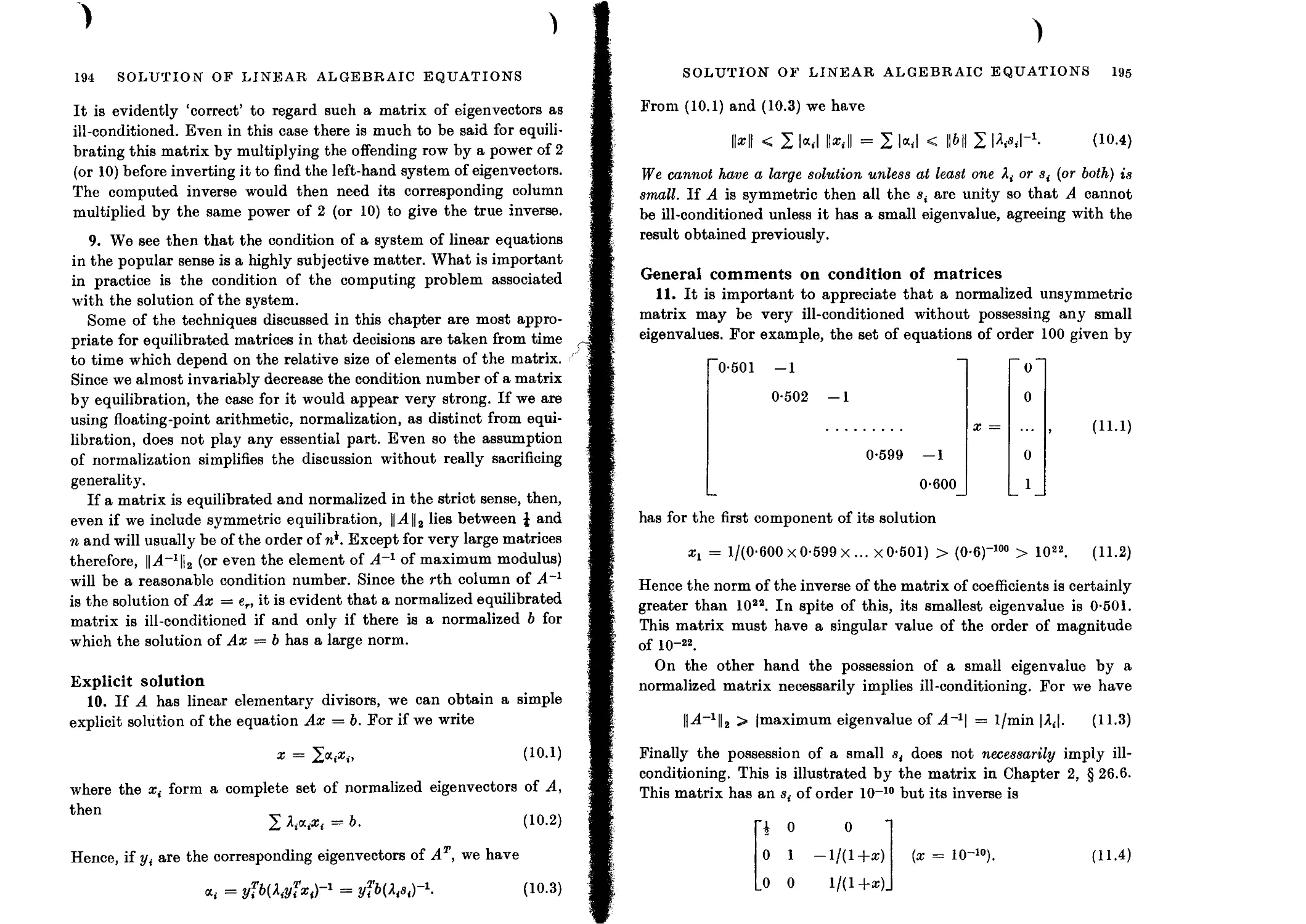

Explicit solution 194

General comments on condition of matrices 195

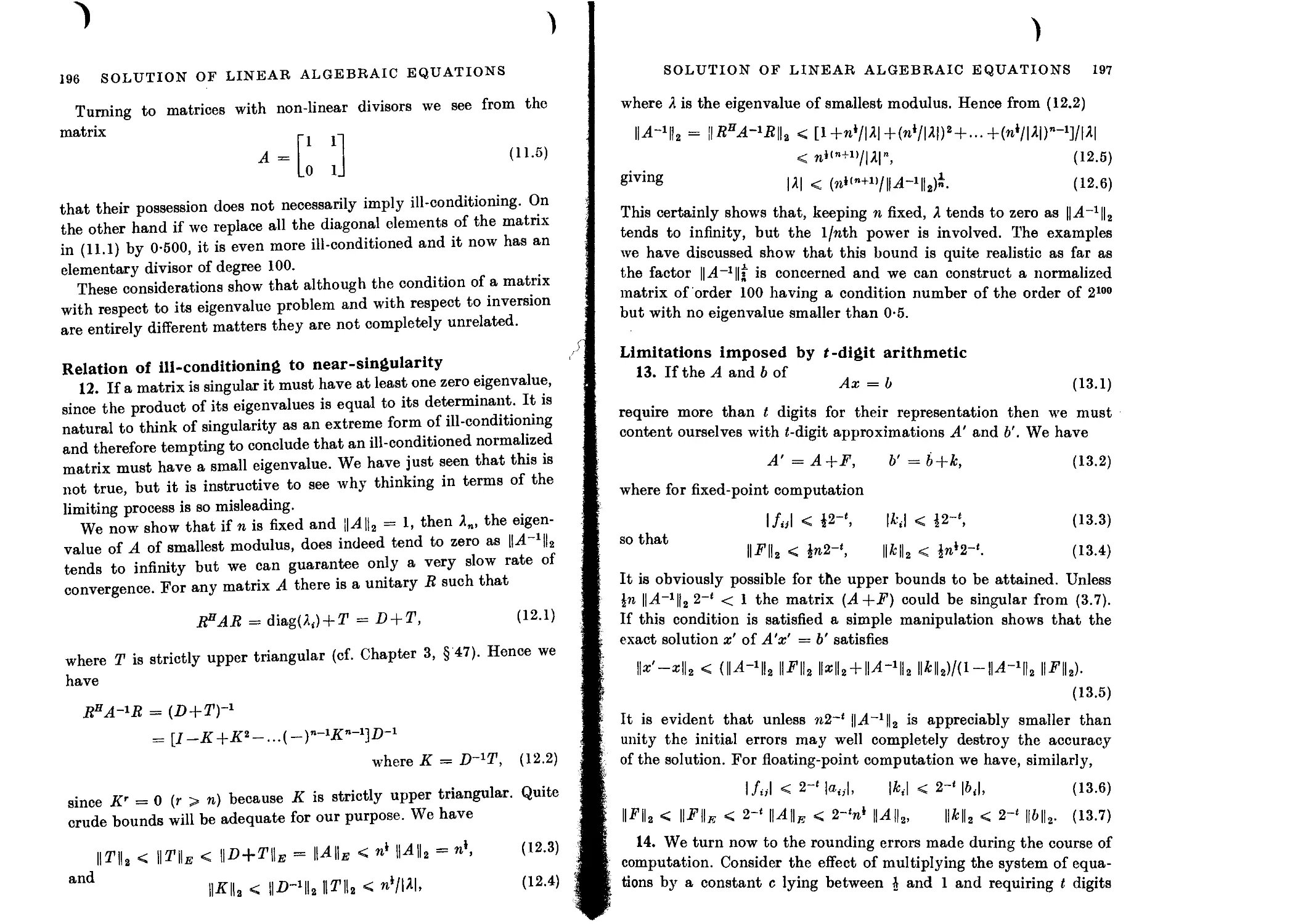

Relation of ill-conditioning to near-singularity 196

Limitations imposed by /-digit arithmetic 197

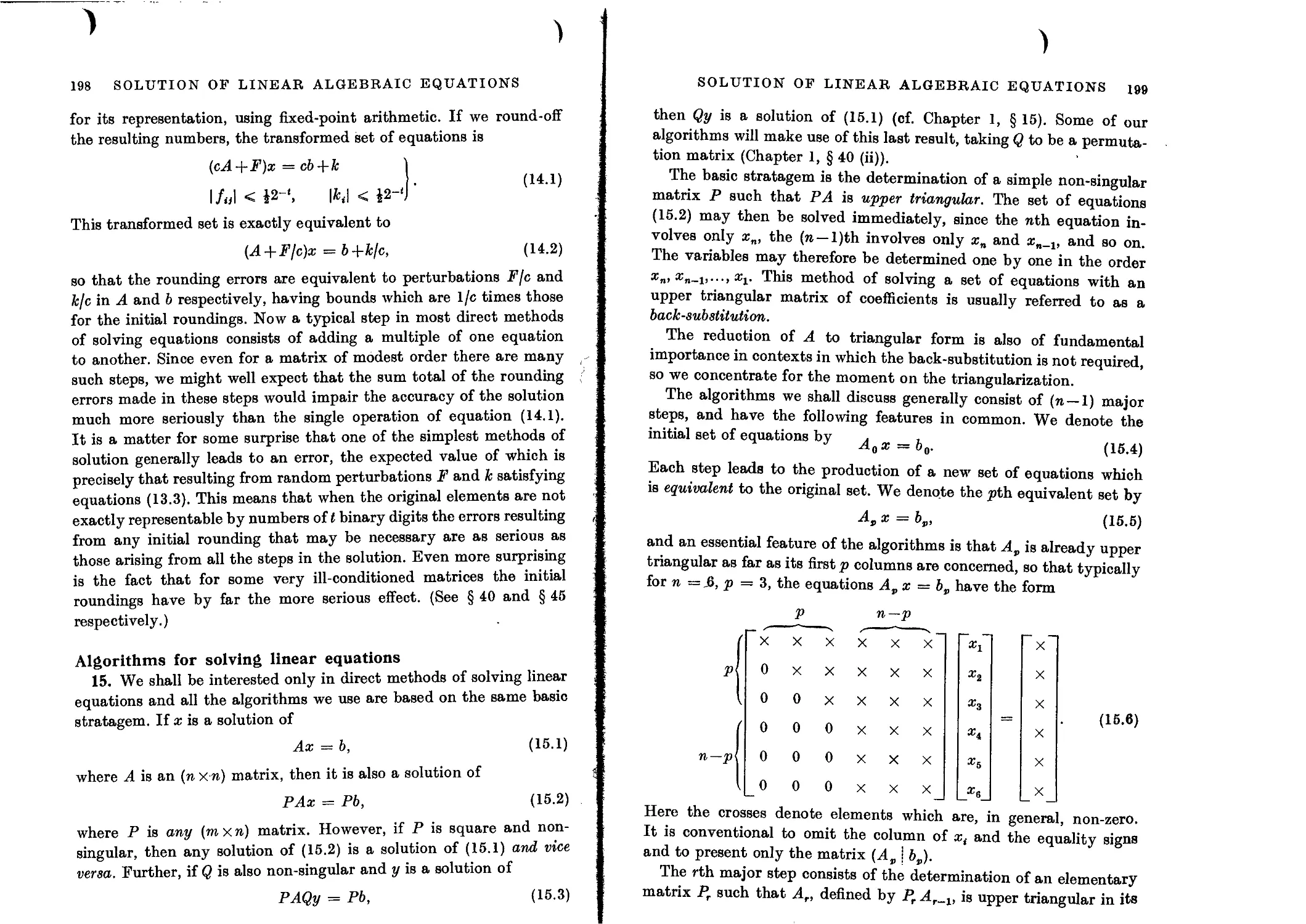

Algorithms for solving linear equations 198

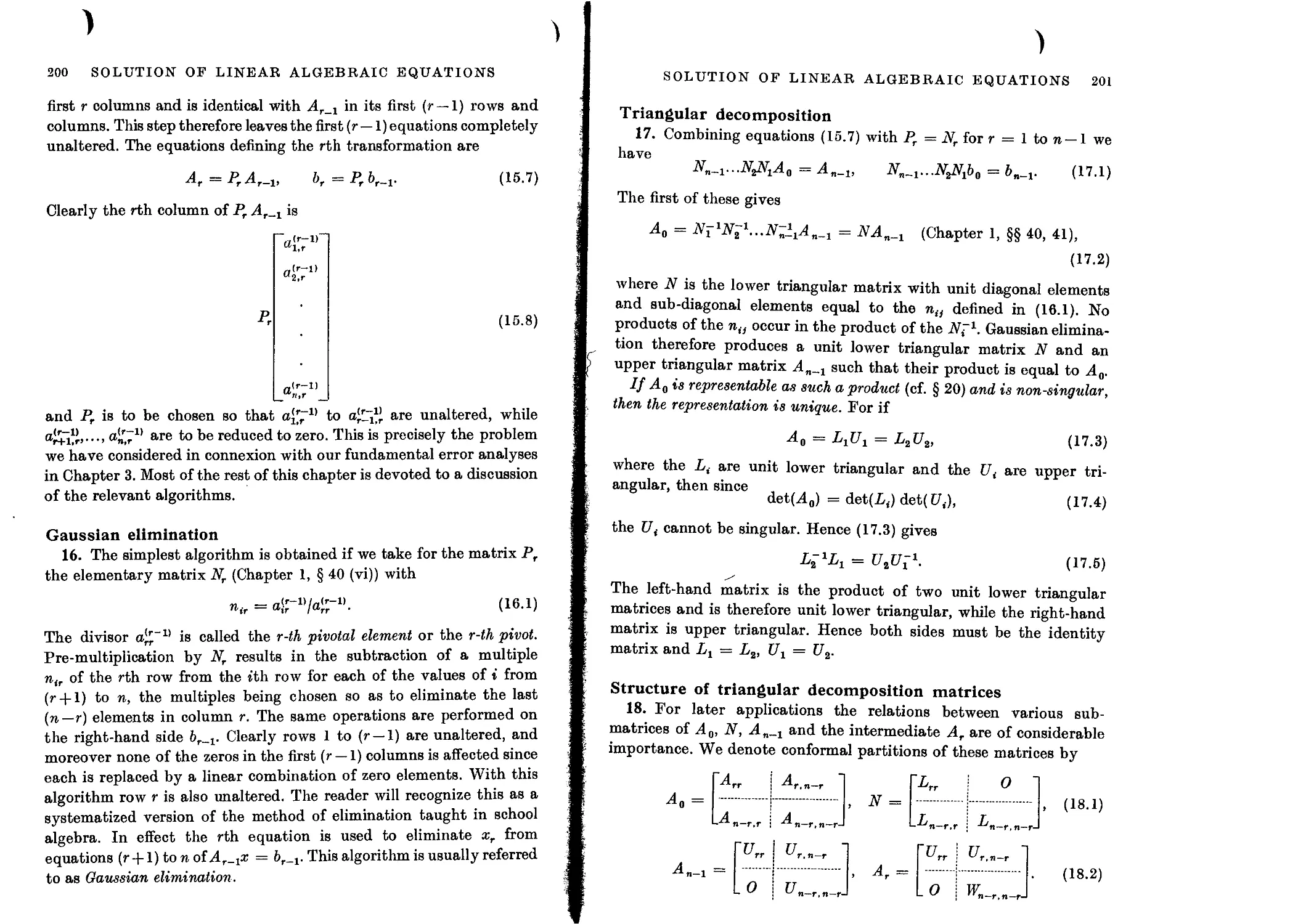

Gaussian elimination 200

Triangular decomposition 201

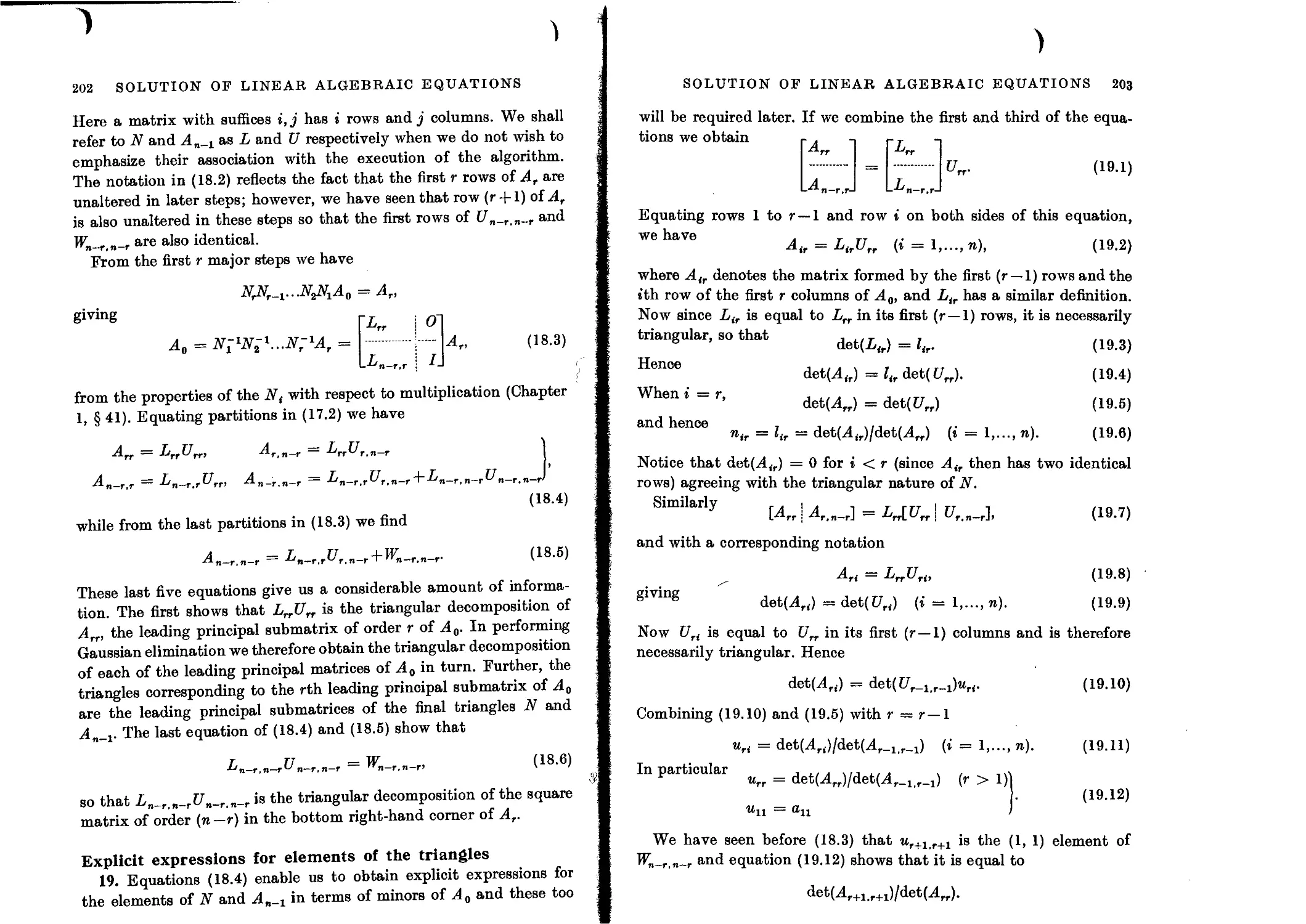

Structure of triangular decomposition matrices 201

Explicit expressions for elements of the triangles 202

Breakdown of Gaussian elimination 204

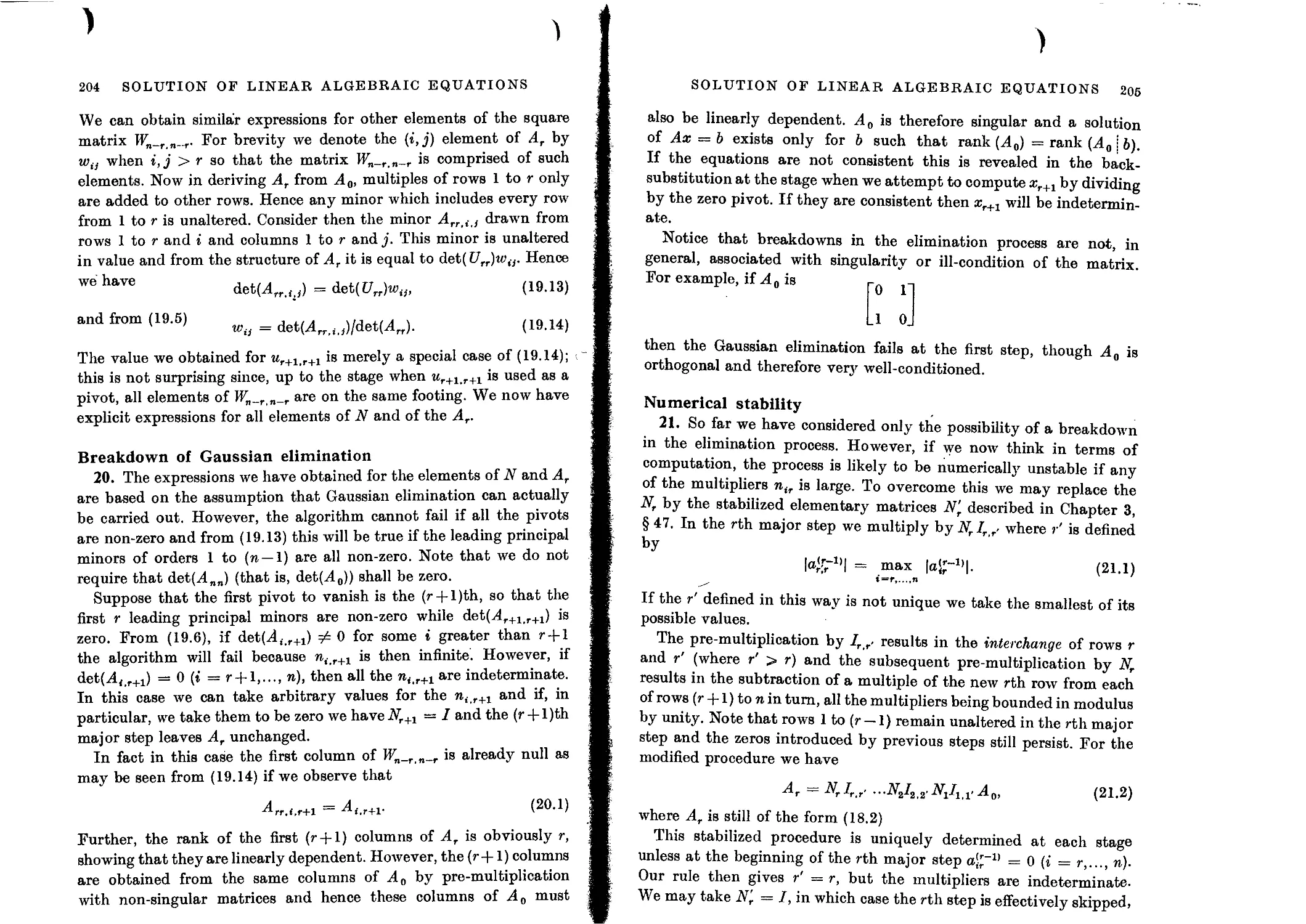

Numerical stability 205

Significance of the interchanges 206

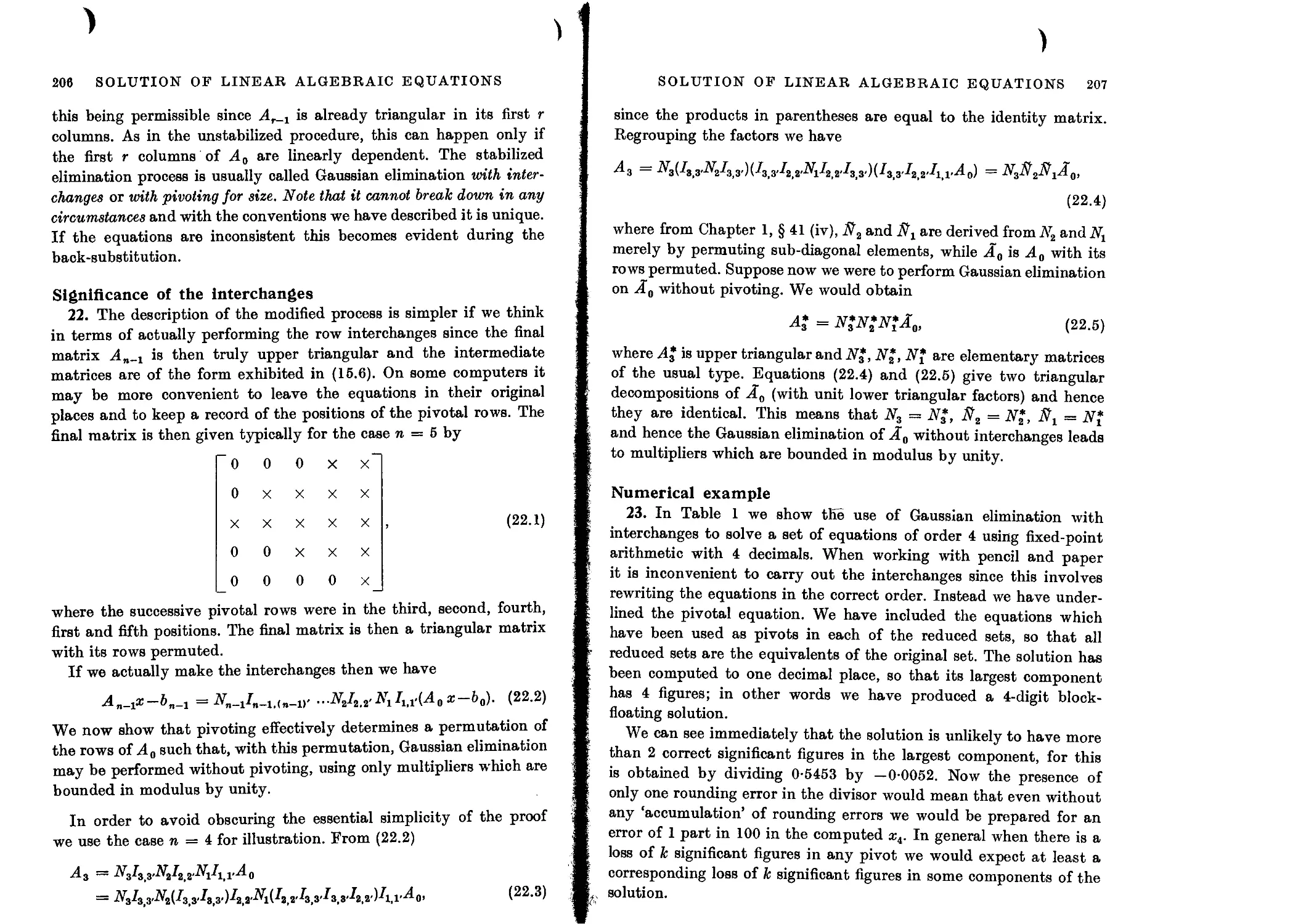

Numerical example 207

Error analysis of Gaussian elimination 209

Upper bounds for the perturbation matrices using fixed-point arithmetic 211

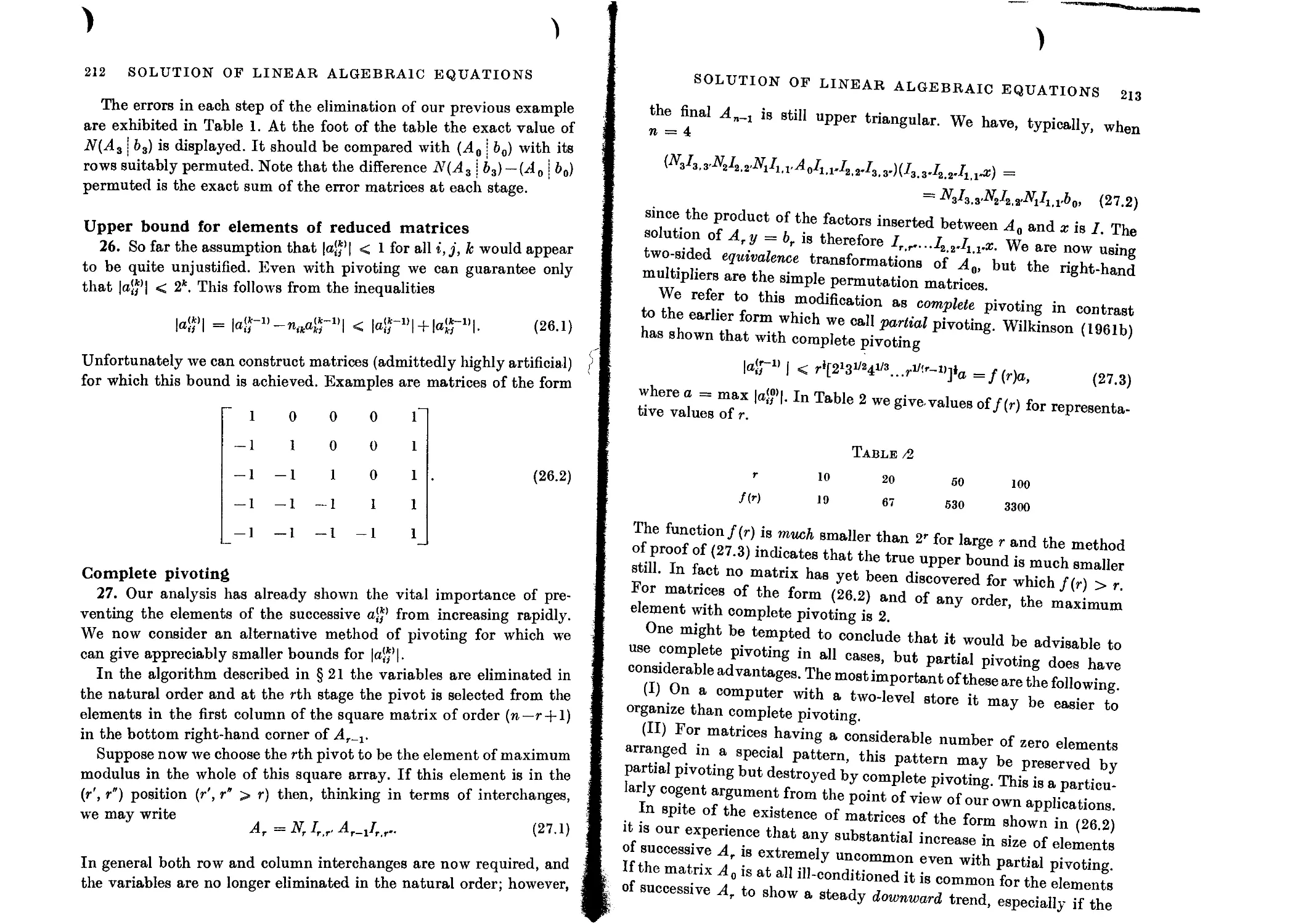

Upper bound for elements of reduced matrices 212

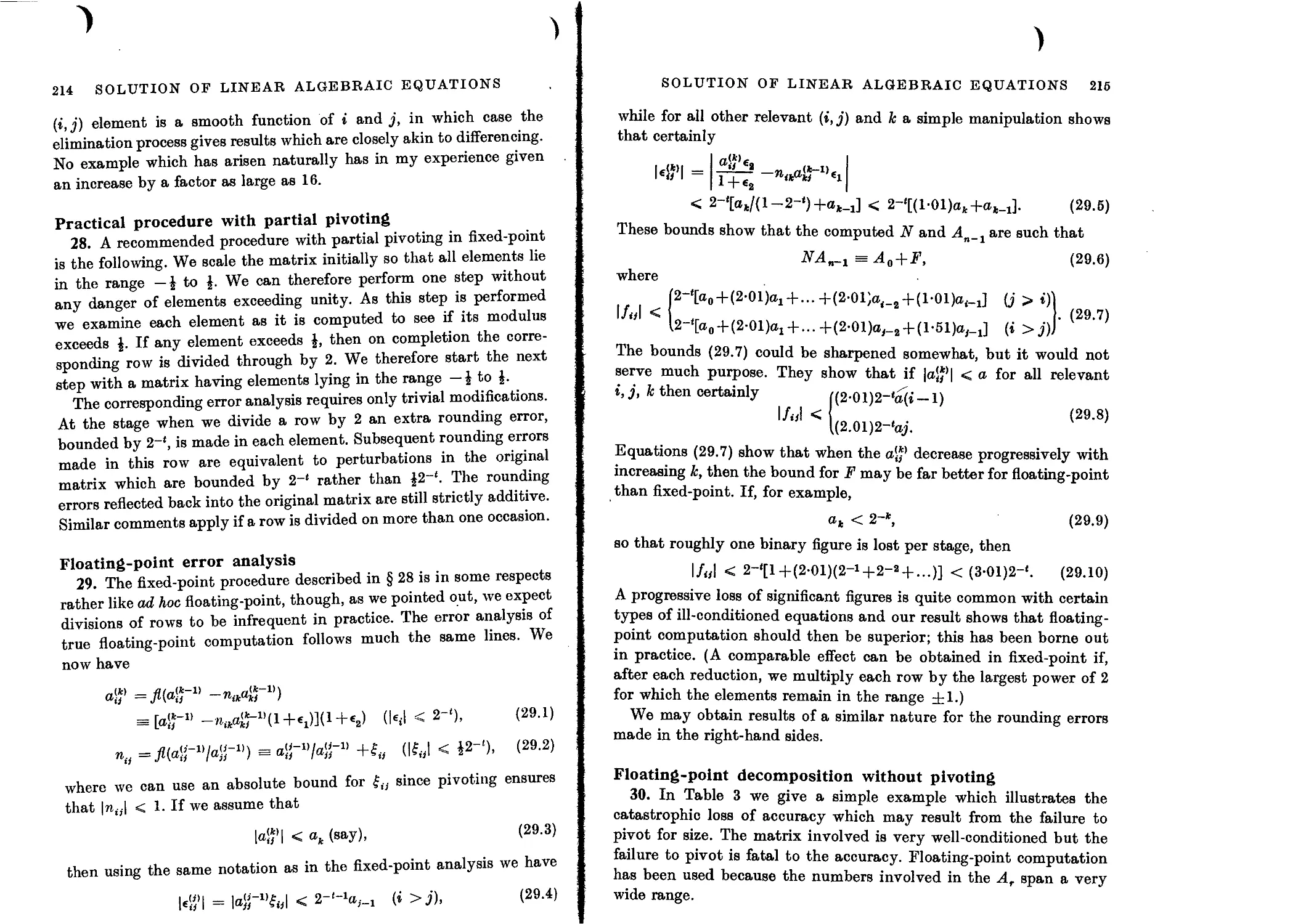

Complete pivoting 212

Practical procedure with partial pivoting 214

Floating-point error analysis 214

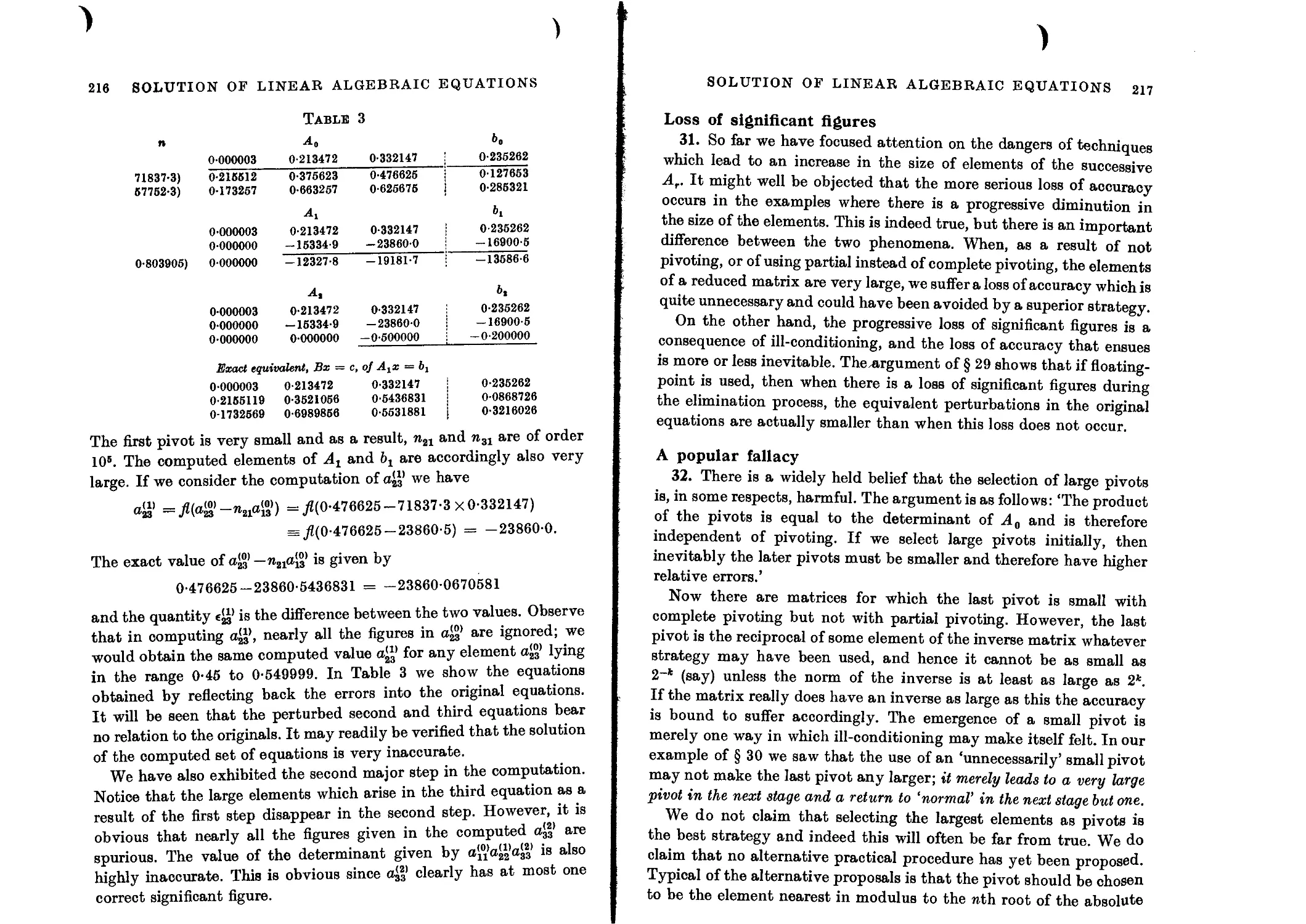

Floating-point decomposition without pivoting 215

Loss of significant figures 217

A popular fallacy 217

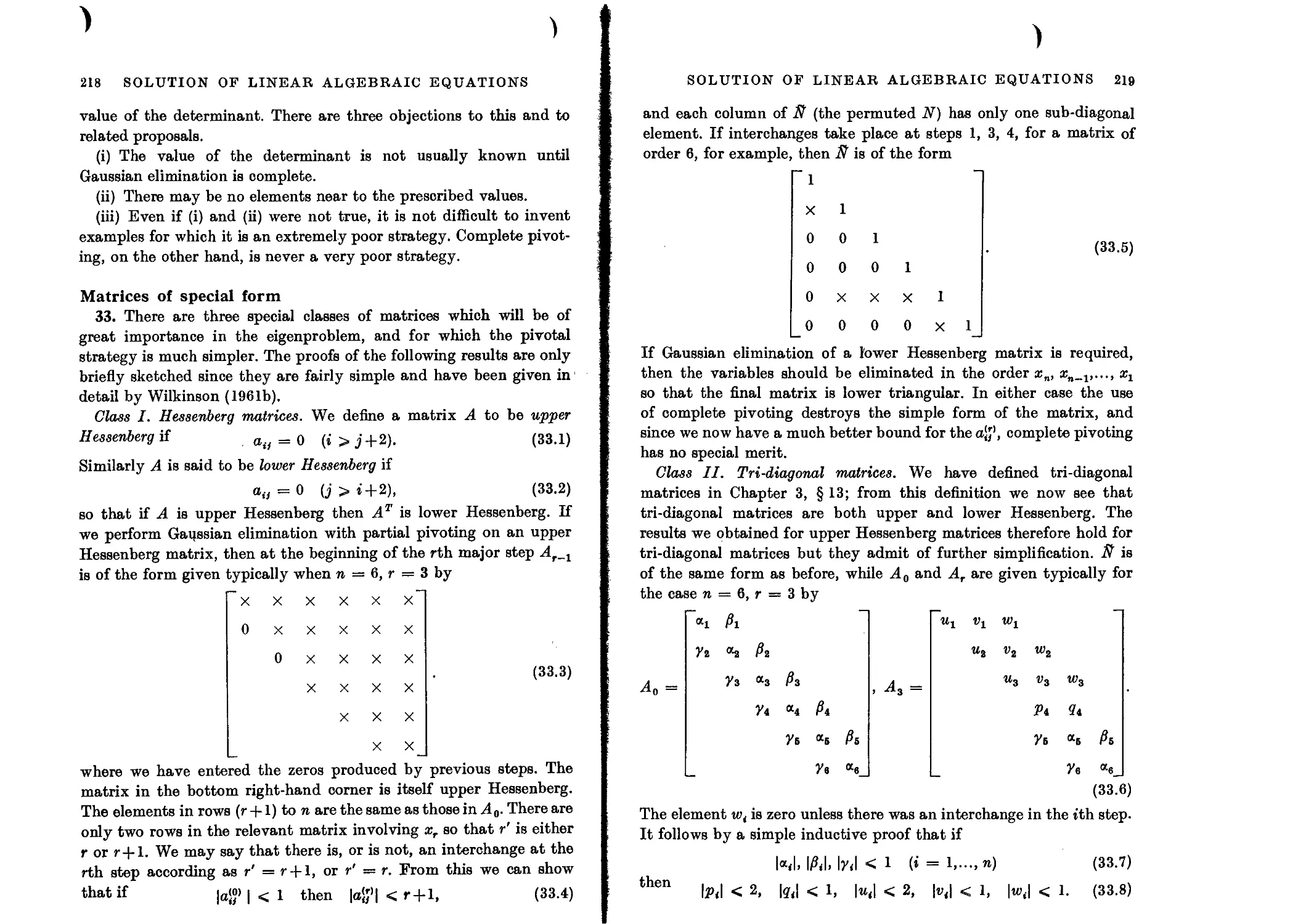

Matrices of special form 218

Gaussian elimination on a high-speed computer 220

Solutions corresponding to different right-hand sides 221

Direct triangular decomposition 221

Relations between Gaussian elimination and direct triangular decom-

position 223

Examples of failure and non-uniqueness of decomposition 224

Triangular decomposition with row interchanges 225

Error analysis of triangular decomposition 227

Evaluation of determinants 228

Cholesky decomposition 229

CONTENTS xiii

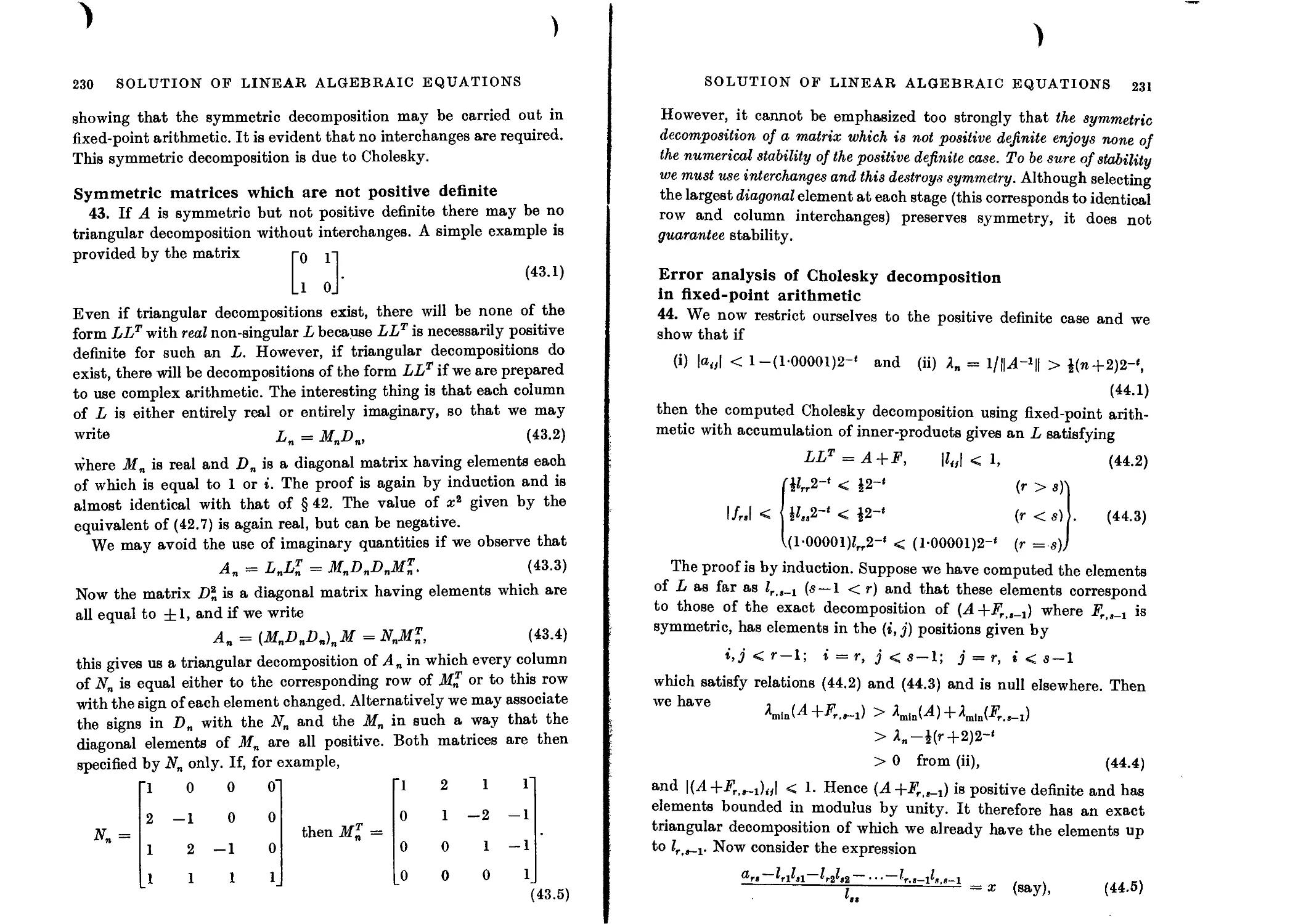

Symmetric matrices which are not positive definite 230

Error analysis of Cholesky decomposition in fixed-point arithmetic 231

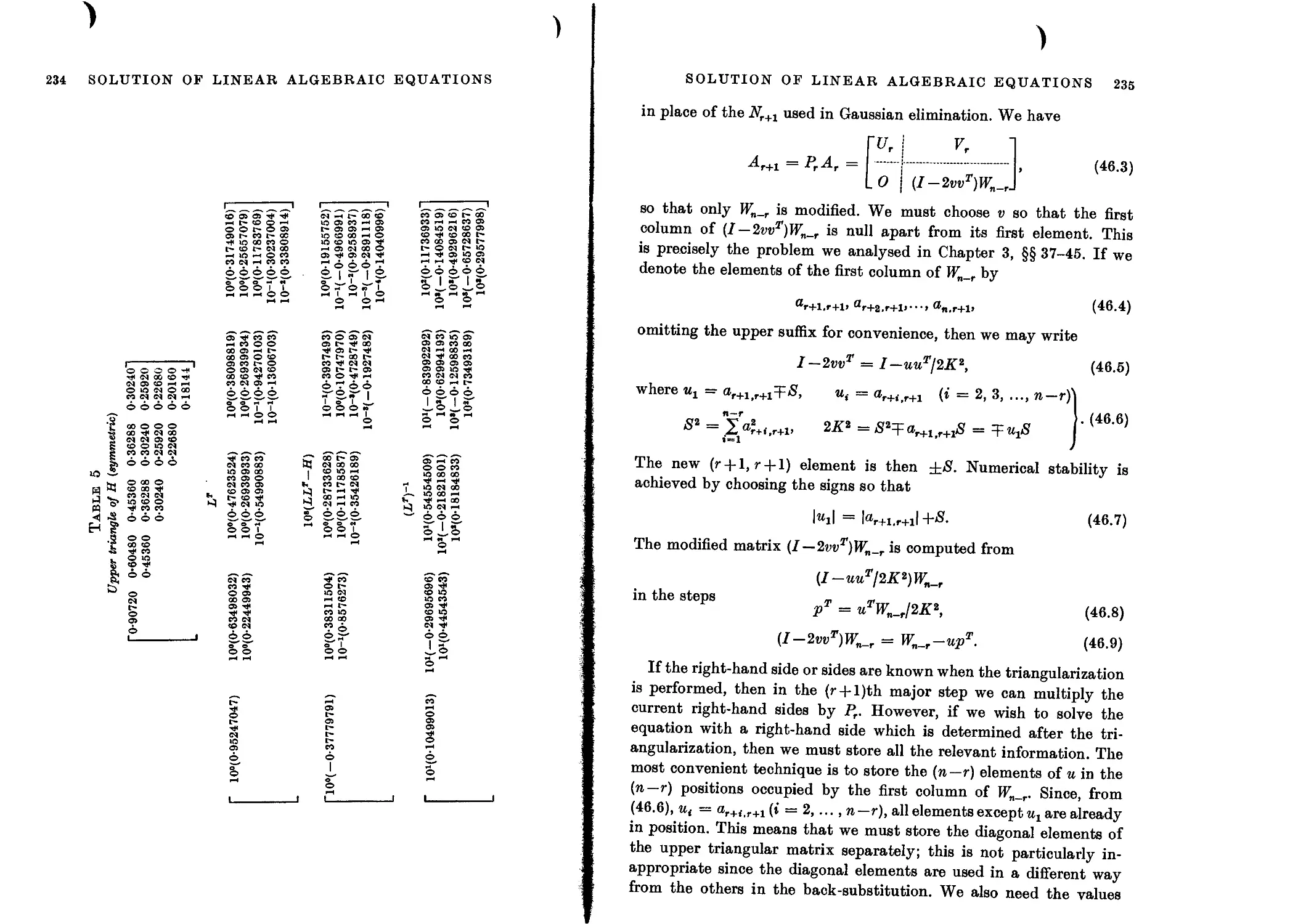

An ill-conditioned matrix 233

Triangularization using elementary Hermitian matrices 233

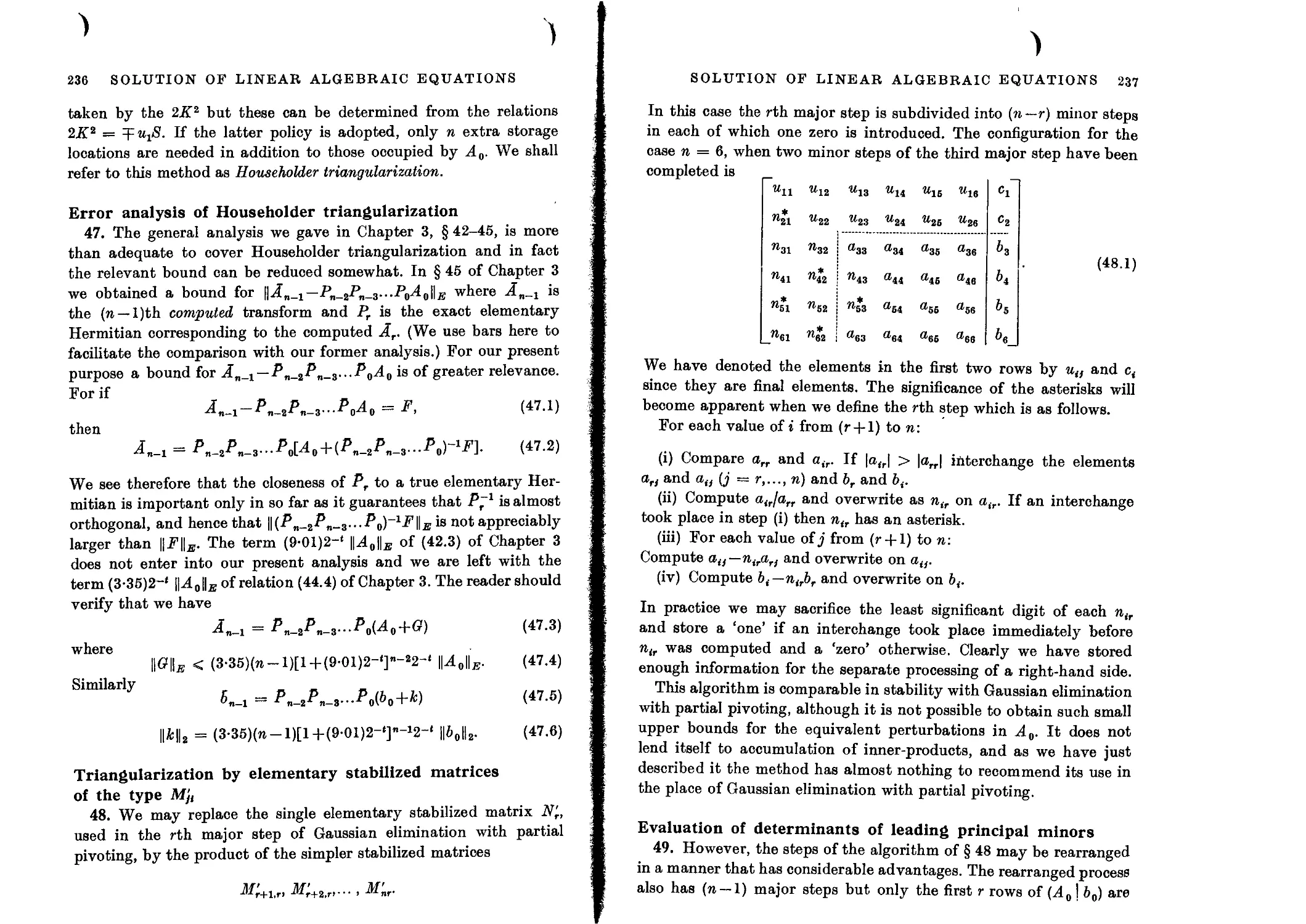

Error analysis of Householder triangularization 236

Triangularization by elementary stabilized matrices of the type M'u 236

Evaluation of determinants of leading principal minors 237

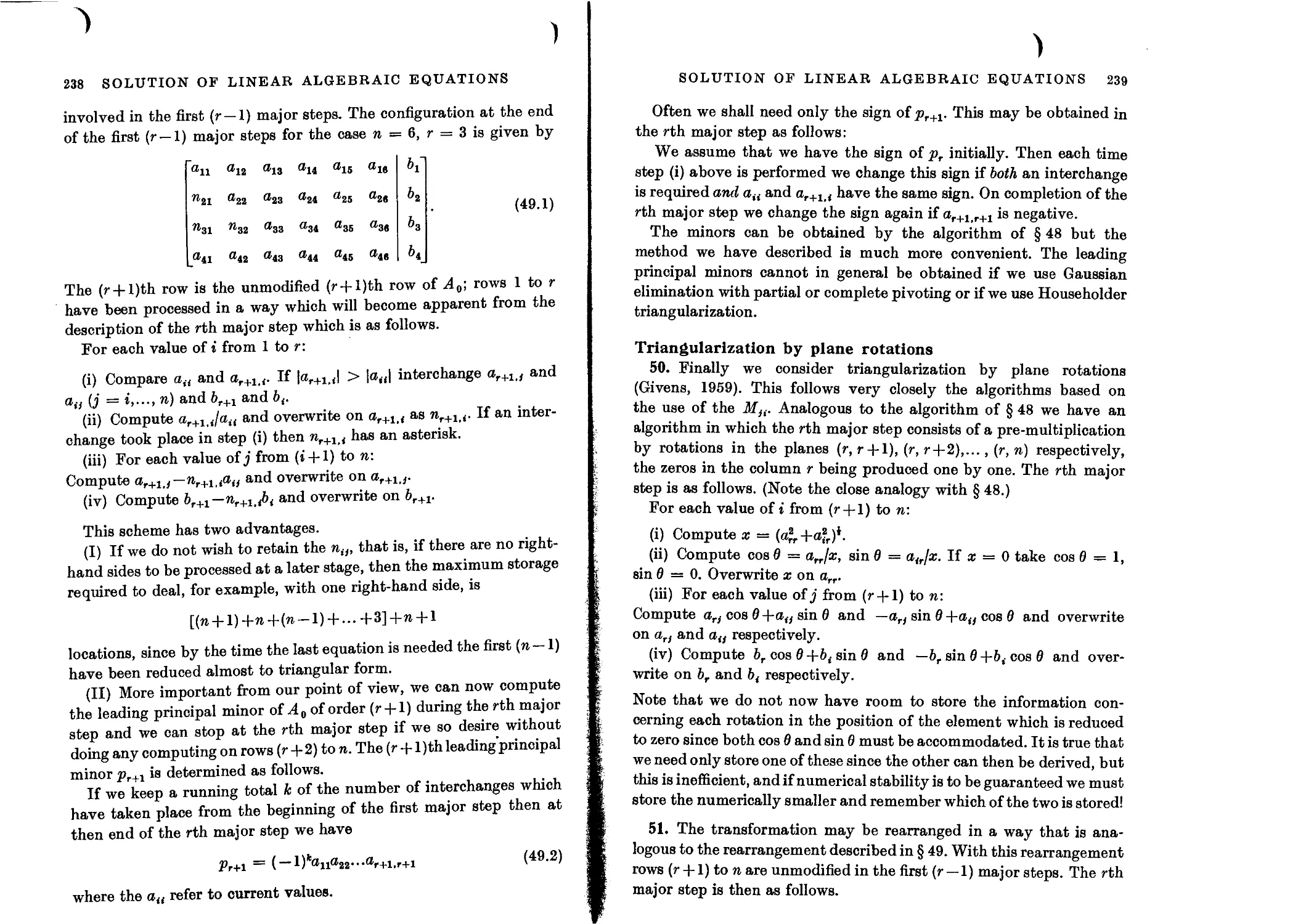

Triangularization by plane rotations 239

Error analysis of Givens reduction 240

Uniqueness of orthogonal triangularization 241

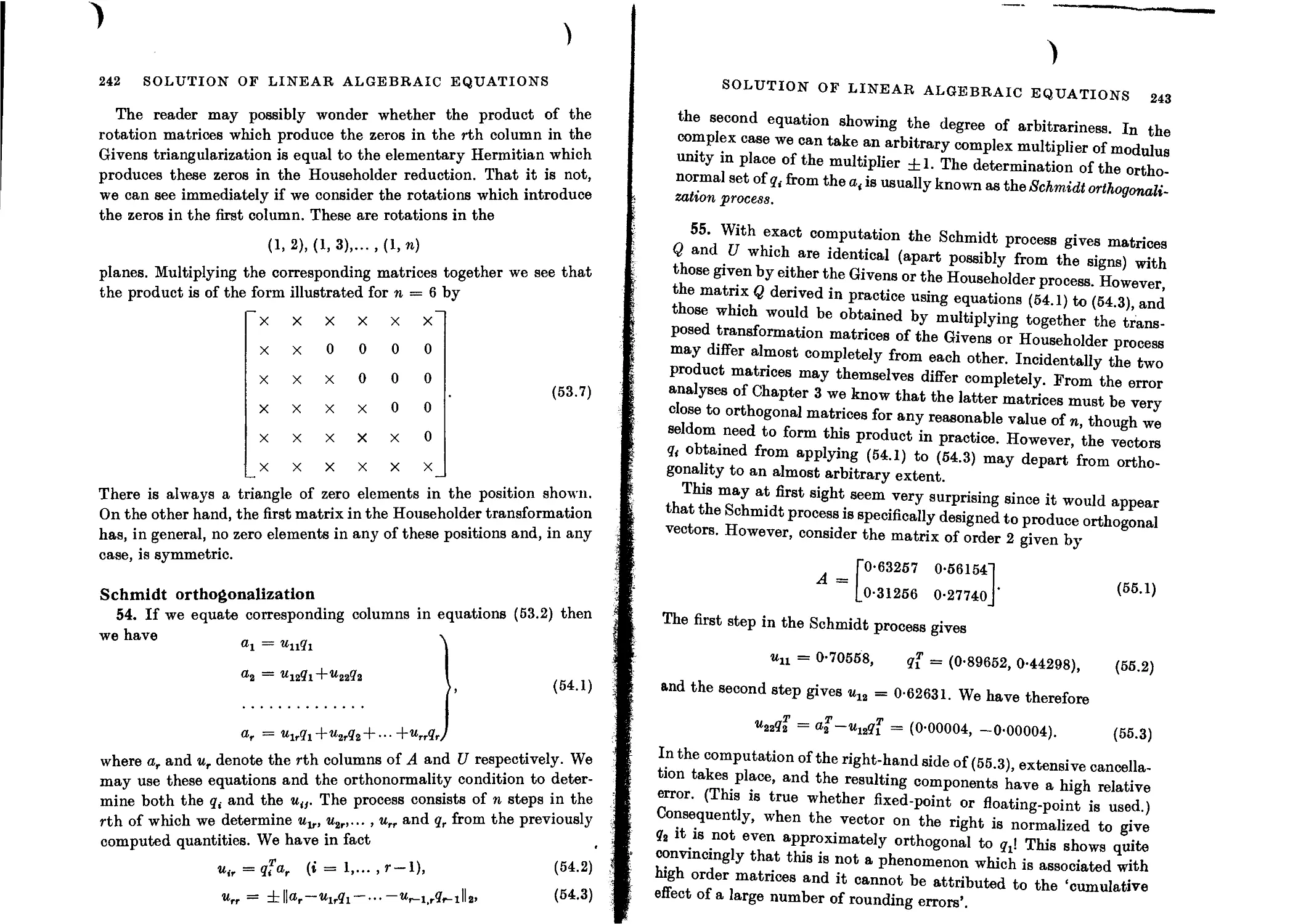

Schmidt orthogonalization 242

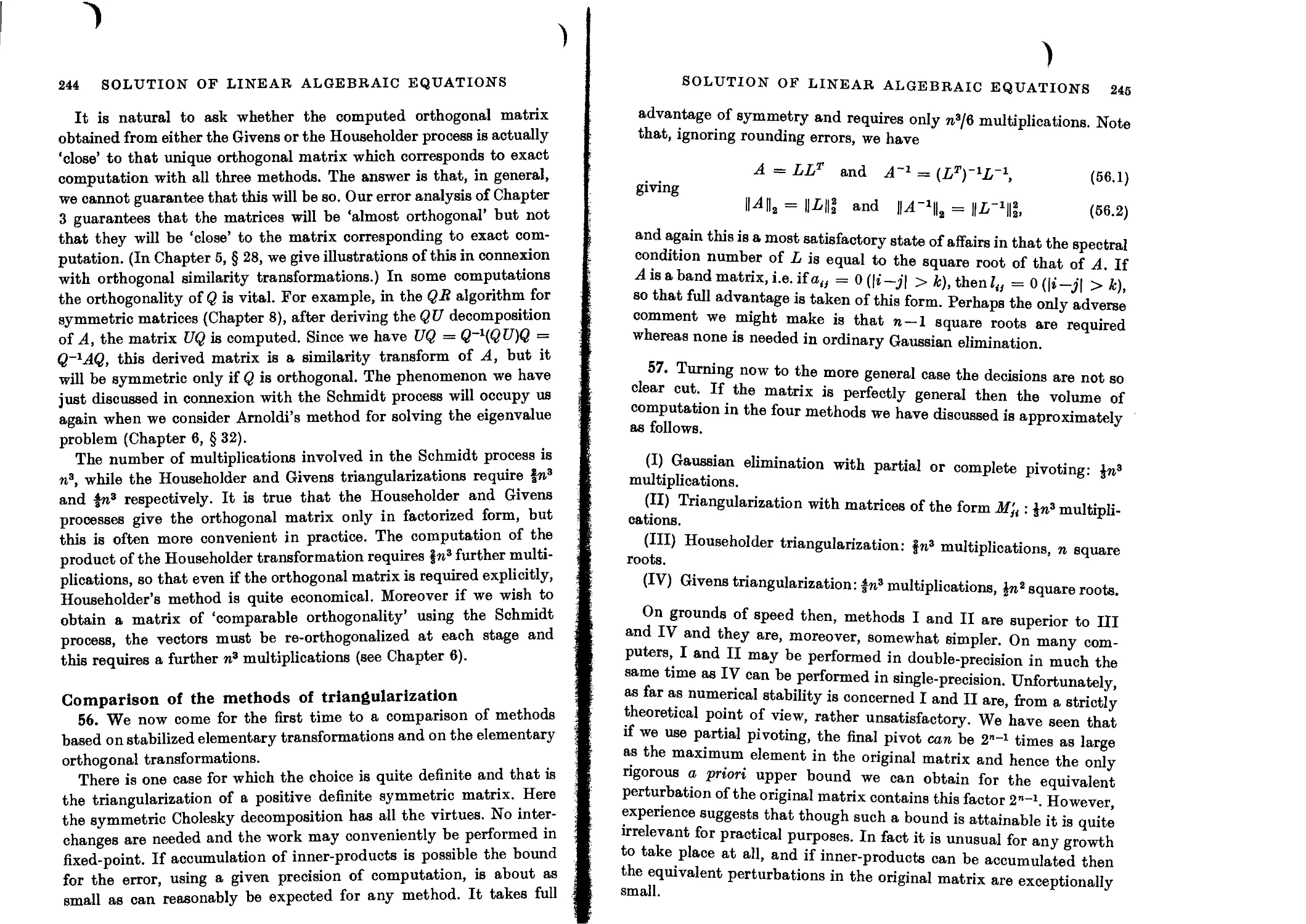

Comparison of the methods of triangularization 244

Back-substitution 247

High accuracy of computed solutions of triangular sets of equations 249

Solution of a general spt of equations 251

Computation of the inverse of a general matrix 252

Accuracy of.computed solutions 253

Ill-conditioned matrices which give no small pivots 254

Iterative improvements of approximate solution 255

Effect of rounding errors on the iterative process 256

The iterative procedure in fixed-point computation 257

Simple example of iterative procedure 258

General comments on the iterative procedure 260

Related iterative procedures 261

Limitations of the iterative procedure 261

Rigorous justification of the iterative method 262

5. HERMITIAN MATRICES

Introduction 265

The classical Jacobi method for real symmetric matrices 266

Rate of convergence 267

Convergence to fixed diagonal matrix 268

Serial Jacobi method 269

The Gerschgorin discs 269

Ultimate quadratic convergence of Jacobi methods 270

Close and multiple eigenvalues 271

Numerical examples 273

Calculation of cos 0 and sin 0 274

Simpler determination of the angles of rotation 276

The threshold Jacobi method 277

Calculation of the eigenvectors 278

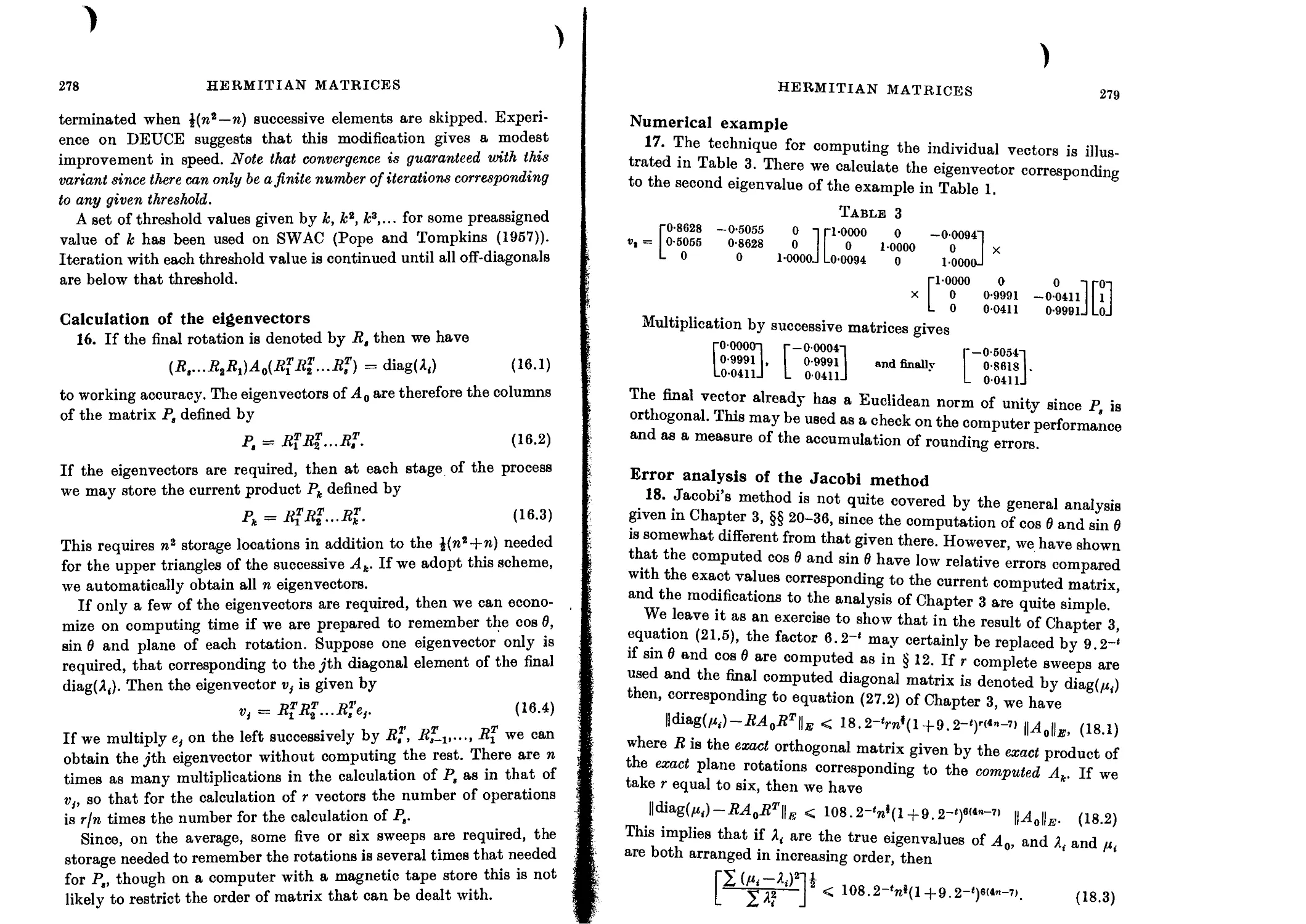

Numerical example 279

Error analysis of the Jacobi method 279

Accuracy of the computed eigenvectors 280

Error bounds for fixed-point computation 281

Organizational problems 282

Givens’ method 282

Givens’ process on a computer with a two-level store 284

Floating-point error analysis of Givens’ process 286

Fixed-point error analysis 287

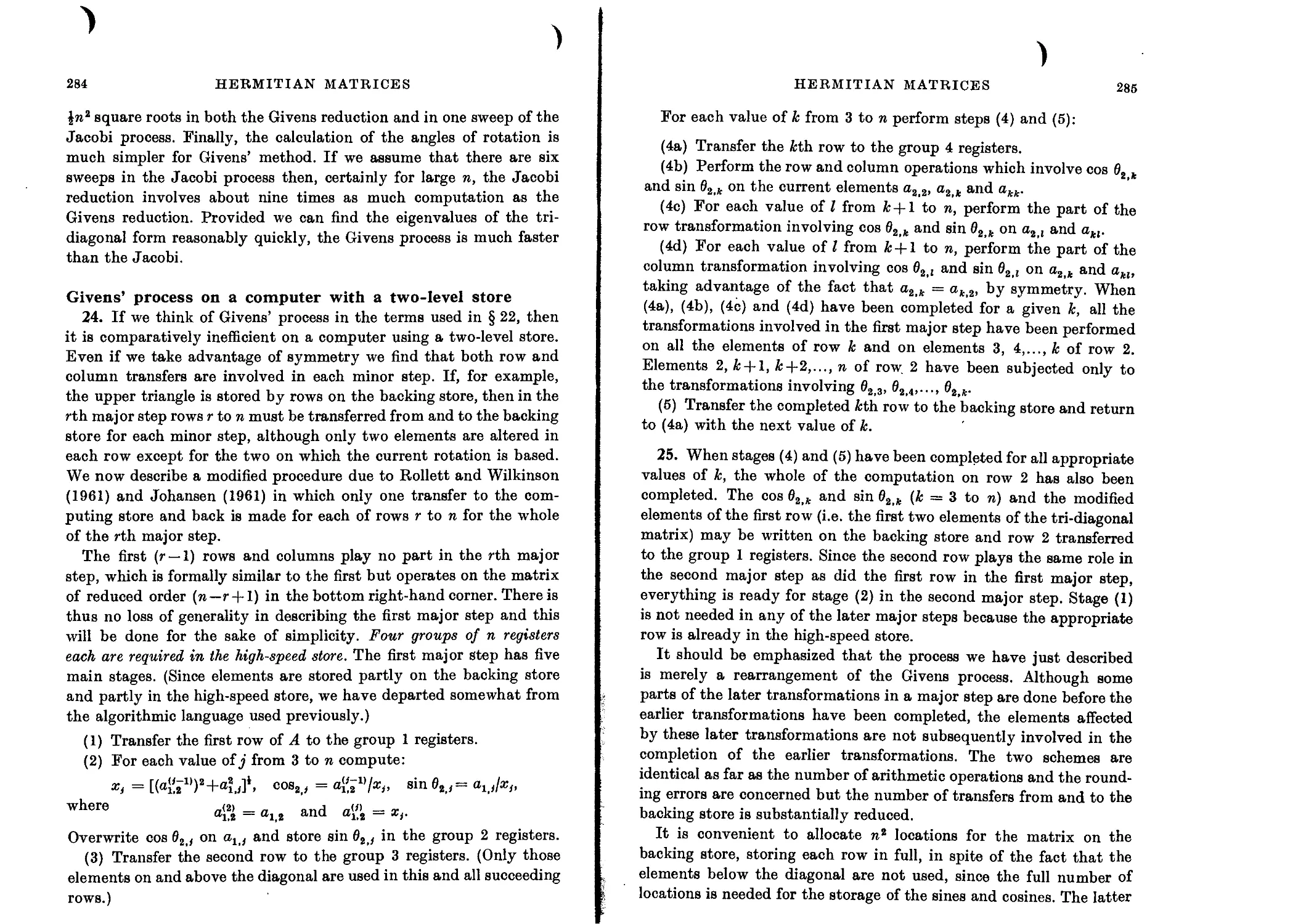

Numerical example 288

Householder’s method 290

xiv CONTENTS

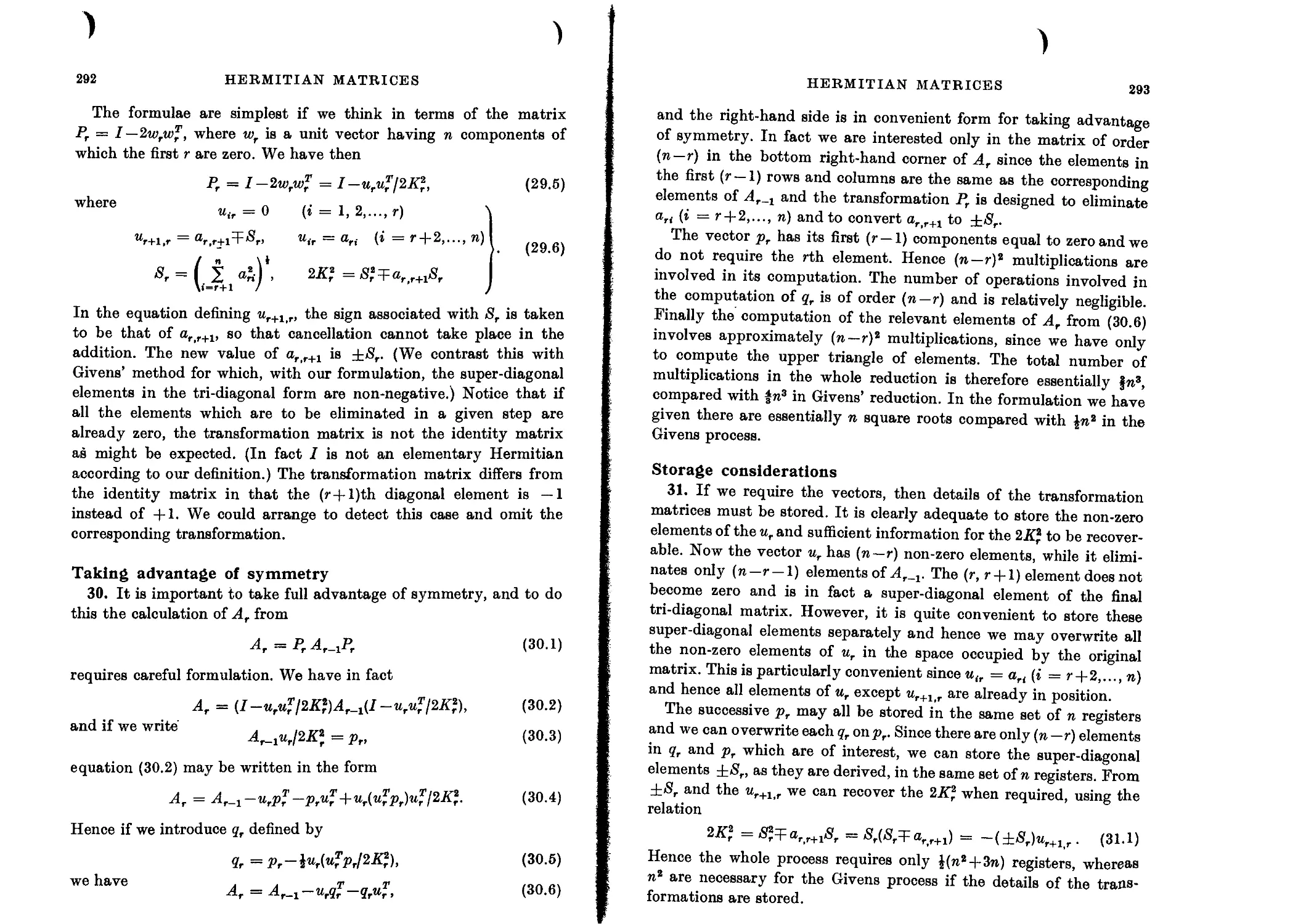

Taking advantage of symmetry 292

Storage considerations 293

Householder’s process on a computer with a two-level store 294

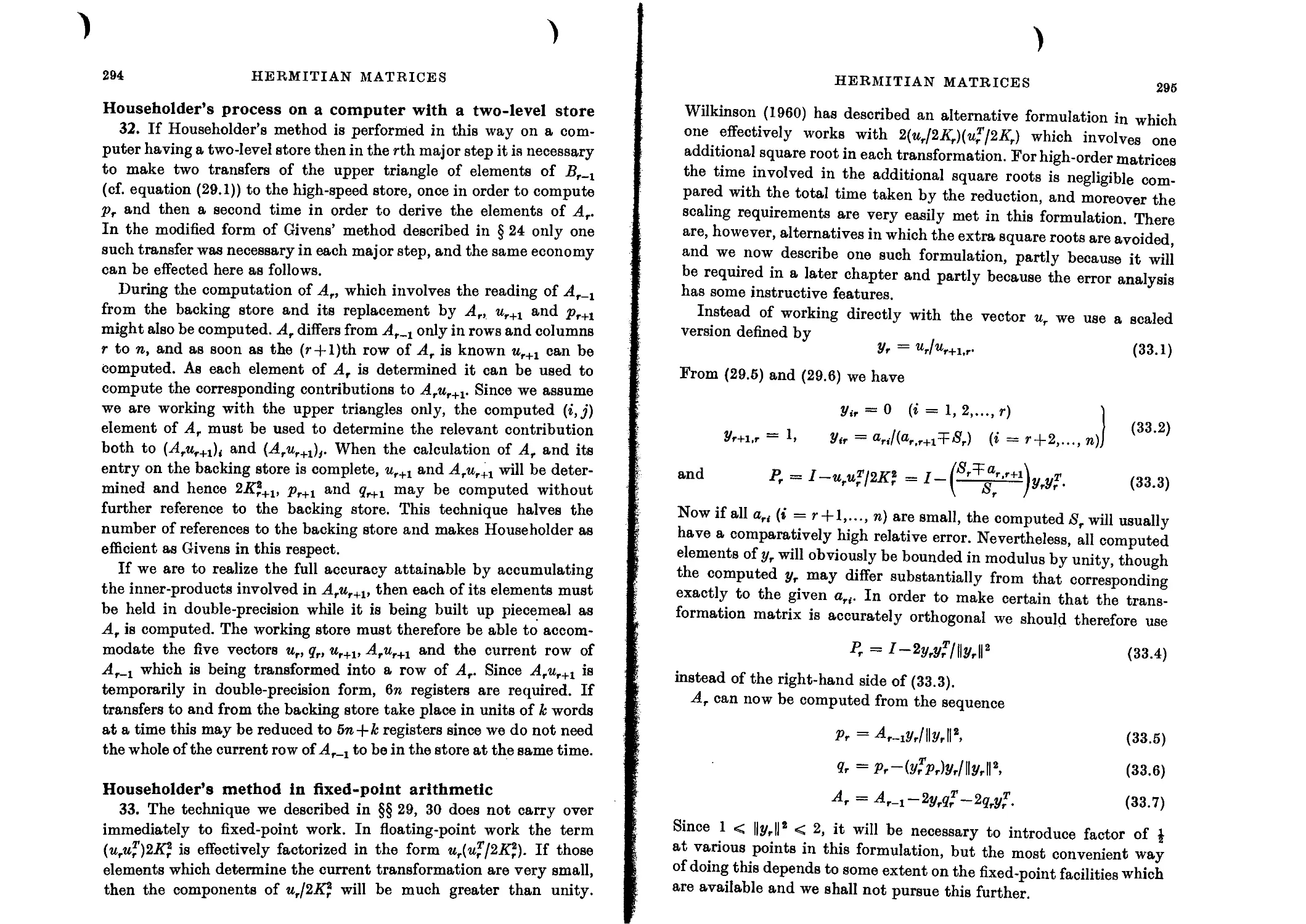

Householder’s method in fixed-point arithmetic 294

Numerical example 296

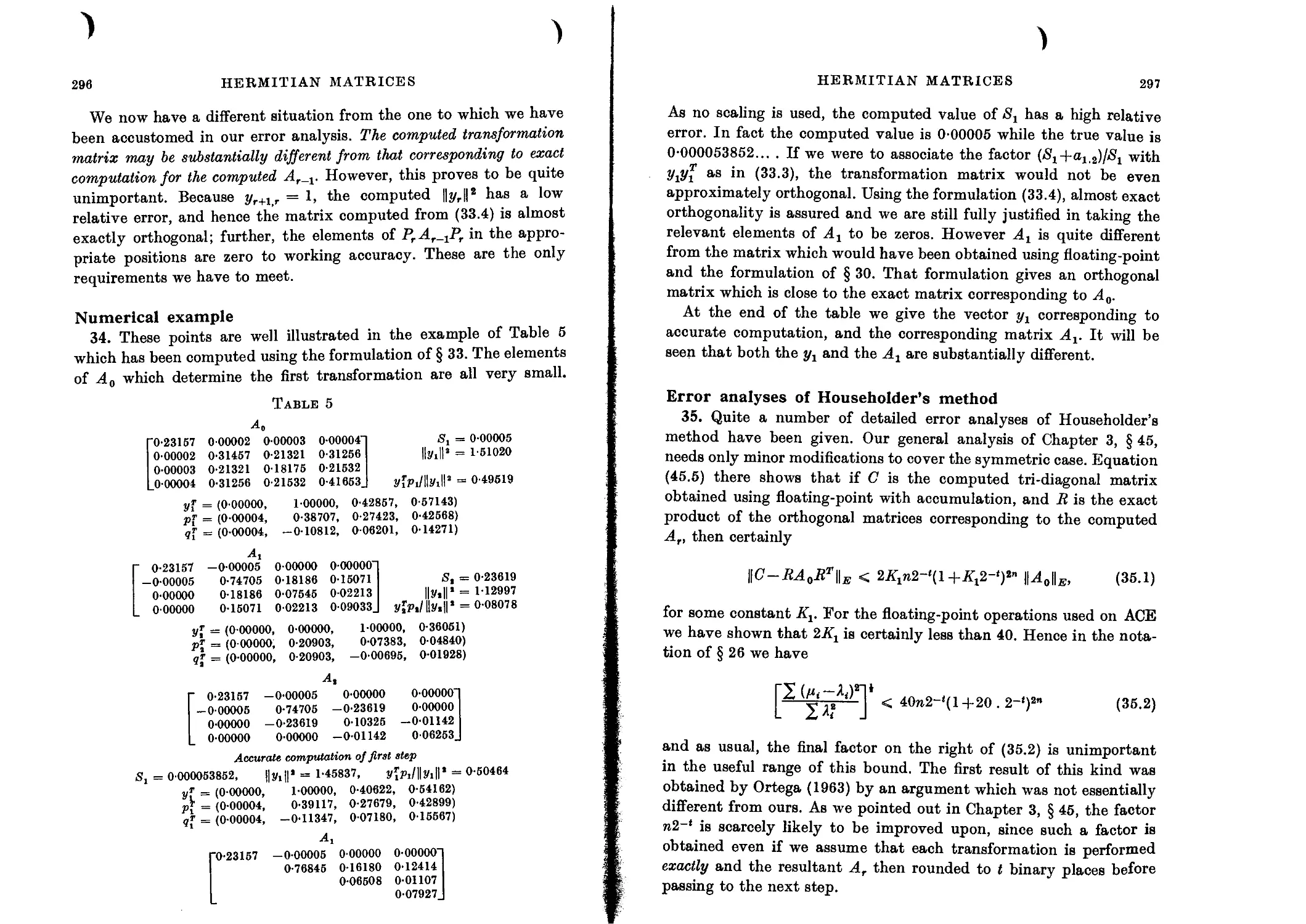

Error analyses of Householder’s method 297

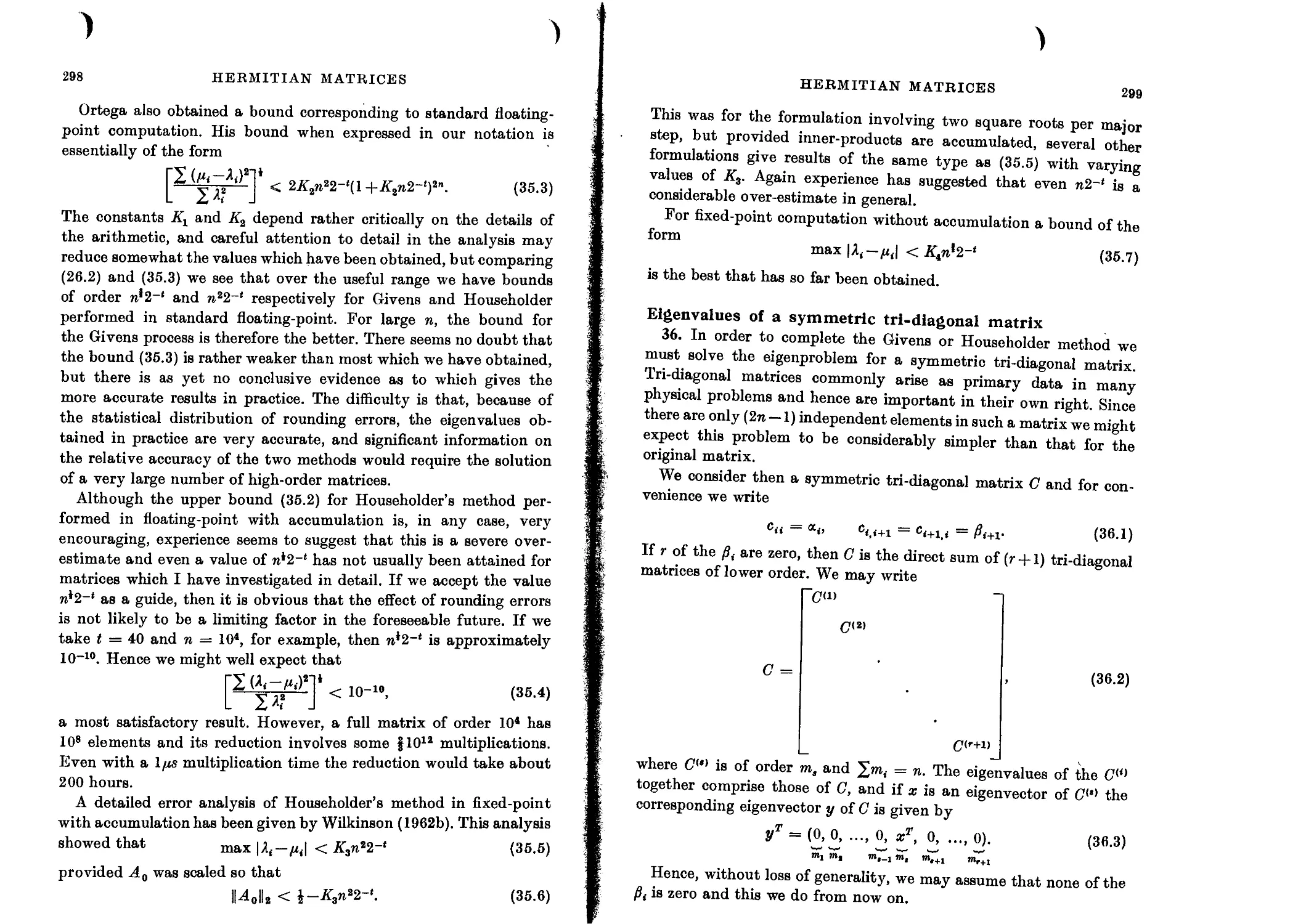

Eigenvalues of a symmetric tri-diagonal matrix 299

Sturm sequence property 300

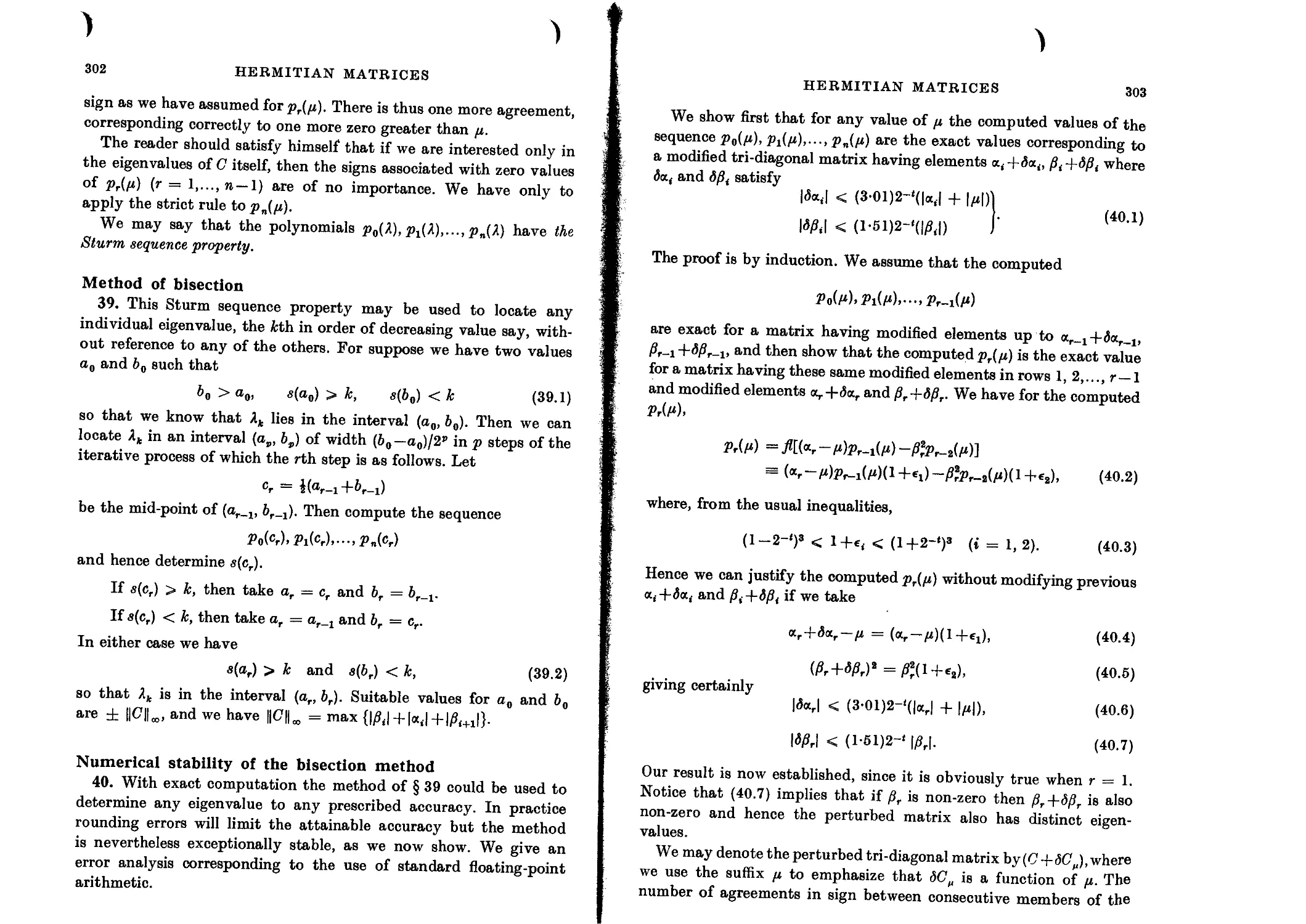

Method of bisection 302

Numerical stability of the bisection method 302

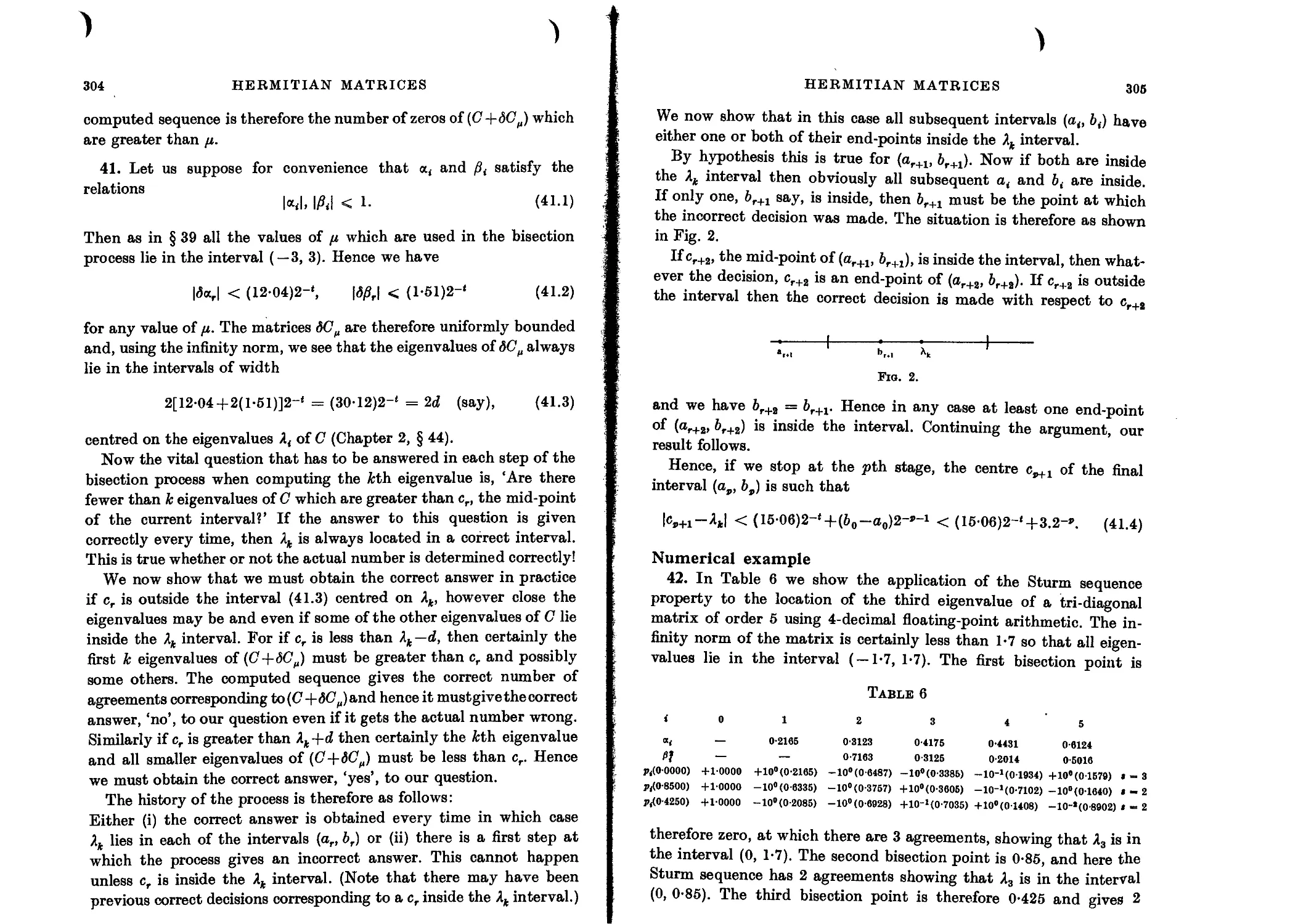

Numerical example 305

General comments on the bisection method 306

Small eigenvalues 307

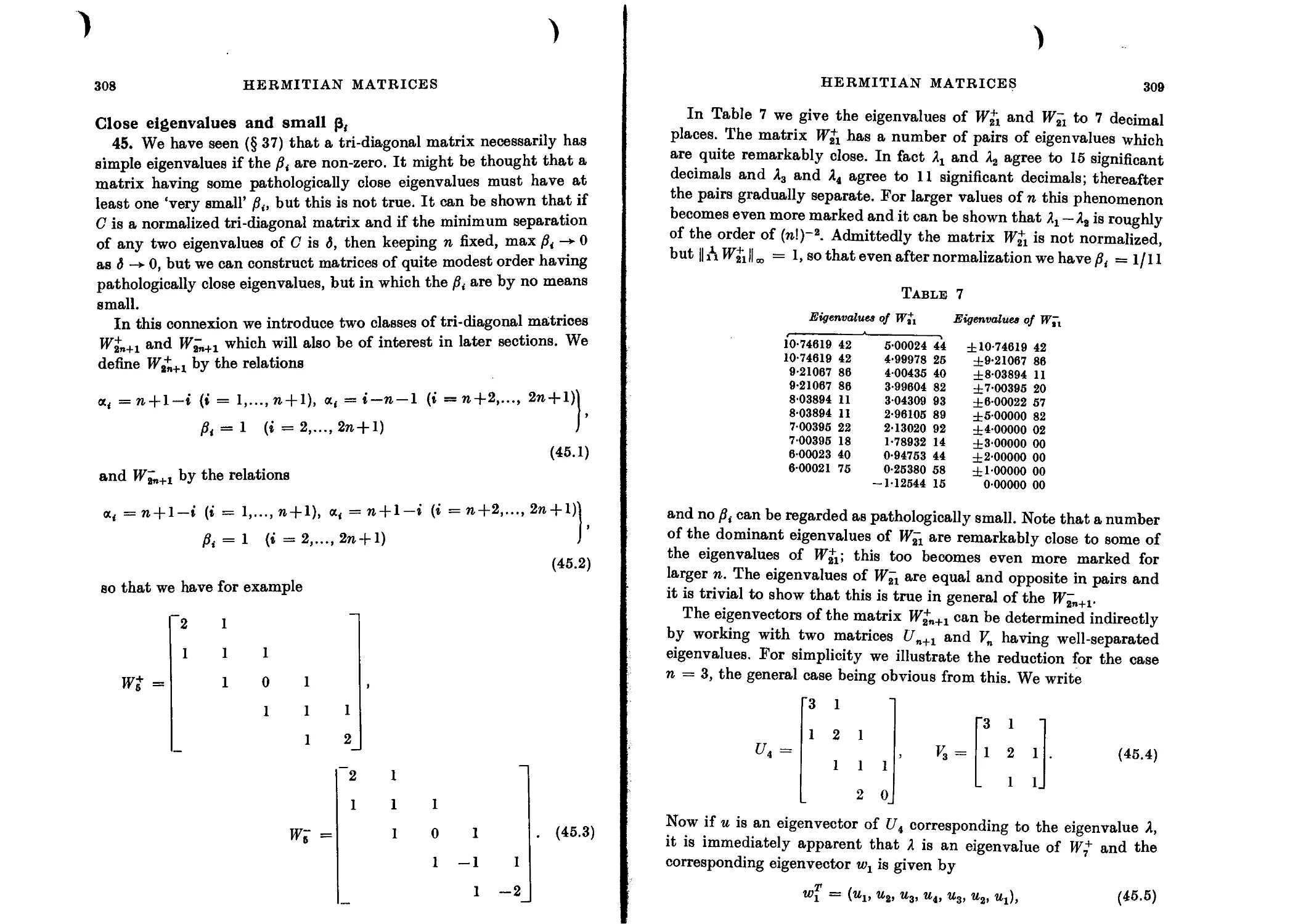

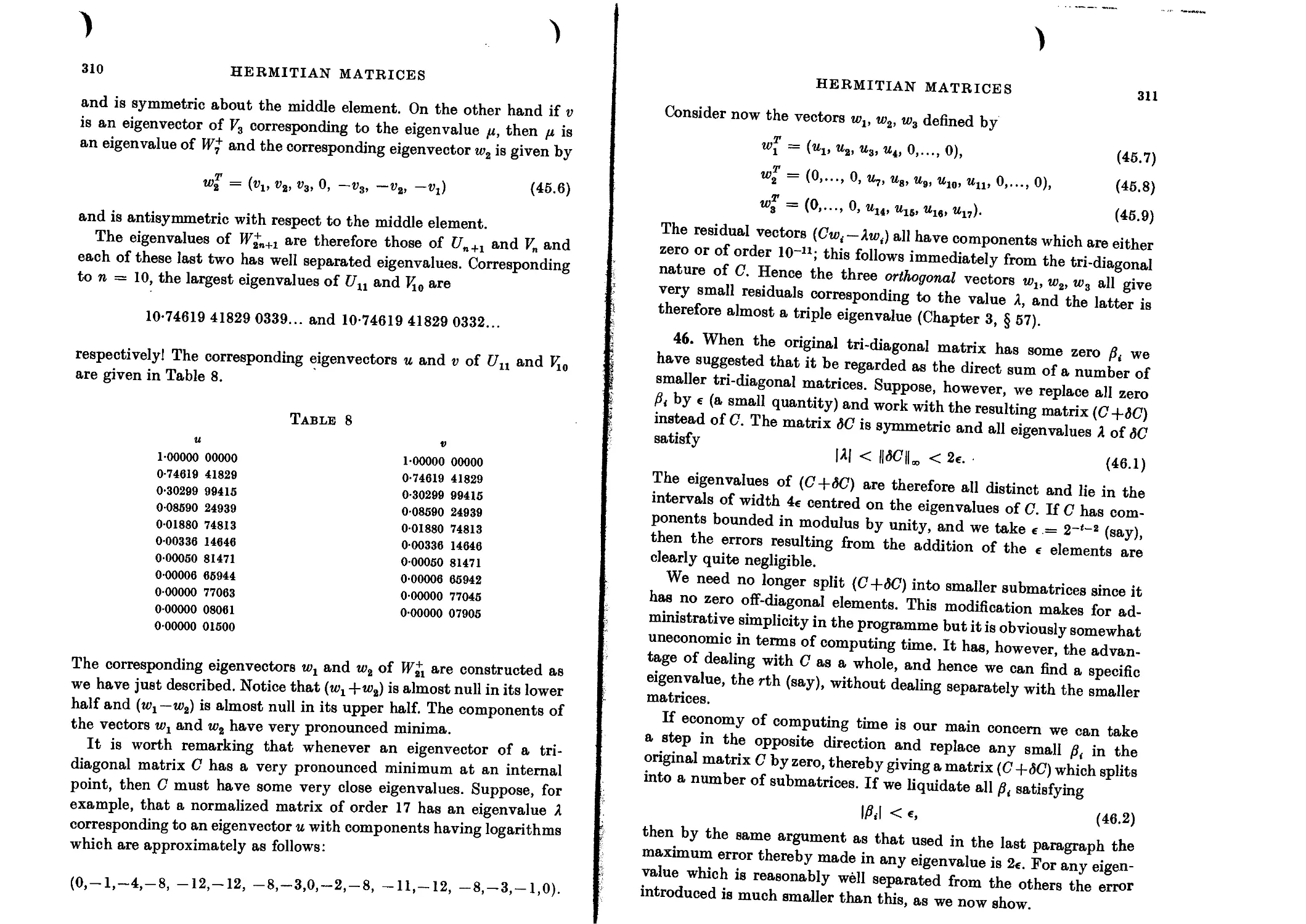

Close eigenvalues and small 308

Fixed-point computation of the eigenvalues 312

Computation of the eigenvectors of a tri-diagonal form 315

Instability of the explicit expression for the eigenvector 316

Numerical examples 319

Inverse iteration 321

Choice of initial vector b 322

Error analysis 323

Numerical example 325

Close eigenvalues and small 327

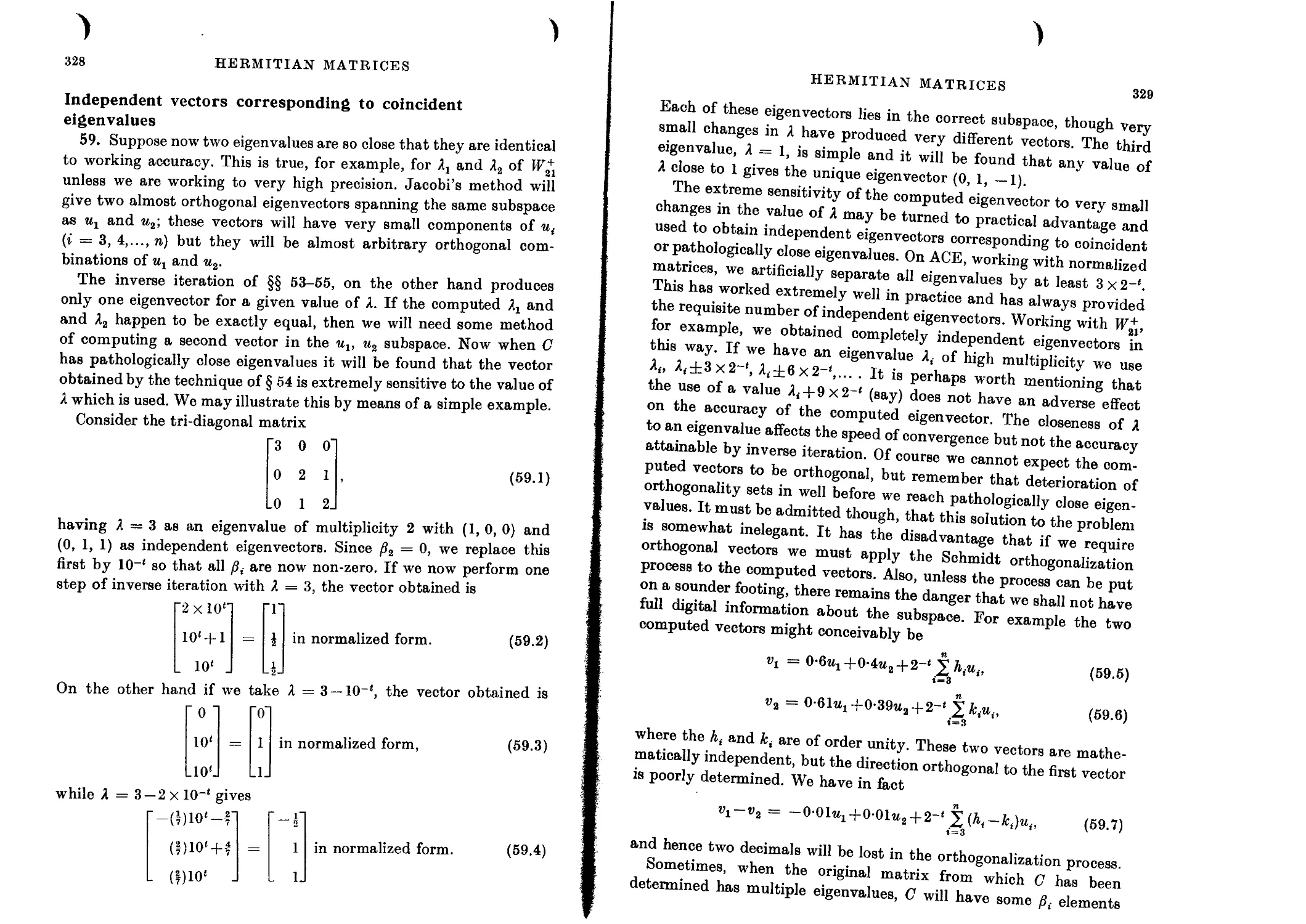

Independent vectors corresponding to coincident eigenvalues 328

Alternative method for computing the eigenvectors 330

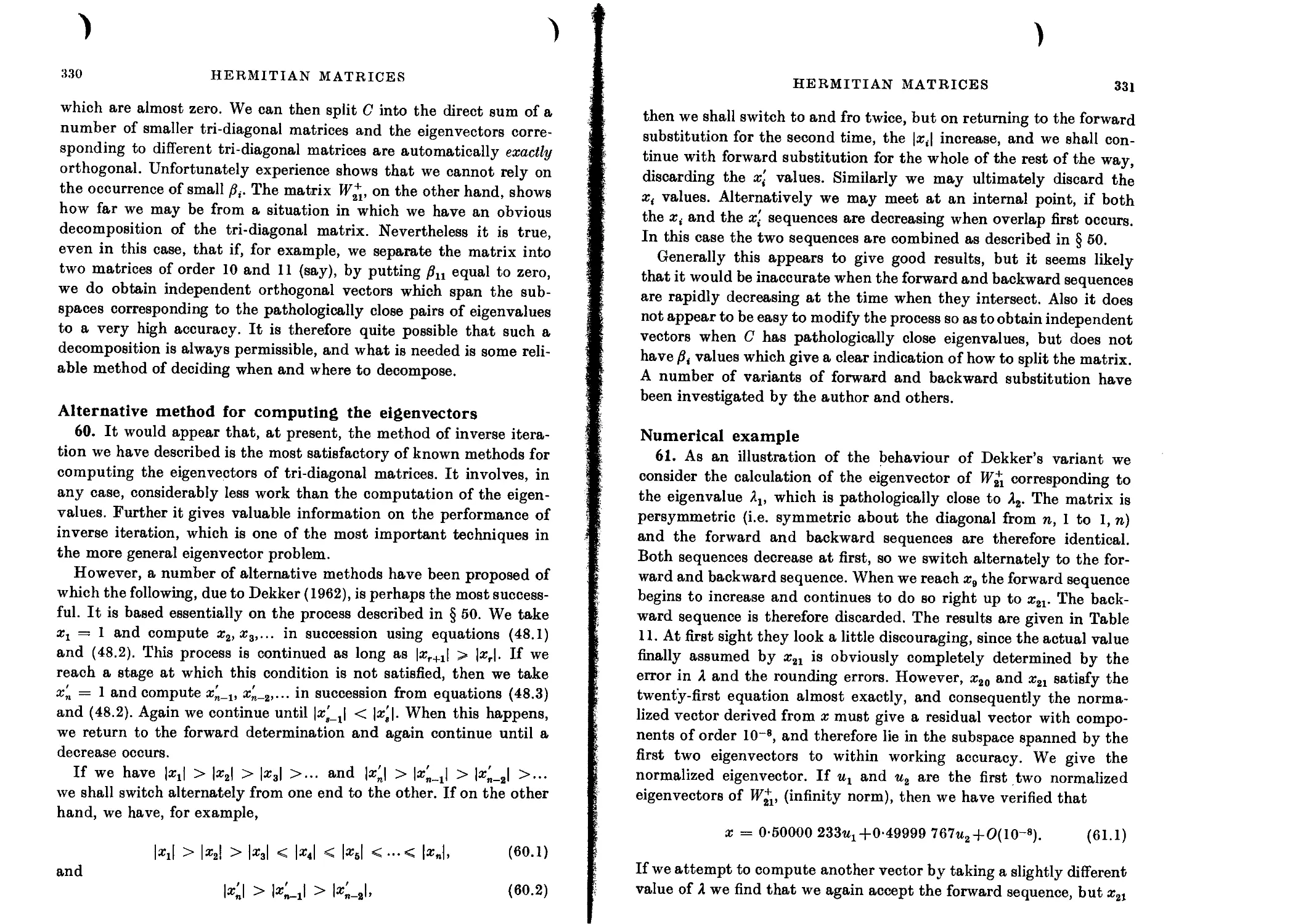

Numerical example 331

Comments on the eigenproblem for tri-diagonal matrices 332

Completion of the Givens and Householder methods 333

Comparison of methods 334

Quasi-symmetric tri-diagonal matrices 335

Calculation of the eigenvectors 336

Equations of the form Ax — S.Bx and ABx = Ле 337

Numerical example 339

Simultaneous reduction of A and В to diagonal form 340

Tri-diagonal A and В 340

Complex Hermitian matrices 342

6. REDUCTION OF A GENERAL MATRIX TO CONDENSED

FORM

Introduction 345

Givens’ method 345

Householder’s method 347

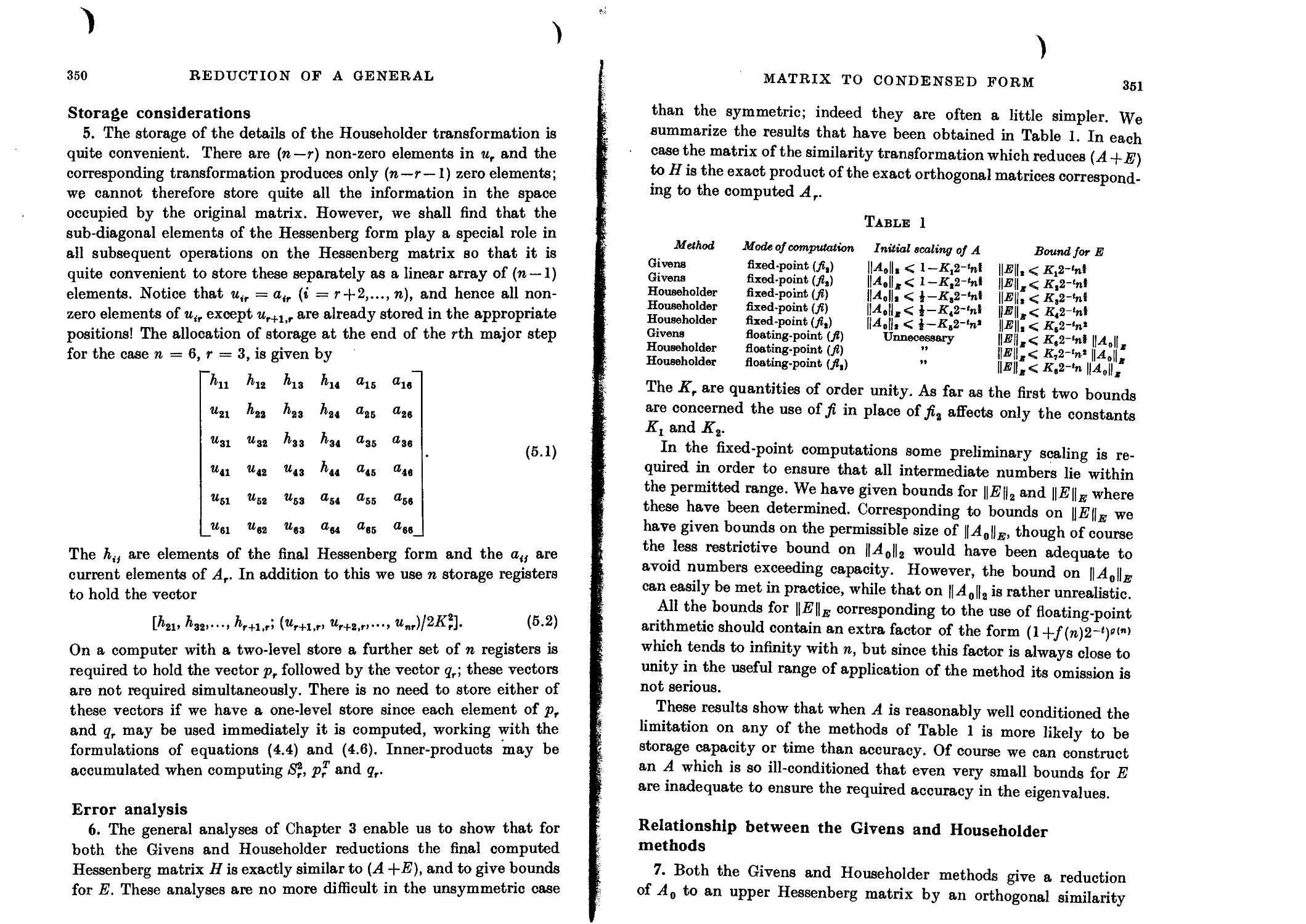

Storage considerations 350

Error analysis 350

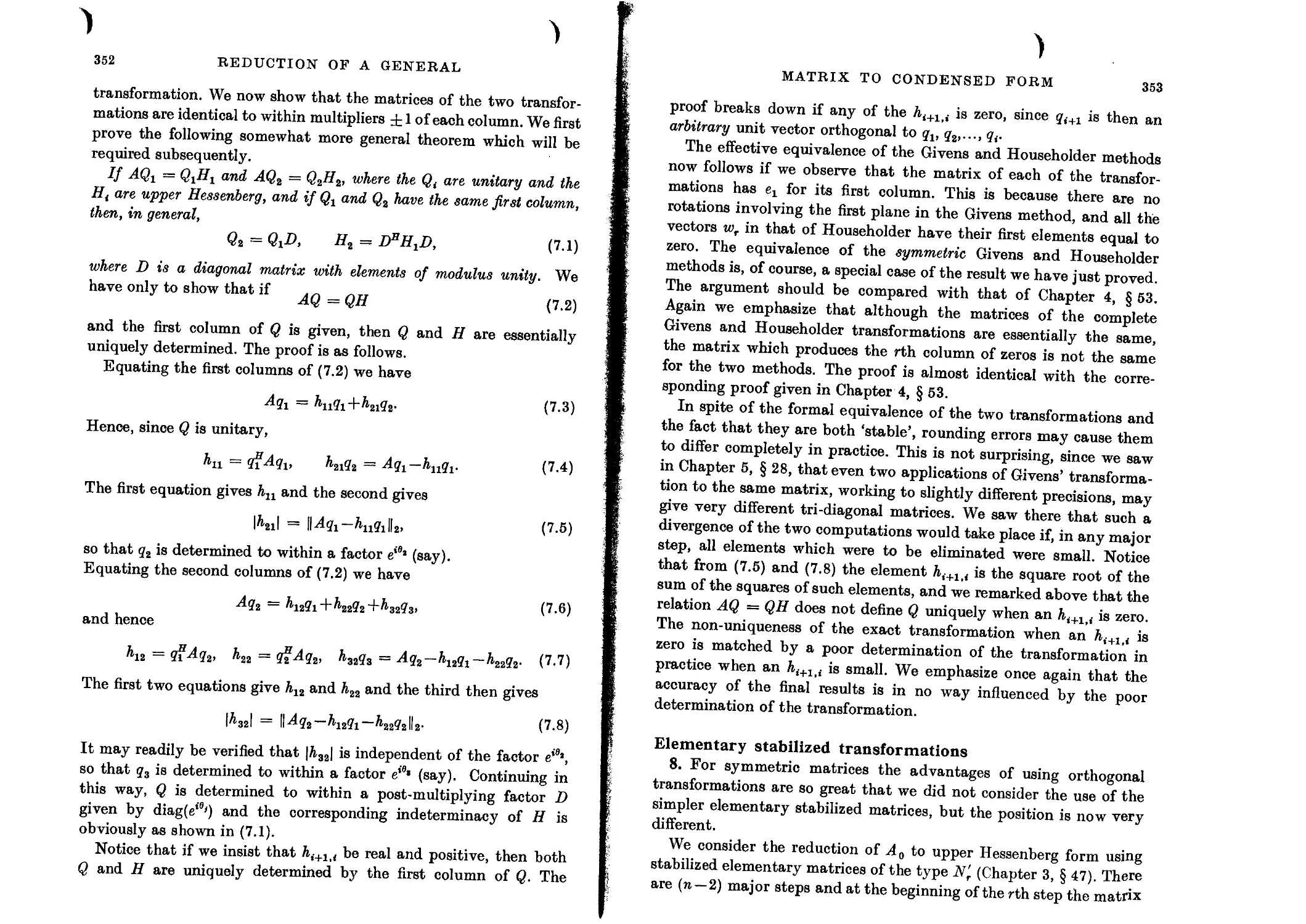

Relationship between the Givens and Householder methods 351

Elementary stabilized transformations 353

Significance of the permutations 355

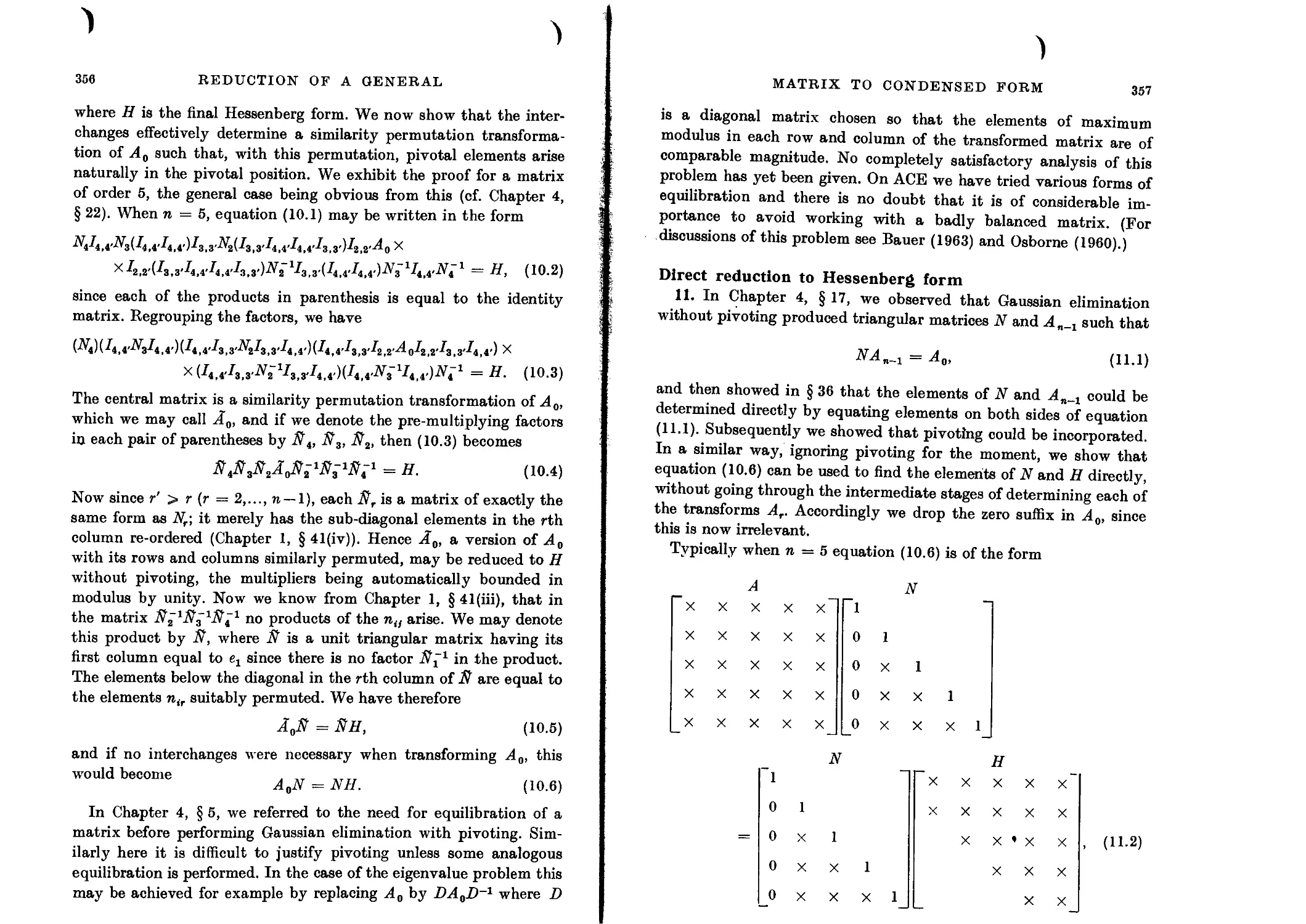

Direct reduction to Hessenberg form 357

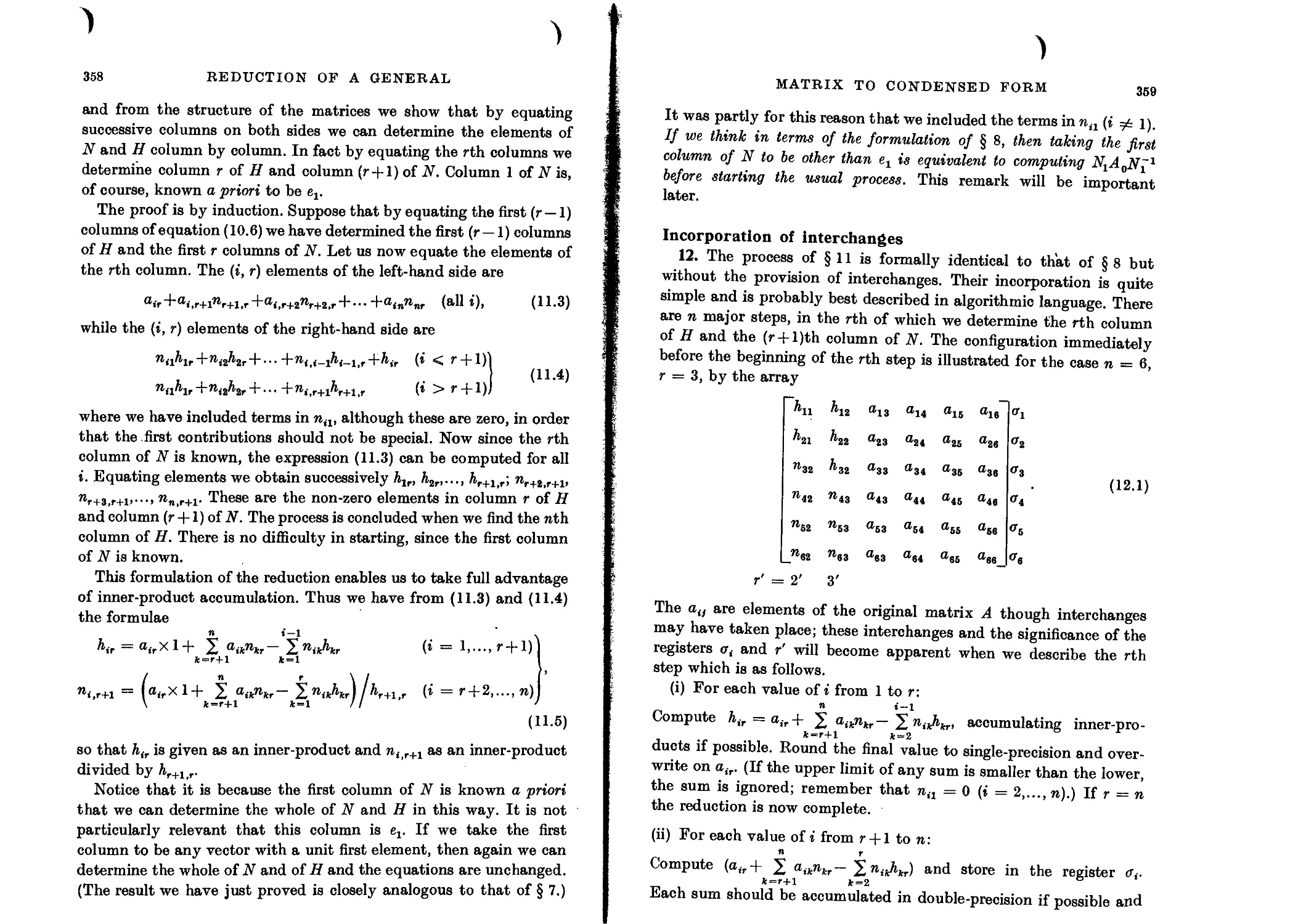

Incorporation of interchanges 359

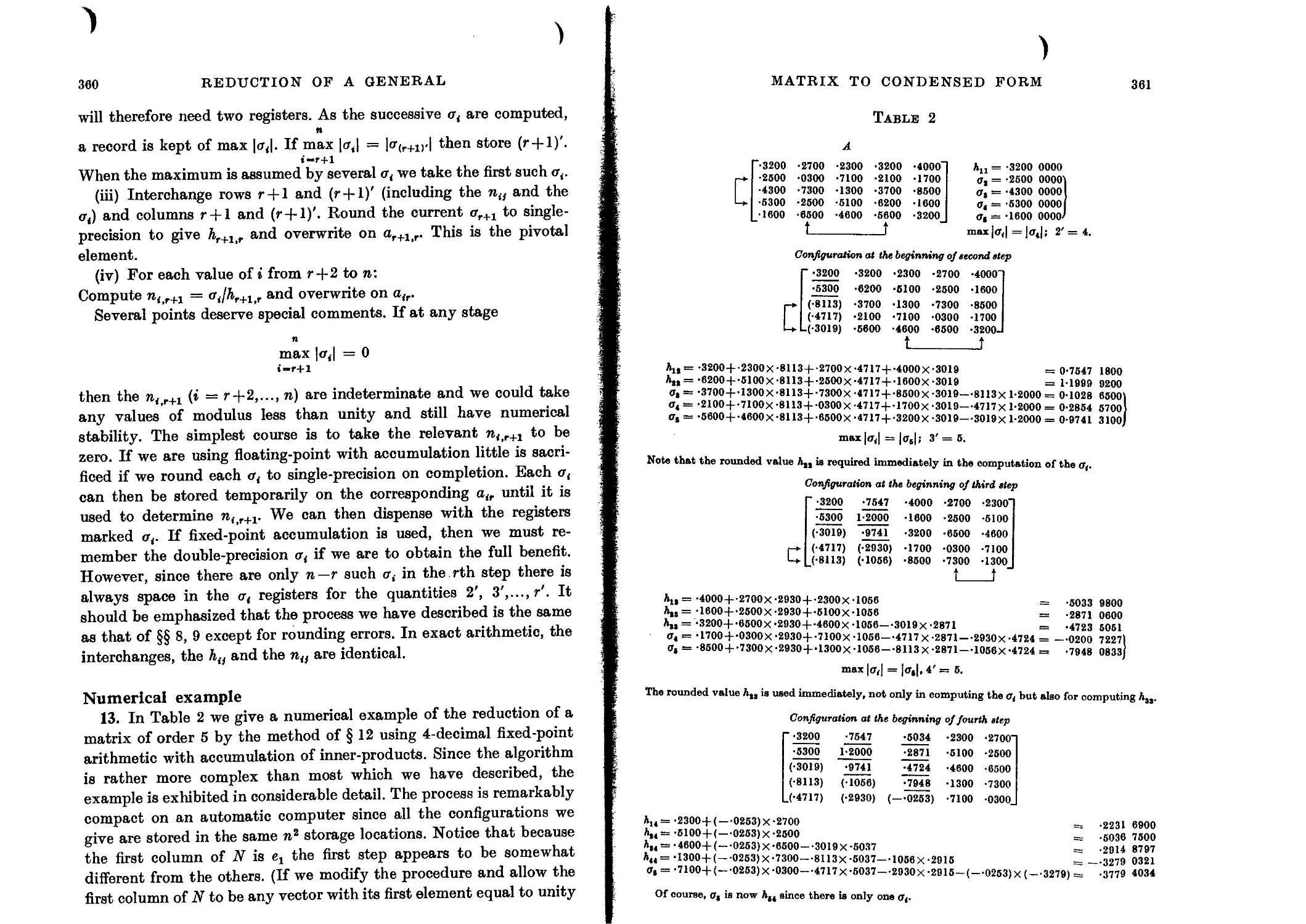

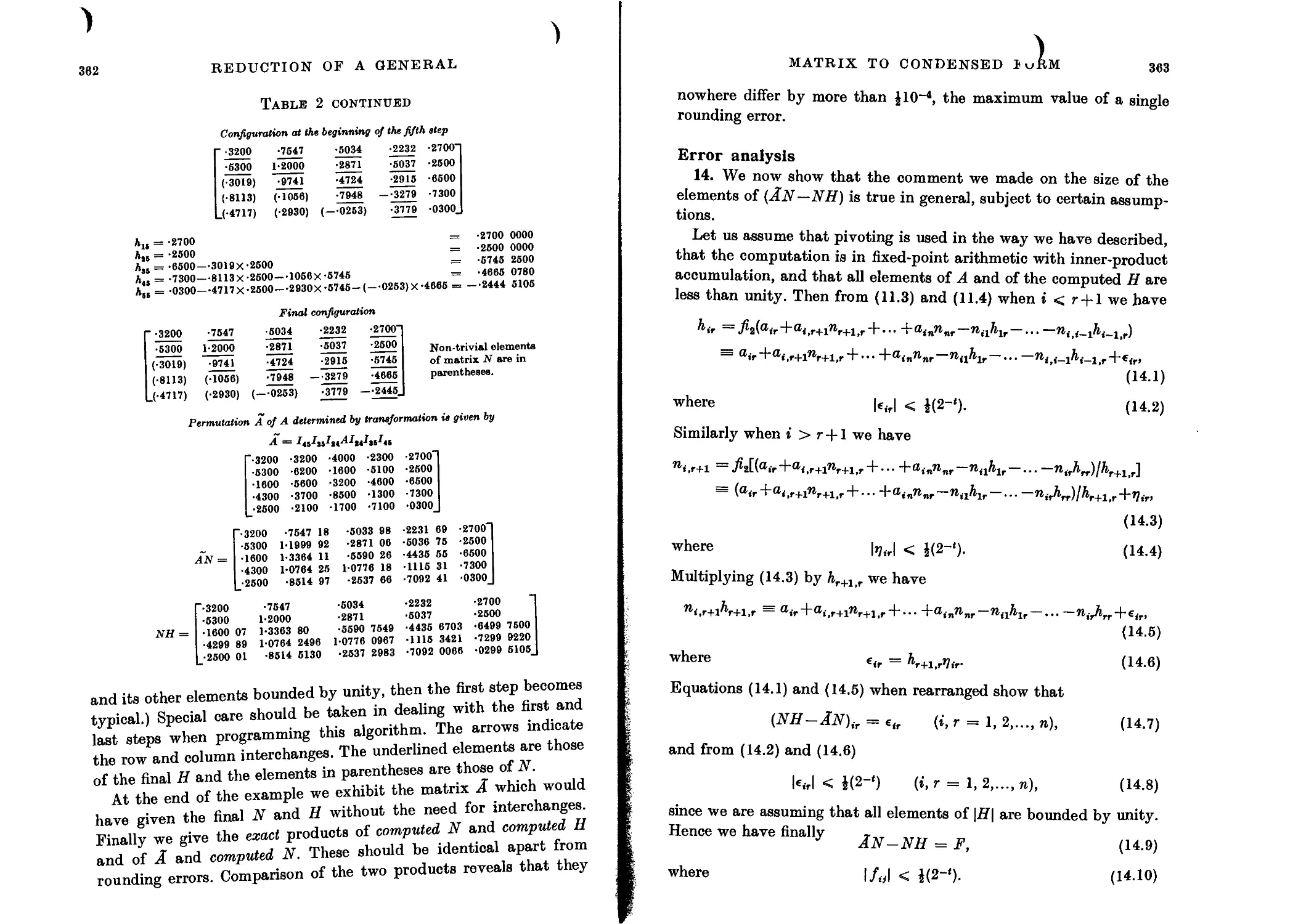

Numerical example 360

Error analysis 363

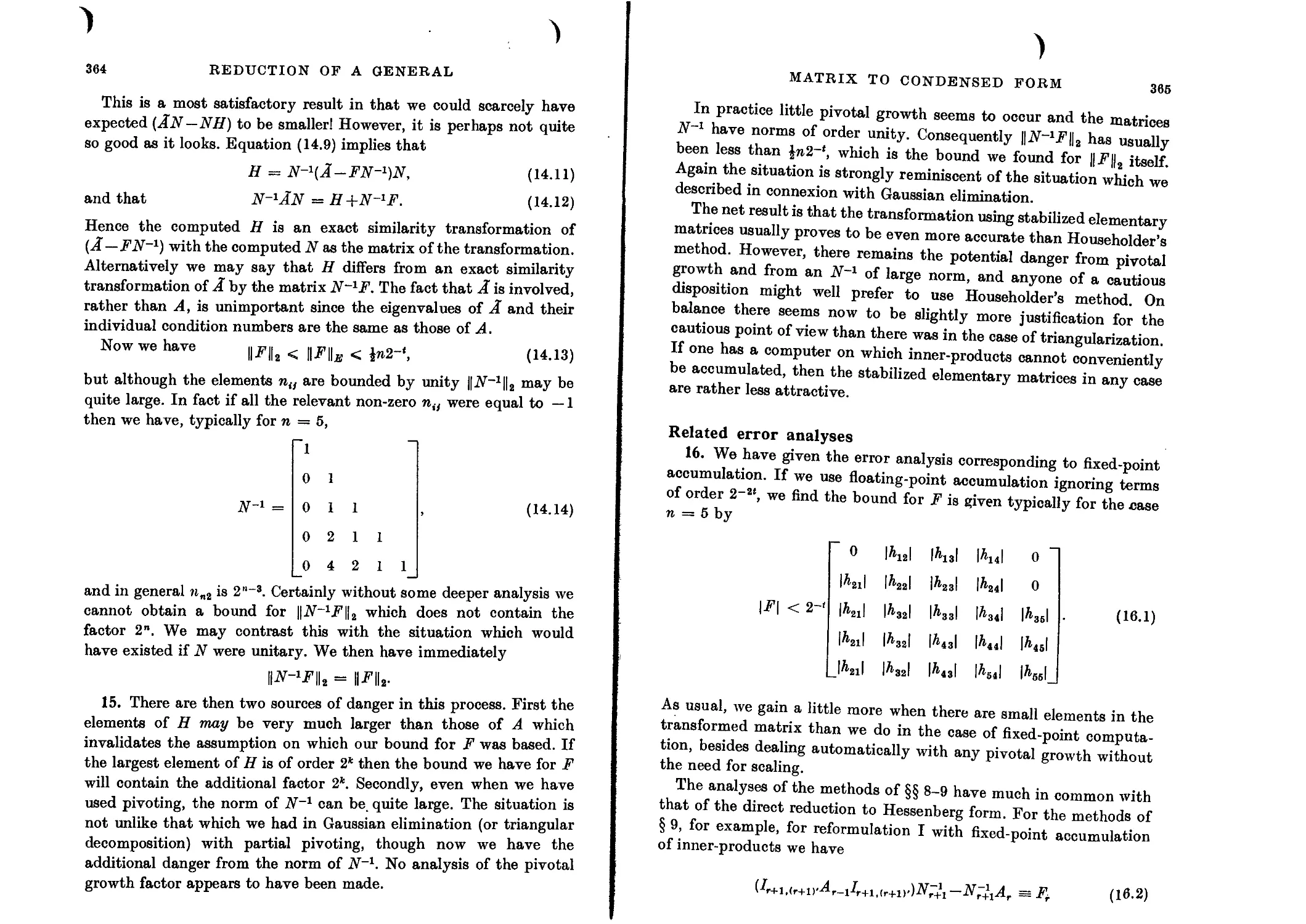

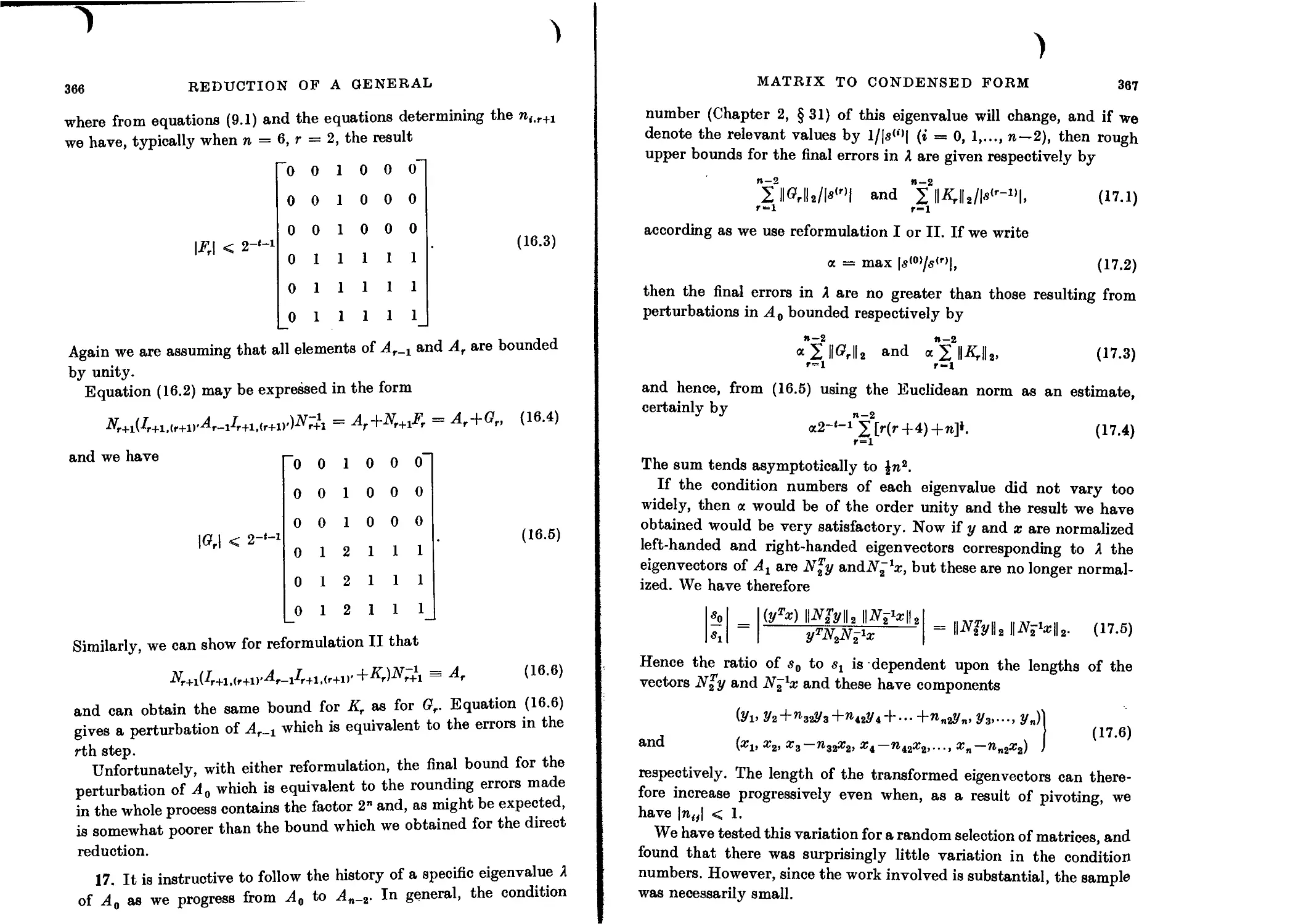

Related error analyses 365

CONTENTS xv

Poor determination of the Hessenberg matrix 368

Reduction to Hessenberg form using stabilized matrices of the type M'jt 368

The method of Krylov 369

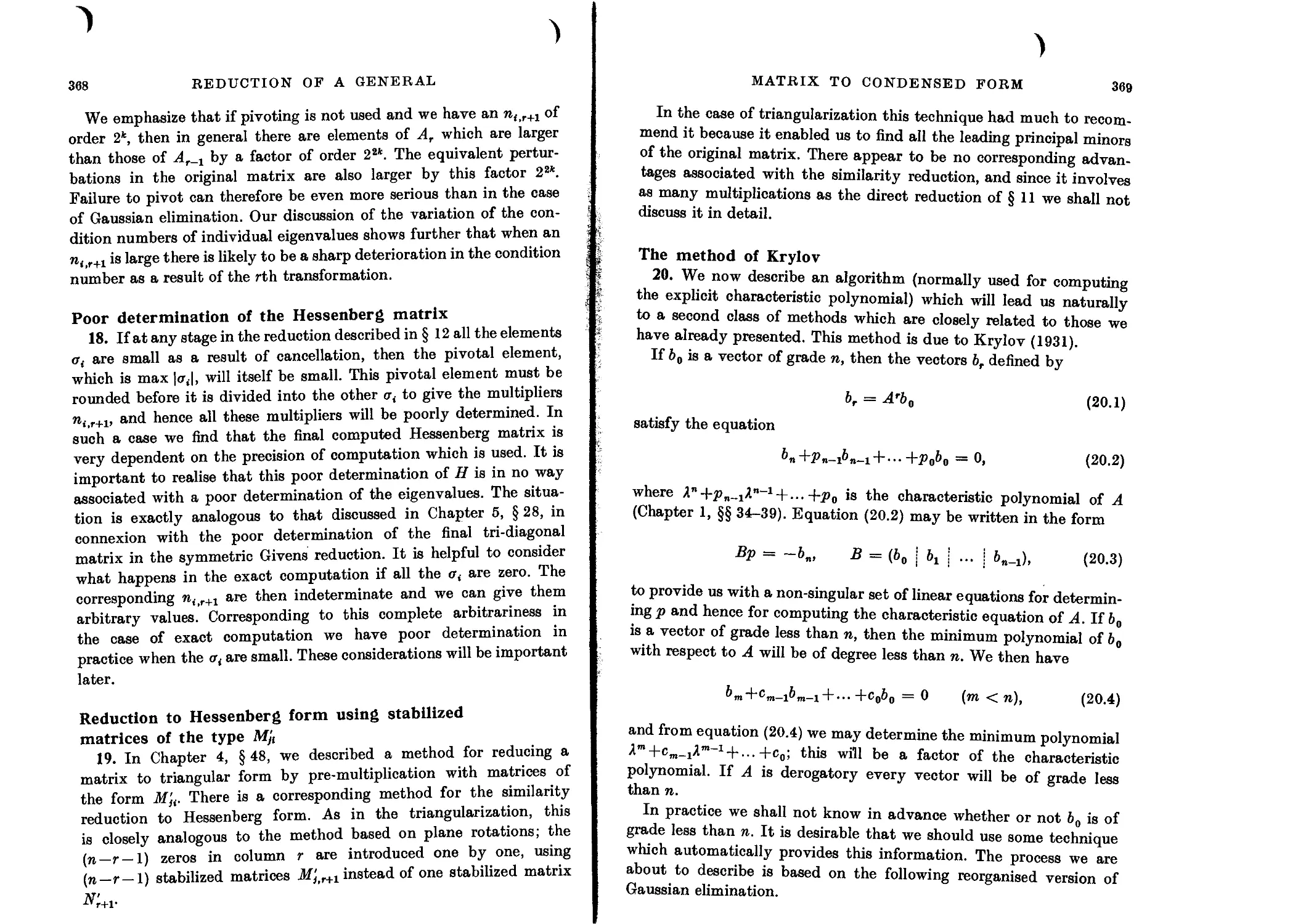

Gaussian elimination by columns 370

Practical difficulties 371

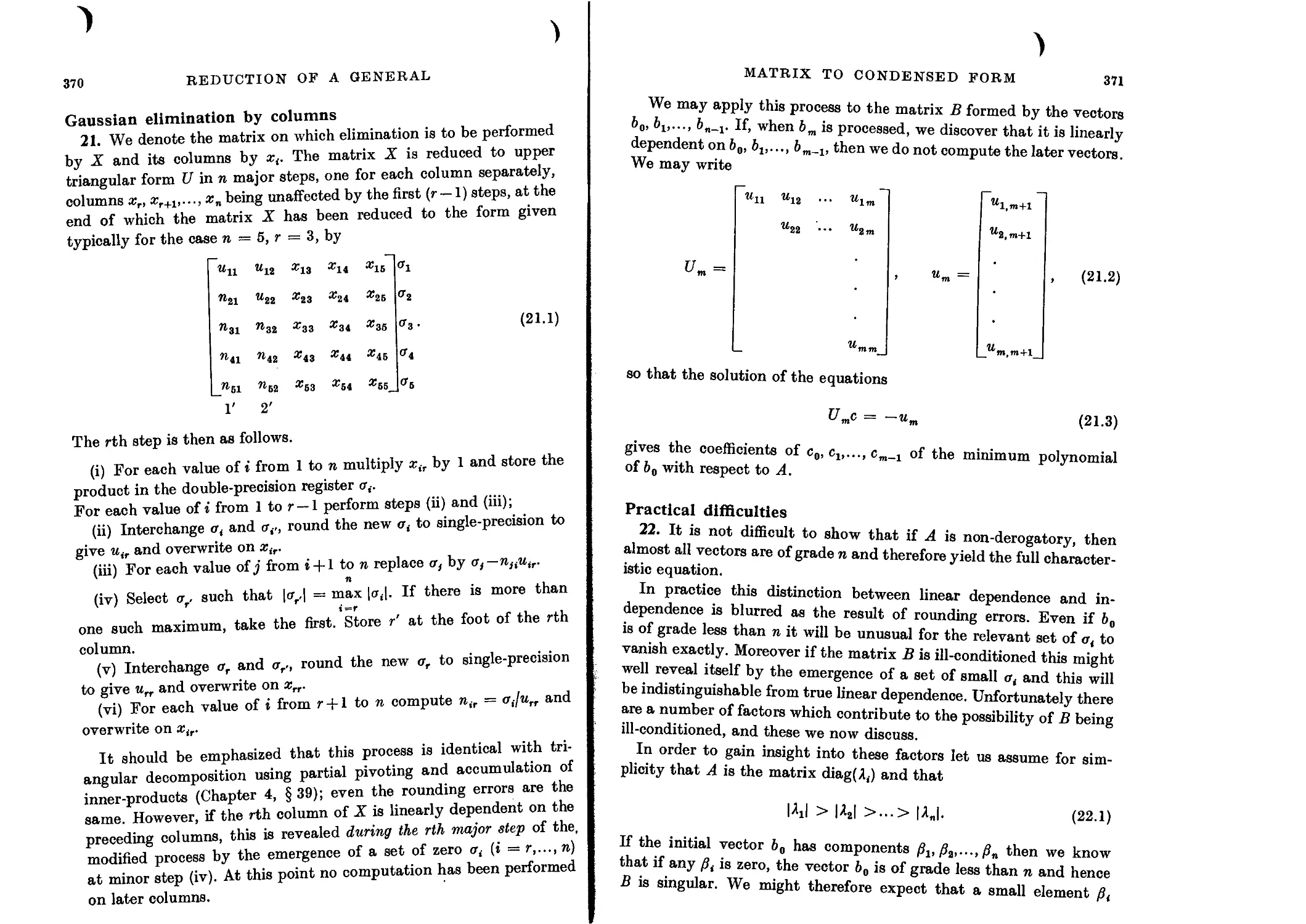

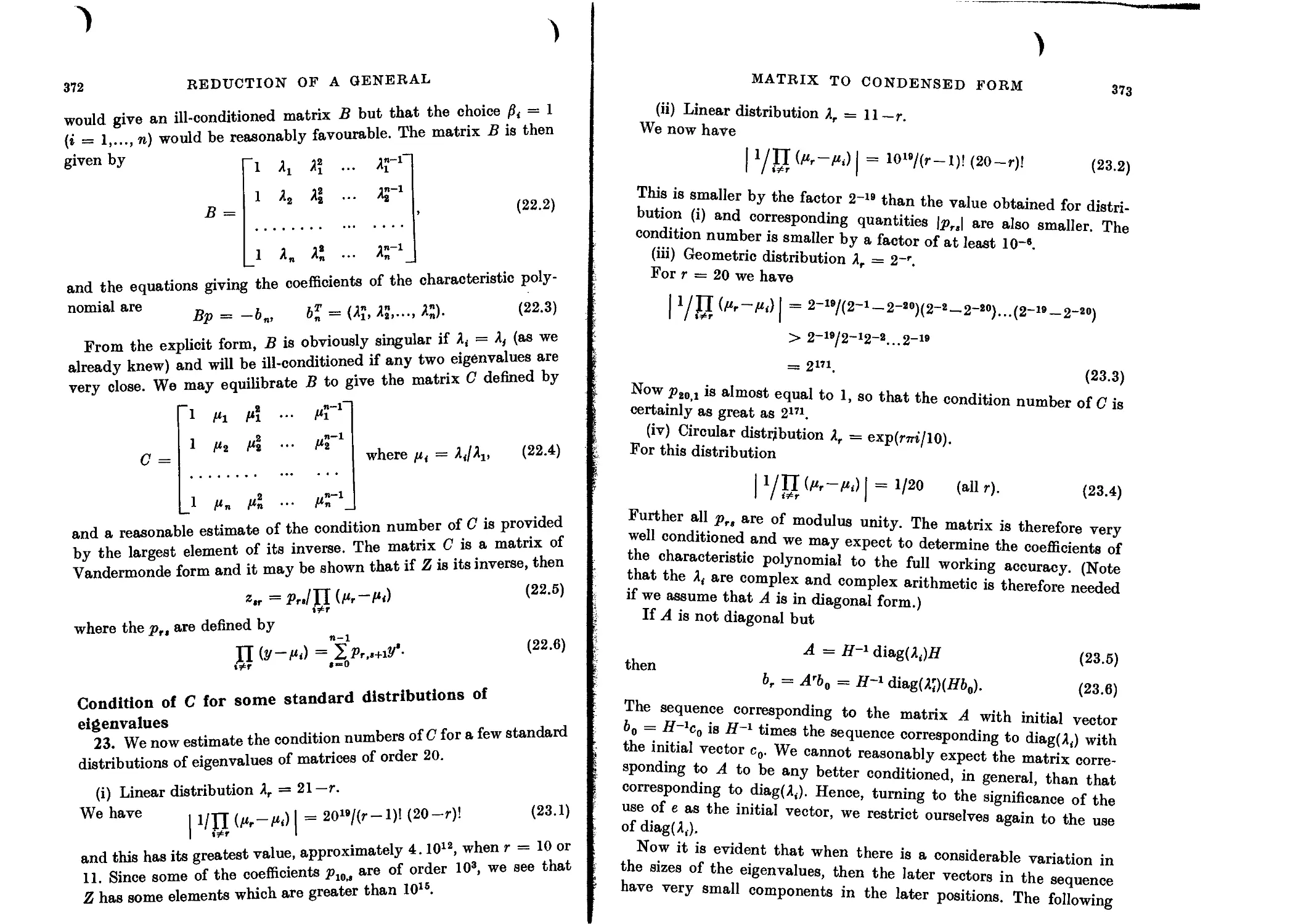

Condition of C for some standard distributions of eigenvalues 372

Initial vectors of grade less than n 374

Practical experience 376

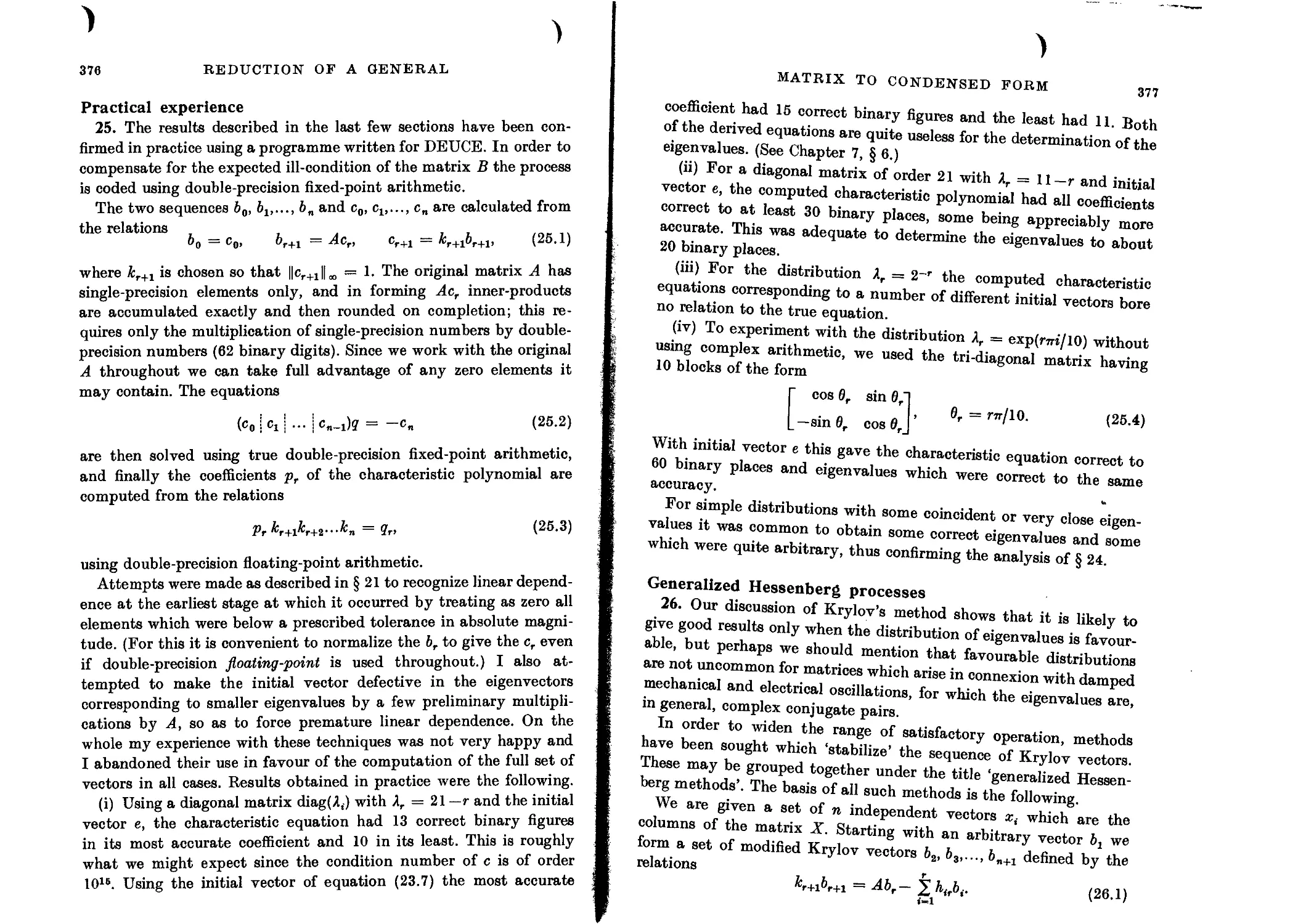

Generalized Hessenberg processes 377

Failure of the generalized Hessenberg process 378

The Hessenberg method 379

Practical procedure 380

Relation between the Hessenberg method and earlier methods 381

The method of Amoldi 382

Practical considerations 383

Significance of re-orthogonalization 385

The method of Lanczos 388

Failure of procedure 389

Numerical example 390

The practical Lanczos process 391

Numerical example 392

General comments on the unsymmetric Lanczos process 394

The symmetric Lanczos process 394

Reduction of a Hessenberg matrix to a more compact form 395

Reduction of a lower Hessenberg matrix to tri-diagonal form 396

The use of interchanges 397

Effect of a small pivotal element 398

Error analysis 399

The Hessenberg process applied to a lower Hessenberg matrix 402

Relationship between the Hessenberg process and the Lanczos process 402

Reduction of a general matrix to tri-diagonal form 403

Comparison with Lanczos method 404

Re-examination of reduction to tri-diagonal form 404

Reduction from upper Hessenberg form to Frobenius form 405

Effect of small pivot 407

Numerical example 408

General comments on the stability 408

Specialized upper Hessenberg form 409

Direct determination of the characteristic polynomial 410

7. EIGENVALUES OF MATRICES OF CONDENSED FORMS

Introduction 413

Explicit polynomial form 413

Condition numbers of explicit polynomials 416

Some typical distributions of zeros 417

Final assessment of Krylov’s method 421

General comments on explicit polynomials 421

Tri-diagonal matrices 423

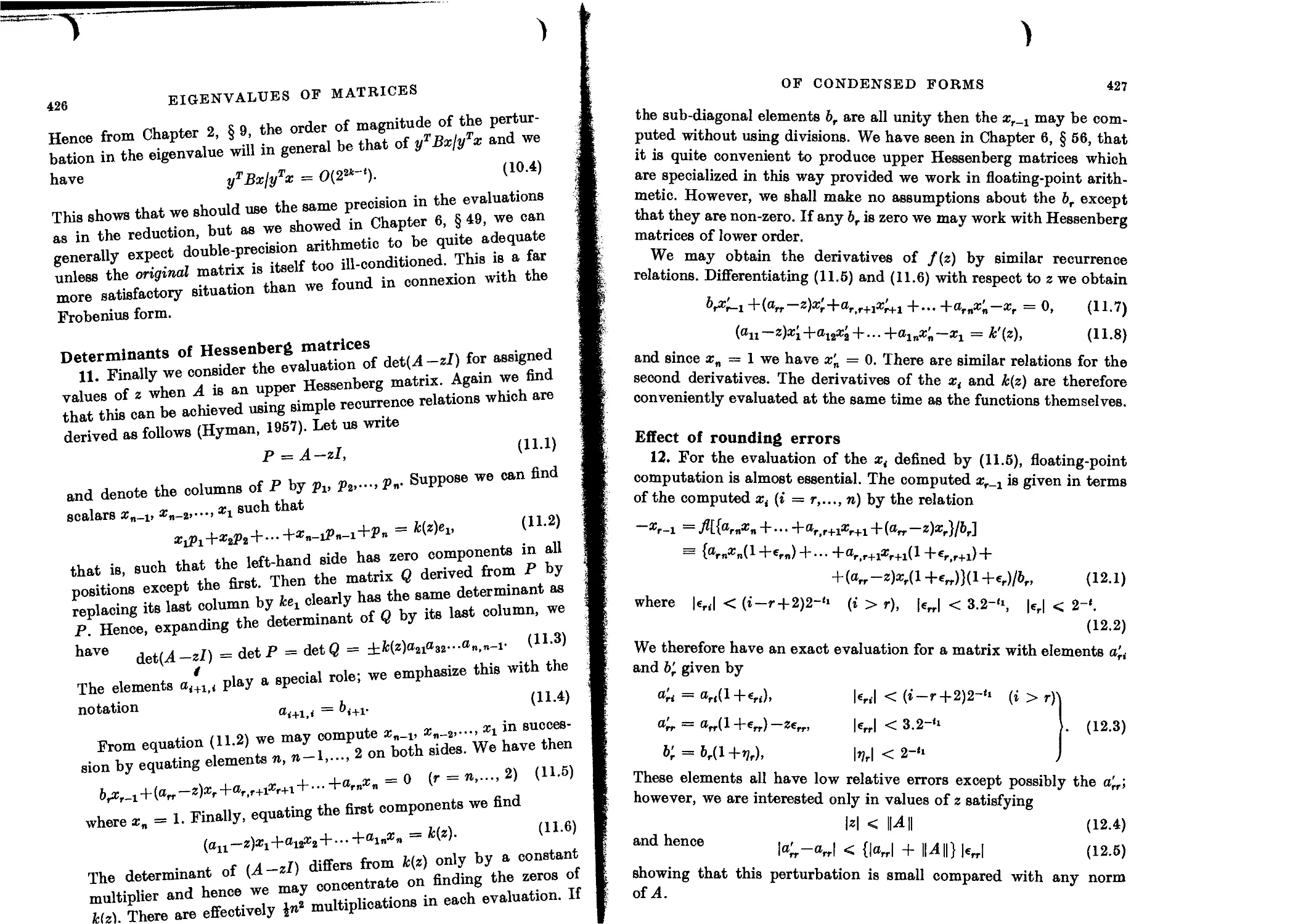

Determinants of Hessenberg matrices 426

Effect of rounding errors 427

Floating-point accumulation 428

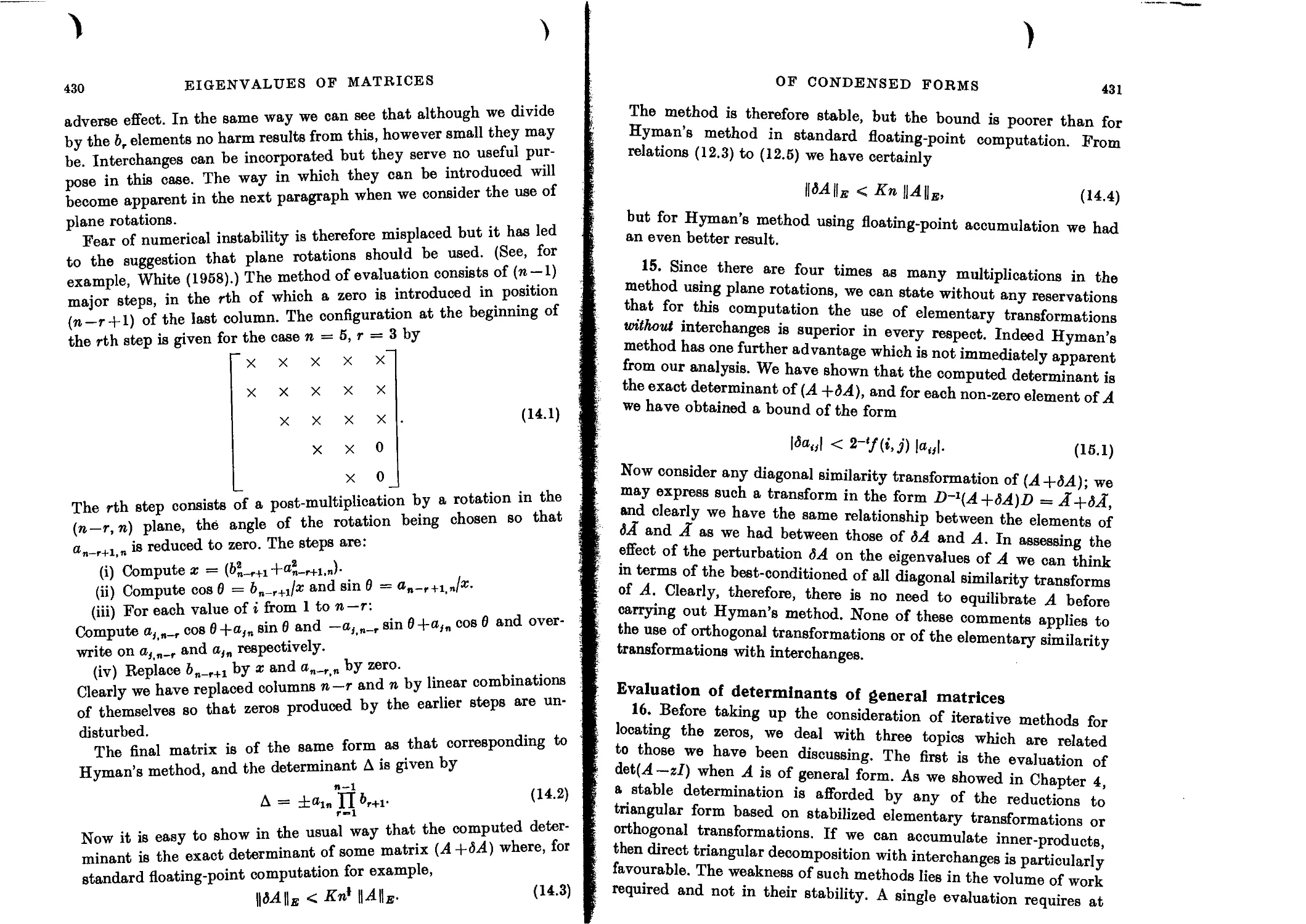

Evaluation by orthogonal transformations 429

xvi CONTENTS

Evaluation of determinants of general matrices 431

The generalized eigenvalue problem 432

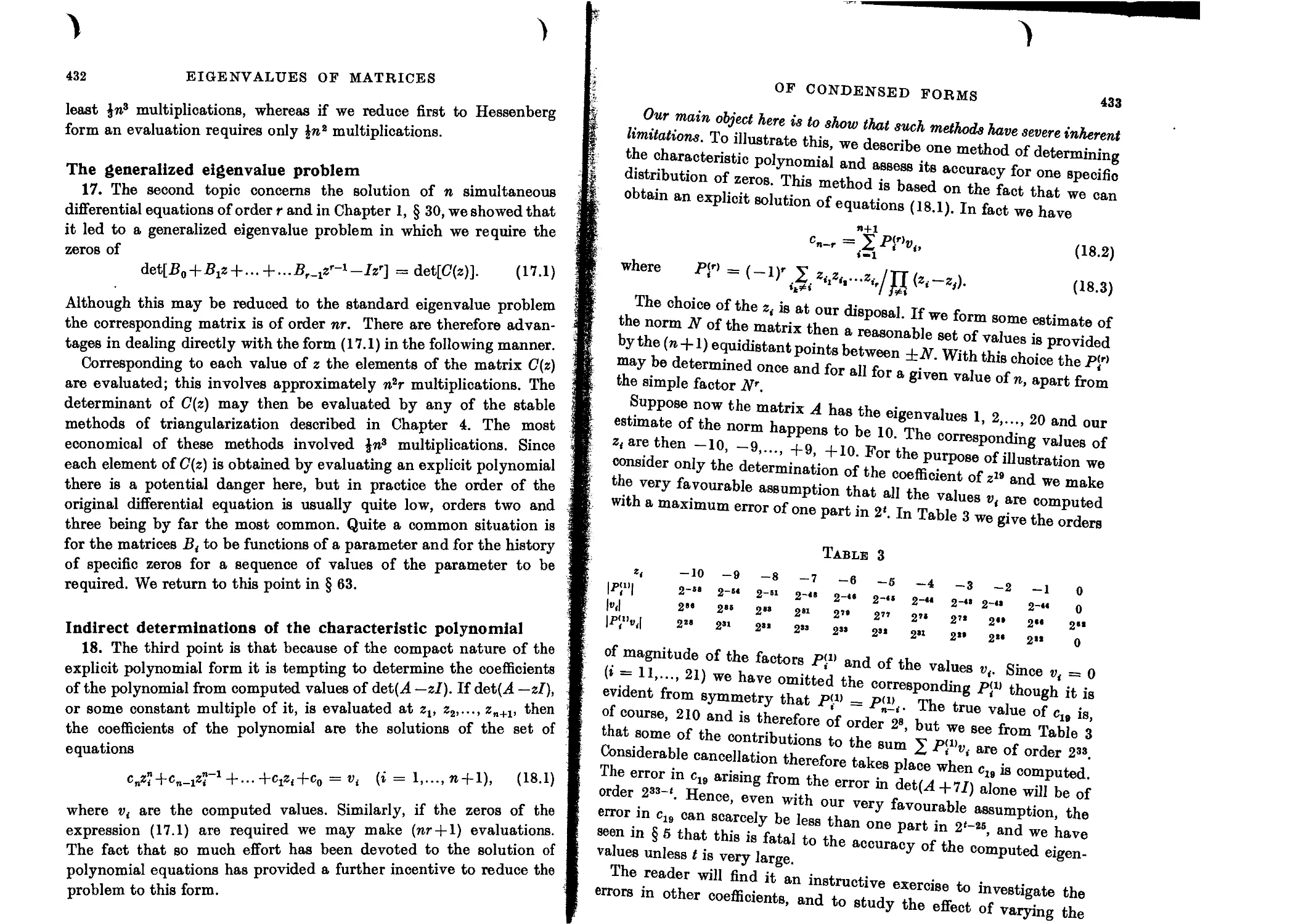

Indirect determinations of the characteristic polynomial 432

Le Verrier’s method 434

Iterative methods based on interpolation 435

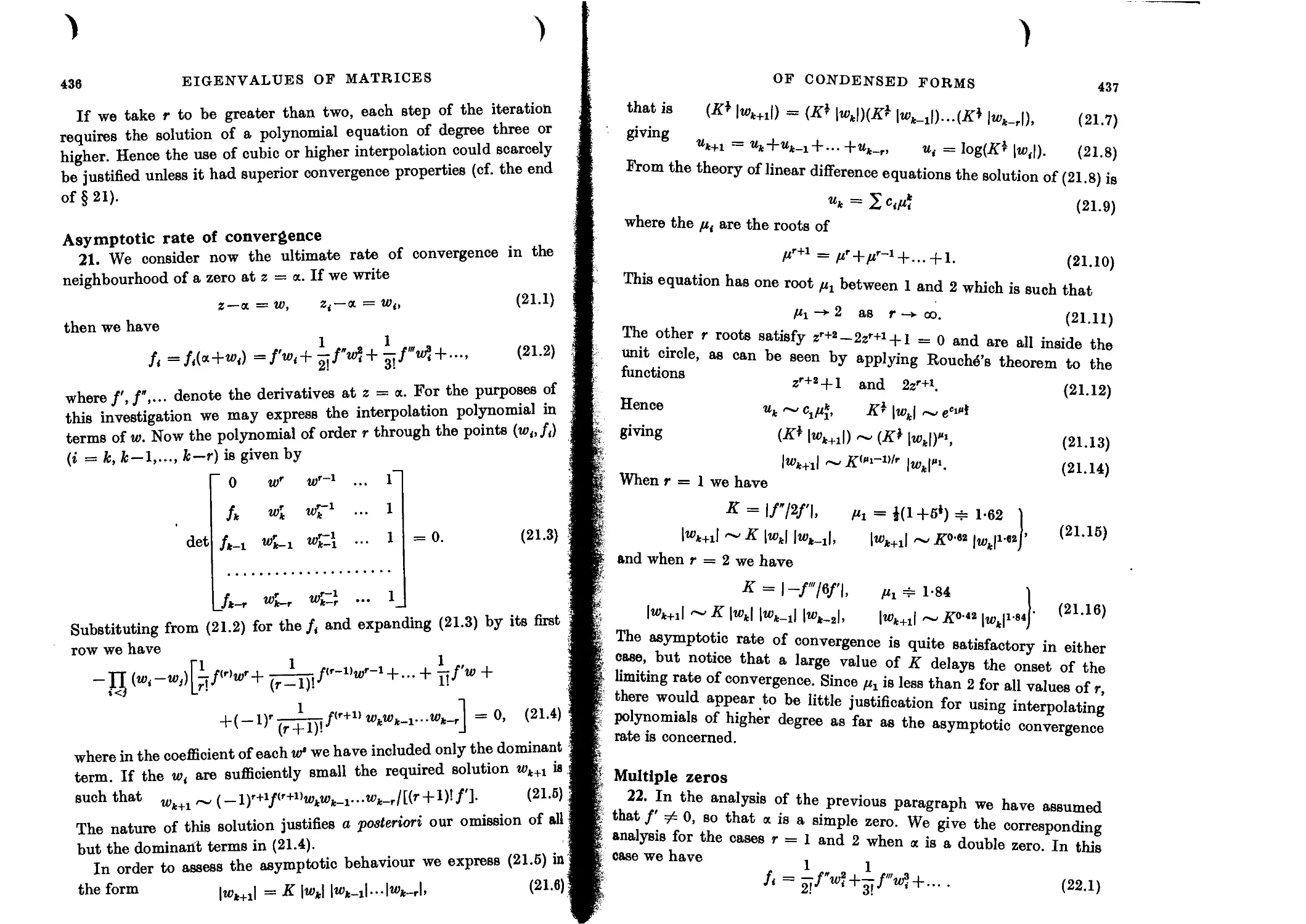

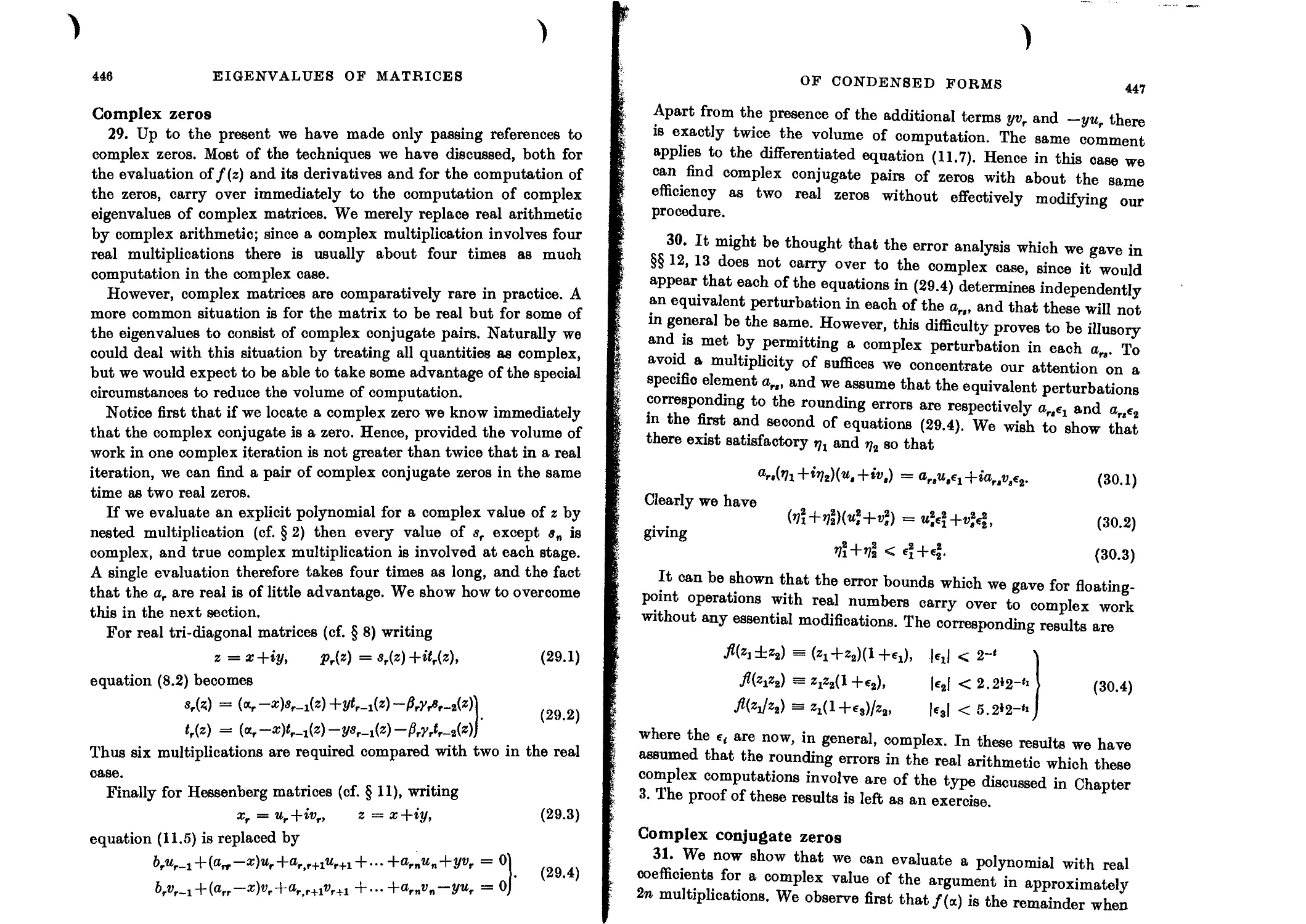

Asymptotic rate of convergence 436

Multiple zeros 437

Inversion of the functional relationship 439

The method of bisection 440

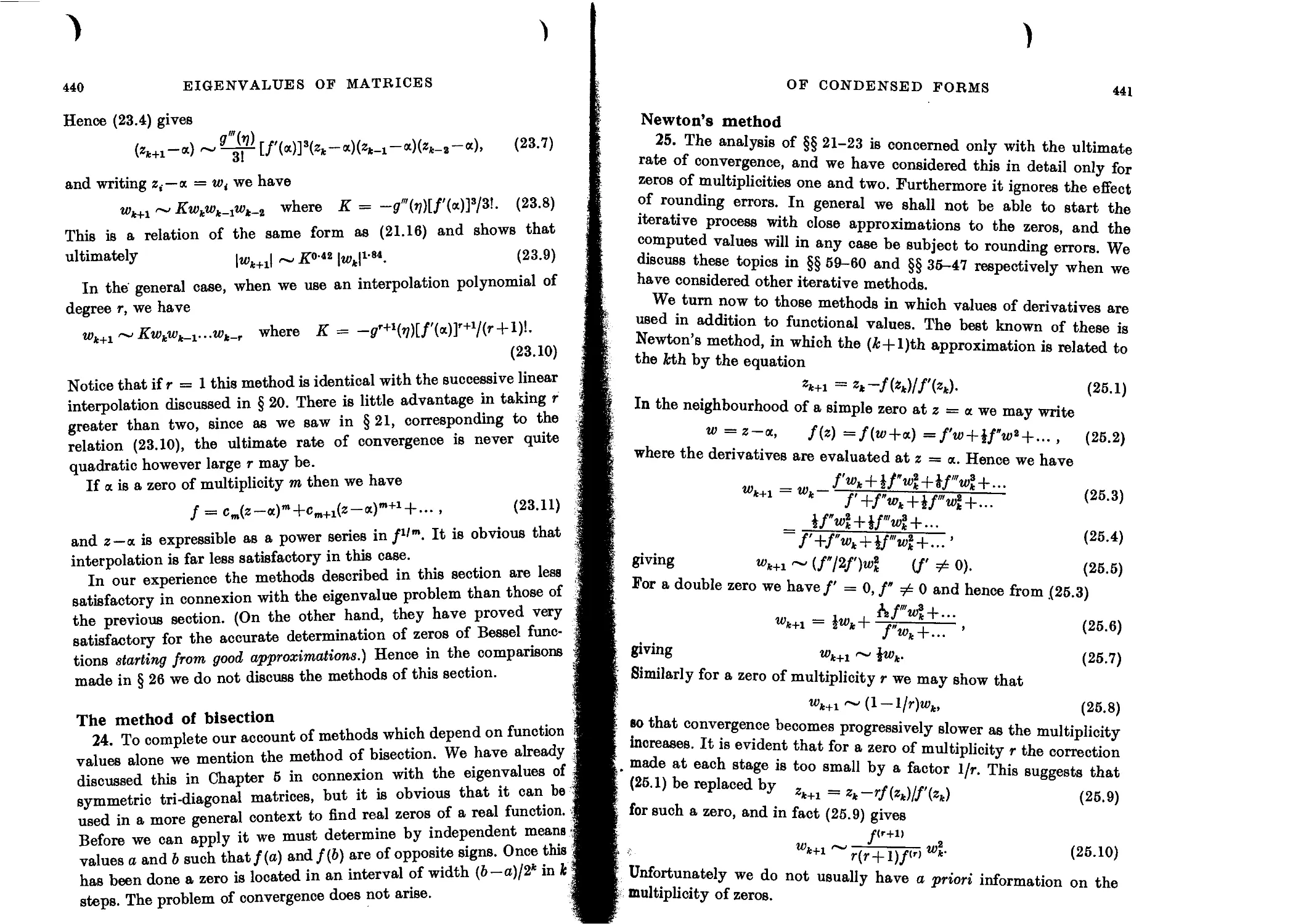

Newton’s method 441

Comparison of Newton’s method with interpolation 442

Methods giving cubic convergence 443

Laguerre’s method 443

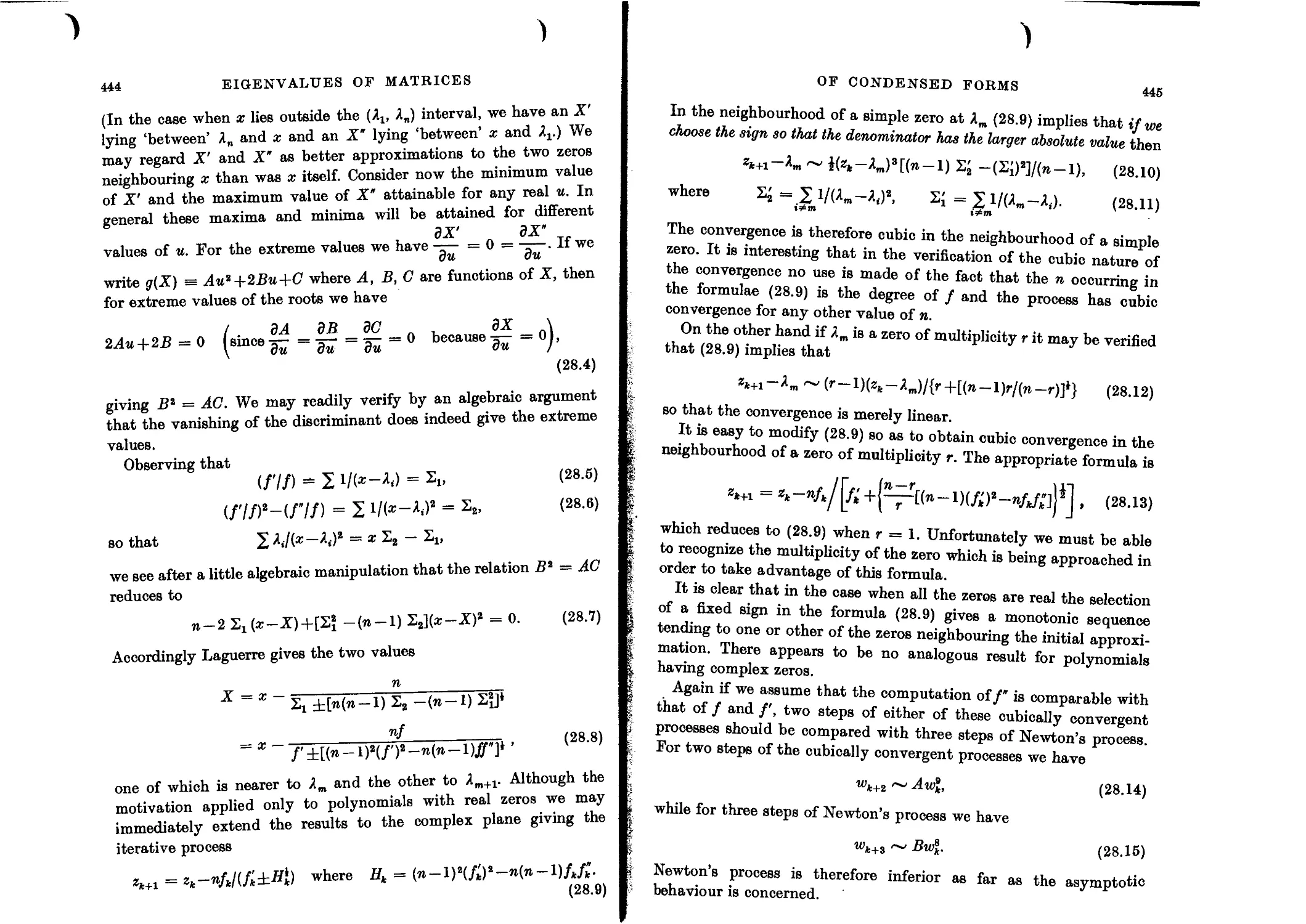

Complex zeros 446

Complex conjugate zeros 447

Bairstow’s method 449

The generalized Bairstow method 450

Practical considerations 452

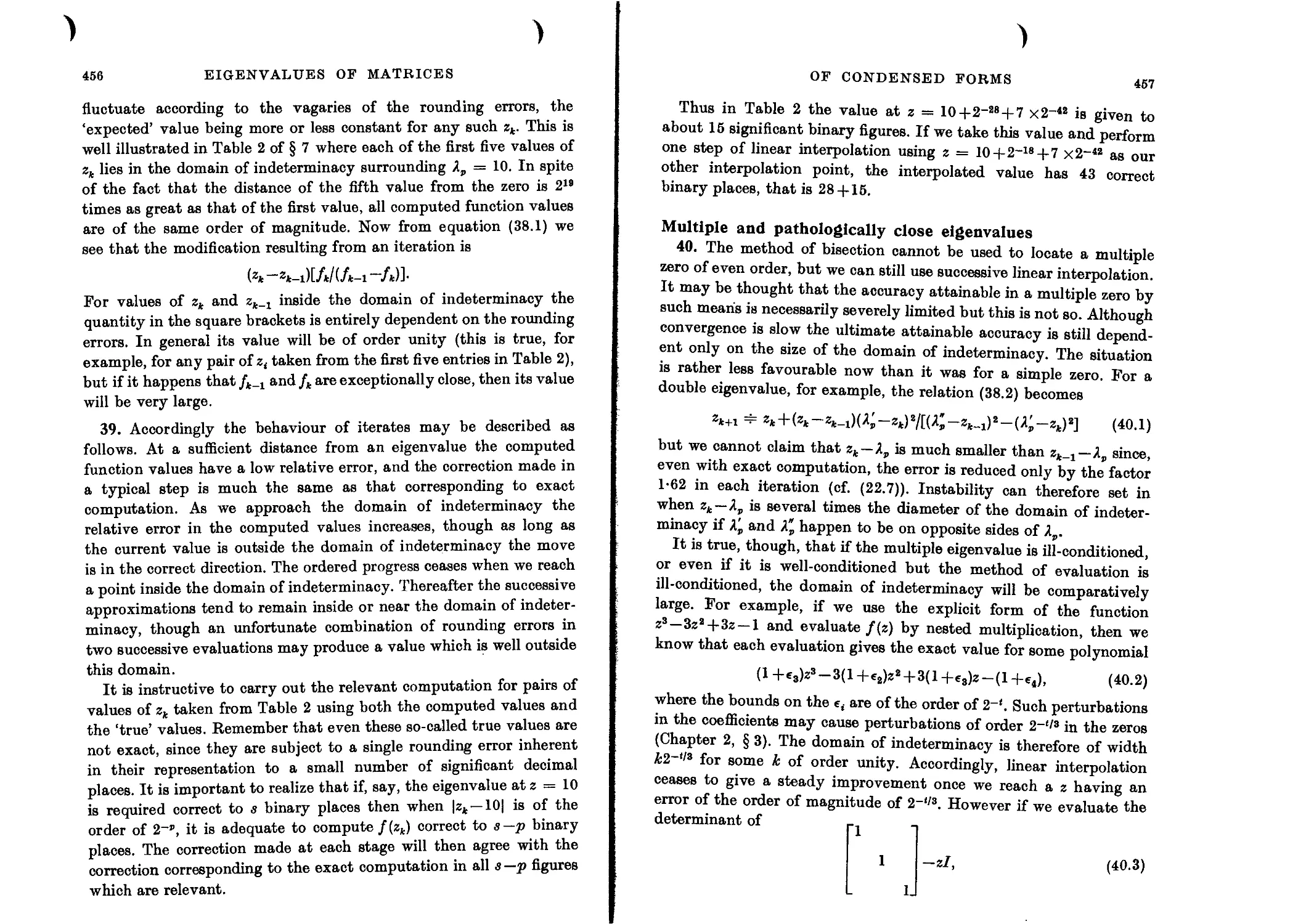

Effect of rounding errors on asymptotic convergence 453

The method of bisection 453

Successive linear interpolation 455

Multiple and pathologically close eigenvalues 457

Other interpolation methods 458

Methods involving the use of a derivative 459

Criterion for acceptance of a zero 461

Effect of rounding errors 462

Suppression of computed zeros 464

Deflation for Hessenberg matrices 465

Deflation of tri-diagonal matrices 468

Deflation by rotations or stabilized elementary transformations 469

Stability of the deflation 472

General comments on deflation 474

Suppression of computed zeros 474

Suppression of computed quadratic factors 475

General comments on the methods of suppression 476

Asymptotic rates of convergence 478

Convergence in the large 478

Complex zeros 481

Recommendations 482

Complex matrices 483

Matrices containing an independent parameter 483

8. THE LR AND QR ALGORITHMS

Introduction 485

Real matrices with complex eigenvalues 486

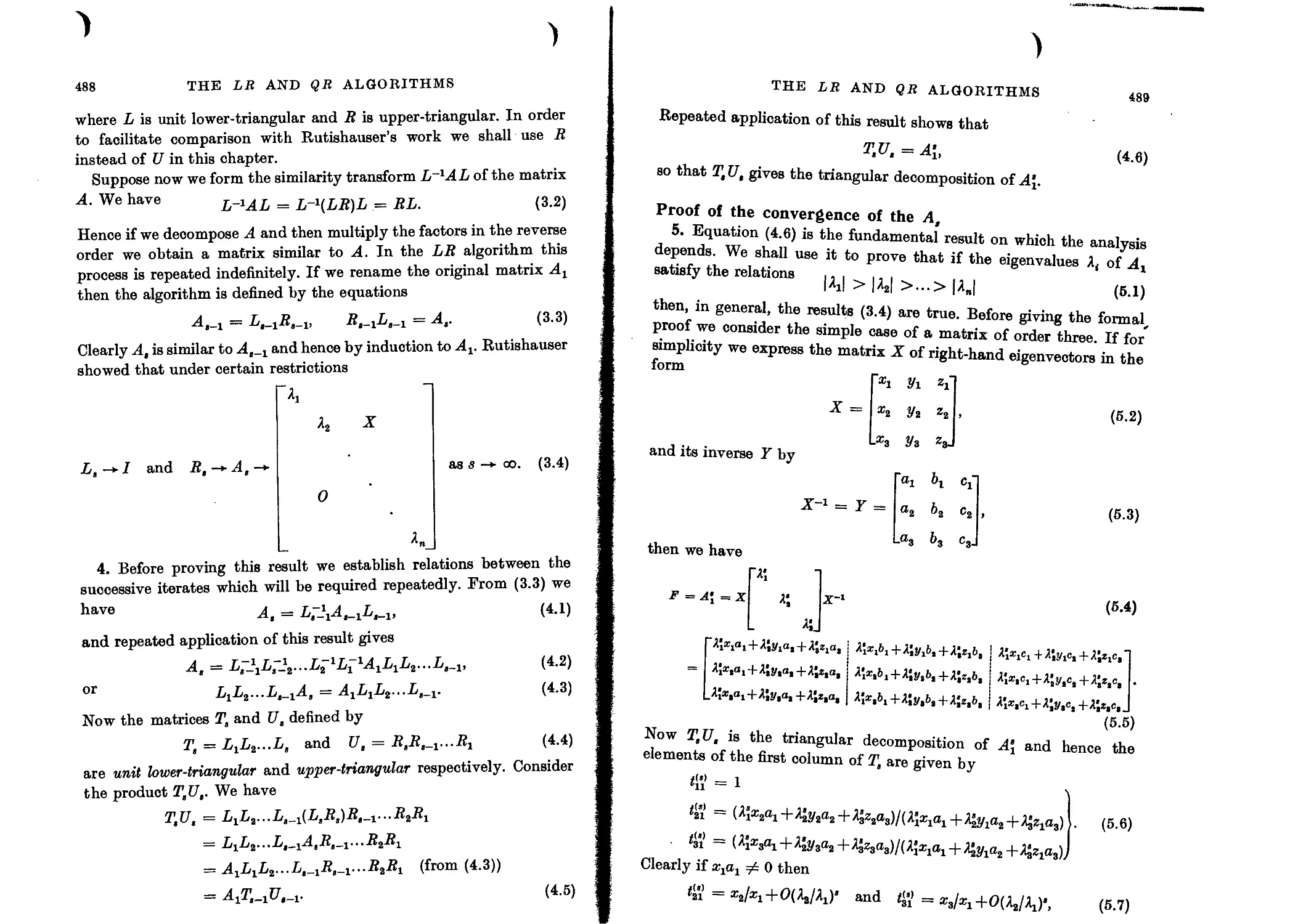

The LR algorithm 487

Proof of the convergence of the A, 489

Positive definite Hermitian matrices 493

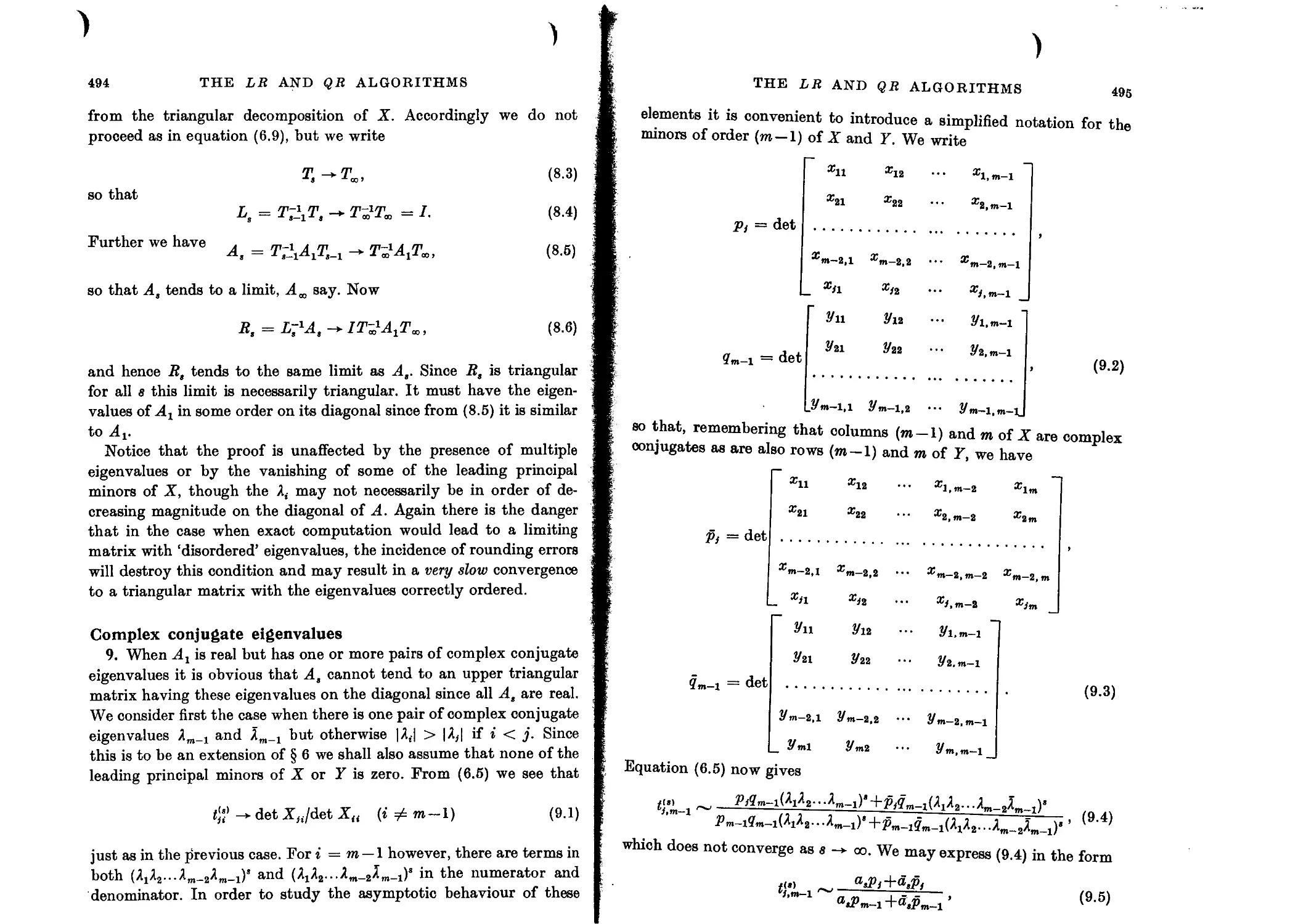

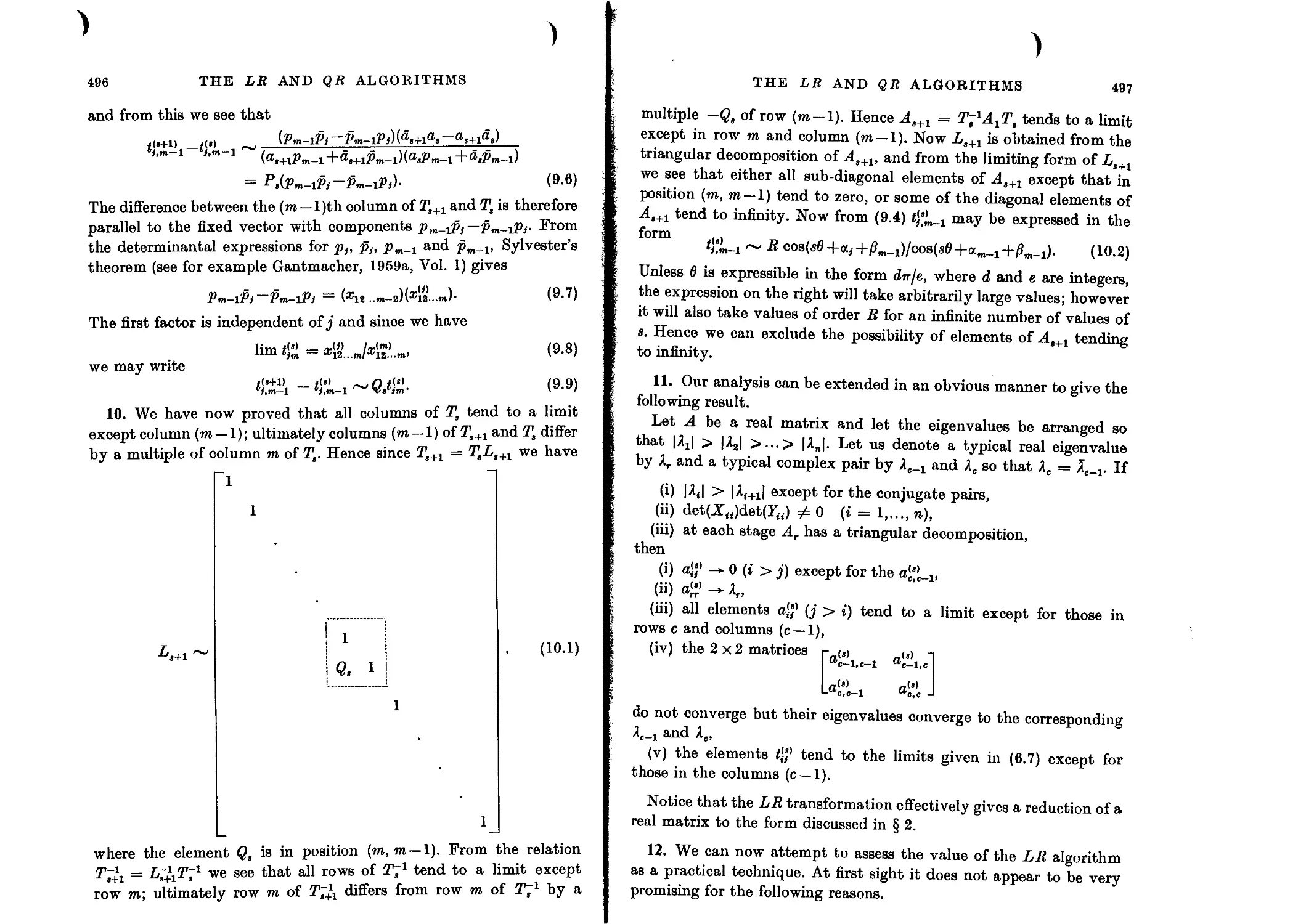

Complex conjugate eigenvalues 494

Introduction of interchanges 498

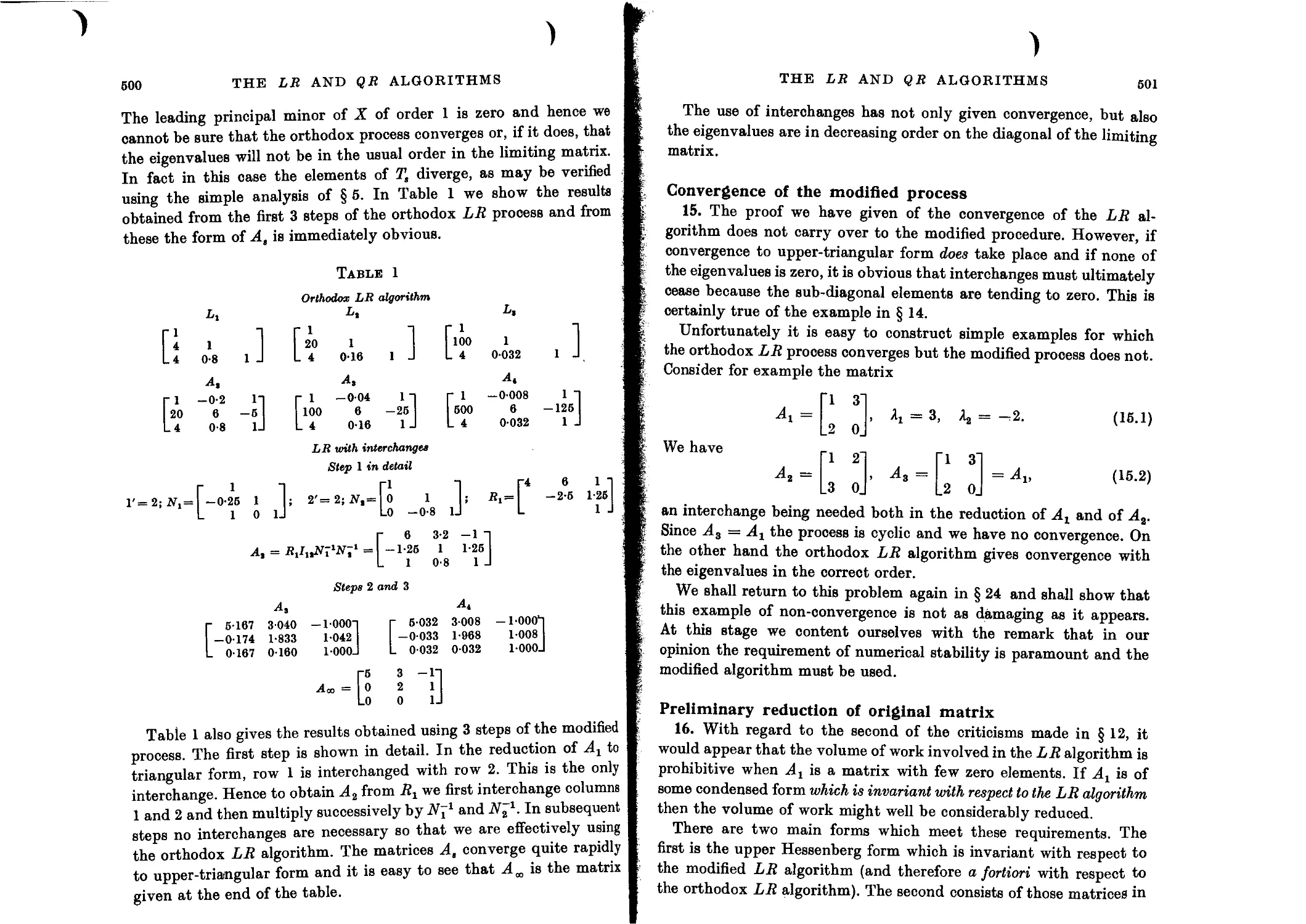

Numerical example 499

Convergence of the modified process 501

CONTENTS xvu

Preliminary reduction of original matrix 501

Invariance of upper Hessenberg form 502

Simultaneous row and column operations 504

Acceleration of convergence 505

Incorporation of shifts of origin 506

Choice of shift of origin 507

Deflation of the matrix 509

Practical experience of convergence 510

Improved shift strategy 511

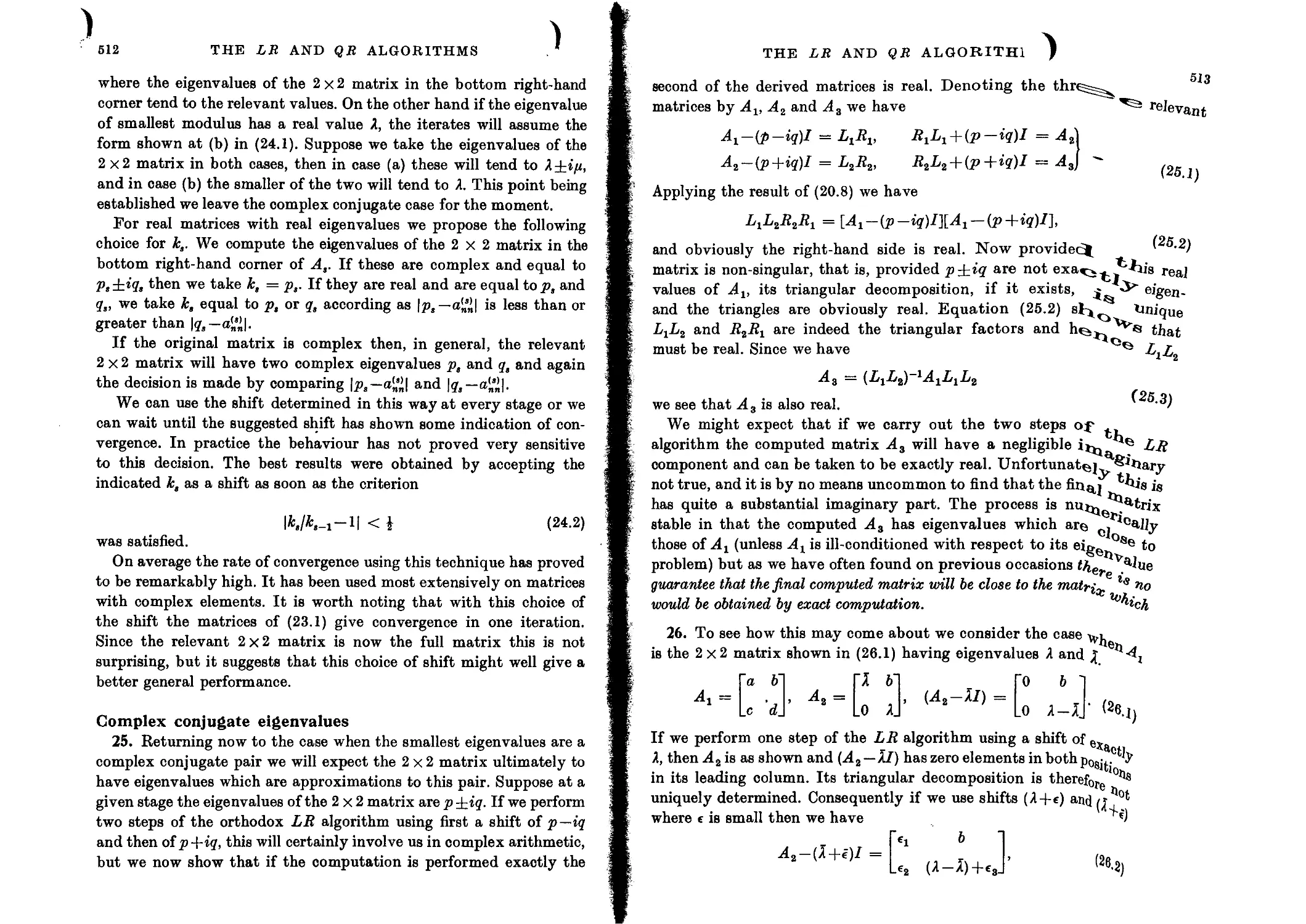

Complex conjugate eigenvalues 512

Criticisms of the modified LR algorithm 515

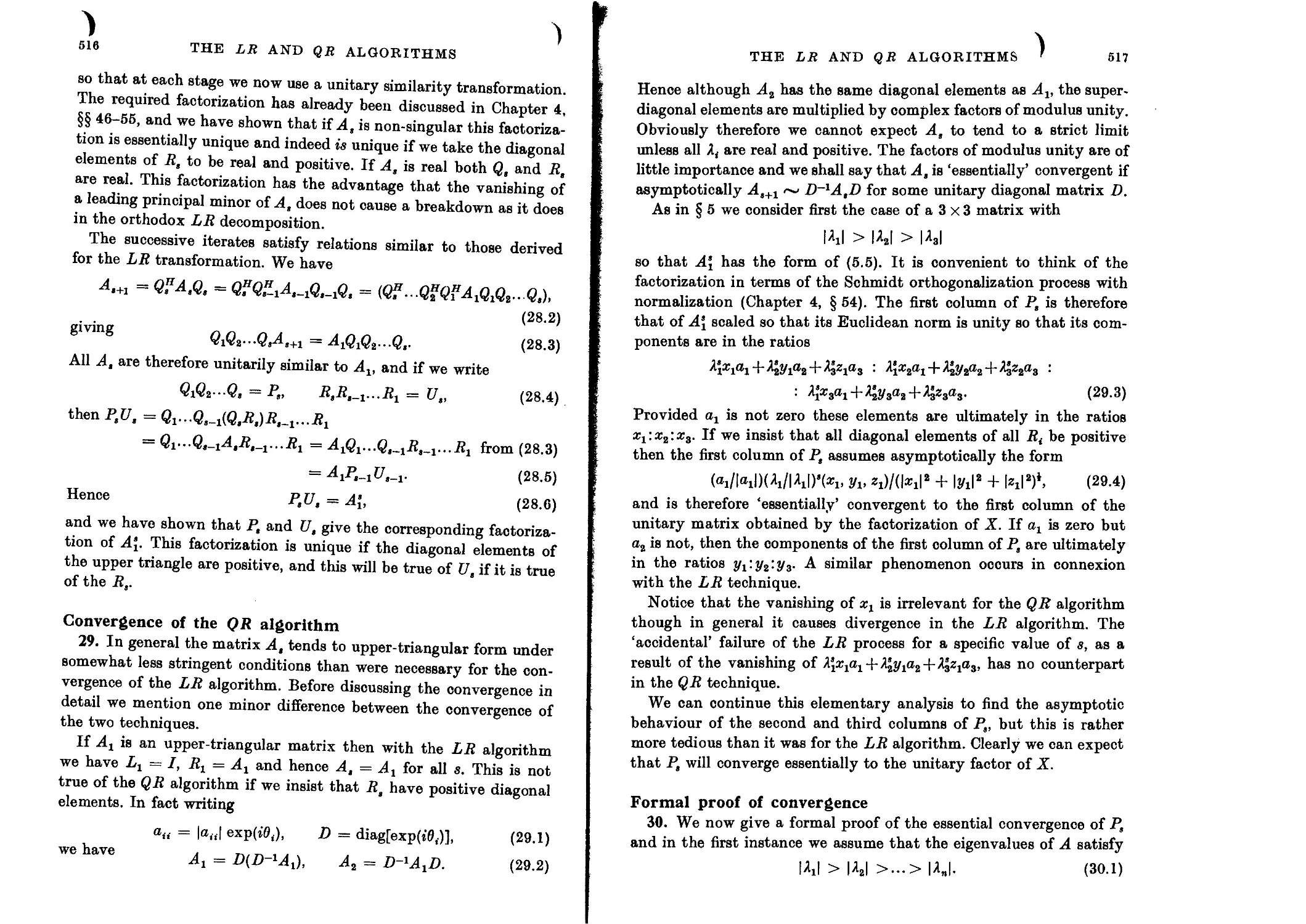

The QR algorithm 515

Convergence of the QR algorithm 516

Formal proof of convergence 517

Disorder of the eigenvalues 519

Eigenvalues of equal modulus 520

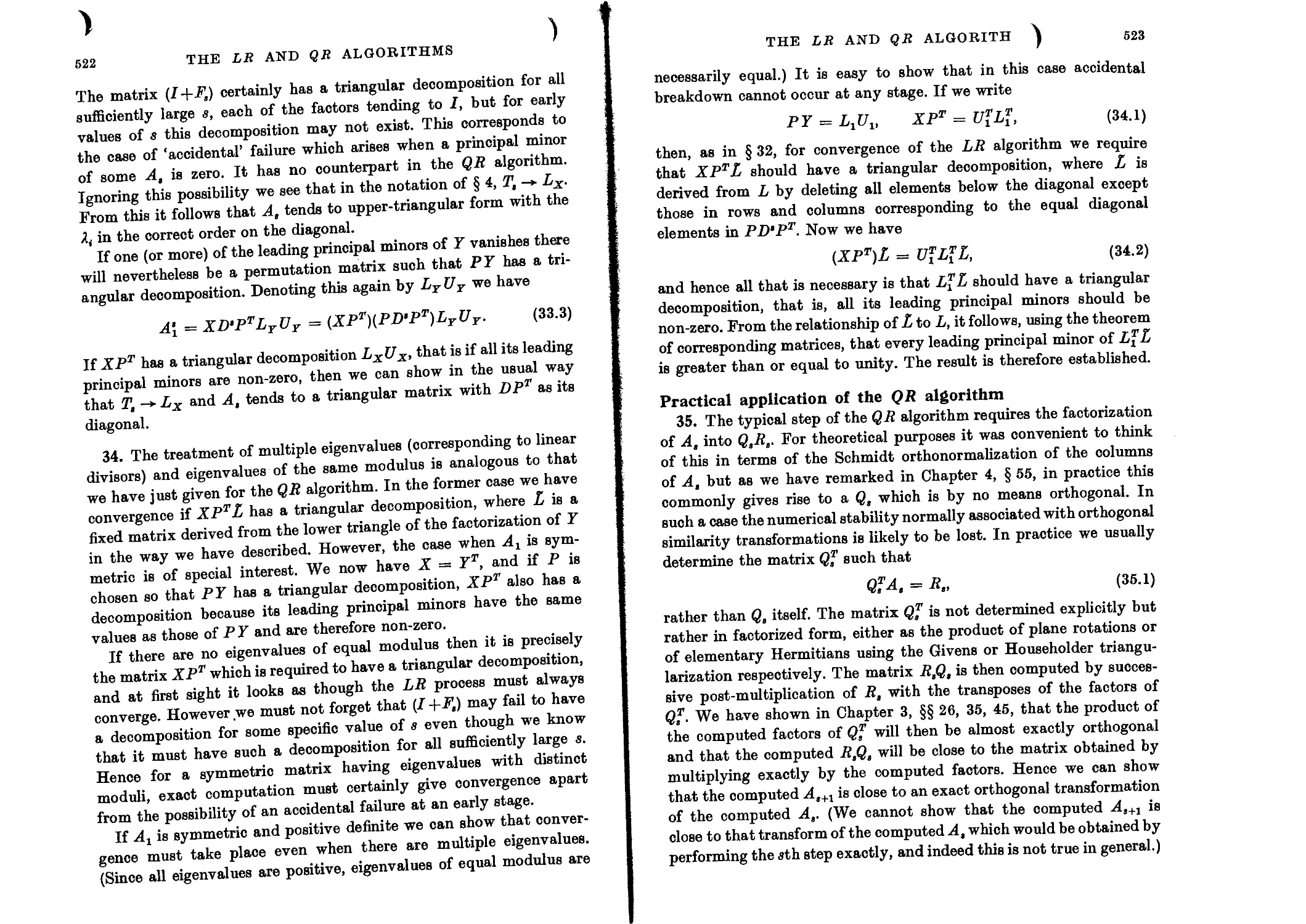

Alternative proof for the LR technique 521

Practical application of the QR algorithm 523

Shifts of origin 524

Decomposition of A, 525

Numerical example 527

Practical procedure 527

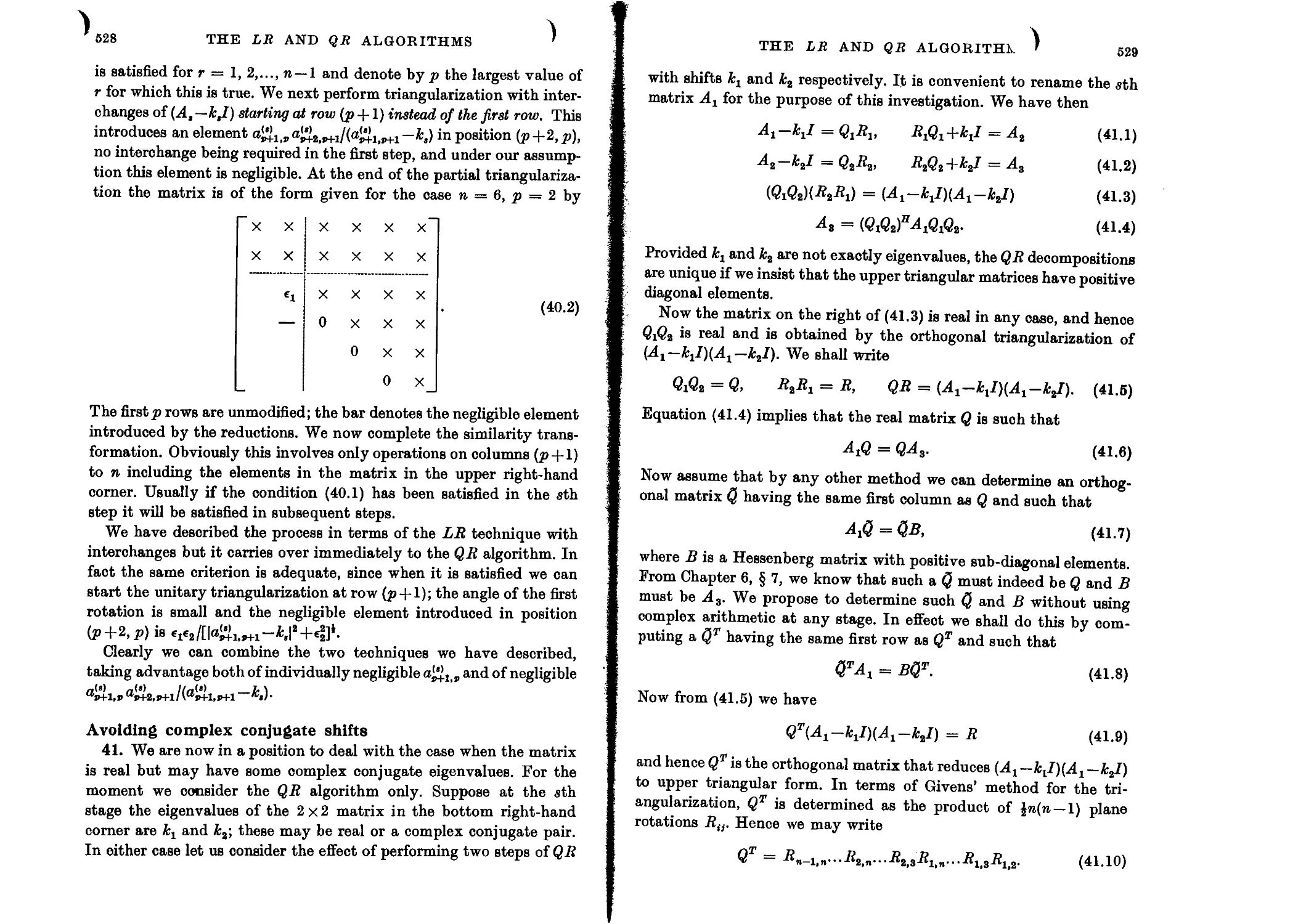

Avoiding complex conjugate shifts 528

Double QR step using elementary Hermitians 532

Computational details 534

Decomposition of As 535

Double-shift technique for LR 537

Assessment of LR and QR algorithms 538

Multiple eigenvalues 540

Special use of the deflation process 543

Symmetric matrices 544

Relationship between LR and QR algorithms 545

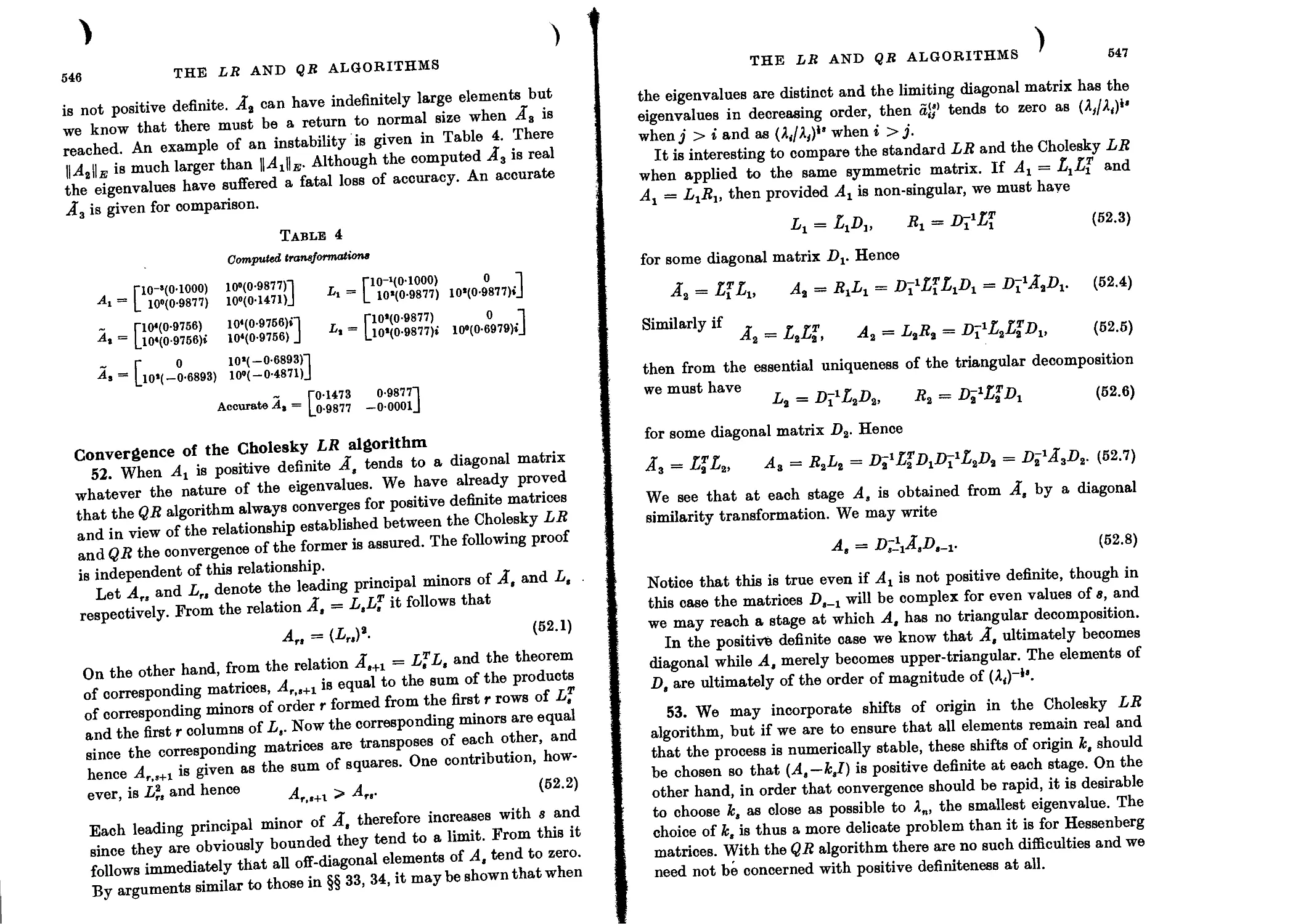

Convergence of the Cholesky LR algorithm 546

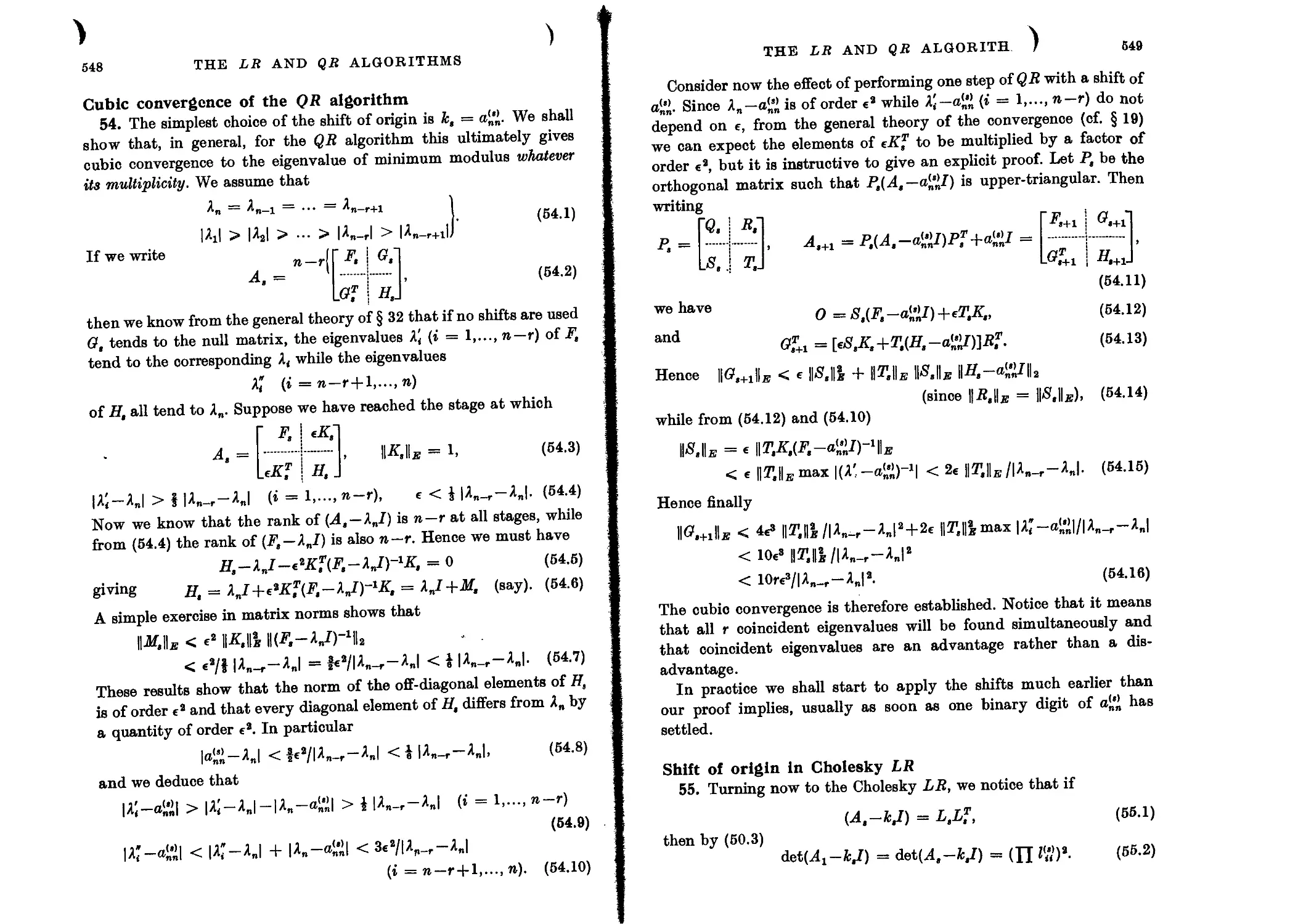

Cubic convergence of the QR algorithm 548

Shift of origin in Cholesky LR 549

Failure of the Cholesky decomposition 550

Cubically convergent LR process 551

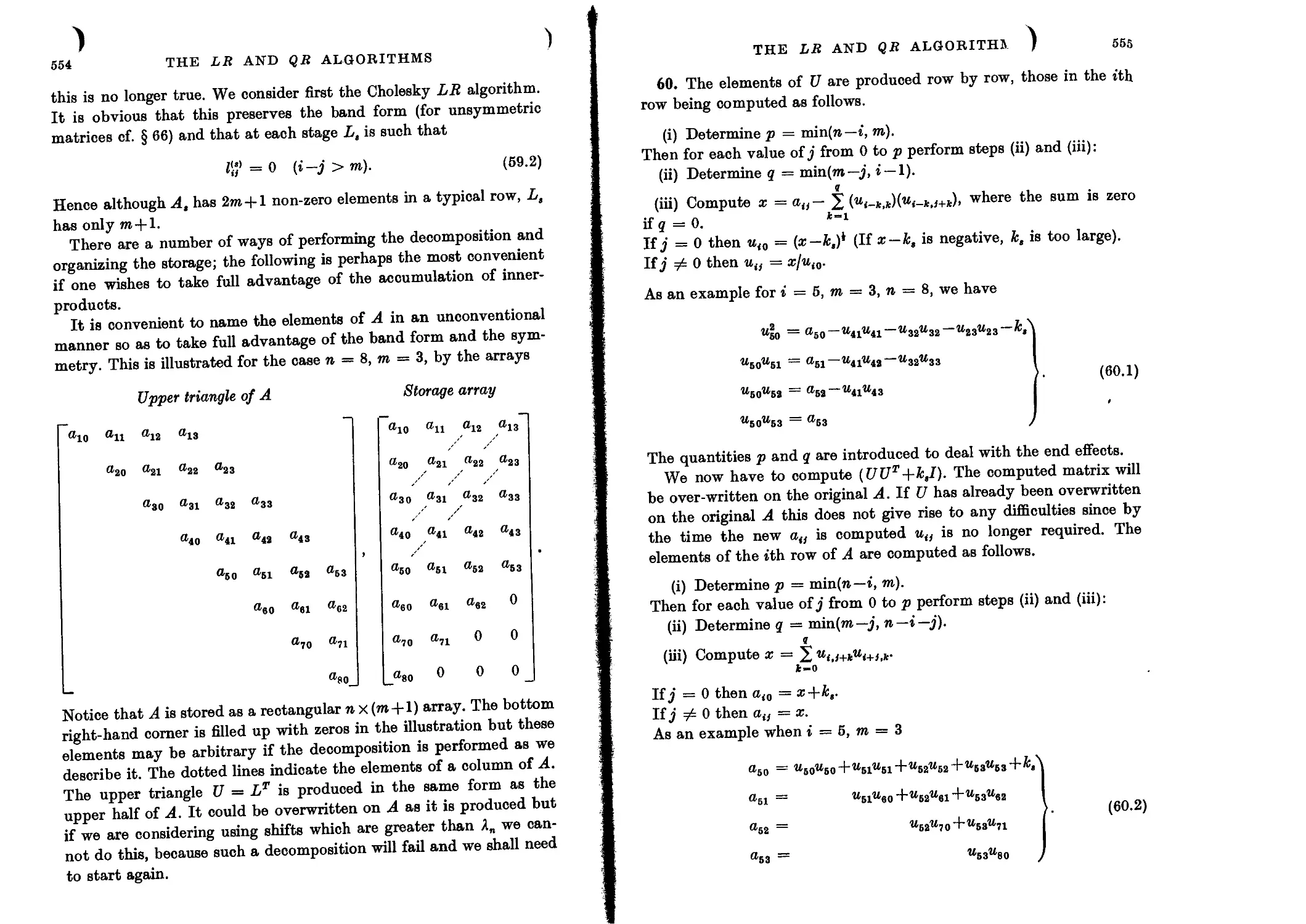

Band matrices 553

QR decomposition of a band matrix 557

Error analysis 561

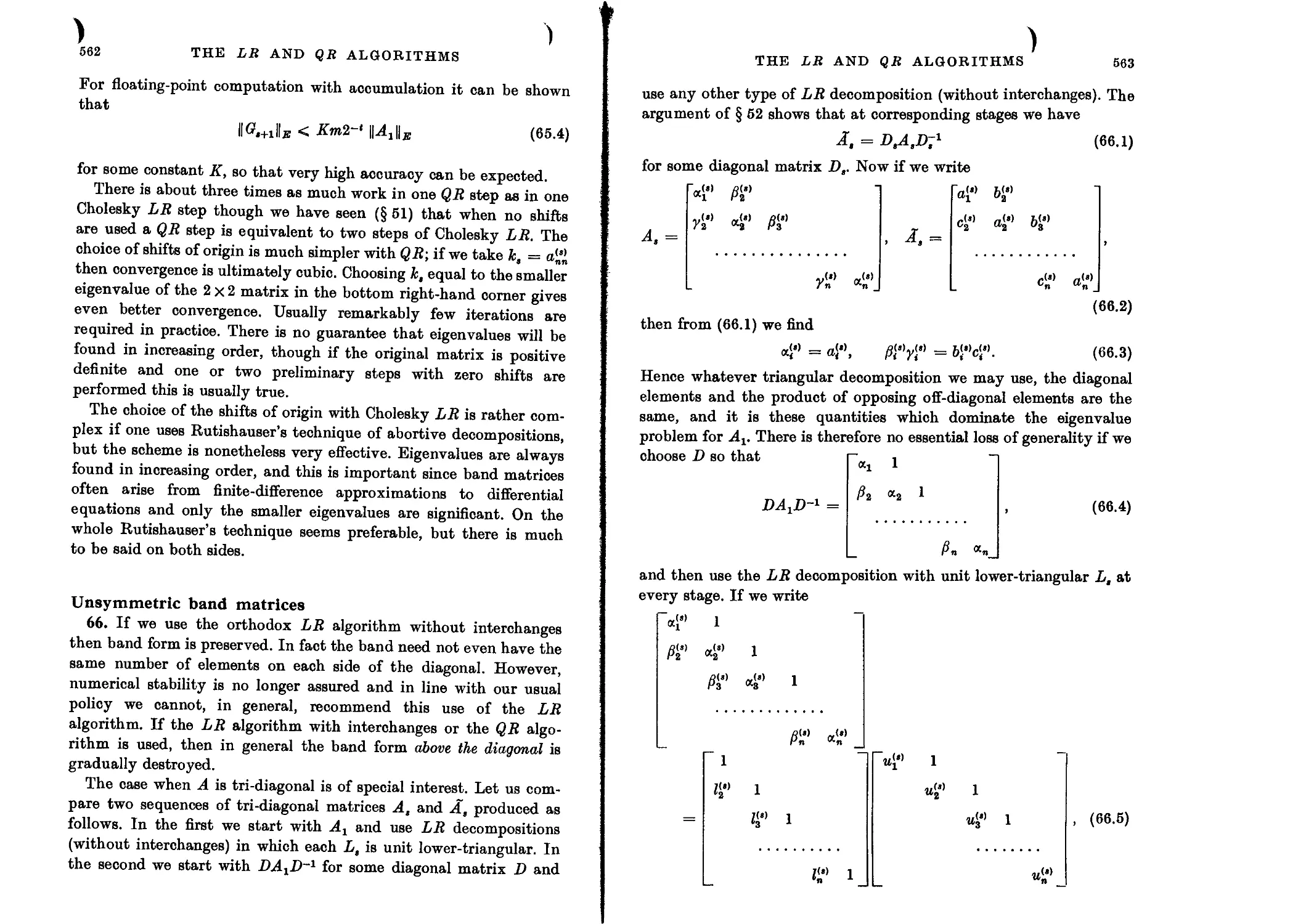

Unsymmetric band matrices 562

Simultaneous decomposition and recombination in QR algorithm 565

Reduction of band width 567

9. ITERATIVE METHODS

Introduction 570

The power method 570

Direct iteration with a single vector 571

Shift of origin 572

Effect of rounding errors 573

Variation of p 576

Ad hoc choice of p 577

xviii

CONTENTS

Aitken’s acceleration technique 578

Complex conjugate eigenvalues 579

Calculation of the complex eigenvector 581

Shift of origin 582

Non-linear divisors 582

Simultaneous determination of several eigenvalues 583

Complex matrices 584

Deflation 584

Deflation based on similarity transformations 585

Deflation using invariant subspaces 587

Deflation using stabilized elementary transformations 587

Deflation using unitary transformations 589

Numerical stability 590

Numerical example 592

Stability of unitary transformations 594

Deflation by non-similarity transformations 596

Genera] reduction using invariant subspaces 599

Practical application 601

Treppen-iteration 602

Accurate determination of complex conjugate eigenvalues 604

Very close eigenvalues 606

Orthogonalization techniques 606

Analogue of treppen-iteration using orthogonalization 607

Bi-iteration 609

Numerical example 610

Richardson’s purification process 614

Matrix squaring 615

Numerical stability 616

Use of Chebyshev polynomials 617

General assessment of methods based on direct iteration 618

Inverse iteration 619

Error analysis of inverse iteration 620

General comments on the analysis 621

Further refinement of eigenvectors 622

Non-linear elementary divisors 626

Inverse iteration with Hessenberg matrices 626

Degenerate cases 627

Inverse iteration with band matrices 628

Complex conjugate eigenvectors 629

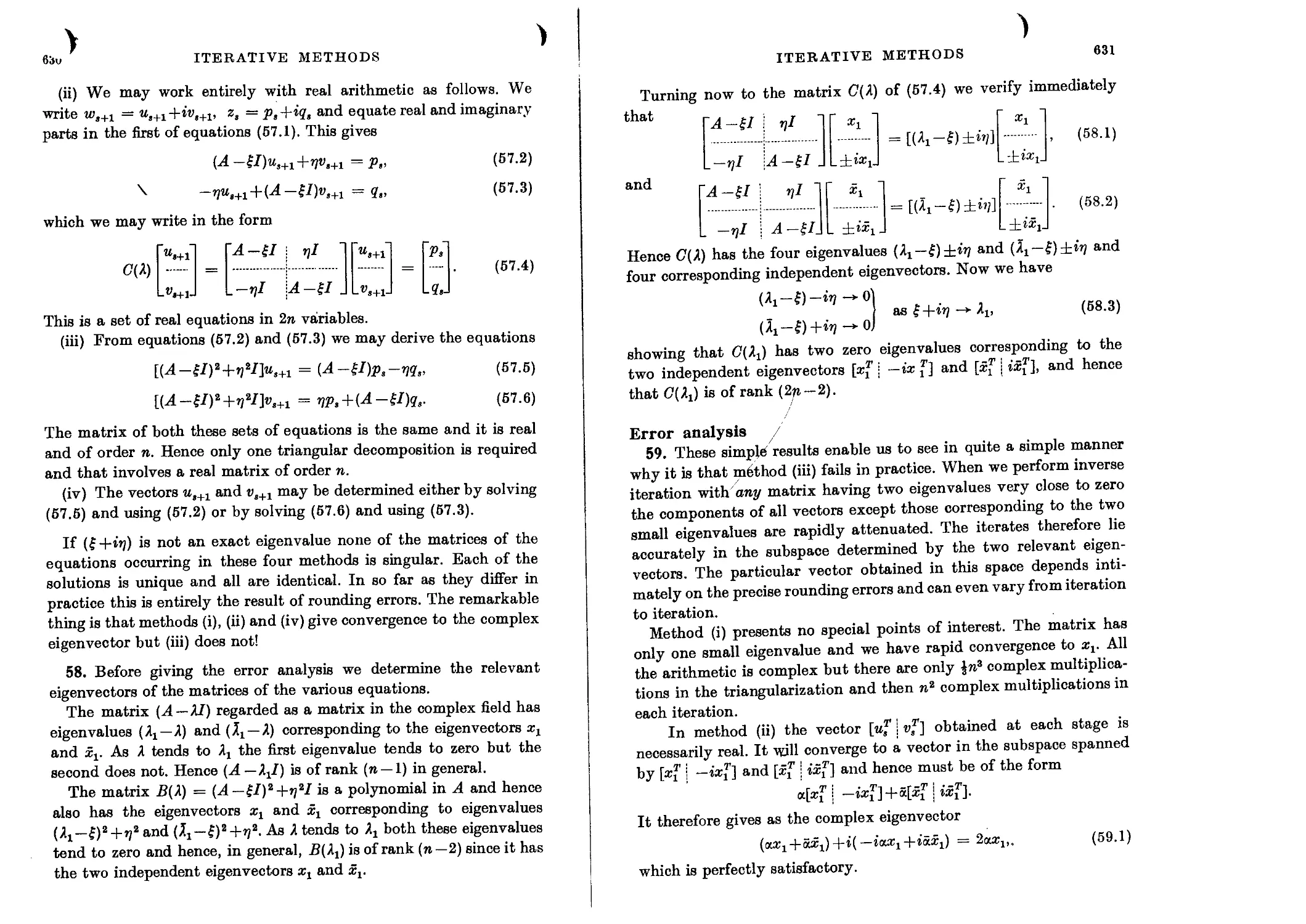

Error analysis 631

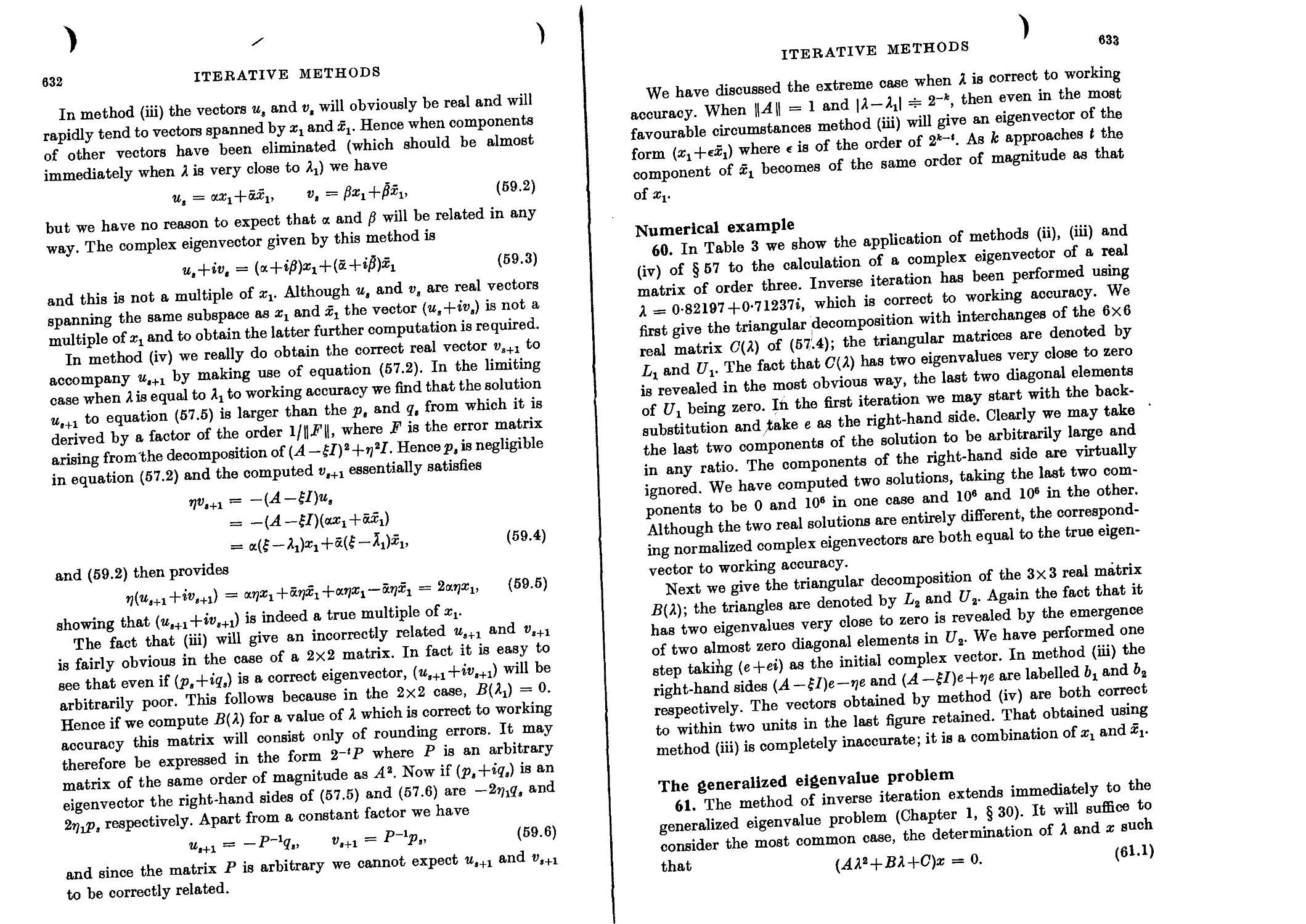

Numerical example 633

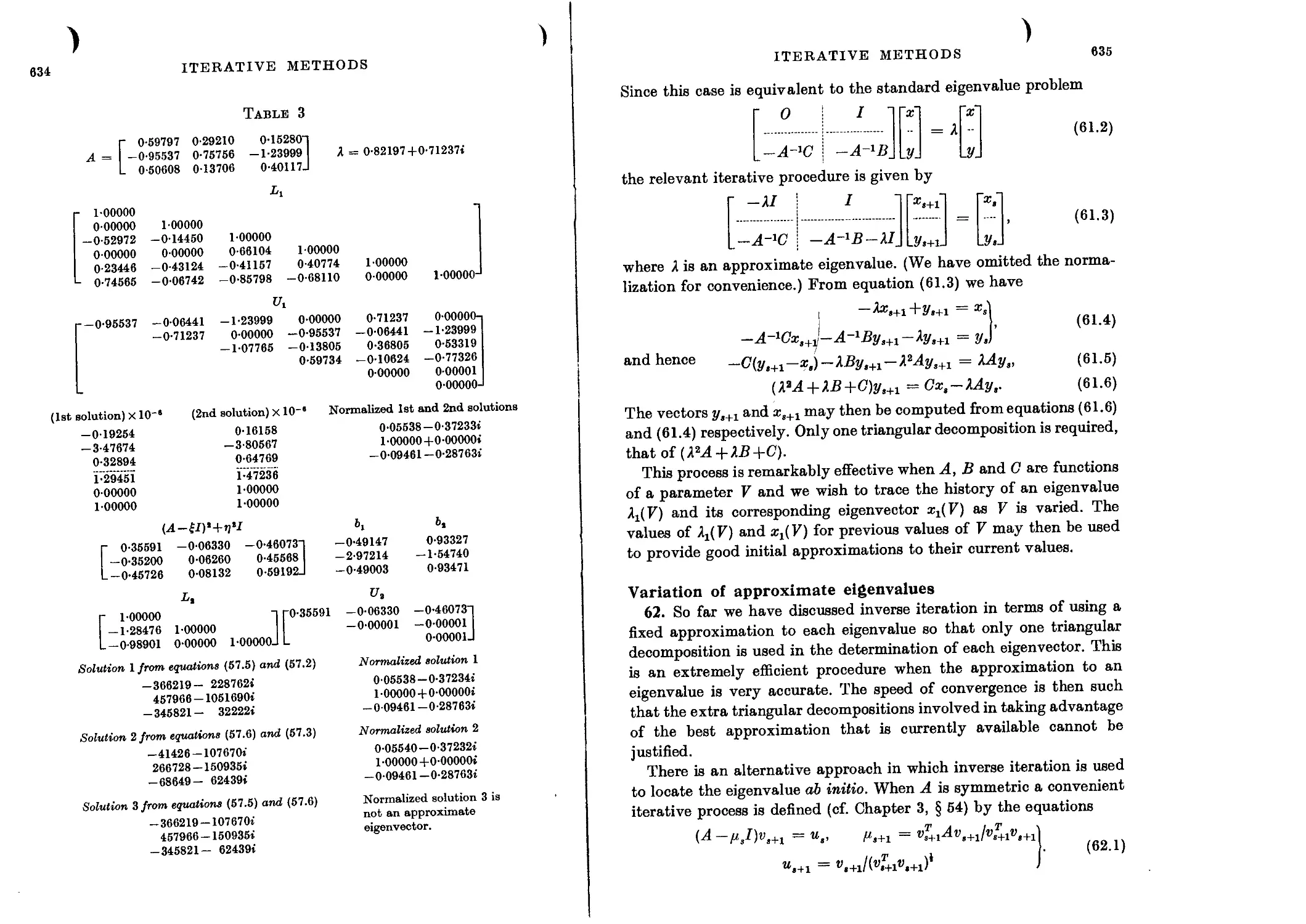

The generalized eigenvalue problem 633

Variation of approximate eigenvalues 635

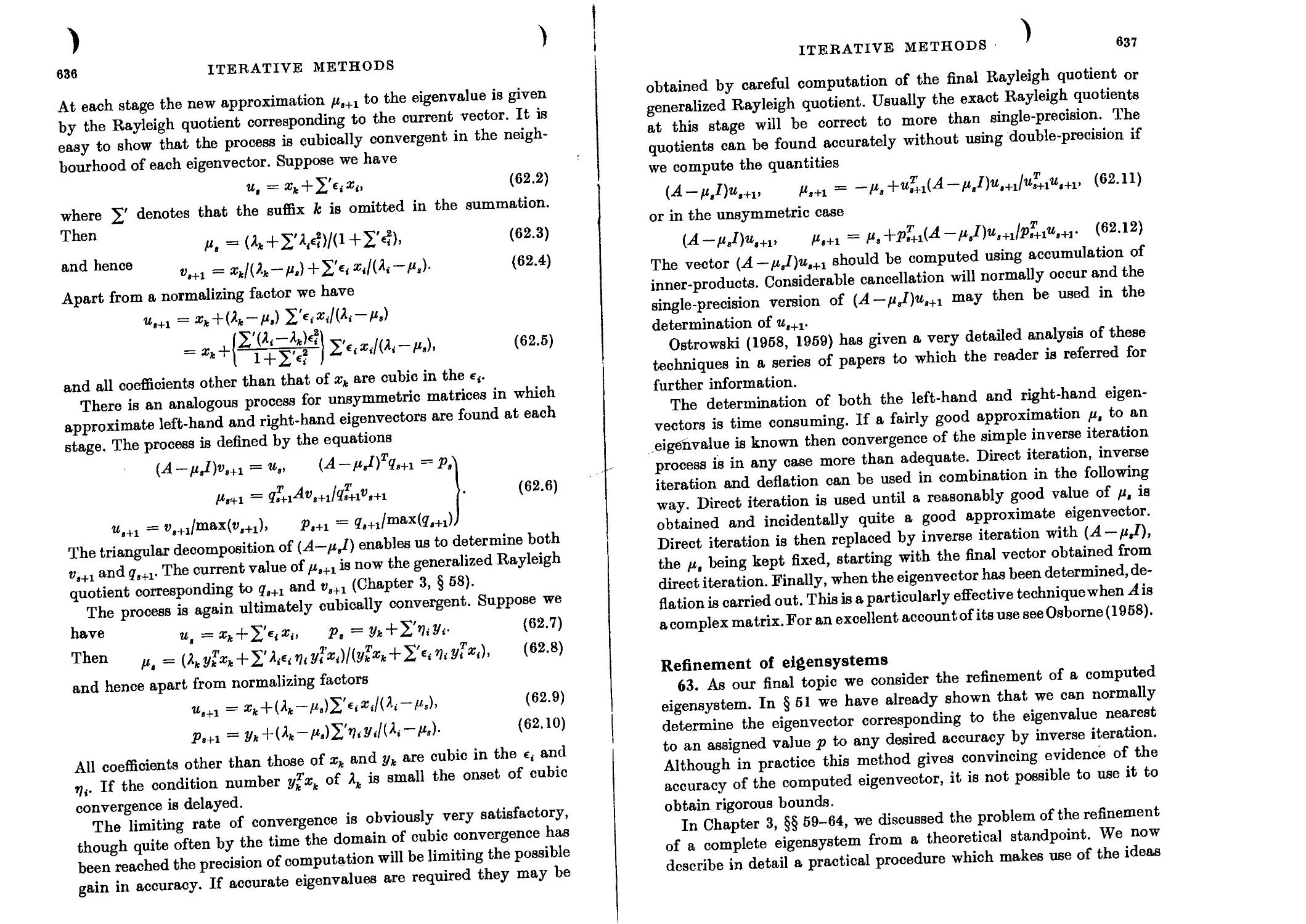

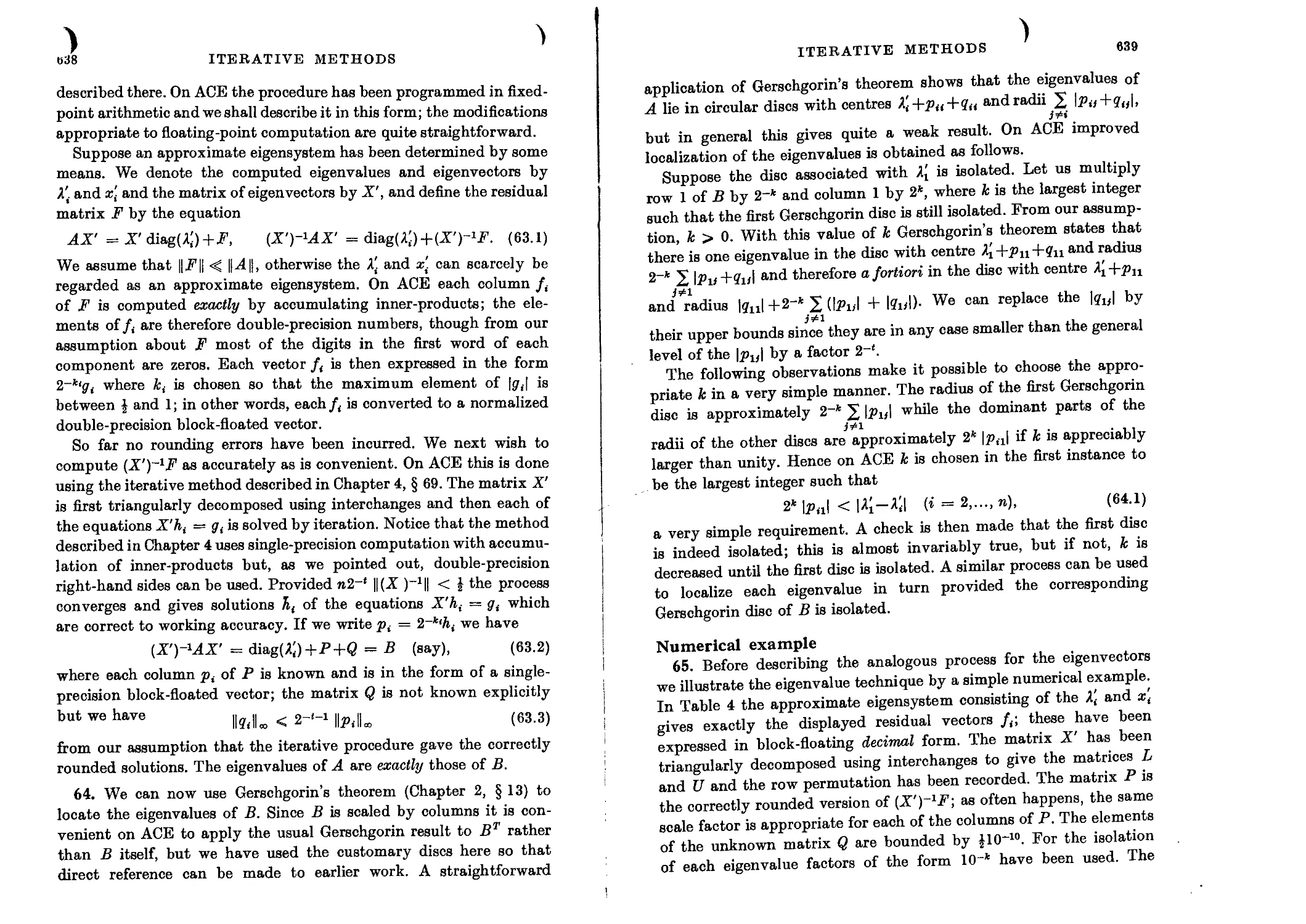

Refinement of eigensystems 637

Numerical example 639

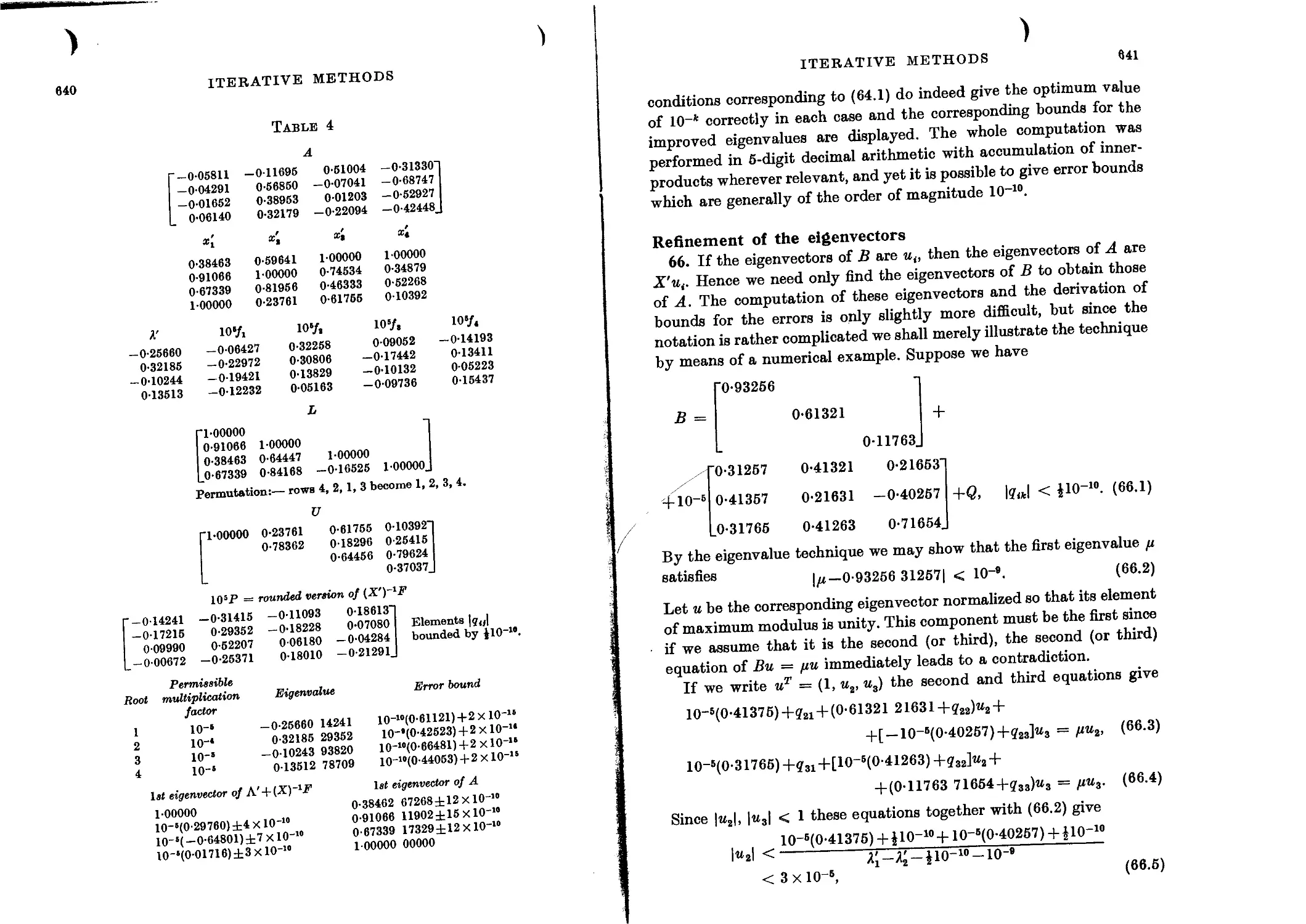

Refinement of the eigenvectors 641

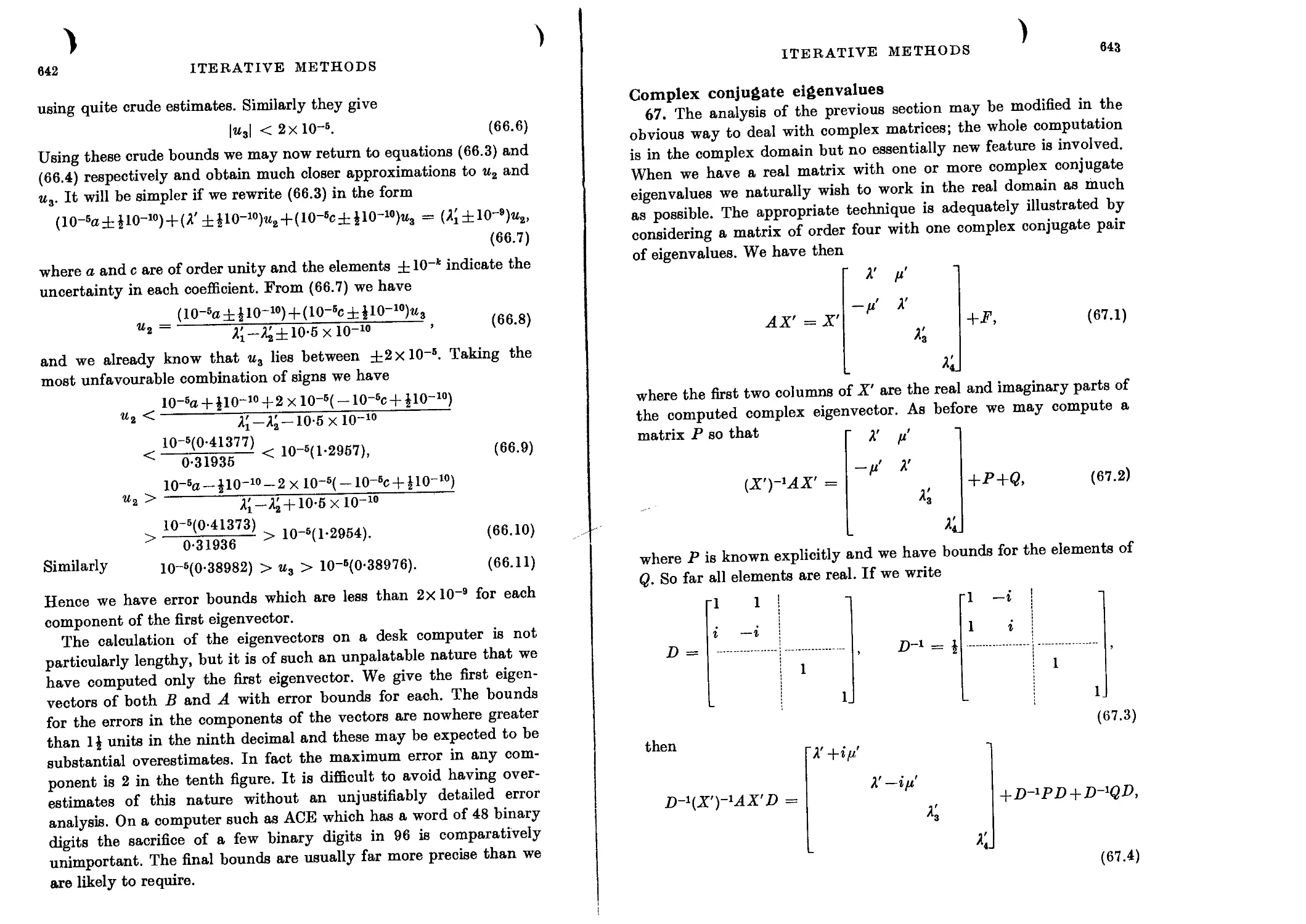

Complex conjugate eigenvalues 643

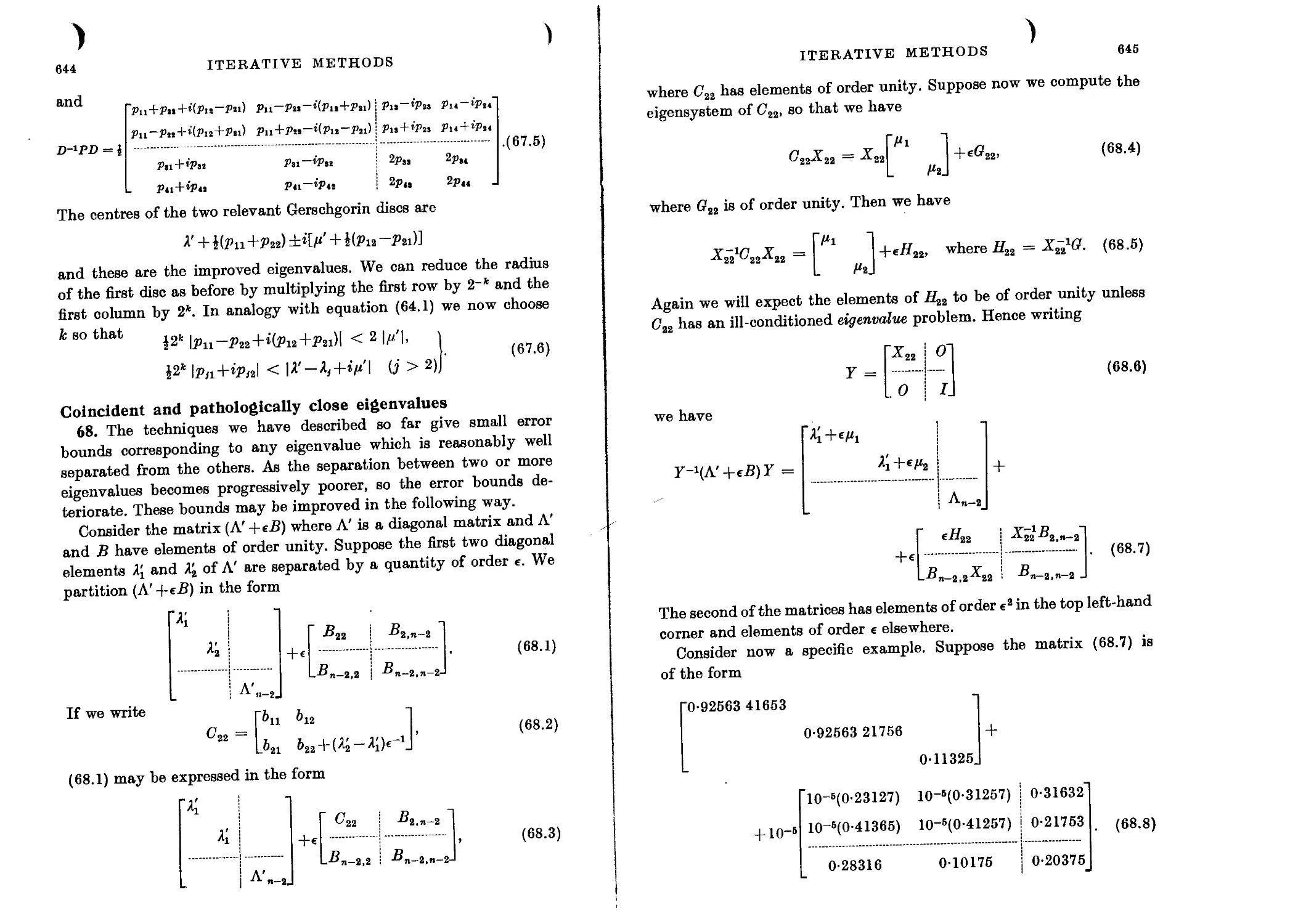

Coincident and pathologically close eigenvalues 644

Comments on the ACE programmes 646

BIBLIOGRAPHY 649

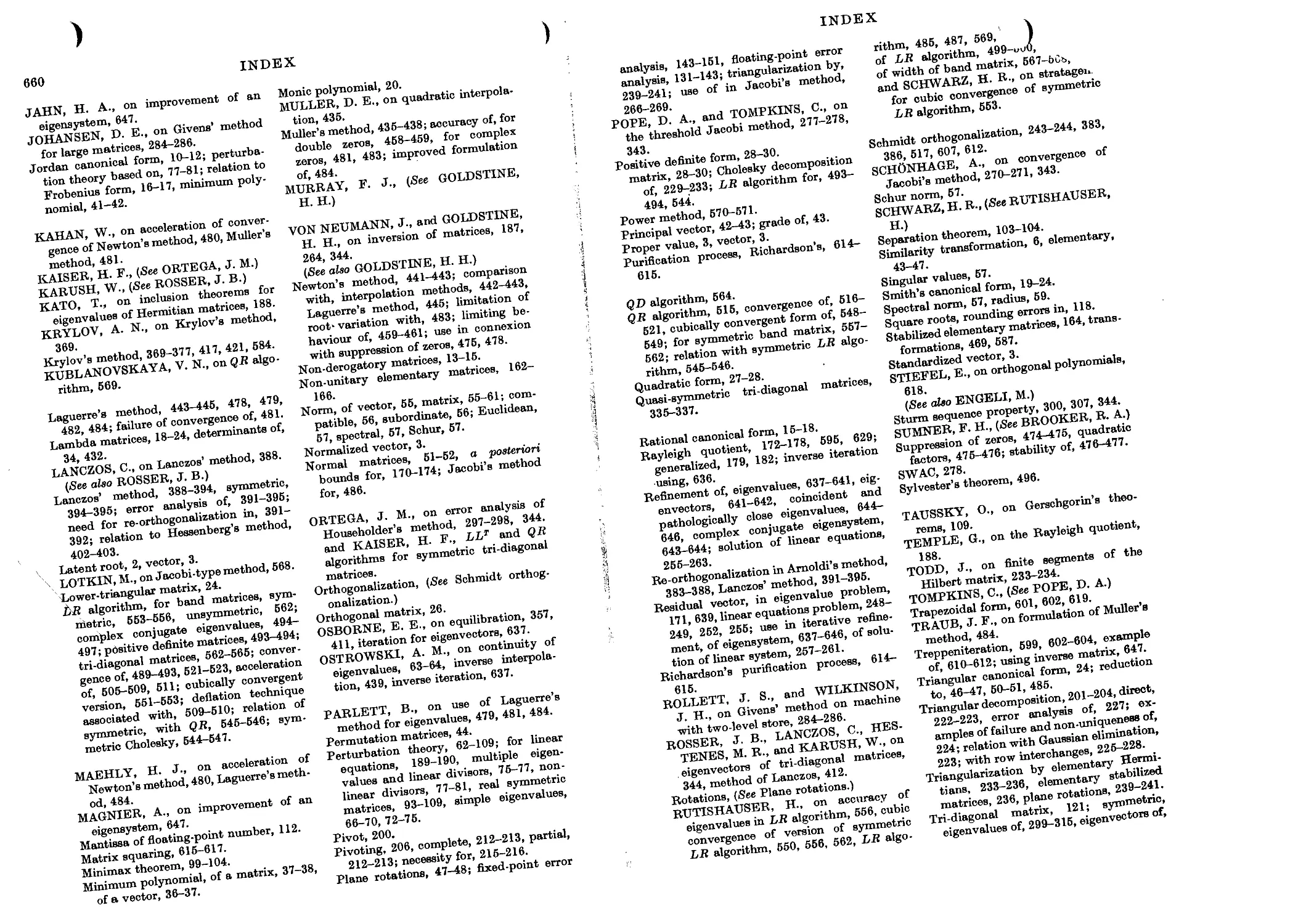

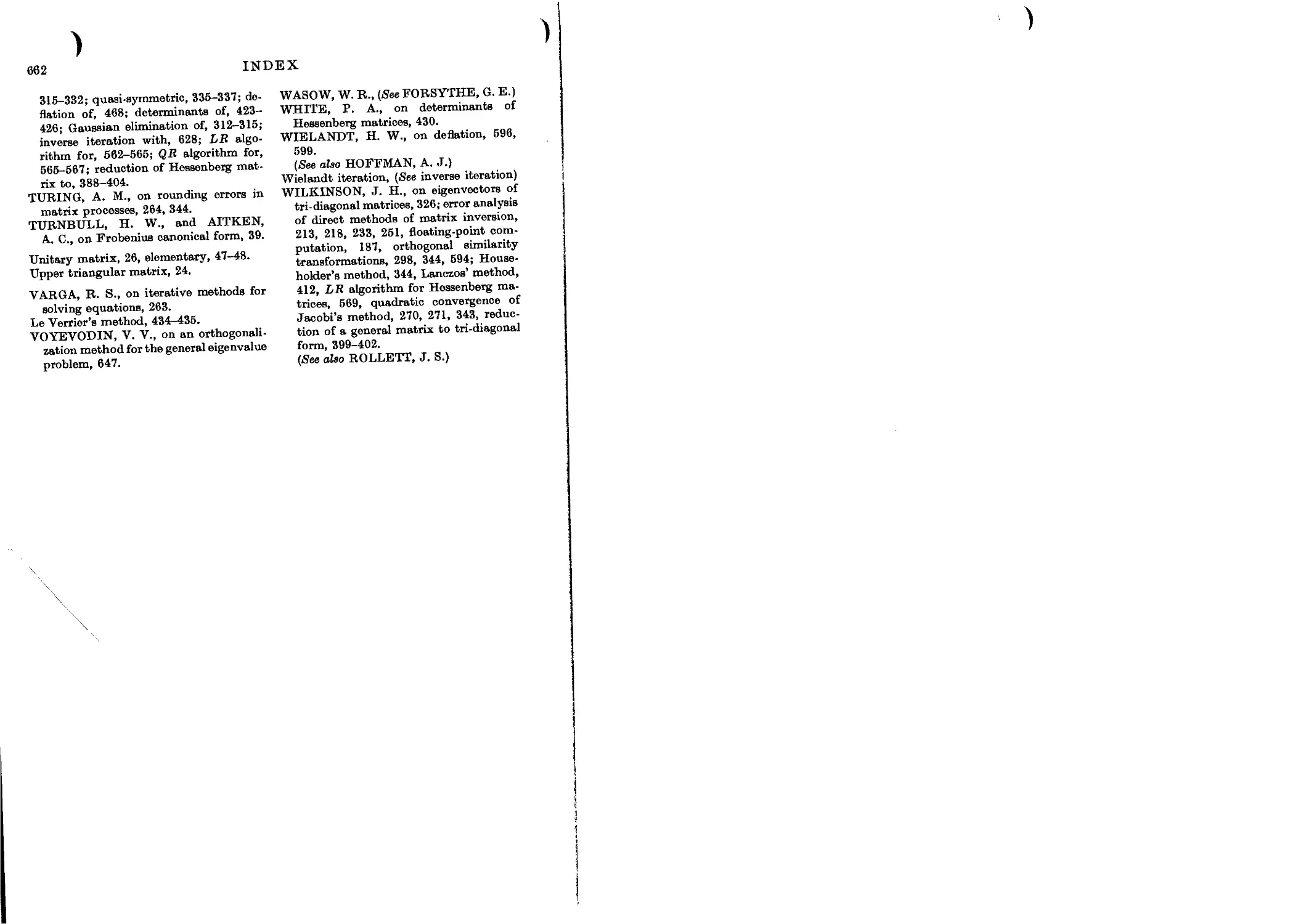

INDEX 657

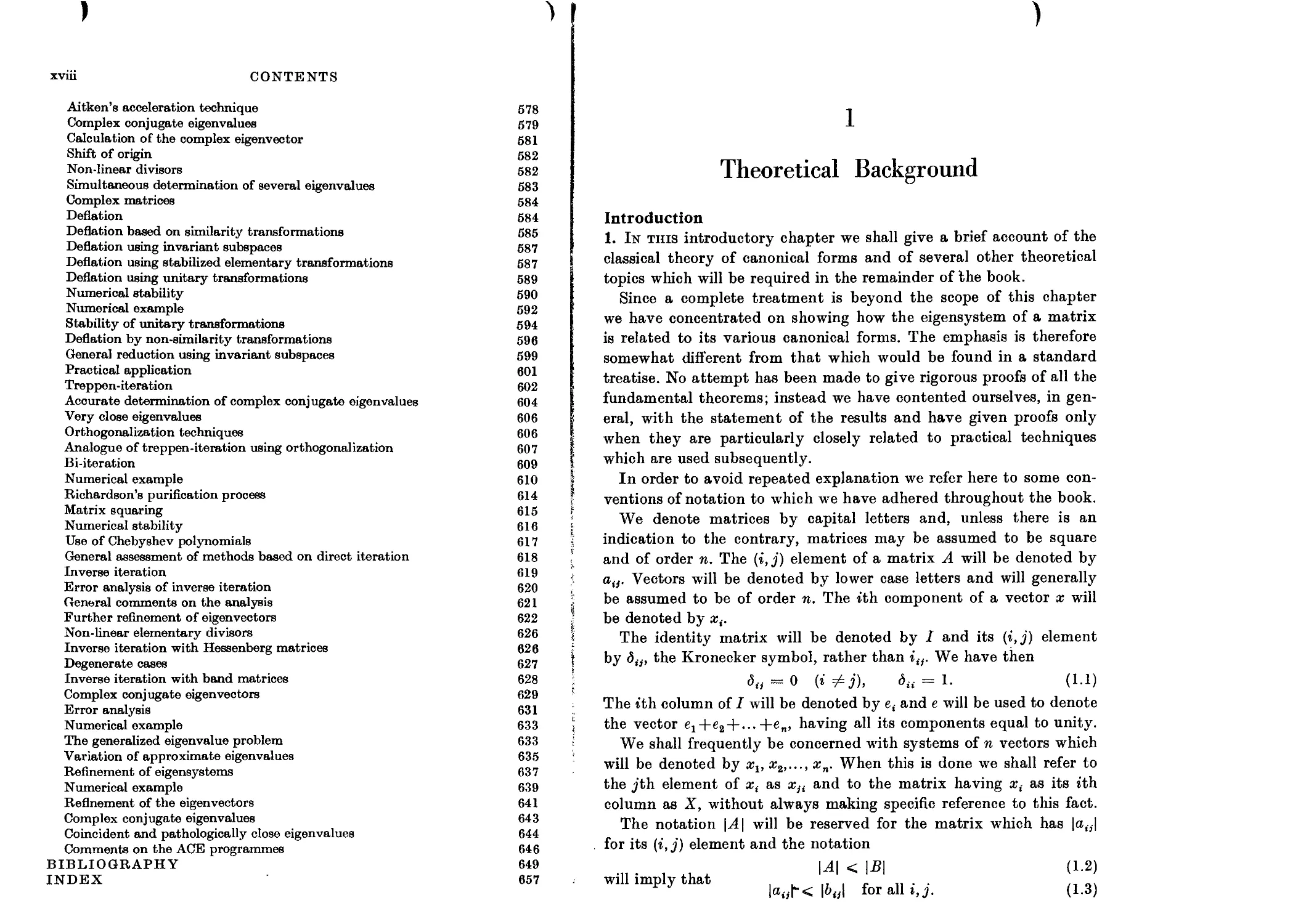

1

Theoretical Background

Introduction

1. In this introductory chapter we shall give a brief account of the

classical theory of canonical forms and of several other theoretical

topics which will be required in the remainder of the book.

Since a complete treatment is beyond the scope of this chapter

we have concentrated on showing how the eigensystem of a matrix

is related to its various canonical forms. The emphasis is therefore

somewhat different from that which would be found in a standard

treatise. No attempt has been made to give rigorous proofs of all the

fundamental theorems; instead we have contented ourselves, in gen-

eral, with the statement of the results and have given proofs only

when they are particularly closely related to practical techniques

which are used subsequently.

In order to avoid repeated explanation we refer here to some con-

ventions of notation to which we have adhered throughout the book.

We denote matrices by capital letters and, unless there is an

indication to the contrary, matrices may be assumed to be square

and of order n. The (i,J) element of a matrix A will be denoted by

atj. Vectors will be denoted by lower case letters and will generally

be assumed to be of order n. The ith component of a vector x will

be denoted by xf.

The identity matrix will be denoted by I and its (i,j) element

by <5tJ, the Kronecker symbol, rather than iif. We have then

<5„ = 0 <5H = 1. (1.1)

The ith column of I will be denoted by e, and e will be used to denote

the vector e1+e2 + --> +en, having all its components equal to unity.

We shall frequently be concerned with systems of n vectors which

will be denoted by aq, x2,..., xn. When this is done we shall refer to

the jth element of xt as aqf and to the matrix having x( as its ith

column as X, without always making specific reference to this fact.

The notation |Л| will be reserved for the matrix which has |a„|

for its (i,j) element and the notation

•n • i + И < 1*1 <L2)

will imply that , , „ . .

Iaob< I&J for all г, j. (1.3)

2

THEORETICAL BACKGROUND

As a result of this decision and the use of ЦАЦ for the norm of A

(of. § 52) the determinant of A will be denoted by det (A). In line

with this, an (n x n) diagonal matrix with (i, i) element equal to

А,- will be denoted by diag (AJ.

The transpose of a matrix will be denoted by AT and is such that

its (i, j) element is equal to aSi. Similarly xT will denote a row vector

having its ith component equal to x(. Since it is more convenient to

print a row vector than a column vector, we shall frequently intro-

duce a column vector x and then define it by giving the explicit

expression for xT.

We assume throughout that we are working in the field of complex

numbers, though in computational procedures there is much to be

gained from remaining in the real field whenever possible.

Definitions

2. The fundamental algebraic eigenproblem is the determination

of those values of A for which the set of n homogeneous linear equa-

tions in n unknowns

Ax — Ax (2.1)

has a non-trivial solution. Equation (2.1) may be written in the form

(A-AZ)x = 0, (2.2)

and for arbitrary A this set of equations has only the solution x = 0.

The general theory of simultaneous linear algebraic equations shows

that there is a non-trivial solution if, and only if, the matrix (A —AZ)

is singular, that is

det(A —AZ) = 0. (2.3)

The determinant on the left hand side of equation (2.3) may be

expanded to give the explicit polynomial equation

a0+a1A + ...+an_1A’-1 + (-l)"An = 0. (2.4)

Equation (2.4) is called the characteristic equation of the matrix A

and the polynomial on the left-hand side of the equation is called

the characteristic polynomial. Since the coefficient of An is not zero

and we are assuming throughout that we are working in the complex

number field, the equation always has n roots. In general the roots

may be complex, even if the matrix A is real, and there may be roots

of any multiplicities up to n. The n roots are called the eigenvalues,

latent roots, characteristic values or proper values of the matrix A.

THEORETICAL BACKGROUND

3

Corresponding to any eigenvalue Л, the set of equations (2.2) has

at least one non-trivial solution x. Such a solution is called an eigen-

vector, latent vector, characteristic vector or proper vector corresponding

to that eigenvalue. If the matrix (А— Л1) is of rank less than (n — 1)

then there will be more than one independent vector satisfying

equation (2.2). We discuss this point in detail in §§ 6-9. It is evident

that if a; is a solution of (2.2), then kx is also a solution for any value

of k, so that even when (Л — Л1) is of rank (n — 1), the eigenvector

corresponding to Л is arbitrary to the extent of a constant multiplier.

It is convenient to choose this multiplier so that the eigenvector has

some desirable numerical property, and such vectors are called

normalized vectors. The most convenient forms of normalization are

those for which

(i) the sum of the squares of the moduli of the components is

equal to unity,

(ii) a component of the vector which has the largest modulus is

equal to unity, and

(iii) the sum of the moduli of the components is equal to unity.

The normalizations (i) and (iii) have the disadvantage of being

arbitrary to the extent of a complex multiplier of modulus unity.

Many of the processes we describe in this book lead, in the first

instance, to eigenvectors which are not in normalized form. It is

often convenient to multiply such vectors by a power of 10 (or 2) so

that the largest element lies between 01 and 1-0 (or | and 1) in

modulus. We shall refer to vectors which have been treated in this

way as standardized vectors.

Eigenvalues and eigenvectors of the transposed matrix

3. The eigenvalues and eigenvectors of the transpose of a matrix

are of considerable importance in the general theory. The eigenvalues

of AT are, by definition, those values of Л for which the set of equations

= Лу (3.1)

has a non-trivial solution. These are the values for which

det(^r—Л1) = 0, (3.2)

and since the determinant of a matrix is equal to that of its transpose,

the eigenvalues of AT are the same as those of A. The eigenvectors

are, in general, different. We shall usually denote the eigenvector

of AT corresponding to 2,- by yit so that we have

A^Vi = ^iVi- (3.3)

4

THEORETICAL BACKGROUND

Equation (3.3) may be written in the form

= A.yf,

(3-4)

so that yf is sometimes called a left-hand eigenvector of A correspond-

ing to 2j; while the vector x( such that

Ax( = ZjXf (3.5)

is called a right-hand eigenvector of A corresponding to 2(. The

importance of the eigenvectors of the transposed matrix springs

mainly from the following result.

If xt is an eigenvector of A corresponding to the eigenvalue 2(,

and y, is an eigenvector of AT corresponding to 2,, then

= 0 (#2,^2,).

For we may write (3.5) in the form

xf AT = 2ЛТ

= 2,yr

and we have

(3-6)

(3-7)

(3-8)

Post-multiplying (3.7) by ys and pre-multiplying (3.8) by xf and

subtracting we have „ , T , T

6 0=2Лг^-2,а^„ (3.9)

leading to equation (3.6).

Note that since xf and/or yf may be complex vectors, xfyt is not an

inner-product as usually understood. We have in fact

= У?*»

xfy} =

When x is complex we can have

xTx = 0

whereas a true inner-product is positive for all non-zero x.

and not

(3.10)

(3.11)

(3-12)

Distinct eigenvalues

4. The general theory of the eigensystem is simplest when the

n eigenvalues are distinct, and we consider this case first. We denote

the eigenvalues by 21; 22,..., 2n. Equation (2.2) certainly has at least

one solution for each value of 2( and we can therefore assume the

existence of a set of eigenvectors which we denote by xlt xs,..., xn.

We shall show that each of these vectors is unique, apart from an

arbitrary multiplier.

THEORETICAL BACKGROUND

5

We first show that the vectors must be linearly independent. For,

if not, let r be the smallest number of linearly dependent vectors.

We may number the vectors so that these are xlt xz,..., xr and, from

our assumption, there is a relation between them of the form

5 afxt = 0 (4.1)

i

in which none of the a4 is zero. Clearly r > 2 because, by definition,

none of the xt is the null vector. Pre-multiplying equation (4.1) by

A, we have r

2 а4ЛЛ = 0. (4.2)

1

Multiplying equation (4.1) by and subtracting (4.2) gives

(4.3)

i

and none of the coefficients in this relation is zero. Now equation (4.3)

implies that xlt x2,..., хг_г are linearly dependent, which is contrary

to hypothesis. The n eigenvectors are therefore independent and span

the whole n space. Consequently they may be used as a base in which

to express an arbitrary vector.

From the last result the uniqueness of the eigenvectors follows

immediately. For if there is a second eigenvector zT corresponding

to we may write n

zi — 2 aA> (4.4)

where at least one of the a, is non-zero. Multiplying (4.4) by A gives

^izi = 2 Ma- (4-5)

i

Multiplying (4.4) by and subtracting from (4.5) we have

0 = 2^-^, (4.6)

2

and hence from the independence of the a;4

а4(Л4 — Ях) = 0 (t = 2, 3,..., n),

glV1Ilg <tt = 0 (» = 2, 3,..., n). (4.7)

The non-zero a4 must therefore be a1; showing that zx is a multiple

of

Similarly we may show that the eigenvectors of AT are unique

and linearly independent. We have proved earlier that

=0 (Я, * Ы (4.8)

6

THEORETICAL BACKGROUND

so that the two sets of vectors form a bi-orthogonal system. We have

further т

xfy( =#= 0 (* = 1, 2,..., n). (4.9)

For if x{ were orthogonal to yt it would be orthogonal to yx, уг,...,уп

and hence to the whole n-space. This is impossible, since xx is not the

null vector.

Equations (4.8) and (4.9) enable us to obtain an explicit expression

for an arbitrary vector v in terms of the xt or the y(. For if we write

® = Ja<a:<, (4.10)

4—1

then n

yfv =£л<у?х{ = ^yfxf. (4.11)

Hence т , r

«,= yfv/yfxi, (4-12)

the division being permissible because the denominator is non-zero.

Similarity transformations

5. If we choose the arbitrary multipliers in the x( and yt so that

yfxf = 1 (» = 1, 2,...,n), (5.1)

the relations (4.8) and (5.1) imply that the matrix YT, which has

yf as its tth row, is the inverse of the matrix, X, which has x( as its

ith column. The n sets of equations

Axt — Xixi (5.2)

may be written in matrix form

AX = X diag(Ai). (5.3)

Now we have seen that the inverse of the matrix X exists and is

equal to YT. Equation (5.3) therefore gives

X-'AX = YTAX = diag^J. (5.4)

Transforms, H~lAH, of the matrix A, where H is non-singular,

are of fundamental importance from both the theoretical and the

practical standpoints and are known as similarity transforms while A

and H~lAH are said to be similar. Obviously HAH-1 is also a

similarity transform of A. We have shown that when the eigenvalues

of A are distinct then there is a similarity transformation which

reduces A to diagonal form and that the matrix of the transformation

THEORETICAL BACKGROUND

7

has its columns equal to the eigenvectors of A. Conversely if we

haVe H~rAH = diagf/zj (5.5)

^ien ЛЯ — // diagf/z,). (5.6)

This last equation implies that the /z( are the eigenvalues of A in

some order, and that the tth column of H is an eigenvector corre-

sponding to Hf.

The eigenvalues of a matrix are invariant under a similarity trans-

formation. For if , ,

Ax = Xx (5.7)

then ,T , . , ,T ,

H~rAx — XH^x,

giving

Н-ЧЦНН-^х = ЛЯ"1», (H^AHjH-h: = Ш^х. (5.8)

The eigenvalues are therefore preserved and the eigenvectors are

multiplied by Я-1.

Many of the numerical methods for finding the eigensystem of

a matrix consist essentially of the determination of a similarity trans-

formation which reduces a matrix A of general form to a matrix В

of special form, for which the eigenproblem may be more simply

solved.

Similarity is a transitive property, for if

В = Hi'AHi and C = H?BH2 (5.9)

then C = H^H^AHJb = (HMr'AtHiHJ. (5.10)

In general the reduction of a general matrix to one of special

form will be effected by a sequence of simple similarity transforma-

tions.

Multiple eigenvalues and canonical forms for general

matrices

6. The structure of the system of eigenvectors for a matrix which

has one or more multiple eigenvalues may be far less simple than that

described above. It may still be true that there is a similarity trans-

formation which reduces A to diagonal form. When this is true, we

have, for some non-singular H,

H-'AH = diagUJ.

(6.1)

8

THEORETICAL BACKGROUND

The Я( must then be the eigenvalues of A and each 2, must occur

with the appropriate multiplicity. For

Я~1(Л-27)Я = Н^АН-Ш-ЧН = diag^-2), (6.2)

and taking determinants of both sides we have

det(H“1) det (A -U) det(tf) = ПМ.-Д (6.3)

glVmg det(^-lf) = №.—Д (6.4)

The 2, are therefore the roots of the characteristic equation of A.

Writing (6.1) in the form . ,T „

6 v ' AH = H diag(2(), (6.5)

we see that the columns of H are eigenvectors of A. Since H is non-

singular, its columns are independent. If, for example, is a double

root, then, denoting the first two columns of H by xr and x2 we have

AX} = 2jXlt Ax2 = 2ха;2, (6.6)

where xr and ж2 are independent. From equations (6.6), any vector

in the subspace spanned by xx and x2 is also an eigenvector. For we

A (eq#! + <х2ж2) = a.jA^Xi~}-ct.2XjX2 = 2х(осхя;х-|-а2й;2). (6.7)

Coincident roots for a matrix which can be reduced to diagonal

form by a similarity transformation are associated with an indeter-

minacy in the eigenvectors corresponding to the multiple root. We

can still select a set of eigenvectors which span the whole n-space and

can therefore be used as a base in which to express an arbitrary

vector.

Thus the diagonal matrix Л defined by

(6-8)

has the 5 independent eigenvectors ex, e2, e3, e4 and e6. Any combina-

tion of ex and e2 is an eigenvector corresponding to 2. = I and any

combination of e3 and e4 is an eigenvector corresponding to 2 = 2.

For any matrix similar to Л there is a non-singular H such that

H-^AH = A

(6.9)

THEORETICAL BACKGROUND

9

and the eigenvectors of A are Нег, He2, He3, Het and HeB, any

combination of the first two being an eigenvector corresponding to

2 = 1 and any combination of the third and fourth an eigenvector

corresponding to 2 = 2.

Defective system of eigenvectors

7. The properties of a matrix which is similar to a diagonal matrix

but has multiple eigenvalues are in most respects like those of a

matrix with distinct eigenvalues. However, not all matrices with

multiple eigenvalues are of this type.

The matrix C2(a) defined by

t72(a) —

Г

a

(7.1)

has the double eigenvalue 2 = a. If x is an eigenvector of C2(a) with

components aq and a:2, then we must have

(te1+ x2 = 0

0aq+0a;2 = 0

(7.2)

These equations imply that ж2 = 0. There is thus only one eigen-

vector corresponding to 2 = a, the vector ev We may regard C2(a)

as the limit as b —> a of a matrix B2 defined by

В

2 —

a

0

1

b

(7-3)

For Ъ Ф a this matrix has two eigenvalues a and b, and the corre-

sponding vectors are

1'

0

and

1 '

b — a

As b -► a the two eigenvalues and eigenvectors become coincident.

More generally if we define matrices Cr(a) by the relations

^(a) = [a], Cr(a) = a 1 a 1 a 1 , (7-4)

a 1 _ a

10

THEORETICAL BACKGROUND

then Cr(a) has an eigenvalue, a, of multiplicity r, but it has only one

eigenvector, x = ev We may prove this as we proved the correspond-

ing result for Cs(a). Alternatively it follows from the fact that the

rank of [C,(a)-aZ] is (r — 1). (The matrix of order (r — 1) in the top

right-hand corner has determinant 1.) The transposed matrix [<7r(a)]r

also has only one eigenvector, у = er. We have immediately

xTy = eier ~ ° (7.5)

which contrasts with the result obtained for the eigenvectors of

matrices with distinct eigenvalues.

The matrix Cr(a), (r > 1), cannot be reduced to diagonal form by a

similarity transformation. For if there exists an H for which

= diag(2j), (7.6)

that 18’ С.Н diag(2,), (7.7)

then, as shown in § 6, the 2, must be equal to the eigenvalues of CT(a)

and must therefore all have the value a. Equation (7.7) then shows

that the columns of H are all eigenvectors of Cr(a). These columns

are independent, since H is non-singular. The assumption that such

an H exists must therefore be false.

The matrix (7r(a) is called a simple Jordan submatrix of order r,

or sometimes a simple classical submatrix of order r. Matrices of this

form are of special importance in the theory, as will be seen in the

next section.

The Jordan (classical) canonical form

8. We see then that a matrix with multiple eigenvalues is not

necessarily similar to a diagonal matrix. It is natural to ask what is

the most compact form to which a matrix A may be reduced by means

of a similarity transformation. The answer is contained in the following

theorem.

Let A be a matrix of order n with r distinct eigenvalues 21, 22,..., 2r

of multiplicities mt, m2,..., mr so that

m1+m2+... +mr = n. (8.1)

Then there is a similarity transformation with matrix Я such that

the matrix H~XAH consists of simple Jordan submatrices isolated

along the diagonal with all other elements equal to zero. The sum of

the orders of the submatrices associated with Л( is equal to mt. Apart

THEORETICAL BACKGROUND

11

from the ordering of the submatrices along the diagonal, the trans-

formed matrix is unique. We say that H^AH is the direct sum of

the simple Jordan submatrices.

Thus a matrix of order six with five eigenvalues equal to and one

equal to A2, which is similar to C defined by

cannot also be similar to a matrix of the form

^2(^1) >

L

(8.3)

although this too has five roots equal to and one equal to 22. The

matrix of simple Jordan submatrices is called the Jordan canonical

form or the classical canonical form of the matrix A.

In the special case when all the Jordan submatrices in the Jordan

canonical form are of order unity, this form becomes diagonal. We

have already seen that if the eigenvalues of a matrix are distinct

then the Jordan canonical form is necessarily the matrix diag(2i).

The total number of independent eigenvectors is equal to the

number of submatrices in the Jordan canonical form. Thus a matrix A

which can be reduced to G, defined by equation (8.2), has four in-

dependent eigenvectors. The eigenvectors of C are elt e3, e5 and.ee and

those of A are Helt He3, He6 and Hea. There are only three eigen-

vectors corresponding to the eigenvalue which is of multiplicity

five.

We shall not prove the existence and the uniqueness of the Jordan

canonical form since the method of proof has little relevance to the

methods we describe in later chapters. In the simple Jordan submatrix

of order r we have taken the elements equal to unity to lie on the

super-diagonal line. There is a corresponding form in which these

elements are taken on the sub-diagonal. We may refer to the form of

equation (7.4) as the upper Jordan form and the alternative form as

the lower Jordan form. The choice of the value unity for these elements

is of no special significance. Clearly by a suitable similarity trans-

formation with a diagonal matrix these elements could be given arbitrary

non-zero values.

12

THEORETICAL BACKGROUND

The elementary divisors

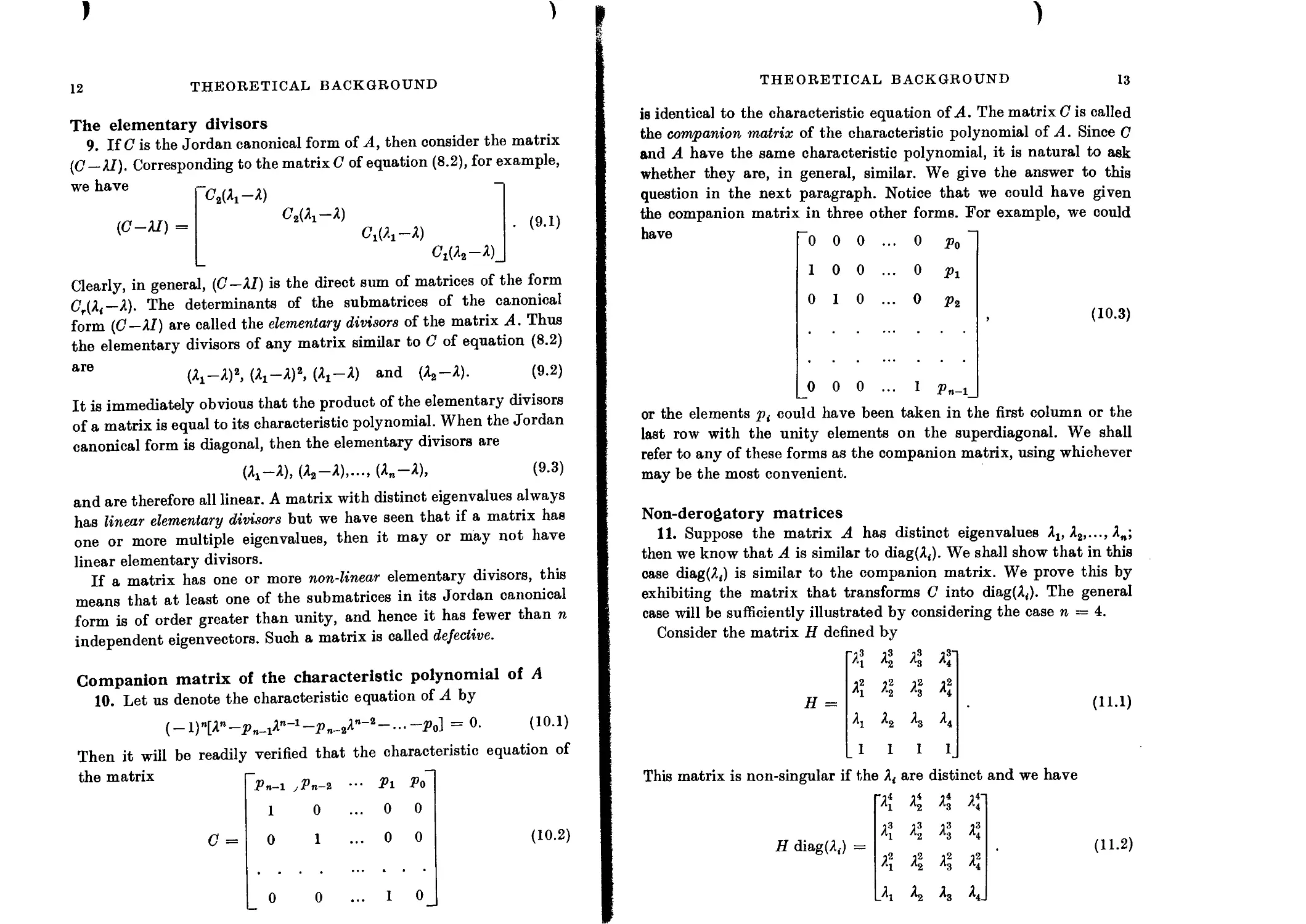

9. If C is the Jordan canonical form of A, then consider the matrix

(С—21). Corresponding to the matrix C of equation (8.2), for example,

we have

(7^-2)

(<7—2Z) =

ед-2)

C'1(21-2)

• (9.1)

C'i(22-2)_

Clearly, in general, (<7—21) is the direct sum of matrices of the form

Cr(2(—2). The determinants of the submatrices of the canonical

form (C—21) are called the elementary divisors of the matrix A. Thus

the elementary divisors of any matrix similar to C of equation (8.2)

аГе (2i— 2)2, (2x-2)2, (2X—2) and (22—2). (9.2)

It is immediately obvious that the product of the elementary divisors

of a matrix is equal to its characteristic polynomial. When the Jordan

canonical form is diagonal, then the elementary divisors are

(2x-2), (2a—2),..., (2n—2), (9.3)

and are therefore all linear. A matrix with distinct eigenvalues always

has linear elementary divisors but we have seen that if a matrix has

one or more multiple eigenvalues, then it may or may not have

linear elementary divisors.

If a matrix has one or more non-linear elementary divisors, this

means that at least one of the submatrices in its Jordan canonical

form is of order greater than unity, and hence it has fewer than n

independent eigenvectors. Such a matrix is called defective.

Companion matrix of the characteristic polynomial of A

10. Let us denote the characteristic equation of A by

( —l)n[2”—pn_22n~2 —... —p0] = 0. (10.1)

Then it will be readily verified that the characteristic equation of

the matrix — —

Pn-1 jPn-2 Pl Po

1 0 ... 0 0

C = 0 1 ... 0 0 (Ю.2)

0 0 ... 1 0

THEORETICAL BACKGROUND

13

is identical to the characteristic equation of A. The matrix G is called

the companion matrix of the characteristic polynomial of A. Since G

and A have the same characteristic polynomial, it is natural to ask

whether they are, in general, similar. We give the answer to this

question in the next paragraph. Notice that we could have given

the companion matrix in three other forms. For example, we could

have “0 0 0

1 0 0

0 1 0

0 Po

0 Pl

0 P2 , (10.3)

1 Pn-1

0 0 0

or the elements p( could have been taken in the first column or the

last row with the unity elements on the superdiagonal. We shall

refer to any of these forms as the companion matrix, using whichever

may be the most convenient.

Non-derogatory matrices

11. Suppose the matrix A has distinct eigenvalues Я1; Я2,..., An;

then we know that A is similar to diag(A,). We shall show that in this

case diag(A4) is similar to the companion matrix. We prove this by

exhibiting the matrix that transforms G into diag(2<). The general

case will be sufficiently illustrated by considering the case n = 4.

Consider the matrix H defined by

j3 j3

Л1 Л2

J2 I2

H = 1 2

Aj A2

1 1

This matrix is non-singular if the A< are distinct and we have

X *2 ^3 %

я! %

H diag(A() =

% %

Л ^2 ^8 ^4-

(П.2)

14

THEORETICAL BACKGROUND

Further, the relation

>з Рг Pl Po "14 14 14 14“ Л1 л2 л3 л4

10 0 0 13 13 13 13 Л1 Л2 Л3 Л4

H = (11.3)

0 10 0 12 12 12 12 Л1 Л2 Л3 Л4

0 0 1 0 ^3 ^4.

follows immediately from the fact that

AJ = P3A?+/M?+M+Po- (П.4)

Hence

CH = H diag(A(), (11.5)

so that C is similar to diag(^J and hence to any matrix with eigen-

values

Turning now to the case when there are some multiple eigenvalues,

we see immediately that if A has more than one independent eigen-

vector corresponding to then it cannot be similar to C. For (C—AI)

is of rank (n — 1) for any value of Я, since the determinant of the matrix

of order (n —1) in the bottom left-hand corner is obviously 1. The

eigenvector x{ corresponding to the eigenvalue At is given by

x? =[ЛГ\ЯГ2,...,Я,., 1], (11.6)

as can be seen by solving

(C-AJX = 0 . (11.7)

For a matrix to be similar to the companion matrix of its charac-

teristic polynomial then, it is necessary that its Jordan canonical

form should have only one Jordan submatrix associated with each

distinct eigenvalue. We now prove that this condition is also sufficient

and again it will suffice to demonstrate it for a simple case.

12. Consider a matrix of order 4 having (Лх —Я)2, (Я3—Я), (Я4 — Я) for

its elementary divisors. Such a matrix is similar to At given by

'A 1

О Я4

^4-

(12.1)

THEORETICAL BACKGROUND

16

Now the matrix H defined by

"i8 012 o3“

Aj OAj Ag A^

% ч Л32 22

н =

2i 1 23 Я4

1 0 11

(12.2)

is clearly non-singular if 21( 23, 24 are distinct, and we have

ЯА4 = X 42? 23 2?" • (12.3)

}3 q12 08 j3 A^ OAj Ag A^

2? 22i A2 2?

_2i 1 23 24

Since 2i is a double root of the characteristic equation/(2) = 0, we have

/'(Ai) = 0, giving and hence 42i — 32>32i+2pg2i4-Pi, CH = HA 4. (12.4) (12.5)

In an exactly similar way we can deal with a Jordan submatrix

of order r associated with a matrix of order n. If 2j is the relevant

eigenvalue then the corresponding matrix H, has the r columns

2”-1 In — 1' \ 1 , л;-2 .. In —1\ 1 i A?“r yr—1/ 1

2»-2 In— 2' \ 1 > 2«-3 .. 1 T r-i 1 1 (12.6)

Ai 1 -• 0

1 0 .. °

b Hence, provided there is only one Jordan matrix associated with each

Ц distinct 2,., and therefore only one eigenvector associated with each

К distinct 2O the matrix is similar to the companion matrix of its

ж characteristic polynomial. Such a matrix is called non-derogatory.

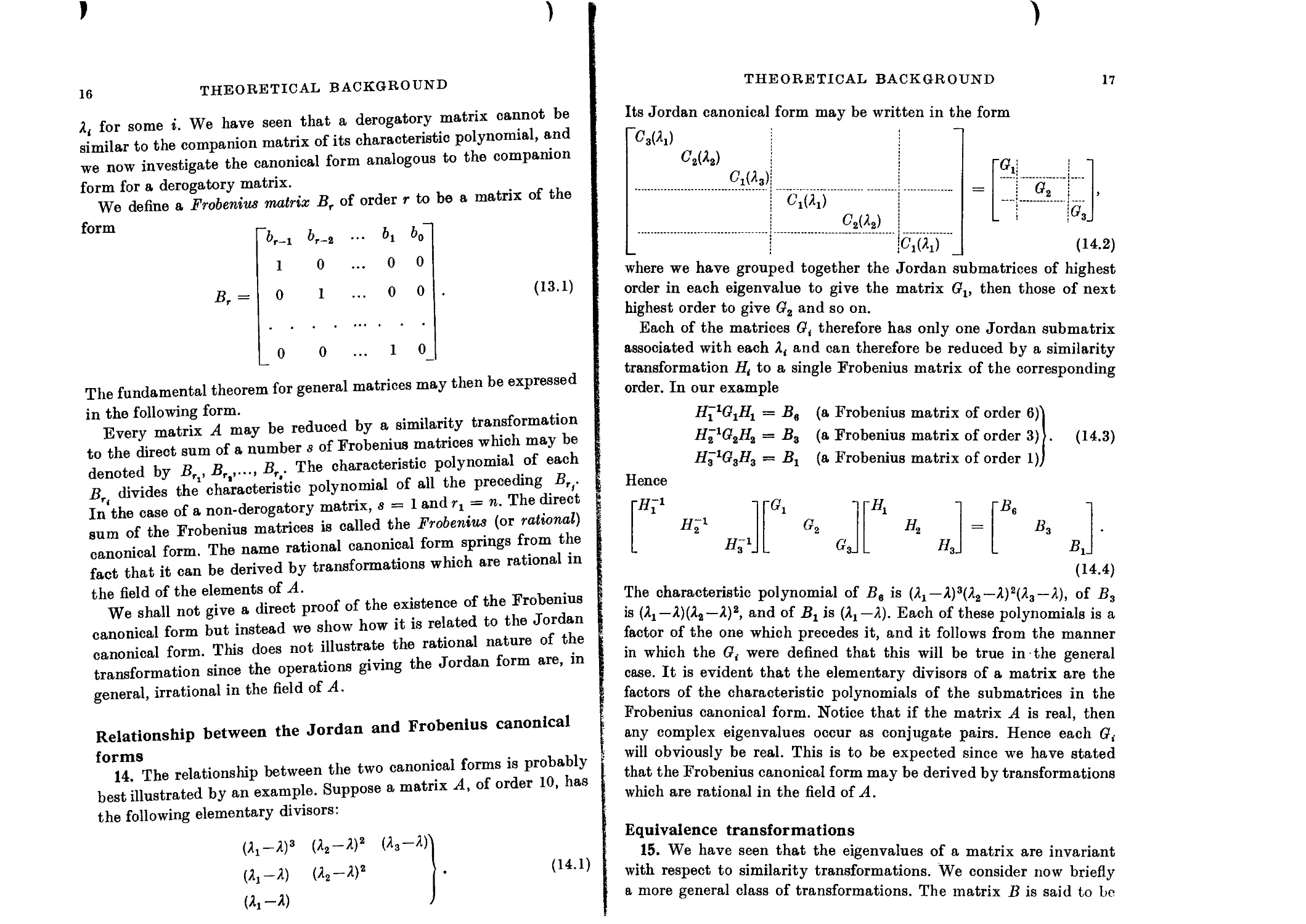

К The Frobenius (rational) canonical form

В 13. A matrix is called derogatory if there is more than one Jordan

К wbmatrix (and therefore more than one eigenvector) associated with

16

THEORETICAL BACKGROUND

for some i. We have seen that a derogatory matrix cannot be

similar to the companion matrix of its characteristic polynomial, and

we now investigate the canonical form analogous to the companion

form for a derogatory matrix.

We define a Frobenius matrix Br of order r to be a matrix of the

form

^r-l ^r-2 *0

1 0 ... 0 0

Br = 0 1 ... 0 0 (13.1)

_ 0 0 ... 1 0_

The fundamental theorem for general matrices may then be expressed

in the following form.

Every matrix A may be reduced by a similarity transformation

to the direct sum of a number s of Frobenius matrices which may be

denoted by Bfj, Вг>,..., Br . The characteristic polynomial of each

ВГ( divides the characteristic polynomial of all the preceding ВГ).

In the case of a non-derogatory matrix, s = 1 and rt = n. The direct

sum of the Frobenius matrices is called the Frobenius (or rational)

canonical form. The name rational canonical form springs from the

fact that it can be derived by transformations which are rational in

the field of the elements of A.

We shall not give a direct proof of the existence of the Frobenius

canonical form but instead we show how it is related to the Jordan

canonical form. This does not illustrate the rational nature of the

transformation since the operations giving the Jordan form are, in

general, irrational in the field of A.

Relationship between the Jordan and Frobenius canonical

forms

14. The relationship between the two canonical forms is probably

best illustrated by an example. Suppose a matrix A, of order 10, has

the following elementary divisors:

Л-Я)3

(Л1-Л)

Л-Я)

(Л2-Я)2

(Л-D2

U3-A)'

(14.1)

THEORETICAL BACKGROUND

17

Its Jordan canonical form may be written in the form

4(A)

GM

CM

rGi

cm

G3J

I 1ВД

where we have grouped together the Jordan submatrices of highest

order in each eigenvalue to give the matrix Gv then those of next

highest order to give G2 and so on.

Each of the matrices Gt therefore has only one Jordan submatrix

associated with each and can therefore be reduced by a similarity

transformation Ht to a single Frobenius matrix of the corresponding

order. In our example

H^G.H. = Be

H?G3H3 = B3

H3lG3H3 = Br

(14-2)

Hence

ГЯГ1

(a Frobenius matrix of order 6)'

(a Frobenius matrix of order 3) .

(a Frobenius matrix of order 1),

(14-3)

6r2

G3.

r^i 1

h,

L h3]

Ba

з J

Г&

(14.4)

The characteristic polynomial of Be is (Aj—Л)3(Л2—A)2(A3—A), of B3

is (AJ—A)(A2—A)2, and of Br is (Ax—A). Each of these polynomials is a

factor of the one which precedes it, and it follows from the manner

in which the G( were defined that this will be true in the general

case. It is evident that the elementary divisors of a matrix are the

factors of the characteristic polynomials of the submatrices in the

Frobenius canonical form. Notice that if the matrix A is real, then

any complex eigenvalues occur as conjugate pairs. Hence each G(

will obviously be real. This is to be expected since we have stated

that the Frobenius canonical form may be derived by transformations

which are rational in the field of A.

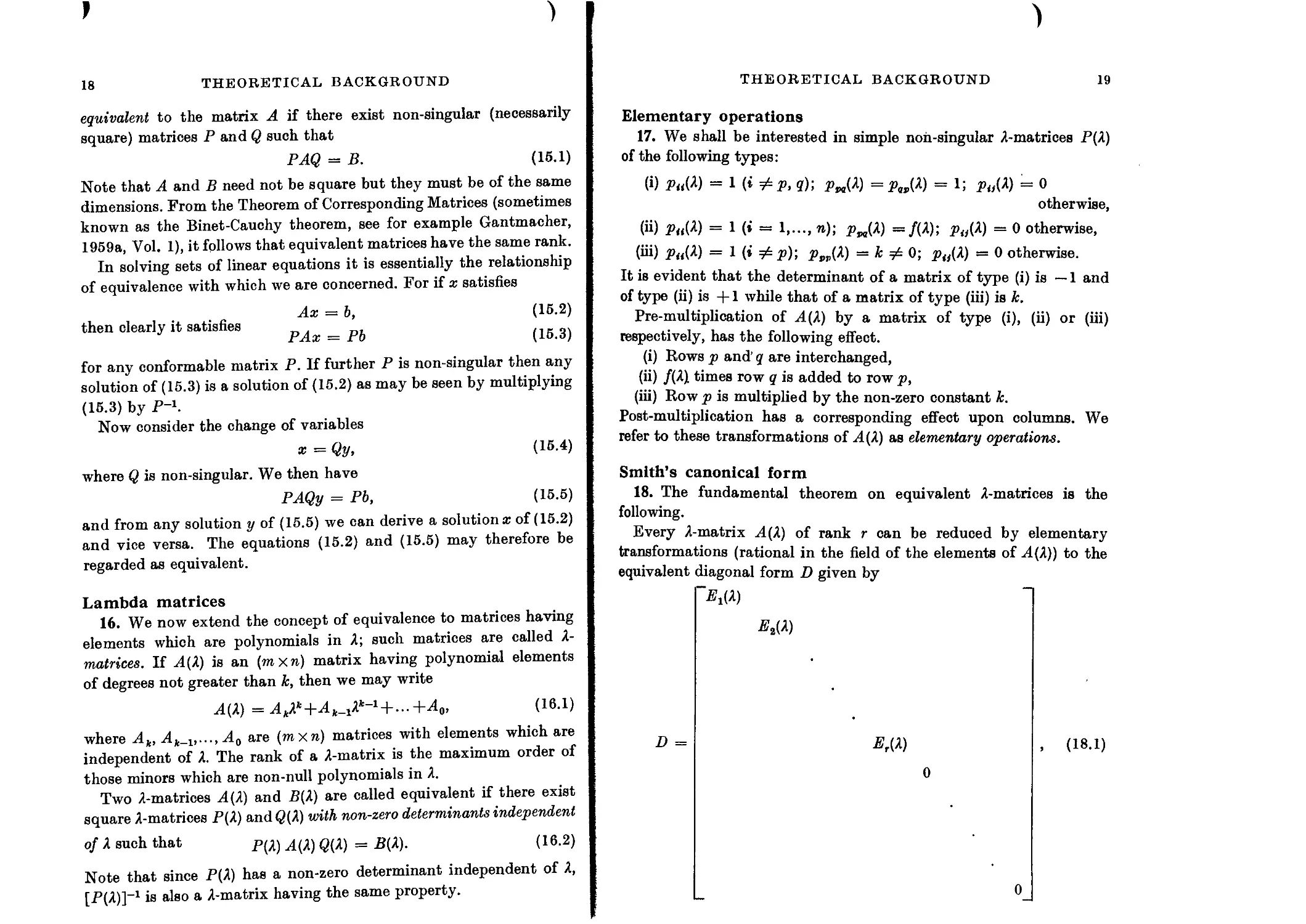

Equivalence transformations

15. We have seen that the eigenvalues of a matrix are invariant

with respect to similarity transformations. We consider now briefly

a more general class of transformations. The matrix В is said to be

18

THEORETICAL BACKGROUND

equivalent to the matrix A if there exist non-singular (necessarily

square) matrices P and Q such that

PAQ = B. (15.1)

Note that A and В need not be square but they must be of the same

dimensions. From the Theorem of Corresponding Matrices (sometimes

known as the Binet-Cauchy theorem, see for example Gantmacher,

1959a, Vol. 1), it follows that equivalent matrices have the same rank.

In solving sets of linear equations it is essentially the relationship

of equivalence with which we are concerned. For if x satisfies

Ax = b, (15.2)

then clearly it satisfies

PAx = Pb (15.3)

for any conformable matrix P. If further P is non-singular then any

solution of (15.3) is a solution of (15.2) as may be seen by multiplying

(15.3) by P-\

Now consider the change of variables

a: = Qy, (15.4)

where Q is non-singular. We then have

PAQy = Pb, (15.5)

and from any solution у of (15.5) we can derive a solution x of (15.2)

and vice versa. The equations (15.2) and (15.5) may therefore be

regarded as equivalent.

Lambda matrices

16. We now extend the concept of equivalence to matrices having

elements which are polynomials in 2; such matrices are called 2-

matrices. If Л (2) is an (mxn) matrix having polynomial elements

of degrees not greater than k, then we may write

Л(2) = Л^*+А_12*-1+...+Л0, (16.1)

where Ak, Ak_r....Ao are (mxn) matrices with elements which are

independent of 2. The rank of a 2-matrix is the maximum order of

those minors which are non-null polynomials in 2.

Two 2-matrices Л(2) and B(2) are called equivalent if there exist

square 2-matrices F(2) and 0(2) with non-zero determinants independent

of к such that л(2) Q(A) = B(2). (16.2)

Note that since F(2) has a non-zero determinant independent of 2,

[jP(2)]“1 is also a 2-matrix having the same property.

THEORETICAL BACKGROUND

19

Elementary operations

17. We shall be interested in simple noh-singular 2-matrices P(2)

of the following types:

(i) рц(Х) = <?); pM(2) = ^>„(2) = 1; pit(X) = 0

otherwise,

(ii) PhW = 1 (i = 1,..., n); pM(2) ==/(2); po(2) = 0 otherwise,

(iii) p{i(k) = 1 (i ^p)-, р„„(2) = к =£ 0; po(2) = 0 otherwise.

It is evident that the determinant of a matrix of type (i) is — 1 and

of type (ii) is +1 while that of a matrix of type (iii) is k.

Pre-multiplication of Л(2) by a matrix of type (i), (ii) or (iii)

respectively, has the following effect.

(i) Rows p and’ q are interchanged,

(ii) /(2) times row q is added to row p,

(iii) Row p is multiplied by the non-zero constant k.

Post-multiplication has a corresponding effect upon columns. We

refer to these transformations of Л(2) as elementary operations.

Smith’s canonical form

18. The fundamental theorem on equivalent 2-matrices is the

following.

Every 2-matrix Л (2) of rank r can be reduced by elementary

transformations (rational in the field of the elements of Л(2)) to the

equivalent diagonal form D given by

"Ei(2)

ErW

0

(18.1)

0

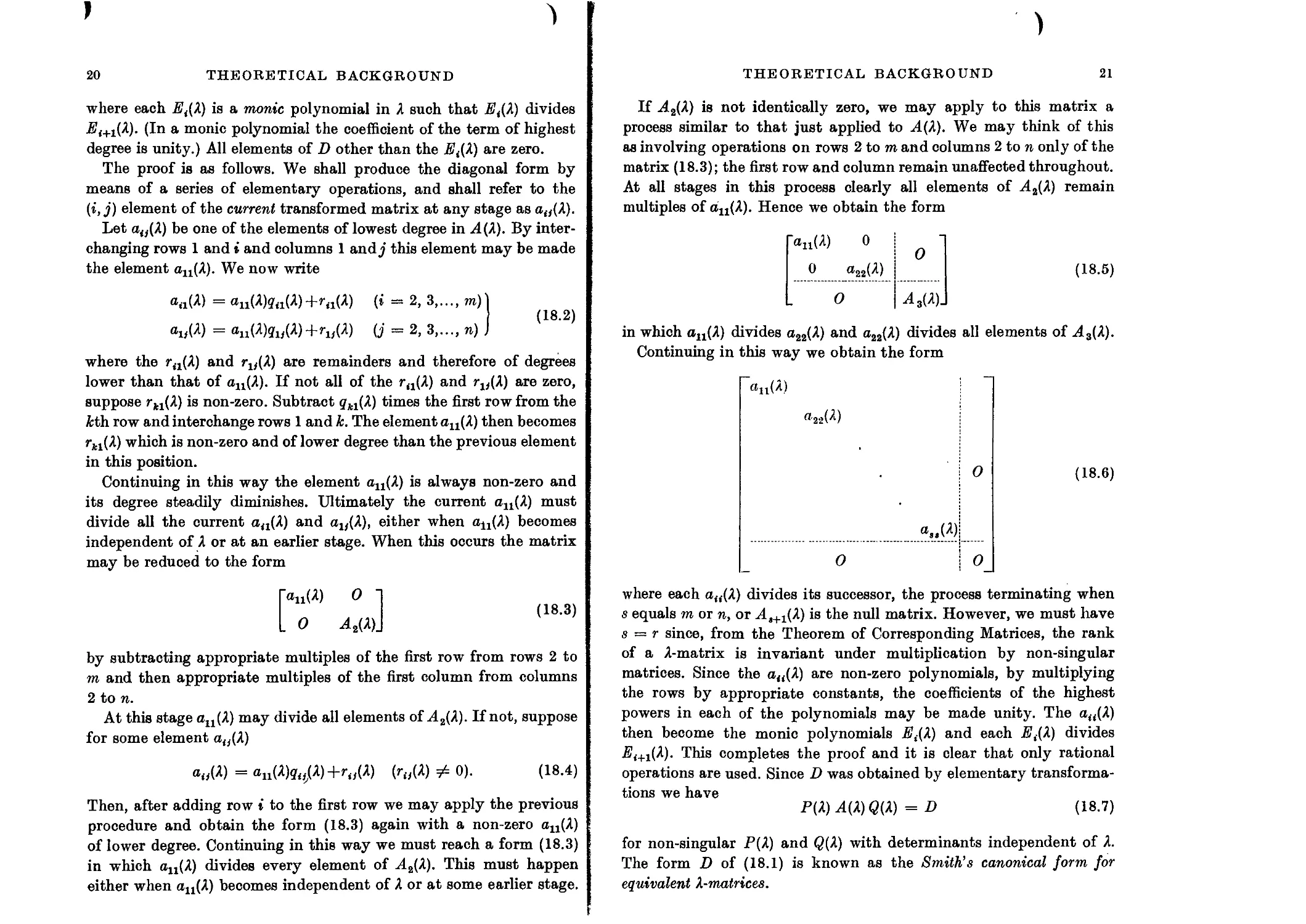

20

THEORETICAL BACKGROUND

where each Et(X) is a monic polynomial in 2 such that Е<(Л) divides

A'<+i(2). (In a monic polynomial the coefficient of the term of highest

degree is unity.) All elements of D other than the E{(X) are zero.

The proof is as follows. We shall produce the diagonal form by

means of a series of elementary operations, and shall refer to the

(i, j) element of the current transformed matrix at any stage as aiy(2).

Let ao(2) be one of the elements of lowest degree in А(2). By inter-

changing rows 1 and i and columns 1 and J this element may be made

the element an(2). We now write

an(2) — anW<hiW(* — 2, 3,..., wi)l

(18-2)

au(2) = au(2)?u(2)+rw(2) (j = 2, 3,..., n) J

where the rn(2) and rjy(2) are remainders and therefore of degrees

lower than that of au(2). If not all of the rn(2) and rH(2) are zero,

suppose rH(2) is non-zero. Subtract g'il(2) times the first row from the

Mh row and interchange rows 1 and k. The element au(2) then becomes

ги(2) which is non-zero and of lower degree than the previous element

in this position.

Continuing in this way the element au(2) is always non-zero and

its degree steadily diminishes. Ultimately the current au(2) must

divide all the current aa(2) and au(2.), either when ац(2) becomes

independent of 2 or at an earlier stage. When this occurs the matrix

may be reduced to the form

«nW 0

. О A2(2).

(18.3)

by subtracting appropriate multiples of the first row from rows 2 to

m and then appropriate multiples of the first column from columns

2 to n.

At this stage au(2) may divide all elements of A2(2). If not, suppose

for some element a(J(2)

a„(2) =au(2)g4(2)+ro(2) (r„(2) ф 0). (18.4)

Then, after adding row i to the first row we may apply the previous

procedure and obtain the form (18.3) again with a non-zero au(2)

of lower degree. Continuing in this way we must reach a form (18.3)

in which au(2) divides every element of A2(2). This must happen

either when au(2) becomes independent of 2 or at some earlier stage.

THEORETICAL BACKGROUND

21

If AZ(A) is not identically zero, we may apply to this matrix a

process similar to that just applied to A (2). We may think of this

as involving operations on rows 2 to m and columns 2 to n only of the

matrix (18.3); the first row and column remain unaffected throughout.

At all stages in this process clearly all elements of A2(2) remain

multiples of an(2). Hence we obtain the form

«и(А) о

0

О

(18.5)

in which an(2) divides агг(Х) and a22(2) divides all elements of A3(2).

Continuing in this way we obtain the form

«11(A)

«22(A) >

| 0

«,,(A)|

О O_

(18.6)

where each af((2) divides its successor, the process terminating when

s equals m or n, or ri.3+1(2) is the null matrix. However, we must have

s = r since, from the Theorem of Corresponding Matrices, the rank

of a 2-matrix is invariant under multiplication by non-singular

matrices. Since the a4<(2) are non-zero polynomials, by multiplying

the rows by appropriate constants, the coefficients of the highest

powers in each of the polynomials may be made unity. The ««(A)

then become the monic polynomials P,(2) and each P,(2) divides

2?<+i(2). This completes the proof and it is clear that only rational

operations are used. Since D was obtained by elementary transforma-

tions we have

P(2) A(2) QW = D (18.7)

for non-singular P(2) and Q(2) with determinants independent of A.

The form D of (18.1) is known as the Smith’s canonical form for

equivalent ^.-matrices.

22

THEORETICAL BACKGROUND

The highest common factor of Л-rowed minors

of a A-matrix

19. Consider now the Л-rowed minors of the Smith’s canonical

form. If к > т then all Л-rowed minors are zero. Otherwise the only

non-zero Л-rowed minors are products of Л of the Et(A). Since each

Et(A) is a factor of EM(A), the highest common factor (H.C.F.) Gk(A)

of Л-rowed minors is Ek(A) E2(A)...Ek(A). Hence if we write С0(Л) = 1

(i = 1, 2,..., r). (19.1)

We now show that the H.C.F. of Л-rowed minors of a matrix (we

take the coefficient of the highest power of Л to be unity) is invariant

under equivalence transformations. For if

Р(Л)А(Л)(?(Л) = В(Л), (19.2)

then any Л-rowed minor of В(Л) may be expressed as the sum of

terms of the form PaApQy where Pa, Ap, Q? denote a Л-rowed minor

of Р(Л), А(Л), Q(A) respectively. Hence the H.C.F. of the Л-rowed

minors of A(A) divides any Л-rowed minor of В (A). Since, however,

Л(Л) = [Р(Л)]-»В(Л)[(?(Л)]-»

and [Р(Л)]-1 and [ф(Л)]_1 are also Л-matrices, the H.C.F. of Л-rowed

minors of B(A) divides any Л-rowed minor of А(Л). The two H.C.F.’s

must therefore be equal.

All equivalent Л-matrices therefore have the same Smith’s canonical

form. The polynomials E{(A) are called the invariant factors or invariant

polynomials of А(Л).

Invariant factors of (A—XI)

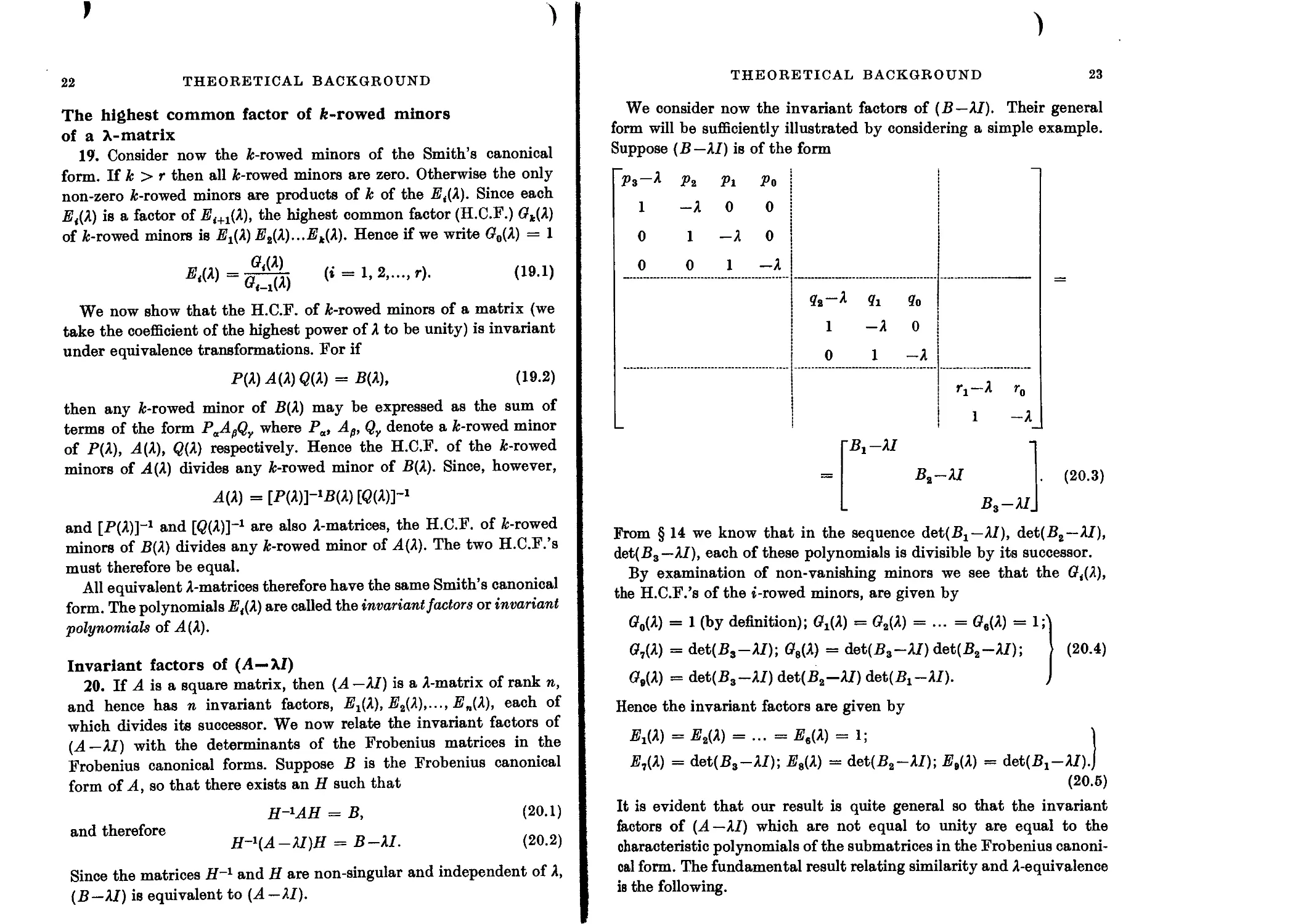

20. If A is a square matrix, then (A —Al) is a Л-matrix of rank n,

and hence has n invariant factors, Ег(А), E2(A),..., E„(A), each of

which divides its successor. We now relate the invariant factors of

(A— Al) with the determinants of the Frobenius matrices in the

Frobenius canonical forms. Suppose В is the Frobenius canonical

form of A, so that there exists an H such that

and therefore

H^AH = B, (20.1)

H~l(A-AI)H = B—AI. (20.2)

Since the matrices H-1 and H are non-singular and independent of Л,

(B—AI) is equivalent to (A —Al).

THEORETICAL BACKGROUND

23

We consider now the invariant factors of (В—Al). Their general

form will be sufficiently illustrated by considering a simple example.

Suppose (B—AI) is of the form

р3-Л p2 P1 p0

1 -Л 0 0

0 1 -A 0

0 0 1 -A

q0

1 -A 0

0 ~A

ri-Л r0

1 -A

ГВ1-Л/

Ba—AI

BS-AI

(20.3)

From § 14 we know that in the sequence de^Bj — Al), det(B2 —Al),

&eh(B3—AI), each of these polynomials is divisible by its successor.

By examination of non-vanishing minors we see that the Gt(A),

the H.C.F.’s of the i-rowed minors, are given by

G0(A) = 1 (by definition); G±(A) = G2(A) = ... = (?в(Л) = 1Л

(77(A) = det(B3—AI); G8(A) = det(B3-lT) det(B2-W); I (20.4)

Gt(A) = det(B3 —Al) &Л(В3-А1) АеЦВ^-AI). J

Hence the invariant factors are given by

EX(A) = ВД = ... = E3(A) = 1; 1

E2(A) = det(B3-Al)-, E3(A) = det(B2-Af); E3(A) = det(B1-lir).j

(20.5)

It is evident that our result is quite general so that the invariant

factors of (A—Al) which are not equal to unity are equal to the

characteristic polynomials of the submatrices in the Frobenius canoni-

cal form. The fundamental result relating similarity and A-equivalence

is the following.

24

THEORETICAL BACKGROUND

A necessary and sufficient condition for A to be similar to В is that

(А — 27) and {B—AI) are equivalent. For this reason the invariant

factors of (A—Ad) are sometimes called the similarity invariants of A.

Again from § 14 we see that if we write

E^A) = (Лх—ЛУЧЛа—Л)Ч (20.6)

where Лх, Л2,..., Л, are the distinct eigenvalues of A, then the factors

on the right of (20.6) are the elementary divisors of A.

The triangular canonical form

21. Reduction to Jordan canonical form provides complete informa-

tion on both the eigenvalues and the eigenvectors. This form is a

special case of the triangular canonical form. The latter is very much

simpler from the theoretical standpoint and also for practical com-

putation. Its importance is a consequence of the following theorem

and that of § 25.

Every matrix is similar to a matrix of triangular form, that is, a

matrix in which all the elements above the diagonal are zero. We

refer to such a matrix as a lower triangular matrix. As with the

Jordan form there is an alternative transformation leading to a

matrix with zeros below the diagonal {upper triangular). The proof is

comparatively elementary but is deferred until § 42 when the appro-

priate transformation matrices have been introduced.

Clearly the diagonal elements are the eigenvalues with the appro-

priate multiplicities. For if these elements are pt then the characteristic

polynomial of the triangular matrix is JJ {pt —A) and we have seen

that the characteristic polynomial is invariant under similarity trans-

formations.

Hermitian and symmetric matrices

22. Matrices which are real and symmetric are of special importance

in practice as we shall see in § 31. We could extend this class of

matrices by including all matrices which are symmetric and have

complex elements. However, such an extension proves to be of little

value because matrices of this extended class do not have many of

the more important properties of real symmetric matrices. The most

useful extension is to the class of matrices of the form (P+iQ) where

P is real and symmetric and Q is real and skew symmetric, that is

QT = -Q. (22.1)

THEORETICAL BACKGROUND

25

Matrices of this class are called Hermitian matrices. If A is Her-

mitian we have from the definition

AT = A, (22.2)

where A denotes the matrix whose elements are the complex con-

jugates of those of A. The matrix AT is frequently denoted by AB

and is referred to as the Hermitian transposed matrix. Similarly xB

is the row vector with components equal to the complex conjugates

of those of the column vector x. The notation is a convenient one and

has the advantage that results proved for Hermitian matrices may

be converted to the corresponding results for real symmetric matrices

by replacing AH by AT and ж® by xT. If a is a scalar, aH is defined to

be the complex conjugate of a. It follows immediately from the

definitions that

(AB)B = A

(хл)н = X

= CbBbAh

AB — A

(22.3)

(ABC)H

while

(22.4)

if A is Hermitian.

Note that ^x is the inner-product of у and x as normally under-

stood and г. n J! tt .лл —

У x = (у x)B = xBy. (22.5)

The inner-product xBx is real and positive for all x other than the

null vector since it is equal to the sum of the squares of the moduli

of the elements of x.

Elementary properties of Hermitian matrices

23. The eigenvalues of a Hermitian matrix are all real. For if

then

Ax = Ax

xBAx = AxBx.

(23.1)

(23.2)

Now we have remarked that xBx is real and positive for a non-null x.

Further we have

(xBAx)B == xBABx?B — Xя Ax,

(23.3)

and since Xя Ax is a scalar this means that it is real. Hence A is real.

For real symmetric matrices, real eigenvalues imply real eigenvectors,

but the eigenvectors of a complex Hermitian matrix are in general

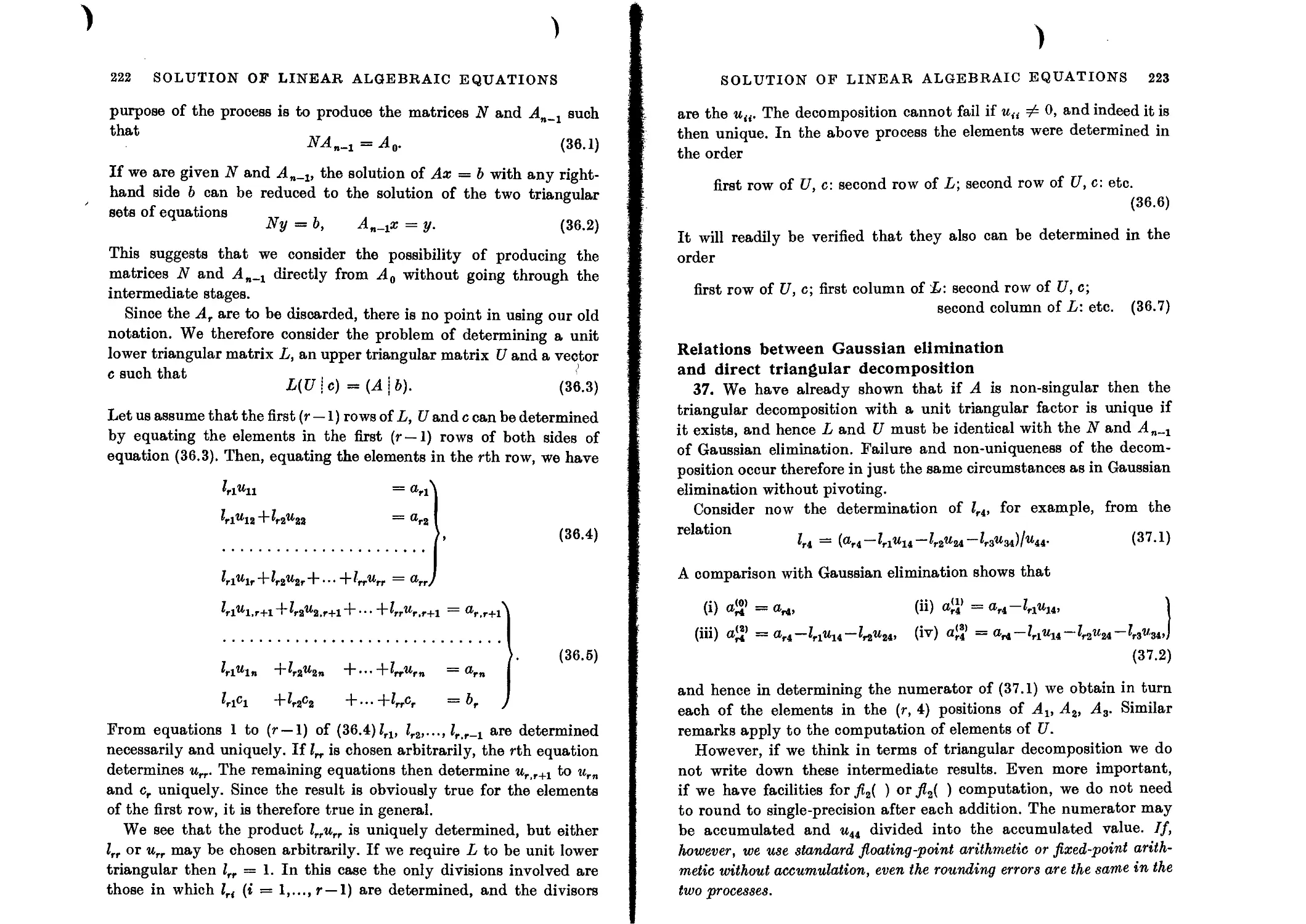

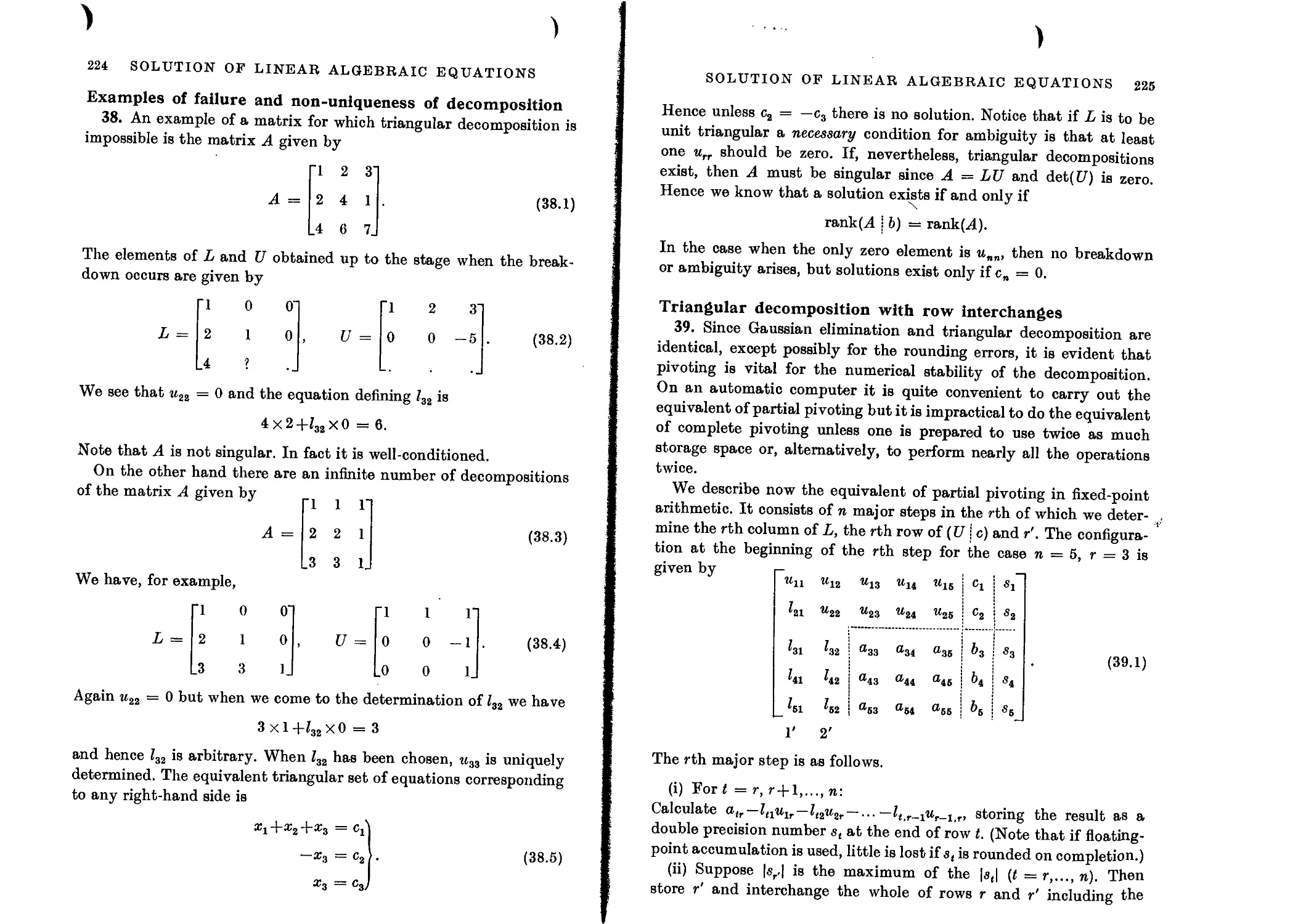

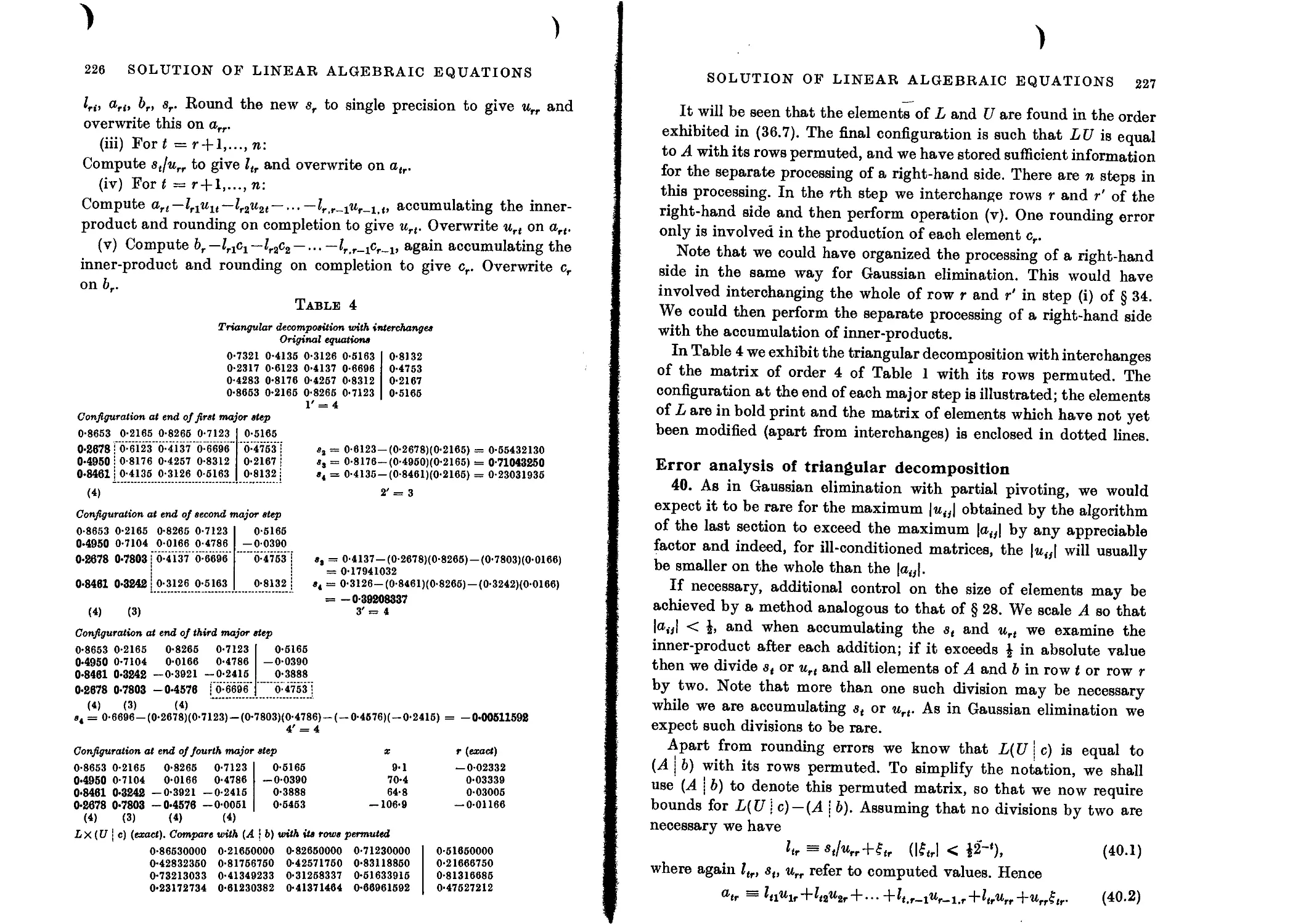

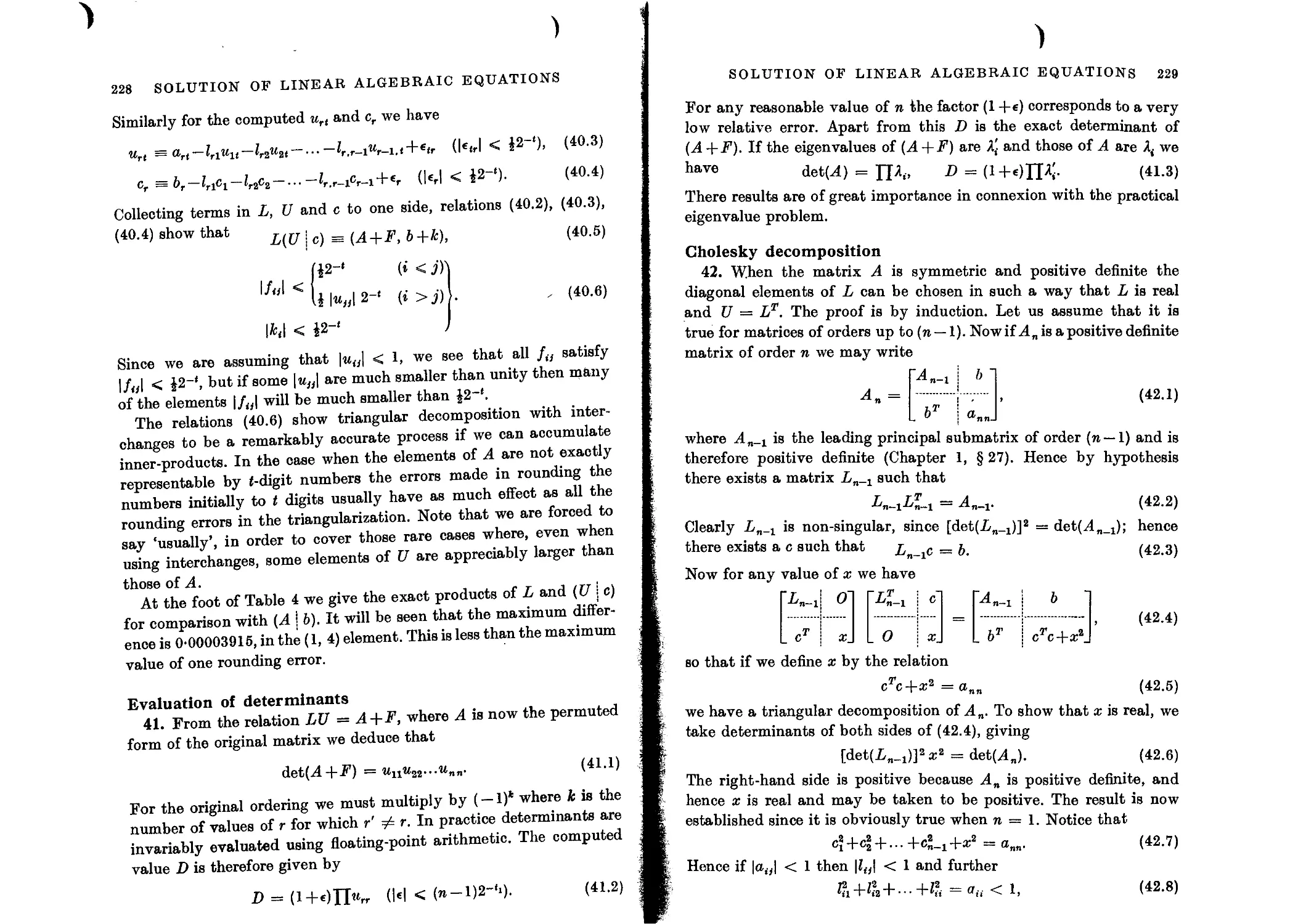

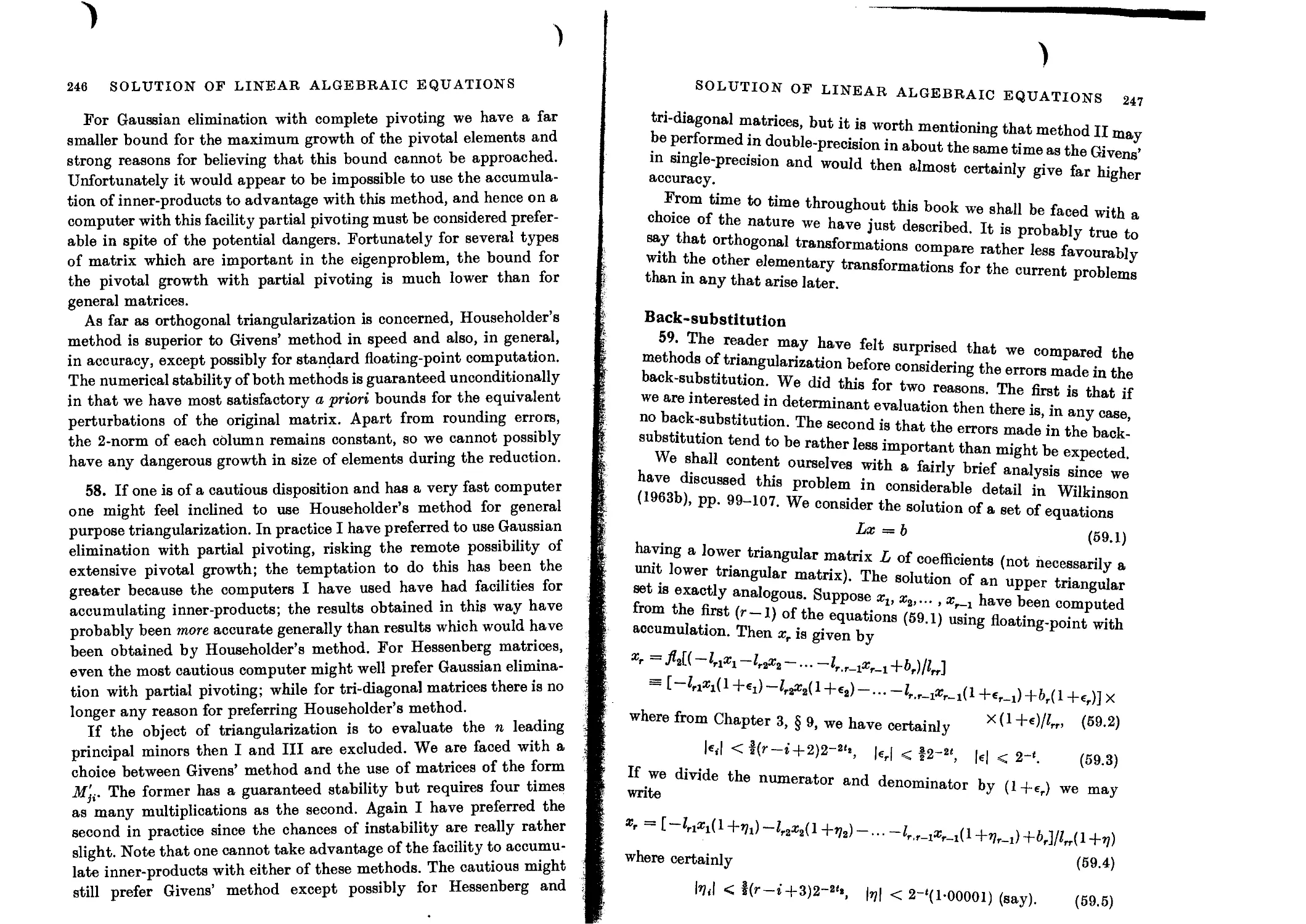

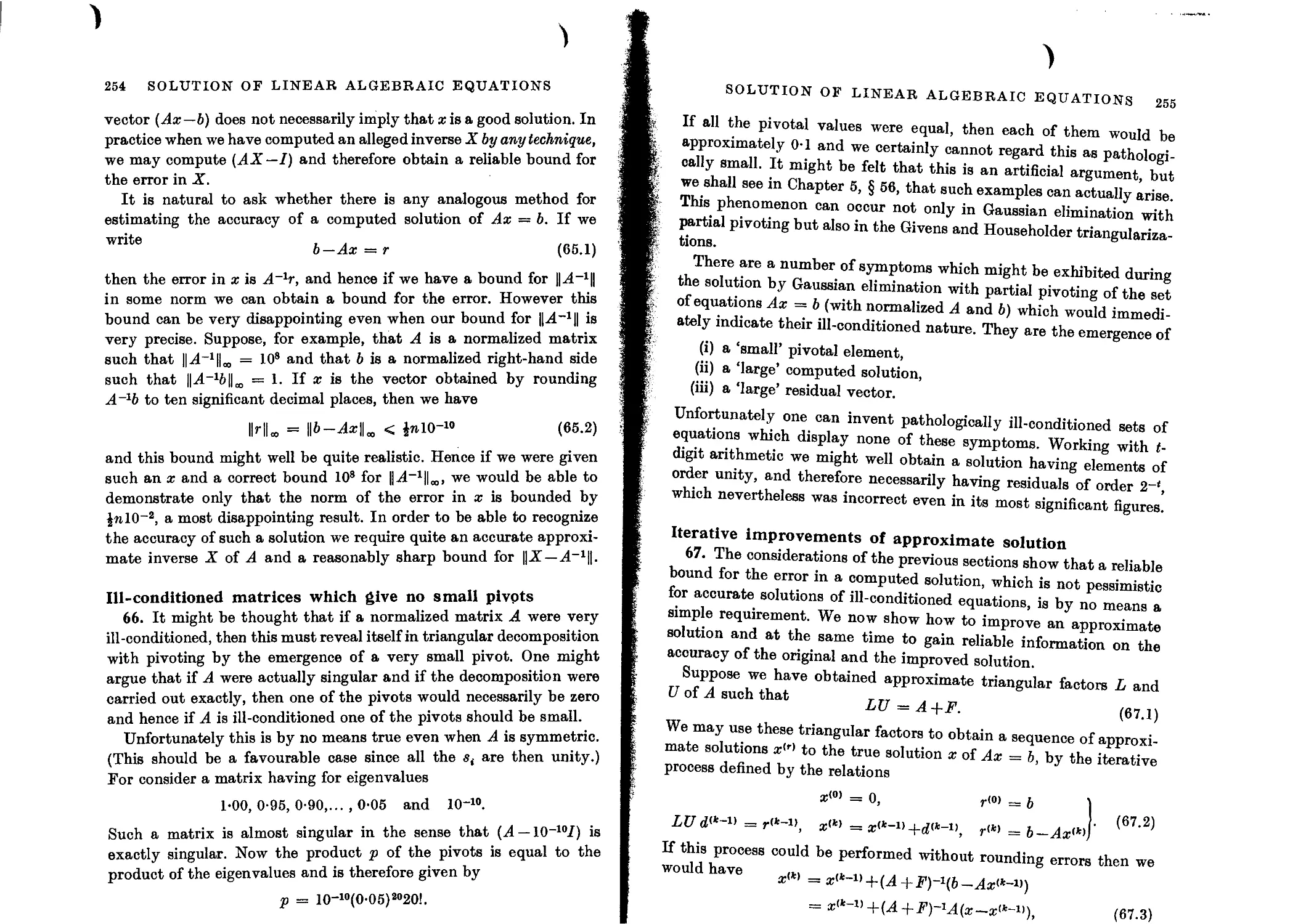

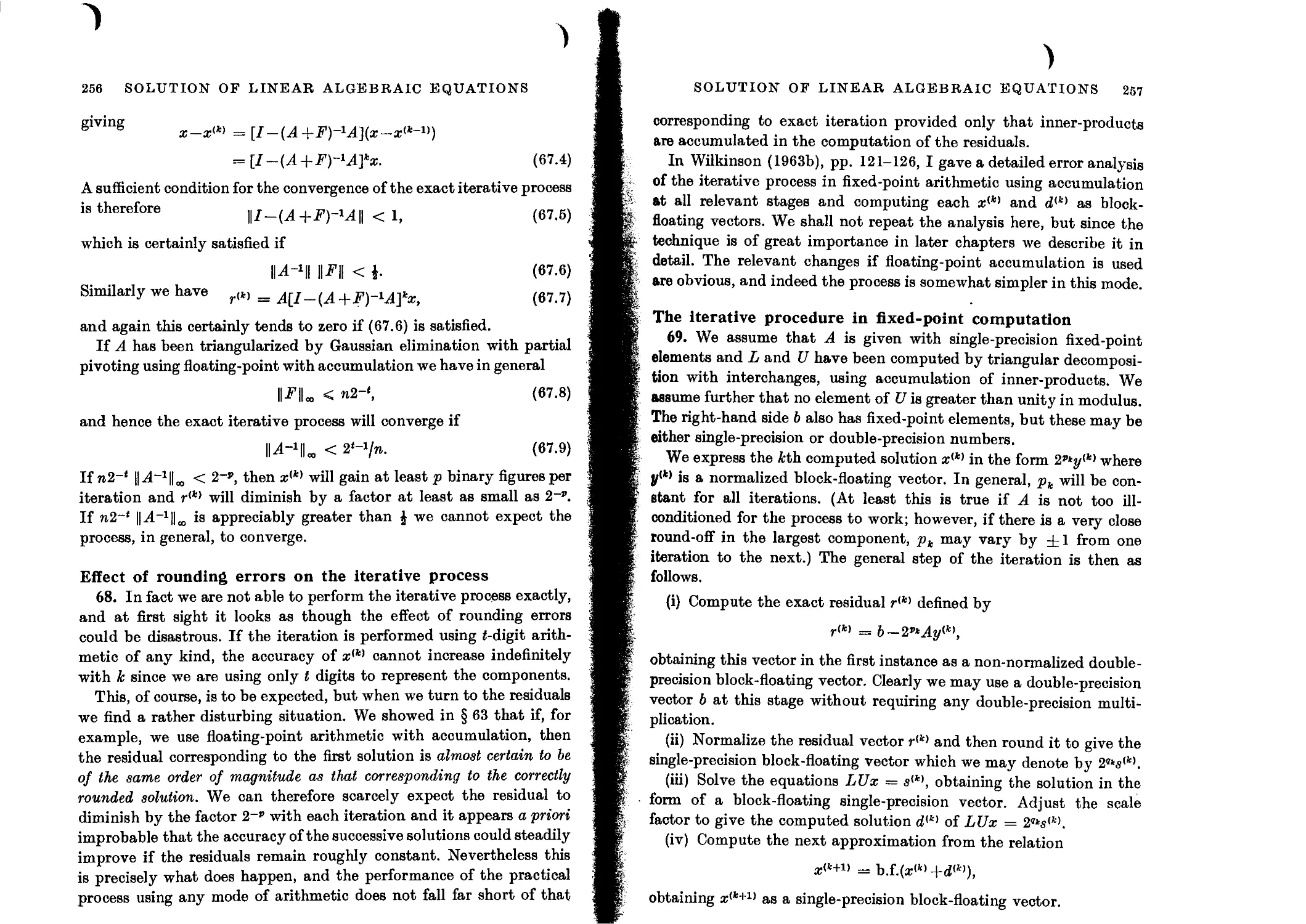

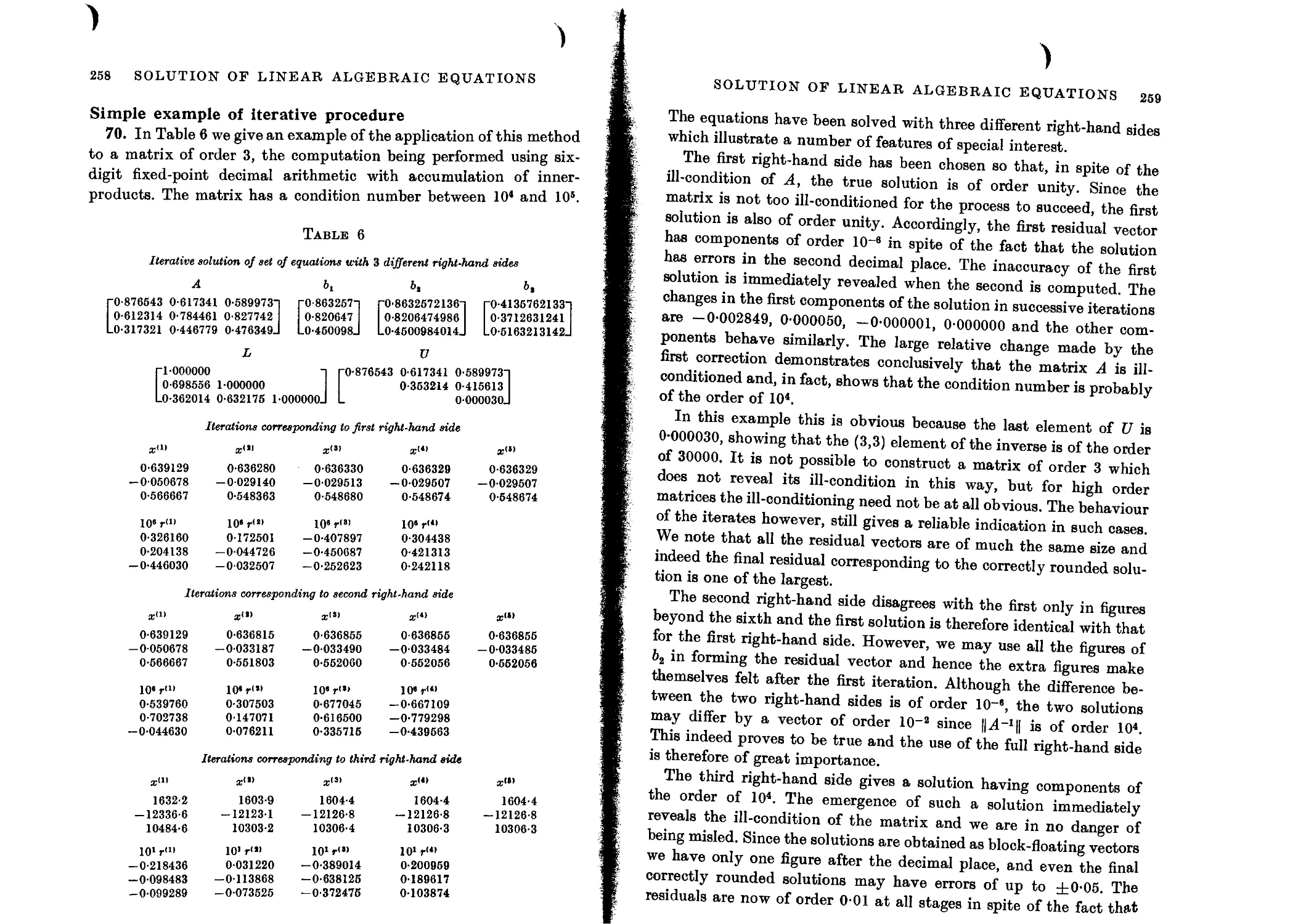

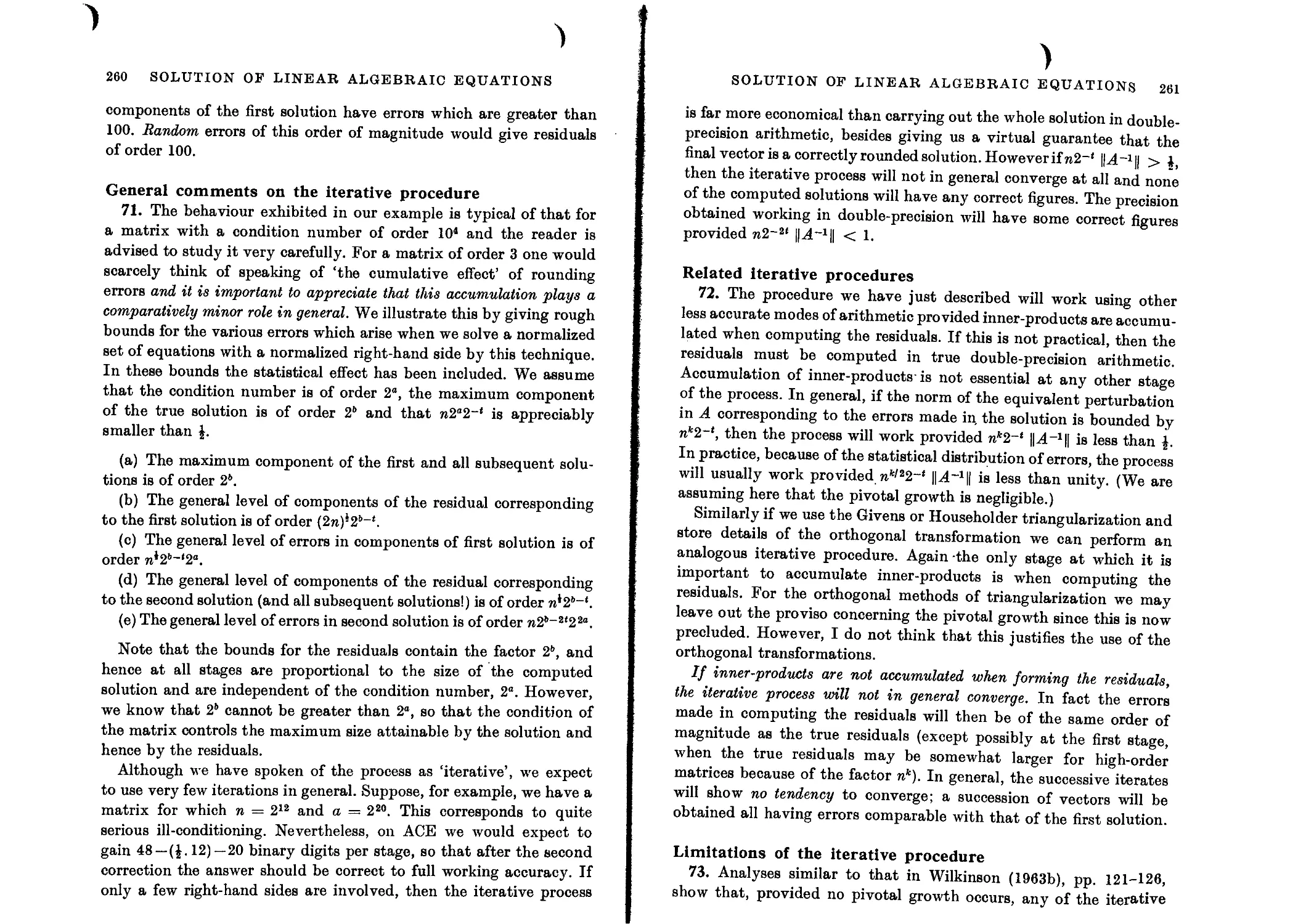

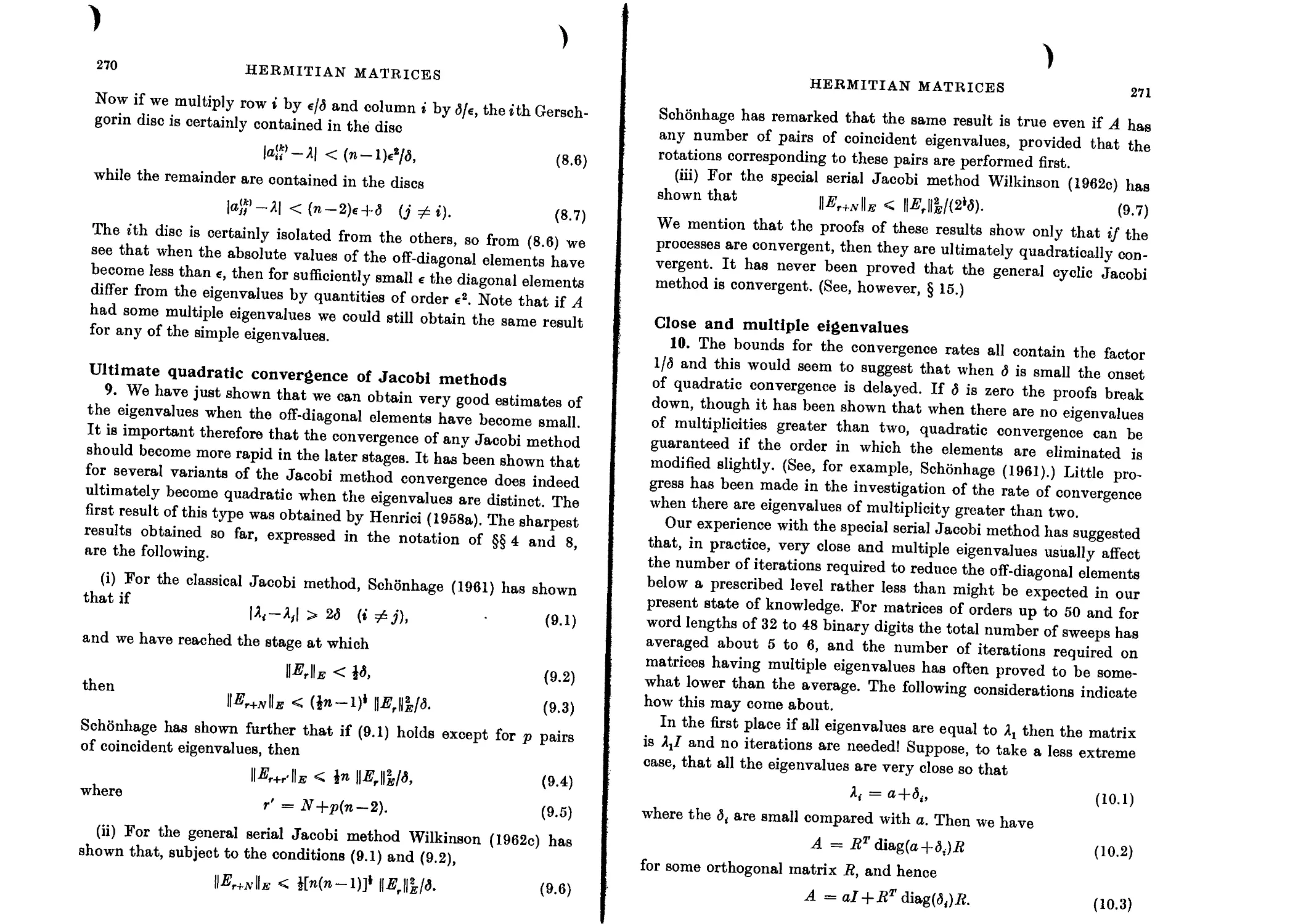

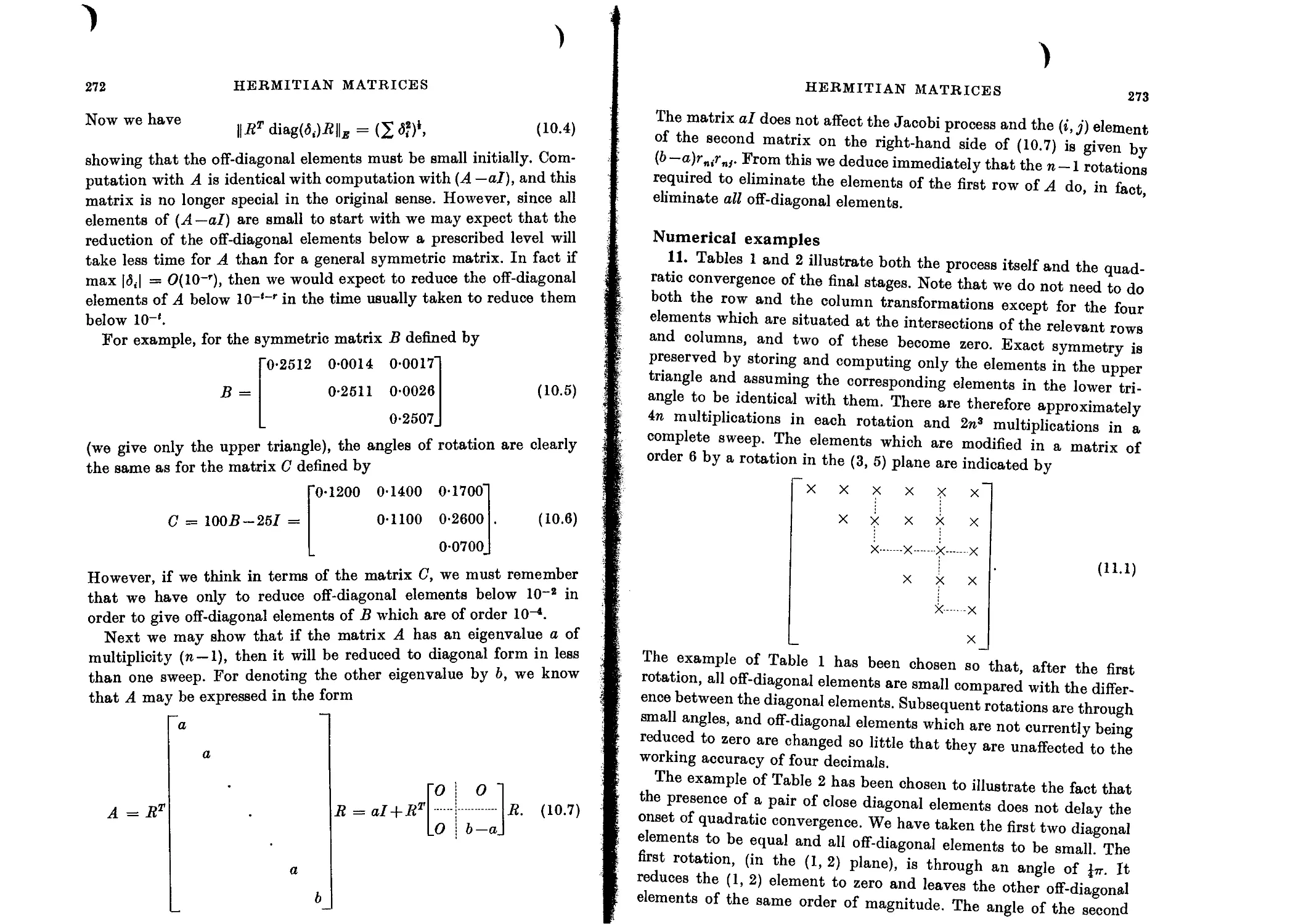

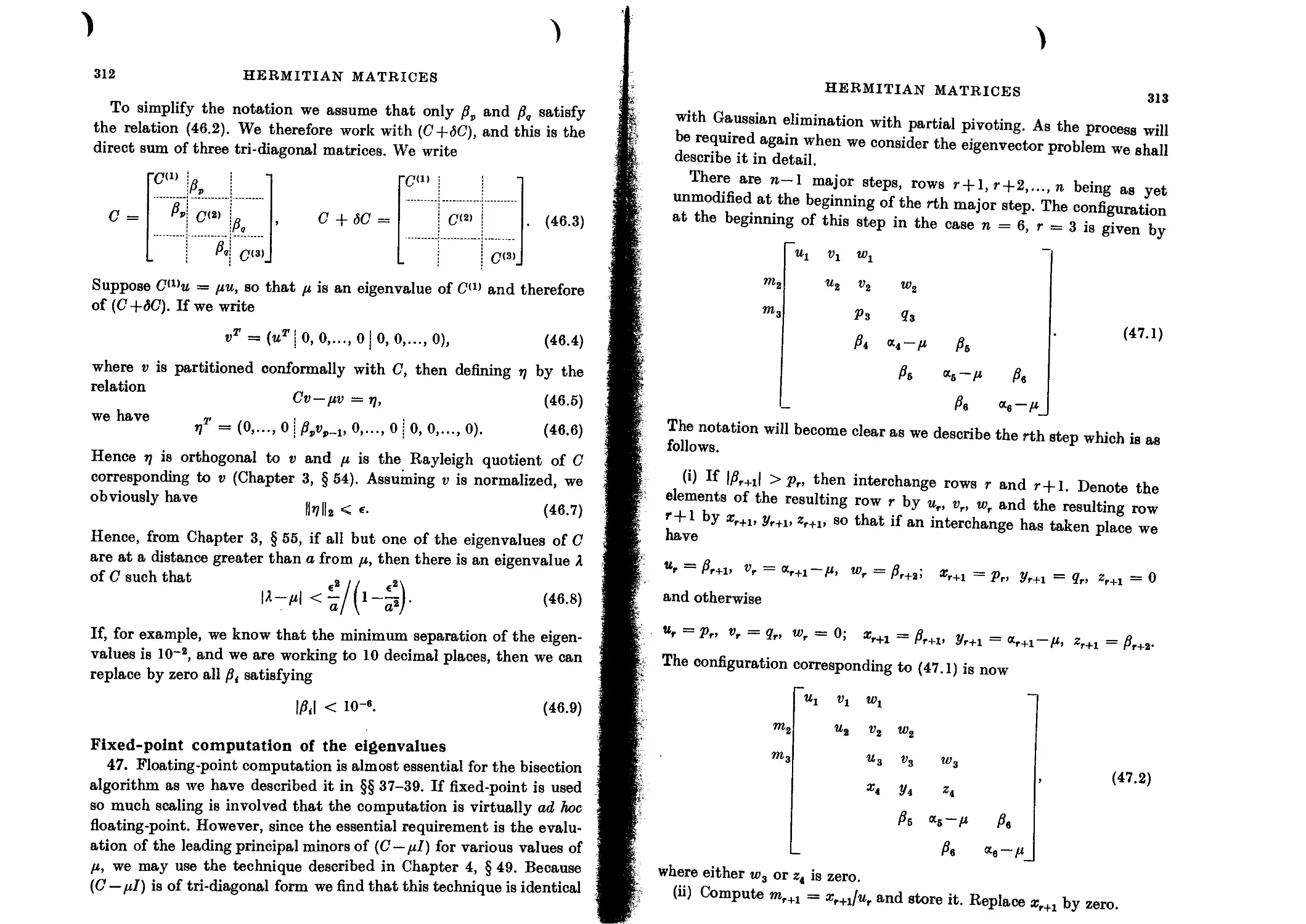

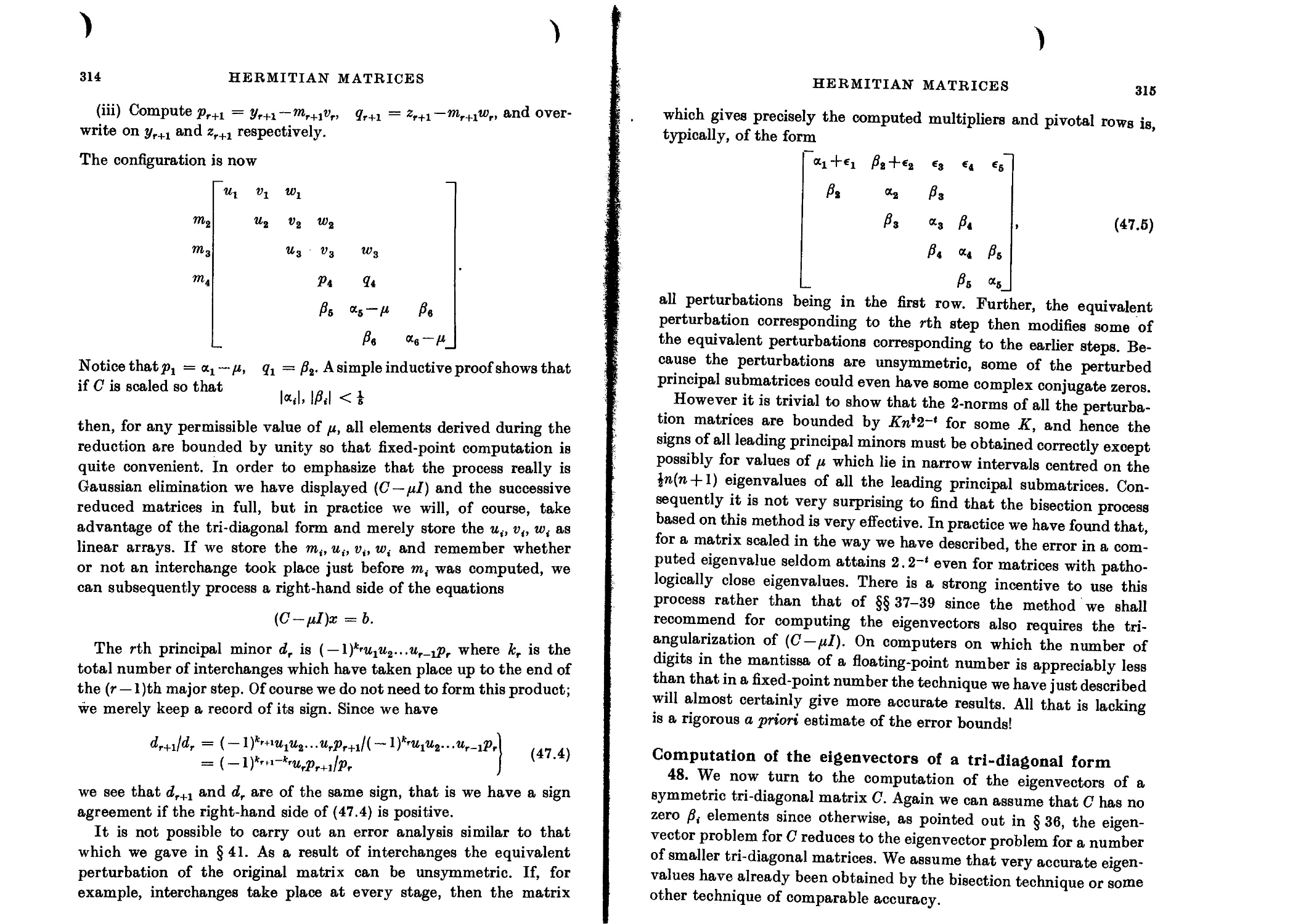

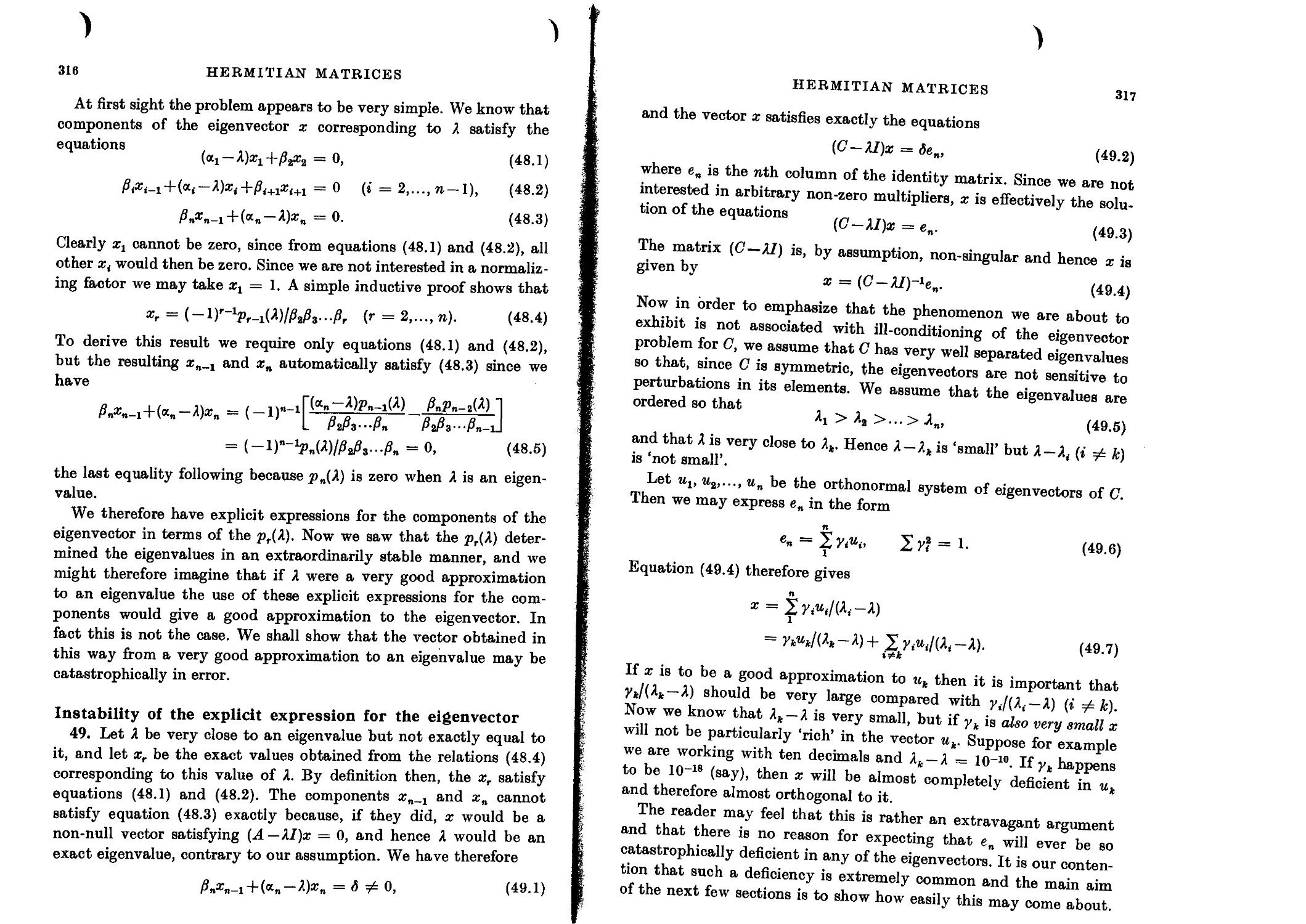

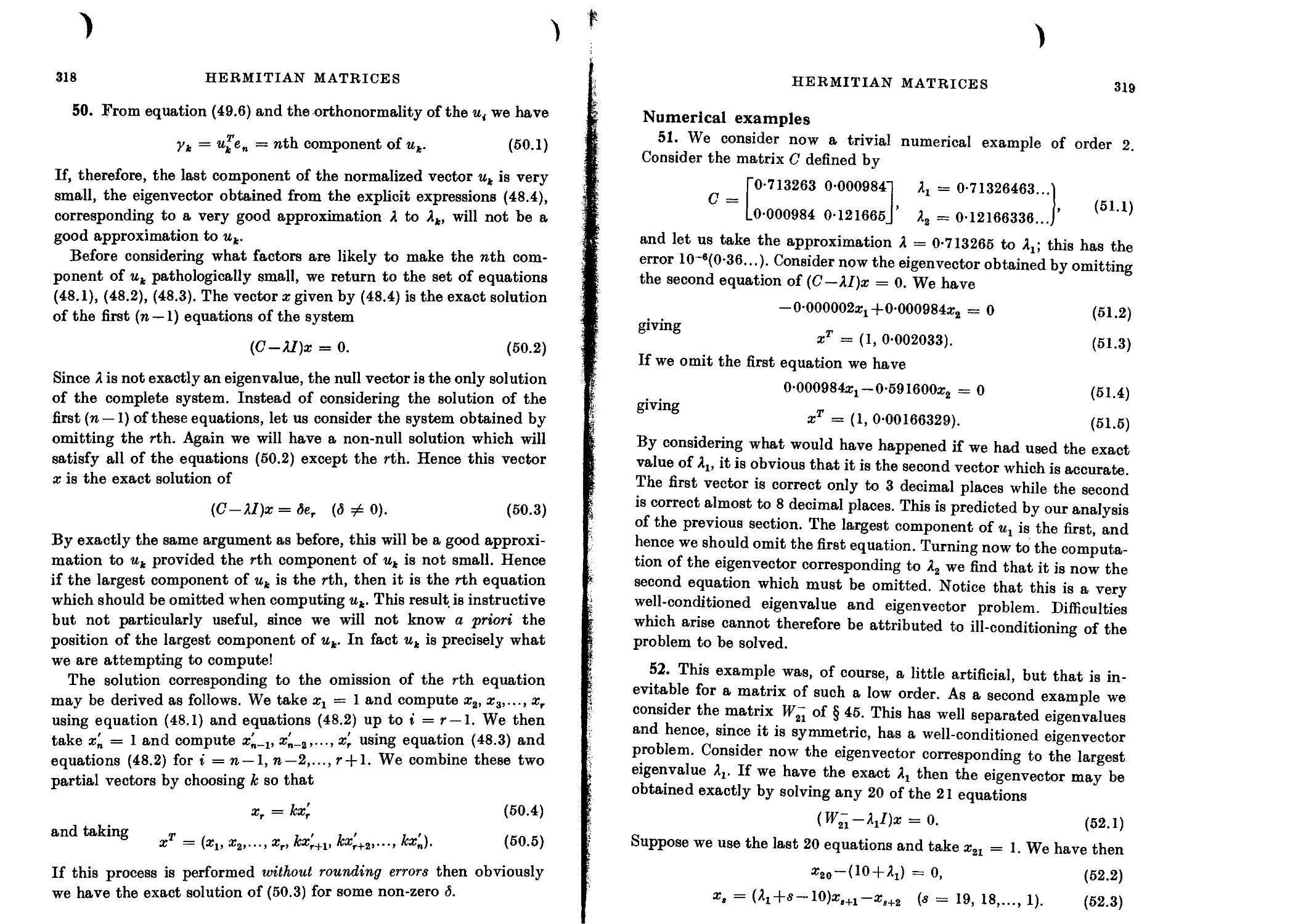

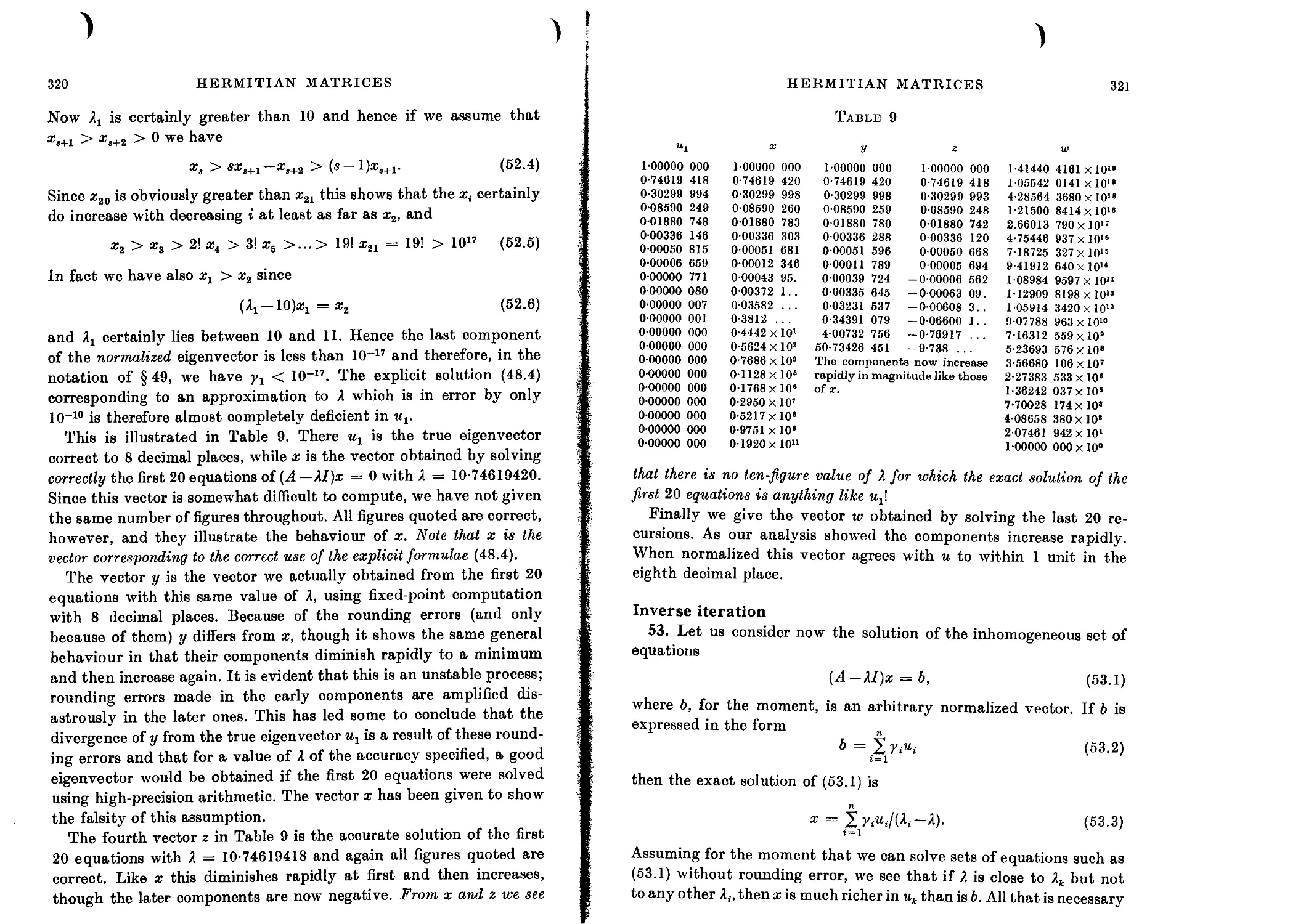

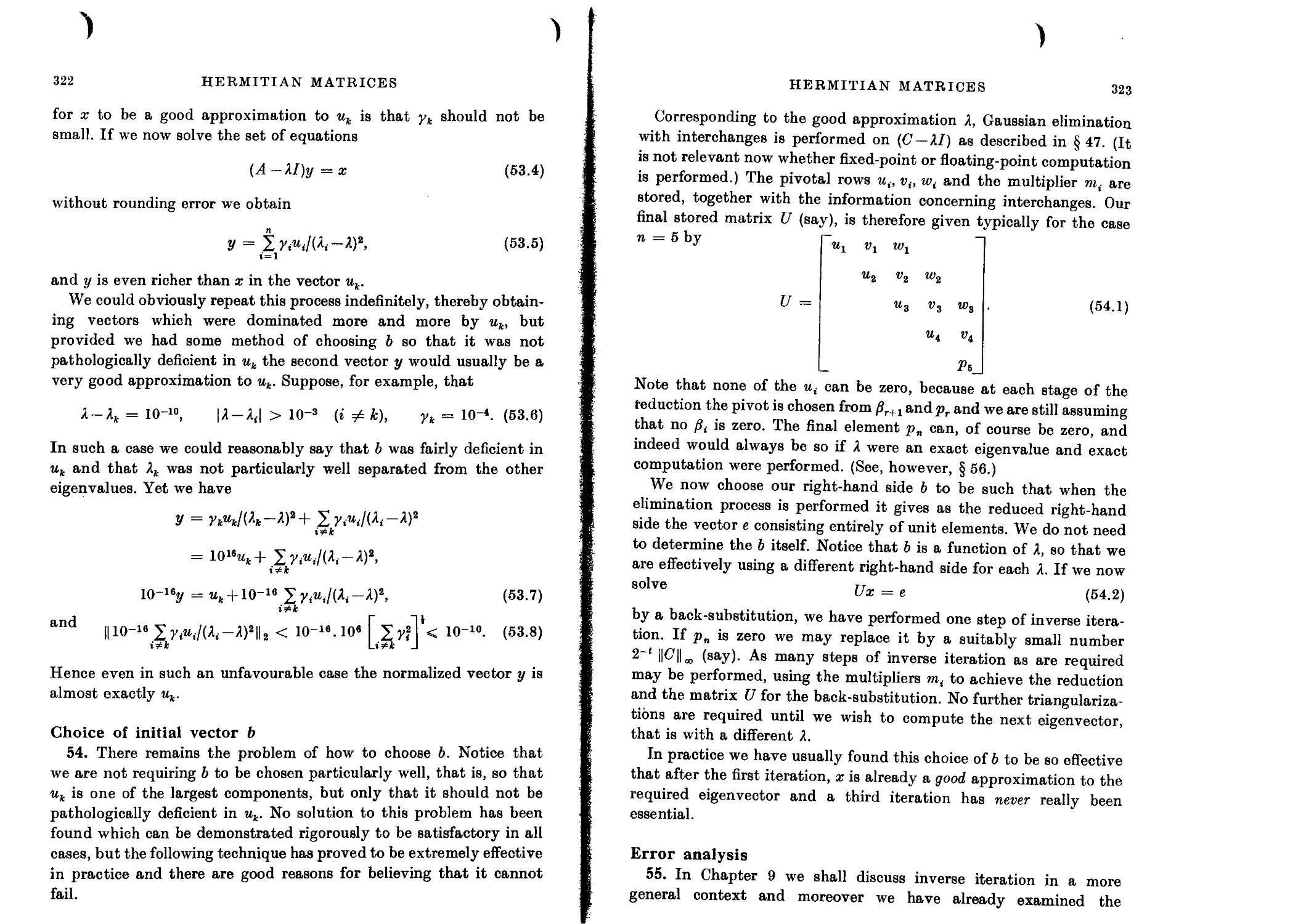

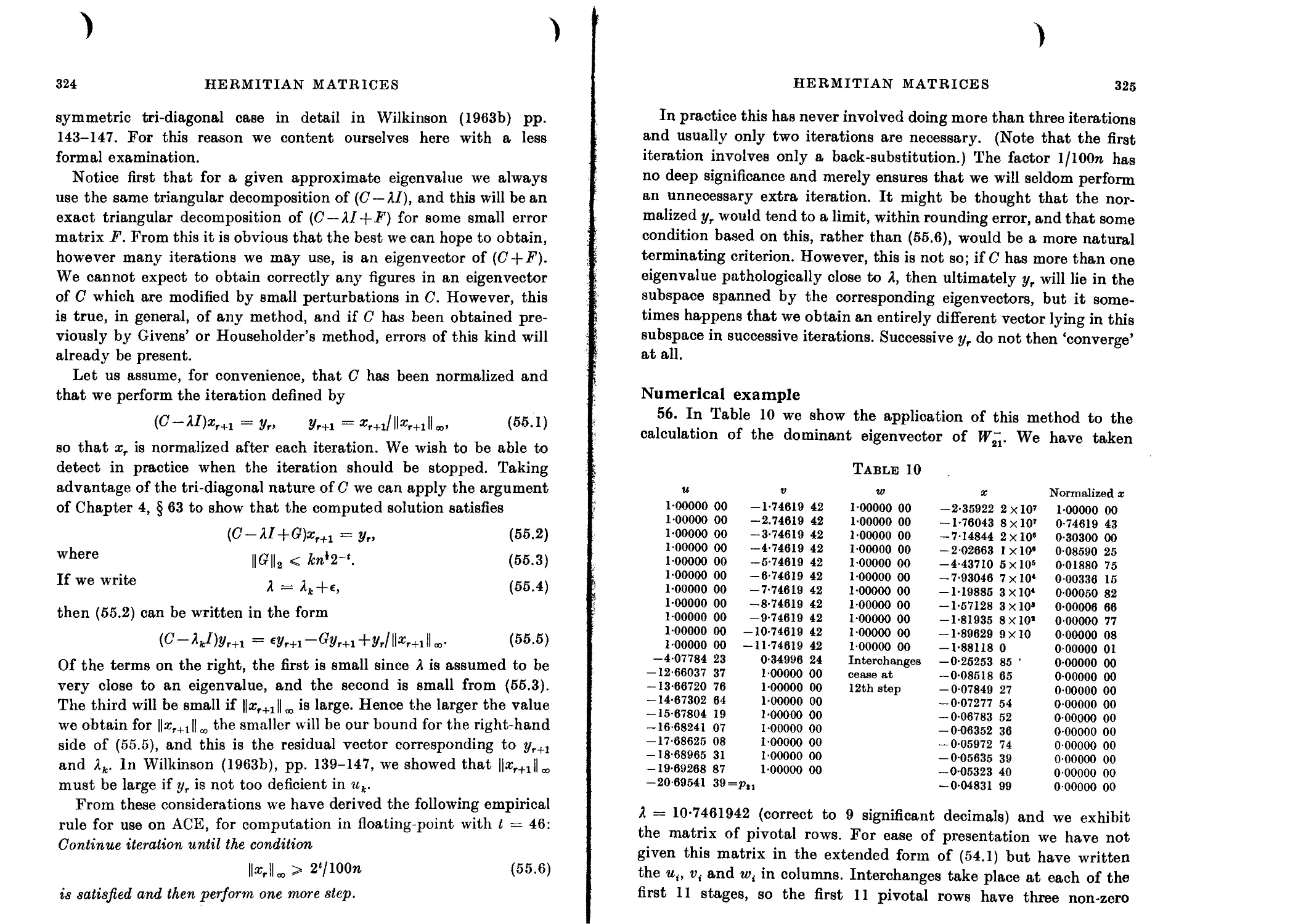

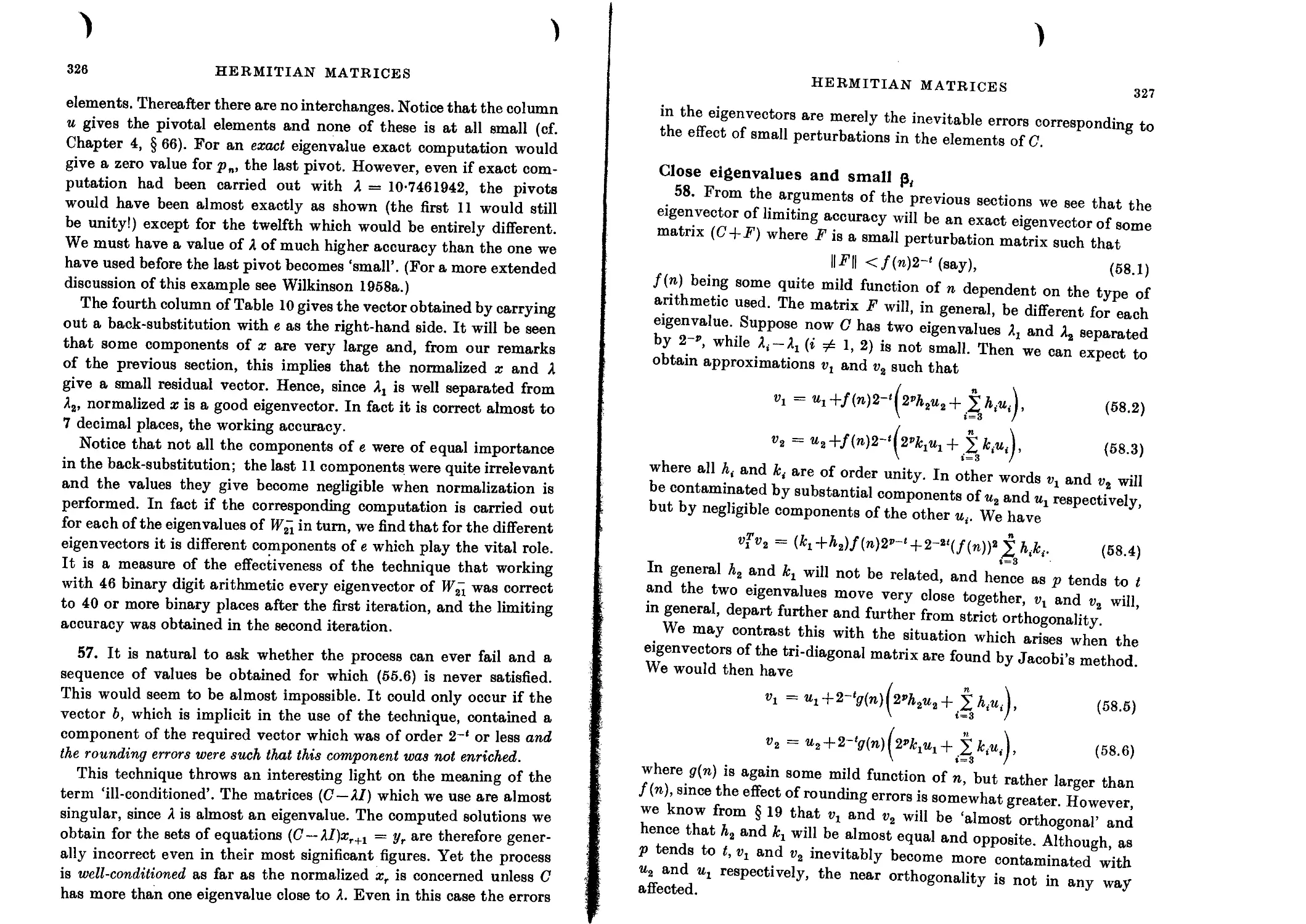

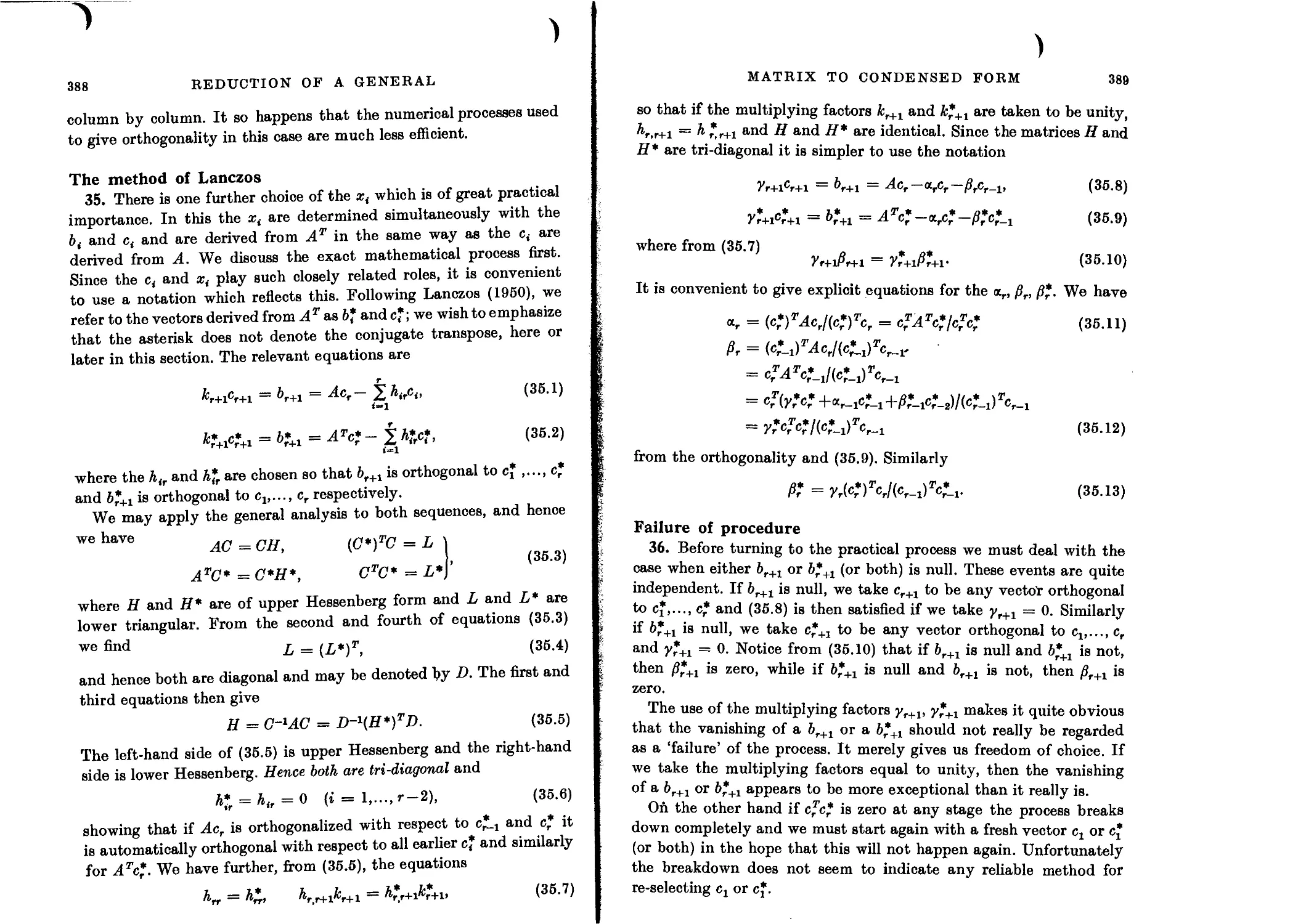

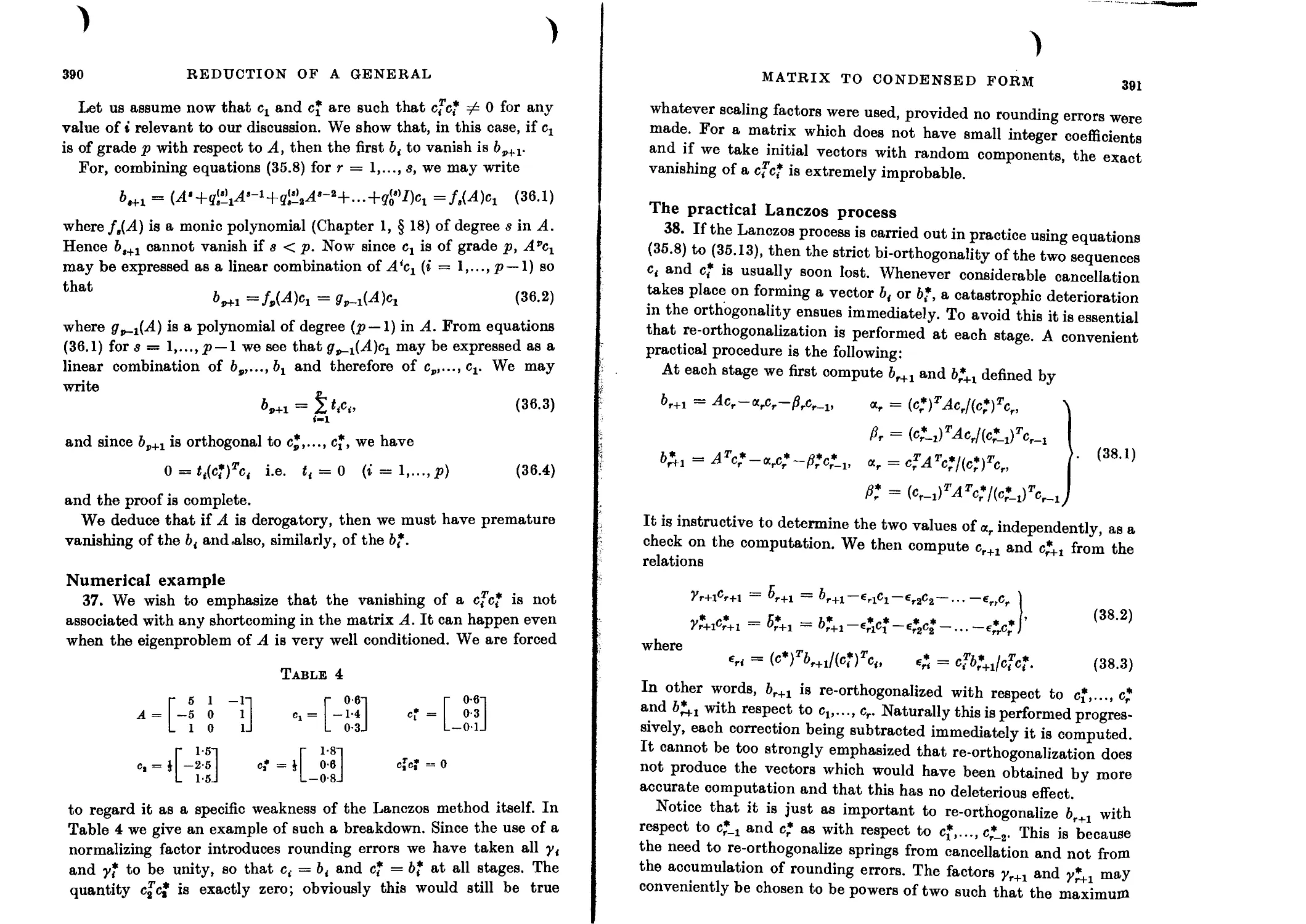

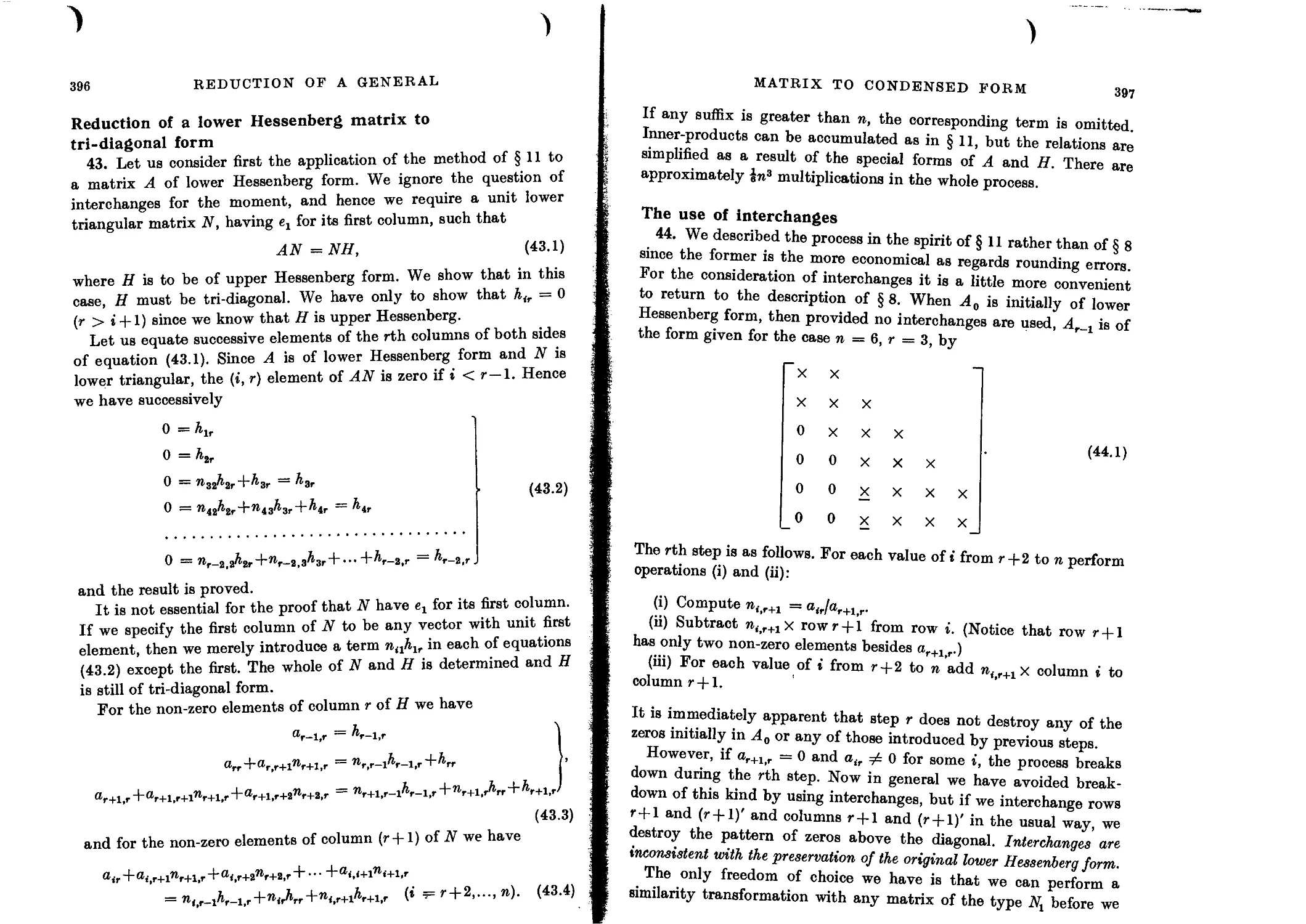

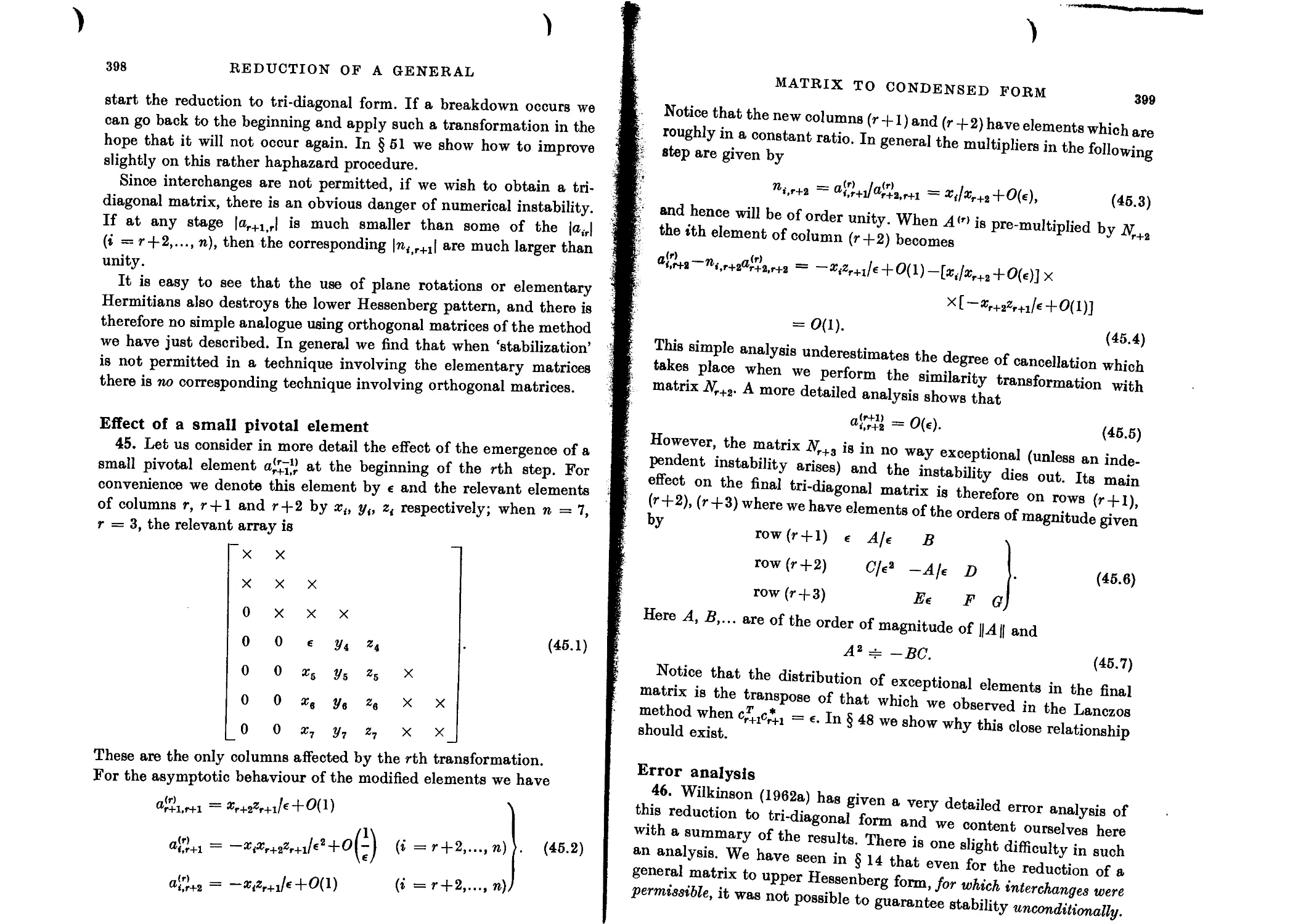

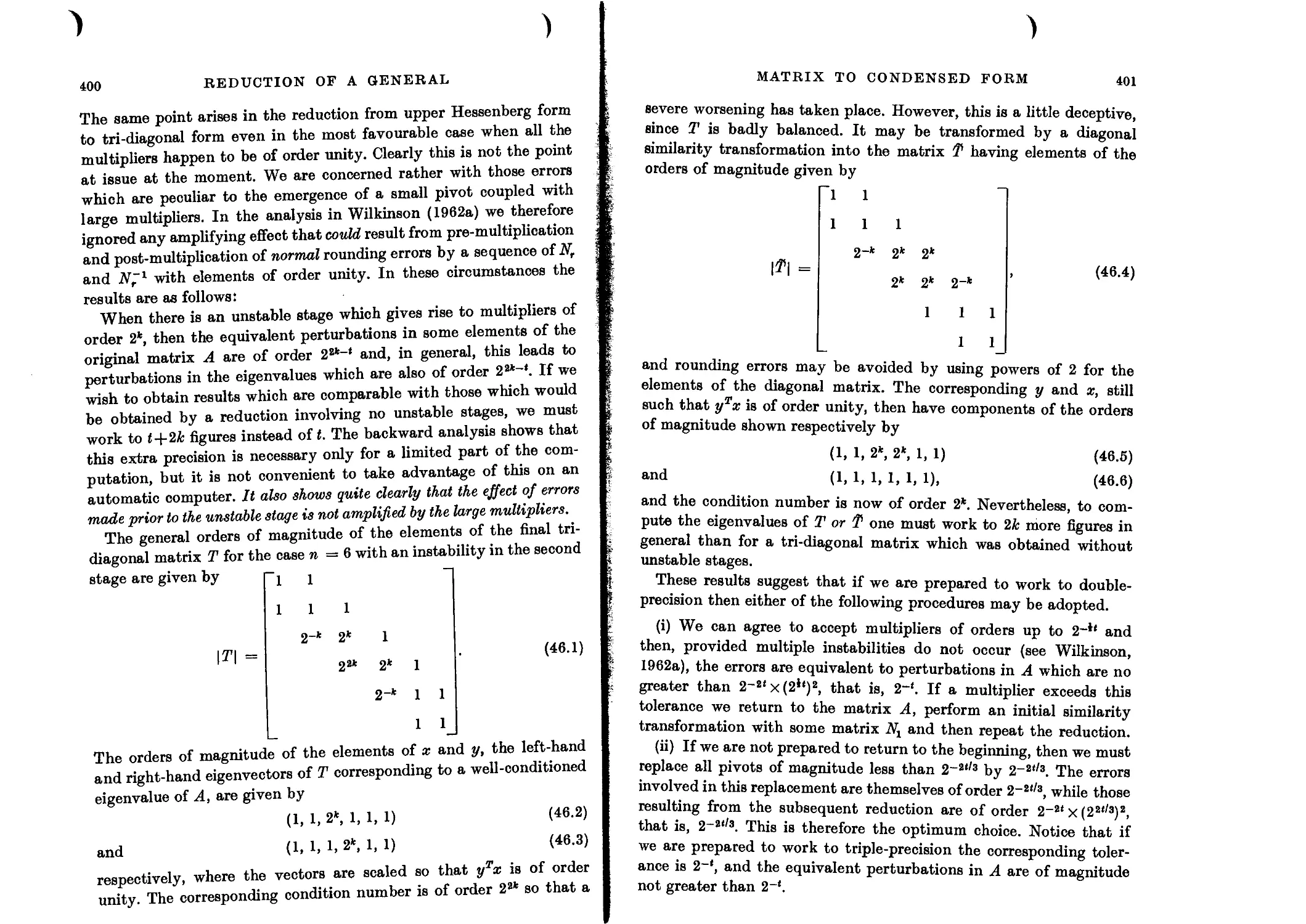

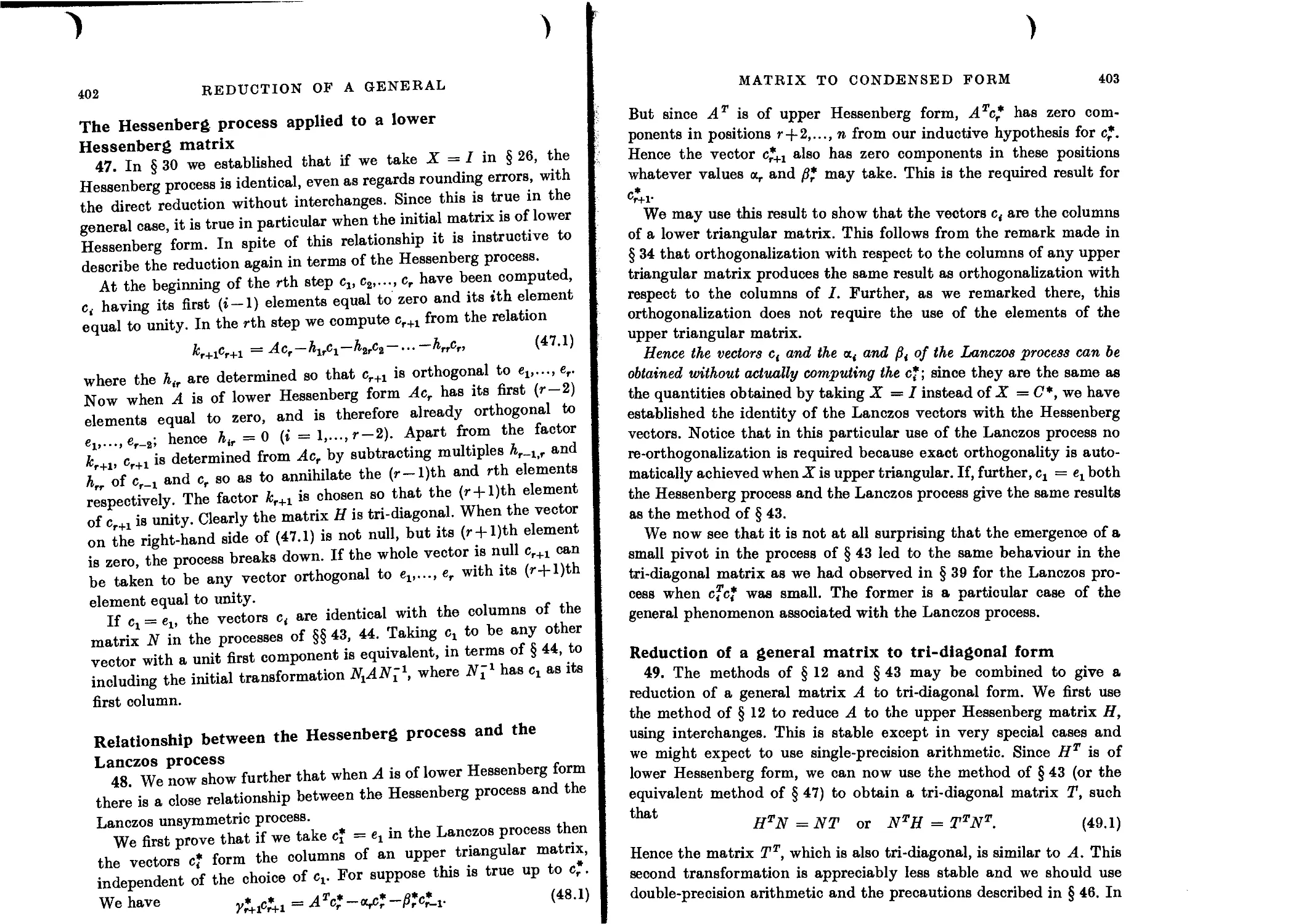

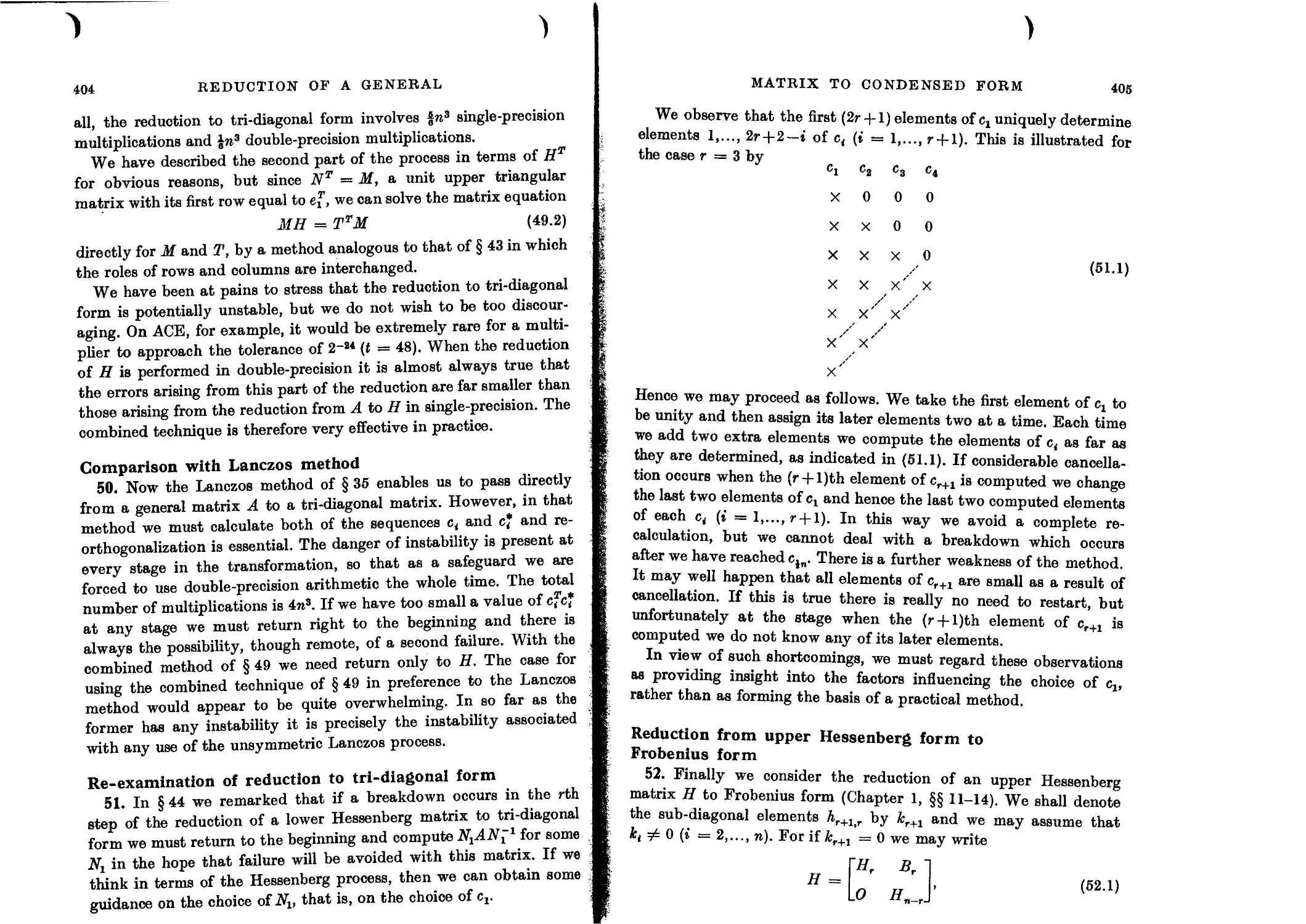

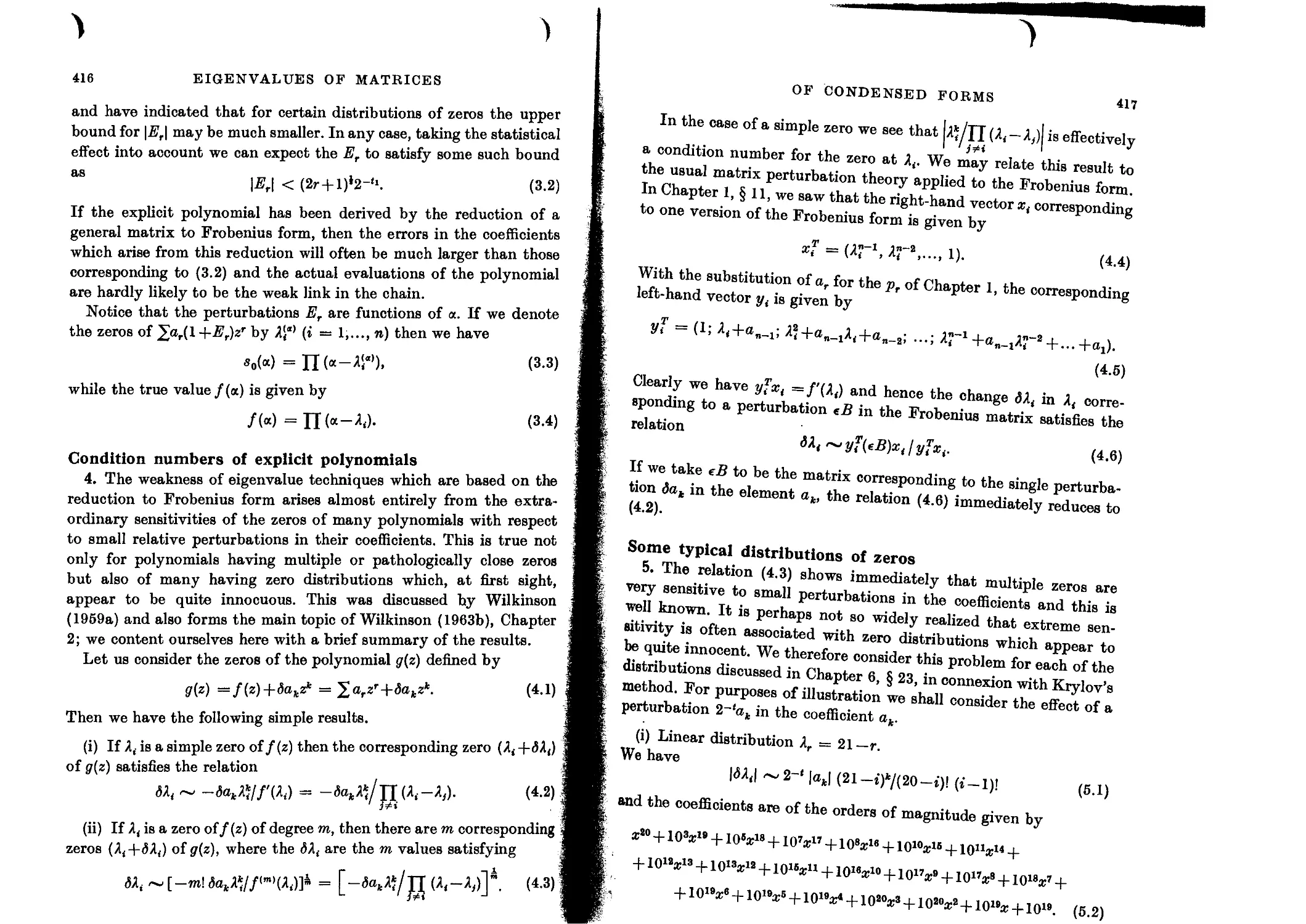

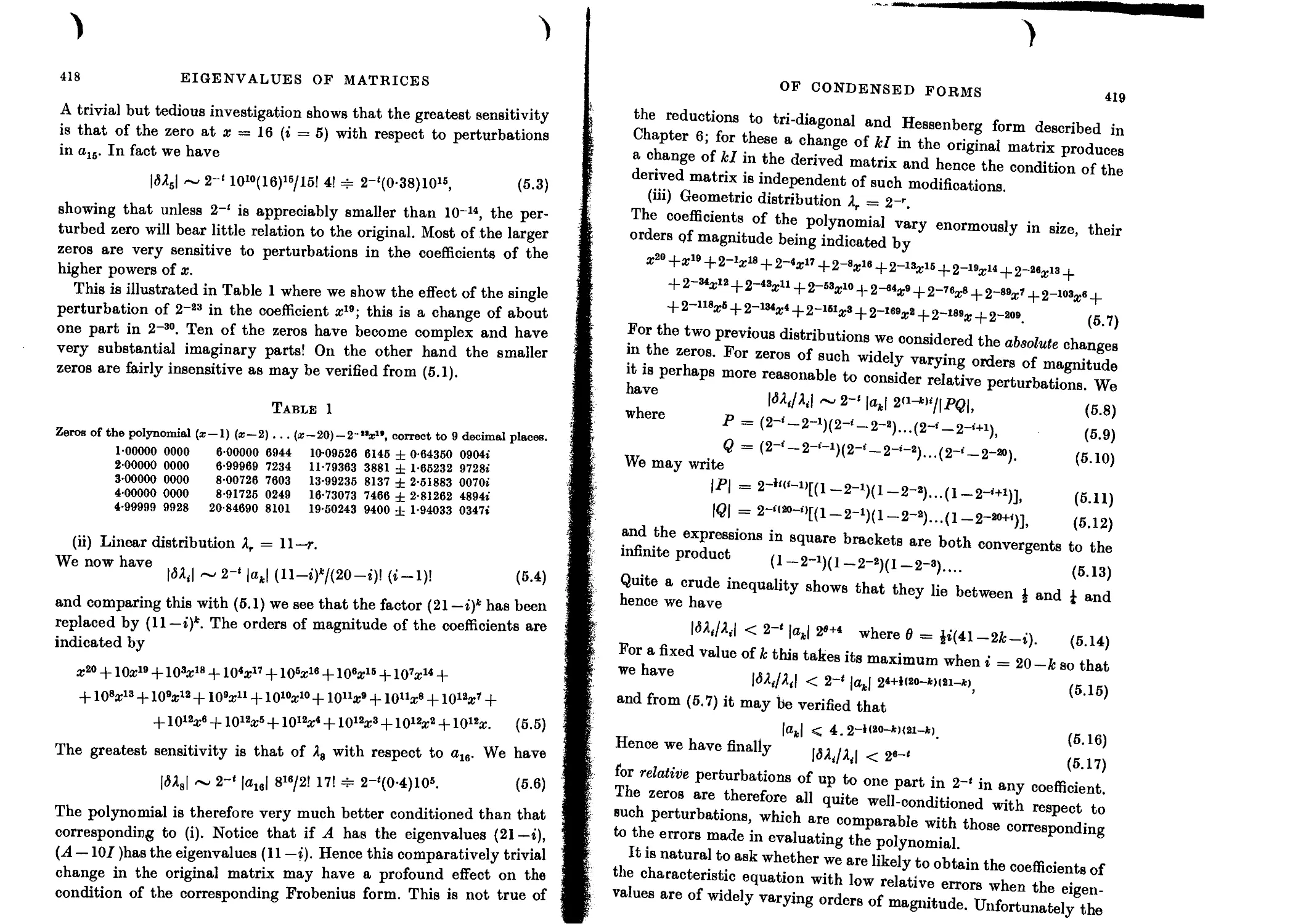

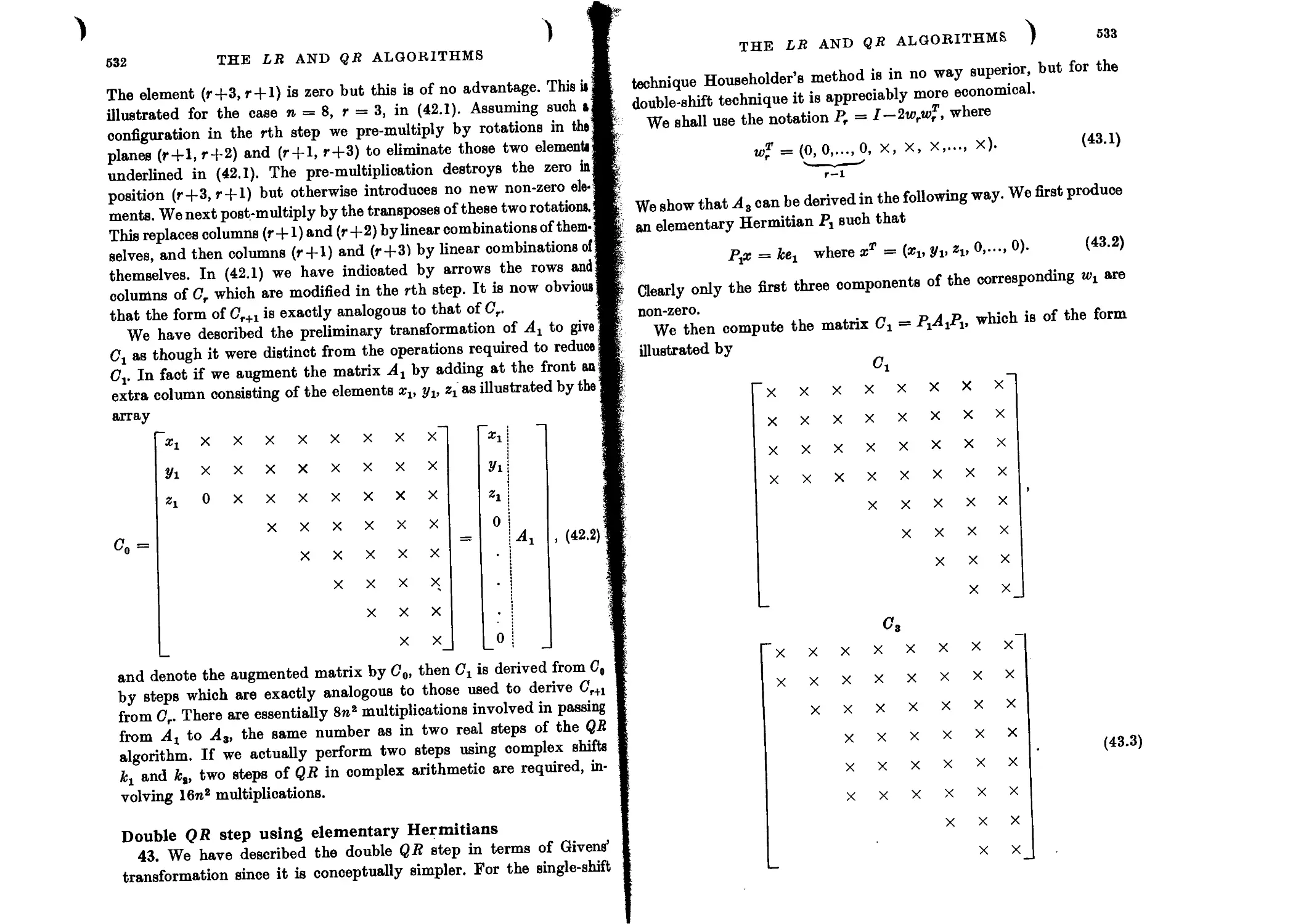

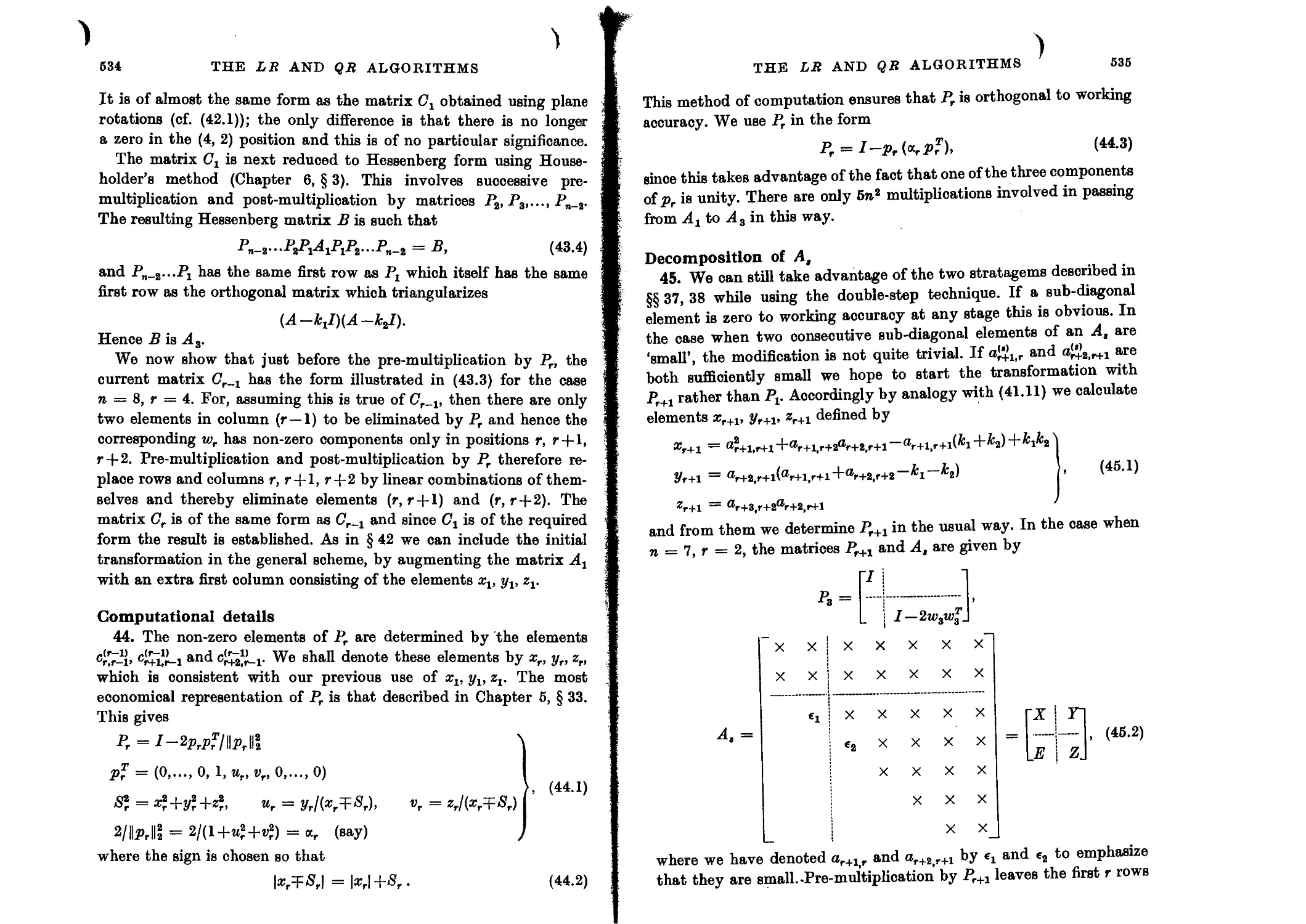

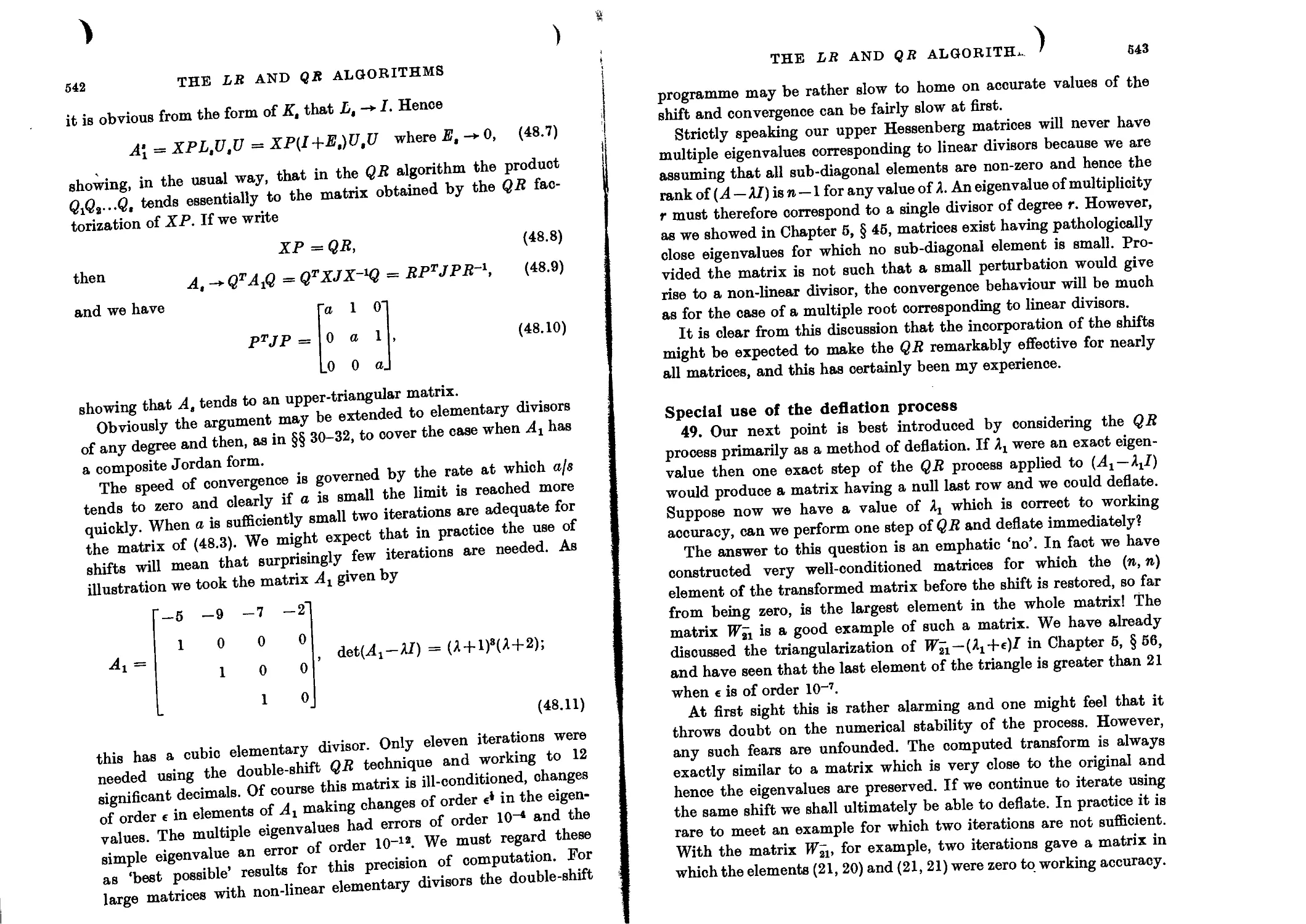

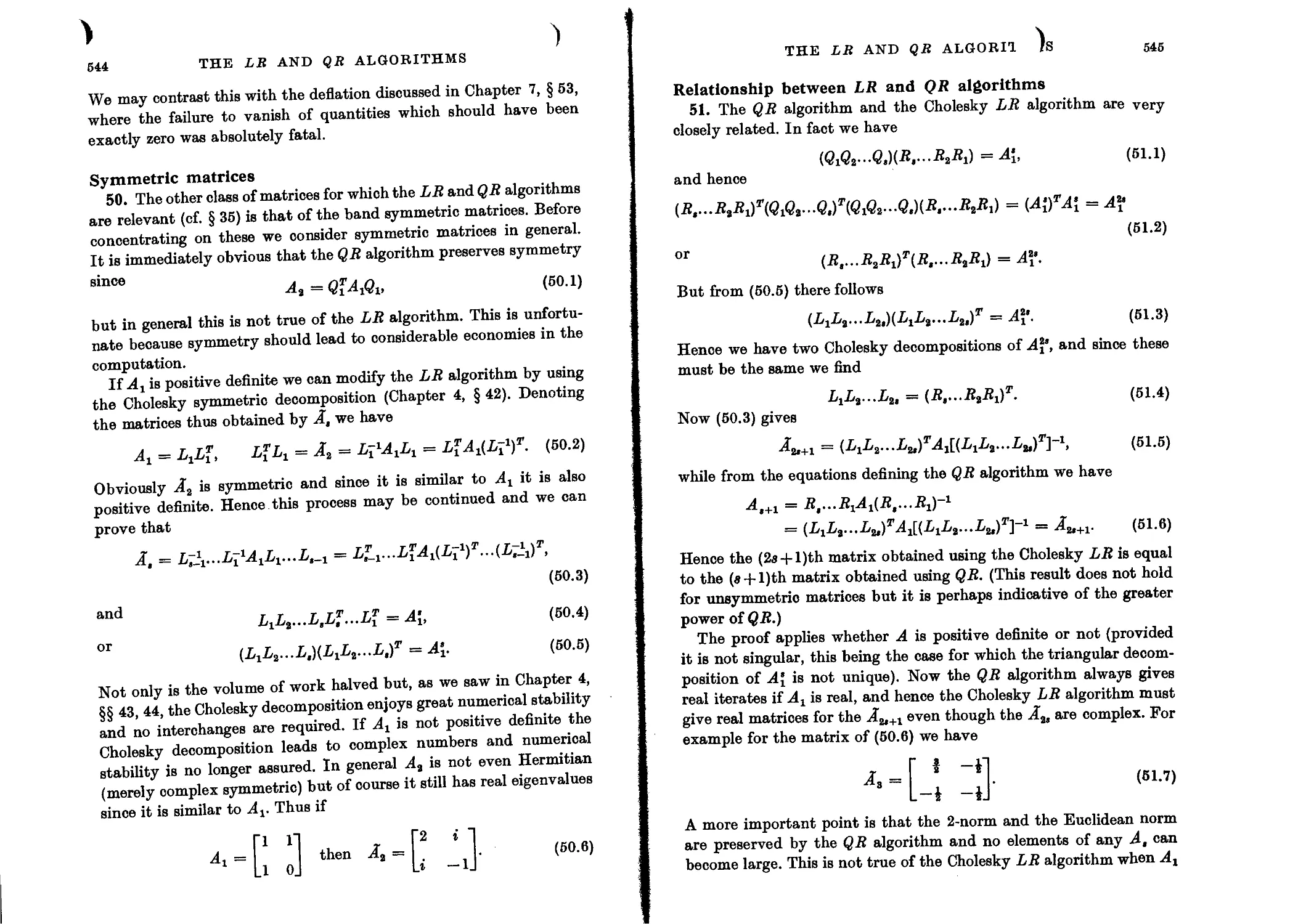

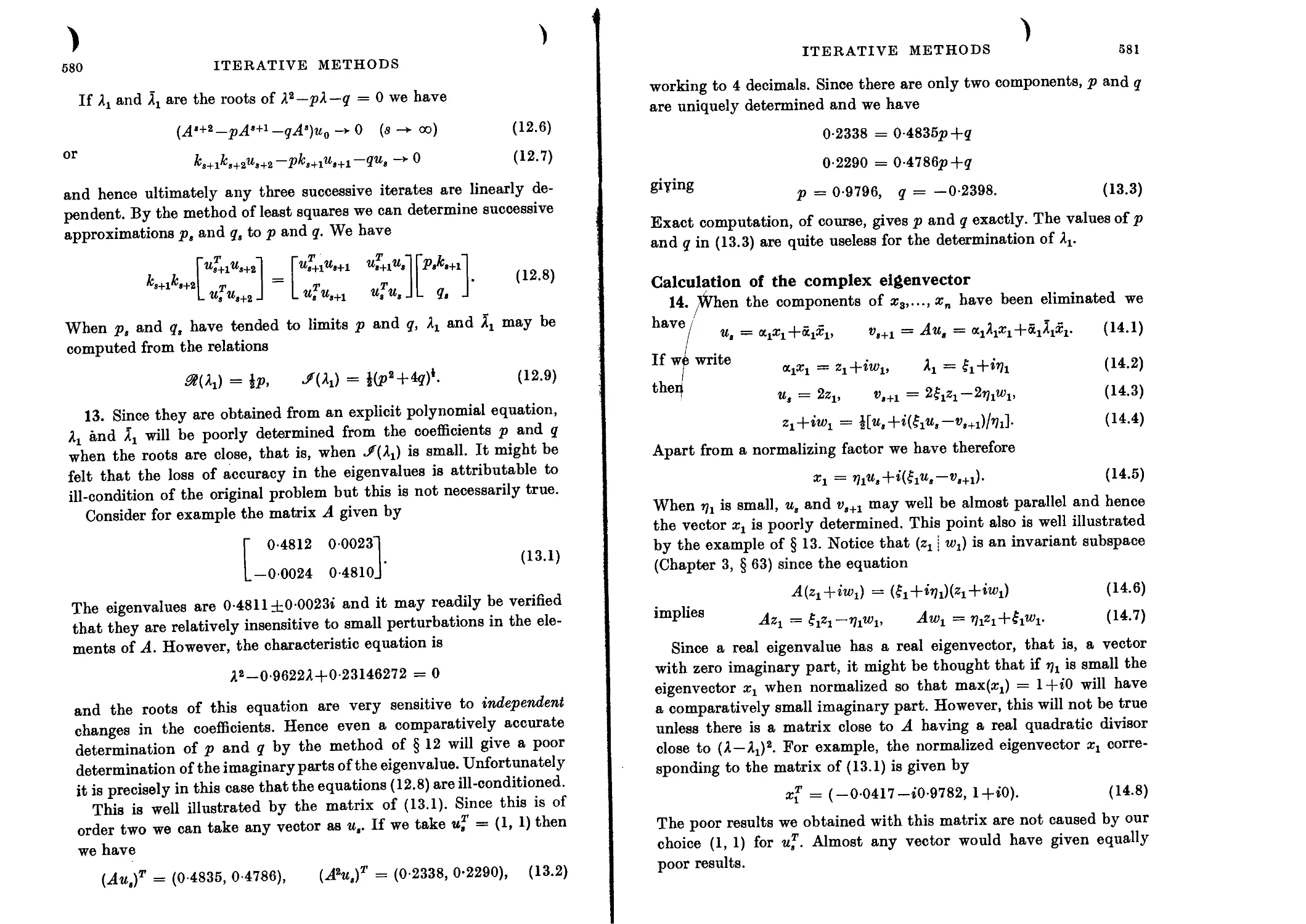

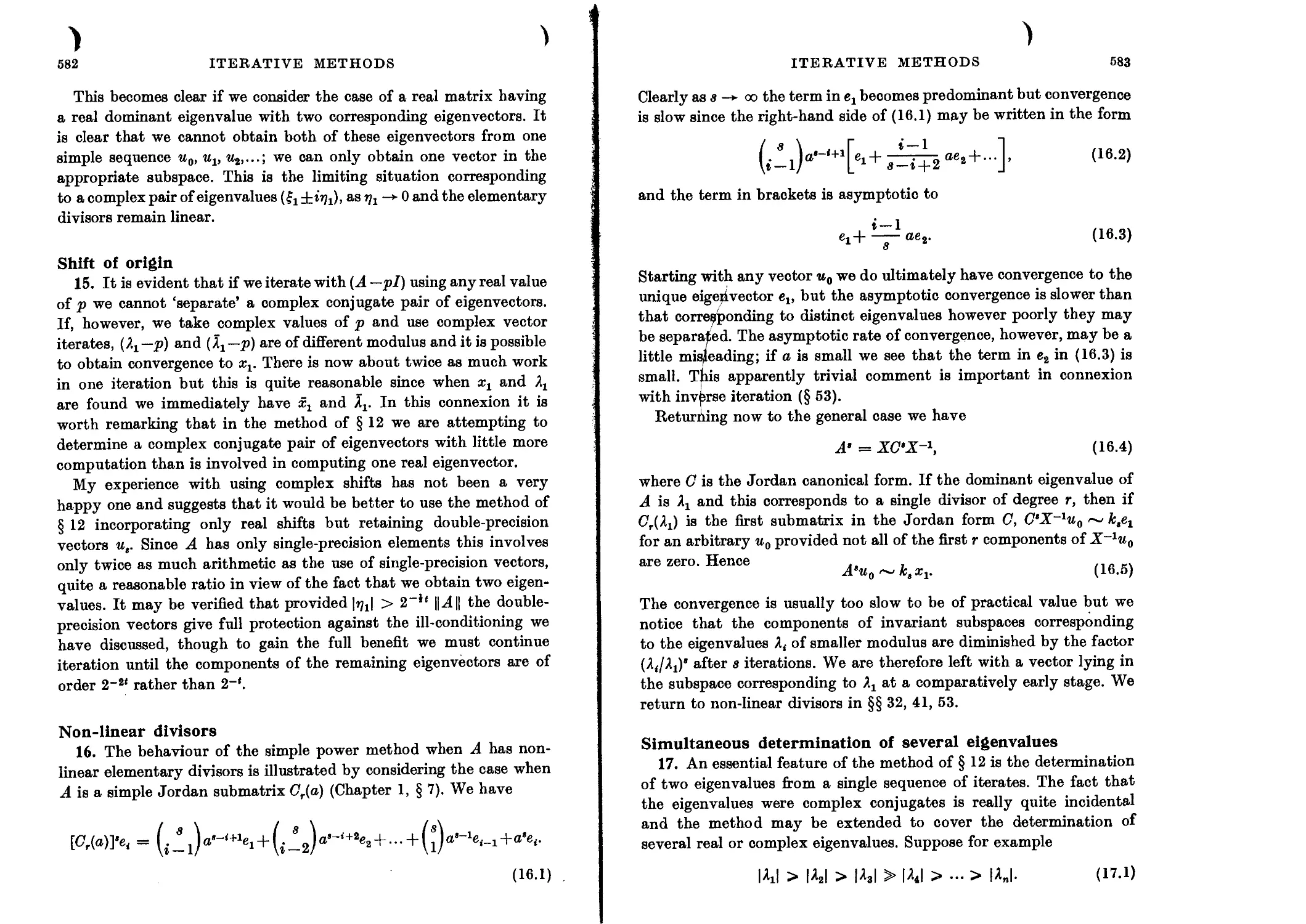

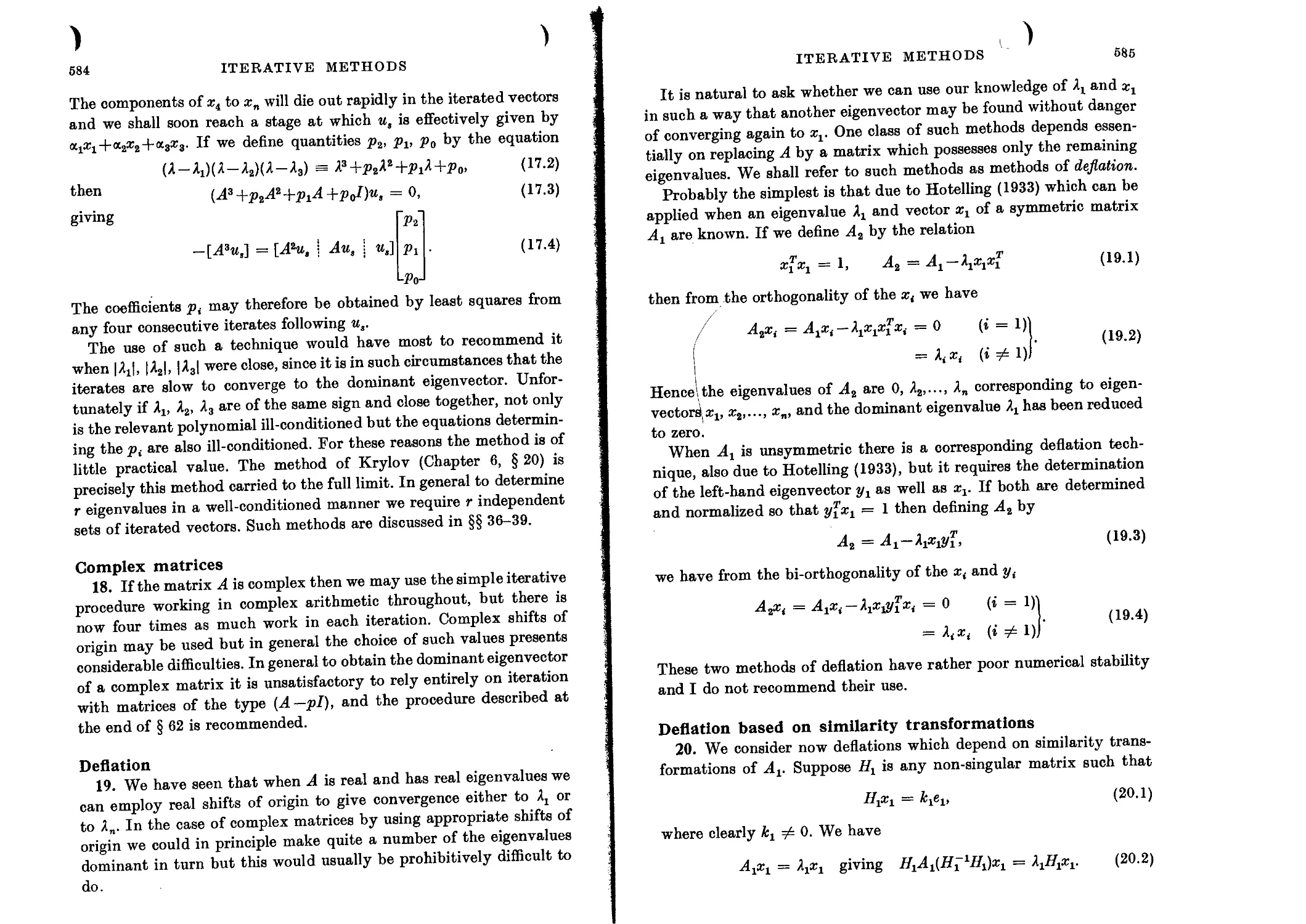

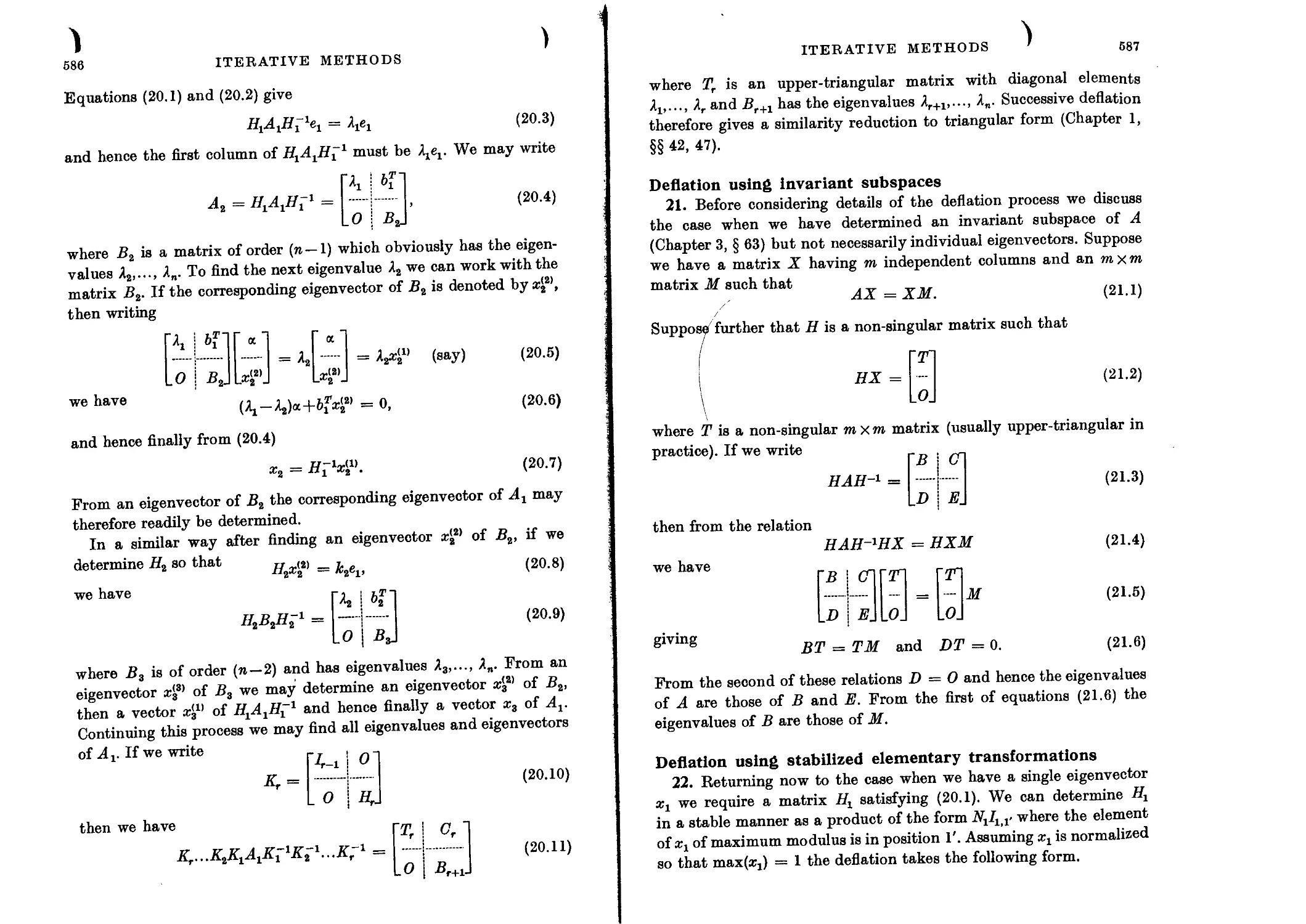

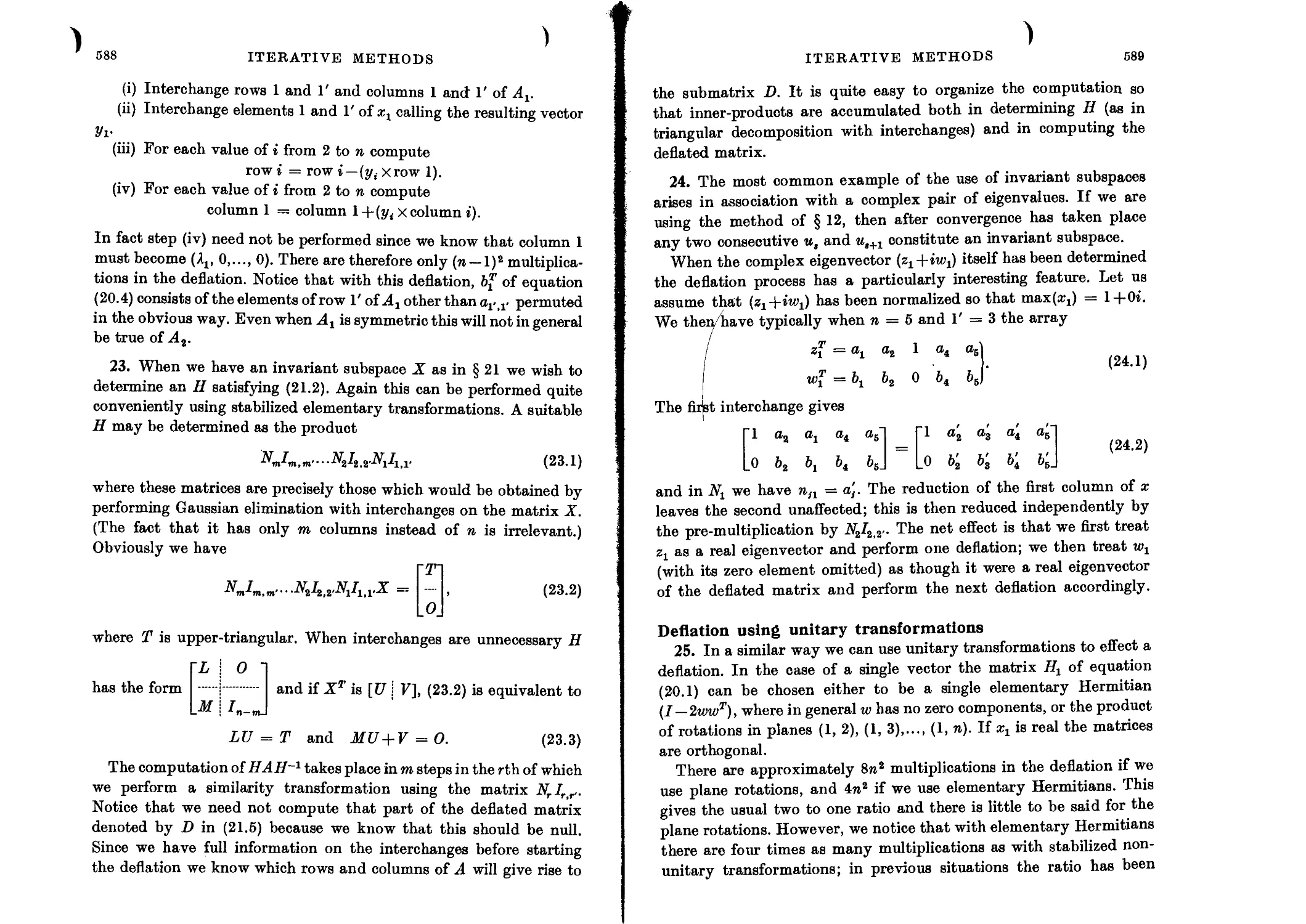

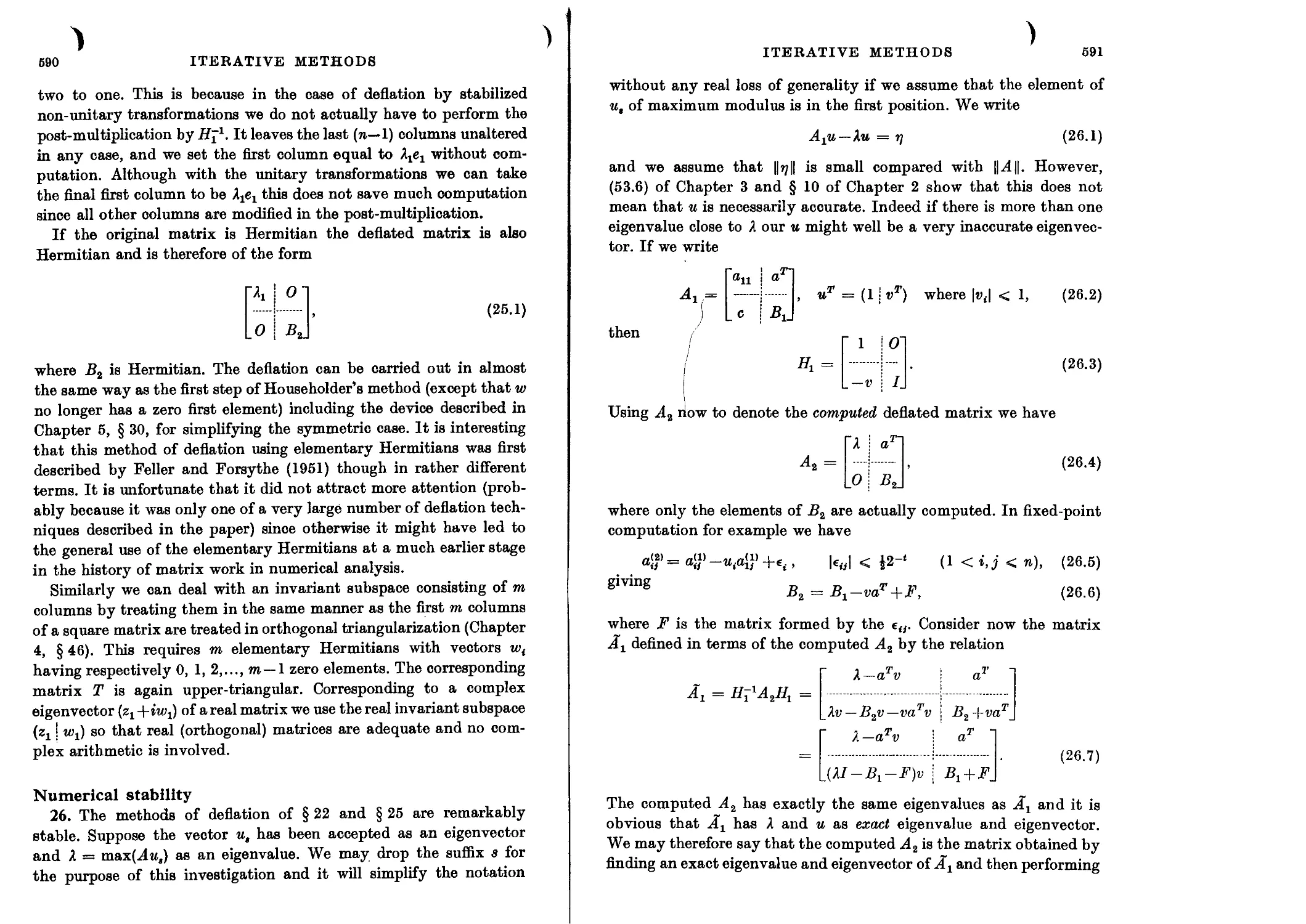

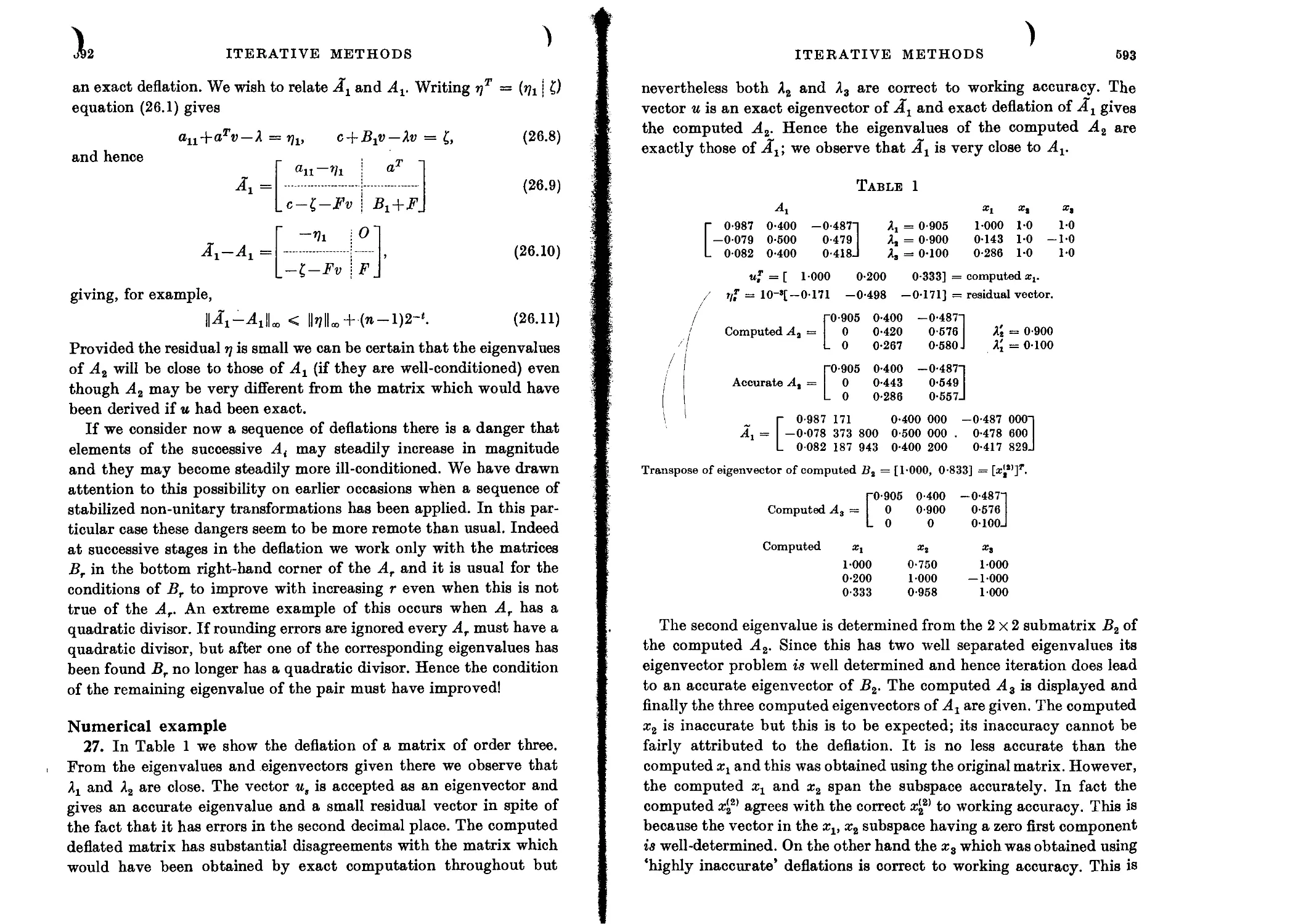

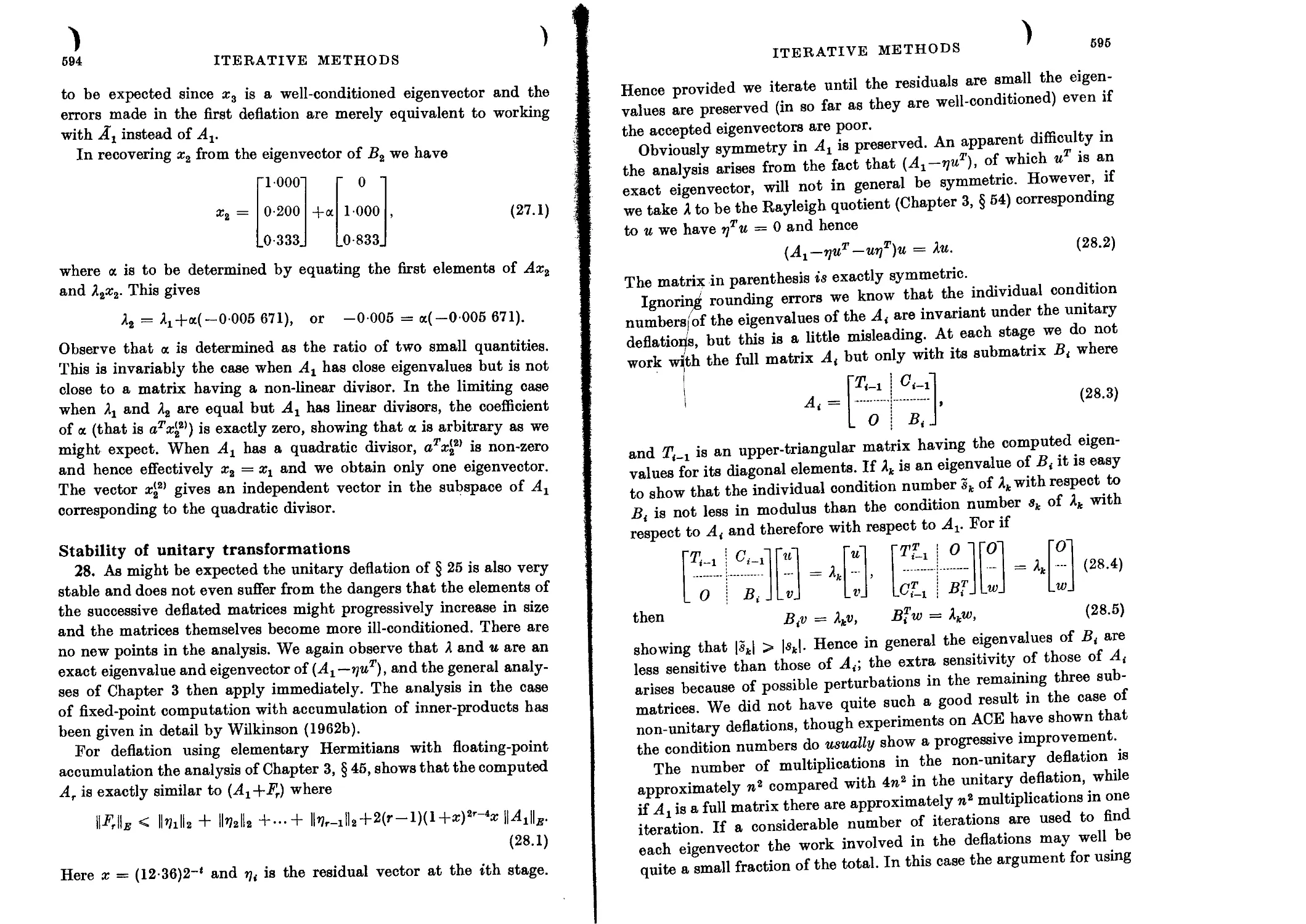

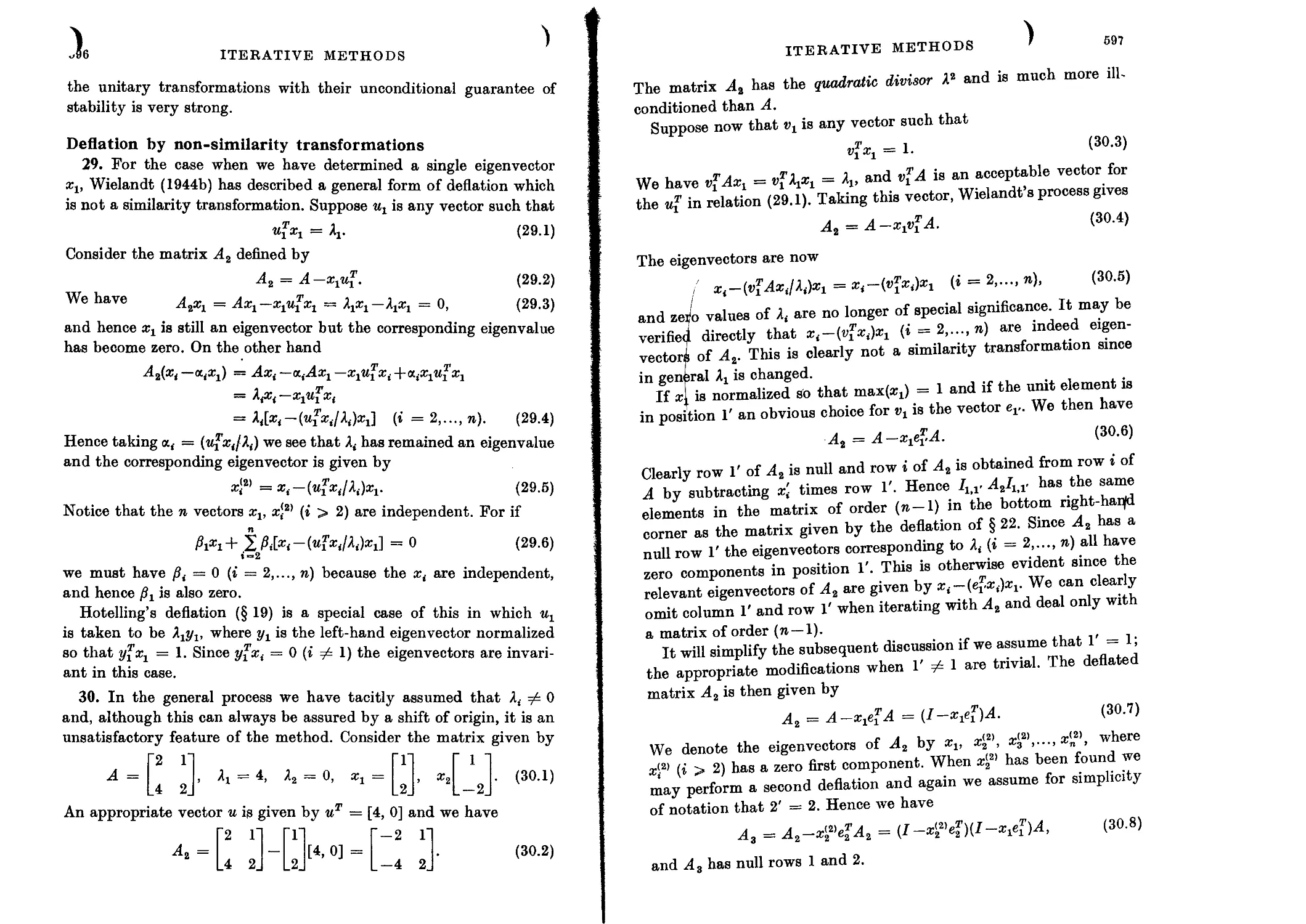

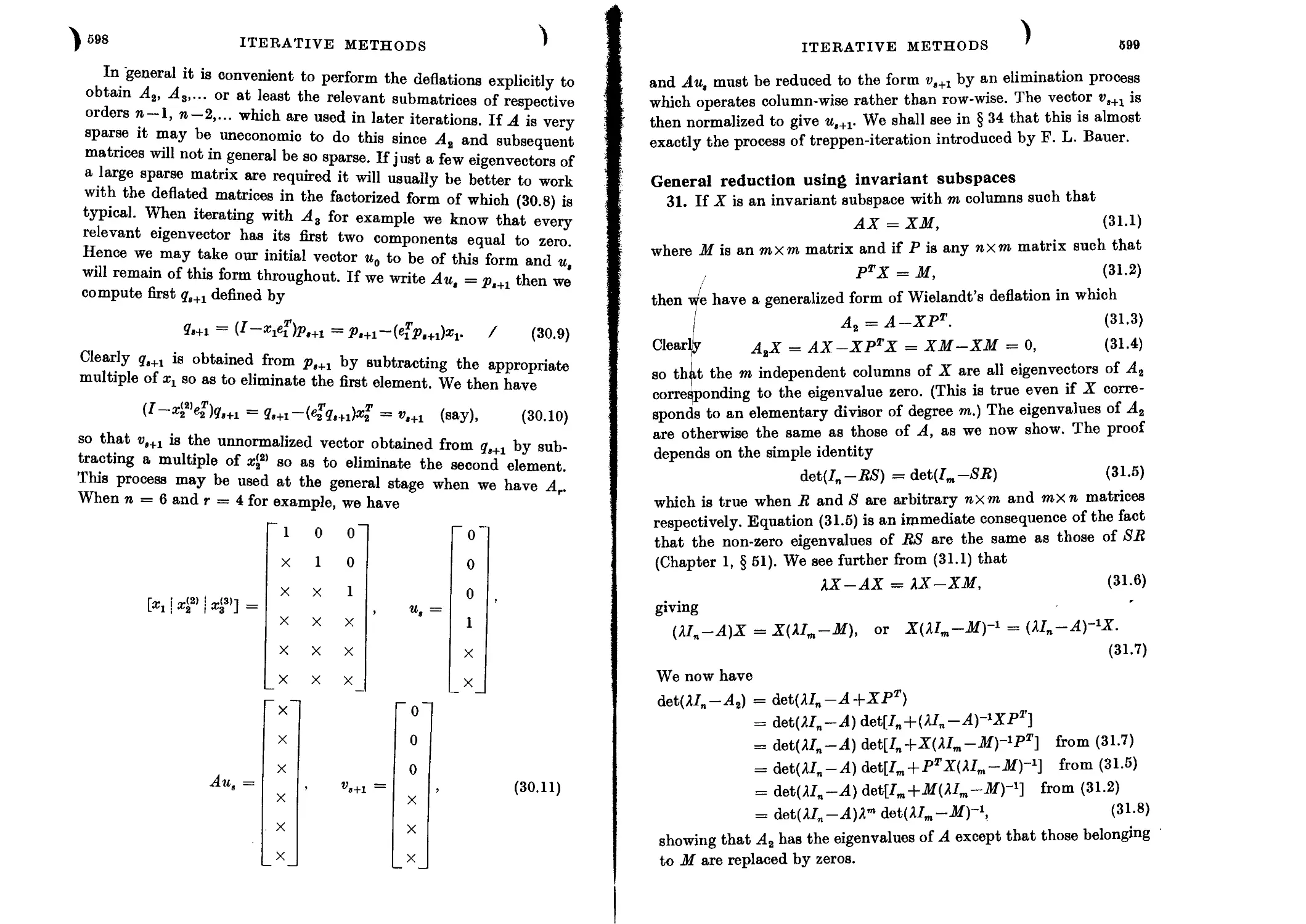

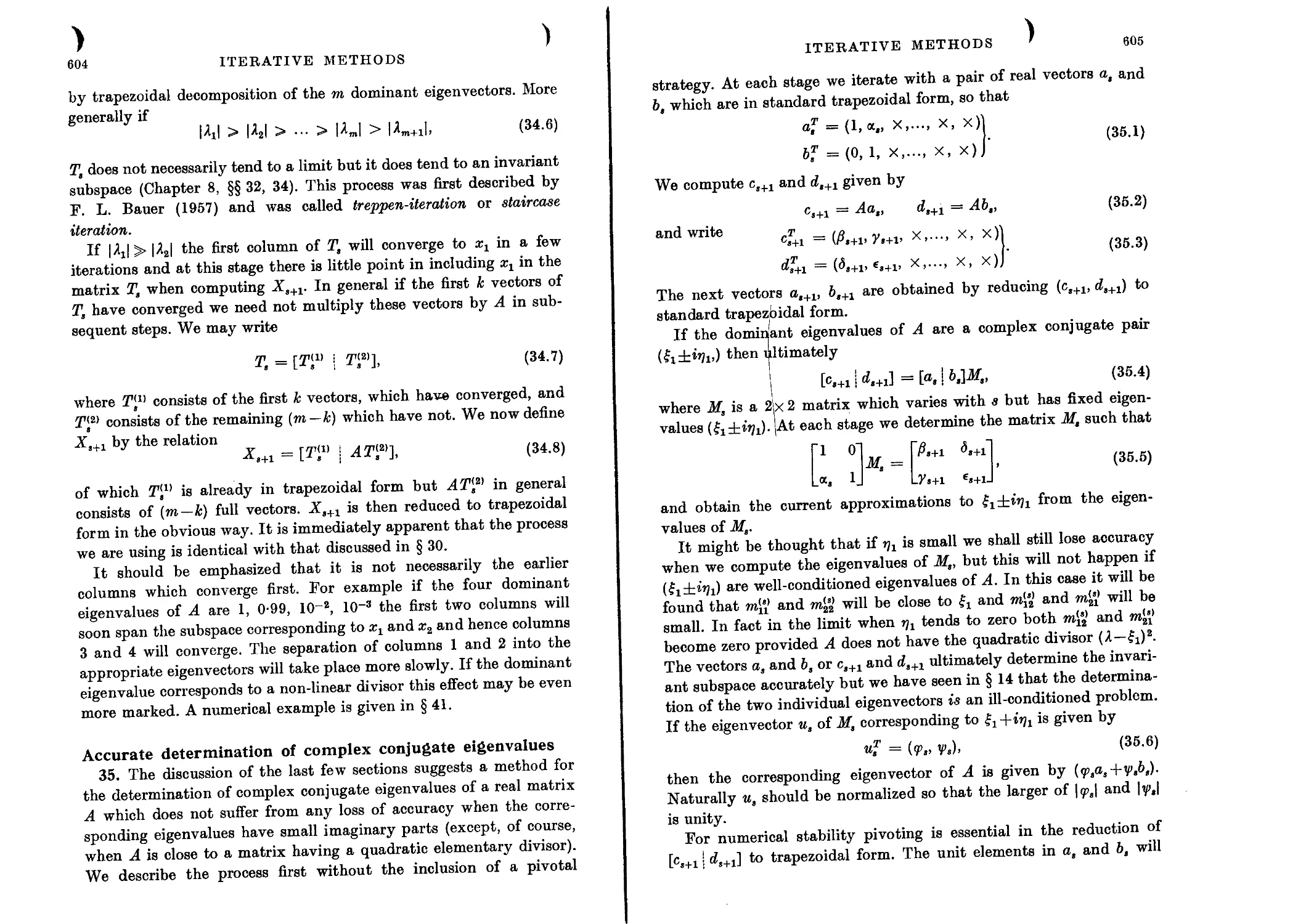

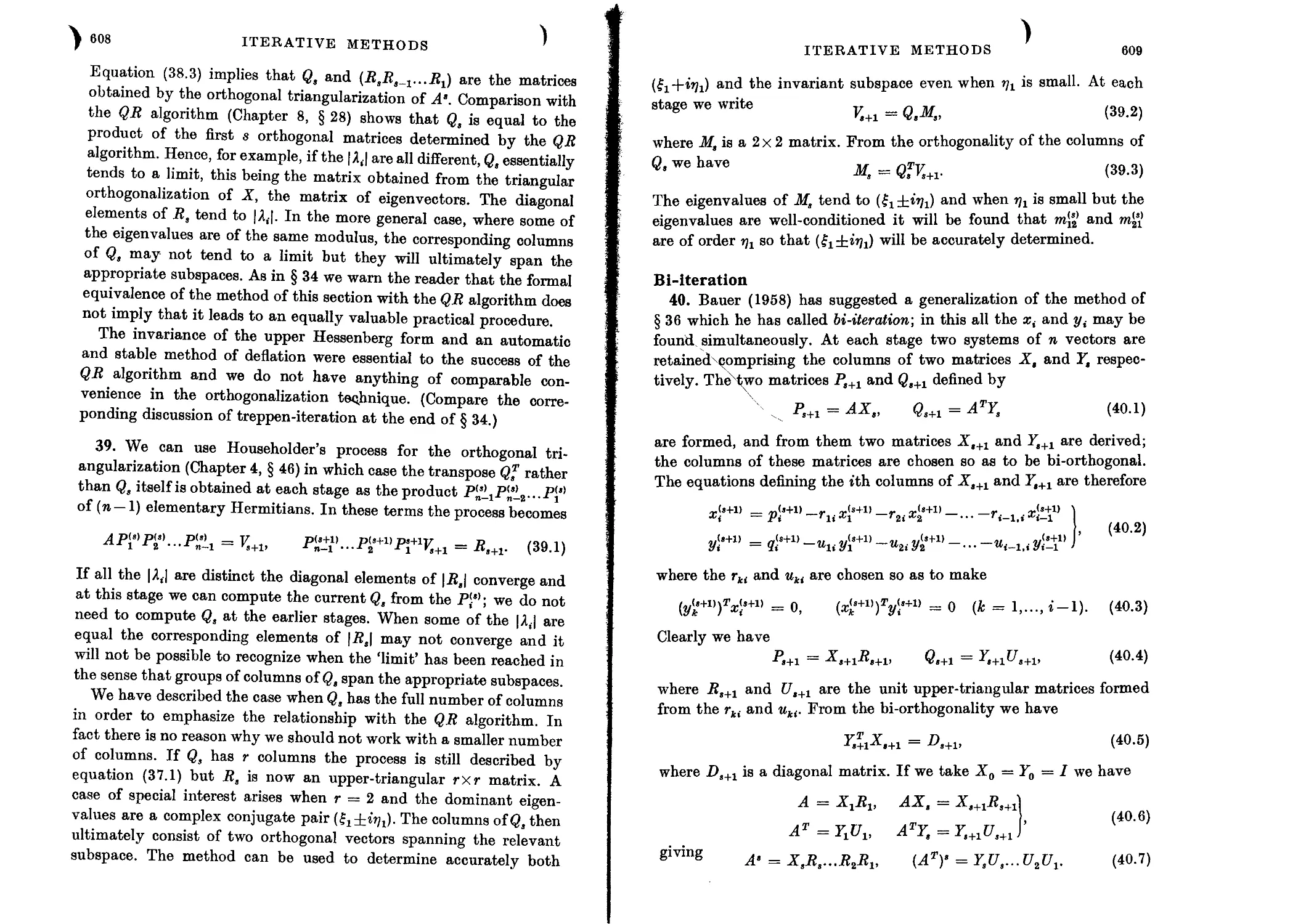

complex.