Текст

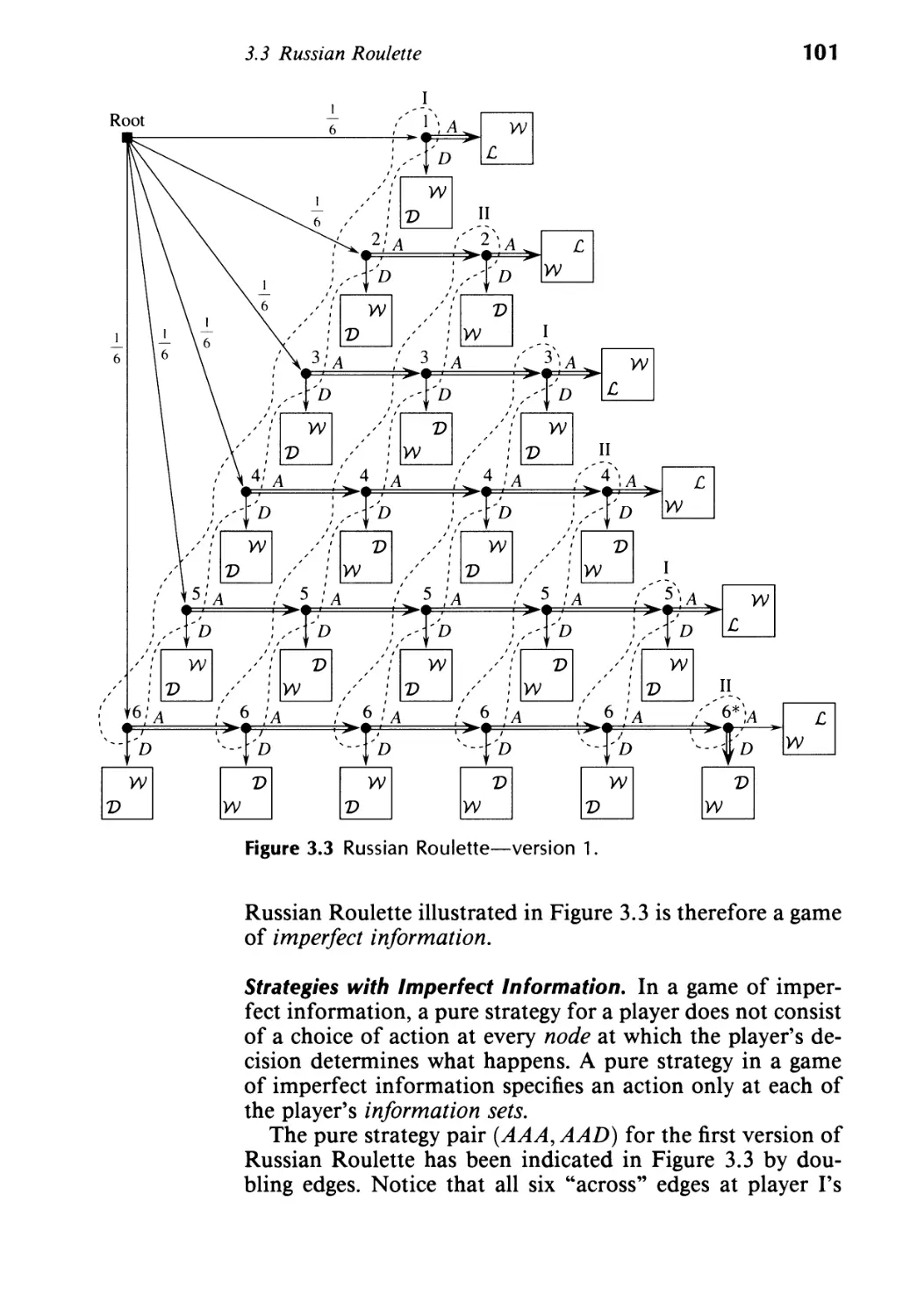

&

.

,

,

I

I

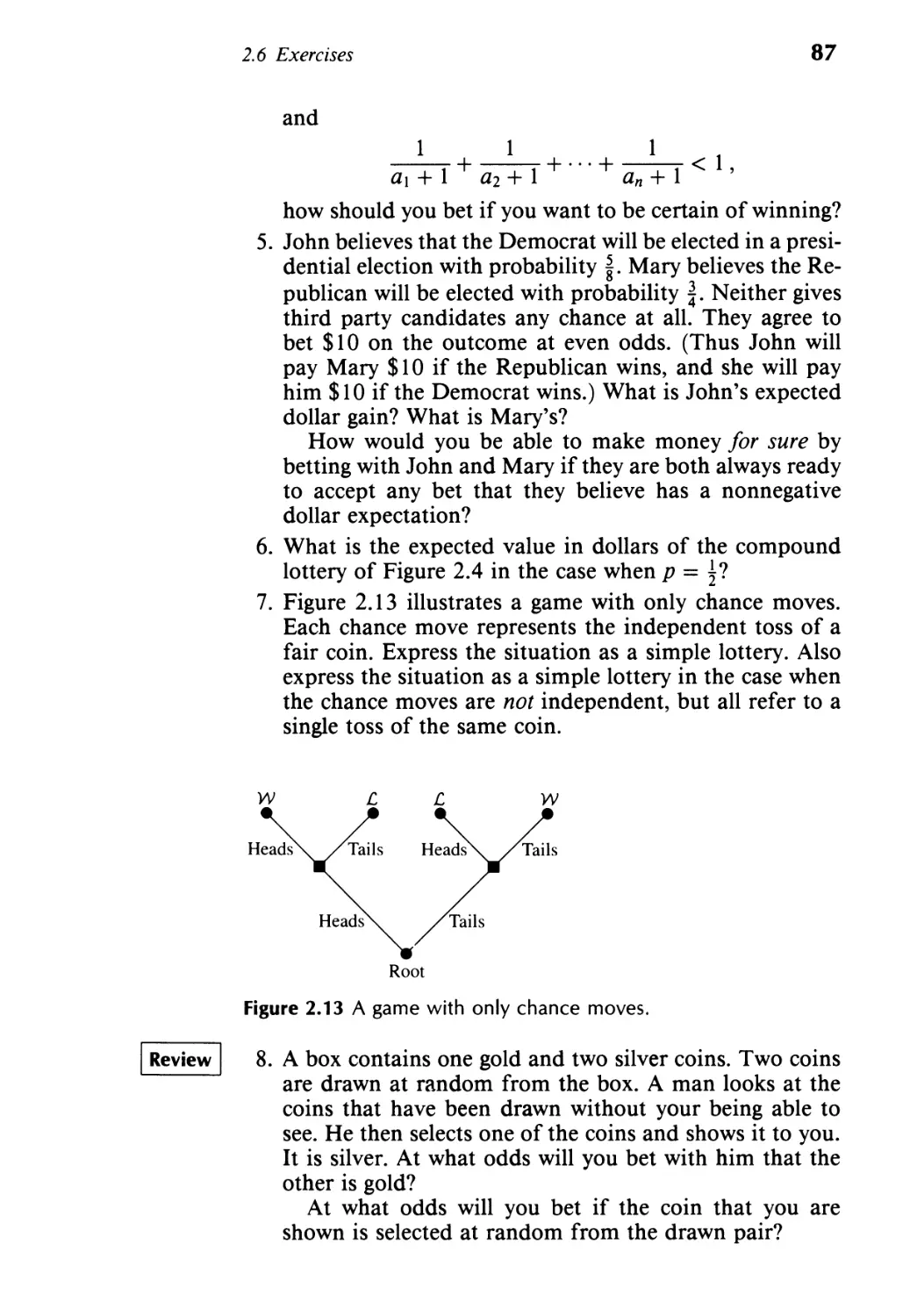

. .

&

Fun and Games

A Text on Game Theory

Ken Binmore

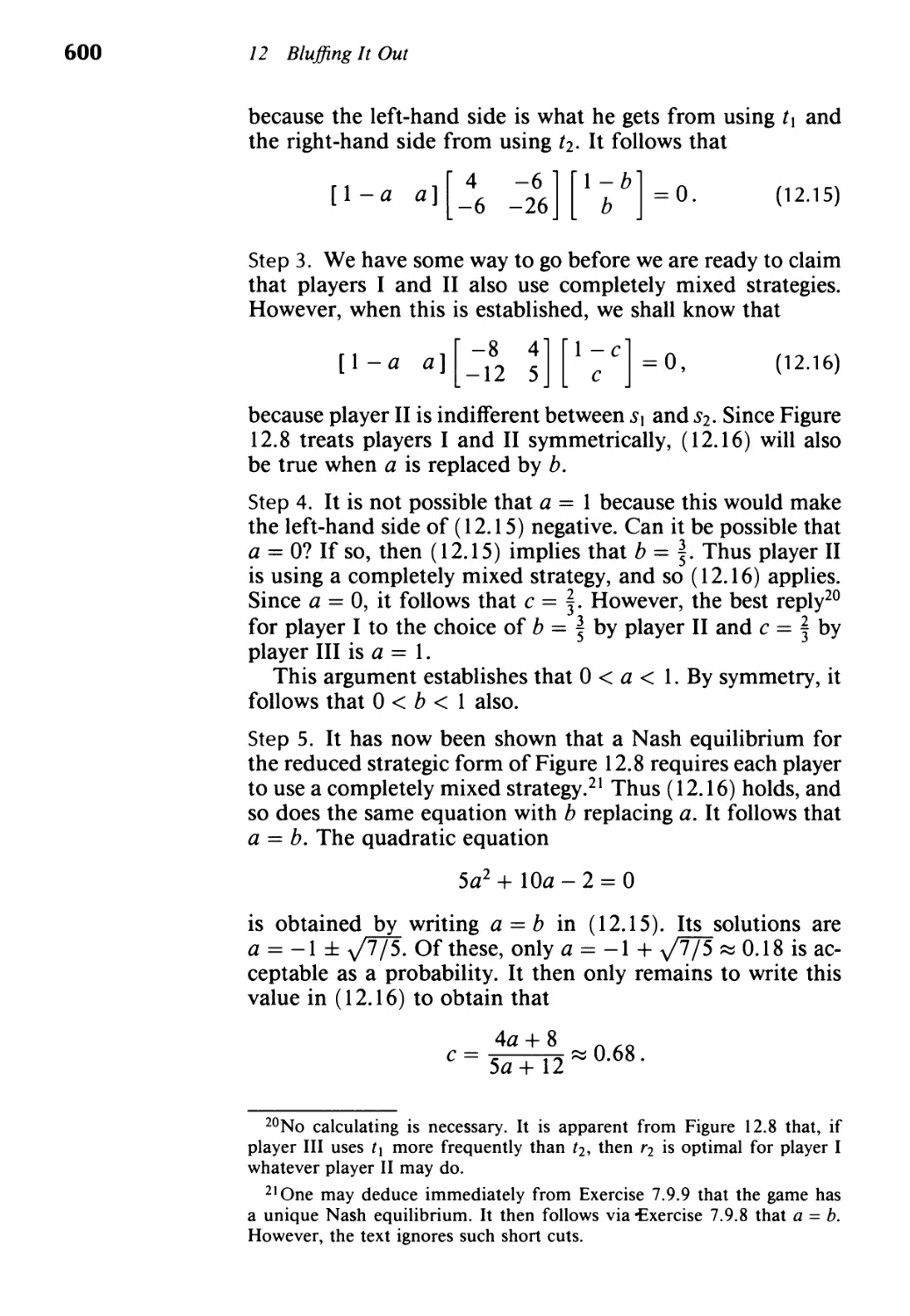

University of Michigan

Ann Arbor

D. C. Heath and Company

Lexington, Massachusetts Toronto

Address editorial correspondence to:

D. C. Heath

125 Spring Street

Lexington, MA 02173

Cover Photograph: Eric S. Fordham

The John Tenniel illustrations on pages v, vii, 1, 23, 65, 93, 127,

167,217,275,345,391,443,499, and 571, and the "Ocean Chart"

on page xv by Henry Holiday are taken from The Complete Il-

lustrated Works of Lewis Carroll, published by Chancellor Press,

London, 1982.

Technical art by Tech-Graphics, Woburn, Mass.

Copyright @ 1992 by D. C. Heath and Company.

All rights reserved. No part of this publication may be reproduced

or transmitted in any form or by any means, electronic or mechan-

ical, including photocopy, recording, or any information storage or

retrieval system, without permission in writing from the publisher.

Published simultaneously in Canada.

Printed in the United States of America.

International Standard Book Number: 0-669-24603-4

Library of Congress Catalog Number: 90-83786

10 9 8 7 6 5 4 3 2 1

I dedicate

Fun and GaInes

to my wife

Josephine.

Preface

......

o

Game theory is an activity like skiing or tennis that is only

fun if you try to do it well. But learning to do something well

is seldom quick and easy, and game theory is no exception.

Moreover, unlike skiing or tennis, it really matters that we

learn to use game theory well. Its aim is to investigate the

manner in which rational people should interact when they

have conflicting interests. So far, it provides answers only

in simple situations, but these answers have already led to

a fundamental restructuring of the way in which economic

theorists think about the world, and the time is perhaps not

too far distant when the same will be true for all the social

sCiences.

Thus, although I have tried to develop the theory with a

light touch, this is a serious book about a serious subject.

..

VII

...

VIII

Preface

Above all, Fun and Games is a how-to-do-it book. It is not

like one of those television programs in which you look over

an artist's shoulder while he briskly applies ready-mixed

paints to an already-prepared canvas using techniques that

are never fully explained lest the viewer lose interest and

switch to another channel. Encouraging people to appreciate

art is doubtless a worthy activity, but I am not interested in

trying to do the same thing for game theory. My aims are

more ambitious. I hope that, after reading this book, you

will be able to do more than admire how other people have

used game theory. My hope is that you will be able to apply

game-theoretic skills to simple problems all by yourself.

Fun and Games is intended to be a serious book on game

theory that is suitable for teaching both undergraduate and

graduate students. I know that the book works for both pur-

poses because I have been using it and its less-polished pre-

decessors for more than fifteen years at various institutions

both in the United States and in Europe. I also know that

other approaches that seem as though they might work do

not. The practicalities of running a serious course in game

theory for undergraduates impose severe limitations on what

it is wise to attempt both in terms of content, and in terms of

presentation. To these I have added two further constraints.

The first is that the focus of the book is on noncooperative

game theory. Very little is said about cooperative game the-

ory. This is partly because of the second constraint, which

may be less popular. I have to confess to being squeamish

about teaching things to undergraduates that might turn out

to be wrong. I know that it is fun for the teacher to weigh the

pros and cons of new ideas, but undergraduates tend to treat

anything said in class as though Moses had brought it down

from the mountain engraved on tablets of stone. Where in-

tellectual honesty allows, I have therefore tried very hard to

exclude controversial theoretical ideas altogether.!

The book is suitable for teaching students from a number

of disciplines. However, the binding practical constraint is

that of attracting and holding an audience large enough to

merit a case for game theory being retained on the list of

undergraduate courses offered by an economics department.

Perhaps the increasing recognition of the importance of game

1 Game theory is still being developed at a very fast rate. In such cir-

cumstances, it is inevitable that many of the new ideas being tried out will

be found wanting and discarded after enjoying a brief period in the lime-

light. I have adopted the very conservative policy of including only those

ideas that I believe are sure to survive. This still leaves more than enough

material to fill a semester course for undergraduates twice over.

Preface

.

IX

theory will change things in the future, but as things stand at

present, one cannot realistically ask for much in the way of

mathematical prerequisites from the students; nor can one

restrict entry to economics majors-unless one is to aban-

don the ambition of teaching a serious how-to-do-it course

in favor of a superficial romp through a collection of over-

simplified applications whose significance the students will

have no way of evaluating. The Teaching Guide that follows

this Preface explains the implications in detail. In brief, there

is a limit to how much mathematical technique can be taught

along the way, and there is a need to respect the wide interests

of your audience in choosing the examples used to illustrate

the theory.

This is not a scholarly work. It contains no references and,

with a few exceptions, mention is made only of the great

pioneers in game theory. This would be standard in a book

at this level in more established disciplines. Apologies need to

be made on this count in a book on game theory only because

much of the work is recent and those who contributed are

mostly still alive and kicking. All I can say to those who feel

that they should have been cited explicitly is that they are in

very good company. However, I do want to express my thanks

to some individuals whose efforts helped to shape this book,

either directly, or indirectly through their published work.

First, there is the Victorian artist John Tenniel, whose

magnificent illustrations from Lewis Carroll's Alice Through

the Looking Glass and elsewhere I have shamelessly stolen.

Second, my thanks go to Donald Knuth and to Leslie Lamport

for providing the TEX typesetting program in which the text

was written. Third, I want to thank my publisher D. C. Heath

for tolerating such idiosyncracies as punctuating "like this",

instead of "like this." George Lobell and Jennifer Brett were

particularly helpful in getting the book on the road. Fourth,

Pat O'Connell-Young deserves thanks for typing most of the

current version, and Mimi Bell for typing a previous version

of the book. Fifth, there are D. C. Heath's reviewers: James

Bergin, Engelbert Dockner, Ray Farrow, Chris Harris, David

Levine, George Mailath, Andrew McLennan, Michael Meurer

and Max Stinchcombe. Finally, there is a long list of other

economists and mathematicians to whom I owe a debt of grat-

itude: Steve Alpern, Bob Aumann, Pierpaolo Battigalli, Ted

Bergstrom, Adam Brandenburger, James Friedman, Drew

Fudenberg, David Gale, John Harsanyi, David Kreps, Herve

Moulin, Roger Myerson, Barry O'Neill, Adam Ostaszewski,

Barry Nalebuff, John Nash, Andy Postelwaite, Phil Reny,

Bob Rosenthal, Ariel Rubinstein, Larry Samuelson, Reinhard

x

Preface

Selten, Avner Shaked, John Sutton, Jean Tirole, Hal Varian

and Robert Wilson.

Finally, on the subject of what pass for jokes in the text,

let me say that I feel entitled to the same immunity from

criticism as the musician in the Western saloon whose pi-

ano was reported by Oscar Wilde to carry a notice saying,

"Please do not shoot the pianist. He is doing his best". It

isn't easy to sustain a light-hearted atmosphere in a work of

this kind. Perhaps it was over-ambitious to promise both Fun

and Games. Certainly, I thought it wise to write in a more

deadpan style in the earlier chapters. However, I hope that

some readers at least will agree that I did eventually try to

live up to my title.

K. B.

Contents

Teaching Guide xv

Introduction 1

0.1 What Is Game Theory About? 3

0.2 Where Is Game Theory Coming From? 11

0.3 Where Is Game Theory Going To? 13

0.4 What Can Game Theory Do for Us? 14

0.5 Conclusion 21

1 Winning Out

1.1 Introduction 25

1.2 The Rules of the Game 25

1.3 Strategies 30

1.4 Zermelo's Algorithm 32

1.5 Nim 35

1.6 Hex 37

1. 7 Chess 41

1.8 Rational Play? 46

1.9 Conflict and Cooperation 51

1.1 0 Exercises 57

23

2 Taking Chances 65

2.1 Introduction 67

2.2 Lotteries 72

2.3 Game Values 75

2.4 Duel 76

2.5 Parcheesi 81

2.6 Exercises 86

.

XI

..

XII

Contents

3 Accounting for Tastes 93

3.1 Rational Preferences 95

3.2 Utility Functions 96

3.3 Russian Roulette 99

3.4 Making Risky Choices 104

3.5 Utility Scales 112

3.6 The Noble Savage 115

3.7 Exercises 120

4 Getting Paid Off 127

4.1 Payoffs 129

4.2 Bimatrix Games 133

4.3 Matrices 135

4.4 Vectors 138

4.5 Hyperplanes 142

4.6 Domination 146

4.7 Russian Roulette Again 153

4.8 Exercises 159

5 Making Deals 167

5.1 Introduction 169

5.2 Convexity 169

5.3 Cooperative Payoff Regions 174

5.4 The Bargaining Set 176

5.5 Nash Bargaining Solutions 180

5.6 Dividing the Dollar 191

5.7 Cooperative and Noncooperative Games 195

5.8 Bargaining Models 196

5.9 Exercises 212

6 Mixing Things Up 217

6.1 Introduction 219

6.2 Minimax and Maximin 219

6.3 Safety First 224

6.4 Mixed Strategies 227

Contents

...

XIII

6.5 Zero-Sum Games 237

6.6 Separating Hyperplanes 245

6.7 Battleships 254

6.8 The Inspection Game 257

6.9 N ash Threat Game 261

6.10 Exercises 265

7 Keeping Your Balance 275

7.1 Reaction Curves 277

7.2 Oligopoly and Perfect Competition 286

7.3 Equilibrium Selection 295

7.4 N ash Demand Game 299

7.5 Pre-play Negotiation 304

7.6 Pre-play Randomization 316

7.7 When Do Nash Equilibria Exist? 319

7.8 Hexing Brouwer 323

7.9 Exercises 329

8 Repeating Yourself 345

8.1 Reciprocity 347

8.2 Repeating a Zero-Sum Game 348

8.3 Repeating the Prisoners' Dilemma 353

8.4 Infinite Repetitions 360

8.5 Social Contract 379

8.6 Exercises 382

9 Adjusting to Circumstances 391

9.1 Spontaneous Order 393

9.2 Bounded Rationality 396

9.3 Economic Libration 398

9.4 Social Libration 412

9.5 Biological Libration 414

9.6 Evolutionary Stability 422

9.7 The Evolution of Cooperation 429

9.8 Exercises 434

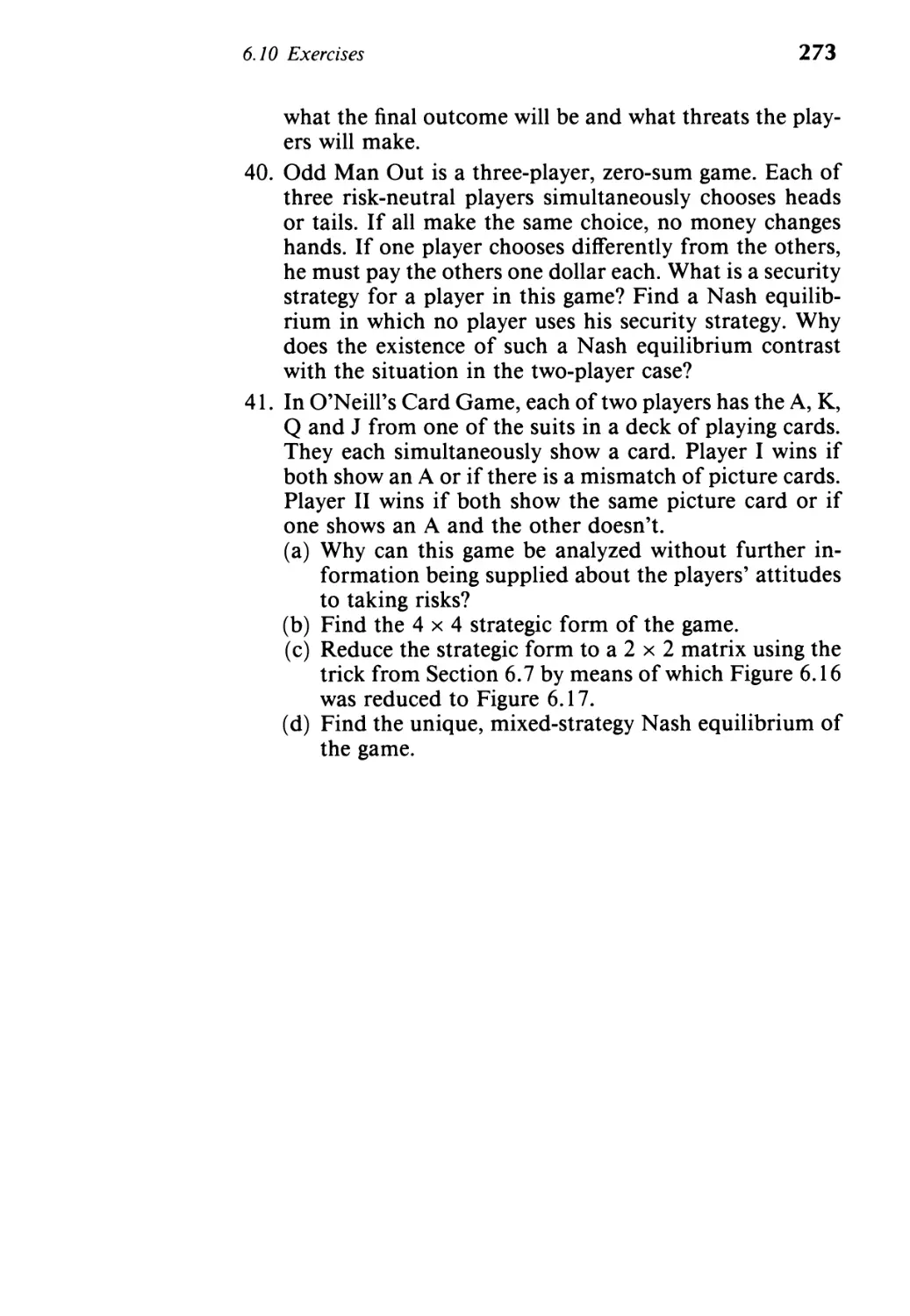

.

XIV

Contents

10 Knowing Your Place 443

10.1 Bob's Your Uncle 445

10.2 Knowledge 446

10.3 Possibility 449

10.4 Information Sets 454

10.5 Bayesian Updating 462

10.6 Common Knowledge 467

10.7 Agreeing to Disagree? 472

10.8 Common Knowledge in Game Theory 478

10.9 Exercises 488

11 Knowing Who to Believe 499

11.1 Complete and Incomplete Information 501

11.2 Typecasting 503

11.3 Bayesian Equilibrium 510

11.4 Continuous Random Variables 511

11.5 Duopoly with Incomplete Information 515

11.6 Purification 519

11.7 Auctions and Mechanism Design 523

11.8 Assessment Equilibrium 536

11.9 More Agreeing to Disagree 546

11.10 Exercises 549

12 Bluffing It Out 571

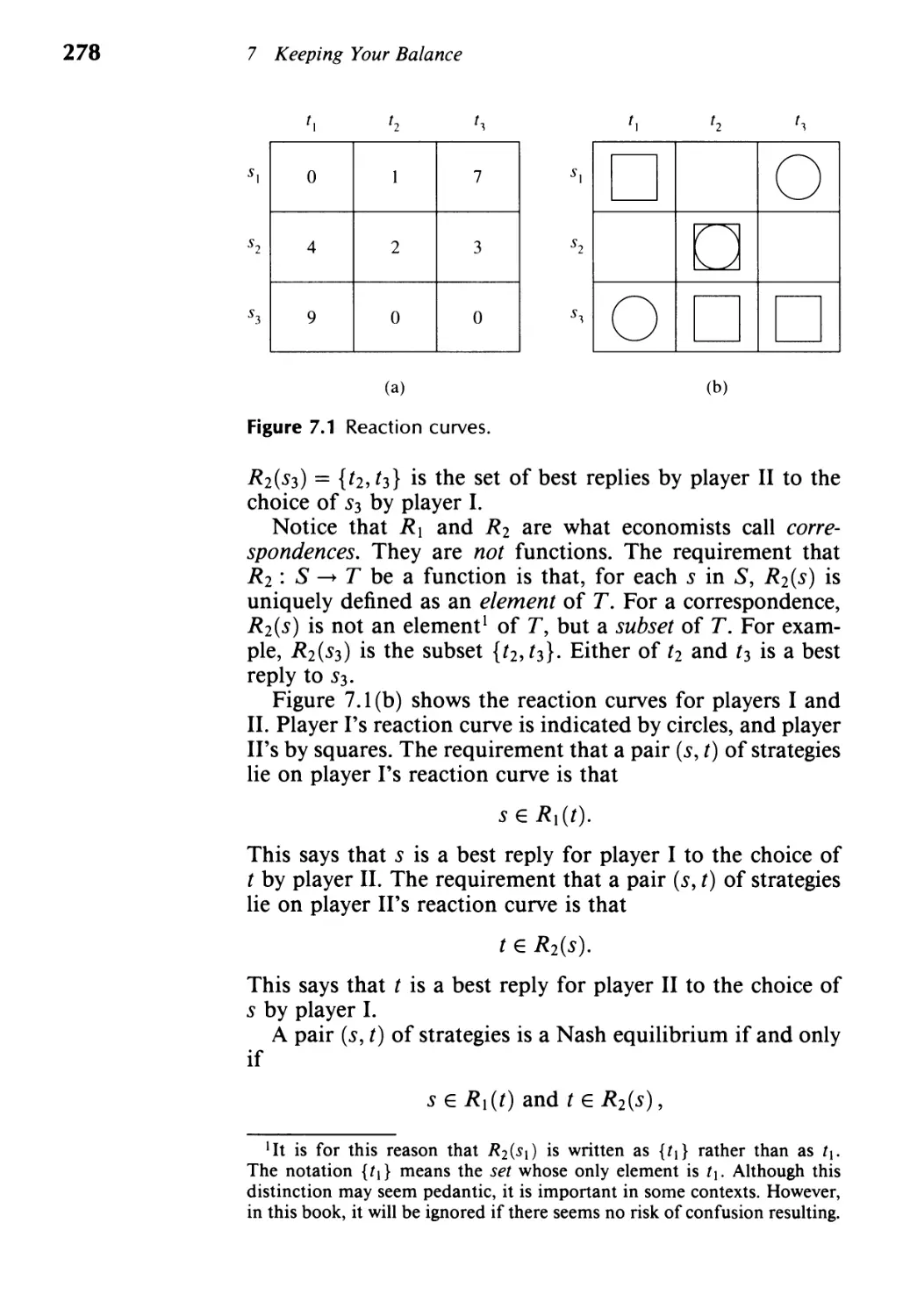

12.1 Poker 573

12.2 Conditional Probability Densities 577

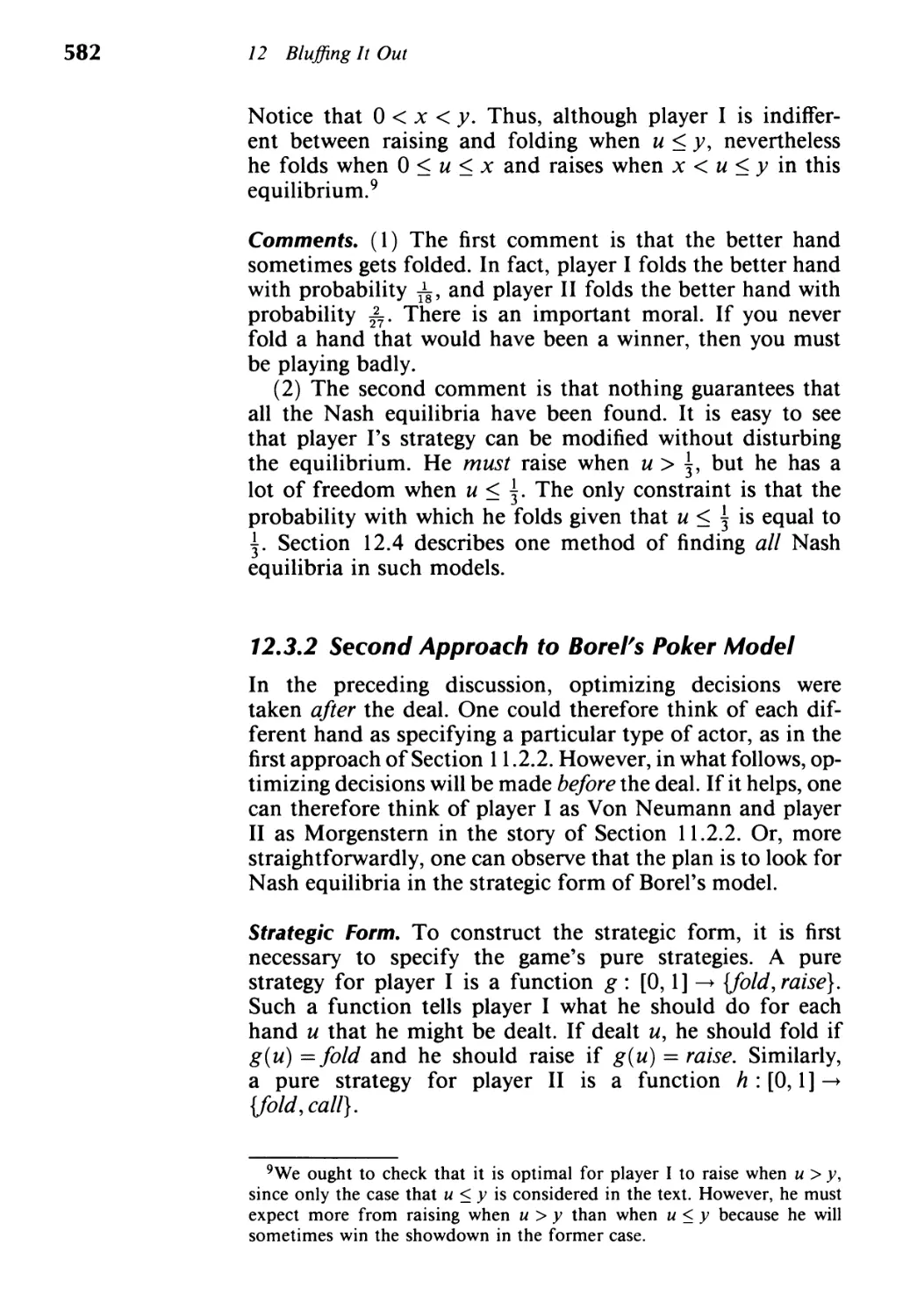

12.3 Borel's Poker Model 579

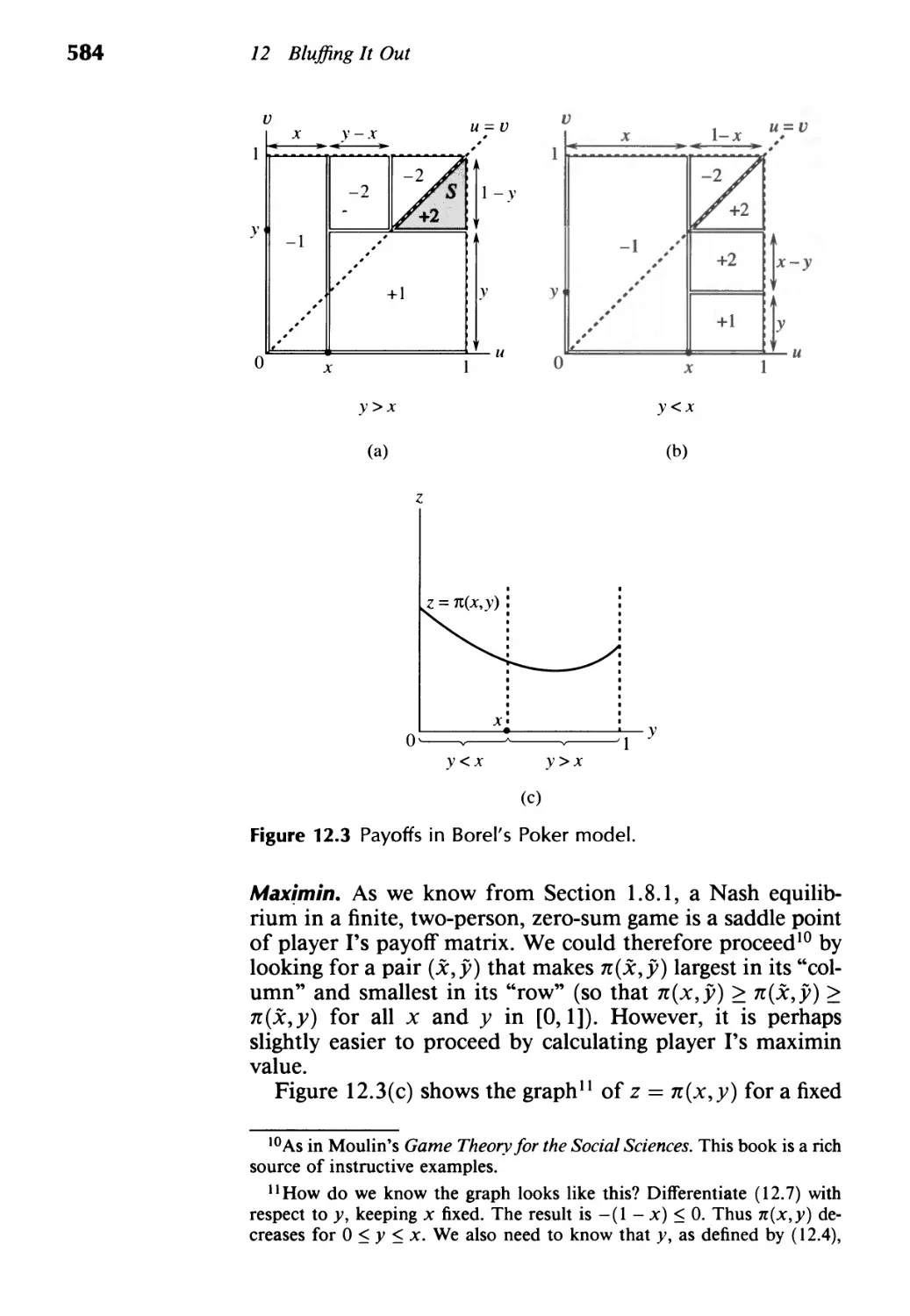

12.4 Von Neumann's Poker Model 585

12.5 Why Bluff? 591

12.6 Nash and Shapley's Poker Model 593

12.7 Conclusion 602

Answers Al

Index A34

Teaching Guide

LA.TITl DE

'lC'RTH

C"UA. "OR

1 2

2

.

Z

.

z

..

c

:

JI

r

I

...,

-!

..

2

I .

!

c

z

I

I

L

I

I

::'(al, -;;- iftf-;; -

OCEAN-CHART.

Charting a Course

Fun and Games is aimed at two distinct groups of students,

and is therefore written on two distinct levels. Teachers there-

fore need to chart a careful course through the material since

things could go awry if there is a mismatch between the stu-

dents and the topics chosen for study. Or, to say the same

thing more colorfully, in seeking to reveal the nature of the

snark to your students, beware lest you show them a boojum

by mistake. As Lewis Carroll put it, your audience will then

"softly and suddenly vanish away", like the Bellman's crew

in the Hunting of the Snark. However, the Bellman had only

the Ocean Chart shown above to guide him.

xv

.

XVI

Teaching Guide

One aim of Fun and Games is to provide a semester course

in game theory for undergraduates. The undergraduates do

not need to have much prior knowledge of anything, but they

will have to be well motivated. Note, in particular, that the

undergraduates do not need to be economics majors. This

latter point is quite important, since, in many institutions,

an undergraduate course in game theory at the level of this

book may not be viable if aimed exclusively at economists.

However, my experience is that one attracts a respectable

audience 1 if one is willing to admit students from all disci-

plines, and to teach material that takes account of their wide

range of interests.

Another aim is to provide "pre-med" material in game the-

ory for graduate students in economics. In this role, the book

is not intended as a competitor to the excellent books written

by Fudenburg and Tirole and by Myerson. It is intended to

serve only as an easy way into the serious stuff for those who

do not feel the urge to plunge in immediately at the deep

end.

The two aims frequently dovetail very neatly, and the join

between the two levels of exposition would not always be

easy to spot if it were not pointed out explicitly. Teachers

must decide for themselves how often to cross this boundary

line. I certainly cross it myself when the idiosyncracies of my

audience seem to call for it. However, it is unwise to cross the

line too often, since the risk of frightening off one audience

and boring the other is considerable. Of course, nothing will

or should prevent enthusiastic undergraduates from reading

the more advanced material on the side. At the same time,

graduate students will need to skim the more elementary ma-

terial in order to make sense of the topics written specifically

for them. In class, however, I recommend keeping on the

appropriate side of the demarcation line nearly all the time.

Material that is a candidate for being skipped when teach-

ing a semester course to undergraduates is indicated by a

stylized version of John Tenniel's Mad Hatter in the margin.

When the Mad Hatter is running away, consider very care-

fully whether the material following his appearance is suit-

able for your undergraduates. If not, then skip the material

and go to the section number indicated beneath the fleeing

Mad Hatter. When I teach economics undergraduates, for

example, my instructions are that the Mad Hatter is to be

1 Last term I taught game theory at the University of Michigan to 46

undergraduates taking the course for credit, and an indeterminate number

of auditors of various types.

Teaching Guide

..

XVII

followed wherever he goes, except when he is skipping some

economics. When I teach mathematics undergraduates, my

instructions are more complicated.

Nothing whatever in the book is too hard for graduate

students. They should certainly not follow the Mad Hatter

anywhere at all. On the contrary, his appearance should sig-

nal the fact that the material that follows contains something

that merits their close attention.

Sometimes the Mad Hatter in the margin is not running

away. Instead he is shown somewhat apprehensively hang-

ing around to see what happens next. Usually what happens

next is something mathematical. Such passages may be diffi-

cult for some undergraduates, but cannot be skipped without

disrupting the continuity of the exposition.

Review

Econ

As in the examples given above, all the marginal Mad Hat-

ters are equipped with a label indicating the type of material

to which they refer. The five labels used are

Review Math Econ Phil Fun

The same labeling is used to categorize many of the exercises

that appear at the end of each chapter. What do these labels

mean?

Review. My experience is that one cannot run a viable course

for undergraduates if the mathematical prerequisites are set

too high. The most one can realistically do is to restrict en-

try to students who have passed a serious freshman calculus

course. Many of the students will know more than this. The

others will have to pick up what extra mathematics they need

along the way. Students seem ready to put in the necessary

work on the side provided that what is required of them

is made very clear. This explains the presence of the REVIEW

sections. The chief topics covered in these sections are proba-

bility, matrices and vectors, and convexity. Calculus does not

get the same treatment, since students are assumed to know

what little is needed already. However, reminders about how

to do various things sometimes appear as footnotes. 2

2 Although I know that those who need such reminders do not read such

things as footnotes, appendices and introductions.

...

XV III

Teaching Guide

Do not think for one moment of teaching the review ma-

terial at the blackboard. I have already pulled the rug out

from under such a project by using the label REVIEW. It seems

a universal knee-jerk reaction in the human species to switch

off as soon as this word is mentioned in the classroom. What

I do myself is to point out those mathematical ideas in the

REVIEW sections that are indispensable, and then sneak some

discussion of them into the hours devoted to going over the

exercises.

Actually, there is a lot more in these REVIEW sections than

undergraduate students are going to need to study Fun and

Games. When writing them, I also had graduate students in

mind. If my experience is anything to go by, many gradu-

ate students would be advised to skim these mathematical

REVIEW sections very slowly.

Math. When teaching undergraduates, you will probably wish

to skip nearly all the MATH sections that the Mad Hatter

is shown running away from, unless your audience consists

primarily of mathematics majors. However, mathematicians

should not take this to imply that the MATH sections are

written in a formal style. None of the arguments offered

come anywhere near meeting the standards of a mathemati-

cal proof. Mostly, I follow the Bellman and use the principle

that what I tell you three times is true. On the other hand, I

have tried hard to be intellectually honest. All the arguments

offered can be fleshed out into formal proofs without call-

ing for mathematical techniques that would be beyond the

understanding of an average undergraduate willing to take

some time and trouble. I hope that these proof schemata will

be particularly useful to graduate students who are too of-

ten shortchanged with a list of cookbook techniques whose

validity they are expected to take on trust.

Econ. I have taught game theory in numerous institutions.

The undergraduate classes consist mostly of economists, but

there is always a substantial number of students majoring

in other disciplines whose presence livens things up a fair

amount. The disciplines include mathematics, engineering,

philosophy and political science. Some of the ECON sections

are intended to acquaint such students with relevant elemen-

tary ideas from economics. These sections are intended to be

read on the side. Later ECON sections indicate some of the more

straightforward applications of game theory to economics. Al-

though the Mad Hatter is shown running away from these sec-

tions because they are not usually strictly necessary to the flow

Teaching Guide

.

XIX

of the exposition, economists will want to teach this mate-

rial unless it has been adequately covered in other economics

courses. My experience with majors in other disciplines is that

they welcome the opportunity to learn a little economics.

If Fun and Games proceeds to a second edition, I plan to

include an organized supplement on simple applications of

game theory in economics and elsewhere. In the meantime,

three things should be noted. First, those applications cov-

ered in the text are not covered in a superficial way. That is,

the text provides enough detail to make it possible for the

students to work problems on the material. Second, many of

the ECON exercises at the end of each chapter are very instruc-

tive. (I hope that graduate students will not neglect to attempt

as many of these as time allows.) My own preference is to

encourage students to teach themselves how to apply game

theory to economics via these exercises. Finally, remember

that some of your audience will not be economics majors.

Phil. It is not wise to try to teach the foundations of game

theory to undergraduates in a formal way. On the other

hand, your more adventurous students are not going to be

fobbed off with easy answers. Somehow it is necessary to

satisfy the sceptics without bewildering the rest of the class.

I try to do this with the PHIL sections. My recommendation

is to soft-pedal this material when teaching undergraduates.

Personally, I find the issues fascinating. However, trying

to discuss them seriously with undergraduates can some-

times be very frustrating. Fortunately, most undergraduates

are only too happy to leave philosophical questions to the

philosophers.

Graduate students, on the other hand, cannot dispense

with a view on these issues. But a fully rounded view will

have to be obtained from some other source since I have

tried hard in writing this book to steer clear of controver-

sial matters. However, perhaps the PHIL sections will make it

clear what some of the issues are on which a view is needed.

Perhaps they will also serve to instill a little scepticism about

some of the views that are currently fashionable.

Fun. I hope that you will not skip all the FUN sections. Some

of them are very instructive.

How Far to Go

My advice to graduate students is simple. I advocate reading

everything (including the final chapter on Poker) as much

xx

Teaching Guide

for the mathematical techniques that will be encountered as

for the content. As a focus for your studies, I suggest that

you make it your objective to get to Chapters 7, 8, 10 and

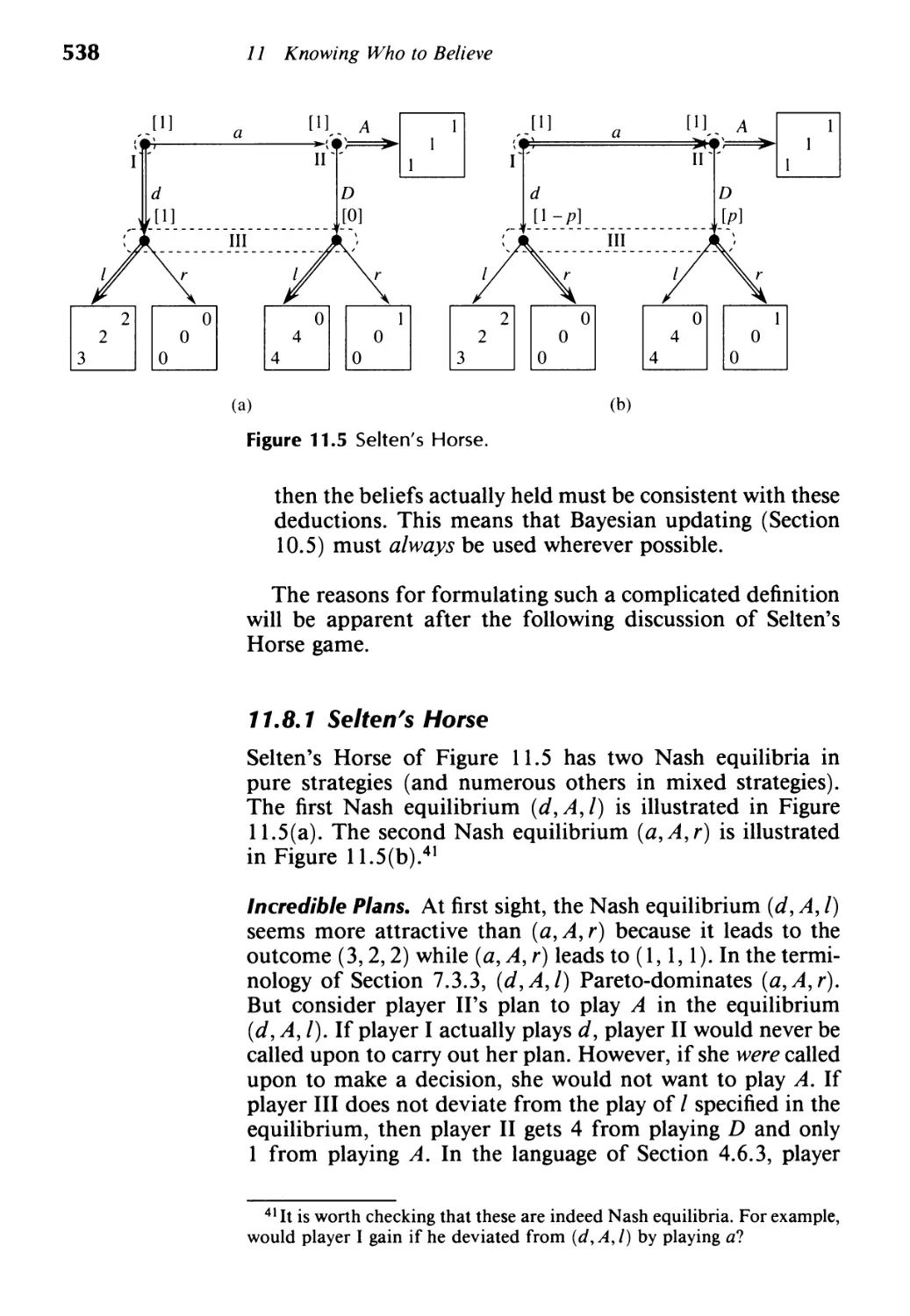

11 as soon as possible. Otherwise there is nothing more that

needs to be said to a graduate student except that it is a

waste of time to read anything at all without planning to

work at least some of the exercises. However, the position

for undergraduates is more complicated.

Even if the Mad Hatter is followed wherever he goes, Fun

and Games still contains a lot more material than it would

be wise to try and teach to undergraduates in a one-semester

course. Given that adequate time is set aside for discussion

of the exercises, there is a choice to be made about what

topics to cover. To a large extent, this is a judgment call.

However, if this book is to be used, I do not recommend

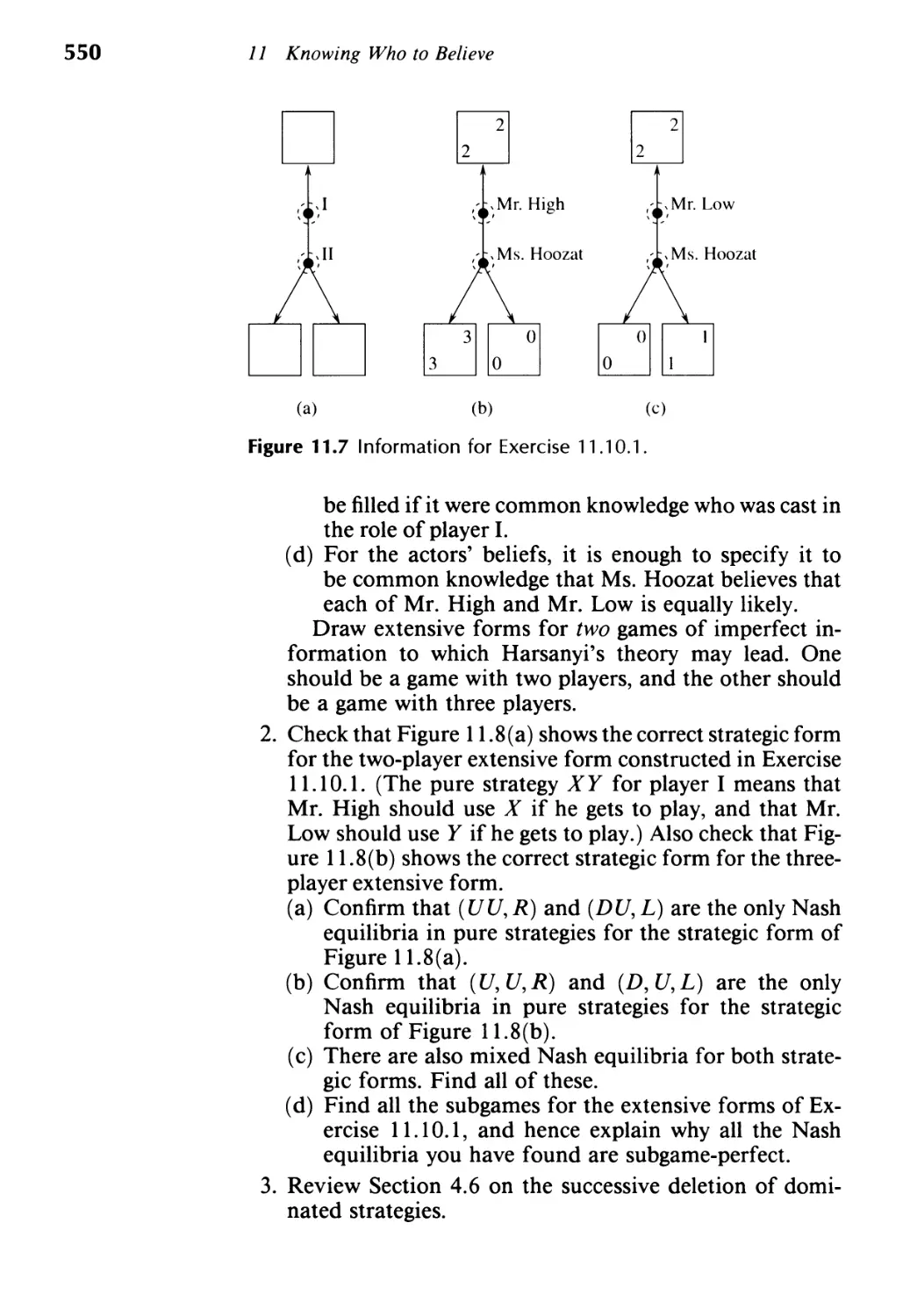

changing the order in which topics are introduced, even when

the order I have chosen may seem curious. I have been ex-

perimenting with various approaches for more than fifteen

years, and my reasons for doing it my way may not always

be immediately obvious. For example, why does the chapter

on bargaining precede the chapter on zero-sum games? The

reason is that the chapter on zero-sum games is more tech-

nically demanding for undergraduates than you might think.

In particular, whatever their background, you cannot always

rely on your students' knowing anything useful about con-

vexity at all. However, after the chapter on bargaining, they

will no longer think of a convex set as being some mysteri-

ous and incomprehensible mathematical object. Why is the

notion of a Nash equilibrium introduced in Chapter 1 and

then kept simmering all the way up to Chapter 7 before it is

discussed comprehensively? This is because undergraduates

can become overwhelmed if you try to tell them everything

at once. They often need time to build up some confidepce

in their ability to cope before you hit them with too mtich.

Why so much fuss about Hex in Chapter I? Because it is

going to get used in Chapter 7 in a discussion of fixed point

theorems. 3 Such a litany of questions and answers could be

continued indefinitely. In brief, some of the later material

depends on earlier material in ways that may not be imme-

diately apparent.

The list of chapters given next indicates what each chapter

is intended to achieve. Three routes through the material are

3 However, if you follow the Mad Hatter, you will skip most of the fuss

about Hex and all the discussion of fixed point theorems-although I have

taught both topics successfully to undergraduates.

Teaching Guide

.

XXI

then proposed, of which I would advocate the first if you are

teaching game theory to undergraduates for the first time.

Introduction. The introduction provides some ammuni-

tion for a preliminary pep-talk if this seems necessary. It is

about where game theory came from and where it is going

to, what it can do and why it matters.

1. Winning Out. The formal definition of a game is not in-

troduced all at once. This chapter introduces two-player

games of perfect information with no chance moves. A

few results about the strictly competitive case are proved

carefully to indicate the flavor of what is to come. The

ideas of Nash and subgame-perfect equilibrium make a

first tentative appearance.

2. Taking Chances. Chance moves and lotteries are intro-

duced. Elementary probability ideas are reviewed and put

to use.

3. Accounting for Tastes. A review of elementary utility the-

ory is followed by an extended example that touches upon

the modeling of imperfect information and emphasizes

that rational behavior may depend on the players' atti-

tudes to taking risks. Von Neumann and Morgenstern util-

ity theory is then discussed.

4. Getting Paid Off. The notion of a Von Neumann and Mor-

genstern payoff is systematically exploited. After an ex-

tended review of vectors and matrices, the idea of succes-

sively deleting dominated strategies is explained. Common

knowledge is mentioned briefly, and the distinction be-

tween Nash and subgame-perfect equilibrium is stressed.

5. Making Deals. This chapter is about bargaining. After

reviewing convexity, it introduces the Nash bargaining

solution. (This is the only use of cooperative game the-

ory in the book.) The remainder of the chapter uses the

Rubinstein bargaining model to press home the idea of a

subgame-perfect equilibrium.

6. Mixing Things Up. Mixed strategies are introduced as a

preliminary to a conventional analysis of zero-sum games.

(Note that mixed strategies are needed in all later chap-

ters, and minimax ideas are relevant in studying repeated

games. )

7. Keeping Your Balance. Now that Nash equilibrium is fa-

miliar, its properties are studied in some detail. Here is

where the Prisoners' Dilemma is first discussed. Reac-

tion curves, applications to oligopoly, equilibrium selec-

tion and existence are all in this long chapter. Even the

mathematics of fixed point methods is not neglected.

..

XXII

Teaching Guide

8. Repeating Yourself. This chapter is about repeated games.

It begins with an example intended to emphasize how

complicated strategies can get in this context. This ex-

ample motivates the chapter's use of finite automata in

describing strategies. A simple version of the folk the-

orem is given together with some philosophizing about

social contracts.

9. Adjusting to Circumstances. This chapter describes some

simple trial-and-error adjustment processes. It includes an

introduction to the use of game theory in evolutionary bi-

ology and a discussion of the "evolution of cooperation".

10. Knowing Your Place. This chapter contains an unusually

careful account of the role of the theory of knowledge

in game theory. Information sets are finally tied down

properly, and signaling is introduced. Common knowl-

edge gets a thorough airing, and some foundational issues

are raised.

11. Knowing Who to Believe. Incomplete information is dis-

cussed in detail. The chapter includes material on sig-

naling, auctions, the revelation principle and mechanism

design. The reasons why refinement theory is controver-

sial are mentioned.

12. Bluffing It Out. This chapter is ostensibly about Poker,

but is really an extended exercise in the use of the tech-

niques the book has introduced.

There are at least three viable routes through the book for

a one-semester course for undergraduates. Whether you wish

to prefix any of these routes with some propaganda about the

importance of game theory drawn from the Introduction is

a matter of taste. I find that there is time for a few excuri

sions off the main routes. What diversions I make depend

on whom I am teaching. When teaching mostly economists,

for example, I do not always follow the Mad Hatter past the

economics sections.

. Route 1. Begin at Chapter 1 and follow the Mad Hatter all

the way through to the end of Chapter 7.

. Route 2. Follow the first route until the end of Chapter 4,

and then skip forward to Chapter 7 and follow the Mad

Hatter onwards from there (perhaps omitting Chapter 9

and looking instead at some of the more advanced material

in Chapters 10 and 11). You will need to do some patching

on the subject of mixed strategies in Chapter 7.

. Route 3. Spend as little time as possible on Chapters 1, 2

and 3, and then follow the Mad Hatter from Chapter 4

Teaching Guide

...

XXIII

onwards (perhaps omitting some of Chapters 5, 6 or 9 to

leave more time for Chapters 10 and 11).

If the third and most ambitious route is followed, some

cautionary remarks may be helpful. Chapters 2 and 3 can be

telescoped into discussions of the games Duel and Russian

Roulette. You will not be able to evade saying something

about lotteries and expected utility, but what you say can

be kept very brief. 4 However, Chapter 1 does not compress

so readily. You will need at least to cover the extensive and

strategic forms of a game, some version of Zermelo's algo-

rithm, the notion of the value of a strictly competitive game

like Chess, and the ideas of Nash and subgame-perfect equi-

librium. However, I have two reasons for suggesting that this

chapter not be taken quite so swiftly. The first is that it is

never wise to hurry the first few lectures of any course. The

second is that Chapter 1 is partly designed to fulfill a screen-

ing role. Some of the arguments offered are therefore more

closely reasoned than is perhaps strictly necessary so as not

to give a false impression about what is coming later.

Questions and Answers

I attach very great importance to the working of exercises in

whatever course I teach. For this reason, Fun and Games con-

tains large numbers of exercises for students to work by them-

selves. Normally, I insist on written answers being handed in

to about five exercises a week. If you do this, it is impor-

tant to explain that you understand that your class is only

human, and that you are not so unreasonable as to expect

perfect answers to every problem. Some of the problems are,

and ought to be, challenging. What you want from the stu-

dents is their best shot. However, this includes being careful

about the presentation of their answers. Students learn aston-

ishingly quickly that it is not acceptable to embed a correct

answer in a few lines of indecipherable scrawl, or to sub-

merge the answer in several pages of irrelevant waffle. My

impression is that they like being made to do things right.

The students will, of course, be worried about your grading

policy, so make it clear from the outset that you understand

4Provided that your students are confident about their ability to cope with

simple probabilistic problems. Personally, I think it a great pity to skip the

Yon Neumann and Morgenstern theory of decision-making under risk. All

sorts of misunderstandings are commonly made because this theory is not

understood. However, I recognize that this is a judgment call.

.

XXIV

Teaching Guide

that they are not a randomly drawn sample from the student

population. They are a highly self-selected group and your

grades will reflect this reality. After setting some demand-

ing exercises, you can reinforce this piece of propaganda by

complimenting them on how cleverly the class as a whole ap-

proached the difficulties, even though few of them may have

been able to see their way through to a triumphant conclu-

sion. Usually, this will be nothing less than the plain truth.

Things will be going well if, after some time, you find the stu-

dents arguing among themselves about last week's problems

when you enter the classroom. As for examinations, I think

it reasonable to tell the students that you plan to ask them

questions that are similar to those that they have been asked

to do for homework, or else are even more similar to other

exercises of comparable difficulty that appear in the book.

The exercises at the end of each chapter are a mixed bunch.

They range from questions that require little more than re-

peating a definition to problems that might stump Von Neu-

mann himself. A little care is therefore necessary in selecting

problems for your students to answer. The list below is a set

of possible weekly assignments. The starred items are suitable

Assignment Exercise section

Questions

1 1.10 2 3 7 10 20

2t 1.10 21 22 23 24 25

3 2.6 5 7 1 1 21 23

4 3.7 5 9 1 1 14 22

5 4.8 2 16 21 24 25

6 5.9 13 15 21 23 25

7 6.10 4 14 15 18 19

8 6.10 27 29 34 36 41

9 7.9 1 1 1 13 14 18

lOt 7.9 27 29 34 35 40

1 1 8.6 5 8 10 21 23

12* 9.8 2 7 15 17 23

13 10.9 1 1 1 12 15 17

14*t 10.9 20 23 30 31 35

15 11.10 1 2 3 4 1 1

16*t 11.10 22 23 24 27 29

17*t 11.10 38 41 42 43 44

Teaching Guide

xxv

only for graduate students. Those marked with a dagger cover

topics that you may prefer to incorporate into the main body

of your course.

I am grateful to Bruce Linster for providing outline an-

swers to a selection of ten questions from each chapter at

the end of the book. For the earlier chapters, the answers

are mostly to questions that one might reasonably ask un-

dergraduates to attempt. These mainstream answers are sup-

plemented with a few hints on how to solve the occasional

brainteaser with which the exercises have been salted. There

is no overlap between the questions to which answers are

provided and those that appear in the suggested assignments.

Teachers can obtain a full set of answers from the publisher.

Why Teach This Way?

Notice the Mad Hatter in the margin inviting you to escape

the philosophical remarks coming up by skipping forward to

Intro the Introduction. The philosophical question to be consid-

ered is why I chose to write a book like this, rather than a

romp through some undemanding "applications" of the the-

ory in easily described economic models.

Early this morning, one of the more talented students in

my undergraduate Intermediate Microeconomics class at the

University of Michigan asked me when he was going to be

taught something with some substance: something that he

could get his teeth into. I had to tell him that Intermediate

Microeconomics is about as challenging as regular undergrad-

uate courses in economics get. Later this morning, I have to

teach a graduate class, Microeconomic Theory II. The con-

tent of this graduate course is not intrinsically difficult and

the students are intelligent, but many of them have to work

very hard to keep abreast of things. They are very ready to

work hard, but such effort would not be necessary in an ideal

world. In such a world, a person at the end of his or her un-

dergraduate career would have been equipped with the learn-

ing skills needed to cope comfortably with serious material

presented seriously. I am not talking here about a lack of

technical skills, although this is certainly part of the problem.

What holds the students back is their lack of self-confidence.

Before many of them can begin to learn in earnest, they first

have to learn that they really are able to learn. Such confi-

dence comes only through studying at least one challenging

subject in depth. Nobody can be confident about their learn-

ing skills if they have never been offered the opportunity to

do more than skim the surface of the subjects they are taught.

.

XXVI

Teaching Guide

However, too many undergraduates are never offered such an

opportunity.

Much of what passes for an undergraduate education, both

in the United States and in Europe, seems to me little more

than an unwitting conspiracy between the teacher and the stu-

dent to defraud whoever is paying the fees. The teacher pre-

tends to teach, and the student pretends to learn, material that

both know in their hearts is so emasculated that it cannot pos-

sibly be properly understood in the form in which it is pre-

sented. Even the weaker students grow tired of such a diet of

predigested pap. They understand perfectly well that "appre-

ciating the concepts" is getting them nowhere except nearer to

a piece of paper that entitles them to write letters after their

names. But most students want more than this. They want to

learn things properly so that they are in a position to feel that

they can defend what they have been taught without having

to resort to the authority of their teachers or the textbook. Of

course, learning things properly can be hard work. But my ex-

perience is that students seldom protest at being worked hard

provided that their program of study is organized so that they

quickly see that their efforts are producing tangible dividends.

However, it is one thing to see that our system of education

could be improved, but quite another to do something about

it. When teaching traditional courses in economics and the

other social sciences, one tends to get trapped by the system.

It is particularly hard to organize a serious course in a way that

allows students to see their efforts being quickly rewarded.

Teachers of introductory courses will typically already have

skimmed the cream from the material that you are supposed

to teach by discussing most of the intellectually exciting ideas

in some diluted form. But you will seldom be able to rely on

students' knowing what is taught in such introductory courses

sufficiently well that you can make use of their knowledge. In-

deed, what lodges in the minds of students when they are "ap-

preciating the concepts" in introductory courses is sometimes

so confused that they need to be "untaugpt" before further

progress is possible. A great deal of what students will despise

as "review material" is therefore inevitable unless you are will-

ing to be innovative about the syllabus. But monkeying around

with the syllabus is seldom a practical option unless your col-

leagues teaching companion courses are unusually tolerant.

Teaching game theory provides an opportunity to break

free from some of these constraints, because traditions about

how it should be taught have yet to evolve. In particular, it

usually gets only a passing mention in introductory courses.

Most students who sign up for game theory therefore come

Teaching Guide

..

XXVII

with an open mind in the hope of being taught something

new and interesting. They sometimes even think that the ma-

terial may be fun to learn. They also know that the subject

does not depend too heavily on material that should have

been mastered in earlier courses but is now water long gone

under the bridge. In none of these expectations will they be

disappointed. It is also true that game theory has assumed

a central role in much of economic theory, and so students

who are contemplating graduate work can congratulate them-

selves on doing something that will certainly be useful to

them. At the same time, you can truthfully tell students that

the role of game theory is bound to expand in the future, not

only within economics, but also in the other social sciences.

It already has, for example, firm footholds in biology and

political science.

All of this means that someone teaching game theory can

afford to be more ambitious than the teacher of a more tra-

ditional subject. The students come with a positive attitude,

and you can carry them forward quickly into areas that will

seem new and exciting to them. An opportunity therefore ex-

ists to provide some genuine education-to train some minds

in how to think about serious problems seriously. I believe

that, although the substantive content of game theory is cer-

tainly of great importance, it is this opportunity that should

excite us most. That is to say, in teaching game theory, it really

is true that the medium is at least as important as the message.

Other Books

Robert Aumann, Lectures on Game Theory, Westview Press

(Underground Classics in Economics), 1989. These are the

classroom notes of one of the great game theorists.

Robert Aumann and Sergio Hart, Handbook of Game Theory.

This will be a comprehensive collection of survey articles on

game theory and its applications for research workers in the

field.

Elwyn Berlekamp, John Conway and Richard Guy, Winning

Ways for Your Mathematical Plays, Academic Press, 1982.

This is a witty and incredibly inventive book about com-

binatorial game theory. The authors try very hard to make

their ideas accessible to the layman, but I suspect one needs

mathematical training for it to hold the attention.

Ken Binmore, Essays on the Foundations of Game Theory,

Blackwell, 1990. Some of these essays grapple with the con-

troversial issues that Fun and Games avoids, but don't expect

...

XXV III

Teaching Guide

any definitive answers. (Readers of Fun and Games will be

able to skip Chapters 2, 3 and 4.)

Steven Drams, Superior Beings, Springer-Verlag, 1983. If you

thought game theory had no applications in theology-think

again!

Avinash Dixit and Darry Nalebuff, Thinking Strategically,

Norton, 1991. This is a delightful collection of stories and

anecdotes drawn from real life that illustrate game theory in

action. Its accessibility is attested to by its appearance on the

Book-of-the-Month Club list.

James Friedman, Game Theory with Applications to Econom-

ics, second edition, MIT Press, 1990. James Friedman pio-

neered the application of repeated games to problems in indus-

trial organization. Here you can learn the tricks of the trade.

Drew Fudenberg and Jean Tirole, Game Theory, MIT Press,

1991. This is the book to read if you want to be published

in Econometrica. It is remarkable how much ground they

manage to cover.

Josef Hofbauer and Karl Sigmund, The Theory of Evolution

and Dynamical Systems, Cambridge University Press, 1988.

This is a highly accessible and unfussy introduction to the

mathematics of evolutionary systems.

Tashiro Ichiishi, Game Theory for Economic Analysis, Aca-

demic Press, 1983. This is a formal book that concentrates

on cooperative game theory. The mathematical exposition is

admirably clear and unfussy.

David Kreps, A Course in Microeconomic Theory, Princeton

University Press, 1990. This big book takes it for granted

that game theory is part of microeconomic theory. It is a

must for those planning to make a career in economics. The

problems are often very instructive indeed.

David Kreps, Game Theory and Economic Modelling, Ox-

ford University Press, 1990. Listen to what daddy says on

economic modeling and you won't go far wrong!

Duncan Luce and Howard Raiffa, Games and Decisions, Wi-

ley, 1957. This evergreen classic is a model for how a book

should be written.

John Maynard Smith, Evolution and the Theory of Games,

Cambridge University Press, 1982. Everyone should at least

dip into this beautiful book.

Herve Moulin, Game Theory for the Social Sciences, second

revised edition, New York University Press, 1986. This is an

elementary introduction to game theory in which the mathe-

Teaching Guide

.

XXIX

matics is allowed to speak for itself. It is particularly valuable

as a source of instructive problems. (You want both volumes.)

Roger Myerson, Game Theory: Analysis of Conflict, Harvard

University Press, 1991. Roger Myerson is the prime mover in

the subject of mechanism design. His book is an encyclopedic

introduction to game theory in which each brick is carefully

laid precisely where it belongs. This is a book for those who

feel that things should be done properly.

Peter Ordeshook, Game Theory and Political Theory, Cam-

bridge University Press, 1986. This is the place to look for

applications in political science.

Martin Osborne and Ariel Rubinstein, Bargaining and Mar-

kets, Academic Press, 1990. If you want more on bargaining

than Chapter 5 of Fun and Games has to offer, this is an

excellent place to begin.

Guillermo Owen, Game Theory, second edition, Academic

Press, 1982. This elegant book is particularly strong on co-

operative game theory.

Eric Rasmusen, Games and Information, Blackwell, 1989.

This is a book for those who want to get straight to the eco-

nomic applications without having to fiddle around with the

theory first.

Thomas Schelling, The Strategy of Conflict, Harvard Univer-

sity Press, 1960. This is a classic that will remain on every-

body's reading list for many years to come.

Martin Shubik, Game Theory in the Social Sciences, MIT

Press, 1984. Martin Shubik is one of the great pioneers in

applying game theory to economics. 'The second volume of

this monumental work is where he gives us the benefit of his

wide experience.

Jean Tirole, The Theory of Industrial Organization, MIT

Press, 1988. This beautiful book is a model of its kind. Those

who want a quick introduction to game theory will find their

needs catered to in a magnificently concise appendix.

John Yon Neumann and Oskar Morgenstern, The Theory of

Games and Economic Behavior, Princeton University Press,

1944. I have read the great classic of game theory from cover

to cover, but I do not recommend the experience to others!

Its current interest is largely historical.

Herbert Yardley, The Education of a Poker Player, Jonathan

Cape, 1959. Don't waste time on Chapter 12 of Fun and

Games if what you care about is how to make money playing

Poker.

Introduction

1

0.1 What Is Game Theory About?

3

The poet Horace advised his young disciples to begin in me-

dia res. I think he was right. The way to learn game theory is

to turn straight to the first chapter and plunge into the mid-

dle of things. This introduction is for the faint-hearted and

the middle-aged who want some questions answered before

making a commitment.

. What is game theory about?

. Where is it coming from?

. Where is it going to?

. What can it do for us?

These are big questions to which there are no neat and tidy

answers. An introduction can only give some flavor of the

answers that game theorists offer. l But perhaps this will be

enough to whet your appetite.

0.1 What Is Game Theory About

A game is being played whenever people interact with each

other. If you drive a car in a busy city street, you are playing

a game with the drivers of the other cars. When you make

a bid at an auction, you are playing a game with the other

bidders. When a supermarket manager decides the price at

which she will try to sell cans of beans, she is playing a game

with her customers and with the managers of rival supermar-

kets. When a firm and a union negotiate next year's wage

contract, they are playing a game. The prosecuting and de-

fending attorneys are playing a game when each decides what

arguments to put before the jury. Napoleon and Wellington

were playing a game at the Battle of Waterloo, and so were

Khrushchev and Kennedy during the Cuban missile crisis.

If all these situations are games, then game theory is clearly

something important. Indeed, one could argue that all the so-

cial sciences are nothing more than subdisciplines of game

theory. However, this doesn't mean that game theorists are

the people to ask for answers to all the world's problems.

This is because game theory, as currently developed, is mostly

about what happens when people interact in a rational man-

ner. If this observation tempts you to return Fun and Games

1 Chapter 1 of my Essays on the Foundations of Game Theory (Black-

well, 1990) is a longer introduction to the subject. Dixit and Nalebuff's

Thinking Strategically (Norton, 1991) is a delightful collection of stories

and anecdotes showing game theory in action in real-life situations. Kreps'

Game Theory and Economic Modelling (Oxford University Press, 1990) is

an introduction that emphasizes the uses of game theory in economics.

4

Introduction

to the bookstore in the hope of getting your money refunded,

think again. Nobody is saying that people always behave ra-

tionally. But neither is it true that people always act irra-

tionally. Most of us try at least to spend our money sensibly,

and most of the time we don't do too badly. Otherwise, eco-

nomic theory wouldn't work at all. Even when we are not

actively thinking things out in advance, it does not neces-

sarily follow that we are behaving irrationally. In fact, game

theory has had some notable successes in analyzing the be-

havior of insects and plants, neither of which can be said

to think at all. Their behavior is rational because those in-

sects and plants whose genes programmed them to behave

irrationally are now extinct. Evolution removed them. And,

just as biological evolution acts to remove biologically un-

fit behavior, so other kinds of evolution can act to remove

socially or economically unfit behavior. For example, firms

that manage their affairs stupidly tend to go bankrupt and so

disappear from the scene.

Perhaps you will agree that rational interaction between

groups of people is an exciting area to study, but why call it

game theory? Do we not trivialize the problems that people

face by calling them games? Worse still, do we not devalue

our humanity by reducing our struggle for fulfillment to the

status of mere play in a game?

The proper answers to these questions stand them on their

heads. The more deeply we feel about issues, the more im-

portant it is that we do not allow ourselves to be misled by

wishful thinking. Game theory makes a virtue out of using

the language of parlor games like Chess or Poker to discuss

the logic of strategic interaction. I know that Bridge players

sometimes shoot their partners. I have sometimes felt the

urge myself. Nevertheless, most of the time, people are able

to think about the strategic issues that arise in parlor games

dispassionately. That is to say, they are willing to follow the

logic wherever it goes without throwing their hands up in

horror if it leads to an unwelcome destination. In insisting

on using the language of parlor games, game theorists are

therefore not revealing themselves to be cold-hearted follow-

ers of Machiavelli who care nothing for the sorrows of the

world. They are simply attempting to separate those features

of a problem that are susceptible to uncontroversial rational

analysis from those that are not.

Human beings are not naturally very good at thinking about

problems of strategic interaction. We become distressed when

confronted with circular reasoning. But circular reasoning

cannot be evaded in considering strategic issues. If John and

0.1 What Is Game Theory About?

5

Mary are playing a game, then John's choice of strategy will

depend on what he predicts Mary's choice of strategy will be.

But she is simultaneously choosing a strategy, using her pre-

diction of John's strategy choice. Given that it is necessarily

based on such involuted logic, it is perhaps not surprising that

game theory abounds with surprises and paradoxes.

. Is it possible, do you think, that someone at a committee

meeting might find it optimal to vote for the alternative

they like the least?

. Could it be be a good idea for a general to toss a coin to

decide whether to attack today or tomorrow?

. Can it be optimal for a Poker player always to bet the max-

imum when dealt the worst possible hand?

. Could it ever make sense for someone with goods to trade

to begin by throwing some of them away?

. Might it ever be rational for someone with a house to sell to

use an auction in which the highest bidder gets the house, but

only has to pay the bid made by the second-highest bidder?

You will have guessed, of course, that the seemingly crazy

answer is correct in each case. Let us look at the first and

last questions to try and understand why our intuitions are

so unhelpful. This will simultaneously provide some feel for

the whole book, since problems of the first kind are discussed

in Chapter 1 at the beginning of the book, and problems of

the last kind are discussed in Chapter 11 towards its end.

0.1. 1 Strategic Voting

Boris, Horace and Maurice are the membership committee

of the very exclusive Dead Poets Society. The final item on

their agenda one morning is a proposal that Alice should be

admitted as a new member. No mention is made of another

possible candidate called Bob, and so an amendment to the

final item is proposed. The amendment states that Alice's

name should be replaced by Bob's. The rules for voting in

committees call for amendments to be voted on in the order

in which they are proposed. The committee therefore begins

by voting on whether Bob should replace Alice. If Alice wins,

they then vote on whether Alice or Nobody should be made

a new member. If Bob wins, they then vote on whether Bob

or Nobody should be made a new member. Figure O.I(a) is a

diagrammatic representation of the order in which the voting

takes place. Figure 0.1 (b) shows how the three committee

members rank the three possible outcomes.

6

Introduction

Alice

Alice

or

Nobody Bons Horace Maunce

Nobody I. Alice I. Nobody I. Bob

Alice

or 2. Nobody 2. Alice 2. Alice

Bob

Nobody 3. Bob 3. Bob 3. Nobody

Bob

or (b)

Nobody

Bob

(a)

Figure 0.1 Strategic voti ng.

The doubled lines in Figure 0.1 (a) show who would win

the vote if everybody just voted according to their rankings.

For example, in a vote between Alice and Bob, Alice would

win because both Boris and Horace rank Alice above Bob and

so Maurice would be outvoted. Thus, if there is no strategic

voting, Alice will be elected to the club because she will also

win when she is matched against Nobody.

However, if Horace looks ahead, he will see that there is

no point in voting against Bob at the first vote. If Bob wins

the first vote, then Nobody will triumph at the second vote,2

and Nobody is Horace's first preference. Thus, Horace should

switch his vote from Alice at the first vote, and cast his vote

instead for Bob, who is the candidate he likes the least. If

Boris and Maurice do not also vote strategically, the result

will be that Nobody is elected.

But this is not the end of the story. Maurice may anticipate

that Horace will vote strategically. If so, Maurice will also

vote strategically by switching his vote from Bob to Alice.

Maurice will then fail to vote for the candidate he likes the

most. His reason for doing so is that he thereby ensures that

Alice is elected rather than Nobody.

The reasoning used by Horace and Maurice in this story is

called backwards induction. They predict what would happen

in the future and then reason backwards to the present. The

mathematician Zermelo applied the same type of reasoning

2 Why is there no point in anyone voting strategically at the second vote?

0.1 What Is Game Theory About?

7

to Chess as long ago as 1912. For this reason, the process is

called Zermelo's algorithm in Section 1.4 of the text.

Game theorists care about what happens when everybody

reasons optimally. Here this means that everybody uses Zer-

melo's algorithm, and hence everybody votes strategically.

Does this mean that Alice gets elected? You will be able to

work this out for sure after reading Chapter I!

Econ

0.1.3

o. 1.2 Auctions

Mad Hatters, like the little guy who has just appeared in the

margin, are designed to help you find your way around the

text. The Teaching Guide explains their significance in detail.

) Here he is rushing on to Section 0.1.3 to avoid learning some

economics. Mathematical folk may think him wise. As Lewis

Carroll put it:

And what mean all these mysteries to me

Whose life is full of indices and surds?

x 2 + 7x + 53

11

=.'.3

However, you won't get much from this book if you just read

the mathematical bits.

Alice wants to get the best price she can for her very fancy

house. There are two potential buyers, Horace and Maurice.

If she knew the maximum each would be willing to pay, her

problem would be easy. However, although she doesn't know

their reservation prices, she is not entirely ignorant. It is com-

mon knowledge that each potential buyer has a reservation

price of either three million or four million dollars, and that

each value is equally likely. Moreover, the two reservation

prices are independent of each other (Section 2.1.2).

An auctioneer advises her to run a "second-price auction".

In such an auction, each bidder secretly seals his bid in an

envelope. The envelopes are then publicly opened, and the

house is sold to the highest bidder,3 but not at the price he

bid. Instead it is sold to him at the highest price bid by a

loser. The advantage of such an arrangement, the auctioneer

explains, is that it induces rational people to bid their true

reservation prices. Horace, for example, would reason like

this. Suppose that Maurice's bid is for less than my reser-

vation price. Then I want to win the auction, and I can do

so just by bidding my own reservation price truthfully. On

3 If there is a tie, it is broken at random.

8

Math

o. 1.3

Introduction

the other hand, if Maurice's bid is for more than my reser-

vation price, then I don't want to win, and I can guarantee

not doing so by bidding my own reservation price truthfully.

Thus, in a second-price auction, telling the truth about your

reservation price is a heads-I-win-tails-you-Iose proposition.

Game theorists say that bidding truthfully is a strategy that

dominates all its alternatives.

Alice understands all this, but she doesn't much like what

she hears. It seems obvious to her that she must do better by

selling the house for the highest price that gets bid rather than

for the second highest price. So she fires the first auctioneer

and hires instead a second auctioneer who tells her what she

wants to hear. He organizes a sealed-bid auction just like the

first auctioneer's, except that the house is now sold to the

highest bidder at whatever price he bids.

But Alice is wrong. Her expected selling price is $3!m in

both cases. 4 This is easy to see in the case of the second-price

auction. Alice will then make $3m except when both Horace

and Maurice have a reservation price of $4m. The probabil-

ity that both have a high reservation price is ! x ! = !. Her

expected selling price in millions of dollars is therefore

3 x + 4 x ! = 3!.

Perhaps this mention of probabilities and expected values

makes you feel apprehensive. If so, take careful note of the

Mad Hatter who has appeared in the margin, and follow him

by rushing on to the next section. What you need to know

about probabilities and expected values (which isn't much)

will be reviewed in Chapter 2. As for the piece of mathemat-

ics that the Mad Hatter is rushing on to avoid, you might use

this as a test for how suitable Fun and Games is for someone

with your background. At the end of the book, I hope that

such an argument will seem transparently clear. However, if

you get more than a general impression about what is going

on right now, then you should perhaps be thinking of reading

a more advanced book 5 than this!

To figure out what selling price Alice can expect in a "first-

price" sealed-bid auction, we need to solve the bidding prob-

lem such an auction poses for Horace and Maurice. A

consultant game theorist will see no reason to advise someone

with a reservation price of $3m to do anything but bid this

truthfully. However, if Horace has a high reservation price of

4 Provided that Horace and Maurice bid rationally and are risk-neutral

(Section 3.4.3).

5Like Fudenberg and Tirole's Game Theory (MIT Press, 1991) or Myer-

son's Game Theory: Analysis of Conflict (Harvard University Press, 1991).

0.1 What Is Game Theory About?

9

$4m, he may get some curious advice from the game theorist.

The game theorist will explain that, whatever bid Horace may

think of sealing in his envelope, there is a risk that Maurice

will predict it. If he does, Maurice will then win the auction

by bidding one penny more on those occasions when he also

has a high reservation price. The only way that Horace can be

sure of keeping Maurice guessing is by using a mixed strategy.

What this means is that Horace should randomize over the

bids that it is sensible for him to make.

If Horace is still listening, the game theorist will now start

getting serious about how Horace should randomize. The ad-

vice might go like this. Never bid less than $3m or more than

$3 m. Randomize over all the bids between $3m and $3 m

in such a way that the probability of your bidding less than

$b is precisely (b - 3)/(4 - b).

If Maurice is also getting exactly the same advice from an-

other game theorist, how much should Horace expect to make

when his randomizing device tells him to seal $bm in his enve-

lope? If he outbids Maurice, he will make $ (4 - b )m, because

this is the difference between what he pays and what the house

is worth to him. If he loses, he makes nothing. How often will

Horace win? Half the time he will win for certain because

Maurice's reservation price will be only $3m. The other half

of the time, Horace will win with probability (b - 3)/( 4 - b),

because this is the probability that Maurice will bid less than

$bm when his reservation price is $4m. Thus, the total prob-

ability that Horace wins when he bids $bm is

11 ( b-3 ) 1

2 + 2 4 - b = 2(4 - b) ·

His expected gain is obtained by multiplying this probabil-

ity by $(4 - b)m, which is what he gets when he wins. His

expected gain is therefore always $ m whatever bid $bm be-

tween $3m and $3 m he may make.

The reason that game theorists will offer such complicated

advice to Horace and Maurice is that, if they follow it, then

each will be responding optimally to the strategy choice of

the other. In particular, there is nothing that Horace can do

to improve on $ m. He will certainly never want to bid $Bm

when B > 3 because, although this guarantees his winning

the auction, his victory will be hollow since he will make only

$(4 - B) from his triumph. 6

It will be clear that Alice was premature in thinking that

6 Assuming that his reservation price is high. If his reservation price is

low, he will never want to bid more than $3m.

10

Introduction

anything in this situation is "obvious". Much of the time, Ho-

race and Maurice will be bidding far below their true reserva-

tion prices. Alice therefore needs to calculate quite hard

before she is entitled to an opinion on the relative merits of

first and second price auctions. If she assumes that Horace and

Maurice follow the advice of their tame game theorists, she

will find that her expected selling price will be exactly the same

in a first-price auction as in a second-price auction-namely

$3!m. It will also be the same in various other types of auction.

In particular, the expected selling price is exactly the same in

the familiar type of auction in which the buyers keep raising

each other's bids until only one contender is left bidding.

Should we conclude that Alice is wasting her time in look-

ing for a better auction? Far from it! If Alice reads Fun and

Games as far as Section 11.7.4, she will discover that, if she

is clever, she can design an auctioning mechanism that raises

her expected selling price to a magnificent $3tm.

o. 1.3 What Is the Mora/I

The two preceding examples are intended to make it clear

that game theory is good for something. Our untutored in-

tuition is not very reliable in strategic situations. We need

to train our strategic intuition by considering instructive ex-

amples. These need not be realistic. On the contrary, there

are often substantial advantages in studying toy games, if

these are carefully chosen. In such toy games, all the irrel-

evant clutter that typifies real-world problems can be swept

away so that attention can be focused entirely on the strategic

questions for which game theory exists to provide answers.

Often, game theorists introduce their toy games with silly

stories. It is a little silly, for example, that the committee mem-

bers in the strategic voting example were called Boris, Horace

and Maurice. 7 But even such silliness is not without its value.

Here, it allows us to disengage our emotions from the prob-

lem. Nobody can get worked up about fictions like Boris, Ho-

race and Maurice. However, if they were recognizable as real

people, we might let our indignation at the fact that the chair-

man has "rigged the agenda", or that Horace is planning to

"vote dishonestly", distract our attention from the underlying

strategic realities. For a game theorist, this would be an error

comparable to a mathematician's fudging on the laws of arith-

metic because he dislikes the answers he is coming up with.

7Those whose native language is not the Queen's English may be surprised

to learn that these names rhyme.

Phil

0.4

0.2 Where Is Game Theory Coming From?

11

0.2 Where Is Game Theory

Coming From

Game theory was created by Von Neumann and Morgenstern

in their classic book The Theory of Games and Economic

Behavior, published in 1944. Others had anticipated some

of the ideas. The economists Cournot and Edgeworth were

particularly innovative in the nineteenth century. Later con-

tributions mentioned in this book were made by the math-

ematicians Borel and Zermelo. Von Neumann himself had

already laid the groundwork in a paper published in 1928.

However, it was not until Von Neumann and Morgenstern's

book appeared that the world realized what a powerful tool

for studying human interaction had been discovered.

When the penny dropped, people went overboard in their

enthusiasm. The social sciences, so it was thought, would be

revolutionized overnight. But this was not to be. Von Neu-

mann and Morgenstern's book proved to be just he first step

on a long road. However, there is no mind more closed than

that of a disappointed convert, and once it became clear that

The Theory of Games and Economic Behavior was not be

compared with the tablets of stone that Moses brought down

from the mountain, game theory languished in the doldrums.

One can still find old men who will explain that game theory

is useless because life is not a "zero-sum game", or because

one can get any result one likes by selecting an appropriate

"cooperative solution concept".

Fortunately, things have moved on very rapidly in the last

twenty years, and this and other modern books on game the-

ory are no longer hampered by some of the restrictive assump-

tions that Von Neumann and Morgenstern found necessary to

make progress. As a consequence, the early promise of game

theory is beginning to be realized. Its impact on economic

theory in recent years has been nothing less than explosive.

However, it is still necessary to know a little about game the-

ory's short history, if only to understand why some of the

terminology is used.

Yon Neumann and Morgenstern investigated two distinct

possible approaches to the theory of games. The first of these

is the strategic or noncooperative approach. This requires

specifying in close detail what the players can and cannot

do during the game, and then searching for a strategy for

each player that is optimal. At first sight, it is not even clear

what optimal might mean in this context. What is best for

one player depends on what the other players are planning

to do, and this in turn depends on what they think the first

12

Introduction

player will do. Von Neumann and Morgenstern solved this

problem for the particular case of two-player games in which

the players' interests are diametrically opposed. Such games

are called strictly competitive or zero-sum because any gain

by one player is always exactly balanced by a corresponding

loss by the other. Chess, Backgammon and Poker are games

that usually get treated as zero-sum.

In the second part of their book, Von Neumann and Mor-

genstern developed their coalitional or cooperative approach,

in which they sought to describe optimal behavior in games

with many players. Since this is a much more difficult prob-

lem, it is not surprising that their results were much less

sharp than for the two-player, zero-sum case. In particu-

lar, they abandoned the attempt to specify optimal strate-

gies for individual players. Instead, they aimed at classifying

the patterns of coalition formation that are consistent with

rational behavior. Bargaining, as such, had no explicit role

in this theory. In fact, they endorsed a view that had held

sway among economists since at least the time of Edgeworth,

namely that two-person bargaining problems are inherently

indeterminate.

In a sequence of remarkable papers in the early fifties, the

mathematician John Nash broke open two of the barriers

that Von Neumann and Morgenstern had erected for them-

selves. On the noncooperative front, they seem to have felt

that the idea of an equilibrium in strategies, as introduced

by Cournot in 1832, was not an adequate notion in itself

on which to build a theory-and hence their restriction to

zero-sum games. However, Nash's general formulation of the

equilibrium idea made it clear that no such restriction is nec-

essary. Nowadays the notion of a Nash equilibrium 8 is per-

haps the most important of the tools that game theorists have

at their disposal. Nash also contributed to Von Neumann and

Morgenstern's cooperative approach. He disagreed with the

view that game theorists must regard two-person bargaining

problems as indeterminate, and proceeded to offer arguments

for determining them. His views on this subject were widely

misunderstood and, perhaps in consequence, game theory's

years in the doldrums were largely spent pursuing Von Neu-

mann and Morgenstern's cooperative approach along lines

that ultimately turned out to be unproductive.

8This arises when each player's strategy choice is a best reply to the

strategy choices of the other players. Horace and Maurice were advised to

use a Nash equilibrium by their consultant game theorist when wondering

how to bid in the first-price auction of Section 0.1.2.

0.3 Where Is Game Theory Going To?

13

The history of game theory in the last twenty years is too

packed with incident to be chronicled here. However, there

are some names that cannot pass unmentioned. The acronym

NASH may assist in remembering who they are. Nash himself

gets the letter N; A is for Aumann; S is for both Shapley and

Selten; and H is for Harsanyi. By the end of the book, you

won't be needing an acronym!

What is perhaps most important about the last twenty

years in game theory is that all the major advances have

been made in noncooperative theory. For this reason, Fun and