Автор: Jurafsky D. Martin J.

Теги: linguistics language processing pearson publisher speech speech recognition

Год: 2000

Текст

SPEECH AND

LANGUAGE PROCESSING

An bilrodwtim io Natural Language Processing,

4 • vputattonal Linguistics, and Speech Reto*

Speech and Language Processing

An Introduction to Natural Language Processing,

Computational Linguistics, and Speech Recognition

Second Edition

Daniel Jurafsky

Stanford University

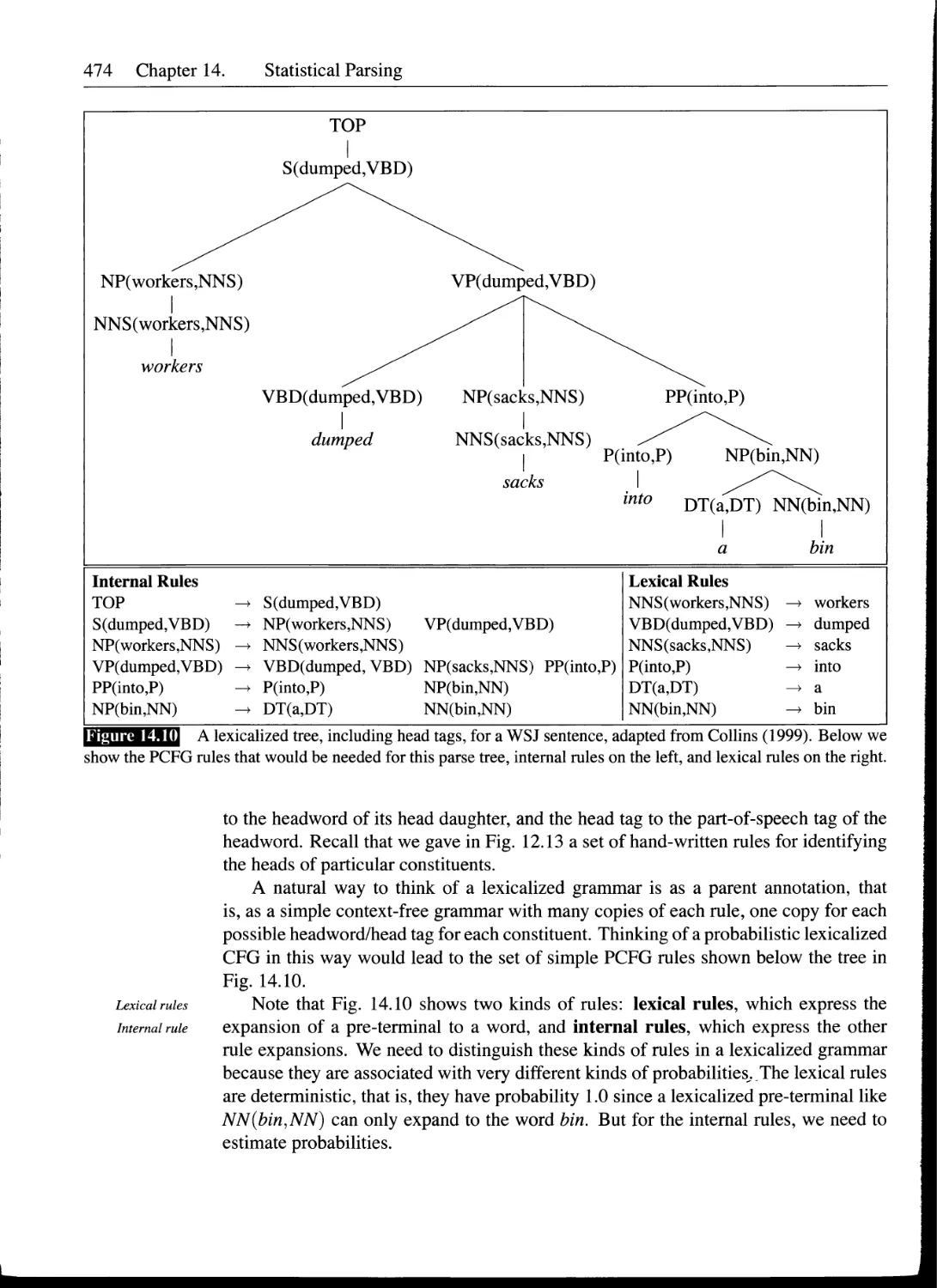

James H. Martin

University of Colorado at Boulder

PEARSON

Prentice

Hall

Upper Saddle River, New Jersey 07458

Summary of Contents

Foreword......................................................-xxiii

Preface..........................................................xxv

About the Authors...............................................xxxi

1 Introduction...................................................1

I Words

2 Regular Expressions and Automata..............................17

3 Words and Transducers.........................................45

4 N-Grams.......................................................83

5 Part-of-Speech Tagging.......................................123

6 Hidden Markov and Maximum Entropy Models.....................173

II Speech

7 Phonetics....................................................215

8 Speech Synthesis.............................................249

9 Automatic Speech Recognition.................................285

10 Speech Recognition: Advanced Topics.........................335

11 Computational Phonology......................................361

III Syntax

12 Formal Grammars of English...................................385

13 Syntactic Parsing............................................427

14 Statistical Parsing..........................................459

15 Features and Unification.....................................489

16 Language and Complexity......................................529

IV Semantics and Pragmatics

17 The Representation of Meaning................................545

18 Computational Semantics......................................583

19 Lexical Semantics............................................611

20 Computational Lexical Semantics..............................637

21 Computational Discourse......................................681

V Applications

22 Information Extraction.......................................725

23 Question Answering and Summarization.........................765

24 Dialogue and Conversational Agents...........................811

25 Machine Translation..........................................859

Bibliography.....................................................909

Author Index.....................................................959

Subject Index....................................................971

vii

Contents

Foreword xxiii

Preface xxv

About the Authors xxxi

1 Introduction 1

1.1 Knowledge in Speech and Language Processing..........................2

1.2 Ambiguity............................................................4

1.3 Models and Algorithms................................................5

1.4 Language, Thought, and Understanding.................................6

1.5 The State of the Art.................................................8

1.6 Some Brief History...................................................9

1.6.1 Foundational Insights: 1940s and 1950s........................9

1.6.2 The Two Camps: 1957-1970.....................................10

1.6.3 Four Paradigms: 1970-1983....................................11

1.6.4 Empiricism and Finite-State Models Redux: 1983-1993 .. 12

1.6.5 The Field Comes Together: 1994-1999..........................12

1.6.6 The Rise of Machine Learning: 2000-2008......................12

1.6.7 On Multiple Discoveries......................................13

1.6.8 A Final Brief Note on Psychology.............................14

1.7 Summary.............................................................14

Bibliographical and Historical Notes.......................................15

1 Words

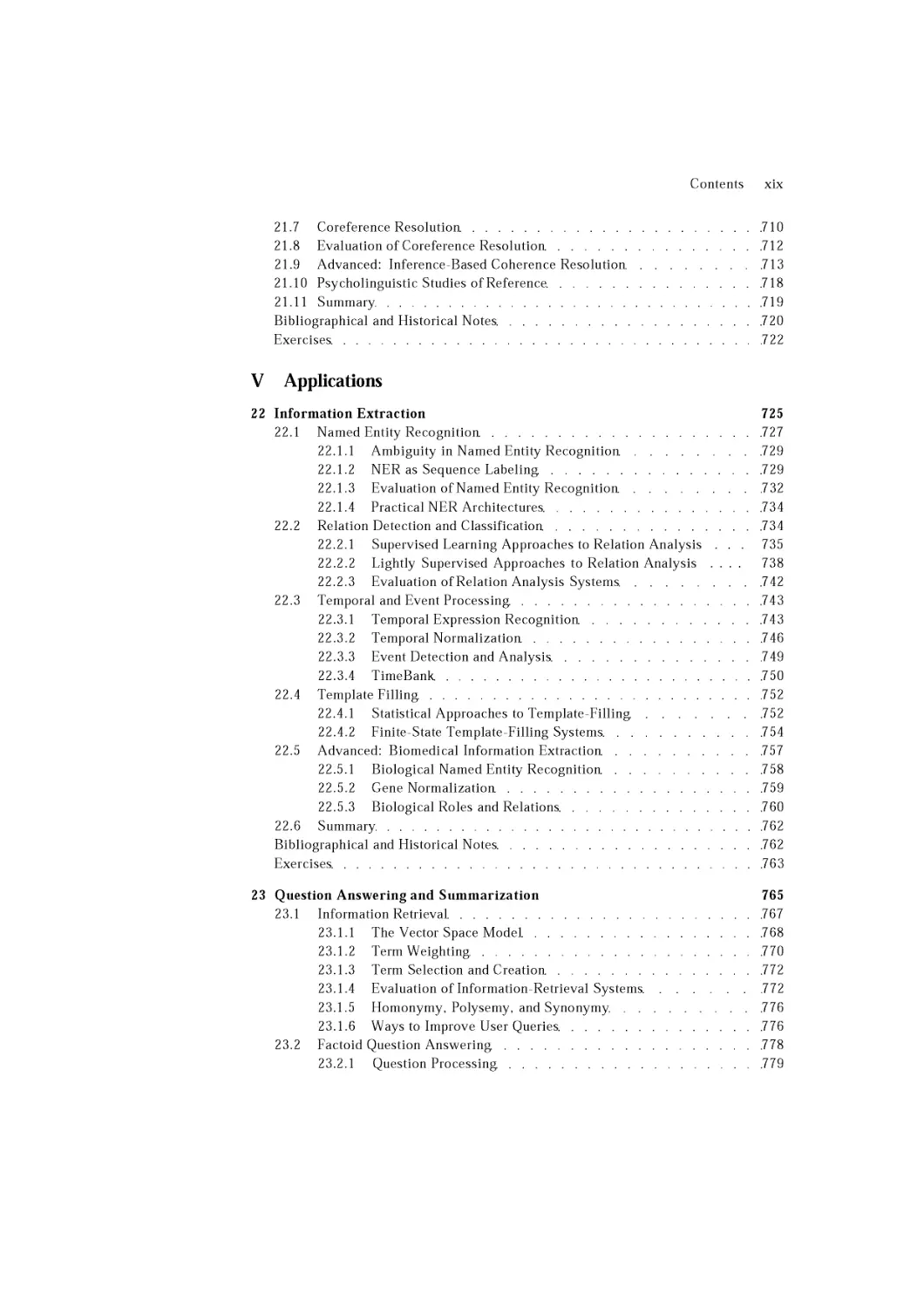

2 Regular Expressions and Automata 17

2.1 Regular Expressions.................................................17

2.1.1 Basic Regular Expression Patterns..........................18

2.1.2 Disjunction, Grouping, and Precedence......................21

2.1.3 A Simple Example...........................................22

2.1.4 A More Complex Example.....................................23

2.1.5 Advanced Operators.........................................24

2.1.6 Regular Expression Substitution, Memory, and ELIZA ... 25

2.2 Finite-State Automata...............................................26

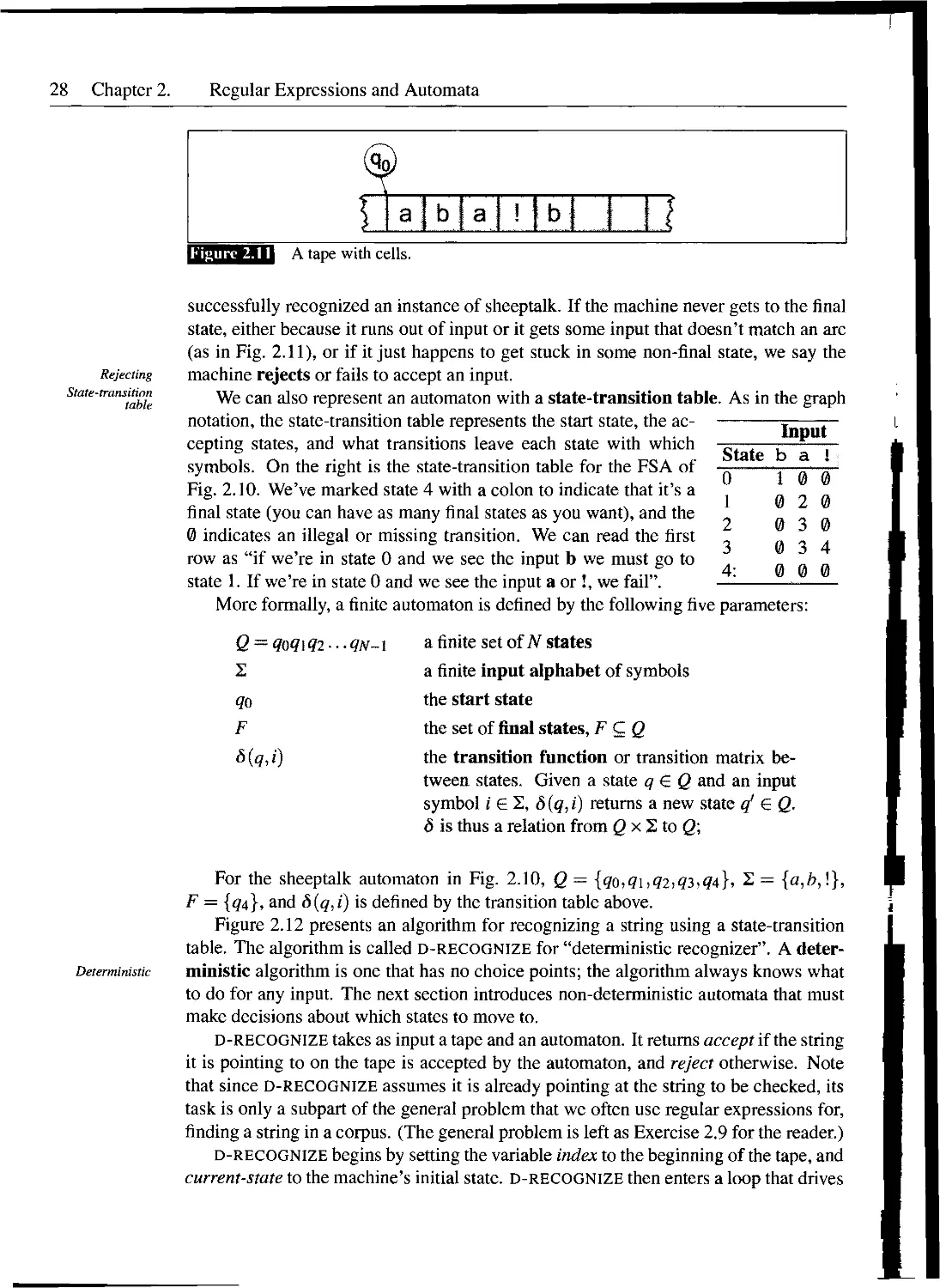

2.2.1 Use of an FSA to Recognize Sheeptalk.......................27

2.2.2 Formal Languages...........................................30

2.2.3 Another Example............................................31

2.2.4 Non-Deterministic FSAs.....................................32

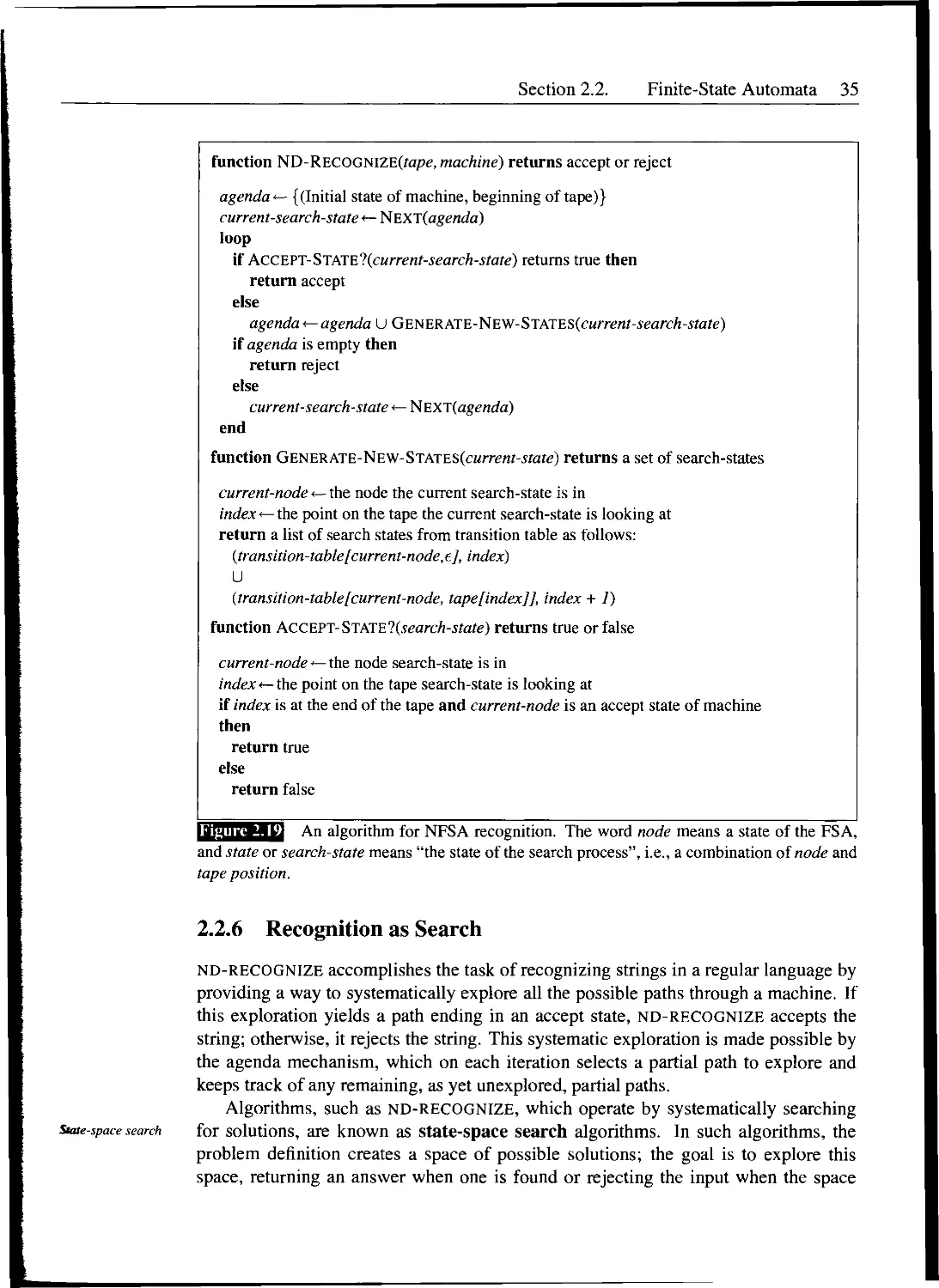

2.2.5 Use of an NFSA to Accept Strings...........................33

2.2.6 Recognition as Search......................................35

2.2.7 Relation of Deterministic and Non-Deterministic Automata 38

2.3 Regular Languages and FSAs..........................................38

2.4 Summary.............................................................41

ix

X

Contents

Bibliographical and Historical Notes.......................................42

Exercises..................................................................42

3 Words and Transducers 45

3.1 Survey of (Mostly) English Morphology................................47

3.1.1 Inflectional Morphology......................................48

3.1.2 Derivational Morphology......................................50

3.1.3 Cliticization................................................51

3.1.4 Non-Concatenative Morphology.................................51

3.1.5 Agreement....................................................52

3.2 Finite-State Morphological Parsing...................................52

3.3 Construction of a Finite-State Lexicon...............................54

3.4 Finite-State Transducers.............................................57

3.4.1 Sequential Transducers and Determinism.......................59

3.5 FSTs for Morphological Parsing.......................................60

3.6 Transducers and Orthographic Rules...................................62

3.7 The Combination of an FST Lexicon and Rules..........................65

3.8 Lexicon-Free FSTs: The Porter Stemmer................................68

3.9 Word and Sentence Tokenization.......................................68

3.9.1 Segmentation in Chinese.......................................70

3.10 Detection and Correction of Spelling Errors..........................72

3.11 Minimum Edit Distance................................................73

3.12 Human Morphological Processing.......................................77

3.13 Summary..............................................................79

Bibliographical and Historical Notes......................................80

Exercises..................................................................81

4 W-Grams 83

4.1 Word Counting in Corpora.............................................85

4.2 Simple (Unsmoothed) V-Grams..........................................86

4.3 Training and Test Sets...............................................91

4.3.1 N-Gram Sensitivity to the Training Corpus.....................92

4.3.2 Unknown Words: Open Versus Closed Vocabulary Tasks . . 95

4.4 Evaluating Af-Grams: Perplexity......................................95

4.5 Smoothing............................................................97

4.5.1 Laplace Smoothing............................................98

4.5.2 Good-Turing Discounting.....................................101

4.5.3 Some Advanced Issues in Good-Turing Estimation..............102

4.6 Interpolation.......................................................104

4.7 Backoff.............................................................105

4.7.1 Advanced: Details of Computing Katz Backoff a and P* . . 107

4.8 Practical Issues: Toolkits and Data Formats.........................108

4.9 Advanced Issues in Language Modeling................................109

4.9.1 Advanced Smoothing Methods: Kneser-Ney Smoothing . . 109

4.9.2 Class-Based W-Grams..........................................Ill

4.9.3 Language Model Adaptation and Web Use......................112

Contents xi

4.9.4 Using Longer-Distance Information: A Brief Summary . . . 112

4.10 Advanced: Information Theory Background...........................114

4.10.1 Cross-Entropy for Comparing Models.........................116

4.11 Advanced: The Entropy of English and Entropy Rate Constancy . . 118

4.12 Summary...........................................................119

Bibliographical and Elistorical Notes...................................120

Exercises...............................................................121

5 Part-of-Speech Tagging 123

5.1 (Mostly) English Word Classes.....................................124

5.2 Tagsets for English...............................................130

5.3 Part-of-Speech Tagging............................................133

5.4 Rule-Based Part-of-Speech Tagging.................................135

5.5 HMM Part-of-Speech Tagging........................................139

5.5.1 Computing the Most Likely Tag Sequence: An Example . . 142

5.5.2 Formalizing Hidden Markov Model Taggers.....................144

5.5.3 Using the Viterbi Algorithm for HMM Tagging................145

5.5.4 Extending the HMM Algorithm to Trigrams....................149

5.6 Transformation-Based Tagging......................................151

5.6.1 How TBL Rules Are Applied..................................152

5.6.2 How TBL Rules Are Learned...................................152

5.7 Evaluation and Error Analysis.....................................153

5.7.1 Error Analysis..............................................156

5.8 Advanced Issues in Part-of-Speech Tagging.........................157

5.8.1 Practical Issues: Tag Indeterminacy and Tokenization . ... 157

5.8.2 Unknown Words..............................................158

5.8.3 Part-of-Speech Tagging for Other Languages.................160

5.8.4 Tagger Combination.........................................163

5.9 Advanced: The Noisy Channel Model for Spelling....................163

5.9.1 Contextual Spelling Error Correction.......................167

5.10 Summary...........................................................168

Bibliographical and Historical Notes....................................169

Exercises...............................................................171

6 Hidden Markov and Maximum Entropy Models 173

6.1 Markov Chains.....................................................174

6.2 The Hidden Markov Model...........................................176

6.3 Likelihood Computation: The Forward Algorithm.....................179

6.4 Decoding: The Viterbi Algorithm...................................184

6.5 HMM Training: The Forward-Backward Algorithm......................186

6.6 Maximum Entropy Models: Background................................193

6.6.1 Linear Regression..........................................194

6.6.2 Logistic Regression........................................197

6.6.3 Logistic Regression: Classification........................199

6.6.4 Advanced: Learning in Logistic Regression................200

6.7 Maximum Entropy Modeling..........................................201

xii Contents

6.7.1 Why We Call It Maximum Entropy..............................205

6.8 Maximum Entropy Markov Models.....................................207

6.8.1 Decoding and Learning in MEMMs..............................210

6.9 Summary...........................................................211

Bibliographical and Historical Notes....................................212

Exercises...............................................................213

II Speech

7 Phonetics 215

7.1 Speech Sounds and Phonetic Transcription..........................216

7.2 Articulatory Phonetics............................................217

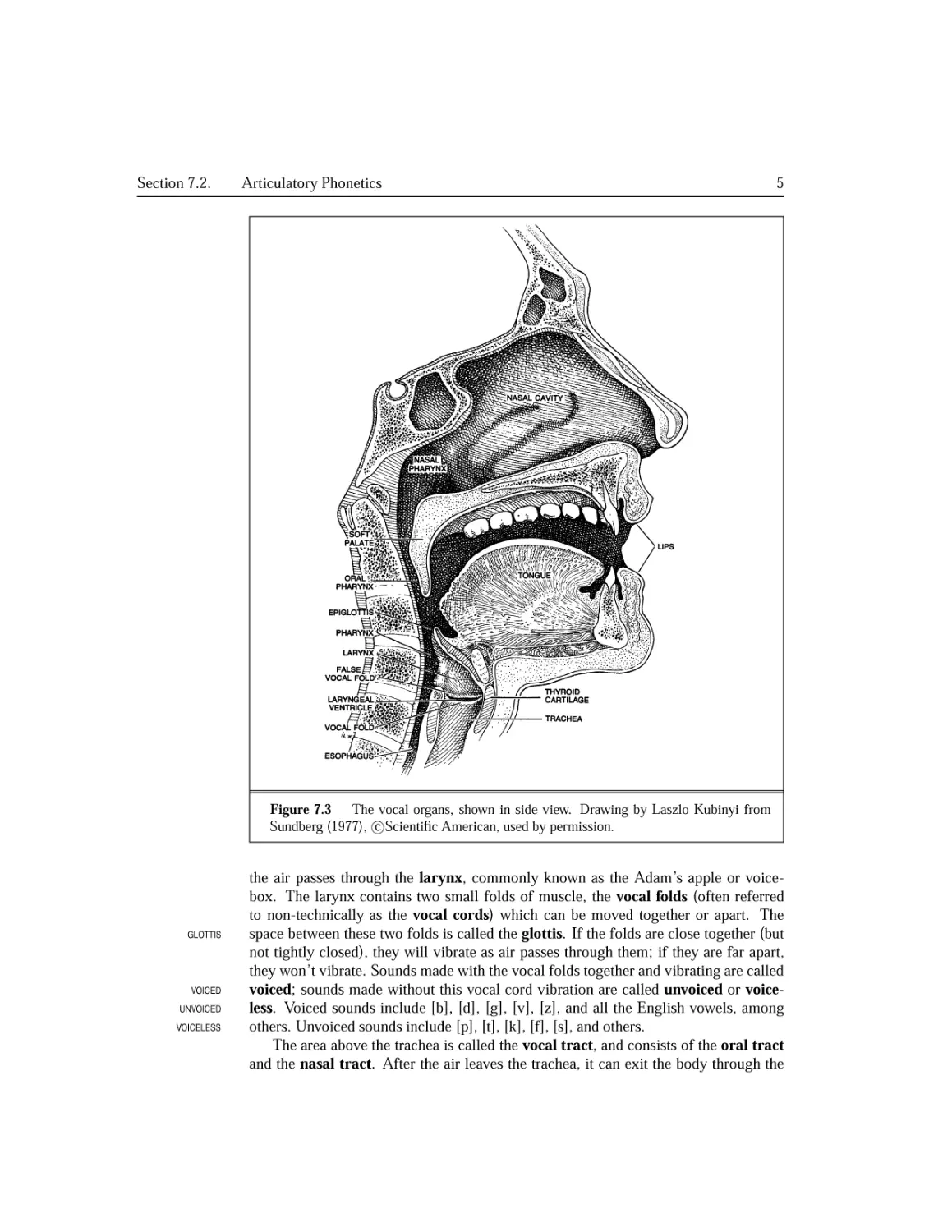

7.2.1 The Vocal Organs............................................218

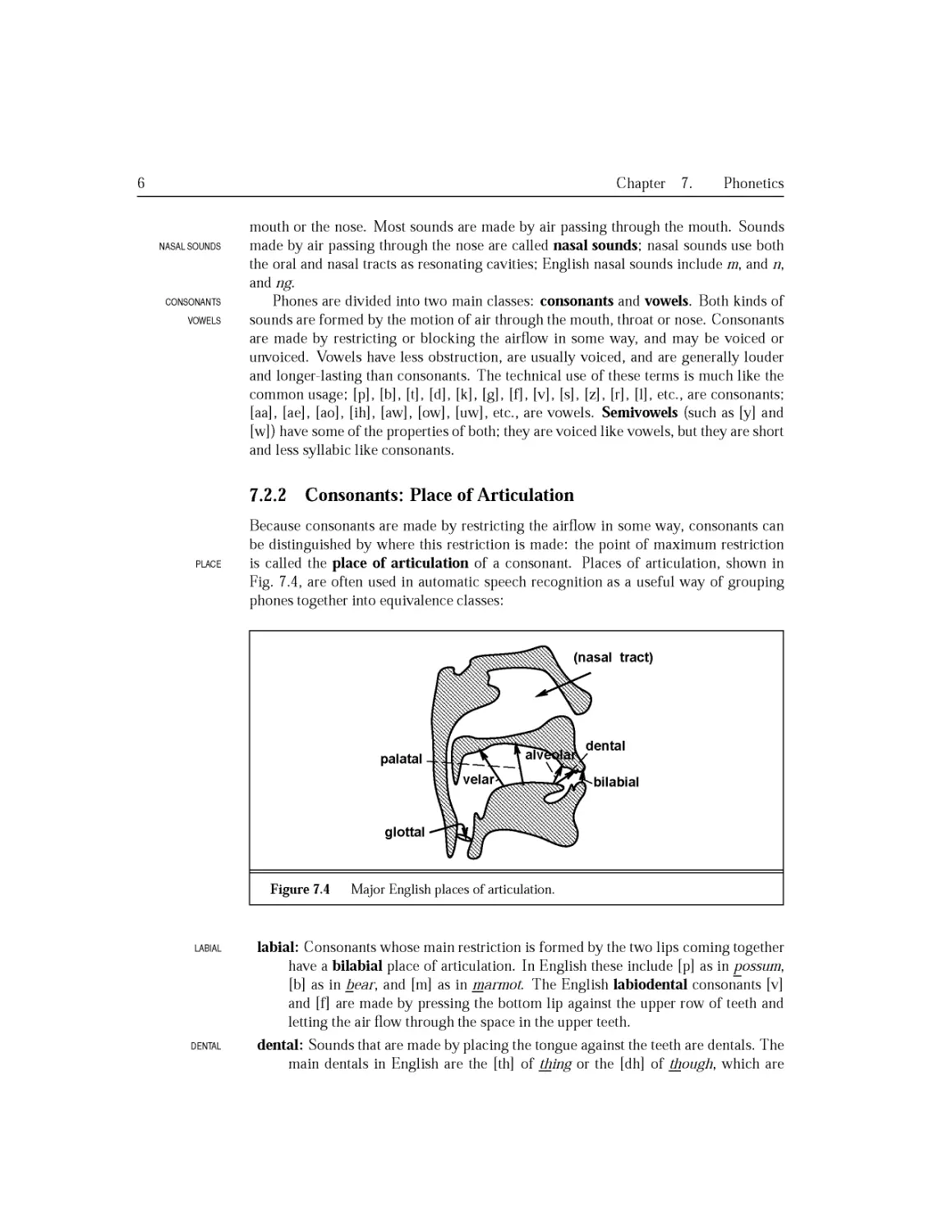

7.2.2 Consonants: Place of Articulation...........................220

7.2.3 Consonants: Manner of Articulation..........................221

7.2.4 Vowels.....................................................222

7.2.5 Syllables..................................................223

7.3 Phonological Categories and Pronunciation Variation...............225

7.3.1 Phonetic Features..........................................227

7.3.2 Predicting Phonetic Variation..............................228

7.3.3 Factors Influencing Phonetic Variation.....................229

7.4 Acoustic Phonetics and Signals....................................230

7.4.1 Waves......................................................230

7.4.2 Speech Sound Waves.........................................231

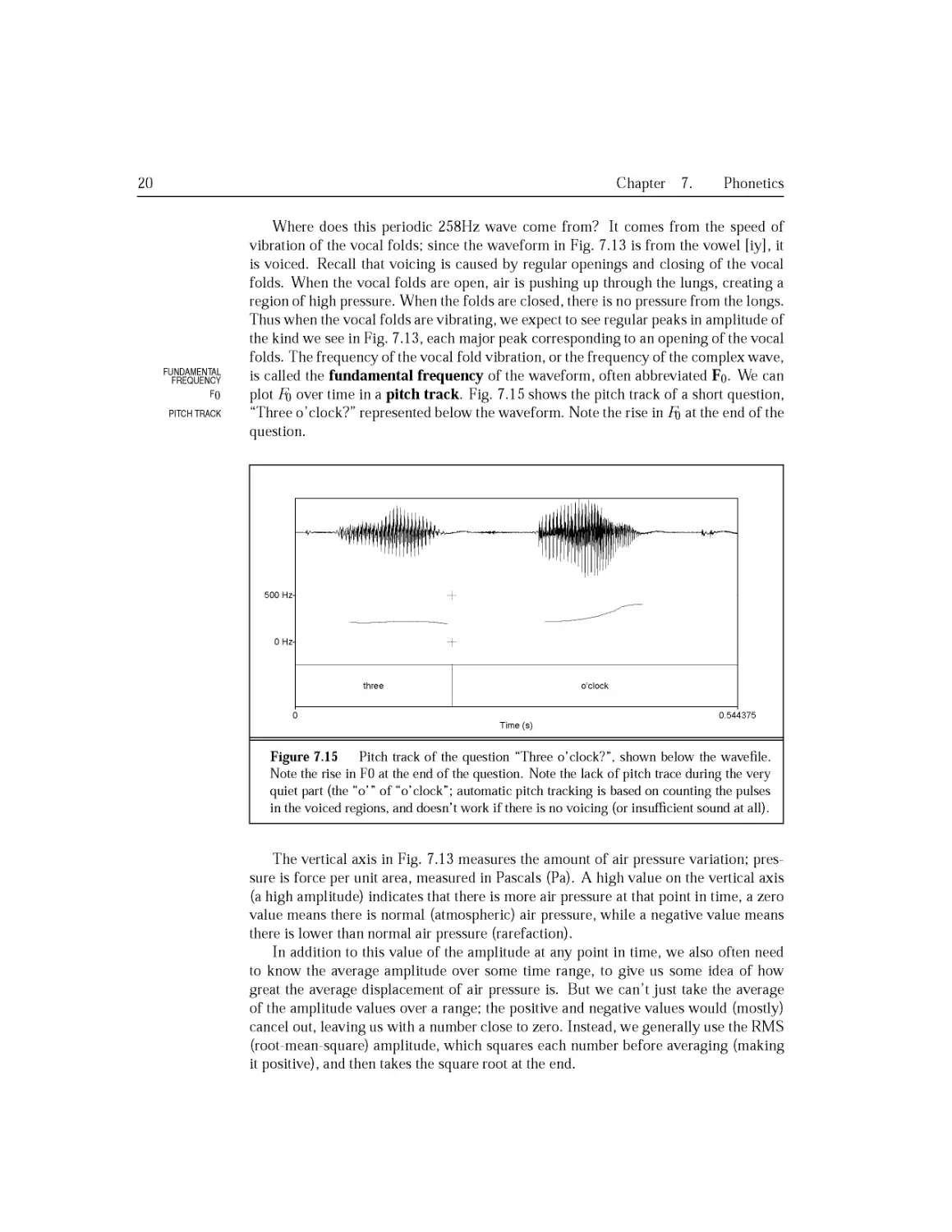

7.4.3 Frequency and Amplitude; Pitch and Loudness................233

7.4.4 Interpretation of Phones from a Waveform...................236

7.4.5 Spectra and the Frequency Domain...........................236

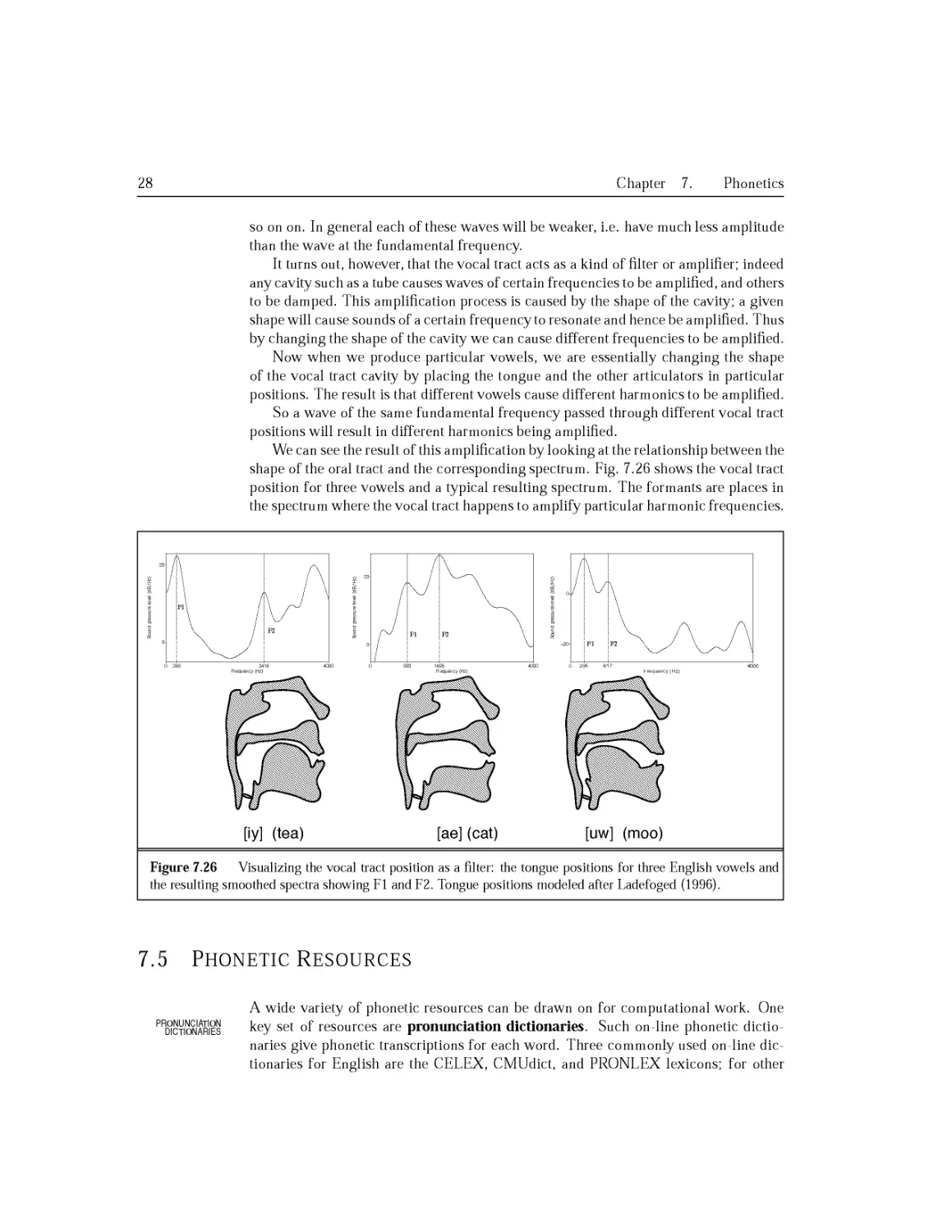

7.4.6 The Source-Filter Model....................................240

7.5 Phonetic Resources................................................241

7.6 Advanced: Articulatory and Gestural Phonology.....................244

7.7 Summary...........................................................245

Bibliographical and Historical Notes....................................246

Exercises...........................................•...................247

8 Speech Synthesis 249

8.1 Text Normalization................................................251

8.1.1 Sentence Tokenization......................................251

8.1.2 Non-Standard Words.........................................252

8.1.3 Homograph Disambiguation...................................256

8.2 Phonetic Analysis.................................................257

8.2.1 Dictionary Lookup..........................................257

8.2.2 Names......................................................258

8.2.3 Grapheme-to-Phoneme Conversion.............................259

8.3 Prosodic Analysis.................................................262

8.3.1 Prosodic Structure.........................................262

8.3.2 Prosodic Prominence........................................263

Contents xiii

8.3.3 Tune........................................................265

8.3.4 More Sophisticated Models: ToBI.............................266

8.3.5 Computing Duration from Prosodic Labels.....................268

8.3.6 Computing FO from Prosodic Labels...........................269

8.3.7 Final Result of Text Analysis: Internal Representation ... 271

8.4 Diphone Waveform Synthesis..........................................272

8.4.1 Steps for Building a Diphone Database.......................272

8.4.2 Diphone Concatenation and TD-PSOLA for Prosody .... 274

8.5 Unit Selection (Waveform) Synthesis.................................276

8.6 Evaluation..........................................................280

Bibliographical and Historical Notes......................................281

Exercises.................................................................284

9 Automatic Speech Recognition 285

9.1 Speech Recognition Architecture.....................................287

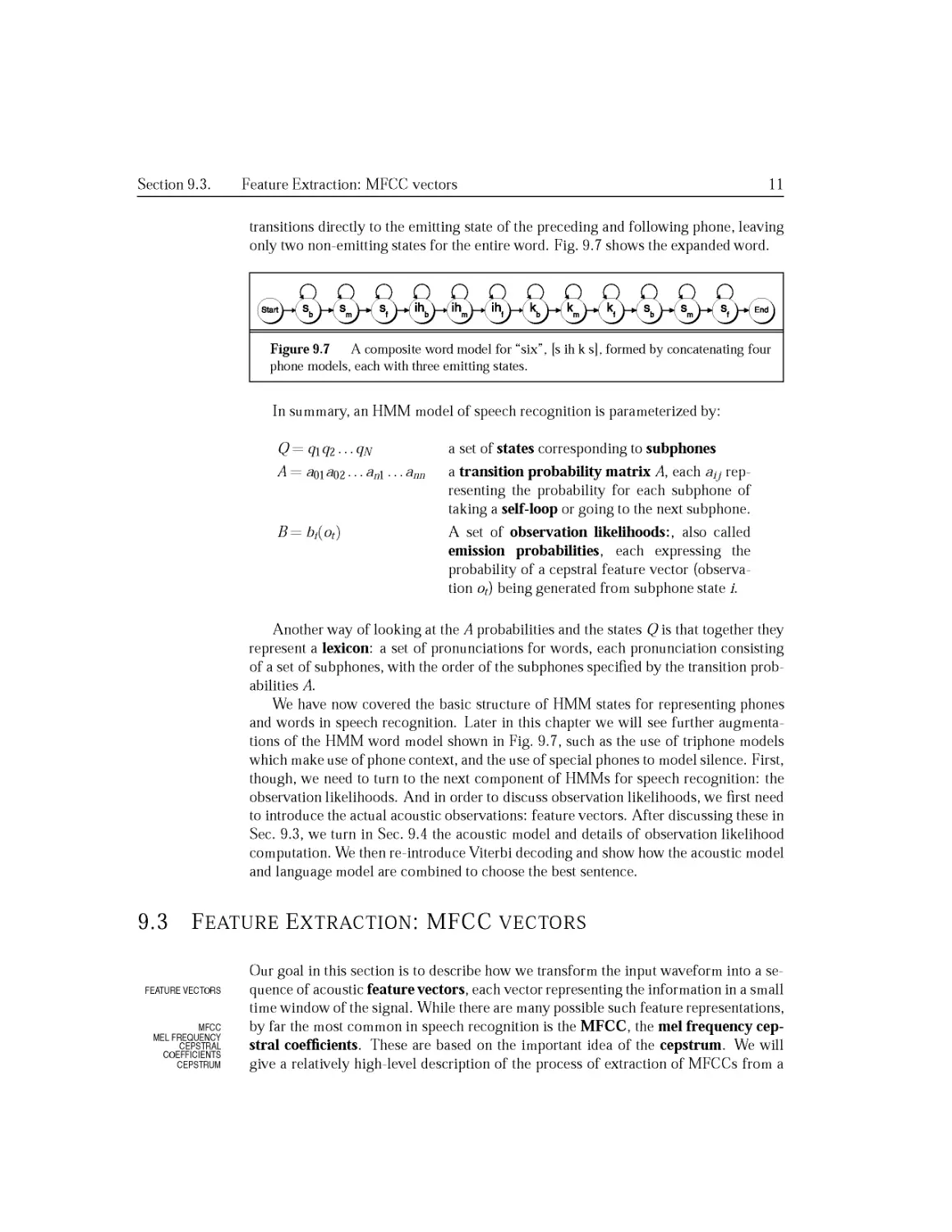

9.2 The Hidden Markov Model Applied to Speech........................291

9.3 Feature Extraction: MFCC Vectors....................................295

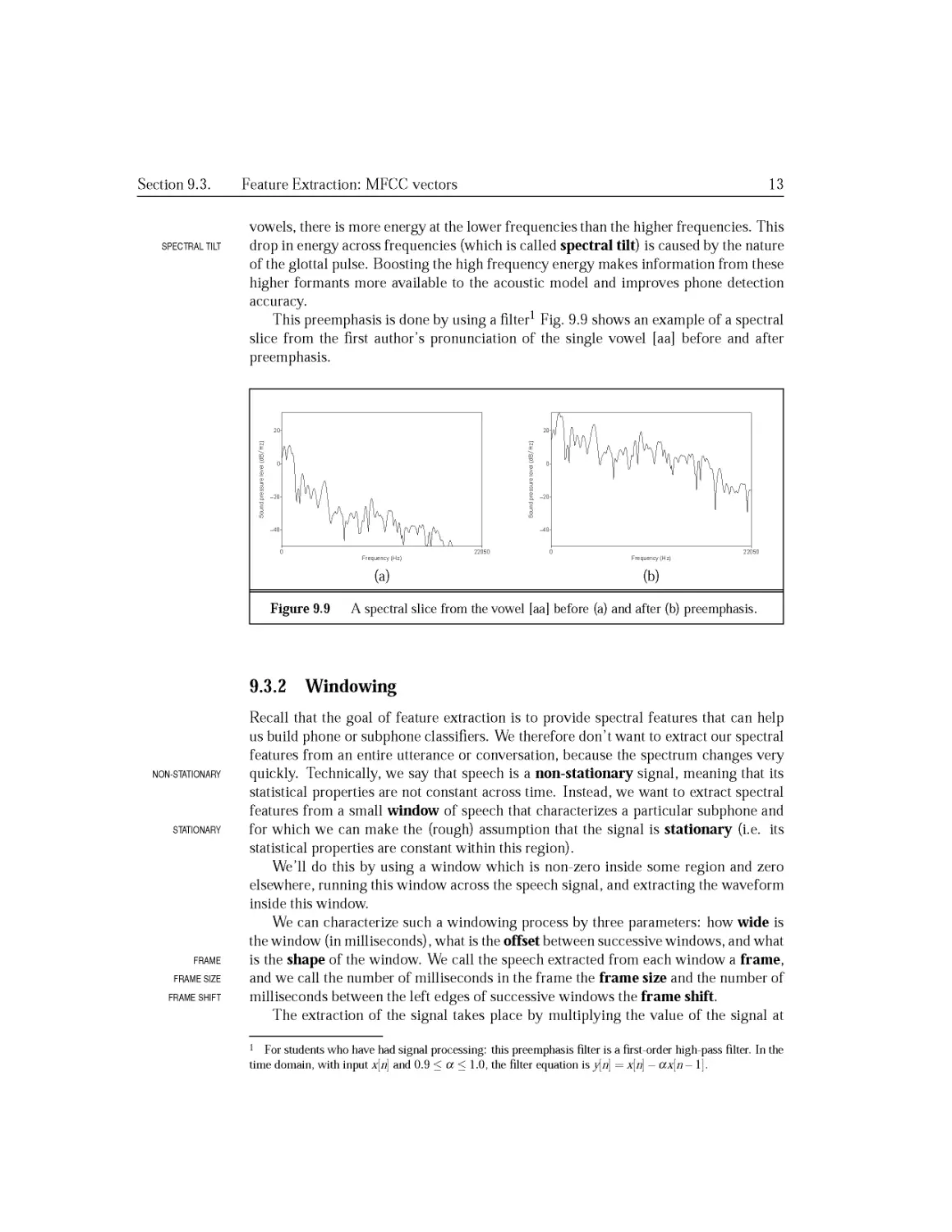

9.3.1 Preemphasis.................................................296

9.3.2 Windowing...................................................296

9.3.3 Discrete Fourier Transform..................................298

9.3.4 Mel Filter Bank and Log.....................................299

9.3.5 The Cepstrum: Inverse Discrete Fourier Transform . . . 300

9.3.6 Deltas and Energy...........................................302

9.3.7 Summary: MFCC...............................................302

9.4 Acoustic Likelihood Computation.....................................303

9.4.1 Vector Quantization.........................................303

9.4.2 Gaussian PDFs...............................................306

9.4.3 Probabilities, Log-Probabilities, and Distance Functions . . 313

9.5 The Lexicon and Language Model......................................314

9.6 Search and Decoding.................................................314

9.7 Embedded Training...................................................324

9.8 Evaluation: Word Error Rate.........................................328

9.9 Summary.............................................................330

Bibliographical and Historical Notes'.....................................331

Exercises.................................................................333

10 Speech Recognition: Advanced Topics 335

10.1 Multipass Decoding: V-Best Lists and Lattices.......................335

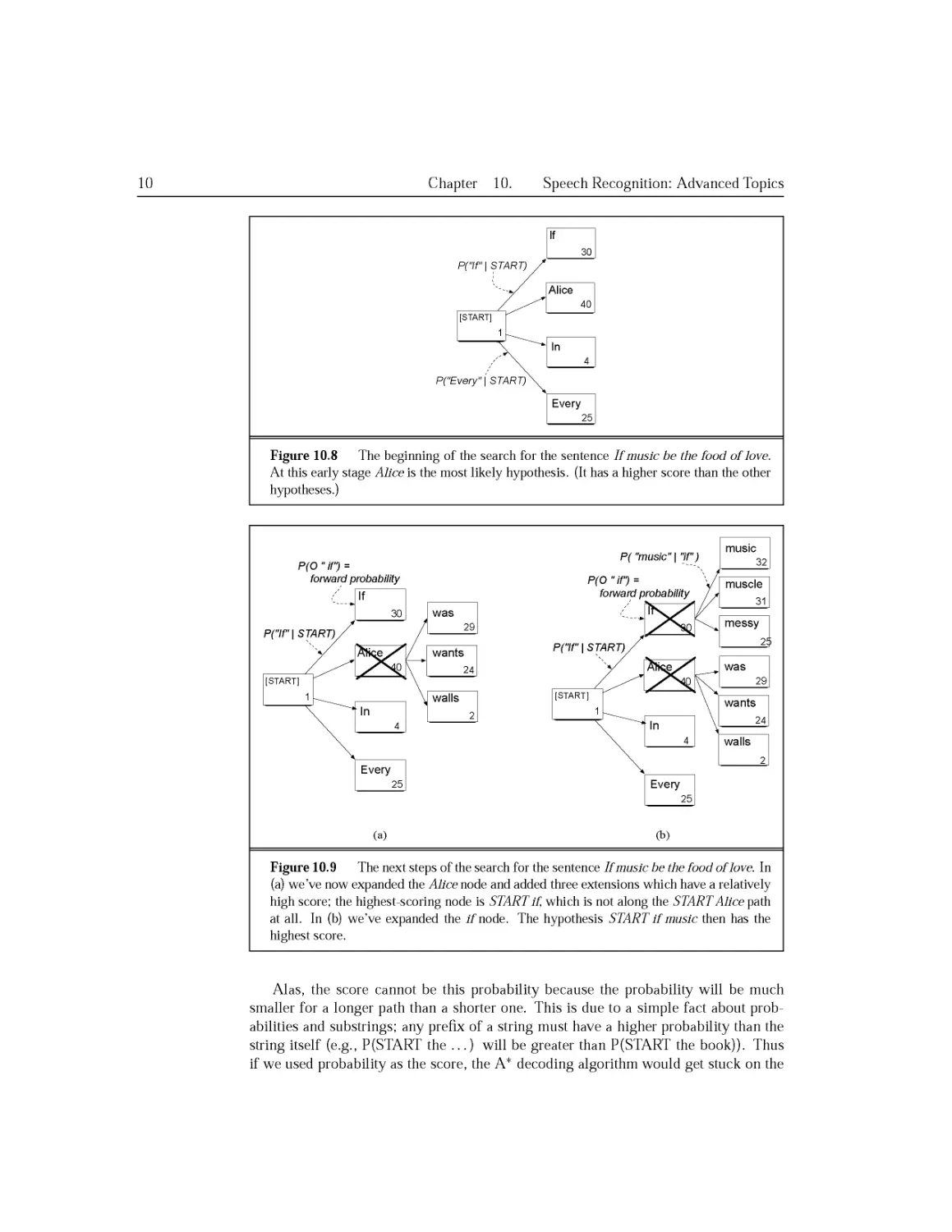

10.2 A* ("Stack") Decoding...............................................341

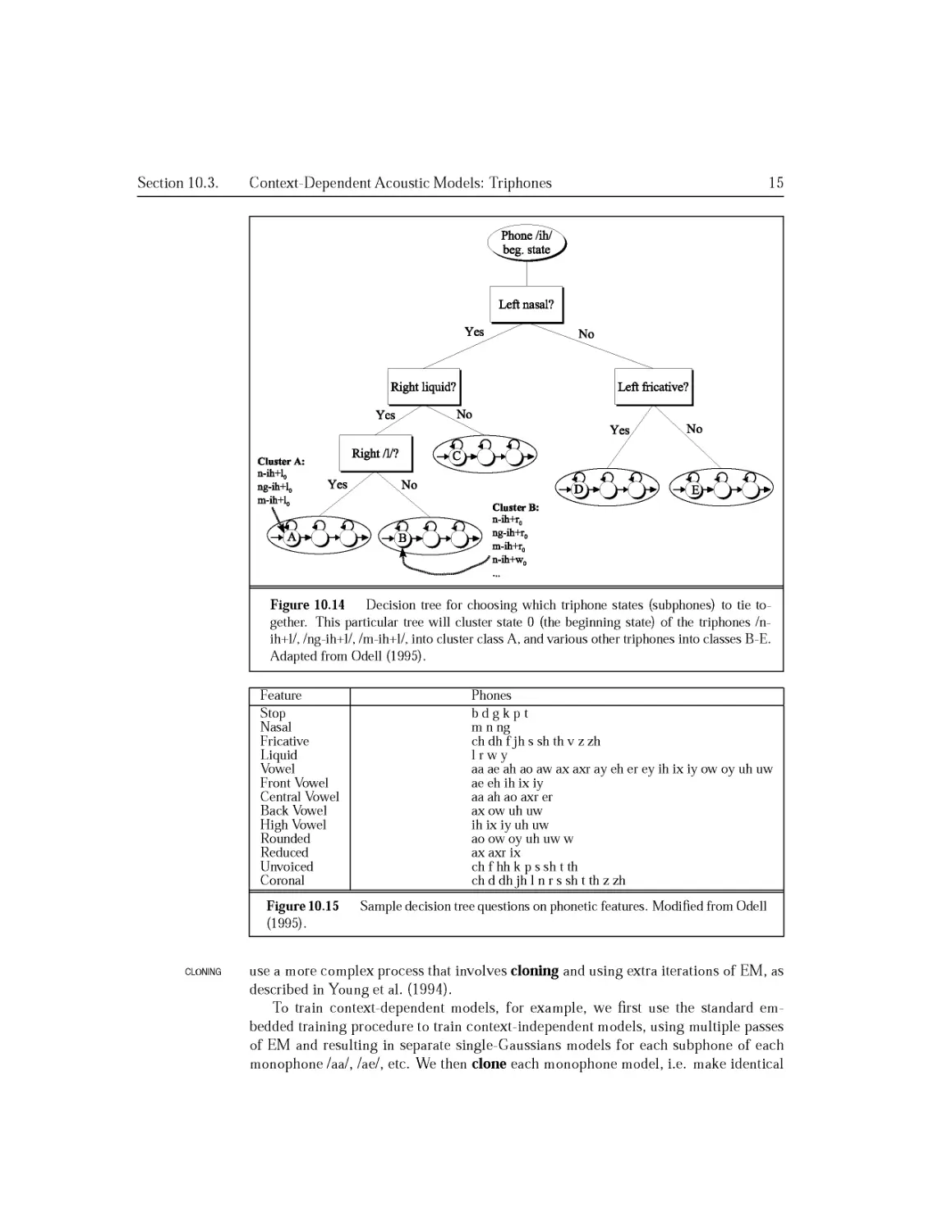

10.3 Context-Dependent Acoustic Models: Triphones......................345

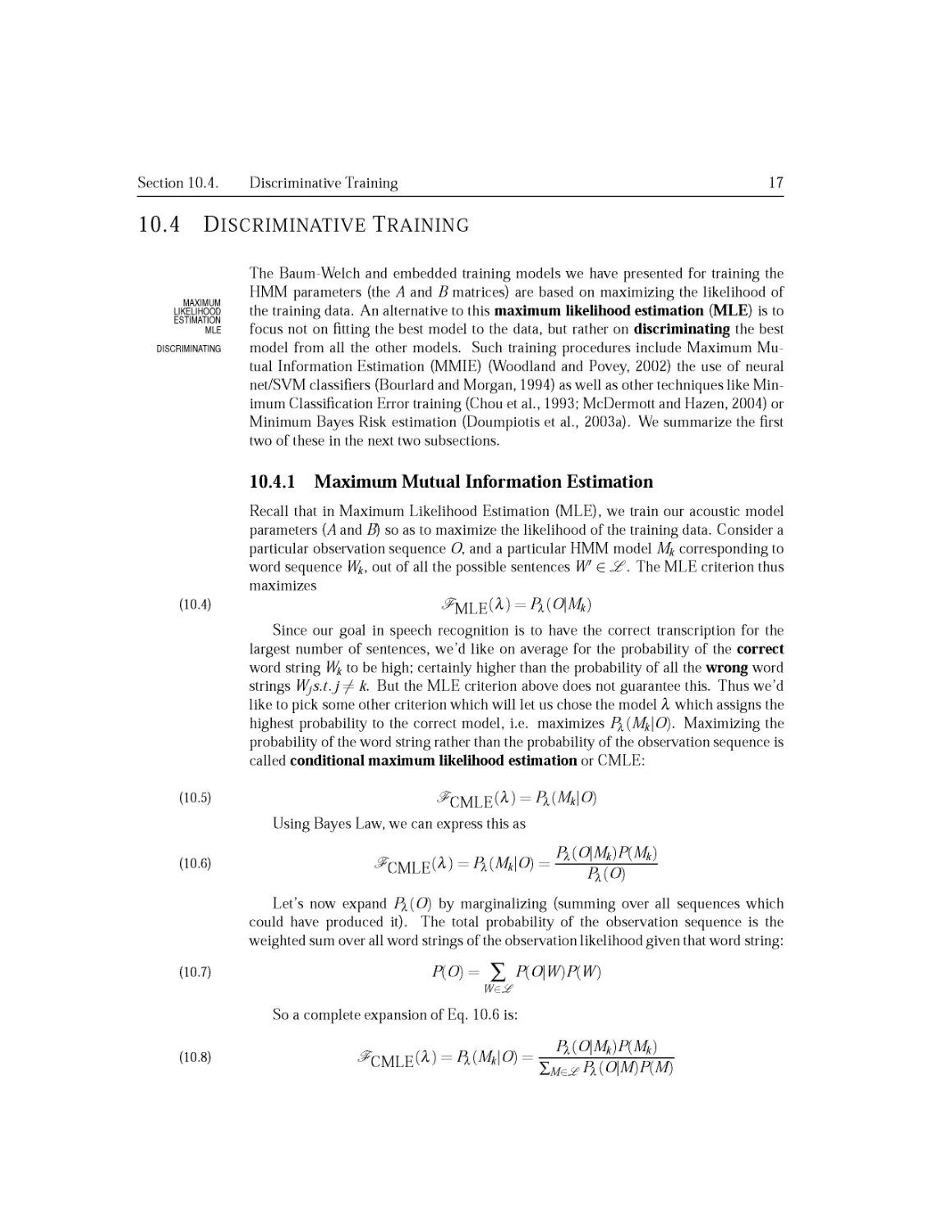

10.4 Discriminative Training.............................................349

10.4.1 Maximum Mutual Information Estimation.......................350

10.4.2 Acoustic Models Based on Posterior Classifiers..............351

10.5 Modeling Variation..................................................352

10.5.1 Environmental Variation and Noise........................352

10.5.2 Speaker Variation and Speaker Adaptation....................353

xiv Contents

10.5.3 Pronunciation Modeling: Variation Due to Genre..............354

10.6 Metadata: Boundaries, Punctuation, and Disfluencies...............356

10.7 Speech Recognition by Humans......................................358

10.8 Summary............................................................359

Bibliographical and Historical Notes...........................359

Exercises................................................................360

11 Computational Phonology 361

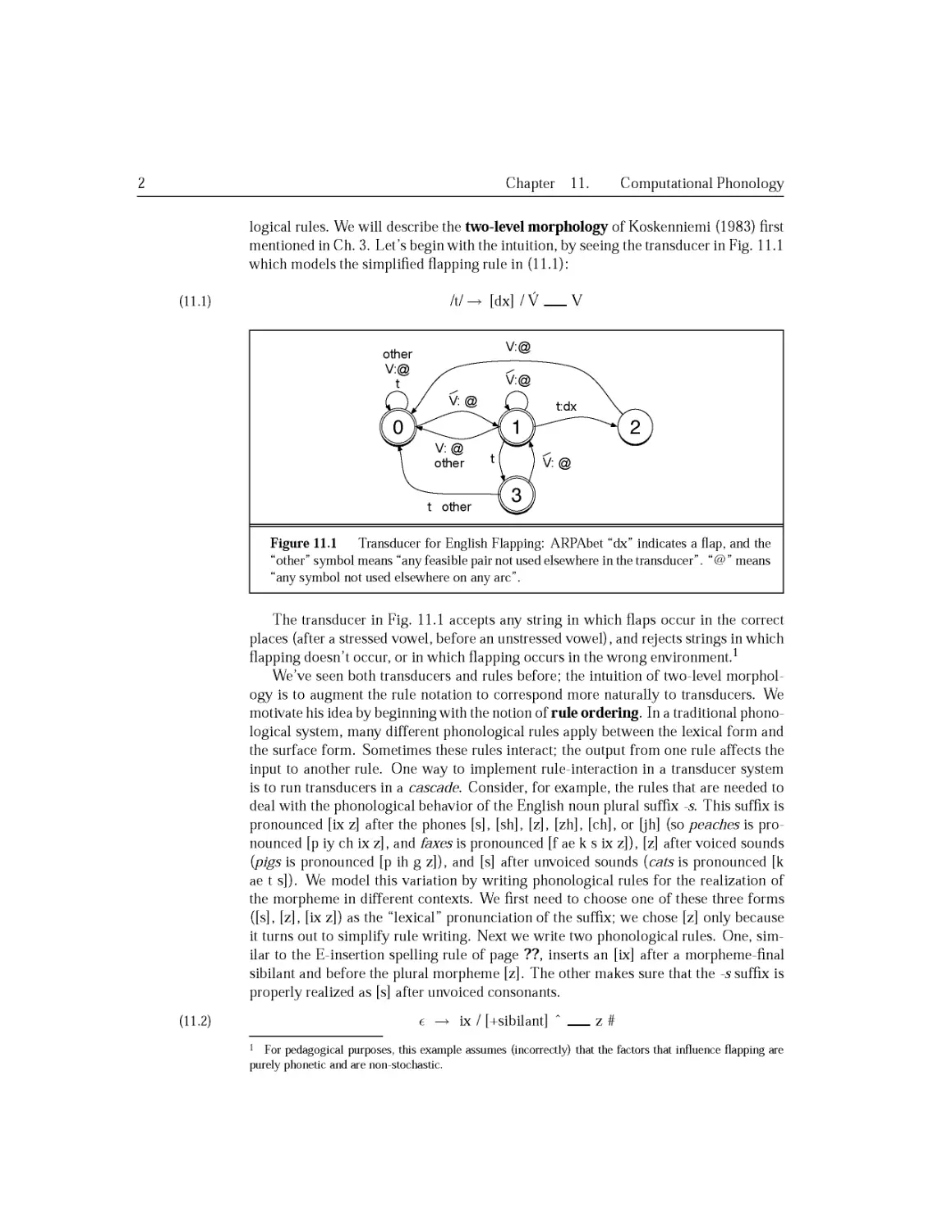

11.1 Finite-State Phonology.............................................361

11.2 Advanced Finite-State Phonology....................................365

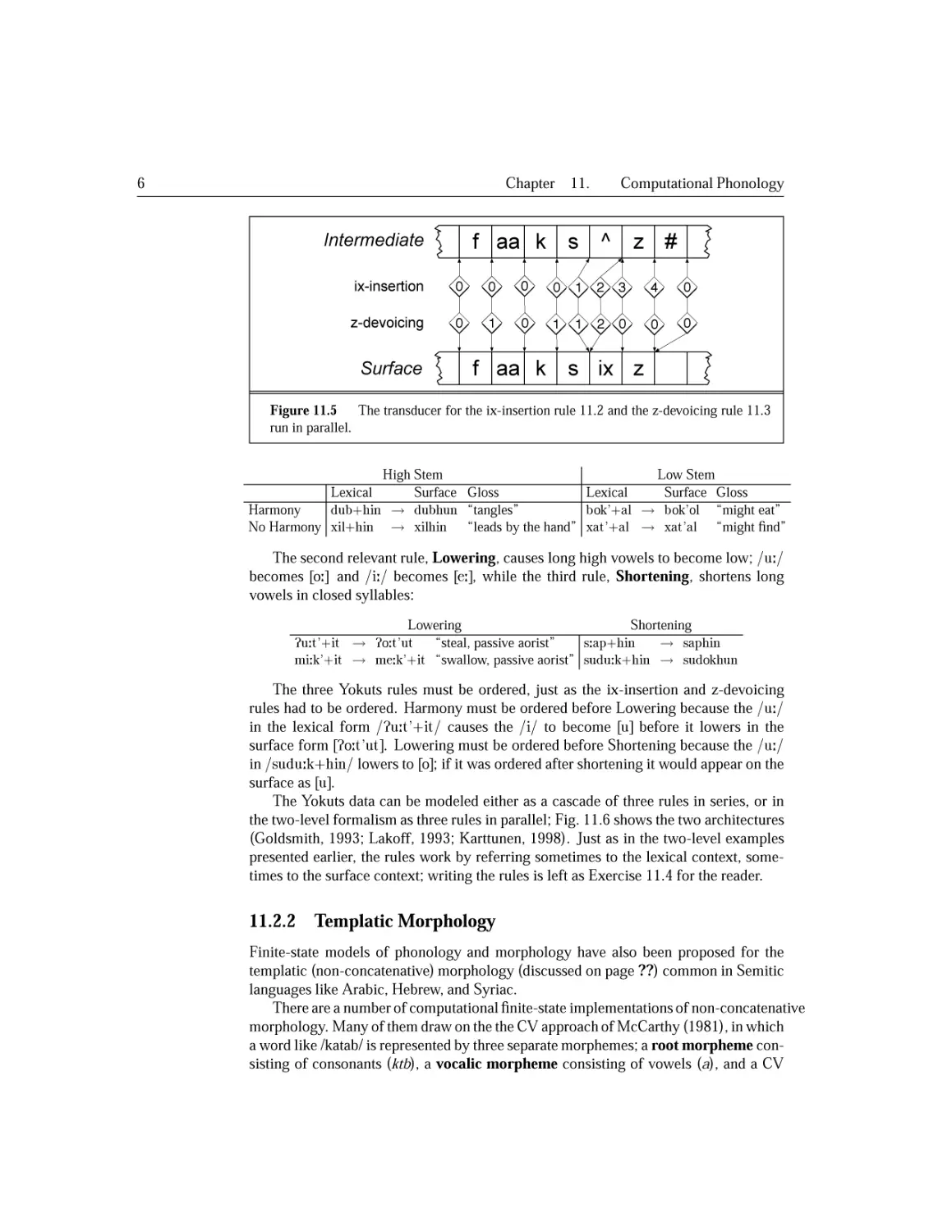

11.2.1 Harmony.....................................................365

11.2.2 Templatic Morphology........................................366

11.3 Computational Optimality Theory....................................367

11.3.1 Finite-State Transducer Models of Optimality Theory . . . . 369

11.3.2 Stochastic Models of Optimality Theory.....................370

11.4 Syllabification....................................................372

11.5 Learning Phonology and Morphology..................................375

11.5.1 Learning Phonological Rules................................375

11.5.2 Learning Morphology........................................377

11.5.3 Learning in Optimality Theory..............................380

11.6 Summary............................................................381

Bibliographical and Historical Notes.....................................381

Exercises................................................................383

III Syntax

12 Formal Grammars of English 385

12.1 Constituency.......................................................386

12.2 Context-Free Grammars..............................................387

12.2.1 Formal Definition of Context-Free Grammar..................391

12.3 Some Grammar Rules for English.....................................392

12.3.1 Sentence-Level Constructions...............................392

12.3.2 Clauses and Sentences......................................394

12.3.3 The Noun Phrase............................................394

12.3.4 Agreement..................................................398

12.3.5 The Verb Phrase and Subcategorization......................400

12.3.6 Auxiliaries................................................402

12.3.7 Coordination...............................................403

12.4 Treebanks..........................................................404

12.4.1 Example: The Penn Treebank Project.........................404

12.4.2 Treebanks as Grammars......................................406

12.4.3 Treebank Searching.........................................408

12.4.4 Heads and Head Finding.....................................409

12.5 Grammar Equivalence and Normal Form..............................412

12.6 Finite-State and Context-Free Grammars.............................413

12.7 Dependency Grammars................................................414

Contents

xv

12.7.1 The Relationship Between Dependencies and Heads .... 415

12.7.2 Categorial Grammar.........................................417

12.8 Spoken Language Syntax..............................................417

12.8.1 Disfluencies and Repair....................................418

12.8.2 Treebanks for Spoken Language..............................419

12.9 Grammars and Human Processing.......................................420

12.10 Summary............................................................421

Bibliographical and Historical Notes......................................422

Exercises.................................................................424

13 Syntactic Parsing 427

13.1 Parsing as Search...................................................428

13.1.1 Top-Down Parsing...........................................429

13.1.2 Bottom-Up Parsing..........................................430

13.1.3 Comparing Top-Down and Bottom-Up Parsing...................431

13.2 Ambiguity...........................................................432

13.3 Search in the Face of Ambiguity.....................................434

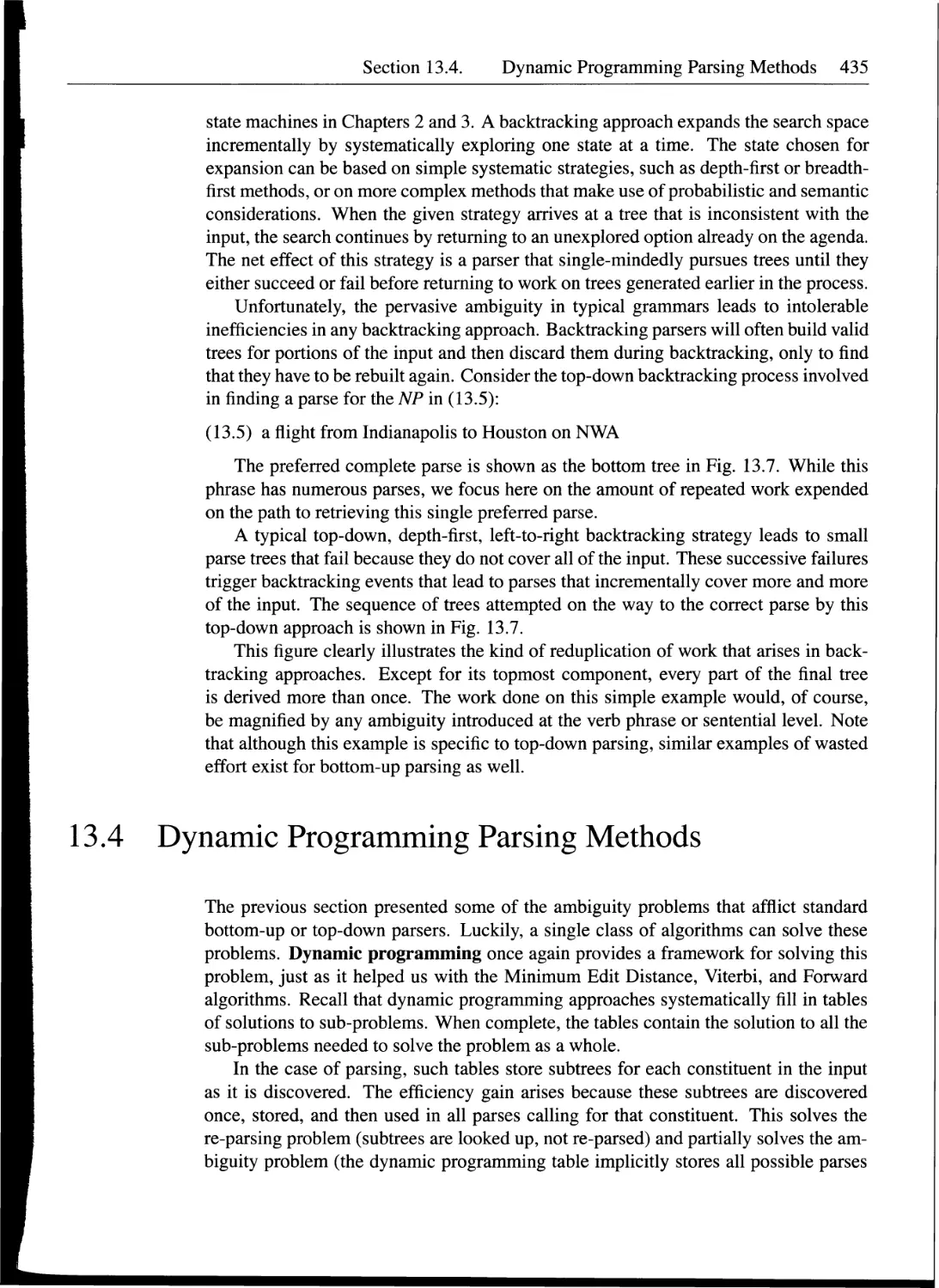

13.4 Dynamic Programming Parsing Methods.................................435

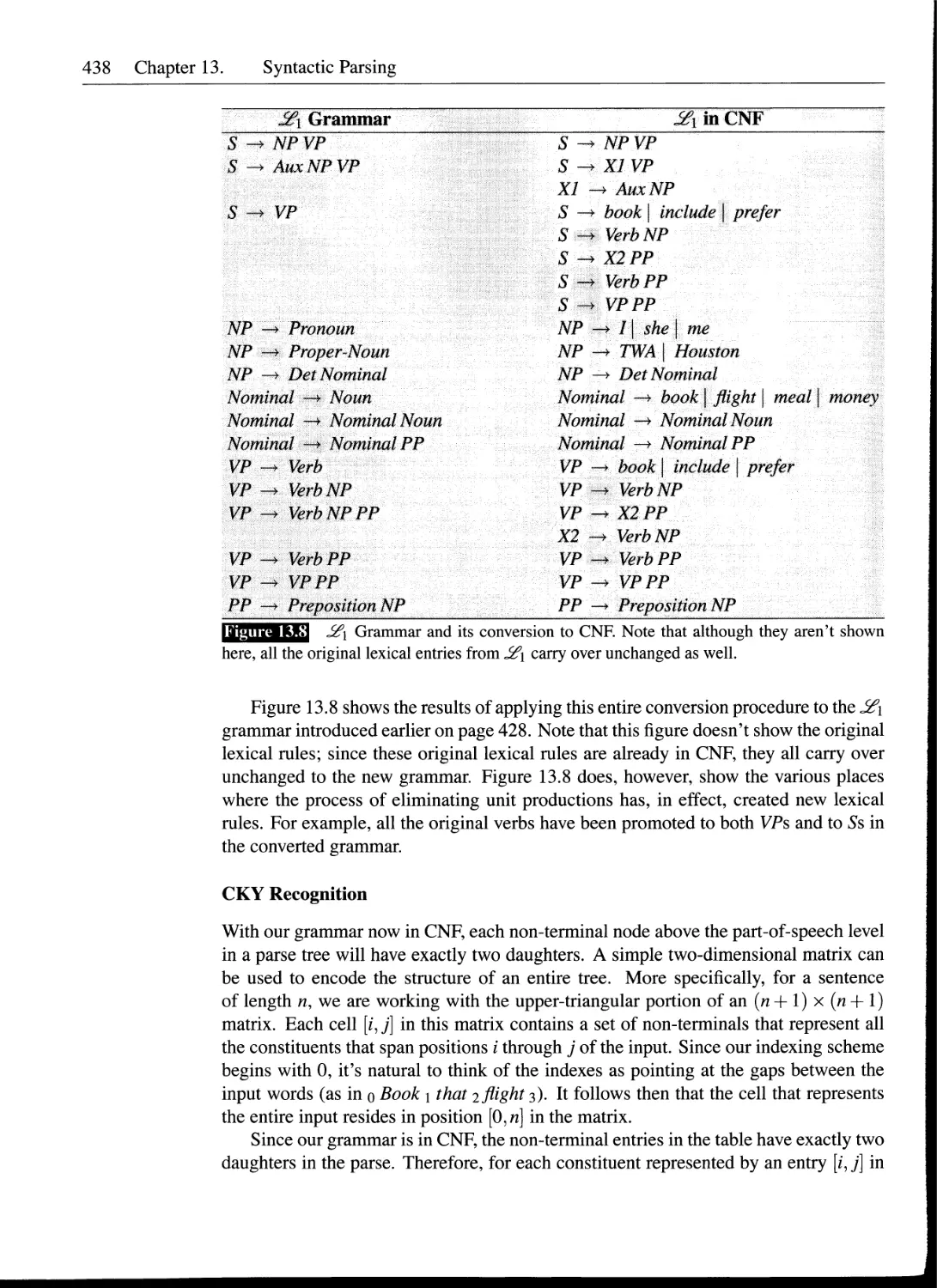

13.4.1 CKY Parsing................................................436

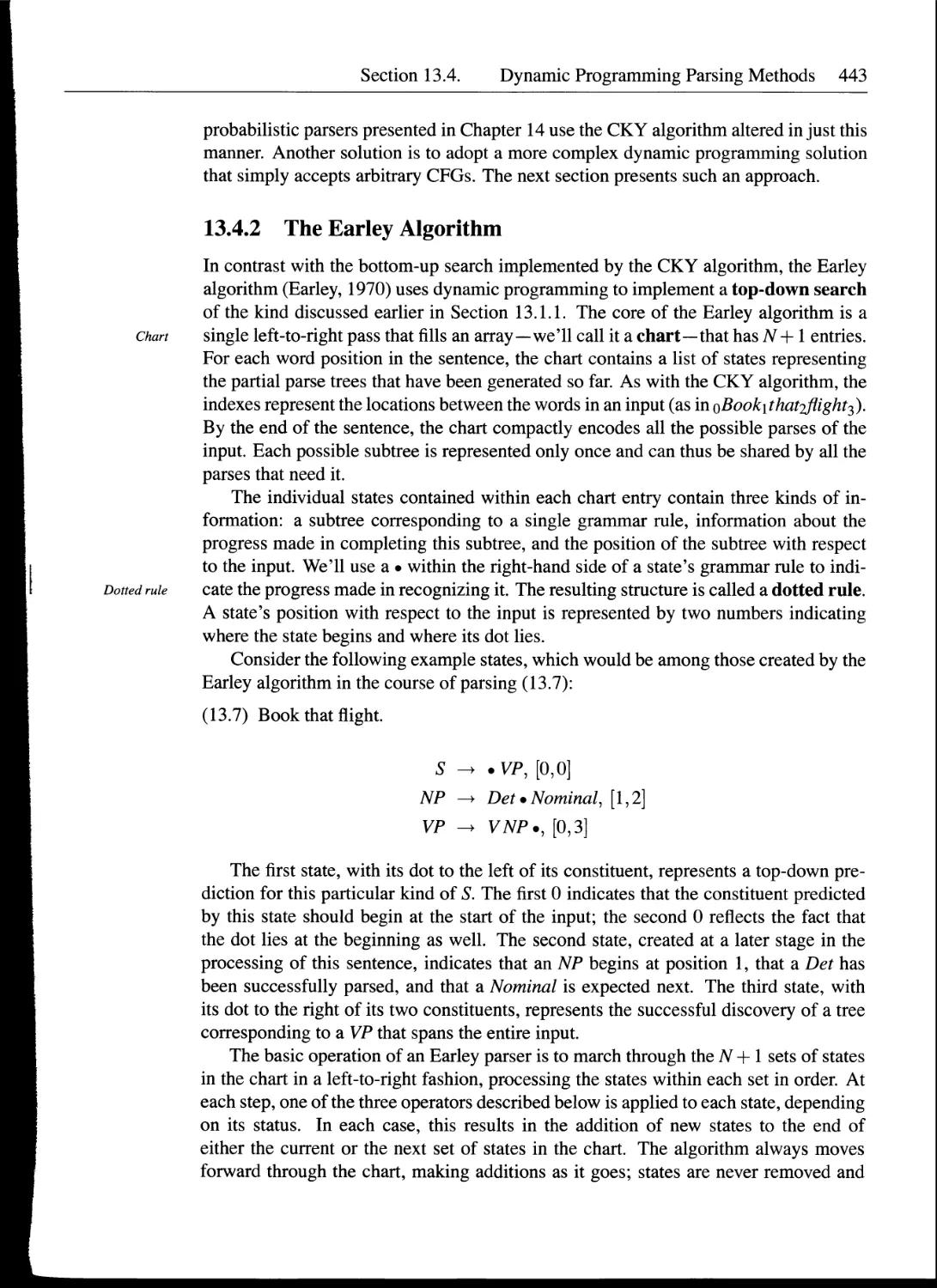

13.4.2 The Earley Algorithm.......................................443

13.4.3 Chart Parsing..............................................448

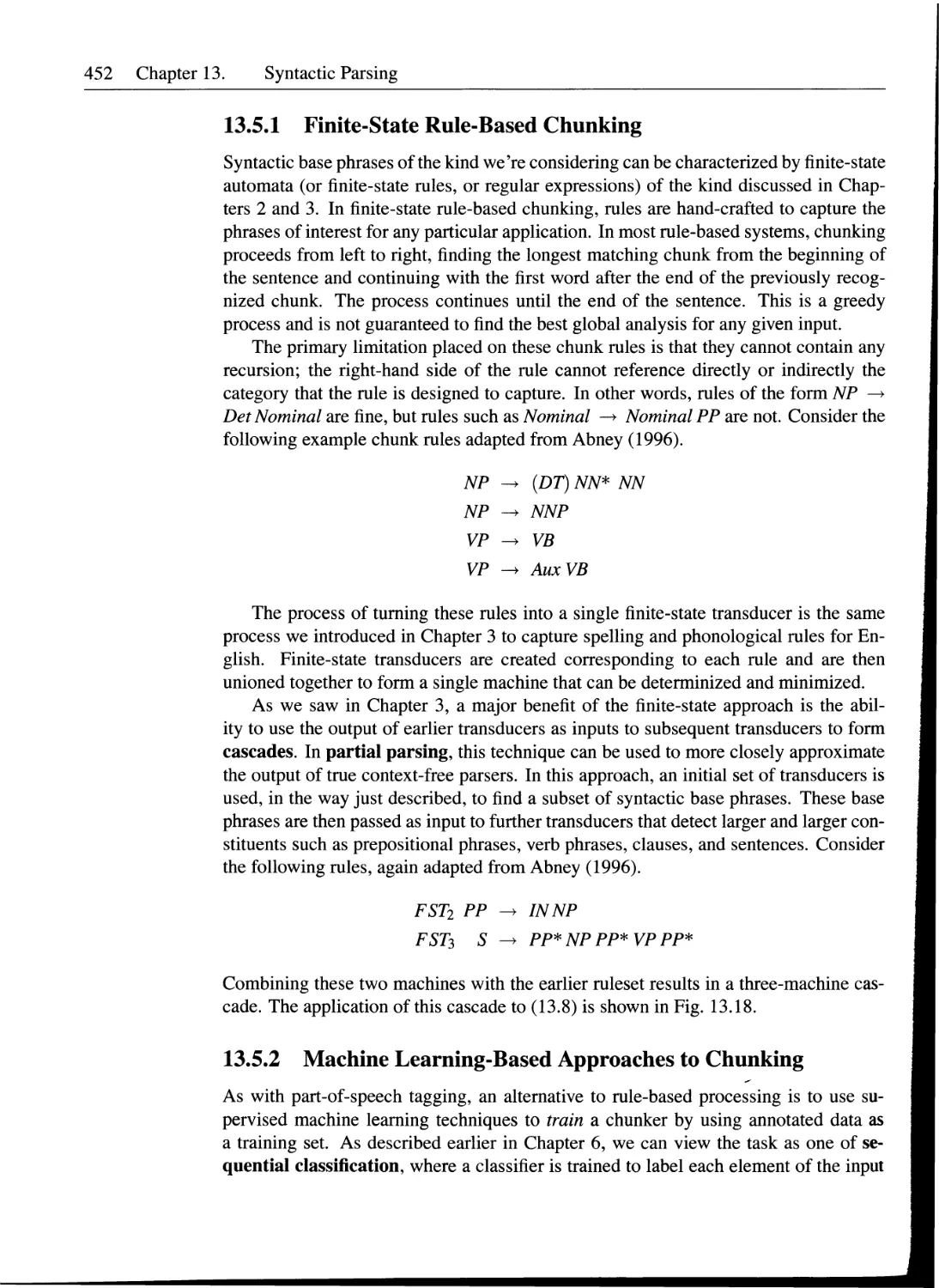

13.5 Partial Parsing.....................................................450

13.5.1 Finite-State Rule-Based Chunking............................452

13.5.2 Machine Learning-Based Approaches to Chunking . . .452

13.5.3 Chunking-System Evaluations.................................455

13.6 Summary.............................................................456

Bibliographical and Historical Notes......................................457

Exercises.................................................................458

14 Statistical Parsing 459

14.1 Probabilistic Context-Free Grammars.................................460

14.1.1 PCFGs for Disambiguation....................................461

14.1.2 PCFGs for Language Modeling.................................463

14.2 Probabilistic CKY Parsing of PCFGs..................................464

14.3 Ways to Learn PCFG Rule Probabilities...............................467

14.4 Problems with PCFGs.................................................468

14.4.1 Independence Assumptions Miss Structural Dependencies Be-

tween Rules...................................................468

14.4.2 Lack of Sensitivity to Lexical Dependencies.................469

14.5 Improving PCFGs by Splitting Non-Terminals..........................471

14.6 Probabilistic Lexicalized CFGs......................................473

14.6.1 The Collins Parser..........................................475

14.6.2 Advanced: Further Details of the Collins Parser.............477

14.7 Evaluating Parsers..................................................479

14.8 Advanced: Discriminative Reranking..................................481

14.9 Advanced: Parser-Based Language Modeling............................482

xvi Contents

14.10 Human Parsing......................................................483

14.11 Summary.............................................................485

Bibliographical and Historical Notes......................................486

Exercises.................................................................488

15 Features and Unification 489

15.1 Feature Structures..................................................490

15.2 Unification of Feature Structures...................................492

15.3 Feature Structures in the Grammar...................................497

15.3.1 Agreement...................................................498

15.3.2 Head Features...............................................500

15.3.3 Subcategorization..........................................501

15.3.4 Long-Distance Dependencies.................................506

15.4 Implementation of Unification.......................................507

15.4.1 Unification Data Structures.................................507

15.4.2 The Unification Algorithm..................................509

15.5 Parsing with Unification Constraints................................513

15.5.1 Integration of Unification into an Earley Parser............514

15.5.2 Unification-Based Parsing...................................519

15.6 Types and Inheritance...............................................521

15.6.1 Advanced: Extensions to Typing..............................524

15.6.2 Other Extensions to Unification.............................525

15.7 Summary.............................................................525

Bibliographical and Historical Notes......................................526

Exercises.................................................................527

16 Language and Complexity 529

16.1 The Chomsky Hierarchy...............................................530

16.2 Ways to Tell if a Language Isn't Regular............................532

16.2.1 The Pumping Lemma...........................................533

16.2.2 Proofs that Various Natural Languages Are Not Regular . . 535

16.3 Is Natural Language Context Free?...................................537

16.4 Complexity and Human Processing.....................................539

16.5 Summary.............................................................542

Bibliographical and Historical Notes......................................543

Exercises.................................................................544

IV Semantics and Pragmatics

17 The Representation of Meaning 545

17.1 Computational Desiderata for Representations........................547

17.1.1 Verifiability...............................................547

17.1.2 Unambiguous Representations.................................548

17.1.3 Canonical Form.............................................549

17.1.4 Inference and Variables....................................550

17.1.5 Expressiveness..............................................551

Contents xvii

17.2 Model-Theoretic Semantics..........................................552

17.3 First-Order Logic..................................................555

17.3.1 Basic Elements of First-Order Logic........................555

17.3.2 Variables and Quantifiers..................................557

17.3.3 Lambda Notation............................................559

17.3.4 The Semantics of First-Order Logic.........................560

17.3.5 Inference..................................................561

17.4 Event and State Representations....................................563

17.4.1 Representing Time..........................................566

17.4.2 Aspect.....................................................569

17.5 Description Logics.................................................572

17.6 Embodied and Situated Approaches to Meaning........................578

17.7 Summary............................................................580

Bibliographical and Historical Notes.....................................580

Exercises................................................................582

18 Computational Semantics 583

18.1 Syntax-Driven Semantic Analysis....................................583

18.2 Semantic Augmentations to Syntactic Rules..........................585

18.3 Quantifier Scope Ambiguity and Underspecification..................592

18.3.1 Store and Retrieve Approaches..............................592

18.3.2 Constraint-Based Approaches................................595

18.4 Unification-Based Approaches to Semantic Analysis..................598

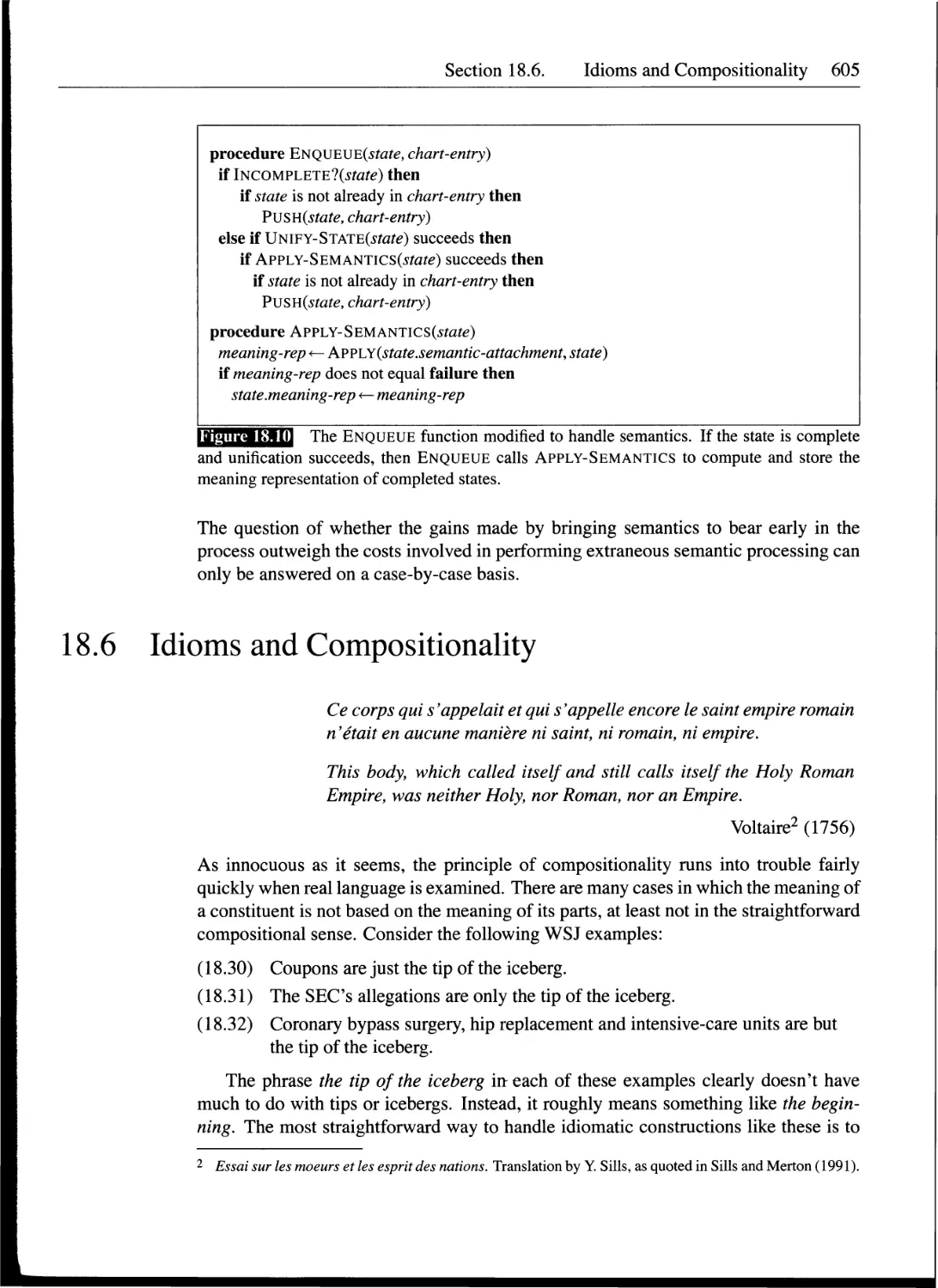

18.5 Integration of Semantics into the Earley Parser....................604

18.6 Idioms and Compositionality........................................605

18.7 Summary............................................................607

Bibliographical and Historical Notes.....................................607

Exercises................................................................609

19 Lexical Semantics 611

19.1 Word Senses........................................................612

19.2 Relations Between Senses...........................................615

19.2.1 Synonymy and Antonymy.....................................615

19.2.2 Hyponymy.................•.................................616

19.2.3 Semantic Fields...........................................617

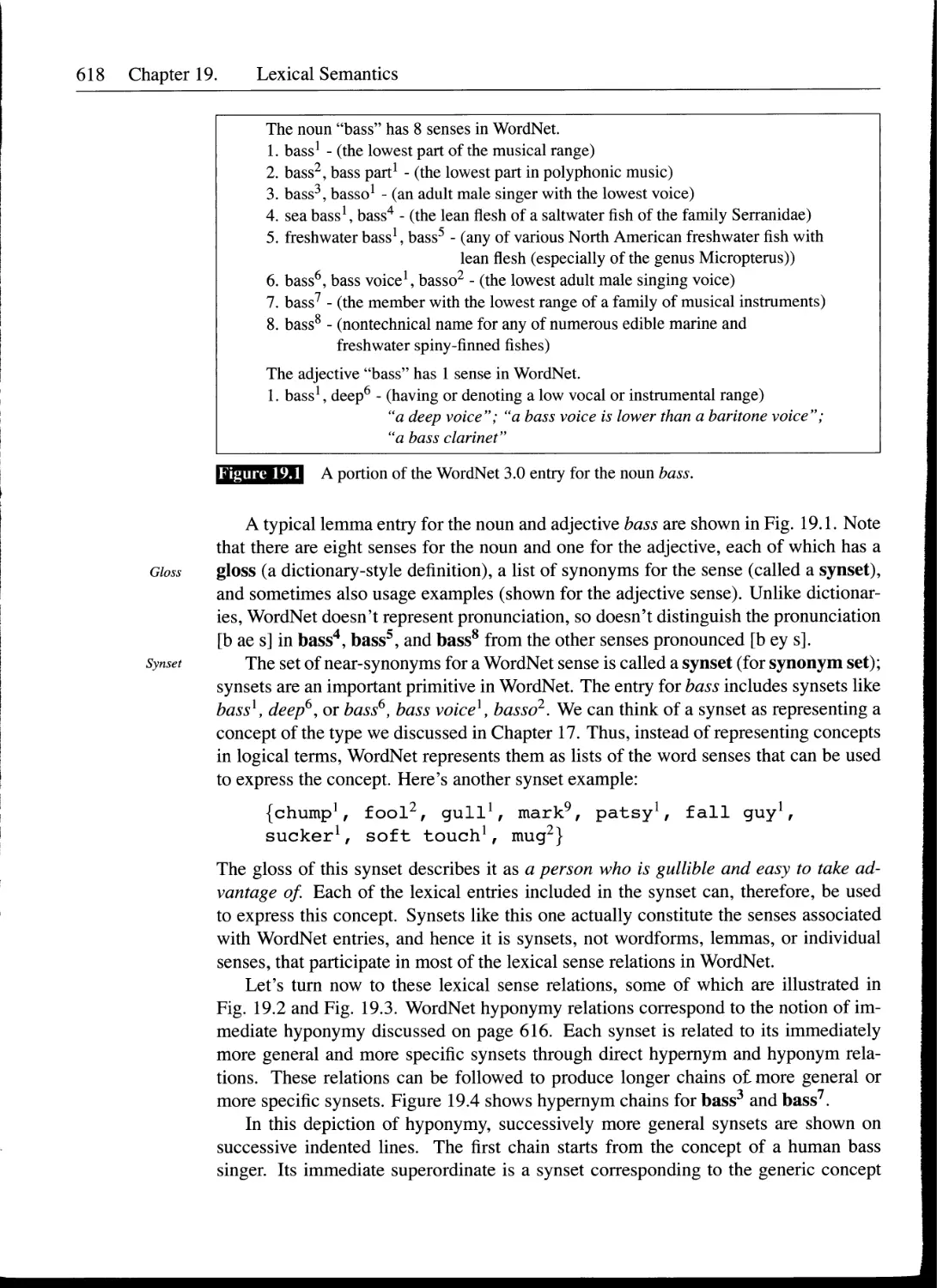

19.3 WordNet: A Database of Lexical Relations..........................617

19.4 Event Participants................................................619

19.4.1 Thematic Roles............................................620

19.4.2 Diathesis Alternations....................................622

19.4.3 Problems with Thematic Roles..............................623

19.4.4 The Proposition Bank......................................624

19.4.5 FrameNet..................................................625

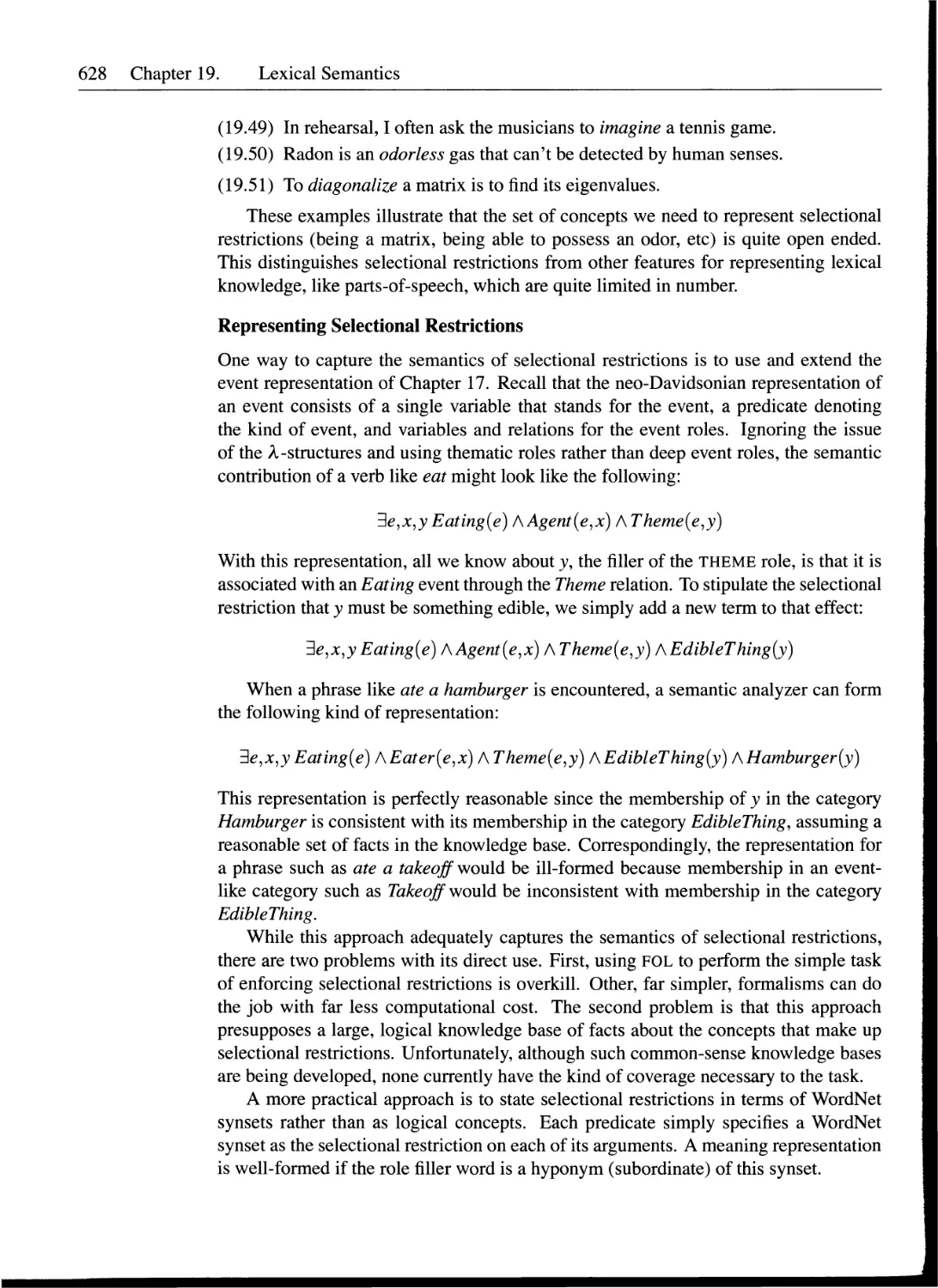

19.4.6 Selectional Restrictions..................................627

19.5 Primitive Decomposition...........................................629

19.6 Advanced: Metaphor................................................631

19.7 Summary...........................................................632

xviii Contents

Bibliographical and Historical Notes......................................633

Exercises.................................................................634

20 Computational Lexical Semantics 637

20.1 Word Sense Disambiguation: Overview.................................638

20.2 Supervised Word Sense Disambiguation................................639

20.2.1 Feature Extraction for Supervised Learning..................640

20.2.2 Naive Bayes and Decision List Classifiers...................641

20.3 WSD Evaluation, Baselines, and Ceilings.............................644

20.4 WSD: Dictionary and Thesaurus Methods...............................646

20.4.1 The Lesk Algorithm..........................................646

20.4.2 Selectional Restrictions and Selectional Preferences .... 648

20.5 Minimally Supervised WSD: Bootstrapping.............................650

20.6 Word Similarity: Thesaurus Methods..................................652

20.7 Word Similarity: Distributional Methods.............................658

20.7.1 Defining a Word's Co-Occurrence Vectors.....................659

20.7.2 Measuring Association with Context..........................661

20.7.3 Defining Similarity Between Two Vectors.....................663

20.7.4 Evaluating Distributional Word Similarity...................667

20.8 Hy pony my and Other Word Relations.................................667

20.9 Semantic Role Labeling..............................................670

20.10 Advanced: Unsupervised Sense Disambiguation........................674

20.11 Summary............................................................675

Bibliographical and Historical Notes......................................676

Exercises.................................................................679

21 Computational Discourse 681

21.1 Discourse Segmentation..............................................684

21.1.1 Unsupervised Discourse Segmentation.........................684

21.1.2 Supervised Discourse Segmentation...........................686

21.1.3 Discourse Segmentation Evaluation..........................688

21.2 Text Coherence......................................................689

21.2.1 Rhetorical Structure Theory.................................690

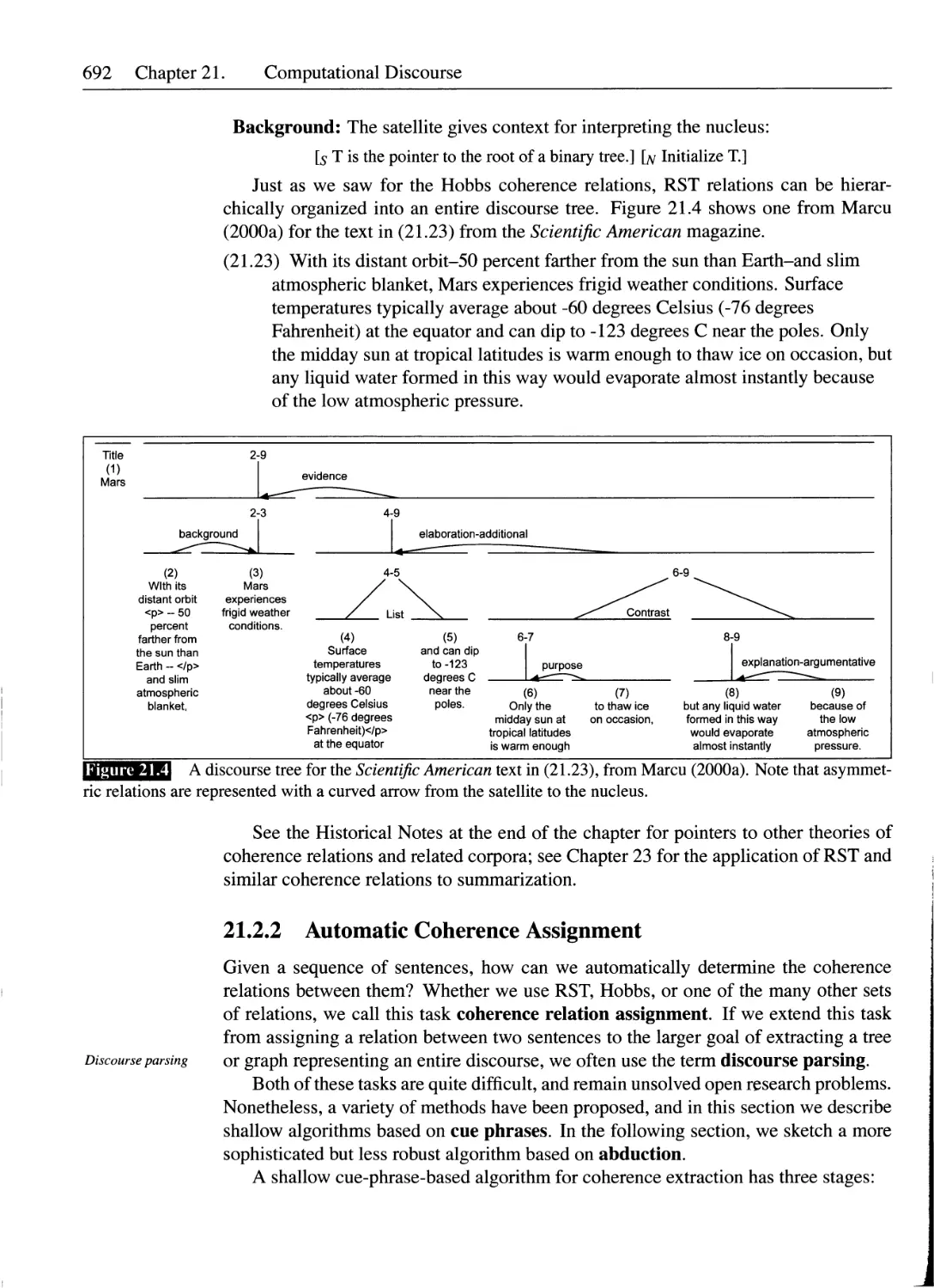

21.2.2 Automatic Coherence Assignment..............................692

21.3 Reference Resolution................................................695

21.4 Reference Phenomena.................................................698

21.4.1 Five Types of Referring Expressions.........................698

21.4.2 Information Status..........................................700

21.5 Features for Pronominal Anaphora Resolution.........................701

21.5.1 Features for Filtering Potential Referents..................701

21.5.2 Preferences in Pronoun Interpretation.......................702

21.6 Three Algorithms for Anaphora Resolution............................704

21.6.1 Pronominal Anaphora Baseline: The Hobbs Algorithm . . 704

21.6.2 A Centering Algorithm for Anaphora Resolution...............706

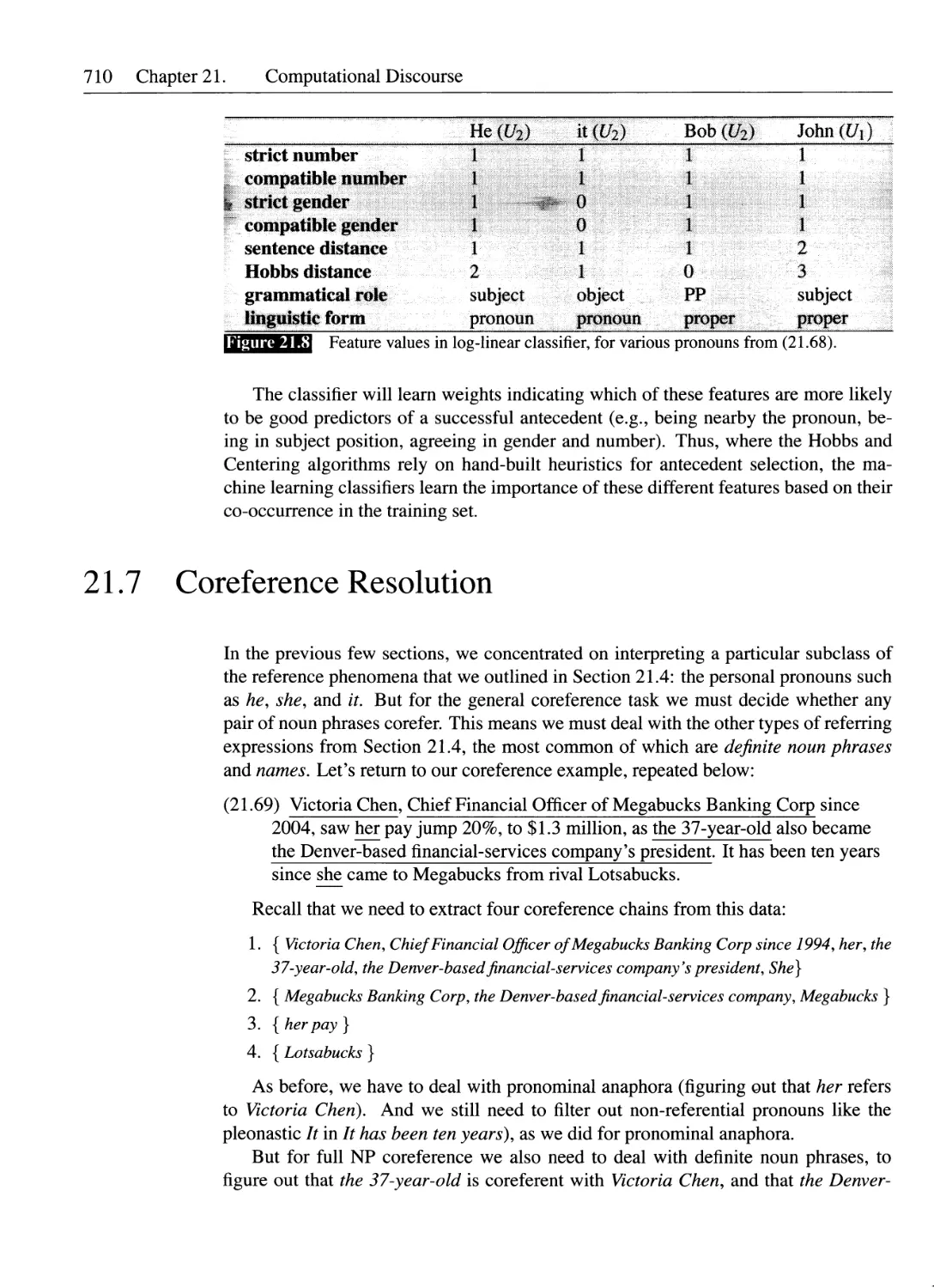

21.6.3 A Log-Linear Model for Pronominal Anaphora Resolution . 708

21.6.4 Features for Pronominal Anaphora Resolution................709

Contents xix

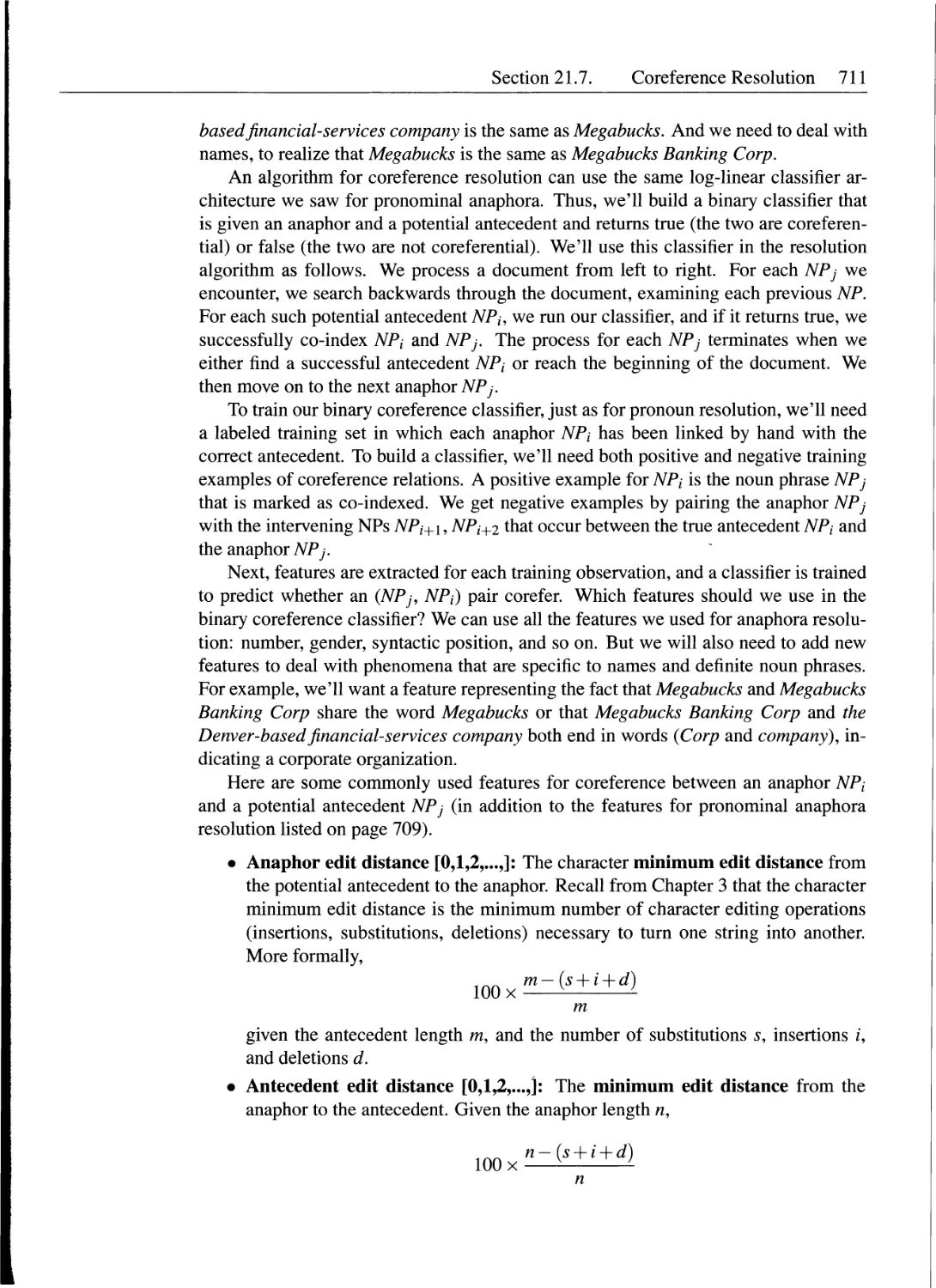

21.7 Coreference Resolution............................................710

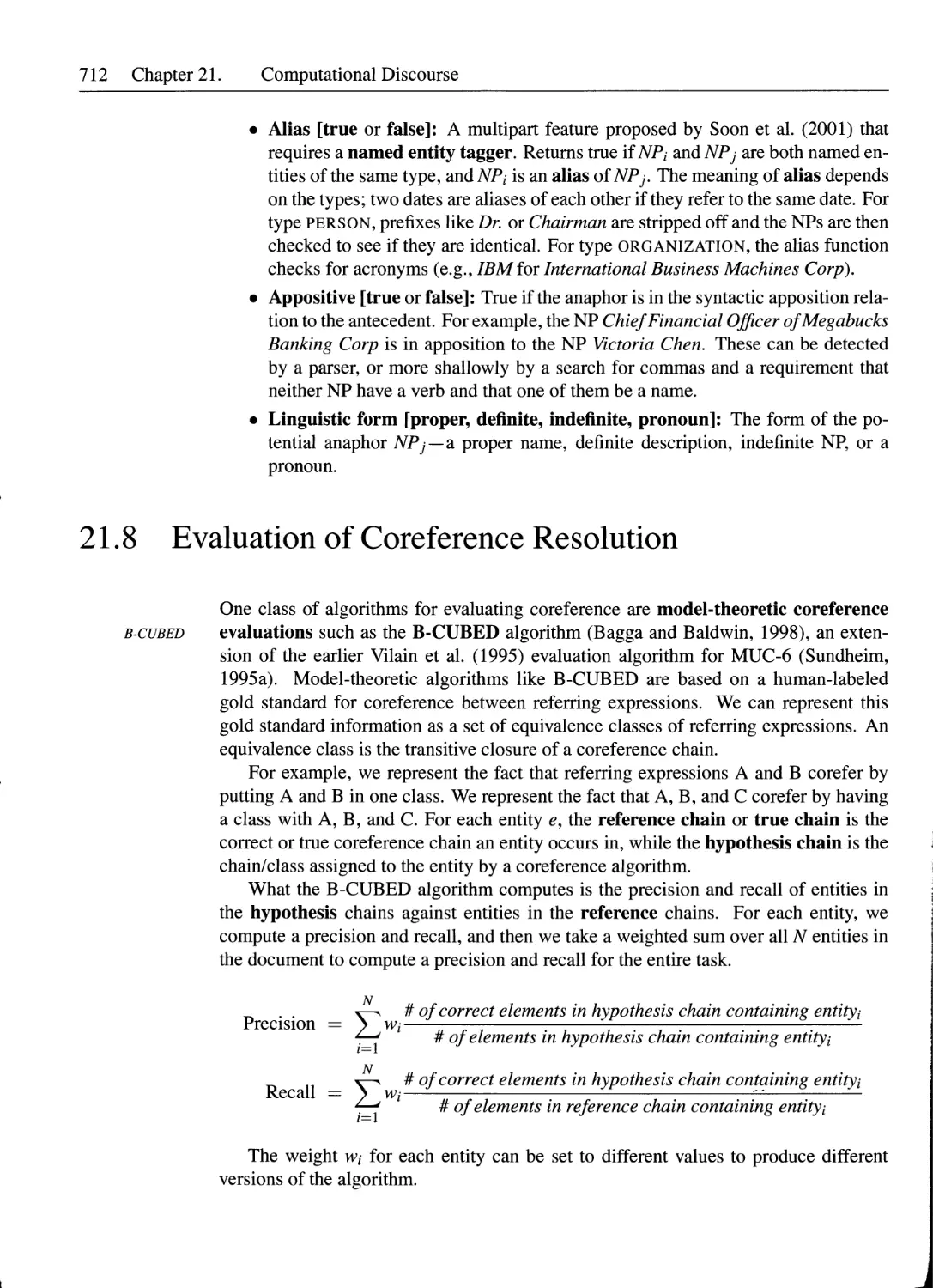

21.8 Evaluation of Coreference Resolution..............................712

21.9 Advanced: Inference-Based Coherence Resolution....................713

21.10 Psycholinguistic Studies of Reference.............................718

21.11 Summary...........................................................719

Bibliographical and Historical Notes....................................720

Exercises...............................................................722

V Applications

22 Information Extraction 725

22.1 Named Entity Recognition..........................................727

22.1.1 Ambiguity in Named Entity Recognition.....................729

22.1.2 NER as Sequence Labeling..................................729

22.1.3 Evaluation of Named Entity Recognition....................732

22.1.4 Practical NER Architectures...............................734

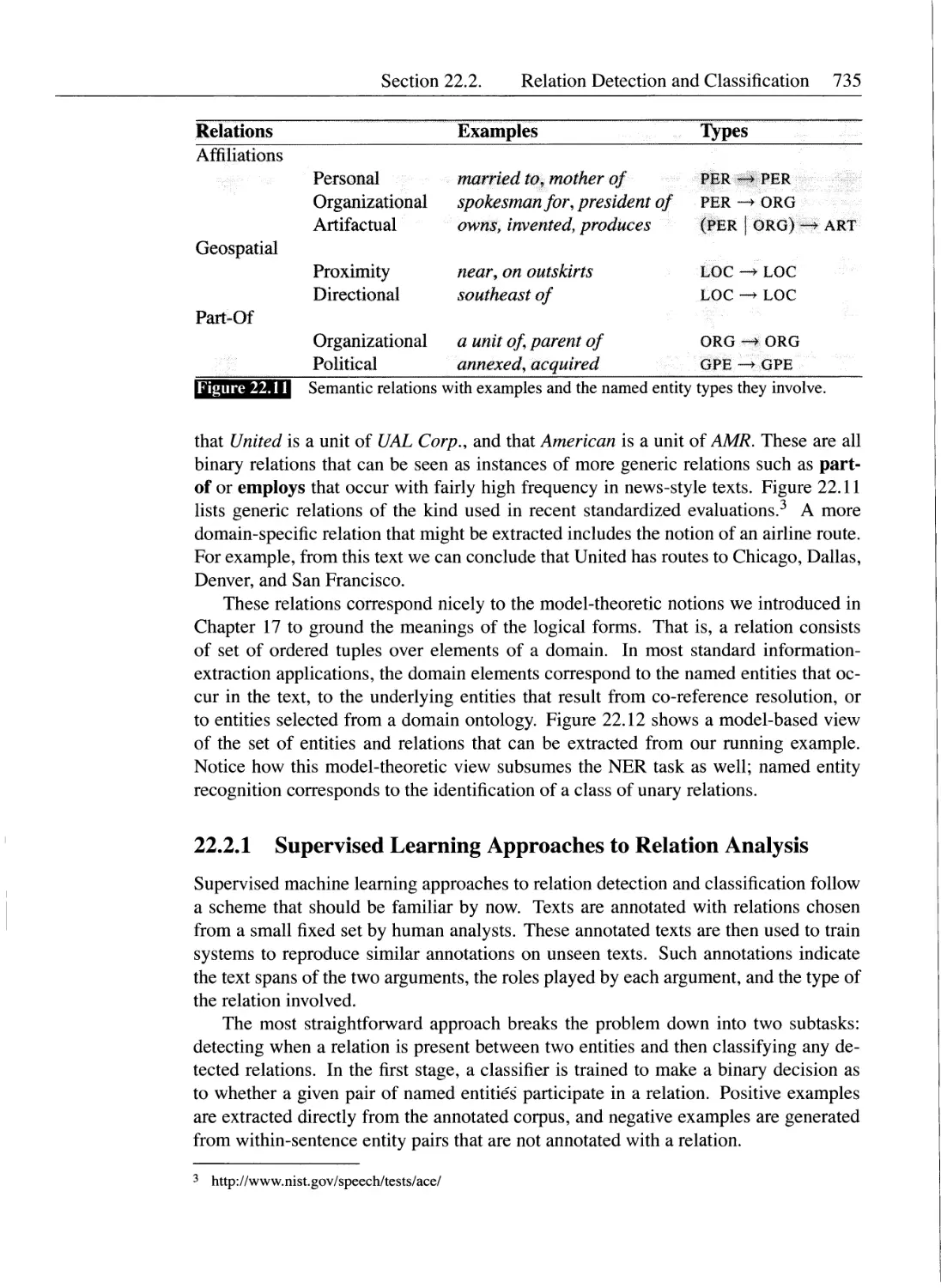

22.2 Relation Detection and Classification.............................734

22.2.1 Supervised Learning Approaches to Relation Analysis . . . 735

22.2.2 Lightly Supervised Approaches to Relation Analysis .... 738

22.2.3 Evaluation of Relation Analysis Systems...................742

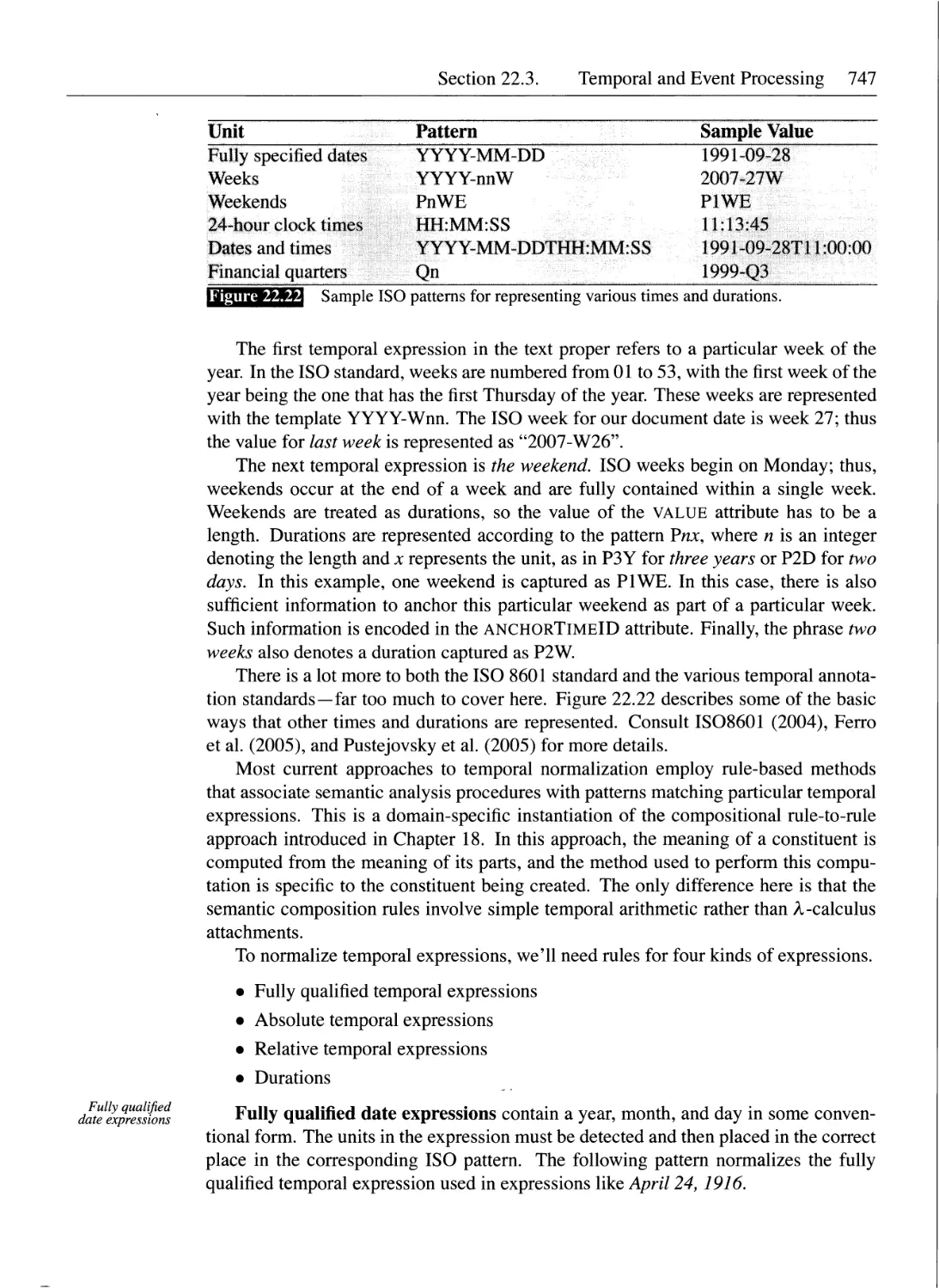

22.3 Temporal and Event Processing.....................................743

22.3.1 Temporal Expression Recognition...........................743

22.3.2 Temporal Normalization....................................746

22.3.3 Event Detection and Analysis..............................749

22.3.4 TimeBank..................................................750

22.4 Template Filling..................................................752

22.4.1 Statistical Approaches to Template-Filling................752

22.4.2 Finite-State Template-Filling Systems.....................754

22.5 Advanced: Biomedical Information Extraction.......................757

22.5.1 Biological Named Entity Recognition.......................758

22.5.2 Gene Normalization........................................759

22.5.3 Biological Roles and Relations............................760

22.6 Summary...........................................................762

Bibliographical and Historical Notes....................................762

Exercises...............................................................763

23 Question Answering and Summarization 765

23.1 Information Retrieval.............................................767

23.1.1 The Vector Space Model....................................768

23.1.2 Term Weighting............................................770

23.1.3 Term Selection and Creation...............................772

23.1.4 Evaluation of Information-Retrieval Systems...............772

23.1.5 Homonymy, Polysemy, and Synonymy..........................776

23.1.6 Ways to Improve User Queries..............................776

23.2 Factoid Question Answering........................................778

23.2.1 Question Processing.......................................779

XX

Contents

23.2.2 Passage Retrieval..........................................781

23.2.3 Answer Processing..........................................783

23.2.4 Evaluation of Factoid Answers..............................787

23.3 Summarization......................................................787

23.4 Single-Document Summarization......................................790

23.4.1 Unsupervised Content Selection..............................790

23.4.2 Unsupervised Summarization Based on Rhetorical Parsing . 792

23.4.3 Supervised Content Selection...............................794

23.4.4 Sentence Simplification....................................795

23.5 Multi-Document Summarization.......................................796

23.5.1 Content Selection in Multi-Document Summarization . . . 797

23.5.2 Information Ordering in Multi-Document Summarization . 798

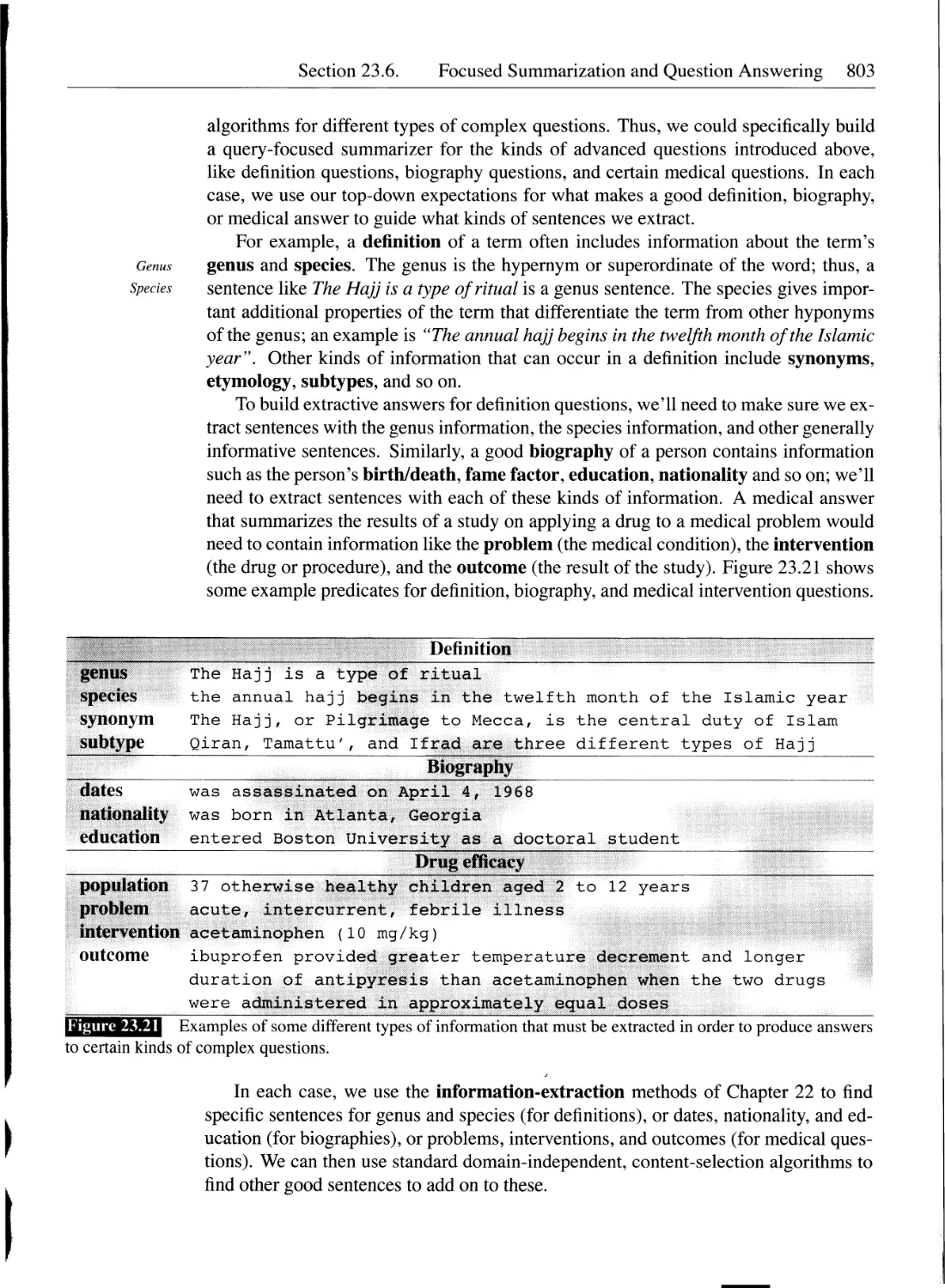

23.6 Focused Summarization and Question Answering.......................801

23.7 Summarization Evaluation...........................................805

23.8 Summary............................................................807

Bibliographical and Historical Notes......................................808

Exercises.................................................................810

24 Dialogue and Conversational Agents 811

24.1 Properties of Human Conversations..................................813

24.1.1 Turns and Turn-Taking.......................................813

24.1.2 Language as Action: Speech Acts............................815

24.1.3 Language as loint Action: Grounding........................816

24.1.4 Conversational Structure...................................818

24.1.5 Conversational Implicature.................................819

24.2 Basic Dialogue Systems.............................................821

24.2.1 ASR Component...............................................821

24.2.2 NLU Component..............................................822

24.2.3 Generation and TTS Components..............................825

24.2.4 Dialogue Manager...........................................827

24.2.5 Dealing with Errors: Confirmation and Rejection............831

24.3 VoiceXML...........................................................832

24.4 Dialogue System Design and Evaluation..............................836

24.4.1 Designing Dialogue Systems..................................836

24.4.2 Evaluating Dialogue Systems................................836

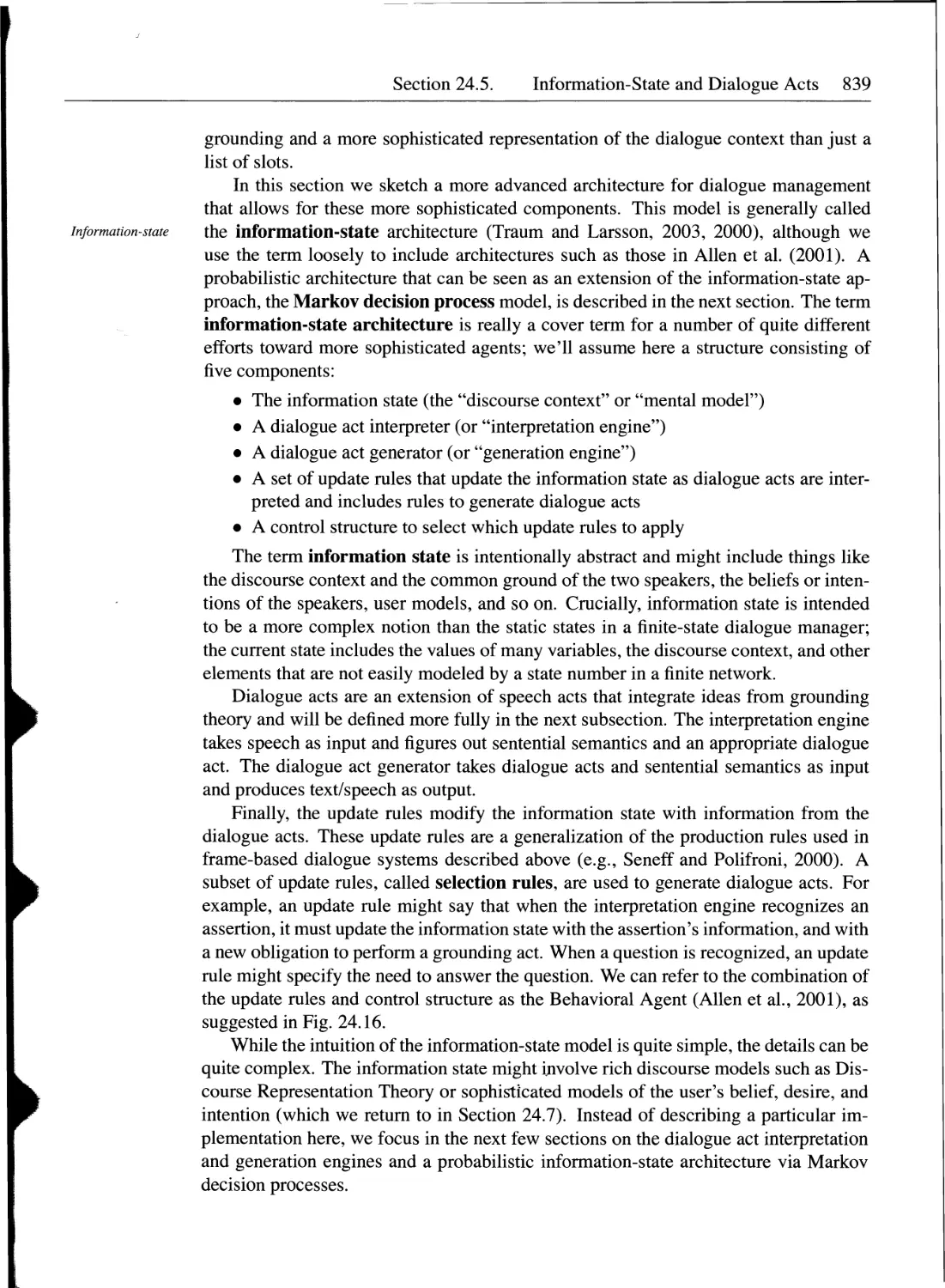

24.5 Information-State and Dialogue Acts................................838

24.5.1 Using Dialogue Acts........................................840

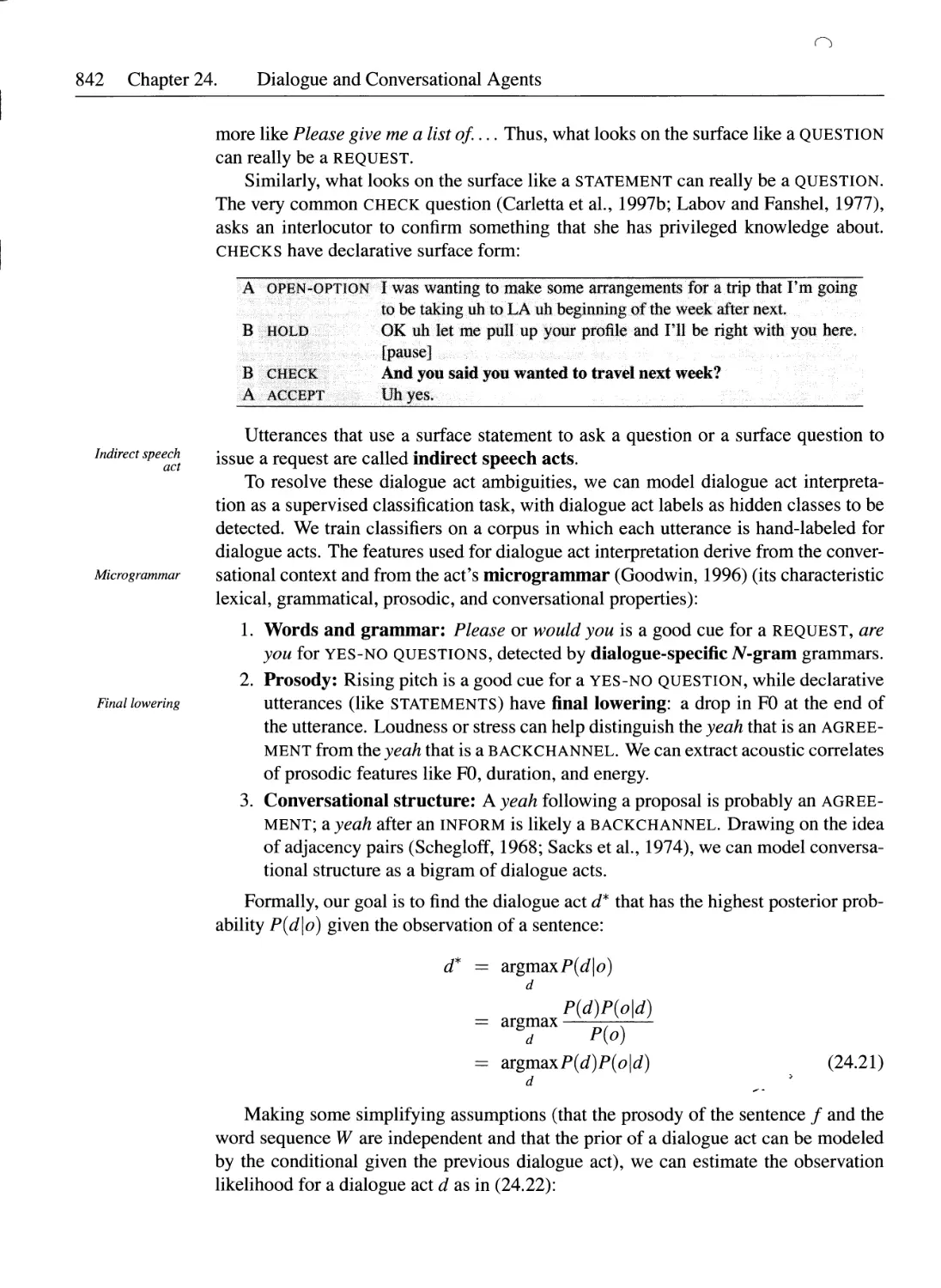

24.5.2 Interpreting Dialogue Acts.................................841

24.5.3 Detecting Correction Acts..................................844

24.5.4 Generating Dialogue Acts: Confirmation and Rejection . . . 845

24.6 Markov Decision Process Architecture...............................846

24.7 Advanced: Plan-Based Dialogue Agents...............................850

24.7.1 Plan-Inferential Interpretation and Production.............851

24.7.2 The Intentional Structure of Dialogue....................853

24.8 Summary............................................................855

Bibliographical and Historical Notes.....................................856

Contents xxi

Exercises...................................................................858

25 Machine Translation 859

25.1 Why Machine Translation Is Hard.....................................862

25.1.1 Typology...................................................862

25.1.2 Other Structural Divergences...............................864

25.1.3 Lexical Divergences........................................865

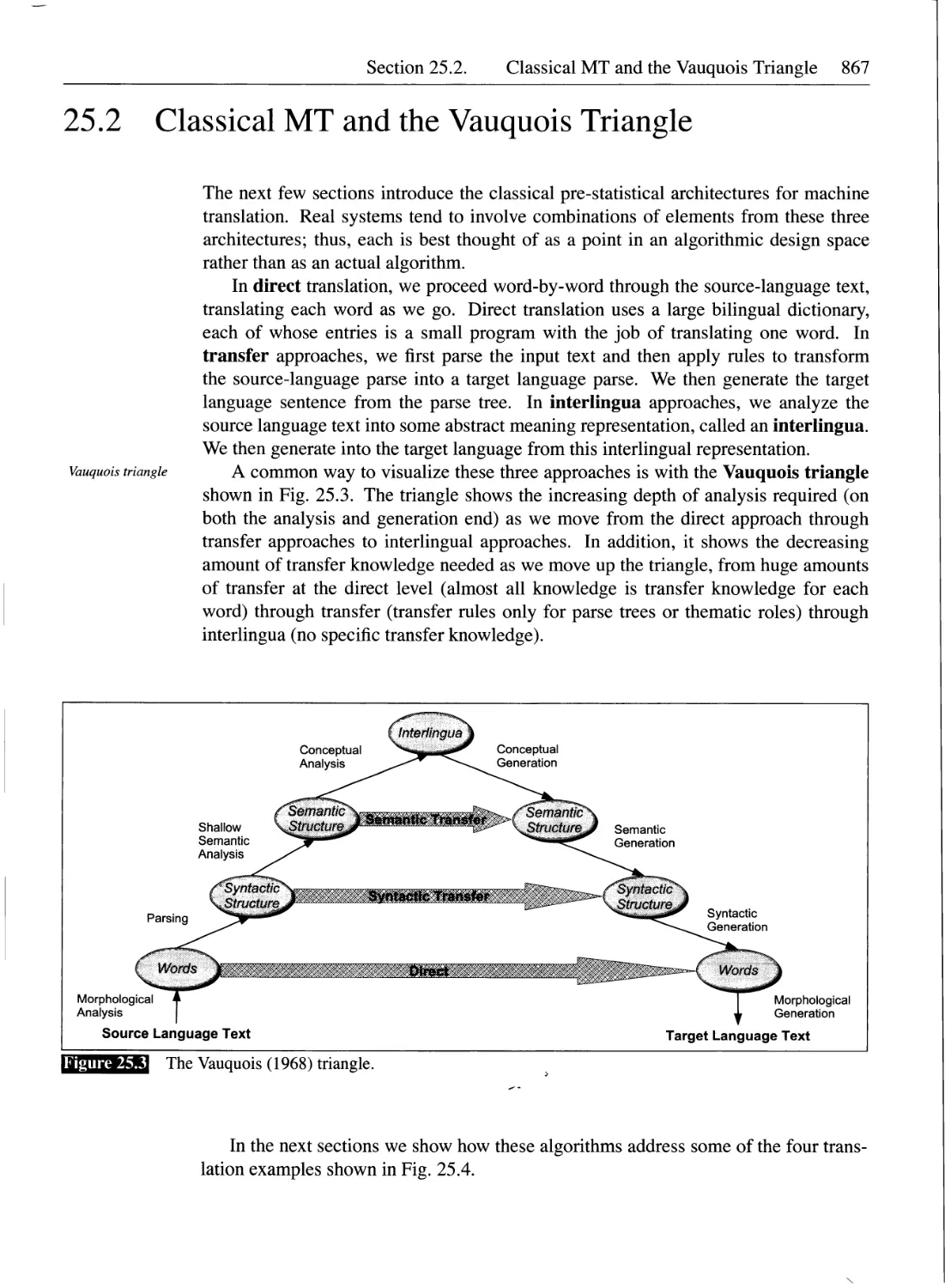

25.2 Classical MT and the Vauquois Triangle..............................867

25.2.1 Direct Translation.........................................868

25.2.2 Transfer...................................................870

25.2.3 Combined Direct and Transfer Approaches in Classic MT . 872

25.2.4 The Interlingua Idea: Using Meaning........................873

25.3 Statistical MT......................................................874

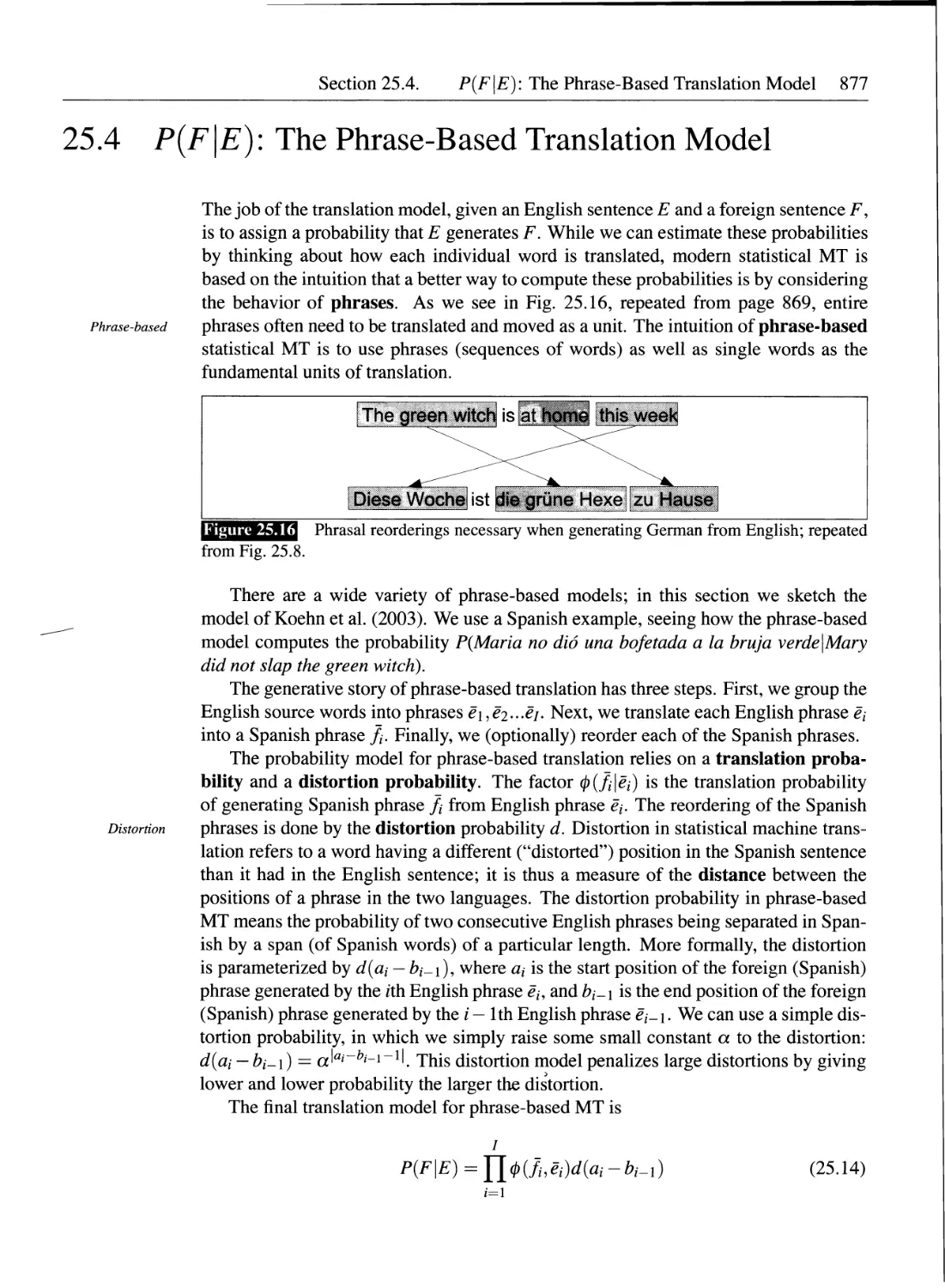

25.4 P(F\E): The Phrase-Based Translation Model..........................877

25.5 Alignment in MT.....................................................879

25.5.1 IBM Model 1................................................880

25.5.2 HMM Alignment..............................................883

25.6 Training Alignment Models...........................................885

25.6.1 EM for Training Alignment Models...........................886

25.7 Symmetrizing Alignments for Phrase-Based MT.........................888

25.8 Decoding for Phrase-Based Statistical MT............................890

25.9 MT Evaluation.......................................................894

25.9.1 Using Human Raters.........................................894

25.9.2 Automatic Evaluation: BLEU.................................895

25.10 Advanced: Syntactic Models for MT..................................898

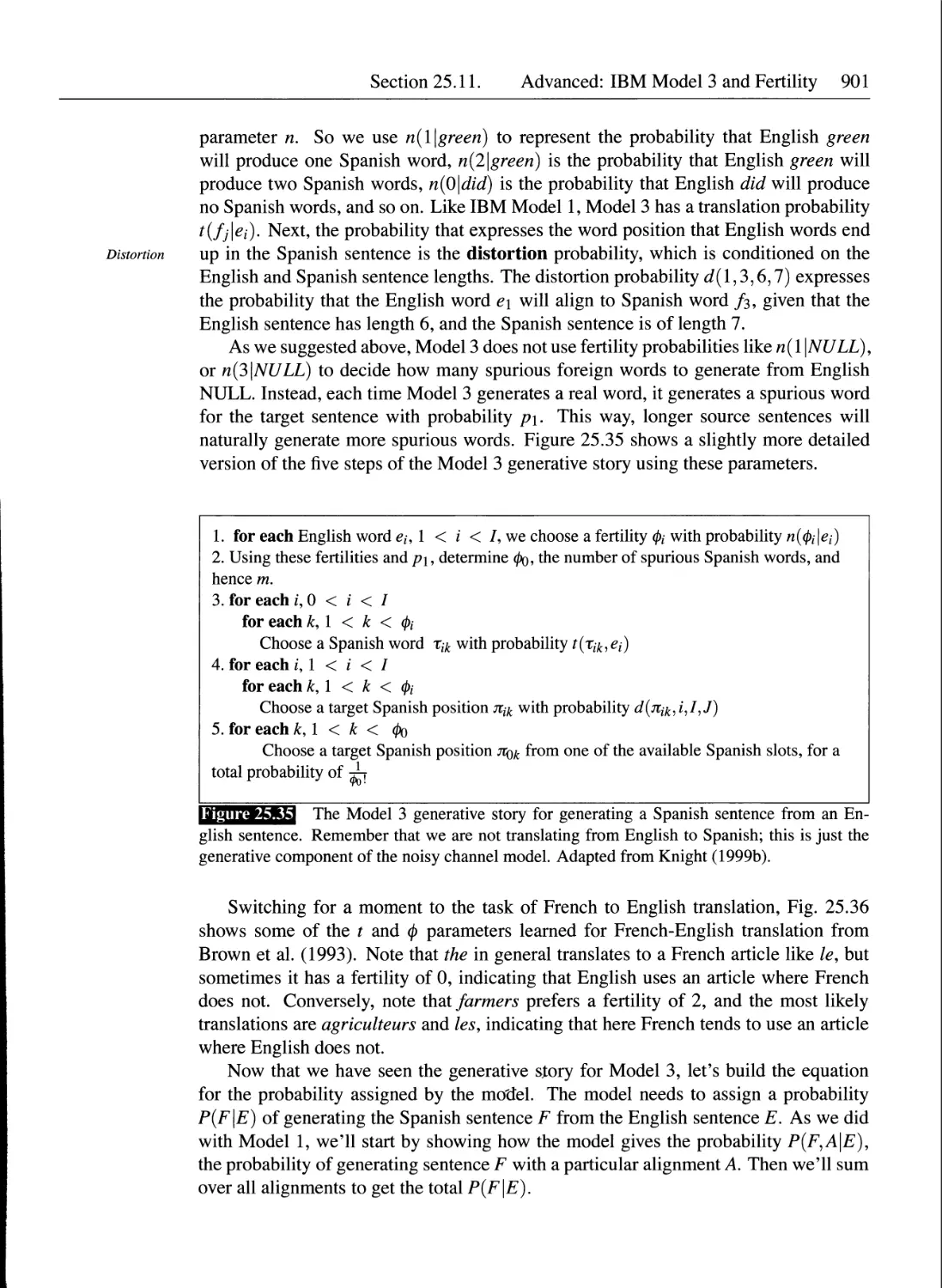

25.11 Advanced: IBM Model 3 and Fertility................................899

25.11.1 Training for Model 3........................................903

25.12 Advanced: Log-Linear Models for MT.................................903

25.13 Summary............................................................904

Bibliographical and Historical Notes.......................................905

Exercises..................................................................907

Bibliography 909

Author Index 959

Subject Index 971

Foreword

Linguistics has a hundred-year history as a scientific discipline, and computational

linguistics has a fifty-year history as a part of computer science. But it is only in the last

decade or so that language understanding has emerged as an industry reaching millions

of people, with information retrieval and machine translation available on the Internet,

and speech recognition becoming popular on desktop computers. This industry has

been enabled by theoretical advances in the representation and processing of language

information.

Speech and Language Processing is the first book to thoroughly cover language

technology, at all levels and with all modern technologies. It combines deep linguistic

analysis with robust statistical methods. From the point of view of levels, the book

starts with the word and its components, covers the properties of sequences of words

and how they are spoken and understood, then moves up to the way words fit together

(syntax), form meaning (semantics), and serve as the basis of question answering, di-

alog, and translation between languages. From the point of view of technologies, the

book covers regular expressions, information retrieval, context free grammars, unifi-

cation, first-order predicate calculus, hidden Markov and other probabilistic models,

rhetorical structure theory, and others. Previously you would need two or three books

to get this kind of coverage. Speech and Language Processing covers the full range

in one book, but more importantly, it relates the technologies to each other, giving the

reader a sense of how each one is best used and how they can be used together. It

does all this with an engaging style that keeps the reader's interest and motivates the

technical details in a way that is thorough but not dry. Whether you're interested in

the field from the scientific or the industrial point of view, this book serves as an ideal

introduction, reference, and guide to future study of this fascinating field.

Since the first edition of this book in 2000, the field has advanced in several ways.

There are more applications of language technology in widespread use. The availability

of large collections of language data—both written and spoken—has brought on an

even greater reliance on statistical machine learning. This second edition of the book

covers these new developments well from both a theoretical and practical viewpoint.

The organization of the book also makes it easier for the reader or instructor to choose

which parts to learn—there are fewer dependencies between sections and chapters.

While the field of language processing has seen some excellent expository writing since

the publication of the first edition, this book remains the best introduction to the field

as a whole.

PETER NORVIG and STUART RUSSELL, Editors

Prentice Hall Series in Artificial Intelligence

xxiii

Preface

This is an exciting time to be working in speech and language processing. Histori-

cally distinct fields (natural language processing, speech recognition, computational

linguistics, computational psycholinguistics) have begun to merge. The explosion of

Web-based language techniques, the commercial availability of telephone-based dia-

logue systems, and speech synthesis and speech recognition have provided an impor-

tant impetus for the development of real systems. The availability of very large on-line

corpora has enabled statistical models of language at every level, from phonetics to

discourse. We have tried to draw on this emerging state of the art in the design of this

pedagogical and reference work.

1. Coverage

In attempting to describe a unified vision of speech and language processing,

we cover areas that traditionally are taught in different courses in different de-

partments: speech recognition in electrical engineering; parsing, semantic in-

terpretation, and machine translation in natural language processing courses in

computer science departments; and computational morphology, phonology, and

pragmatics in computational linguistics courses in linguistics departments. The

book introduces the fundamental algorithms of each of these fields, whether orig-

inally proposed for spoken or written language, whether logical or statistical in

origin, and attempts to tie together the descriptions of algorithms from differ-

ent domains. We have also covered applications like machine translation, spell-

checking, and information retrieval and extraction as well as areas like cogni-

tive modeling. A potential problem with this broad-coverage approach is that it

required us to include introductory material for each field; thus, linguists may

want to skip our description of articulatory phonetics, computer scientists may

not need such sections as regular expressions, and electrical engineers may not

need to use the sections on signal processing. Of course, even in a book this long,

we didn't have room for everything. Thus, this book should not be considered a

substitute for important relevant courses in linguistics, automata and formal lan-

guage theory, artificial intelligence, machine learning, statistics, or information

theory.

2. Emphasis on practical applications

It is important to show how language-related algorithms and techniques (from

hidden Markov models (HMMs) to unification, from the lambda calculus to log-

linear models) can be applied to important real-world problems: speech recogni-

tion, machine translation, information extraction from the Web, spell checking,

text document search, and spoken-language dialogue. We have attempted to do

this by integrating the description of language processing applications into each

chapter. The advantage of this approach is that as the relevant linguistic knowl-

edge is introduced, the student has the background to understand and model a

particular domain.

3. Emphasis on scientific evaluation

The recent prevalence of statistical algorithms in language processing and the

growth of organized evaluations of speech and language processing systems has

xxv

xxvi Preface

led to a new emphasis on evaluation. Most chapters therefore include an evalua-

tion section describing modern empirical methods for evaluating systems and an-

alyzing errors, including such concepts as training and test sets, cross-validation,

and information-theoretic evaluation metrics like perplexity.

4. Description of widely available language processing resources

Modern speech and language processing is heavily based on common resources:

raw speech and text corpora, annotated corpora and treebanks, and standard

tagsets. We have tried to introduce many of these important resources through-

out the book (e.g., the Brown, Switchboard, Fisher, CALLHOME, ATIS, TREC,

MUC, and BNC corpora) and provide complete listings of many useful tagsets

and coding schemes (such as the Penn Treebank, CLAWS tagset, and the ARPA-

bet), but some inevitably got left out. Rather than include references to URLs for

many resources directly in the textbook, we have generally placed them on the

book's Web site, where they can more readily be updated, at

http: L/www.cs.Colorado. edu/-martin/slp. h tm 1

The book is primarily intended for use in a graduate or advanced undergraduate

course or sequence. Because of its comprehensive coverage and the large number of

algorithms, the book is also useful as a reference for students and professionals in any

of the areas of speech and language processing.

Overview of the Book

The book is divided into five parts in addition to an introduction and end matter.

Part I, "Words", introduces concepts related to the processing of words and simple

word sequences: word segmentation, word morphology, word edit distance, parts-

of-speech, and the algorithms used to process them: regular expressions, finite au-

tomata, finite transducers, N-grams, Hidden Markov Models, and log-linear models.

Part II, "Speech", introduces linguistic phonetics and then covers speech synthesis,

speech recognition, and linguistic topics in computational phonology. Part III, "Syn-

tax", introduces phrase-structure grammars for English and gives essential algorithms

for processing structured syntactic relationships among words: the CKY and Earley

algorithms for parsing, statistical parsing, unification and typed feature structures, and

analytical tools like the Chomsky hierarchy and the pumping lemma. Part IV, "Se-

mantics and Pragmatics", introduces first-order logic and other ways of representing

meaning, the lambda calculus, lexical semantics, lexical semantic resources such as

WordNet, PropBank, and FrameNet, and computational models of lexical semantics

for word similarity, word sense disambiguation, and discourse topics like coreference

and coherence. Part V, "Applications", covers information extraction, machine transla-

tion, and dialog and conversational agents.

Using This Book

The book provides enough material to be used for a fulLyear sequence in speech and

language processing. It is also designed so that it can be used for a number of different

useful one-term courses:

Preface xxvii

NLP 1 quarter NLP 1 semester Speech + NLP 1 semester Comp. Ling. 1 quarter

1. Intro 1. Intro 1. Intro 1. Intro

2. Regex, FSA 2. Regex, FSA 2. Regex, FSA 2. Regex, FSA

4. iV-grams 4. iV-grams 4. TV-grams 3. Morph., FST

5. PCS tagging 5. PCS tagging 5. POS tagging 4. yV-grams

12. CFGs 6. HMMS 6. HMMs 5. POS tagging

13. Parsing 12. CFGs 8. TTS 13. Parsing

14. Stat. Parsing 13. Parsing 9. ASR 14. Stat. Parsing

19. Lex. Semantics 14. Stat. Parsing 12. CFGs 15. Complexity

20. Comp. Lex. Sem. 17. Semantics 13. Parsing 16. Unification

23. QA & Summar. 18. Comp. Semantics 14. Stat. Parsing 20. Comp. Lex. Sem.

25. MT 19. Lex. Semantics 17. Semantics 21. Discourse

20. Comp. Lex. Sem. 19. Lex. Sem.

21. Discourse 20. Comp. Lex. Sm.

22. IE 22. IE

23. QA & Summar. 24. Dialog

25. MT 25. MT

Selected chapters from the book could also be used to augment courses in Artifi-

cial Intelligence, Cognitive Science, Information Retrieval, or Electrical Engineering-

oriented courses in Speech Processing.

All resources associated with this book can be accessed at

http://www.prenhall.com/iurafsky-martin

Password protected instructor materials include lecture PowerPoint slides and solu-

tions to exercises in the text. Other resources including online versions of figures and

pointers to web content can be accessed via this URL or directly at

http://www.es.colorado.edu/~martin/slp.html

Acknowledgments

Andy Kehler wrote the Discourse chapter for the first edition, which we used as the

starting point for this second-edition chapter. Much of Andy's prose and structure re-

main throughout the chapter. Similarly, Nigel Ward wrote most of the Machine Trans-

lation chapter for the first edition, which we used as the starting point for the MT

chapter of this second edition. Much of his text remains, particularly in Sections 25.2,

25.3, and the exercises. Kevin Bretonnel Cohen wrote Section 22.5 on biomedical in-

formation extraction. Keith Vander Linden wrote the Generation chapter in the first

edition. Feng Zhiwei, aided by Sun Le, translated the first edition to Chinese.

Boulder and Stanford have been very rewarding places to work on speech and lan-

guage processing. We'd like to thank our departments, our colleagues, and our students

at both places for their collaborations, which have greatly influenced our research and

teaching.

Dan would like to thank his parents for always encouraging him to do the right

thing and do it in a timely fashion. He would also like to thank Nelson Morgan, for

introducing him to speech recognition and teaching him to ask "but does it work?";

Jerry Feldman, for sharing his commitment to finding the right answers and teaching

him to ask "but is it really important?"; Chuck Fillmore, his first advisor, for sharing

xxviii Preface

his love for language and teaching him to always go look at the data; Robert Wilensky,

his dissertation advisor, for teaching him the importance of collaboration and group

spirit in research; Chris Manning for being a superb collaborator at Stanford, and of

course all his fantastic former colleagues at Boulder.

Jim would like to thank his parents for encouraging him and allowing him to fol-

low what must have seemed like an odd path at the time. He would also like to thank

his dissertation advisor, Robert Wilensky, for giving him his start in NLP at Berkeley;

Peter Norvig, for providing many positive examples along the way; Rick Alterman, for

encouragement and inspiration at a critical time; Chuck Fillmore, George Lakoff, Paul

Kay, and Susanna Cumming for teaching him what little he knows about linguistics;

and Martha Palmer, Tammy Sumner, and Wayne Ward for being wonderful collabora-

tors at Boulder. Finally, Jim would like to thank his wife Linda for all her support and

patience through the years, and his daughter Katie, who has waited her entire life for

the completion of this edition.

We are grateful to the many people who helped enormously on the first edition of

the book. This second edition has also benefited from our many readers and from their

course-testing. Special thanks for very helpful comments and ideas on wide swaths of

the book to Regina Barzilay, Philip Resnik, Emily Bender, and Adam Przepiorkowski.

Our editor Tracy Dunkelberger, our senior managing editor Scott Disanno, our series

editors Peter Norvig and Stuart Russell, and our production editor Jane Bonnell made

many helpful suggestions on design and content. We are also indebted to many friends

and colleagues who read individual sections of the book or answered our many ques-

tions for their comments and advice, including the students in our classes at the Univer-

sity of Colorado, Stanford University, and the LSA Summer Institutes at the University

of Illinois at Urbana-Champaign (1999), MIT (2005), and Stanford (2007), as well as

Ricks op den Akker, Kayra Akman, Angelos Alexopoulos, Robin Aly, S. M.

Niaz Arifin, Nimar S. Arora, Tsz-Chiu Au, Bai Xiaojing, Ellie Baker, Jason

Baldridge, Clay Beckner, Rafi Benjamin, Steven Bethard, G. W. Blackwood,

Steven Bills, Jonathan Boiser, Marion Bond, Marco Aldo Piccolino Boniforti,

Onn Brandman, Chris Brew, Tore Bruland, Denis Bueno, Sean M. Burke, Dani

Byrd, Bill Byrne, Kai-Uwe Carstensen, Alejandro Cdebaca, Dan Cer, Nate Cham-

bers, Pichuan Chang, Grace Chung, Andrew Clausen, Raphael Cohn, Kevin B.

Cohen, Frederik Copperis, Stephen Cox, Heriberto Cuayahuitl, Martin Davids-

son, Paul Davis, Jon Dehdari, Franz Deuzer, Mike Dillinger, Bonnie Dorr, Jason

Eisner, John Eng, Ersin Er, Hakan Erdogan, Giilsen Eryigit, Barbara Di Euge-

nio, Christiane Fellbaum, Eric Fosler-Lussier, Olac Fuentes, Mark Gawron, Dale

Gerdemann, Dan Gildea, Filip Ginter, Cynthia Girand, Anthony Gitter, John A.

Goldsmith, Michelle Gregory, Rocio Guillen, Jeffrey S. Haemer, Adam Hahn,

Patrick Hall, Harald Hammarstrom, Mike Hammond, Eric Hansen, Marti Hearst,

Paul Hirschbiihler, Julia Hirschberg, Graeme Hirst, Julia Hockenmaier, Jeremy

Hoffman, Greg Hiillender, Rebecca Hwa, Gaja Jarosz, Eric W. Johnson, Chris

Jones, Edwin de Jong, Bernadette Joret, Fred Karlsson, Graham Katz, Stefan

Kaufmann, Andy Kehler, Manuel Kirschner, Dan Klein Sheldon Klein, Kevin

Knight, Jean-Pierre Koenig, Greg Kondrak, Selcuk Kopru, Kimmo Koskenniemi,

Alexander Kostyrkin, Mikko Kurimo, Mike LeBeau, Chia-Ying Lee, Jaeyong

Preface xxix

Lee, Scott Leishman, Szymon Letowski, Beth Levin, Roger Levy, Liuyang Li,

Marc Light, Greger Lind'en, Pierre Lison, Diane Litman, Chao-Lin Liu, Feng

Liu, Roussanka Louka, Artyom Lukanin, Jean Ma, Maxim Makatchev, Inder-

jeet Mani, Chris Manning, Steve Marmon, Marie-Catherine de Marneffe, Hen-

drik Maryns, Jon May, Dan Melamed, Laura Michaelis, Johanna Moore, Nelson

Morgan, Emad Nawfal, Mark-Jan Nederhof, Hwee Tou Ng, John Niekrasz, Rod-

ney Nielsen, Yuri Niyazov, Tom Nurkkala, Kris Nuttycombe, Valerie Nygaard,

Mike O'Connell, Robert Oberbreckling, Scott Olsson, Woodley Packard, Gabor

Palagyi, Bryan Pellom, Gerald Penn, Rani Pinchuk, Sameer Pradhan, Kathryn

Pruitt, Drago Radev, Dan Ramage, William J. Rapaport, Ron Regan, Ehud Re-

iter, Steve Renals, Chang-han Rhee, Dan Rose, Mike Rosner, Deb Roy, Teodor

Rus, William Gregory Sakas, Murat Saraclar, Stefan Schaden, Anna Schapiro,

Matt Shannon, Stuart C. Shapiro, Ilya Sherman, Lokesh Shrestha, Nathan Sil-

berman, Noah Smith, Otakar Smrz, Rion Snow, Andreas Stolcke, Niyue Tan,

Frank Yung-Fong Tang, Ahmet Ciineyd Tantug, Paul Taylor, Lome Temes, Rich

Thomason, Aimer S. Tigelaar, Richard Trahan, Antoine Trux, Clement Wang,

Nigel Ward, Wayne Ward, Rachel Weston, Janyce Wiebe, Lauren Wilcox, Ben

Wing, Dean Earl Wright III, Dekai Wu, Lei Wu, Eric Yeh, Alan C. Yeung, Mar-

galit Zabludowski, Menno van Zaanen, Zhang Sen, Sam Shaojun Zhao, and

Xingtao Zhao.

We are also grateful for kind permission to reprint two figures: Fig. 7.3 (©Laszlo

Kubinyi and Scientific American) and Fig. 9.14 (©Paul Taylor and Cambridge Uni-

versity Press). In addition, we created many of our figures as adaptations of pre-

vious figures; we are grateful to the people listed below (for brevity we've listed

just one author in each case) for permission to adapt their figures. The following

three figures are ©IEEE and the authors; we thank Esther Levin (Fig. 24.22) and

Lawrence Rabiner (Figs. 6.14 and 6.15). The other adapted figures below are © the

authors; thanks to the Association of Computational Linguistics, the Journal of Com-

putational Linguistics and its editor Robert Dale, and to Regina Barzilay (Fig. 23.19),

Michael Collins (Figs. 14.7, 14.10, and 14.11), John Goldsmith (Fig. 11.18), Marti

Hearst (Figs. 21.1 and 21.2), Kevin Knight (Fig. 25.35), Philipp Koehn (Figs. 25.25,

25.26, and 25.28), Dekang Lin (Fig. 20.7), Chris Manning (Fig. 14.9), Daniel Marcu

(Fig. 23.16), Mehryar Mohri (Figs. 3.10 and 3.11), Julian Odell (Fig. 10.14), Marilyn

Walker (Figs. 24.8, 24.14, and 24.15), David Yarowsky (Fig. 20.4), and Steve Young

(Fig. 10.16).

DANIEL JURAFSKY

Stanford, California

JAMES H. MARTIN

Boulder, Colorado

About the Authors

Dan Jurafsky is an associate professor in the Department of Linguistics, and by cour-

tesy in the Department of Computer Science, at Stanford University. Previously, he was

on the faculty of the University of Colorado, Boulder, in the Linguistics and Computer

Science departments and the Institute of Cognitive Science. He was born in Yonkers,

New York, and received a B.A in Linguistics in 1983 and a Ph.D. in Computer Science

in 1992, both from the University of California at Berkeley. He received the National

Science Foundation CAREER award in 1998 and the Mac Arthur Fellowship in 2002.

He has published over 90 papers on a wide range of topics in speech and language

processing.

James H. Martin is a professor in the Department of Computer Science and in the

Department of Linguistics, and a fellow in the Institute of Cognitive Science at the

University of Colorado at Boulder. He was born in New York City, received a B.S. in

Computer Science from Columbia University in 1981 and a Ph.D. in Computer Science

from the University of California at Berkeley in 1988. He has authored over 70 publi-

cations in computer science including the book A Computational Model of Metaphor

Interpretation.

xxxi

Chapter 1

Introduction

Dave Bowman: Open the pod bay doors, HAL.

HAL: I'm sorry Dave, I'm afraid 1 can't do that.

Stanley Kubrick and Arthur C. Clarke,

screenplay of 2001: A Space Odyssey

Conversational

agent

Dialogue system

Machine

translation

Question

answering

The idea of giving computers the ability to process human language is as old as the idea

of computers themselves. This book is about the implementation and implications of

that exciting idea. We introduce a vibrant interdisciplinary field with many names cor-

responding to its many facets, names like speech and language processing, human

language technology, natural language processing, computational linguistics, and

speech recognition and synthesis. The goal of this new field is to get computers

to perform useful tasks involving human language, tasks like enabling human-machine

communication, improving human-human communication, or simply doing useful pro-

cessing of text or speech.

One example of a useful such task is a conversational agent. The HAL 9000 com-

puter in Stanley Kubrick's film 2007: A Space Odyssey is one of the most recognizable

characters in 20th century cinema. HAL is an artificial agent capable of such advanced

language behavior as speaking and understanding English, and at a crucial moment in

the plot, even reading lips. It is now clear that HAL's creator, Arthur C. Clarke, was

a little optimistic in predicting when an artificial agent such as HAL would be avail-

able. But just how far off was he? What would it take to create at least the language-

related parts of HAL? We call programs like HAL that converse with humans in natural

language conversational agents or dialogue systems. In this text we study the vari-

ous components that make up modern conversational agents, including language input

(automatic speech recognition and natural language understanding) and language

output (dialogue and response planning and speech synthesis).

Let's turn to another useful language-related task, that of making available to non-

English-speaking readers the vast amount of scientific information on the Web in En-

glish. Or translating for English speakers the hundreds of millions of Web pages written

in other languages like Chinese. The goal of machine translation is to automatically

translate a document from one language to another. We introduce the algorithms and

mathematical tools needed to understand how modern machine translation works. Ma-

chine translation is far from a solved problem; we cover the algorithms currently used

in the field, as well as important component tasks.

Many other language processing tasks are also related to the Web. Another such

task is Web-based question answering. This is a generalization of simple Web search,

where instead ofjust typing keywords, a user might ask complete questions, ranging

from easy to hard, like the following:

• What does "divergent" mean?

• What year was Abraham Lincoln born?

• How many states were in the United States that year?

1

2 Chapter 1.

Introduction

• How much Chinese silk was exported to England by the end of the 18th century?

• What do scientists think about the ethics of human cloning?

Some of these, such as definition questions, or simple factoid questions like dates

and locations, can already be answered by search engines. But answering more com-

plicated questions might require extracting information that is embedded in other text

on a Web page, doing inference (drawing conclusions based on known facts), or syn-

thesizing and summarizing information from multiple sources or Web pages. In this

text we study the various components that make up modern understanding systems of

this kind, including information extraction, word sense disambiguation, and so on.

Although the subfields and problems we've described above are all very far from

completely solved, these are all very active research areas and many technologies are

already available commercially. In the rest of this chapter, we briefly summarize the

kinds of knowledge that are necessary for these tasks (and others like spelling cor-

rection, grammar checking, and so on), as well as the mathematical models that are

introduced throughout the book.

1.1 Knowledge in Speech and Language Processing

What distinguishes language processing applications from other data processing sys-

tems is their use of knowledge of language. Consider the Unix wc program, which

counts the total number of bytes, words, and lines in a text file. When used to count

bytes and lines, wc is an ordinary data processing application. However, when it is

used to count the words in a file, it requires knowledge about what it means to be a

word and thus becomes a language processing system.

Of course, wc is an extremely simple system with an extremely limited and impov-

erished knowledge of language. Sophisticated conversational agents like HAL, ma-

chine translation systems, or robust question-answering systems require much broader

and deeper knowledge of language. To get a feeling for the scope and kind of required

knowledge, consider some of what HAL would need to know to engage in the dia-

logue that begins this chapter, or for a question-answering system to answer one of the

questions above.

HAL must be able to recognize words from an audio signal and to generate an audio

signal from a sequence of words. These tasks of speech recognition and speech syn-

thesis require knowledge about phonetics and phonology: how words are pronounced

in terms of sequences of sounds and how each of these sounds is realized acoustically.

Note also that unlike Commander Data in "Star Trek", HAL is capable of producing

contractions like I'm and can't. Producing and recognizing these and other variations

of individual words (e.g., recognizing that doors is plural) requires knowledge about

morphology, the way words break down into component parts that carry meanings like

singular versus plural.

Moving beyond individual words, HAL must use structural knowledge to properly

string together the words that constitute its response. For example, HAL must know

that the following sequence of words will not make sense to Dave, despite the fact that

Section 1.1.

Knowledge in Speech and Language Processing 3

it contains precisely the same set of words as the original.

(1.1) I'm I do, sorry that afraid Dave I'm can't.

The knowledge needed to order and group words comes under the heading of syn-

tax.

Now consider a question-answering system dealing with the following question:

(1.2) How much Chinese silk was exported to Western Europe by the end of the 18th

century?

To answer this question, we need to know something about lexical semantics, the

meaning of all the words {export or silk) as well as compositional semantics (what

exactly constitutes Western Europe as opposed to Eastern or Southern Europe, what

does end mean when combined with the 18th century. We also need to know something

about the relationship of the words to the syntactic structure. For example, we need to

know that by the end of the 18th century is a temporal end-point and not a description

of the agent, as the by-phrase is in the following sentence:

(1.3) How much Chinese silk was exported to Western Europe by southern

merchants?

We also need the kind of knowledge that lets HAL determine that Dave's utterance

is a request for action, as opposed to a simple statement about the world or a question

about the door, as in the following variations of his original statement.

REQUEST: ElAL, open the pod bay door.

STATEMENT: ElAL, the pod bay door is open.

INFORMATION QUESTION: HAL, is the pod bay door open?

Next, despite its bad behavior, HAL knows enough to be polite to Dave. It could,

for example, have simply replied No or No, I won't open the door. Instead, it first

embellishes its response with the phrases I'm sorry and I'm afraid, and then only indi-

rectly signals its refusal by saying I can't, rather than the more direct (and truthful) I

won't.1 This knowledge about the kind of actions that speakers intend by their use of

sentences is pragmatic or dialogue knowledge.

Another kind of pragmatic or discourse knowledge is required to answer the ques-

tion

(1.4) How many states were in the United States that year?

What year is that year? To interpret words like that year, a question-answering

system needs to examine the earlier questions that were asked; in this case, the previous

question talked about the year that Lincoln was born. Thus, this task of coreference

resolution makes use of knowledge about how words like that or pronouns like it or

she refer to previous parts of the discourse.

To summarize, engaging in complex language behavior requires various kinds of

knowledge of language:

• Phonetics and Phonology — knowledge about linguistic sounds

1 For those unfamiliar with HAL, it is neither sorry nor afraid, nor is it incapable of opening the door. It

has simply decided in a fit of paranoia to kill its crew.

4 Chapter 1.

Introduction

• Morphology — knowledge of the meaningful components of words

• Syntax — knowledge of the structural relationships between words

• Semantics — knowledge of meaning

• Pragmatics — knowledge of the relationship of meaning to the goals and inten-

tions of the speaker

• Discourse — knowledge about linguistic units larger than a single utterance

1.2 Ambiguity

A perhaps surprising fact about these categories of linguistic knowledge is that most

Ambiguity tasks in speech and language processing can be viewed as resolving ambiguity at one

Ambiguous of these levels. We say some input is ambiguous if multiple, alternative linguistic

structures can be built for it. Consider the spoken sentence 1 made her duck. Here are

five different meanings this sentence could have (see if you can think of some more),

each of which exemplifies an ambiguity at some level:

(1.5) I cooked waterfowl for her.

(1.6) I cooked waterfowl belonging to her.

(1.7) I created the (plaster?) duck she owns.

(1.8) I caused her to quickly lower her head or body.

(1.9) I waved my magic wand and turned her into undifferentiated waterfowl.

These different meanings are caused by a number of ambiguities. First, the words

duck and her are morphologically or syntactically ambiguous in their part-of-speech.

Duck can be a verb or a noun, while her can be a dative pronoun or a possessive

pronoun. Second, the word make is semantically ambiguous; it can mean create or

cook. Finally, the verb make is syntactically ambiguous in a different way. Make can

be transitive, that is, taking a single direct object (1.6), or it can be ditransitive, that is,

taking two objects (1.9), meaning that the first object {her) was made into the second

object {duck). Finally, make can take a direct object and a verb (1.8), meaning that the

object {her) was caused to perform the verbal action {duck). Furthermore, in a spoken

sentence, there is an even deeper kind of ambiguity; the first word could have been eye

or the second word maid.

We often introduce the models and algorithms we present throughout the book as

ways to resolve or disambiguate these ambiguities. For example, deciding whether

duck is a verb or a noun can be solved by part-of-speech tagging. Deciding whether

make means "create" or "cook" can be solved by word sense disambiguation. Reso-

lution of part-of-speech and word sense ambiguities are two important kinds of lexical

disambiguation. A wide variety of tasks can be framed as lexical disambiguation

problems. For example, a text-to-speech synthesis system reading the word lead needs

to decide whether it should be pronounced as in lead pipe or as in lead me on. By

contrast, deciding whether her and duck are part of the same entity (as in (1.5) or (1.8))

or are different entities (as in (1.6)) is an example of syntactic disambiguation and

can be addressed by probabilistic parsing. We also consider ambiguities that don't

Section 1.3.

Models and Algorithms 5

arise in this particular example, such as determining whether a sentence is a statement

or a question (which can be resolved by speech act interpretation).

1.3 Models and Algorithms

One of the key insights of the last 50 years of research in language processing is that

the various kinds of knowledge described in the last sections can be captured through

the use of a small number of formal models or theories. Fortunately, these models and

theories are all drawn from the standard toolkits of computer science, mathematics, and

linguistics and should be generally familiar to those trained in those fields. Among the

most important models are state machines, rule systems, logic, probabilistic models,

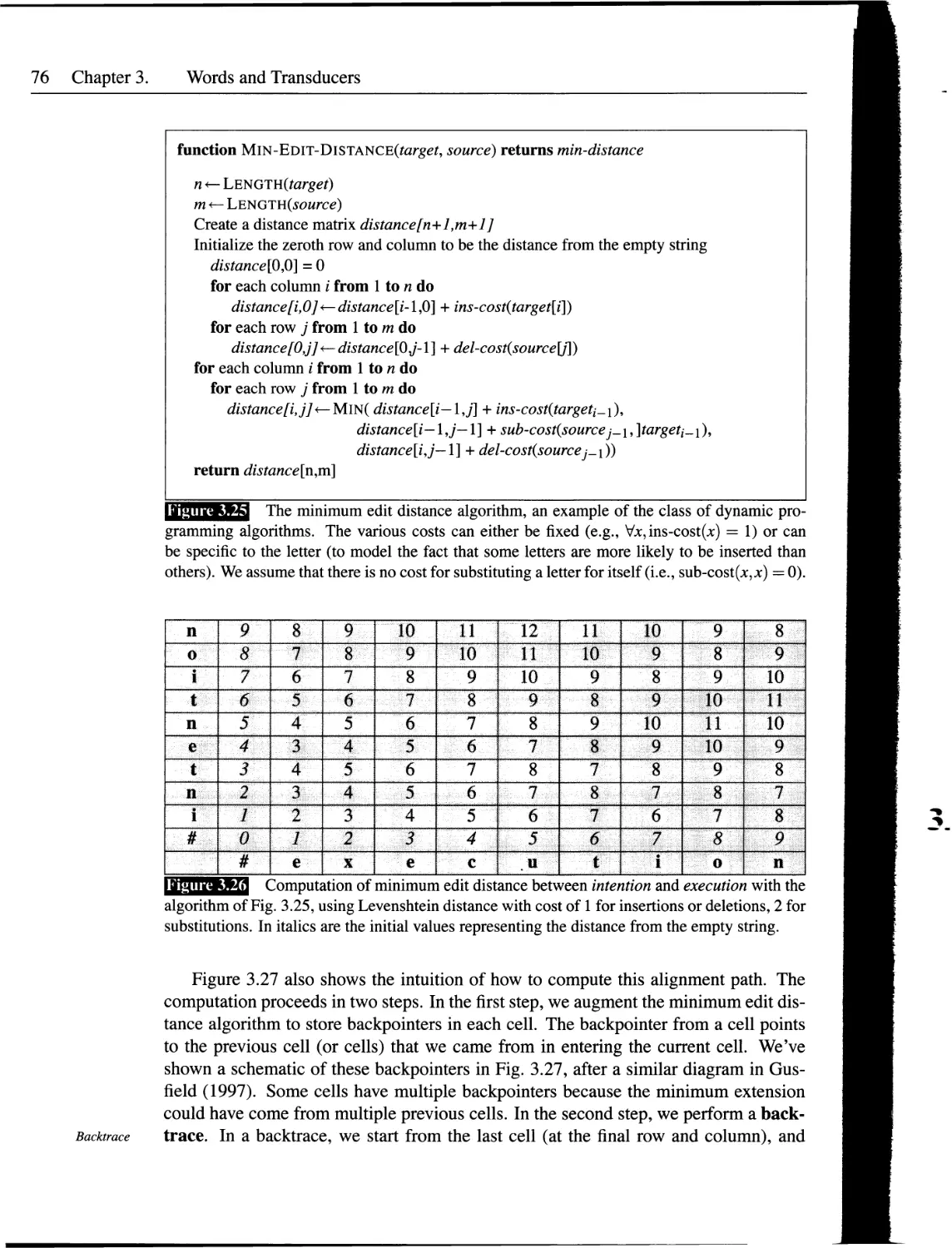

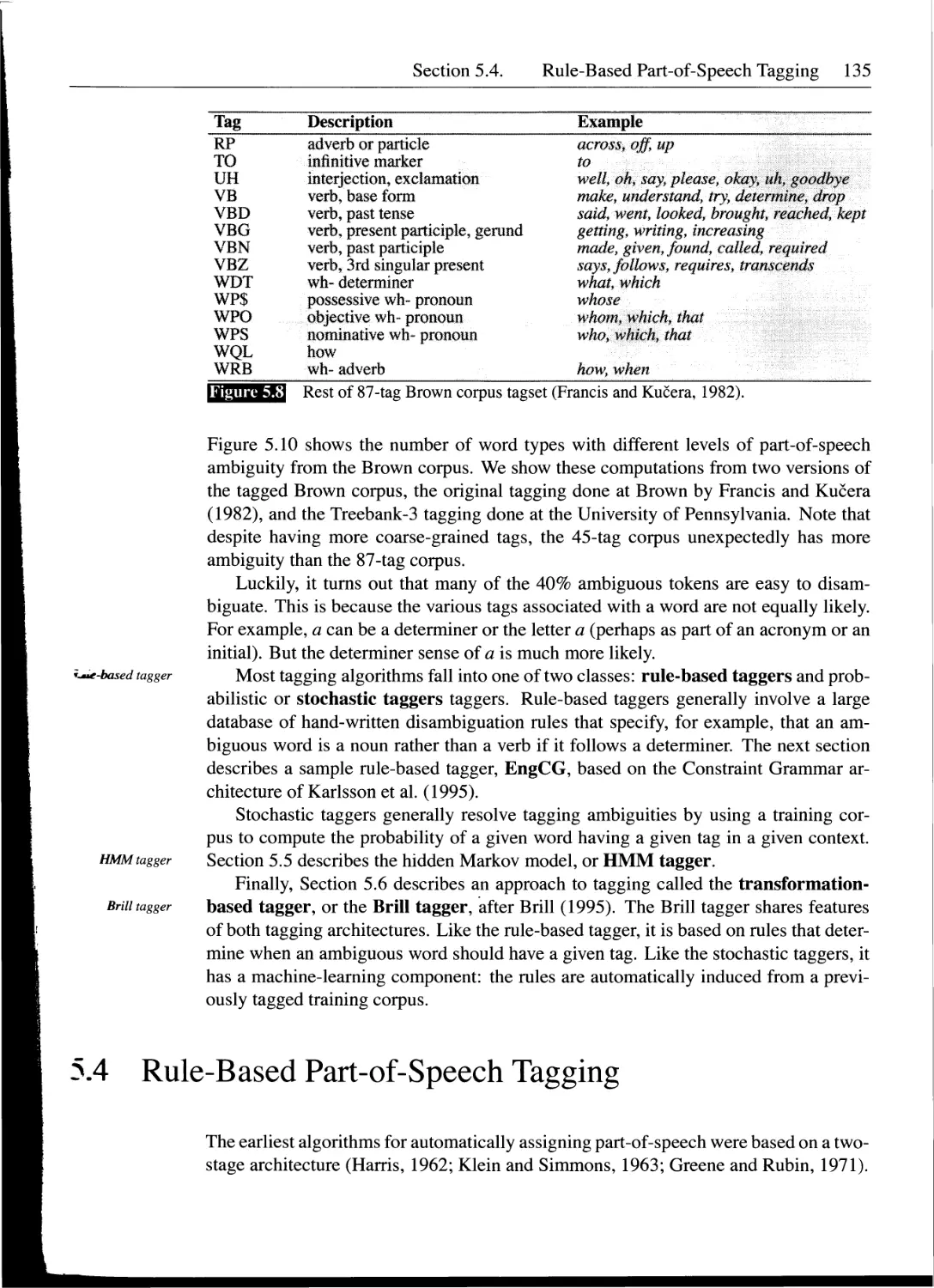

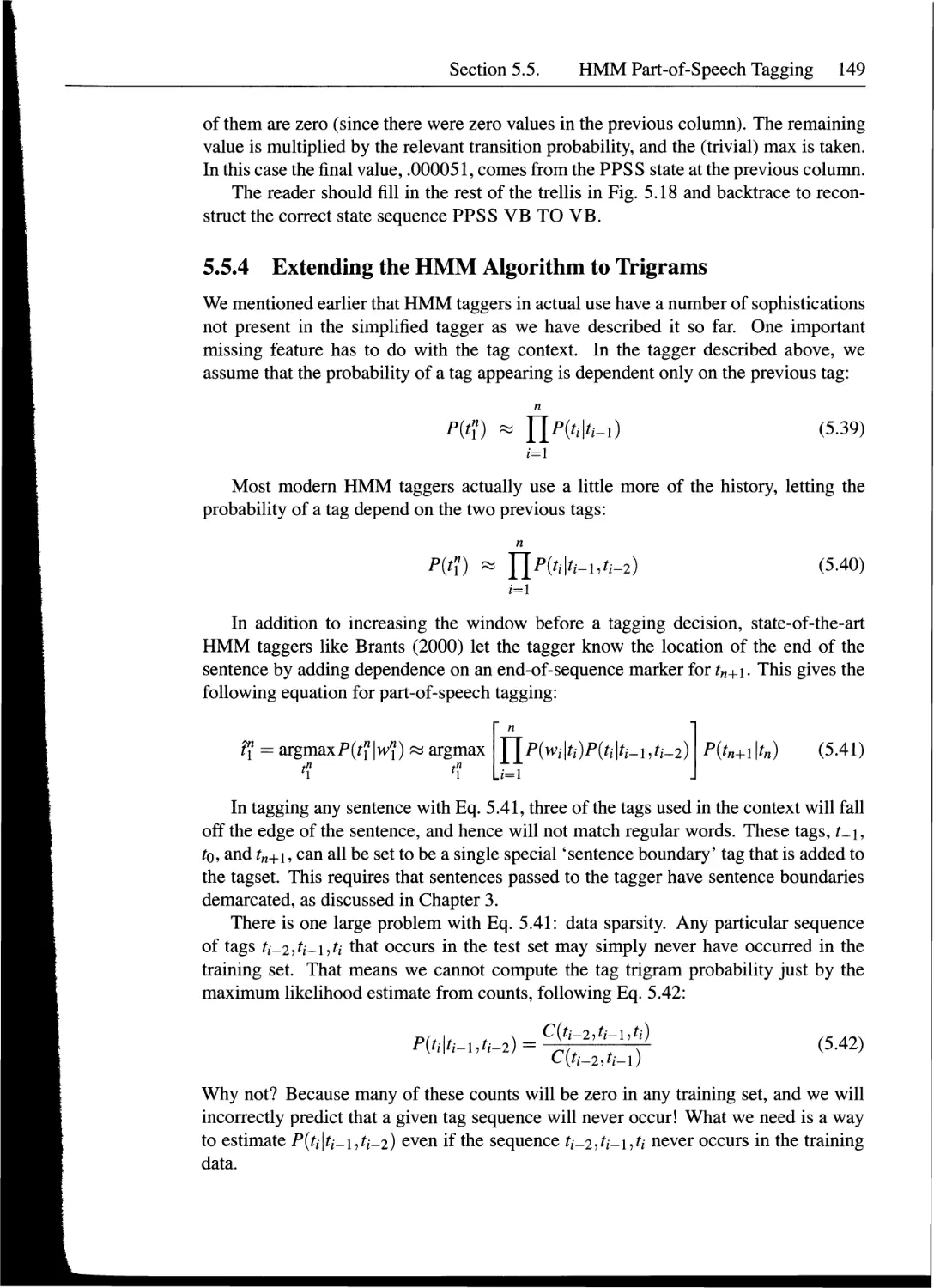

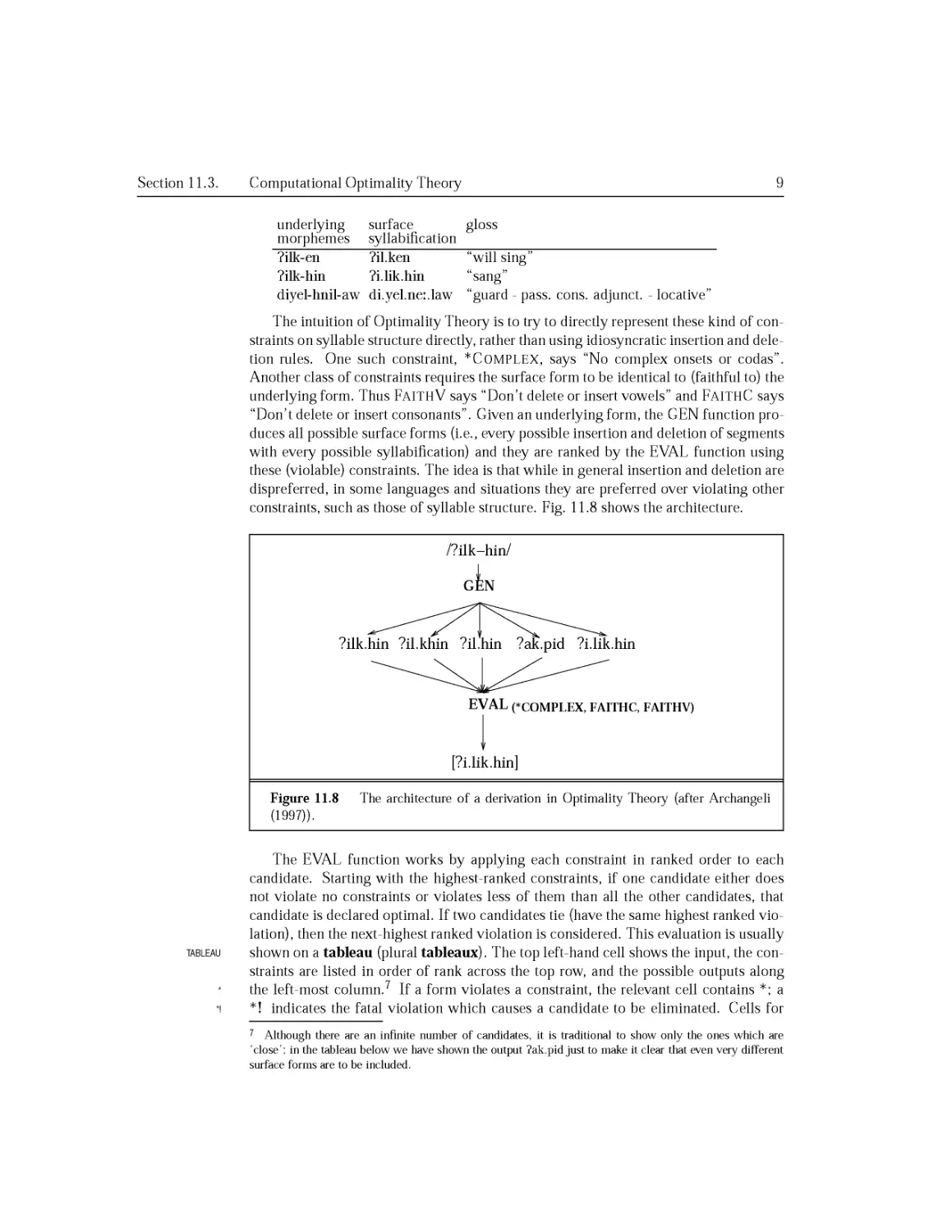

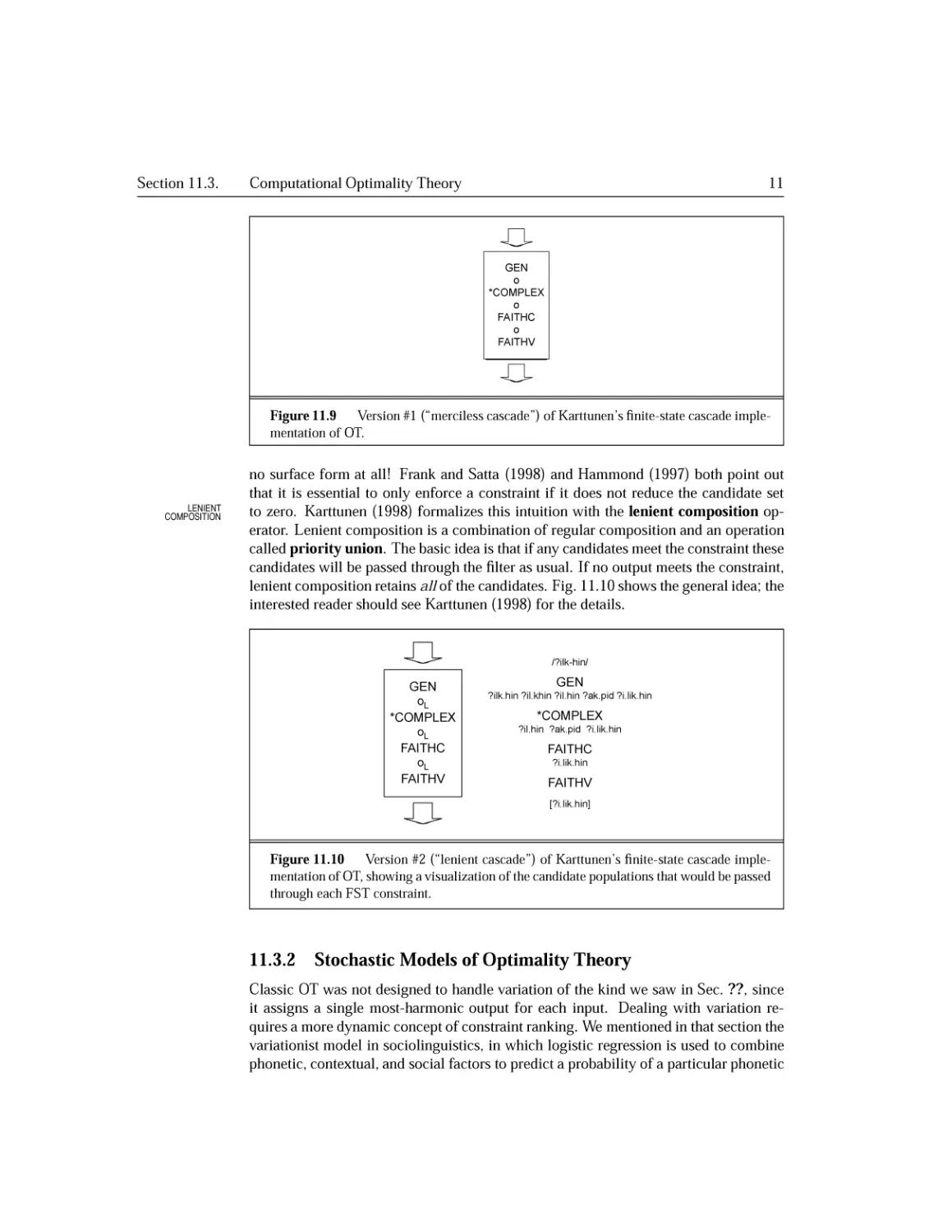

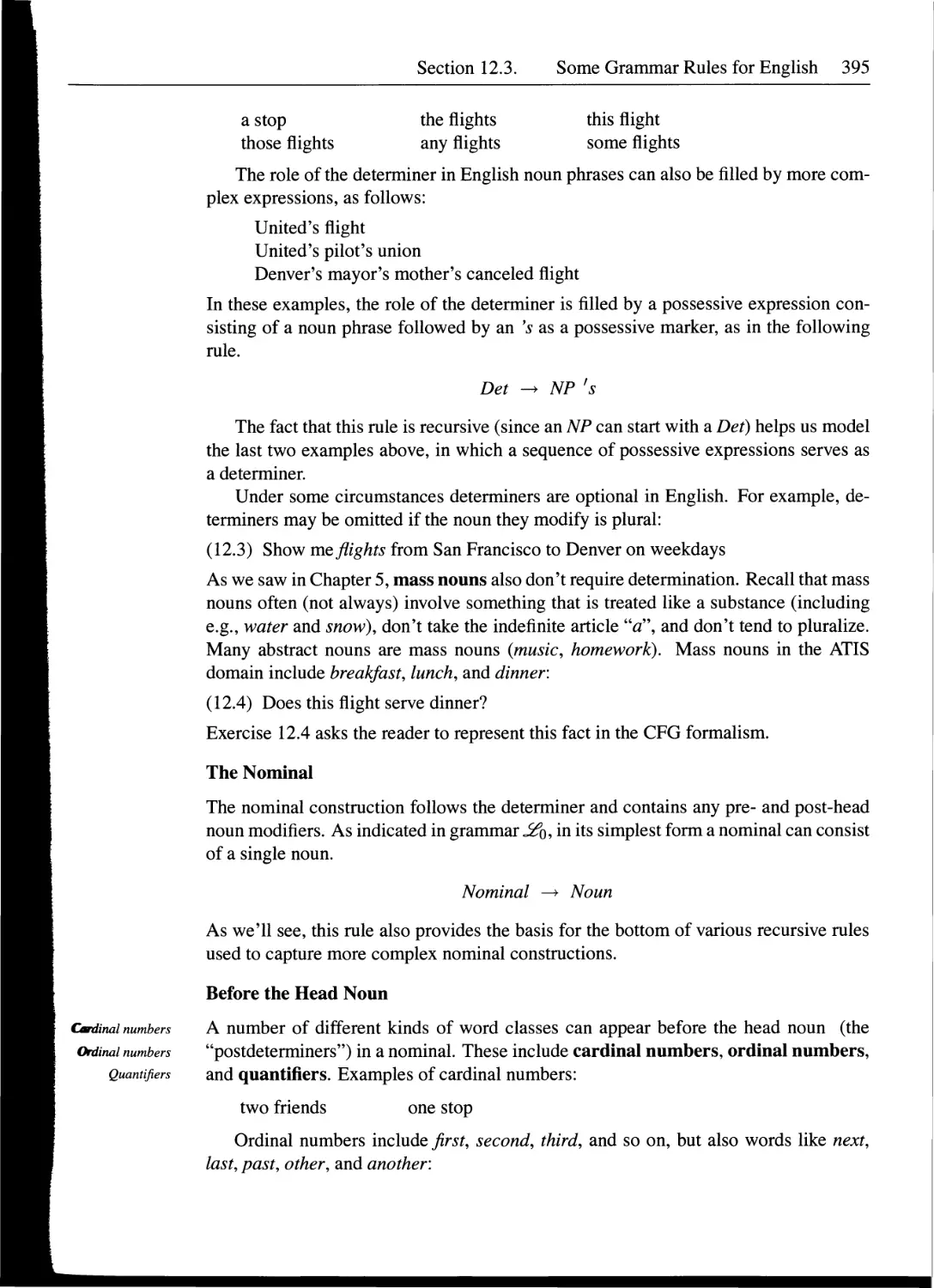

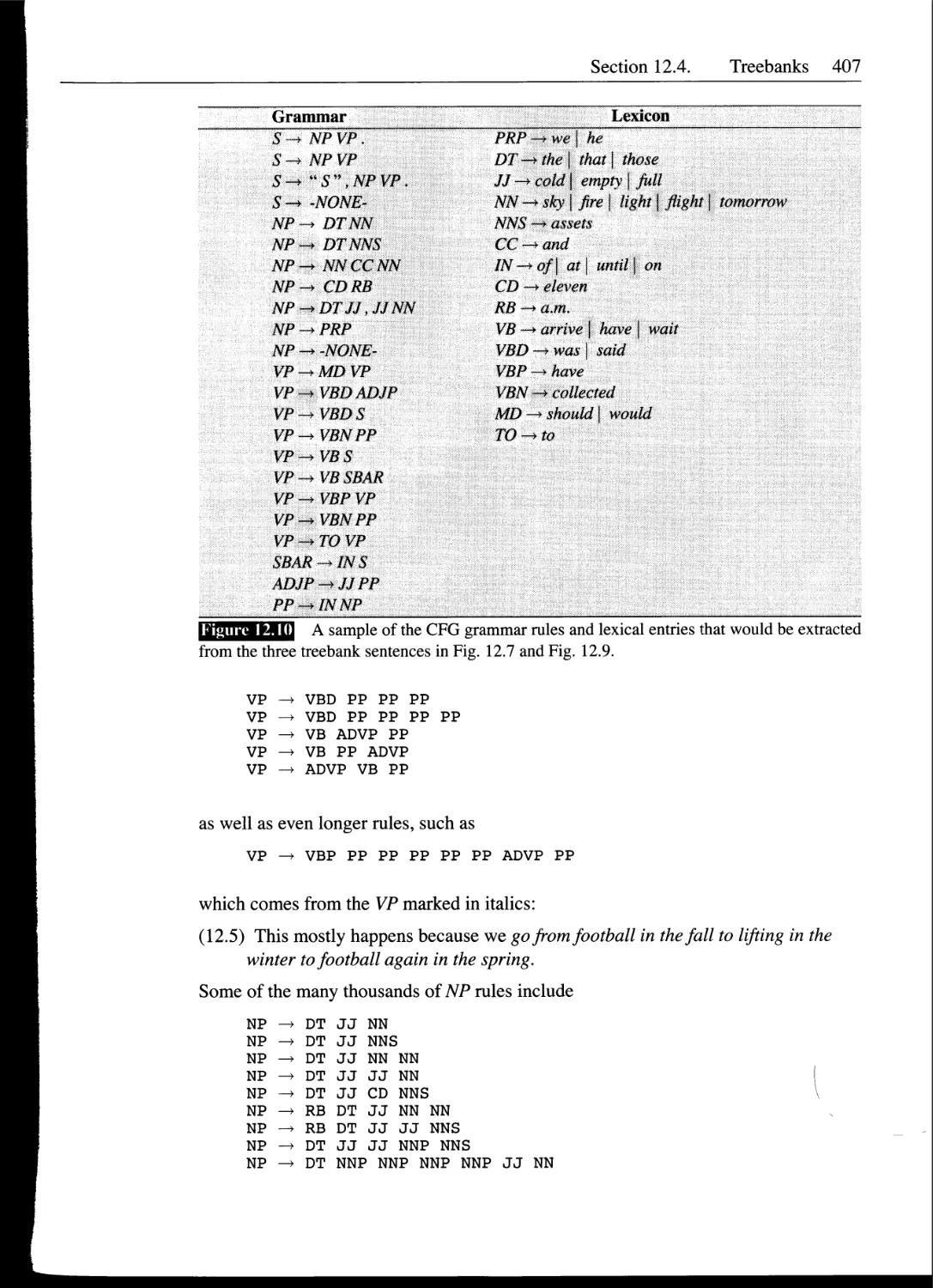

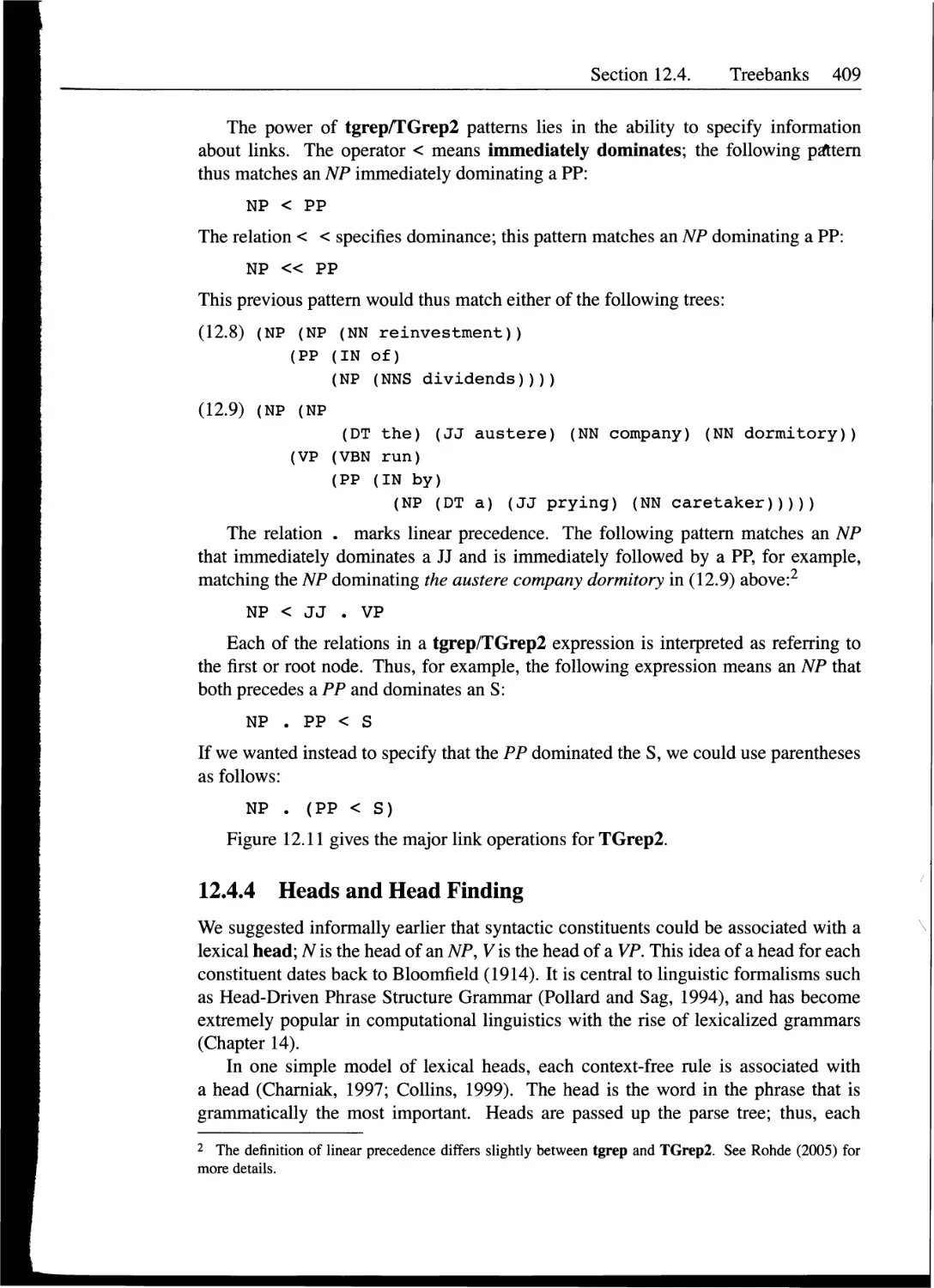

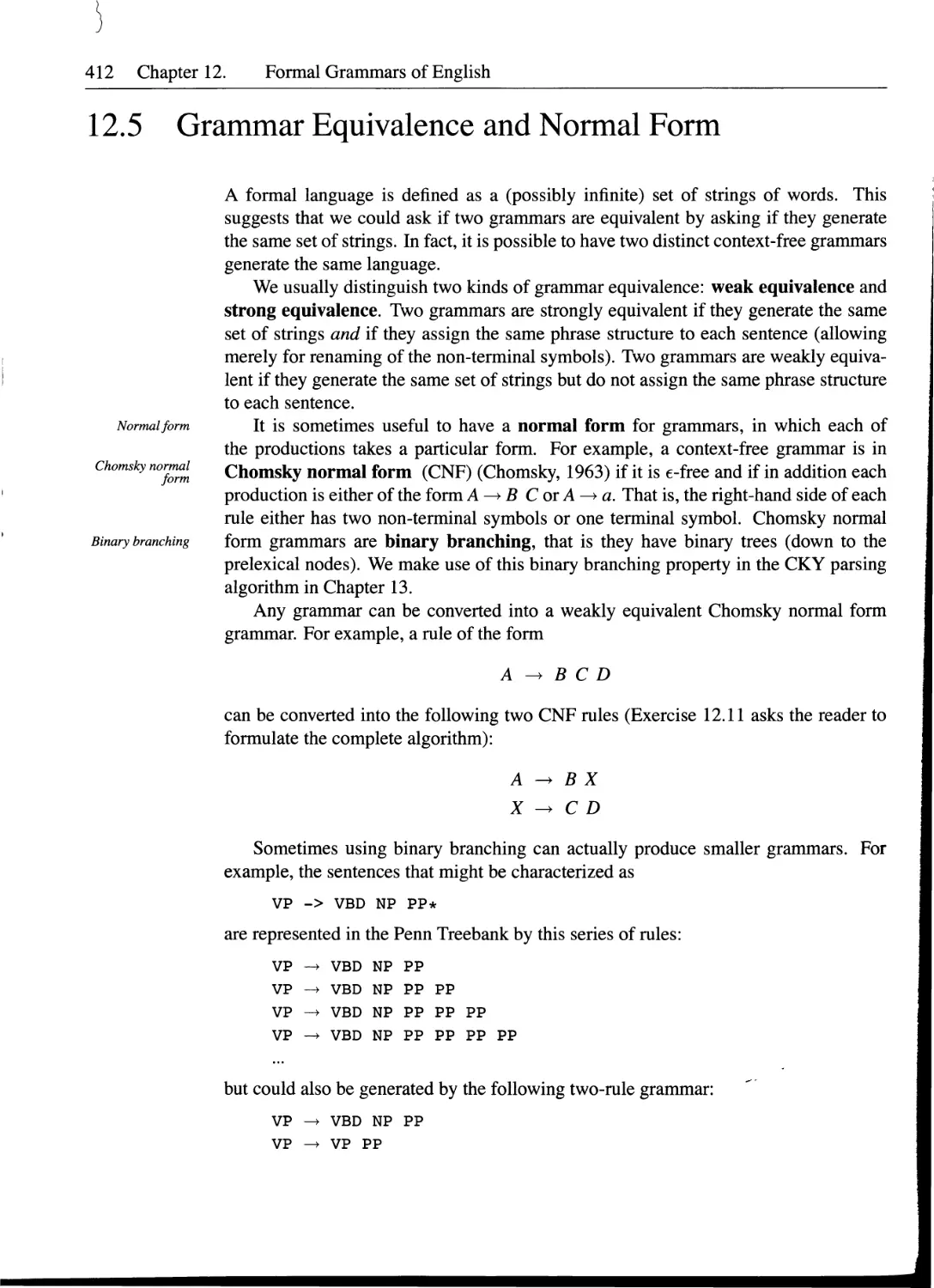

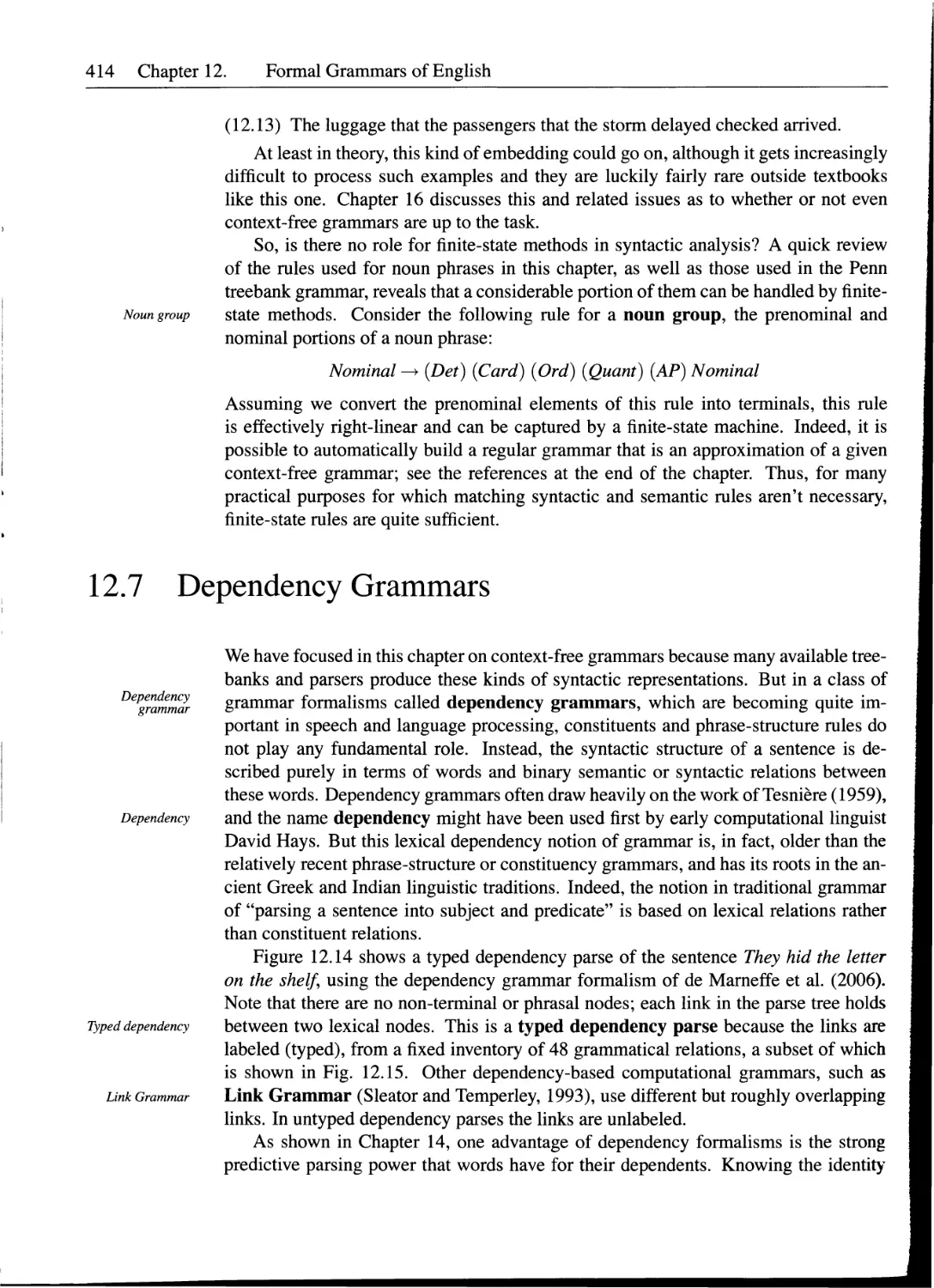

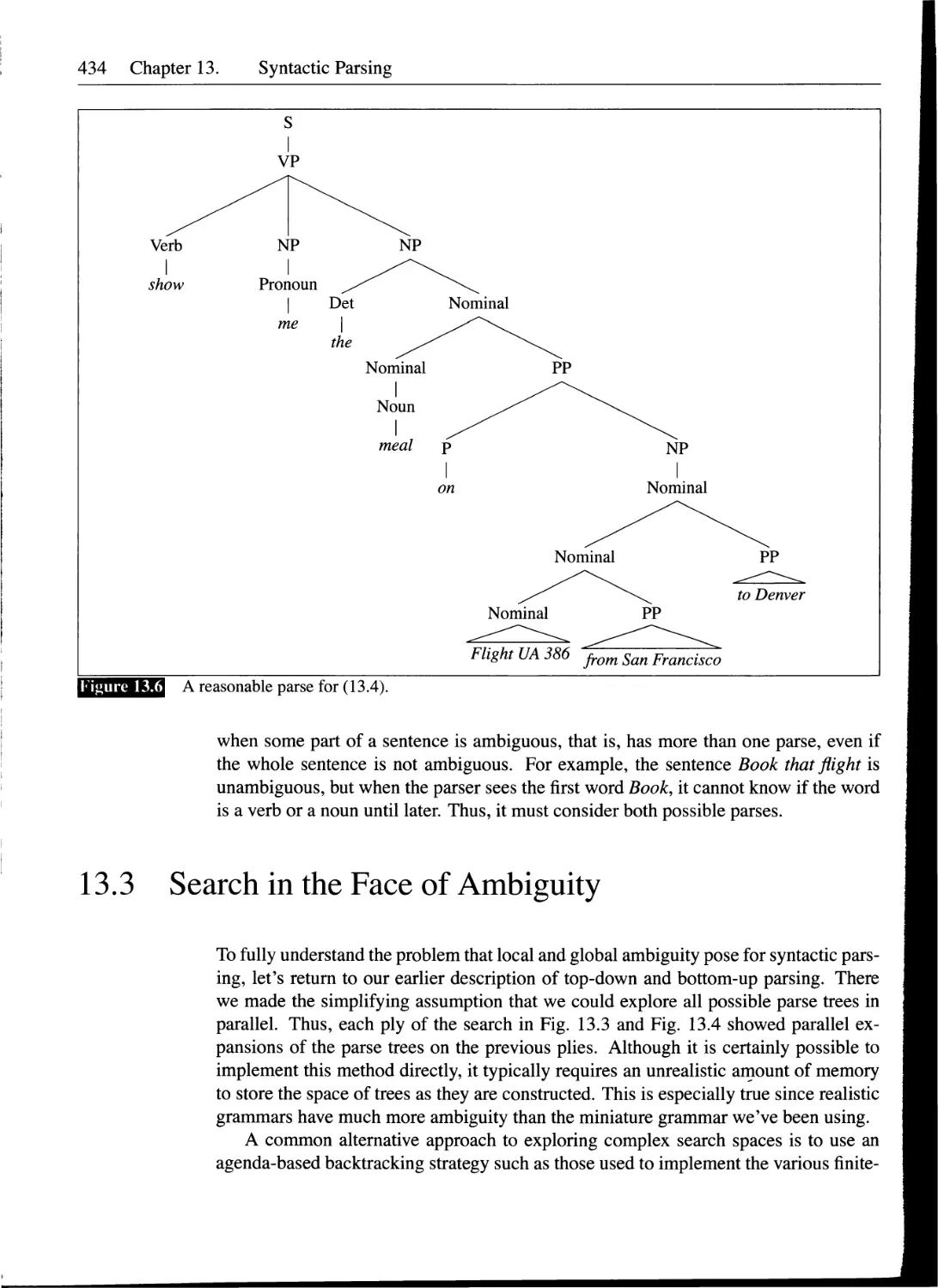

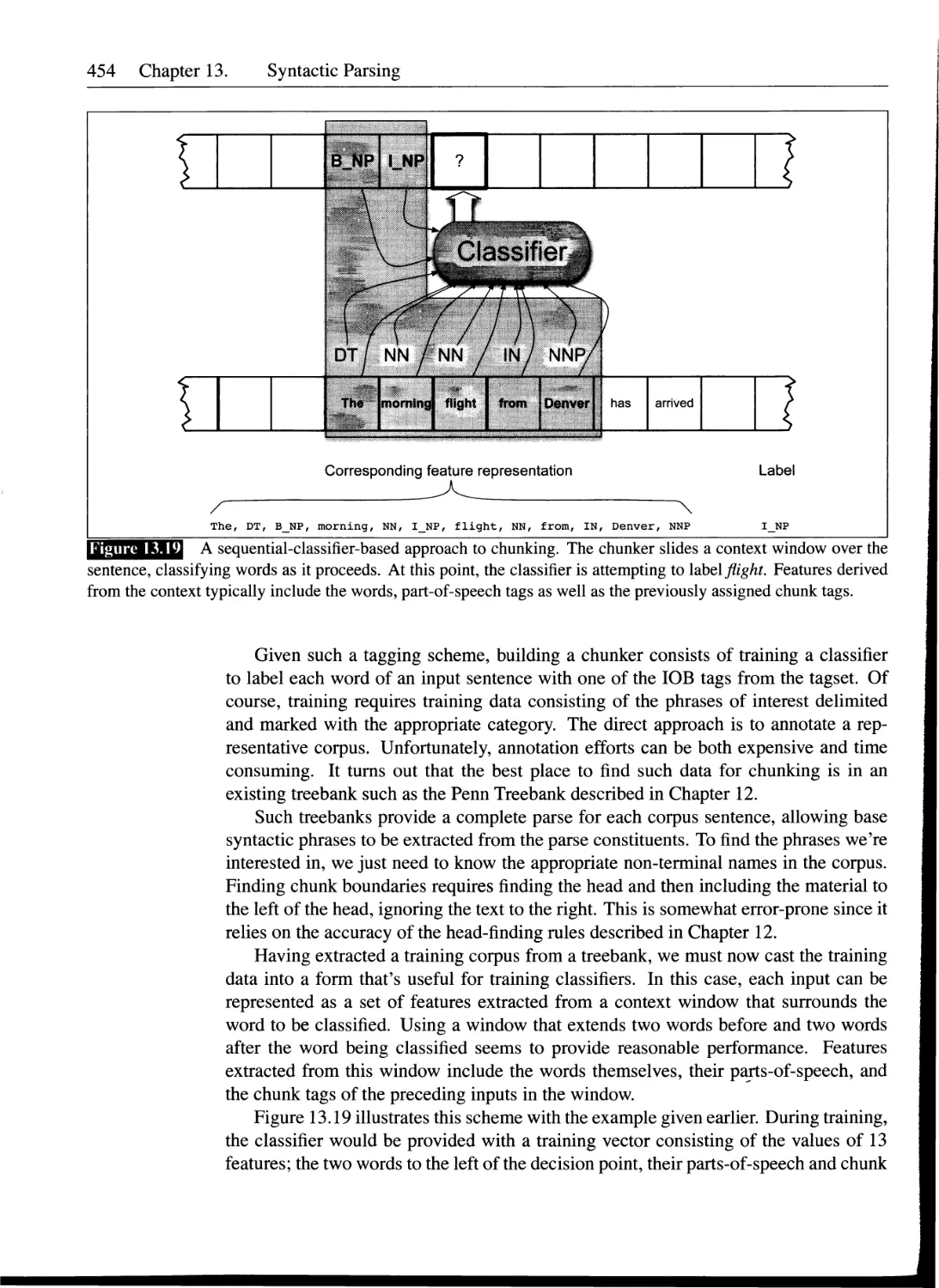

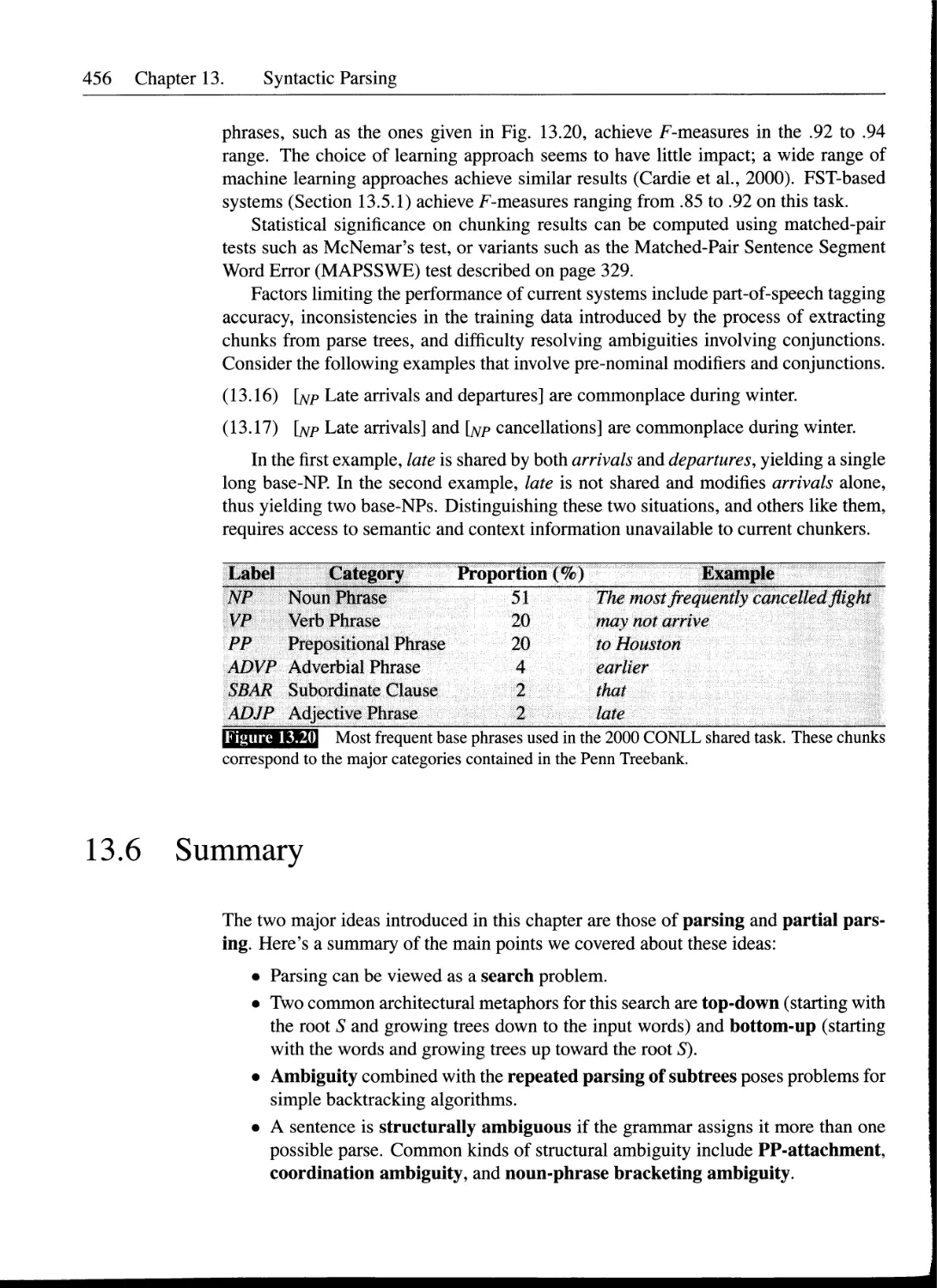

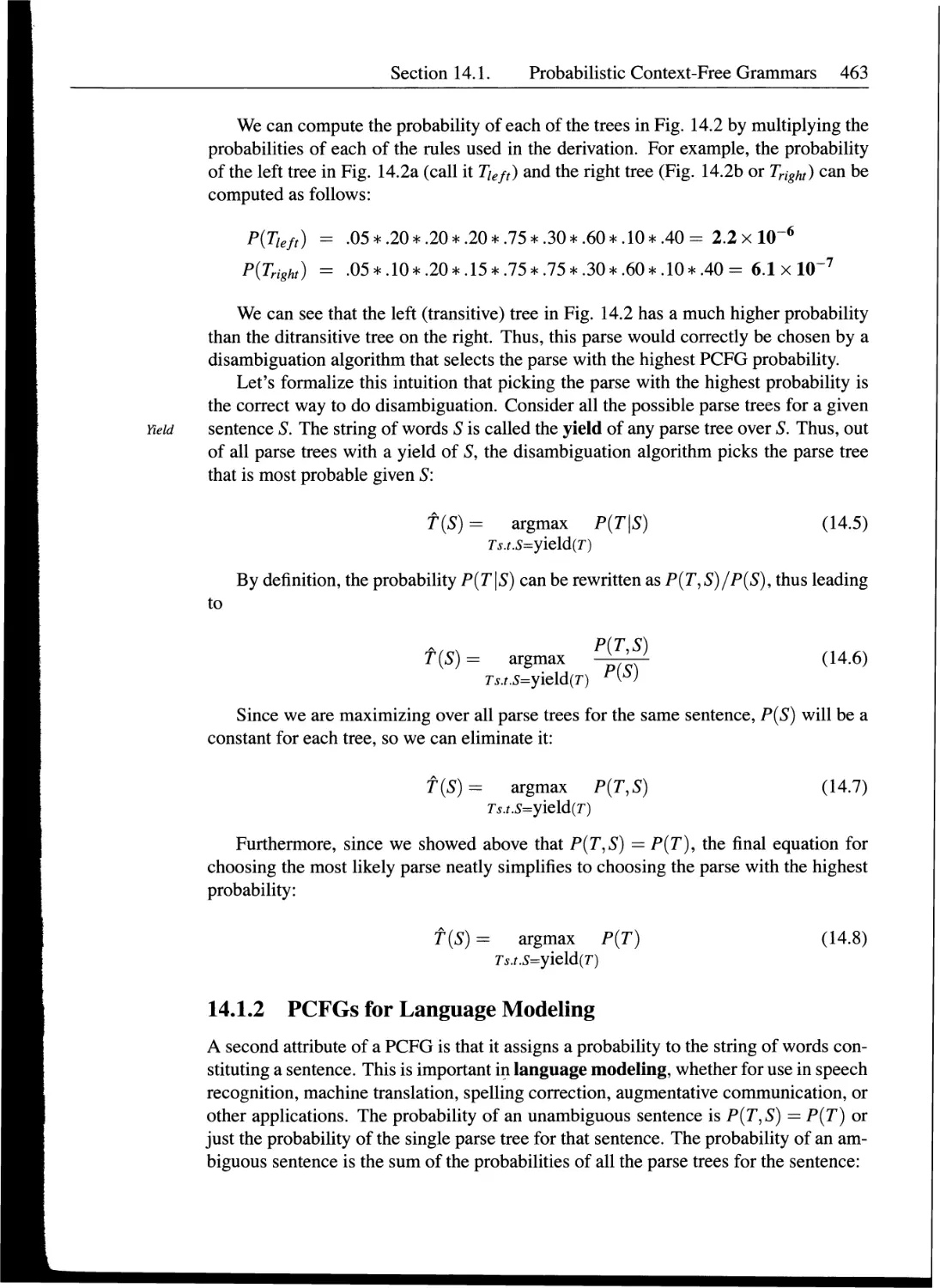

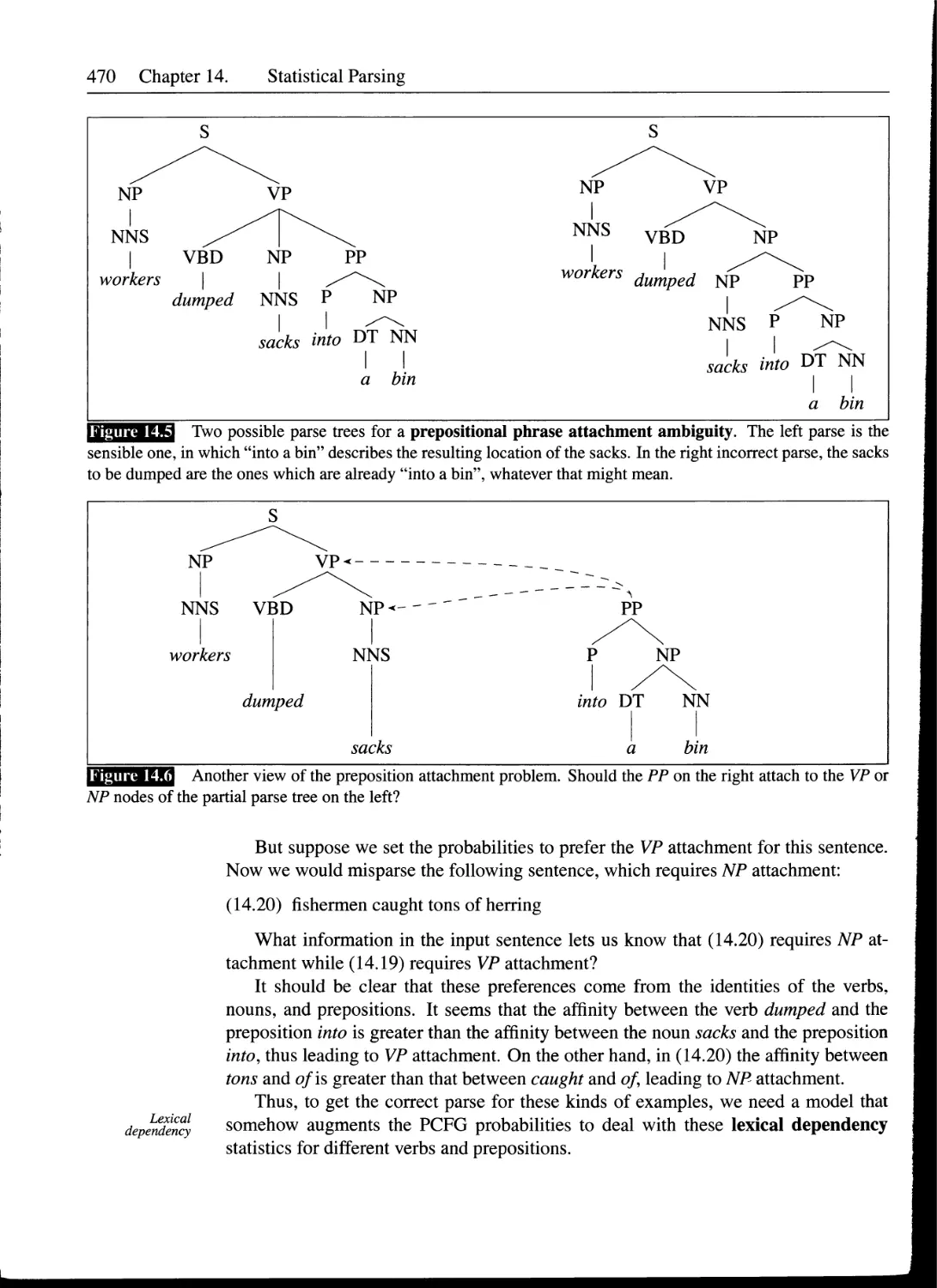

and vector-space models. These models, in turn, lend themselves to a small number